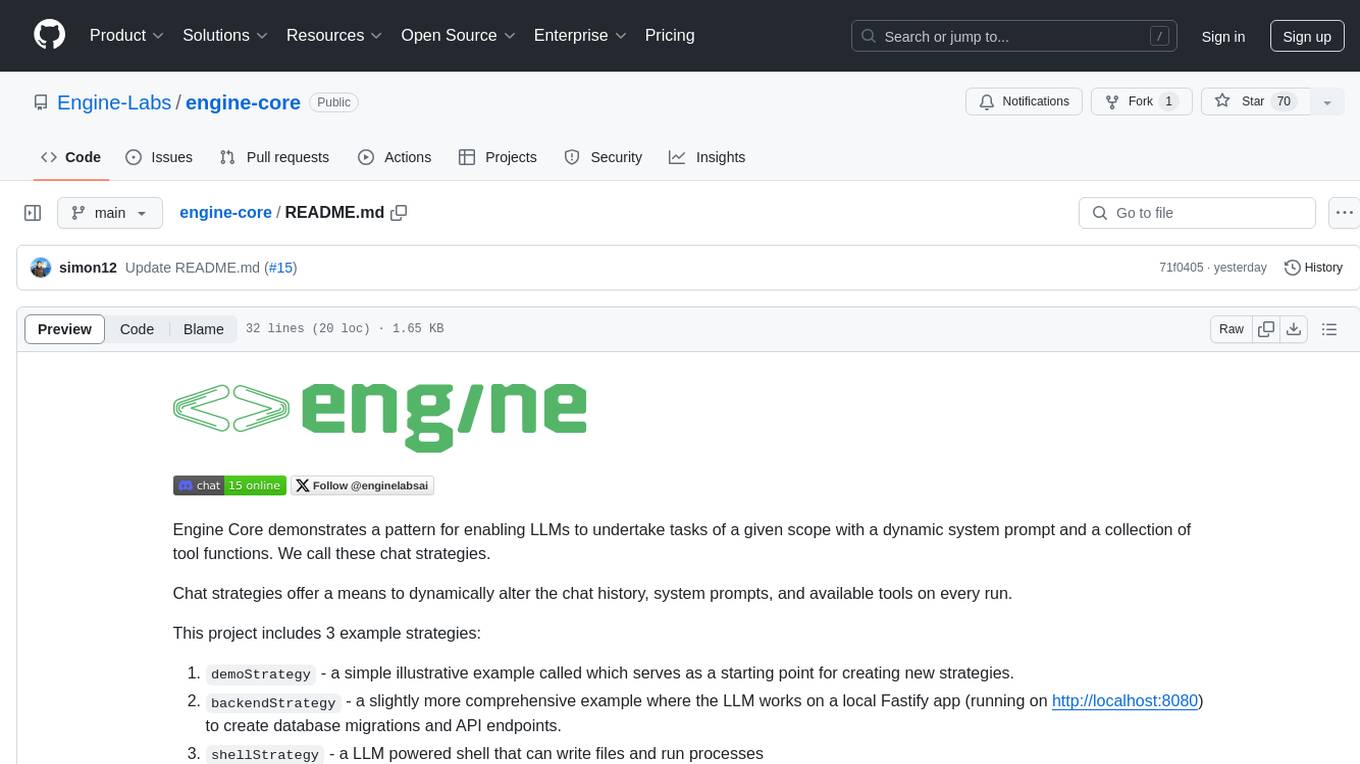

engine-core

Chat strategies for LLMs

Stars: 75

Engine Core is a project that demonstrates a pattern for enabling Large Language Models (LLMs) to undertake tasks with a dynamic system prompt and a collection of tool functions known as chat strategies. These strategies allow for the dynamic alteration of chat history, system prompts, and available tools on every run. The project includes example strategies such as demoStrategy, backendStrategy, and shellStrategy. Additionally, LLM integrations like Anthropic or OpenAI have been extracted into adapters to enable running the same app code and strategies while switching foundation models.

README:

Engine is an open source software engineer.

It is model agnostic and extensible, based on 'strategies' and 'adapters'.

Chat strategies offer a means to dynamically alter context, system prompts, and available tools on every run to optimise for a particular engineering task or environment.

This project includes 3 example strategies:

-

demoStrategy- a simple illustrative example which serves as a starting point for creating new strategies -

backendStrategy- a slightly more comprehensive example where the LLM works on a local Fastify app (running on http://localhost:8080) to create database migrations and API endpoints -

shellStrategy- a LLM powered shell that can write files and run processes

Adapters make any foundational LLM (GPT, Claude) hot swappable.

- Ensure Docker is installed and running

- Copy

.env.exampleto.envand add at least one ofOPENAI_API_KEYorANTHROPIC_API_KEY - Run

bin/cli - Select a LLM model for which you have provided an API key

- Type

helpto see what you can do

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for engine-core

Similar Open Source Tools

engine-core

Engine Core is a project that demonstrates a pattern for enabling Large Language Models (LLMs) to undertake tasks with a dynamic system prompt and a collection of tool functions known as chat strategies. These strategies allow for the dynamic alteration of chat history, system prompts, and available tools on every run. The project includes example strategies such as demoStrategy, backendStrategy, and shellStrategy. Additionally, LLM integrations like Anthropic or OpenAI have been extracted into adapters to enable running the same app code and strategies while switching foundation models.

wandb

Weights & Biases (W&B) is a platform that helps users build better machine learning models faster by tracking and visualizing all components of the machine learning pipeline, from datasets to production models. It offers tools for tracking, debugging, evaluating, and monitoring machine learning applications. W&B provides integrations with popular frameworks like PyTorch, TensorFlow/Keras, Hugging Face Transformers, PyTorch Lightning, XGBoost, and Sci-Kit Learn. Users can easily log metrics, visualize performance, and compare experiments using W&B. The platform also supports hosting options in the cloud or on private infrastructure, making it versatile for various deployment needs.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

council

Council is an open-source platform designed for the rapid development and deployment of customized generative AI applications using teams of agents. It extends the LLM tool ecosystem by providing advanced control flow and scalable oversight for AI agents. Users can create sophisticated agents with predictable behavior by leveraging Council's powerful approach to control flow using Controllers, Filters, Evaluators, and Budgets. The framework allows for automated routing between agents, comparing, evaluating, and selecting the best results for a task. Council aims to facilitate packaging and deploying agents at scale on multiple platforms while enabling enterprise-grade monitoring and quality control.

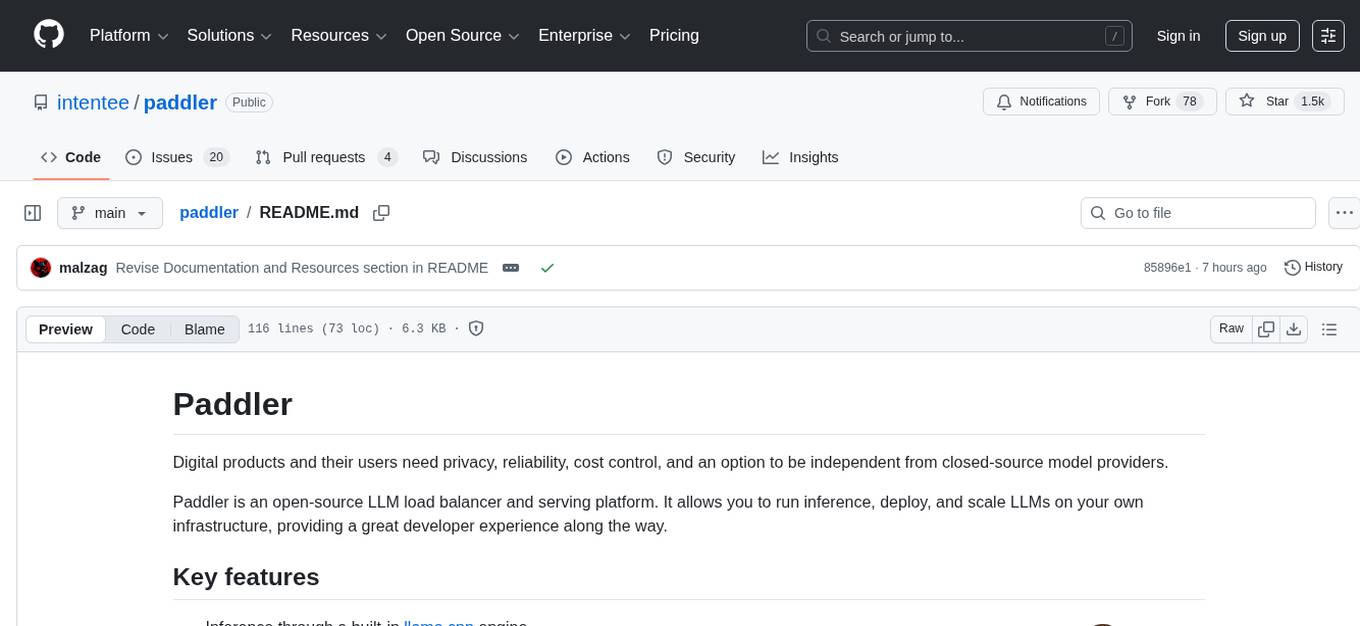

paddler

Paddler is an open-source LLM load balancer and serving platform designed for digital products and users who prioritize privacy, reliability, cost control, and independence from closed-source model providers. It allows running inference, deploying, and scaling LLMs on personal infrastructure, offering a seamless developer experience. Key features include inference through llama.cpp engine, LLM-specific load balancing, dynamic model swapping, request buffering, and built-in web admin panel for management and monitoring. Paddler is suitable for product teams needing LLM inference, DevOps/LLMOps teams deploying LLMs at scale, organizations handling sensitive data, and product leaders aiming for predictable LLM costs and reliable model performance.

md-agent

MD-Agent is a LLM-agent based toolset for Molecular Dynamics. It uses Langchain and a collection of tools to set up and execute molecular dynamics simulations, particularly in OpenMM. The tool assists in environment setup, installation, and usage by providing detailed steps. It also requires API keys for certain functionalities, such as OpenAI and paper-qa for literature searches. Contributions to the project are welcome, with a detailed Contributor's Guide available for interested individuals.

blades

Blades is a multimodal AI Agent framework in Go, supporting custom models, tools, memory, middleware, and more. It is well-suited for multi-turn conversations, chain reasoning, and structured output. The framework provides core components like Agent, Prompt, Chain, ModelProvider, Tool, Memory, and Middleware, enabling developers to build intelligent applications with flexible configuration and high extensibility. Blades leverages the characteristics of Go to achieve high decoupling and efficiency, making it easy to integrate different language model services and external tools. The project is in its early stages, inviting Go developers and AI enthusiasts to contribute and explore the possibilities of building AI applications in Go.

ModernBERT

ModernBERT is a repository focused on modernizing BERT through architecture changes and scaling. It introduces FlexBERT, a modular approach to encoder building blocks, and heavily relies on .yaml configuration files to build models. The codebase builds upon MosaicBERT and incorporates Flash Attention 2. The repository is used for pre-training and GLUE evaluations, with a focus on reproducibility and documentation. It provides a collaboration between Answer.AI, LightOn, and friends.

tau

Tau is a framework for building low maintenance & highly scalable cloud computing platforms that software developers will love. It aims to solve the high cost and time required to build, deploy, and scale software by providing a developer-friendly platform that offers autonomy and flexibility. Tau simplifies the process of building and maintaining a cloud computing platform, enabling developers to achieve 'Local Coding Equals Global Production' effortlessly. With features like auto-discovery, content-addressing, and support for WebAssembly, Tau empowers users to create serverless computing environments, host frontends, manage databases, and more. The platform also supports E2E testing and can be extended using a plugin system called orbit.

dataherald

Dataherald is a natural language-to-SQL engine built for enterprise-level question answering over structured data. It allows you to set up an API from your database that can answer questions in plain English. You can use Dataherald to: * Allow business users to get insights from the data warehouse without going through a data analyst * Enable Q+A from your production DBs inside your SaaS application * Create a ChatGPT plug-in from your proprietary data

quick-start-connectors

Cohere's Build-Your-Own-Connector framework allows integration of Cohere's Command LLM via the Chat API endpoint to any datastore/software holding text information with a search endpoint. Enables user queries grounded in proprietary information. Use-cases include question/answering, knowledge working, comms summary, and research. Repository provides code for popular datastores and a template connector. Requires Python 3.11+ and Poetry. Connectors can be built and deployed using Docker. Environment variables set authorization values. Pre-commits for linting. Connectors tailored to integrate with Cohere's Chat API for creating chatbots. Connectors return documents as JSON objects for Cohere's API to generate answers with citations.

atomic_agents

Atomic Agents is a modular and extensible framework designed for creating powerful applications. It follows the principles of Atomic Design, emphasizing small and single-purpose components. Leveraging Pydantic for data validation and serialization, the framework offers a set of tools and agents that can be combined to build AI applications. It depends on the Instructor package and supports various APIs like OpenAI, Cohere, Anthropic, and Gemini. Atomic Agents is suitable for developers looking to create AI agents with a focus on modularity and flexibility.

eureka-ml-insights

The Eureka ML Insights Framework is a repository containing code designed to help researchers and practitioners run reproducible evaluations of generative models efficiently. Users can define custom pipelines for data processing, inference, and evaluation, as well as utilize pre-defined evaluation pipelines for key benchmarks. The framework provides a structured approach to conducting experiments and analyzing model performance across various tasks and modalities.

autoMate

autoMate is an AI-powered local automation tool designed to help users automate repetitive tasks and reclaim their time. It leverages AI and RPA technology to operate computer interfaces, understand screen content, make autonomous decisions, and support local deployment for data security. With natural language task descriptions, users can easily automate complex workflows without the need for programming knowledge. The tool aims to transform work by freeing users from mundane activities and allowing them to focus on tasks that truly create value, enhancing efficiency and liberating creativity.

PromptAgent

PromptAgent is a repository for a novel automatic prompt optimization method that crafts expert-level prompts using language models. It provides a principled framework for prompt optimization by unifying prompt sampling and rewarding using MCTS algorithm. The tool supports different models like openai, palm, and huggingface models. Users can run PromptAgent to optimize prompts for specific tasks by strategically sampling model errors, generating error feedbacks, simulating future rewards, and searching for high-reward paths leading to expert prompts.

sublayer

Sublayer is a model-agnostic Ruby AI Agent framework that provides base classes for building Generators, Actions, Tasks, and Agents to create AI-powered applications in Ruby. It supports various AI models and providers, such as OpenAI, Gemini, and Claude. Generators generate specific outputs, Actions perform operations, Agents are autonomous entities for tasks or monitoring, and Triggers decide when Agents are activated. The framework offers sample Generators and usage examples for building AI applications.

For similar tasks

engine-core

Engine Core is a project that demonstrates a pattern for enabling Large Language Models (LLMs) to undertake tasks with a dynamic system prompt and a collection of tool functions known as chat strategies. These strategies allow for the dynamic alteration of chat history, system prompts, and available tools on every run. The project includes example strategies such as demoStrategy, backendStrategy, and shellStrategy. Additionally, LLM integrations like Anthropic or OpenAI have been extracted into adapters to enable running the same app code and strategies while switching foundation models.

aitour26-WRK541-real-world-code-migration-with-github-copilot-agent-mode

Microsoft AI Tour 2026 WRK541 is a workshop focused on real-world code migration using GitHub Copilot Agent Mode. The session is designed for technologists interested in applying AI pair-programming techniques to challenging tasks like migrating or translating code between different programming languages. Participants will learn advanced GitHub Copilot techniques, differences between Python and C#, JSON serialization and deserialization in C#, developing and validating endpoints, integrating Swagger/OpenAPI documentation, and writing unit tests with MSTest. The workshop aims to help users gain hands-on experience in using GitHub Copilot for real-world code migration scenarios.

Geoweaver

Geoweaver is an in-browser software that enables users to easily compose and execute full-stack data processing workflows using online spatial data facilities, high-performance computation platforms, and open-source deep learning libraries. It provides server management, code repository, workflow orchestration software, and history recording capabilities. Users can run it from both local and remote machines. Geoweaver aims to make data processing workflows manageable for non-coder scientists and preserve model run history. It offers features like progress storage, organization, SSH connection to external servers, and a web UI with Python support.

CrewAI-Studio

CrewAI Studio is an application with a user-friendly interface for interacting with CrewAI, offering support for multiple platforms and various backend providers. It allows users to run crews in the background, export single-page apps, and use custom tools for APIs and file writing. The roadmap includes features like better import/export, human input, chat functionality, automatic crew creation, and multiuser environment support.

gptme

GPTMe is a tool that allows users to interact with an LLM assistant directly in their terminal in a chat-style interface. The tool provides features for the assistant to run shell commands, execute code, read/write files, and more, making it suitable for various development and terminal-based tasks. It serves as a local alternative to ChatGPT's 'Code Interpreter,' offering flexibility and privacy when using a local model. GPTMe supports code execution, file manipulation, context passing, self-correction, and works with various AI models like GPT-4. It also includes a GitHub Bot for requesting changes and operates entirely in GitHub Actions. In progress features include handling long contexts intelligently, a web UI and API for conversations, web and desktop vision, and a tree-based conversation structure.

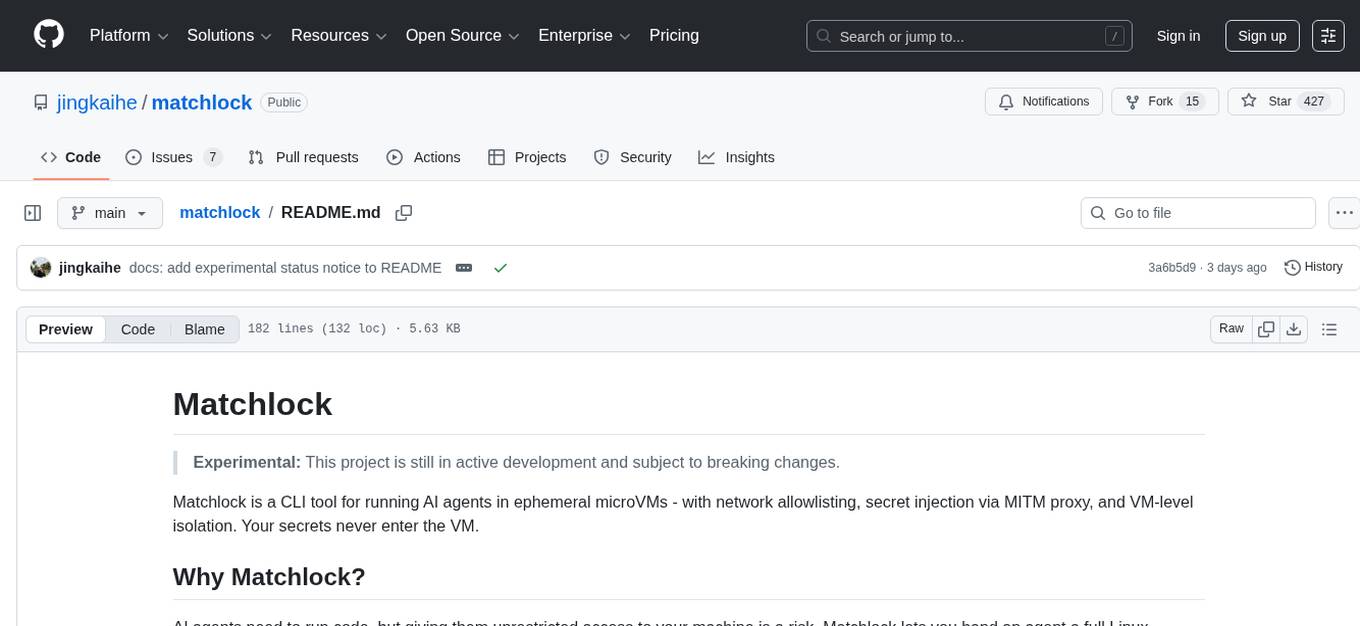

matchlock

Matchlock is a CLI tool designed for running AI agents in isolated and disposable microVMs with network allowlisting and secret injection capabilities. It ensures that your secrets never enter the VM, providing a secure environment for AI agents to execute code without risking access to your machine. The tool offers features such as sealing the network to only allow traffic to specified hosts, injecting real credentials in-flight by the host, and providing a full Linux environment for the agent's operations while maintaining isolation from the host machine. Matchlock supports quick booting of Linux environments, sandbox lifecycle management, image building, and SDKs for Go and Python for embedding sandboxes in applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.