suno-music-generator

基于 suno.ai 实现的文字快速创作音乐网站 (A text-based rapid music creation website based on suno.ai )

Stars: 153

Suno Music Generator is an unofficial website based on NextJS for suno.ai music generation. It can generate songs you want in about a minute by using user input prompts. The project reverse engineers suno.ai's song generation API using JavaScript and integrates payment with Lemon Squeezy. It also includes token update and maintenance features to prevent token expiration. Users can deploy the project with Vercel and follow a quick start guide to set up the environment and run the project locally. The project acknowledges Suno AI, NextJS, Clerk, node-postgres, tailwindcss, Lemon Squeezy, and aiwallpaper. Users can contact the developer via Twitter and support the project by buying a coffee.

README:

这是一个基于 NextJS 开发的非官方 suno.ai 音乐生成网站。可以通过用户输入的 prompt 在一分钟左右的时间生成你想要的歌曲。

最新版本:https://sunomusic.fun v1 版本:https://v1.sunomusic.fun

开源版本对应 v1 版本,最新版本正在优化中,详情可加微信了解:chengzisangeban。

通过 JavaScript 逆向工程解析 suno.ai 生成歌曲的 API,并使用 Lemon Squeezy 进行支付。同时,项目内置了 token 更新和保活功能,无需担心 token 过期。

- 获取 app.suno.ai 账户的 cookie

找到包含关键词 "client?_clerk_js_version" 的请求。找到请求的 Cookie 部分,并复制 Cookie 的值

- 克隆项目

git clone https://github.com/Alvin-Liu/suno-music-generator.git- 安装依赖

cd suno-music-generator

pnpm install- 初始化数据库

使用本地数据库: local postgres 或者使用在线数据库: vercel-postgres

在 data/install.sql 文件中复制创建数据库用到的 sql

- 设置环境变量

在项目跟目录添加 .env.local 文件,填入如下配置:

NEXT_PUBLIC_CLERK_PUBLISHABLE_KEY=""

NEXT_PUBLIC_CLERK_SIGN_IN_URL=/sign-in

NEXT_PUBLIC_CLERK_SIGN_UP_URL=/sign-up

NEXT_PUBLIC_CLERK_AFTER_SIGN_IN_URL=/

NEXT_PUBLIC_CLERK_AFTER_SIGN_UP_URL=/

SUNO_COOKIE=""

LEMON_SQUEEZY_HOST=https://api.lemonsqueezy.com/v1

LEMON_SQUEEZY_API_KEY=

LEMON_SQUEEZY_STORE_ID=

LEMON_SQUEEZY_PRODUCT_ID=

LEMON_SQUEEZY_MEMBERSHIP_MONTHLY_VARIANT_ID=

LEMON_SQUEEZY_MEMBERSHIP_SINGLE_TIME_VARIANT_ID=

LEMONS_SQUEEZY_SIGNATURE_SECRET=

POSTGRES_URL=

SUNO_COOKIE 是你第一步获取的 cookie 值

- 本地开发

pnpm dev打开预览:http://localhost:3000

你可以通过以下 Twitter 链接与我联系: https://twitter.com/alvinliux 。作为 Twitter 新人,我非常真诚地请求你的关注和支持。

如果此项目对你有所帮助,请考虑请我喝杯咖啡

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for suno-music-generator

Similar Open Source Tools

suno-music-generator

Suno Music Generator is an unofficial website based on NextJS for suno.ai music generation. It can generate songs you want in about a minute by using user input prompts. The project reverse engineers suno.ai's song generation API using JavaScript and integrates payment with Lemon Squeezy. It also includes token update and maintenance features to prevent token expiration. Users can deploy the project with Vercel and follow a quick start guide to set up the environment and run the project locally. The project acknowledges Suno AI, NextJS, Clerk, node-postgres, tailwindcss, Lemon Squeezy, and aiwallpaper. Users can contact the developer via Twitter and support the project by buying a coffee.

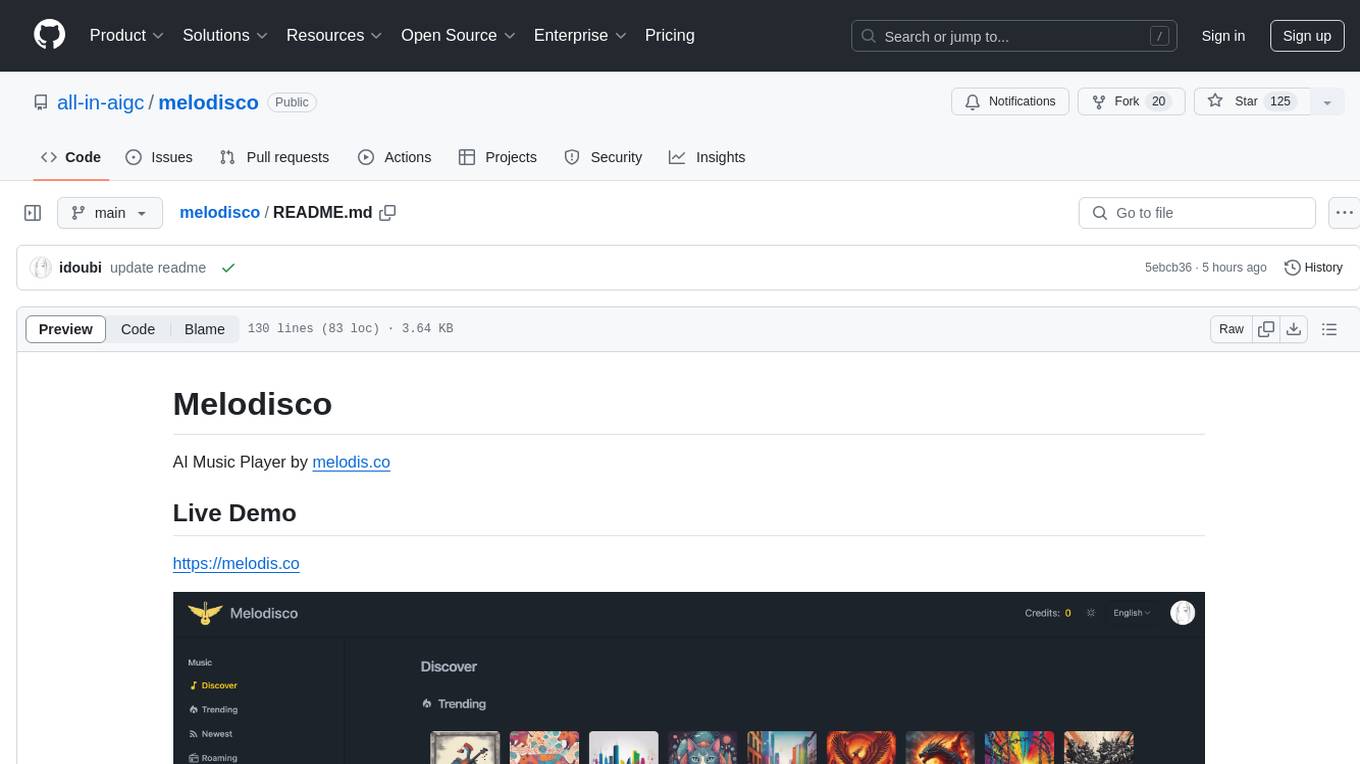

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

quickvid

QuickVid is an open-source video summarization tool that uses AI to generate summaries of YouTube videos. It is built with Whisper, GPT, LangChain, and Supabase. QuickVid can be used to save time and get the essence of any YouTube video with intelligent summarization.

next-money

Next Money Stripe Starter is a SaaS Starter project that empowers your next project with a stack of Next.js, Prisma, Supabase, Clerk Auth, Resend, React Email, Shadcn/ui, and Stripe. It seamlessly integrates these technologies to accelerate your development and SaaS journey. The project includes frameworks, platforms, UI components, hooks and utilities, code quality tools, and miscellaneous features to enhance the development experience. Created by @koyaguo in 2023 and released under the MIT license.

airsonic-refix

Airsonic (refix) UI is a modern responsive web frontend designed for airsonic-advanced, navidrome, gonic, and other subsonic compatible music servers. It offers features such as responsive UI for desktop and mobile, browsing library for albums, artists, genres, playback with persistent queue, repeat & shuffle, MediaSession integration, playlist management with drag and drop, search, favorites, internet radio, and podcasts.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

ollama4j-web-ui

Ollama4j Web UI is a Java-based web interface built using Spring Boot and Vaadin framework for Ollama users with Java and Spring background. It allows users to interact with various models running on Ollama servers, providing a fully functional web UI experience. The project offers multiple ways to run the application, including via Docker, Docker Compose, or as a standalone JAR. Users can configure the environment variables and access the web UI through a browser. The project also includes features for error handling on the UI and settings pane for customizing default parameters.

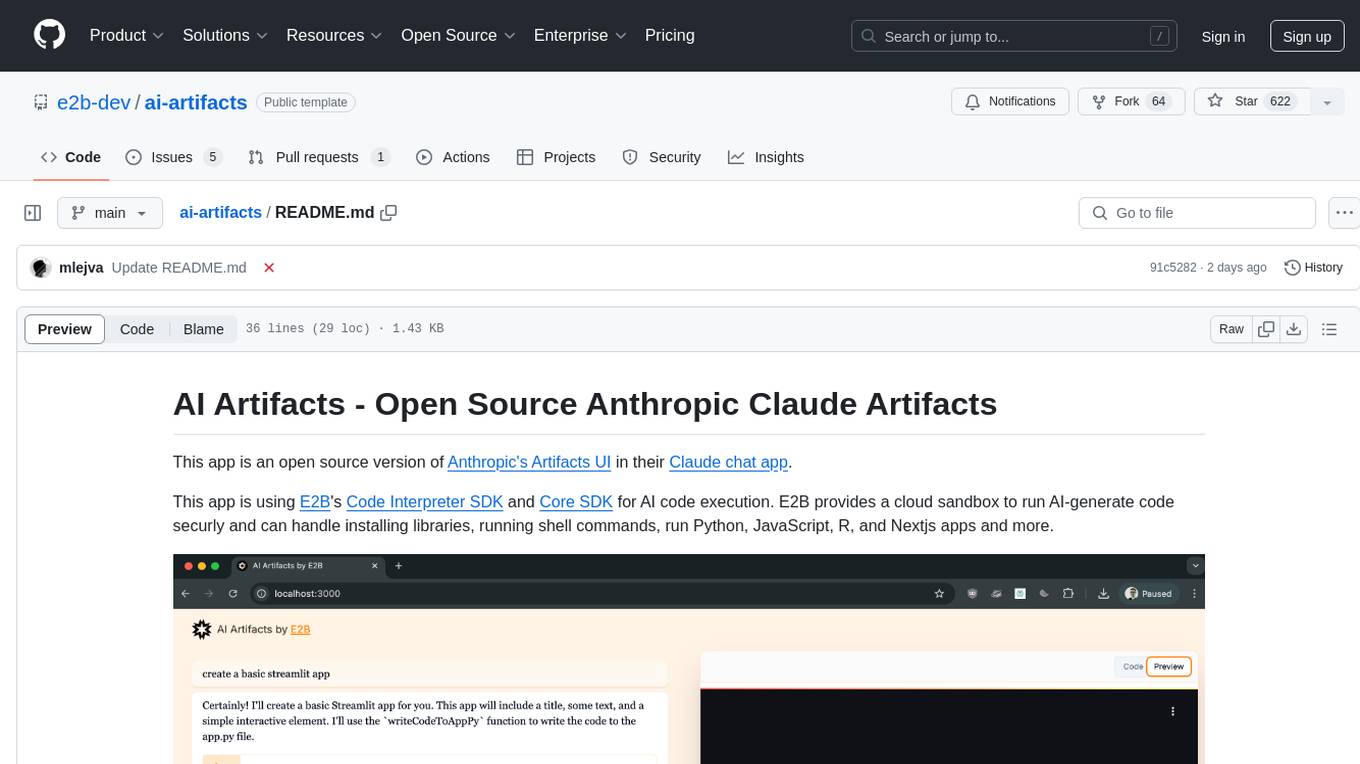

ai-artifacts

AI Artifacts is an open source tool that replicates Anthropic's Artifacts UI in the Claude chat app. It utilizes E2B's Code Interpreter SDK and Core SDK for secure AI code execution in a cloud sandbox environment. Users can run AI-generated code in various languages such as Python, JavaScript, R, and Nextjs apps. The tool also supports running AI-generated Python in Jupyter notebook, Next.js apps, and Streamlit apps. Additionally, it offers integration with Vercel AI SDK for tool calling and streaming responses from the model.

copywriterproai-backend

CopywriterProAI is the world's first open-source AI writing platform for SEO and Ad Copy. The backend repository powers the AI capabilities and manages content processing for smooth operation. It provides an AI writing assistant that works behind the scenes to assist users in content creation.

ChatIDE

ChatIDE is an AI assistant that integrates with your IDE, allowing you to converse with OpenAI's ChatGPT or Anthropic's Claude within your development environment. It provides a seamless way to access AI-powered assistance while coding, enabling you to get real-time help, generate code snippets, debug errors, and brainstorm ideas without leaving your IDE.

SciPIP

SciPIP is a scientific paper idea generation tool powered by a large language model (LLM) designed to assist researchers in quickly generating novel research ideas. It conducts a literature review based on user-provided background information and generates fresh ideas for potential studies. The tool is designed to help researchers in various fields by providing a GUI environment for idea generation, supporting NLP, multimodal, and CV fields, and allowing users to interact with the tool through a web app or terminal. SciPIP uses Neo4j as its database and provides functionalities for generating new ideas, fetching papers, and constructing the database.

trieve

Trieve is an advanced relevance API for hybrid search, recommendations, and RAG. It offers a range of features including self-hosting, semantic dense vector search, typo tolerant full-text/neural search, sub-sentence highlighting, recommendations, convenient RAG API routes, the ability to bring your own models, hybrid search with cross-encoder re-ranking, recency biasing, tunable popularity-based ranking, filtering, duplicate detection, and grouping. Trieve is designed to be flexible and customizable, allowing users to tailor it to their specific needs. It is also easy to use, with a simple API and well-documented features.

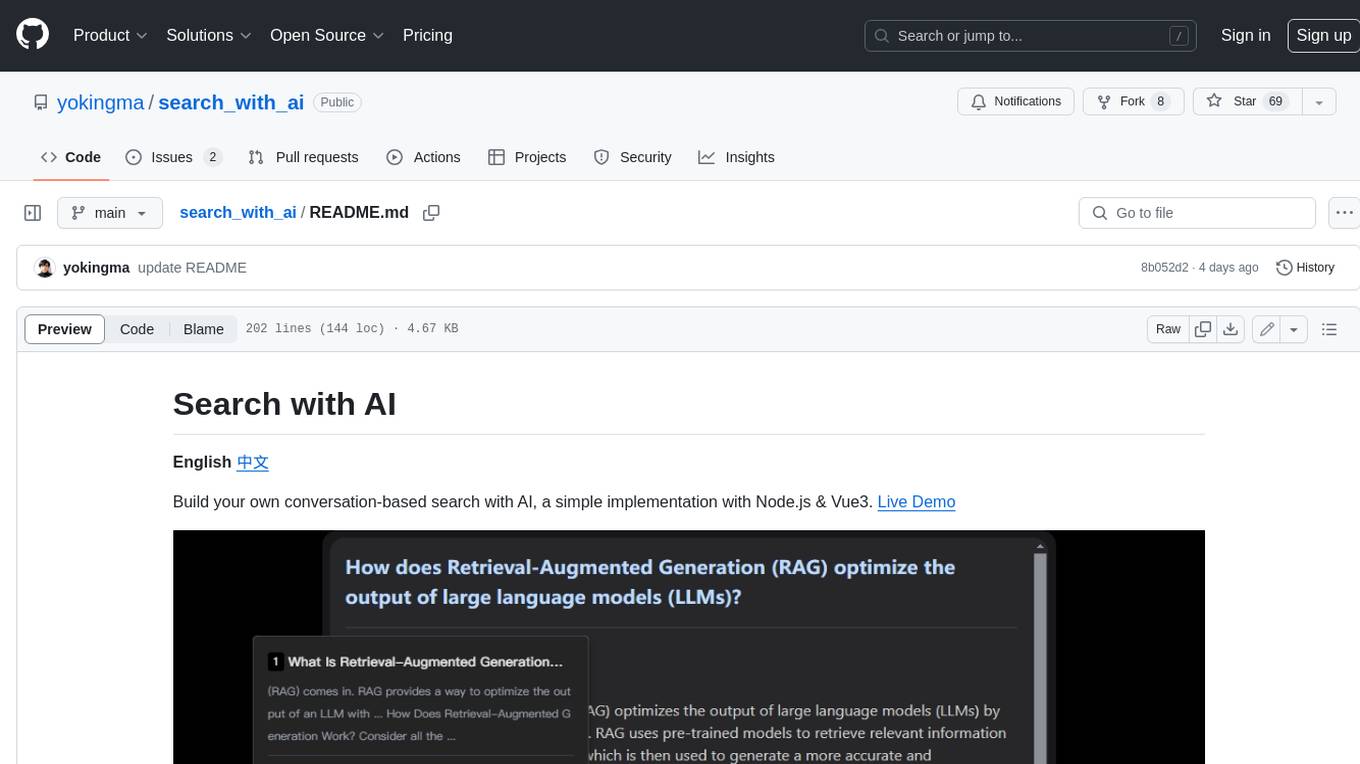

search_with_ai

Build your own conversation-based search with AI, a simple implementation with Node.js & Vue3. Live Demo Features: * Built-in support for LLM: OpenAI, Google, Lepton, Ollama(Free) * Built-in support for search engine: Bing, Sogou, Google, SearXNG(Free) * Customizable pretty UI interface * Support dark mode * Support mobile display * Support local LLM with Ollama * Support i18n * Support Continue Q&A with contexts.

openai-kotlin

OpenAI Kotlin API client is a Kotlin client for OpenAI's API with multiplatform and coroutines capabilities. It allows users to interact with OpenAI's API using Kotlin programming language. The client supports various features such as models, chat, images, embeddings, files, fine-tuning, moderations, audio, assistants, threads, messages, and runs. It also provides guides on getting started, chat & function call, file source guide, and assistants. Sample apps are available for reference, and troubleshooting guides are provided for common issues. The project is open-source and licensed under the MIT license, allowing contributions from the community.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

pear-landing-page

PearAI Landing Page is an open-source AI-powered code editor managed by Nang and Pan. It is built with Next.js, Vercel, Tailwind CSS, and TypeScript. The project requires setting up environment variables for proper configuration. Users can run the project locally by starting the development server and visiting the specified URL in the browser. Recommended extensions include Prettier, ESLint, and JavaScript and TypeScript Nightly. Contributions to the project are welcomed and appreciated.

For similar tasks

suno-music-generator

Suno Music Generator is an unofficial website based on NextJS for suno.ai music generation. It can generate songs you want in about a minute by using user input prompts. The project reverse engineers suno.ai's song generation API using JavaScript and integrates payment with Lemon Squeezy. It also includes token update and maintenance features to prevent token expiration. Users can deploy the project with Vercel and follow a quick start guide to set up the environment and run the project locally. The project acknowledges Suno AI, NextJS, Clerk, node-postgres, tailwindcss, Lemon Squeezy, and aiwallpaper. Users can contact the developer via Twitter and support the project by buying a coffee.

awesome-hosting

awesome-hosting is a curated list of hosting services sorted by minimal plan price. It includes various categories such as Web Services Platform, Backend-as-a-Service, Lambda, Node.js, Static site hosting, WordPress hosting, VPS providers, managed databases, GPU cloud services, and LLM/Inference API providers. Each category lists multiple service providers along with details on their minimal plan, trial options, free tier availability, open-source support, and specific features. The repository aims to help users find suitable hosting solutions based on their budget and requirements.

langroid-examples

Langroid-examples is a repository containing examples of using the Langroid Multi-Agent Programming framework to build LLM applications. It provides a collection of scripts and instructions for setting up the environment, working with local LLMs, using OpenAI LLMs, and running various examples. The repository also includes optional setup instructions for integrating with Qdrant, Redis, Momento, GitHub, and Google Custom Search API. Users can explore different scenarios and functionalities of Langroid through the provided examples and documentation.

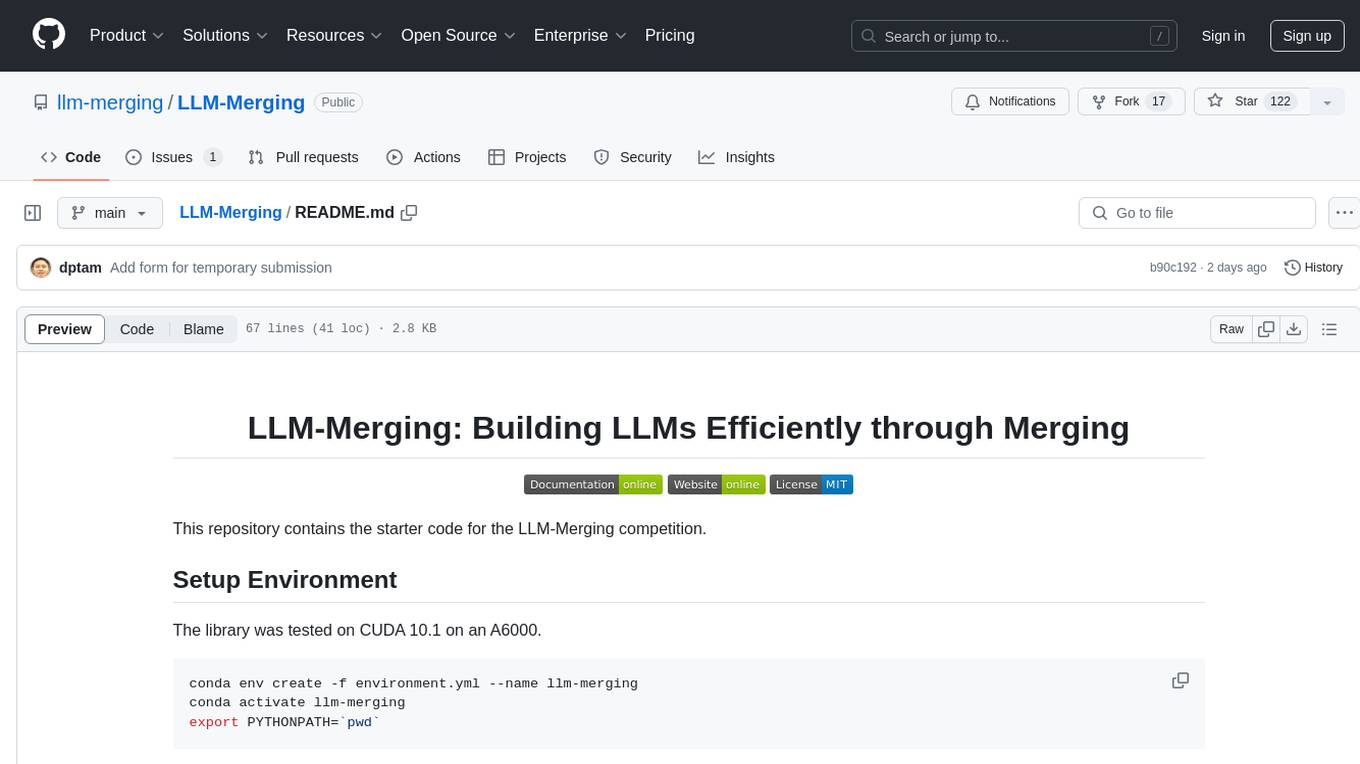

LLM-Merging

LLM-Merging is a repository containing starter code for the LLM-Merging competition. It provides a platform for efficiently building LLMs through merging methods. Users can develop new merging methods by creating new files in the specified directory and extending existing classes. The repository includes instructions for setting up the environment, developing new merging methods, testing the methods on specific datasets, and submitting solutions for evaluation. It aims to facilitate the development and evaluation of merging methods for LLMs.

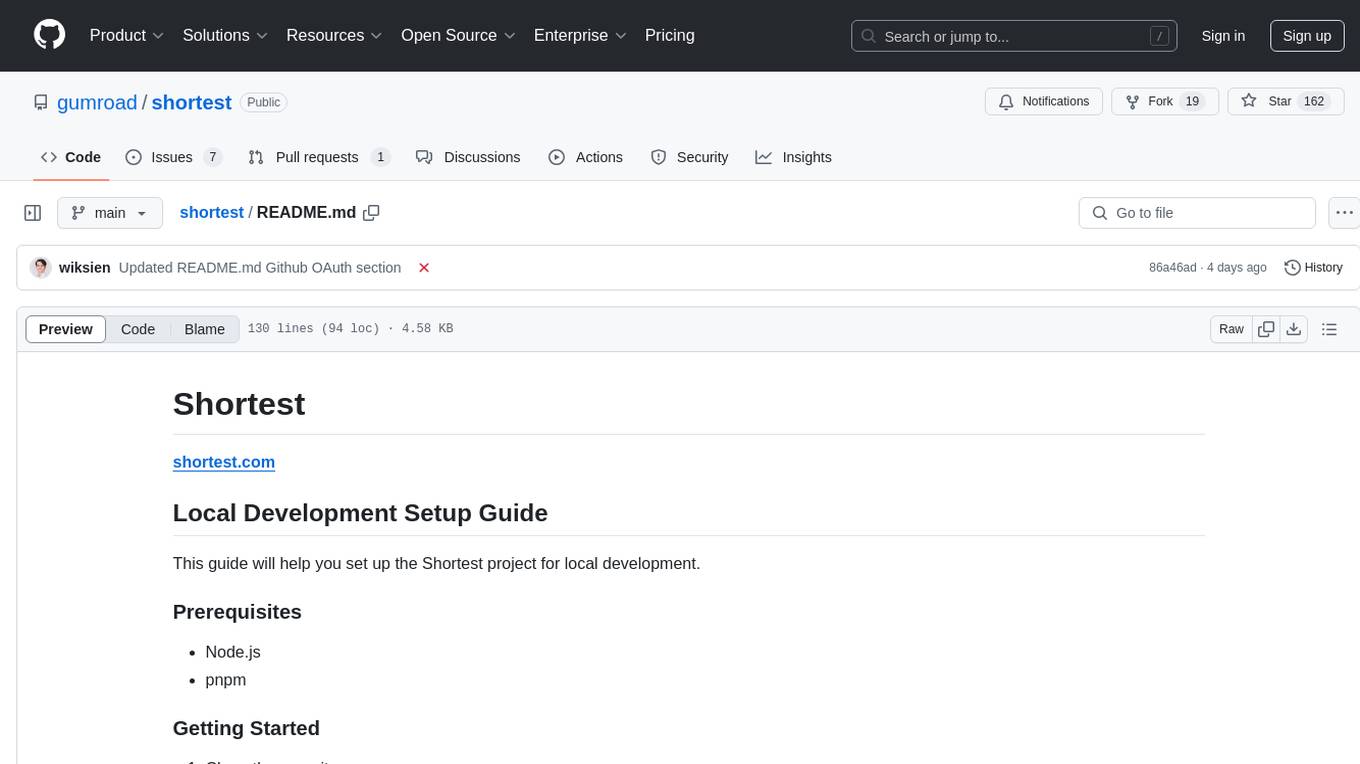

shortest

Shortest is a project for local development that helps set up environment variables and services for a web application. It provides a guide for setting up Node.js and pnpm dependencies, configuring services like Clerk, Vercel Postgres, Anthropic, Stripe, and GitHub OAuth, and running the application and tests locally.

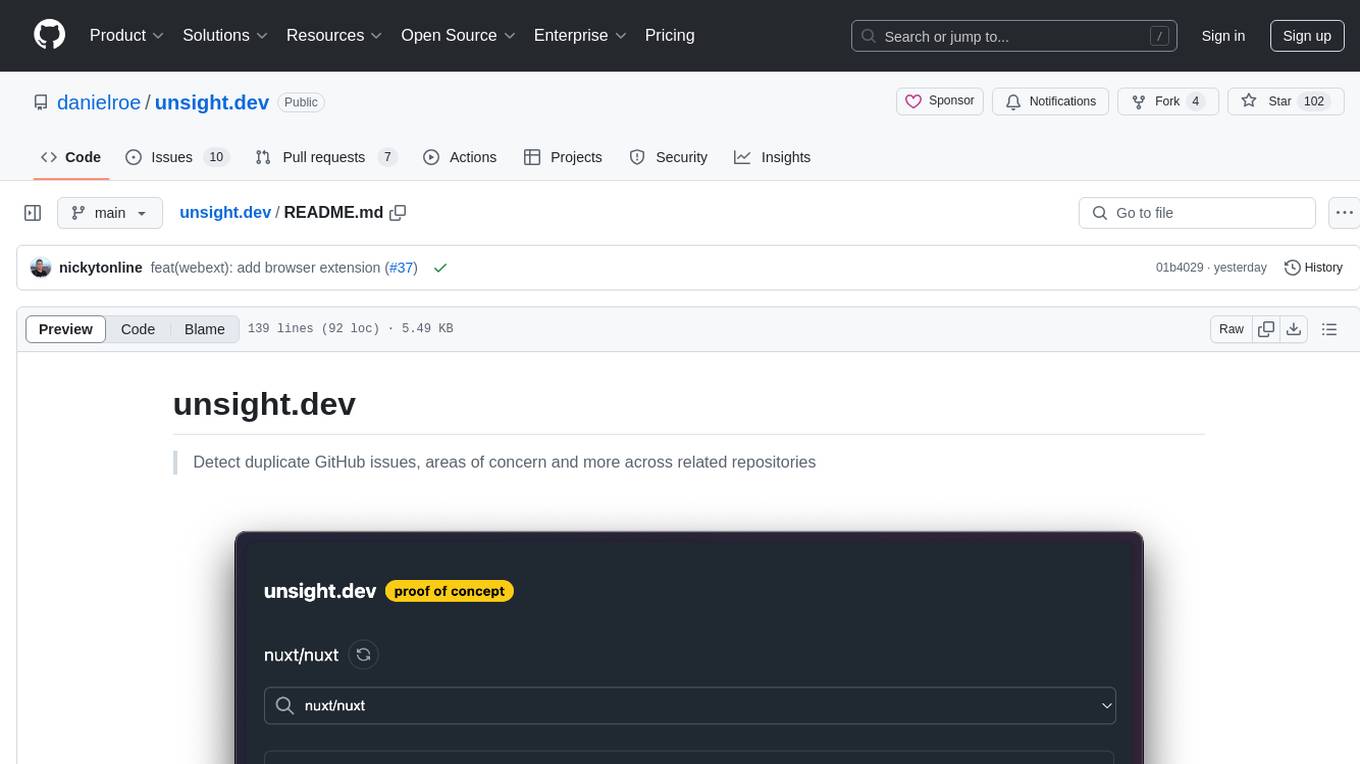

unsight.dev

unsight.dev is a tool built on Nuxt that helps detect duplicate GitHub issues and areas of concern across related repositories. It utilizes Nitro server API routes, GitHub API, and a GitHub App, along with UnoCSS. The tool is deployed on Cloudflare with NuxtHub, using Workers AI, Workers KV, and Vectorize. It also offers a browser extension soon to be released. Users can try the app locally for tweaking the UI and setting up a full development environment as a GitHub App.

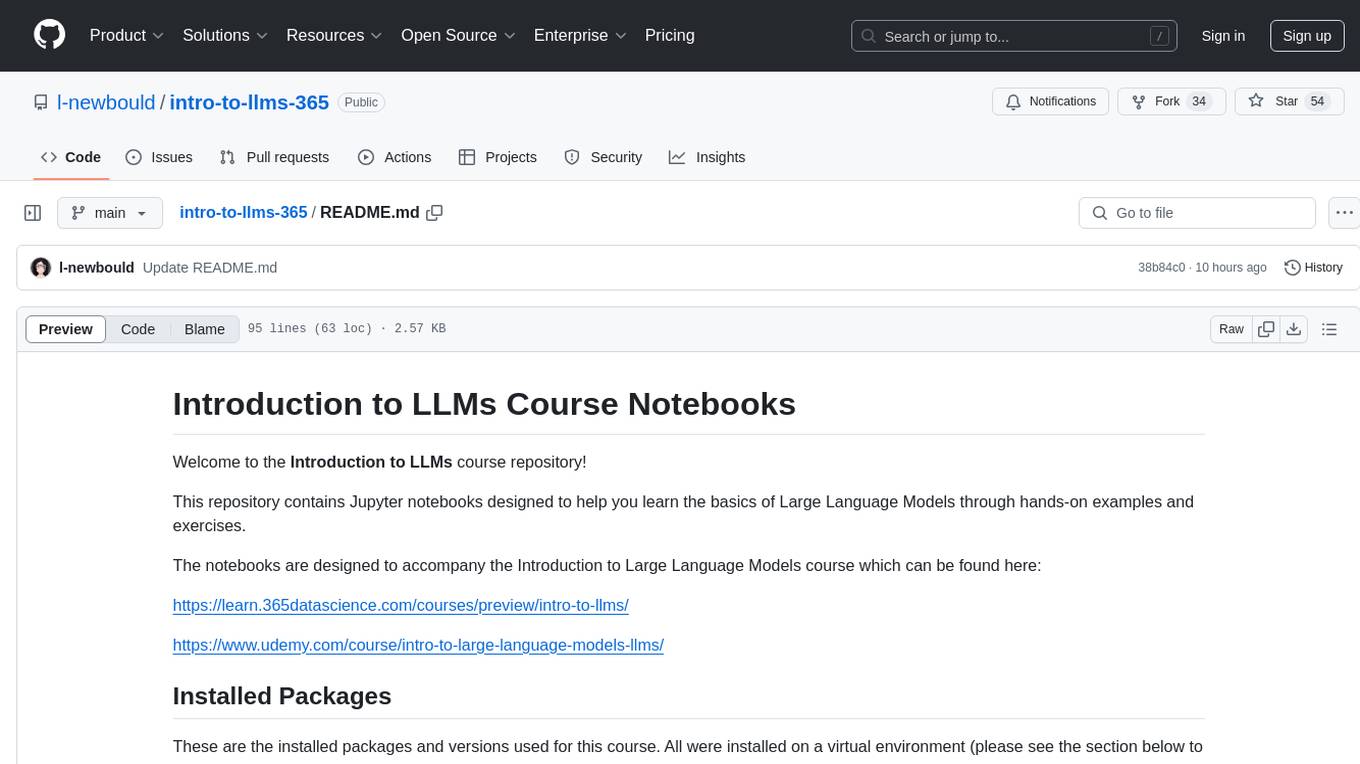

intro-to-llms-365

This repository serves as a resource for the Introduction to Large Language Models (LLMs) course, providing Jupyter notebooks with hands-on examples and exercises to help users learn the basics of Large Language Models. It includes information on installed packages, updates, and setting up a virtual environment for managing packages and running Jupyter notebooks.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.