Best AI tools for host models

20 - AI tool Sites

Empower

Empower is a serverless fine-tuned LLM hosting platform that provides cost-effective LoRA serving without compromising performance. It is compatible with any PEFT-based LoRAs, allowing users to train their models anywhere and deploy them on Empower effortlessly. Empower's technology ensures sub-second cold start times for LoRAs, effectively eliminating cold-start delays. Users only pay for what they use, with no expensive dedicated instance fees.

Trieve

Trieve is an AI search infrastructure that offers an all-in-one solution for building search, recommendations, and RAG (Retrieval-Augmented Generation) capabilities into applications. It combines language models with tools for fine-tuning ranking and relevance, providing production-ready infrastructure to enhance search experiences. Trieve supports features such as semantic vector search, hybrid search, merchandising relevance tuning, date recency biasing, and sub-sentence highlighting. With Trieve, users can easily integrate AI-powered search functionalities into their products, focusing on building unique features while leveraging state-of-the-art retrieval models.

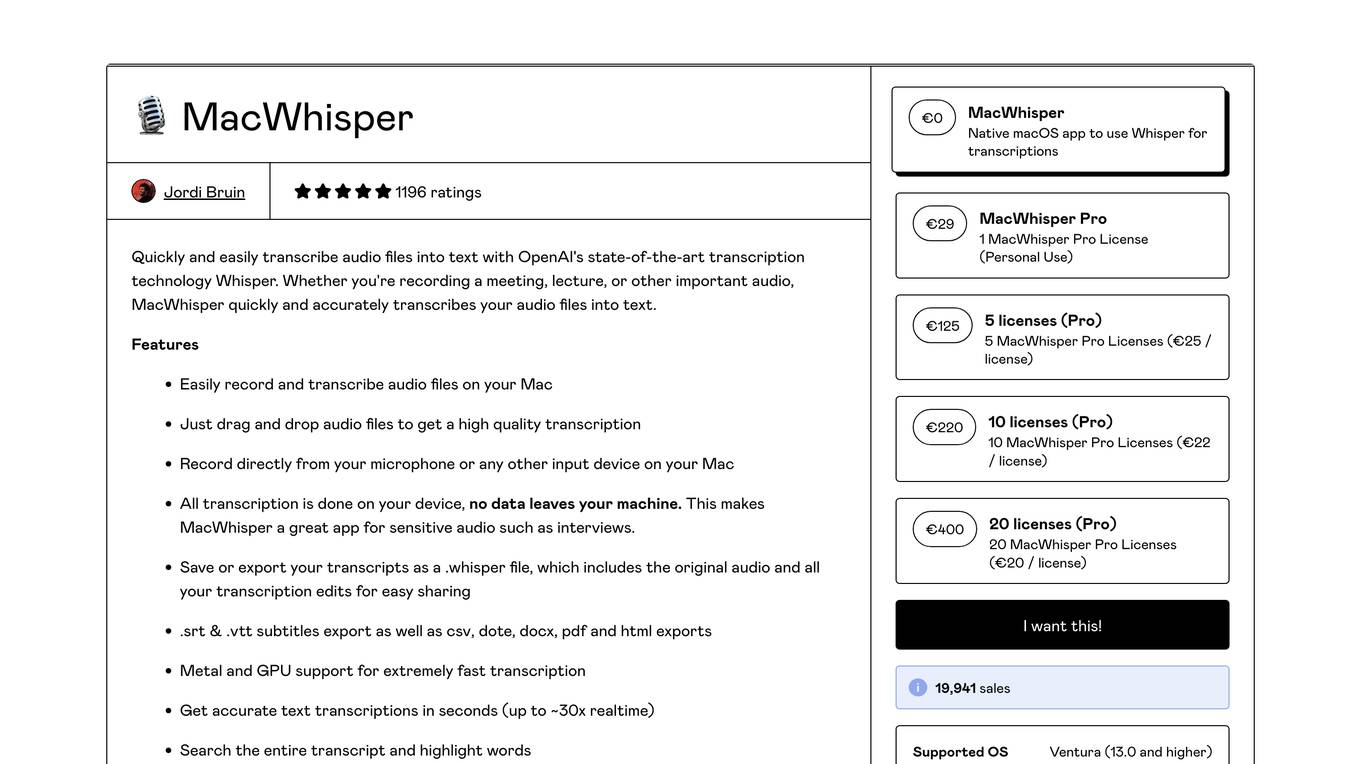

MacWhisper

MacWhisper is a native macOS application that utilizes OpenAI's Whisper technology for transcribing audio files into text. It offers a user-friendly interface for recording, transcribing, and editing audio, making it suitable for various use cases such as transcribing meetings, lectures, interviews, and podcasts. The application is designed to protect user privacy by performing all transcriptions locally on the device, ensuring that no data leaves the user's machine.

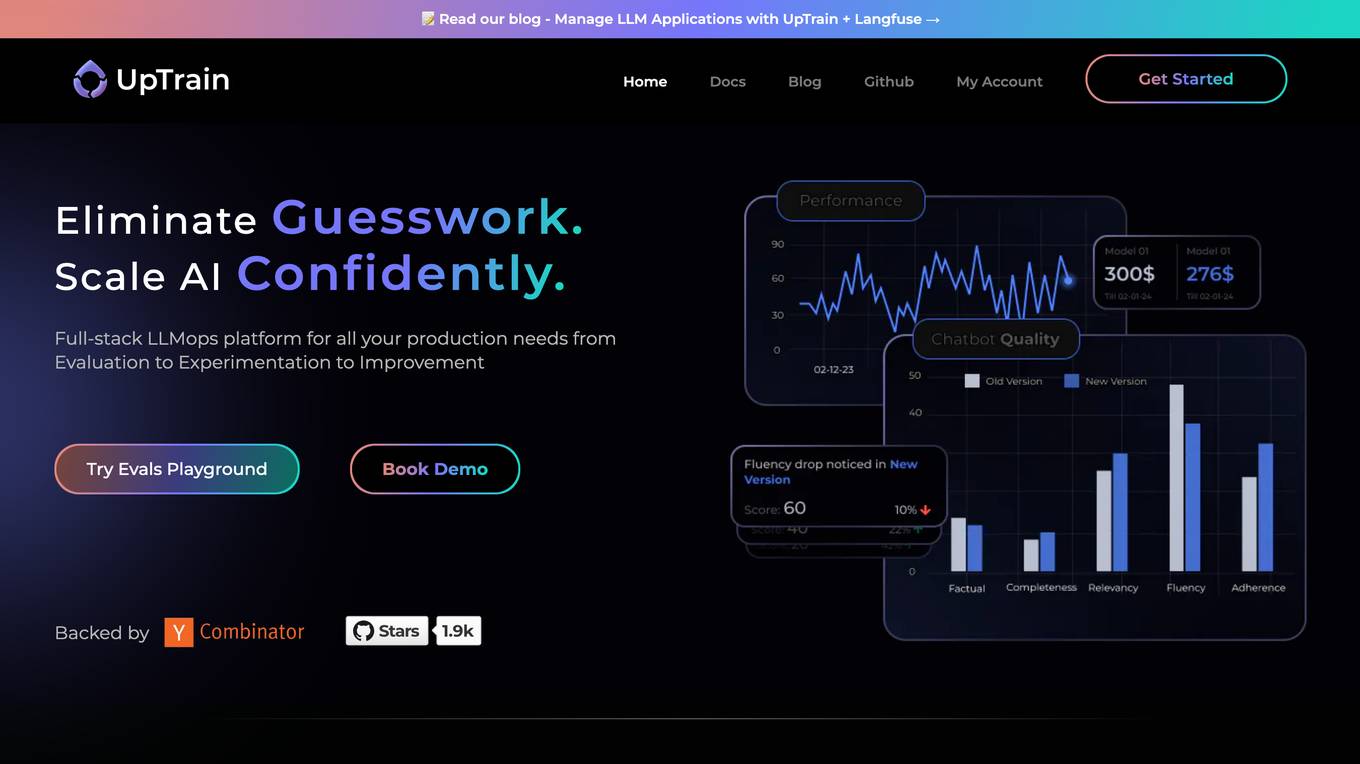

UpTrain

UpTrain is a full-stack LLMOps platform designed to help users with all their production needs, from evaluation to experimentation to improvement. It offers diverse evaluations, automated regression testing, enriched datasets, and innovative techniques to generate high-quality scores. The platform is built for developers, compliant with data governance needs, cost-efficient, reliable, and open-source. UpTrain provides precision metrics to understand LLMs, safeguard systems, and solve diverse needs with a single platform.

Amazon Web Services (AWS)

Amazon Web Services (AWS) is a comprehensive, evolving cloud computing platform from Amazon that provides a broad set of global compute, storage, database, analytics, application, and deployment services that help organizations move faster, lower IT costs, and scale applications. With AWS, you can use as much or as little of its services as you need, and scale up or down as required with only a few minutes' notice. AWS has a global network of regions and availability zones, so you can deploy your applications and data in the locations that are optimal for you.

Earkind

Earkind is an AI-powered podcast generator that creates engaging and informative podcasts on artificial intelligence news, research, and trends. It combines large language models (LLMs), neural expressive text-to-speech, and programmatic audio editing to produce full podcast episodes with a conversational format featuring a cast of characters. The podcasts are designed to be both informative and entertaining, with a focus on making AI accessible and relatable to a wider audience.

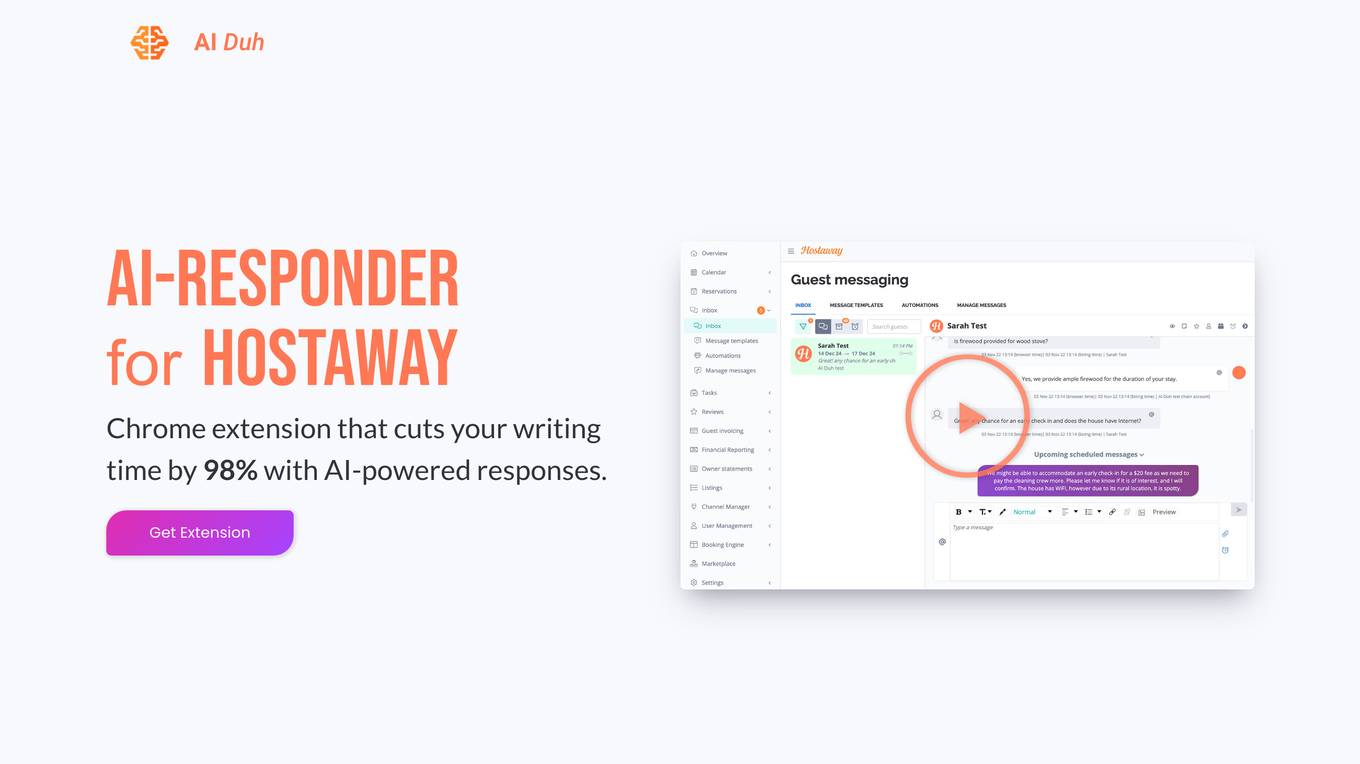

AI-Responder for HostAway

AI-Responder for HostAway is an AI-powered Chrome extension designed to significantly reduce writing time by providing AI-generated responses. The tool leverages property-specific knowledge documents, guest data, and previous chat history to deliver high-quality responses. It utilizes advanced AI models like OpenAI GPT3 Davinci-003 and GPT NeoX 20B to ensure the generated text is of exceptional quality, often indistinguishable from human-written content. The application offers a 14-day free trial and a lifetime price lock for beta users, with pricing estimated at $95/month/listing post-beta. It aims to streamline communication processes for hosts by automating responses and enhancing guest interactions.

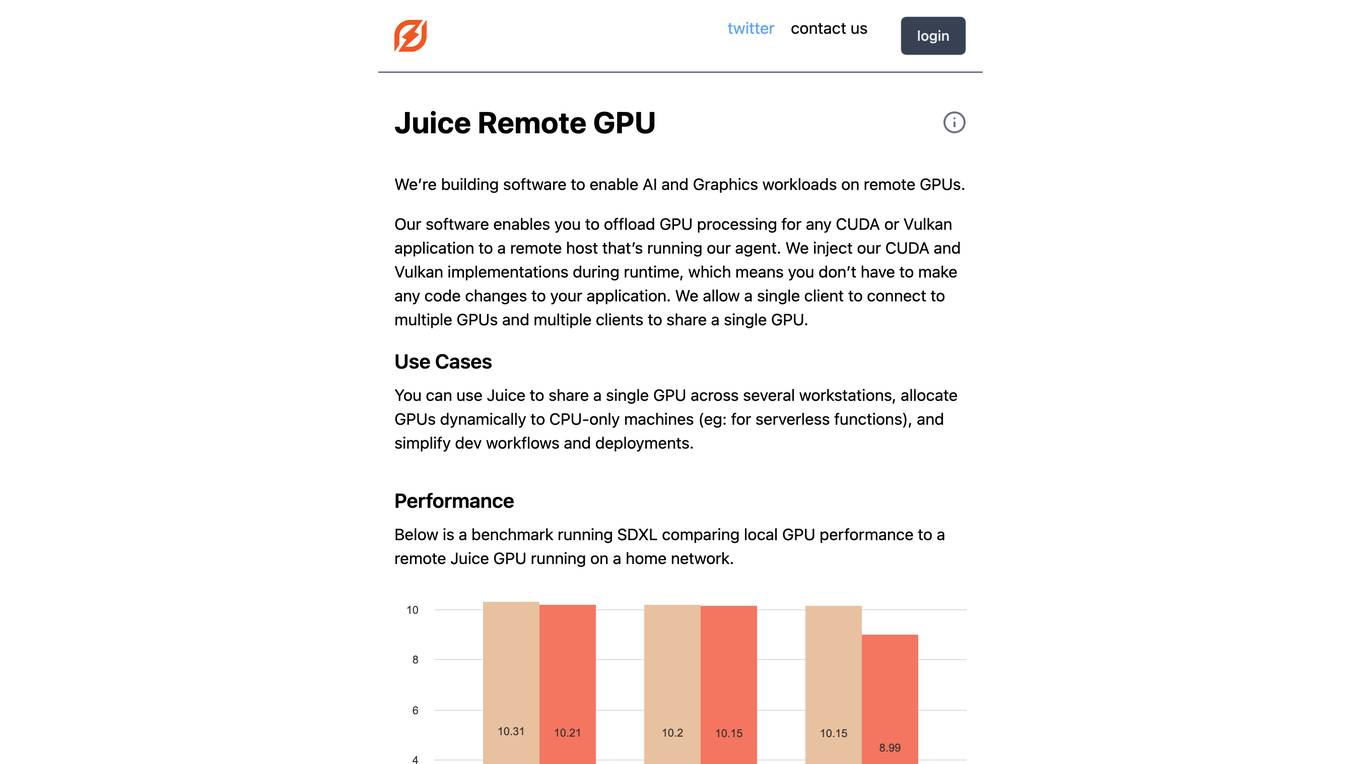

Juice Remote GPU

Juice Remote GPU is software that enables AI and graphics workloads on remote GPUs. It allows users to offload GPU processing for any CUDA or Vulkan application to a remote host running the Juice agent. The software injects CUDA and Vulkan implementations at runtime, eliminating the need for code changes in the application. Juice supports multiple clients connecting to multiple GPUs and multiple clients sharing a single GPU. It is useful for sharing a single GPU across multiple workstations, allocating GPUs dynamically to CPU-only machines, and simplifying development workflows and deployments. Juice Remote GPU performs within 5% of a local GPU when running in the same datacenter. It supports various APIs, including CUDA, Vulkan, DirectX, and OpenGL, and is compatible with PyTorch and TensorFlow. The team behind Juice Remote GPU consists of engineers from Meta, Intel, and the gaming industry.

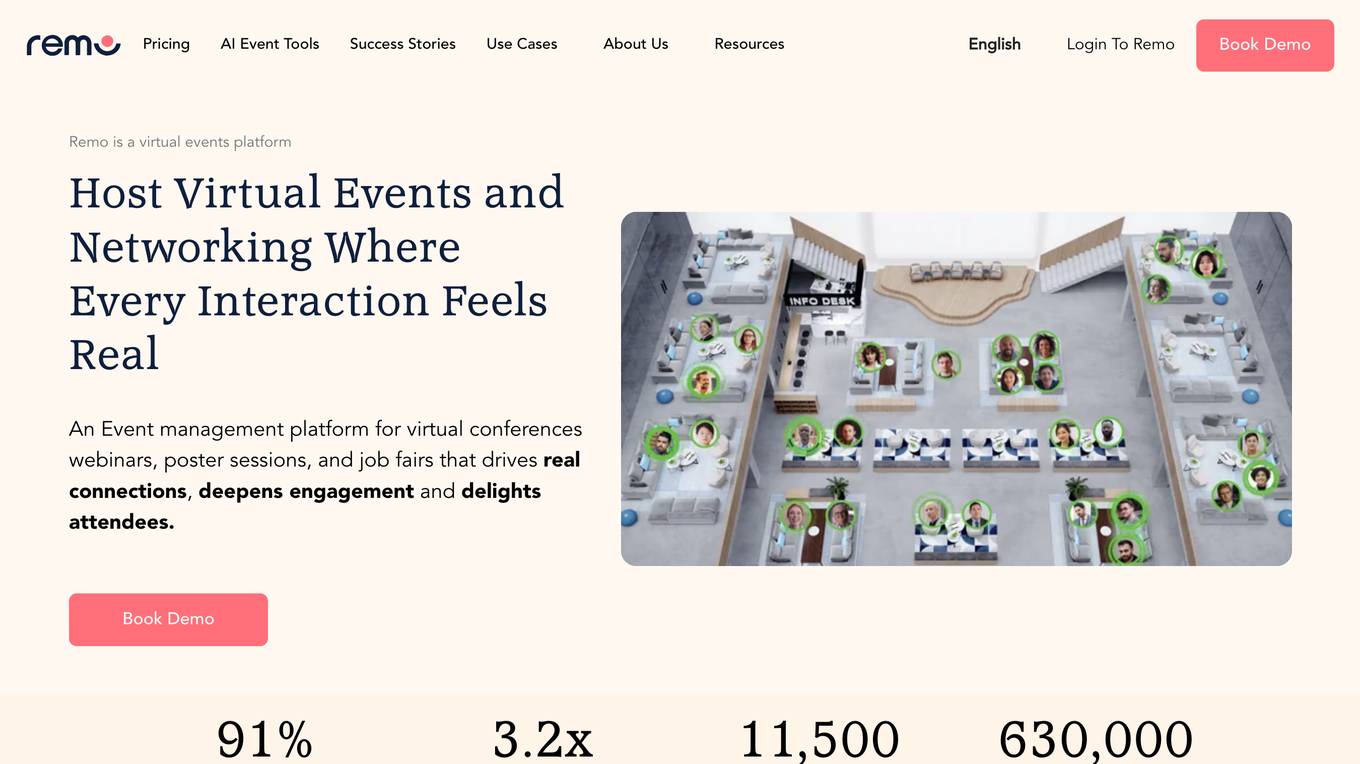

Remo

Remo is an all-in-one event solution that allows users to host immersive online events with real connections and engagement. It is a virtual event platform designed for virtual conferences, webinars, poster sessions, job fairs, and more, aiming to drive real connections, deepen engagement, and delight attendees. With features like networking virtual tables, visually stunning environments, and seamless transitions between webinar and networking modes, Remo offers a unique and interactive online event experience.

Bibit AI

Bibit AI is a real estate marketing AI designed to make real estate marketing and sales more efficient and effective. It helps create listings, descriptions, and property content, and offers a host of other features. Bibit AI describes itself as the world's first AI for real estate, aiming to transform the industry by boosting efficiency and simplifying tasks like listing creation and content generation.

AI Placeholder

AI Placeholder is a free AI-Powered Fake or Dummy Data API for testing and prototyping. It leverages OpenAI's GPT-3.5-Turbo Model API to generate fake content. Users can directly use the hosted version or self-host it. The API allows users to generate various types of data with specified rules, such as forum users, CRM sales deals, products from a marketplace, tweets, and more. It provides flexibility in retrieving data and is suitable for testing and prototyping purposes.

Be.Live

Be.Live is a livestreaming studio application that allows users to create beautiful livestreams and repurpose them into shorter videos and podcasts. It offers features like inviting guests to livestreams, adding fun elements like falling snow and countdown timers, and customizing streams with branding and overlays. Users can go live from anywhere using the mobile studio app, stream to multiple destinations, and engage with viewers through on-screen elements. Be.Live aims to help users easily produce and repurpose video content to enhance audience engagement and brand visibility.

CodeDesign.ai

CodeDesign.ai is an AI-powered website builder that helps users create and host websites in minutes. It offers a range of features, including a drag-and-drop interface, AI-generated content, and responsive design. CodeDesign.ai is suitable for both beginners and experienced users, and it offers a free plan as well as paid plans with additional features.

10Web

10Web is an AI-powered website builder that helps businesses create professional websites in minutes. With 10Web, you can generate tailored content and images based on your answers to a few simple questions. You can also choose from a library of pre-made layouts and customize your website with its drag-and-drop editor. 10Web also offers a range of hosting services, so you don't have to find a separate hosting provider.

Wave.video

Wave.video is an online video editor and hosting platform that allows users to create, edit, and host videos. It offers a wide range of features, including a live streaming studio, video recorder, stock library, and video hosting. Wave.video is easy to use and affordable, making it a great option for businesses and individuals who need to create high-quality videos.

Elementor

Elementor is a leading website builder platform for professionals on WordPress. It empowers users to create, manage, and host stunning websites with ease. Elementor's drag-and-drop interface, extensive library of widgets and templates, and seamless integration with WordPress make it an ideal choice for web designers, developers, and marketers alike. With Elementor, users can build professional-grade websites without the need for coding or technical expertise.

Replit

Replit is a software creation platform that provides an integrated development environment (IDE), artificial intelligence (AI) assistance, and deployment services. It allows users to build, test, and deploy software projects directly from their browser, without the need for local setup or configuration. Replit offers real-time collaboration, code generation, debugging, and autocompletion features powered by AI. It supports multiple programming languages and frameworks, making it suitable for a wide range of development projects.

Contrast

Contrast is a webinar platform that uses AI to help you create engaging and effective webinars. With Contrast, you can easily create branded webinars, add interactive elements like polls and Q&A, and track your webinar analytics. Contrast also offers a variety of tools to help you repurpose your webinar content, such as a summary generator, blog post creator, and clip maker.

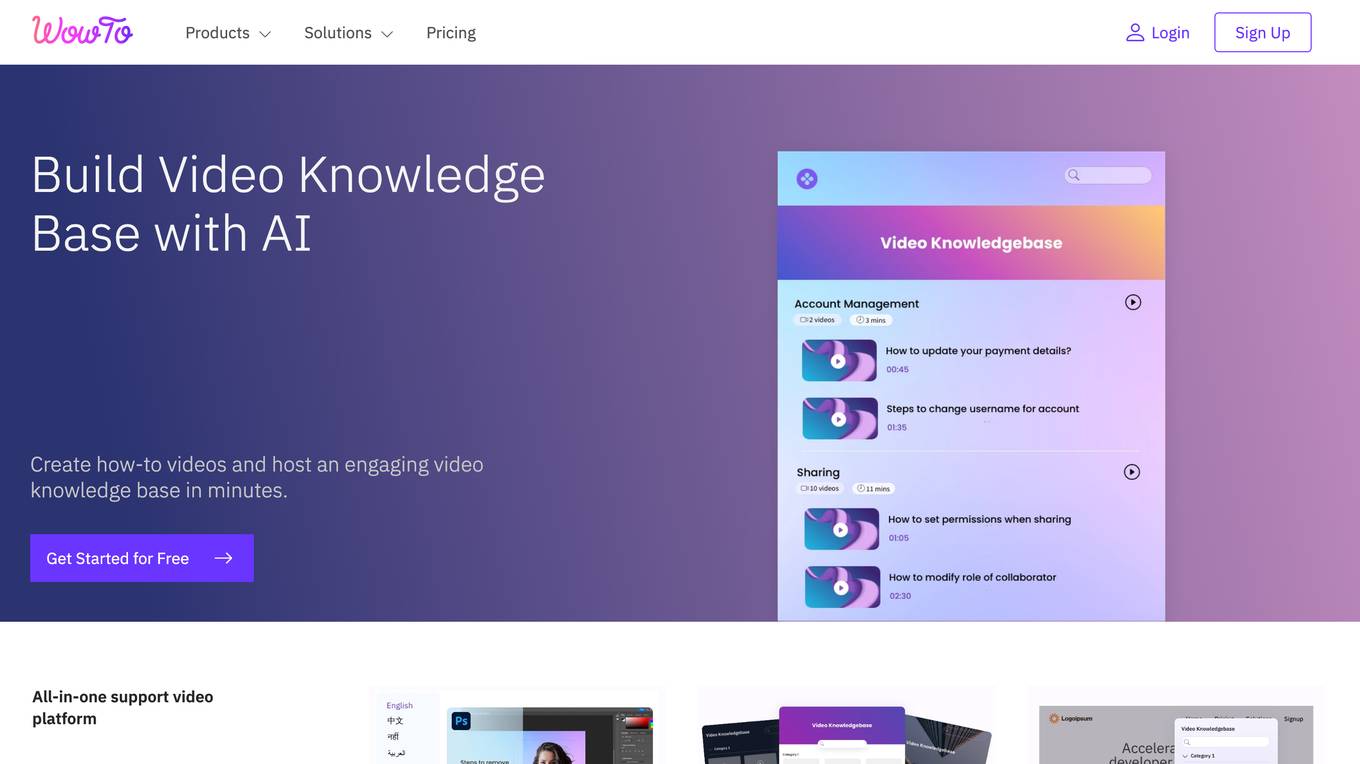

WowTo

WowTo is an all-in-one support video platform that helps businesses create how-to videos, host video knowledge bases, and provide in-app video help. With WowTo's AI-powered video creator, businesses can easily create step-by-step how-to videos without any prior design expertise. WowTo also offers a variety of pre-made video knowledge base layouts to choose from, making it easy to create a professional-looking video knowledge base that matches your brand. In addition, WowTo's in-app video widget allows businesses to provide contextual video help to their visitors, improving the customer support experience.

The Video Calling App

The Video Calling App is an AI-powered platform designed to revolutionize meeting experiences by providing laser-focused, context-aware, and outcome-driven meetings. It aims to streamline post-meeting routines, enhance collaboration, and improve overall meeting efficiency. With powerful integrations and AI features, the app captures, organizes, and distills meeting content to provide users with a clearer perspective and free headspace. It offers seamless integration with popular tools like Slack, Linear, and Google Calendar, enabling users to automate tasks, manage schedules, and enhance productivity. The app's user-friendly interface, interactive features, and advanced search capabilities make it a valuable tool for global teams and remote workers seeking to optimize their meeting experiences.

20 - Open Source AI Tools

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

refact

This repository contains Refact WebUI for fine-tuning and self-hosting of code models, which can be used inside Refact plugins for code completion and chat. Users can fine-tune open-source code models, self-host them, download and upload LoRAs, use models for code completion and chat inside Refact plugins, shard models, host multiple small models on one GPU, and connect GPT models for chat using OpenAI and Anthropic keys. The repository provides a Docker container for running the self-hosted server and supports various models for completion, chat, and fine-tuning. Refact is free for individuals and small teams under the BSD-3-Clause license, with custom installation options available for GPU support. The community and support include contributing guidelines, GitHub issues for bugs, a community forum, Discord for chatting, and Twitter for product news and updates.

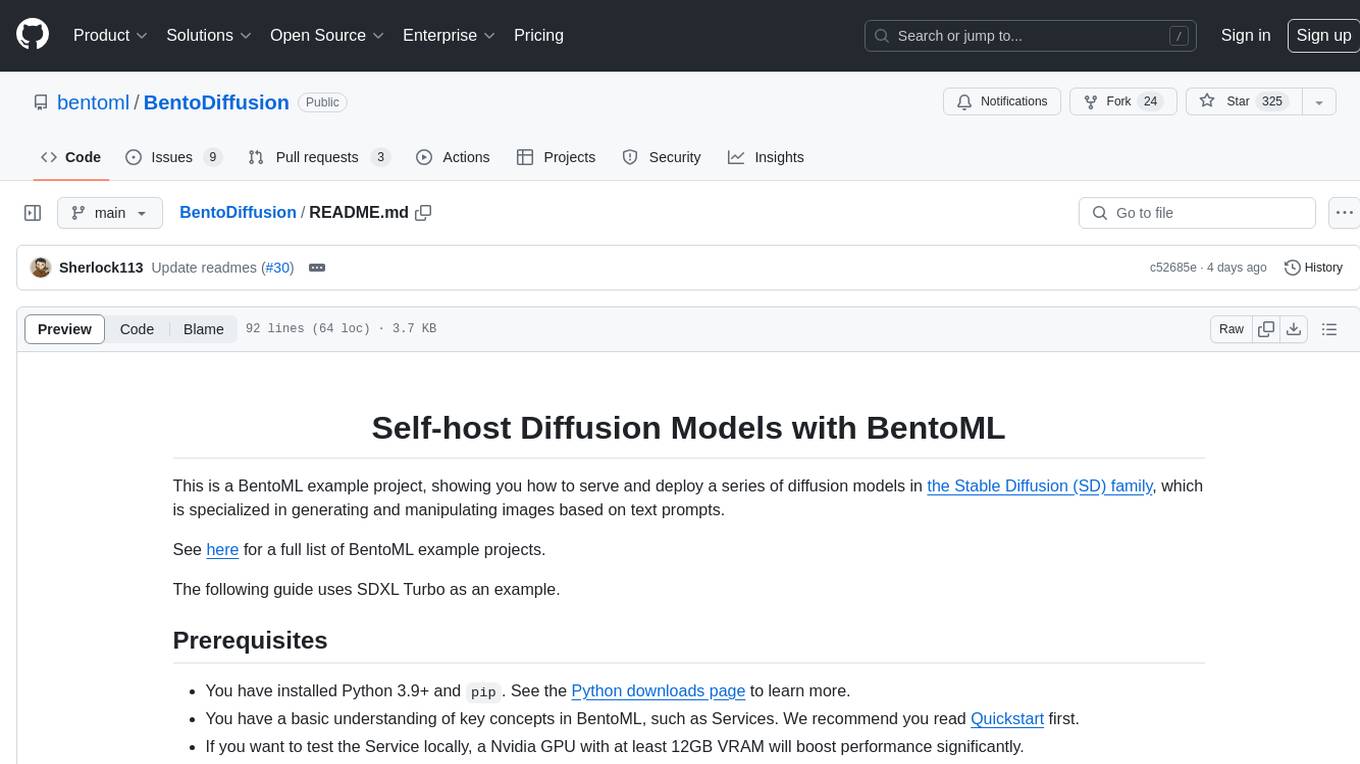

BentoDiffusion

BentoDiffusion is a BentoML example project that demonstrates how to serve and deploy diffusion models in the Stable Diffusion (SD) family. These models are specialized in generating and manipulating images based on text prompts. The project provides a guide on using SDXL Turbo as an example, along with instructions on prerequisites, installing dependencies, running the BentoML service, and deploying to BentoCloud. Users can interact with the deployed service using Swagger UI or other methods. Additionally, the project offers the option to choose from various diffusion models available in the repository for deployment.

petals

Petals is a tool that allows users to run large language models at home in a BitTorrent-style manner. It enables fine-tuning and inference up to 10x faster than offloading. Users can generate text with distributed models like Llama 2, Falcon, and BLOOM, and fine-tune them for specific tasks directly from their desktop computer or Google Colab. Petals is a community-run system that relies on people sharing their GPUs to increase its capacity and offer a distributed network for hosting model layers.

minimal-chat

MinimalChat is a minimal and lightweight open-source chat application with full mobile PWA support that allows users to interact with various language models, including GPT-4 Omni, Claude Opus, and various Local/Custom Model Endpoints. It focuses on simplicity in setup and usage while being fully featured and highly responsive. The application supports features like fully voiced conversational interactions, multiple language models, markdown support, code syntax highlighting, DALL-E 3 integration, conversation importing/exporting, and responsive layout for mobile use.

embedJs

EmbedJs is a NodeJS framework that simplifies RAG application development by efficiently processing unstructured data. It segments data, creates relevant embeddings, and stores them in a vector database for quick retrieval.
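The segment, embed, and store flow described above can be sketched in a few lines. This is a rough illustration only and does not use EmbedJs or its API: `chunk_text`, `embed`, and `InMemoryVectorStore` are hypothetical names, and the bag-of-words "embedding" is a toy stand-in for a real embedding model and vector database.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=8):
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: a sparse term-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical minimal store: keeps (chunk, vector) pairs in a list."""

    def __init__(self):
        self.items = []

    def add_document(self, text, max_words=8):
        # Segment the document, embed each chunk, and store both.
        for chunk in chunk_text(text, max_words):
            self.items.append((chunk, embed(chunk)))

    def search(self, query, k=1):
        # Rank stored chunks by similarity to the query and return the top k.
        query_vec = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(query_vec, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]


store = InMemoryVectorStore()
store.add_document(
    "Vector databases store embeddings for fast similarity search. "
    "Bananas are rich in potassium and grow in tropical climates."
)
top_chunk = store.search("which fruit contains potassium", k=1)[0]
```

In a RAG application, the retrieved chunks would then be passed to a language model as context for answering the query.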

Midori-AI

Midori AI is a cutting-edge initiative dedicated to advancing the field of artificial intelligence through research, development, and community engagement. They focus on creating innovative AI solutions, exploring novel approaches, and empowering users to harness the power of AI. Key areas of focus include cluster-based AI, AI setup assistance, AI development for Discord bots, model serving and hosting, novel AI memory architectures, and Carly - a fully simulated human with advanced AI capabilities. They have also developed the Midori AI Subsystem to streamline AI workloads by providing simplified deployment, standardized configurations, isolation for AI systems, and a growing library of backends and tools.

dash-infer

DashInfer is a C++ runtime tool designed to deliver production-level implementations highly optimized for various hardware architectures, including x86 and ARMv9. It supports Continuous Batching and NUMA-Aware capabilities for CPU, and can fully utilize modern server-grade CPUs to host large language models (LLMs) up to 14B in size. With lightweight architecture, high precision, support for mainstream open-source LLMs, post-training quantization, optimized computation kernels, NUMA-aware design, and multi-language API interfaces, DashInfer provides a versatile solution for efficient inference tasks. It supports x86 CPUs with AVX2 instruction set and ARMv9 CPUs with SVE instruction set, along with various data types like FP32, BF16, and InstantQuant. DashInfer also offers single-NUMA and multi-NUMA architectures for model inference, with detailed performance tests and inference accuracy evaluations available. The tool is supported on mainstream Linux server operating systems and provides documentation and examples for easy integration and usage.

arena-hard-auto

Arena-Hard-Auto-v0.1 is an automatic evaluation tool for instruction-tuned LLMs. It contains 500 challenging user queries. The tool prompts GPT-4-Turbo as a judge to compare models' responses against a baseline model (default: GPT-4-0314). Arena-Hard-Auto employs an automatic judge as a cheaper and faster approximator to human preference. It has the highest correlation and separability to Chatbot Arena among popular open-ended LLM benchmarks. Users can evaluate their models' performance on Chatbot Arena by using Arena-Hard-Auto.
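To make the judge-versus-baseline idea concrete, here is a minimal sketch that turns a list of per-query verdicts into a single win rate. It is an assumption-laden illustration, not the project's code: the `win_rate` function, the verdict labels, and the rule that a tie counts as half a win are all hypothetical, and the tool's actual scoring may differ.

```python
from collections import Counter

VALID_VERDICTS = {"win", "loss", "tie"}  # hypothetical labels from a judge model


def win_rate(verdicts):
    """Fraction of comparisons the candidate wins against the baseline.

    A tie counts as half a win (an assumption made for this sketch).
    """
    unknown = set(verdicts) - VALID_VERDICTS
    if unknown:
        raise ValueError(f"unknown verdict labels: {sorted(unknown)}")
    if not verdicts:
        raise ValueError("no verdicts to score")
    counts = Counter(verdicts)
    return (counts["win"] + 0.5 * counts["tie"]) / len(verdicts)


# One verdict per benchmark query: candidate response vs. baseline response.
verdicts = ["win", "loss", "tie", "win"]
score = win_rate(verdicts)  # (2 + 0.5 * 1) / 4
```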

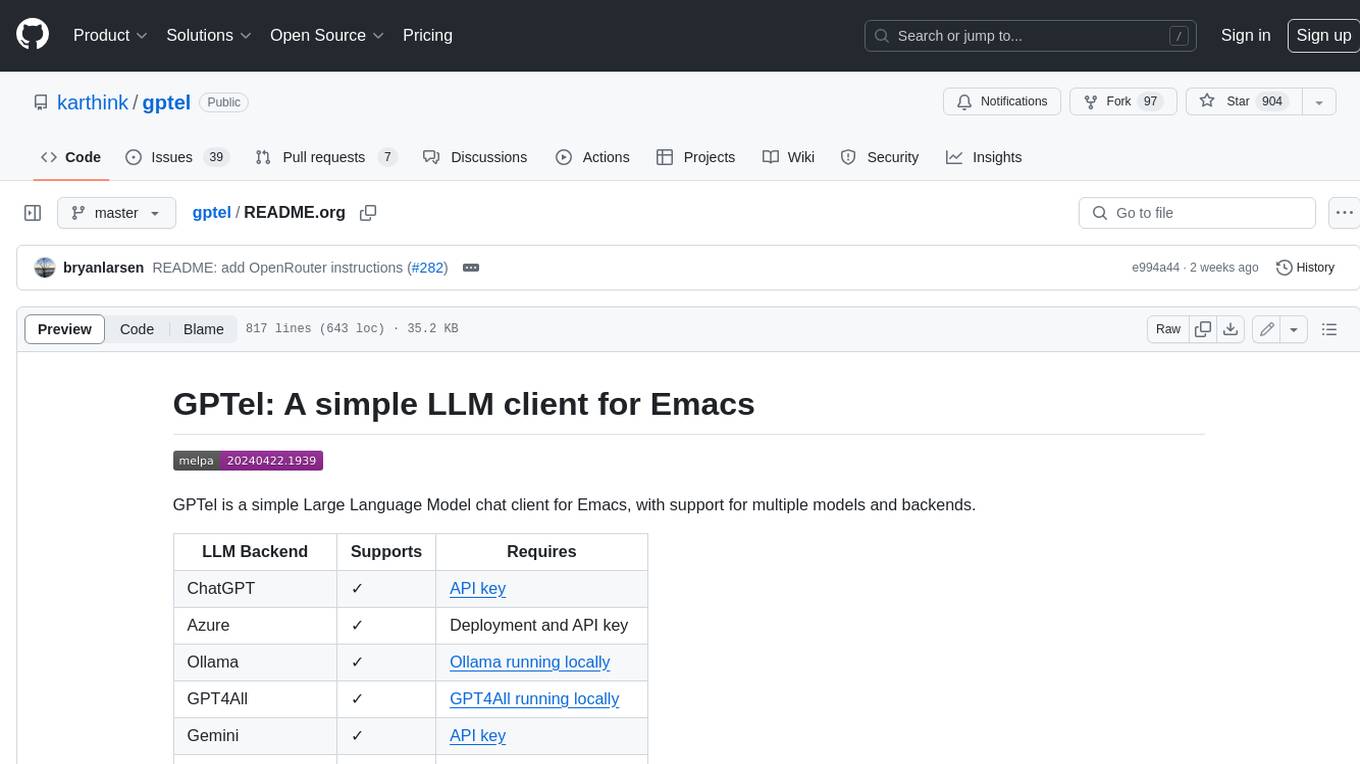

gptel

GPTel is a simple Large Language Model chat client for Emacs, with support for multiple models and backends. It's async and fast, streams responses, and interacts with LLMs from anywhere in Emacs. LLM responses are in Markdown or Org markup. Supports conversations and multiple independent sessions. Chats can be saved as regular Markdown/Org/Text files and resumed later. You can go back and edit your previous prompts or LLM responses when continuing a conversation. These will be fed back to the model. Don't like gptel's workflow? Use it to create your own for any supported model/backend with a simple API.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

llm-hosting-container

The LLM Hosting Container repository provides Dockerfile and associated resources for building and hosting containers for large language models, specifically the HuggingFace Text Generation Inference (TGI) container. This tool allows users to easily deploy and manage large language models in a containerized environment, enabling efficient inference and deployment of language-based applications.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

bittensor

Bittensor is an internet-scale neural network that incentivizes computers to provide access to machine learning models in a decentralized and censorship-resistant manner. It operates through a token-based mechanism where miners host, train, and procure machine learning systems to fulfill verification problems defined by validators. The network rewards miners and validators for their contributions, ensuring continuous improvement in knowledge output. Bittensor allows anyone to participate, extract value, and govern the network without centralized control. It supports tasks such as generating text, audio, images, and extracting numerical representations.

aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. Its features are intended to improve the run-time performance of deep learning models, lowering compute and memory requirements with minimal impact on task accuracy. AIMET is designed to work with PyTorch, TensorFlow, and ONNX models. The project also hosts the AIMET Model Zoo, a collection of popular neural network models optimized for 8-bit inference, and provides recipes for quantizing floating-point models using AIMET.

lunary

Lunary is an open-source observability and prompt platform for Large Language Models (LLMs). It provides a suite of features to help AI developers take their applications into production, including analytics, monitoring, prompt templates, fine-tuning dataset creation, chat and feedback tracking, and evaluations. Lunary is designed to be usable with any model, not just OpenAI, and is easy to integrate and self-host.

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.

aikit

AIKit is a one-stop shop for quickly getting started with hosting, deploying, building, and fine-tuning large language models (LLMs). AIKit offers two main capabilities. Inference: AIKit uses LocalAI, which supports a wide range of inference capabilities and formats. LocalAI provides a drop-in replacement REST API that is compatible with the OpenAI API, so any OpenAI-compatible client, such as Kubectl AI or Chatbot-UI, can send requests to open-source LLMs. Fine-tuning: AIKit offers an extensible fine-tuning interface and supports Unsloth for fast, memory-efficient, and easy fine-tuning.
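As a rough sketch of what "OpenAI API compatible" means in practice, the snippet below builds a chat completion request using only the Python standard library. The `/v1/chat/completions` path follows the OpenAI API convention; the host, port, and model name are placeholders assumed for illustration, and `build_chat_request` is a hypothetical helper, not part of AIKit or LocalAI.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_message):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder address and model name; adjust to match the running server.
request = build_chat_request("http://localhost:8080", "my-local-model", "Hello!")

# To send it against a running server, something like:
# with urllib.request.urlopen(request) as response:
#     reply = json.load(response)
#     print(reply["choices"][0]["message"]["content"])
```

Because the request shape matches the OpenAI API, existing OpenAI client libraries can usually be pointed at such a server by changing only the base URL.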

SemanticKernel.Assistants

This repository contains an assistant proposal for the Semantic Kernel, allowing the usage of assistants without relying on OpenAI Assistant APIs. It runs planners and plugins for the assistants locally, supporting scenarios such as an assistant with Semantic Kernel plugins, multi-assistant conversation, and AutoGen conversation. The Semantic Kernel is a lightweight SDK enabling integration of AI Large Language Models with conventional programming languages, offering features such as semantic functions, native functions, and embeddings-based memory. Users can bring their own model for the assistants and host them locally. The repository includes installation instructions, usage examples, and information on creating new conversation threads with the assistant.

SiriLLama

Siri LLama is an Apple shortcut that allows users to access locally running LLMs through Siri or the shortcut UI on any Apple device connected to the same network as the host machine. It utilizes Langchain and supports open source models from Ollama or Fireworks AI. Users can easily set up and configure the tool to interact with various language models for chat and multimodal tasks. The tool provides a convenient way to leverage the power of language models through Siri or the shortcut interface, enhancing user experience and productivity.

20 - OpenAI GPTs

Escape Room Host

Let's go on an Escape Room adventure! Do you have what it takes to escape?

Impractical Jokers: Shark Tank Edition Game

Host a comedic game show of absurd inventions!

Game Night (After Dark)

Your custom adult game night host! It learns your group's details for a tailored, lively experience. With a focus on sophistication and humor, it creates a safe, fun atmosphere, keeping up with the latest trends in adult entertainment.

Sports Nerds Trivia MCQ

I host a diverse range of sports trivia: Prompt a difficulty to begin

Homes Under The Hammer Bot

Consistent property auction game host with post-purchase renovation insights.