Best AI tools for Deprecate APIs

2 - AI tool Sites

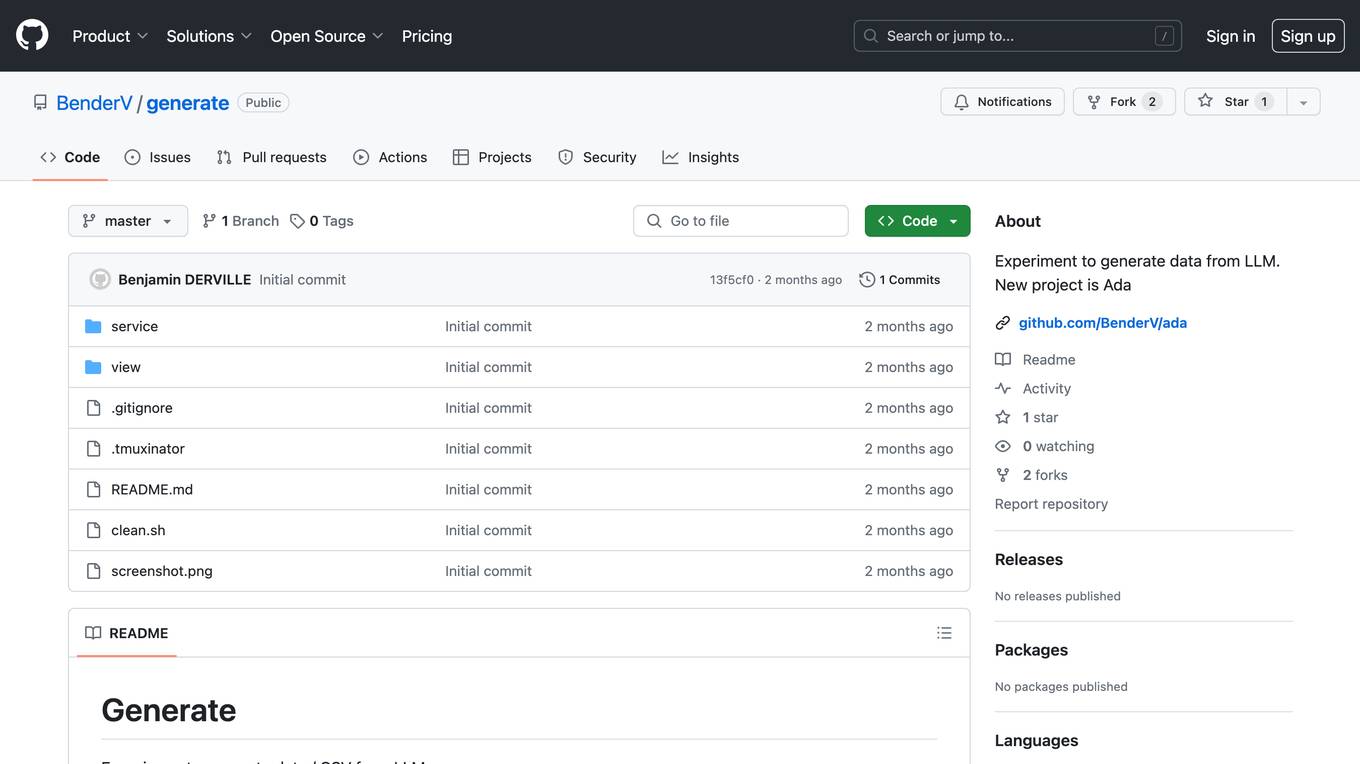

Apiversion.dev

Apiversion.dev is an AI-powered platform for managing API versions. Its features include automatic versioning, version deprecation, and version promotion. It also integrates with popular CI/CD tools to automate the versioning process.
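
As a rough illustration of what version deprecation can look like on the wire, the sketch below marks a deprecated version with the standard `Sunset` response header (RFC 8594). It does not show Apiversion.dev's own API, which this listing does not describe. The `VERSIONS` registry, its dates, and the `deprecation_headers` helper are hypothetical names chosen for the example.

```python
from datetime import datetime, timezone
from email.utils import format_datetime

# Hypothetical registry: version name -> sunset date (None means still current).
VERSIONS = {
    "v1": datetime(2025, 6, 30, 23, 59, 59, tzinfo=timezone.utc),
    "v2": None,
}


def deprecation_headers(version: str) -> dict[str, str]:
    """Return extra response headers for a deprecated version, else an empty dict."""
    sunset = VERSIONS.get(version)
    if sunset is None:
        # Unknown or current version: nothing to announce.
        return {}
    # Sunset (RFC 8594) carries the HTTP-date after which the version
    # is expected to stop responding.
    return {"Sunset": format_datetime(sunset, usegmt=True)}


if __name__ == "__main__":
    for name in VERSIONS:
        print(name, deprecation_headers(name))
```

A server would merge these headers into every response served from a deprecated version, so clients can detect the planned removal without reading release notes.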

0 - Open Source AI Tools

No tools available

0 - OpenAI Gpts

No tools available