Best AI tools for < check hair loss >

20 - AI tool Sites

Am I balding?

This website provides a tool that uses AI to assess hair loss. Users can take a photo of their scalp and the tool will provide a Norwood scale and Diffuse scale score, which are measures of hair loss. The tool can also be used to track hair loss over time. The website also offers a service where users can get their hair checked by experts for $19.

Essay Check

Essay Check is a free AI-powered tool that helps students, teachers, content creators, SEO specialists, and legal experts refine their writing, detect plagiarism, and identify AI-generated content. With its user-friendly interface and advanced algorithms, Essay Check analyzes text to identify grammatical errors, spelling mistakes, instances of plagiarism, and the likelihood that content was written using AI. The tool provides detailed feedback and suggestions to help users improve their writing and ensure its originality and authenticity.

Check Typo

Check Typo is an AI-powered spell-checker that helps you write error-free text. It offers advanced grammar intelligence, global linguistic compatibility, and an intuitive design for streamlined use. With Check Typo, you can elevate your content, embrace global communication, and enjoy precision writing simplified.

Copyright Check AI

Copyright Check AI is a service that helps protect brands from legal disputes related to copyright violations on social media. The software automatically detects copyright infringements on social profiles, reducing the risk of costly legal action. It is used by Heads of Marketing and In-House Counsel at top brands to avoid lawsuits and potential damages. The service offers a done-for-you audit to highlight violations, deliver reports, and provide ongoing monitoring to ensure brand protection.

Fact Check Anything

Fact Check Anything (FCA) is a browser extension that allows users to fact-check information on the internet. It uses AI to verify statements and provide users with reliable sources. FCA is available for all Chromium-based browsers on Windows and macOS, and it works on any website. FCA is a valuable tool for anyone who wants to stay informed and fight misinformation.

Rizz Check

Rizz Check is a swipe game where users can befriend AI celebrities and ask them on dates. The game is built with Rizz, a library created by boredhead00.

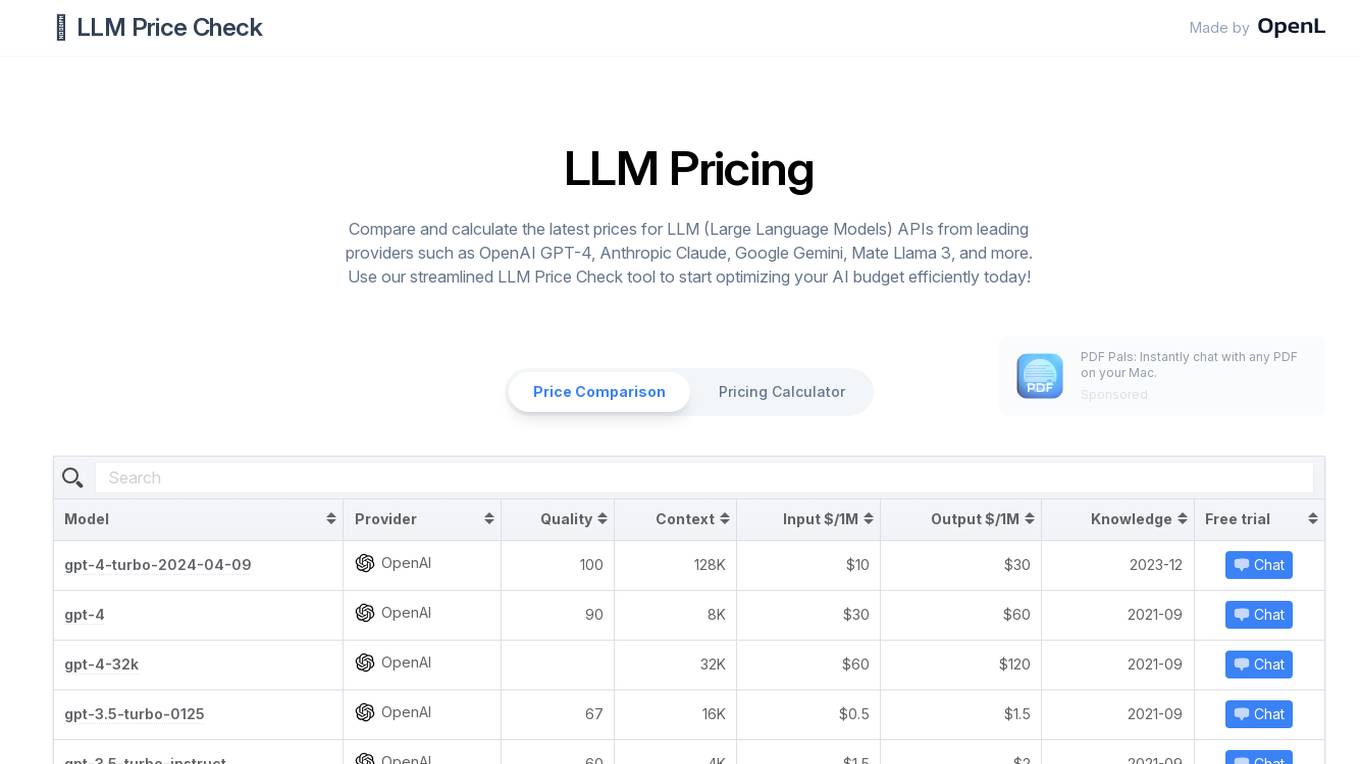

LLM Price Check

LLM Price Check is an AI tool designed to compare and calculate the latest prices for Large Language Models (LLM) APIs from leading providers such as OpenAI, Anthropic, Google, and more. Users can use the streamlined tool to optimize their AI budget efficiently by comparing pricing, sorting by various parameters, and searching for specific models. The tool provides a comprehensive overview of pricing information to help users make informed decisions when selecting an LLM API provider.
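The comparison described above comes down to simple per-token arithmetic. The following is a minimal sketch, not the site's actual implementation: the model names and prices are hypothetical placeholders, and it assumes providers quote separate input and output prices per one million tokens.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelPrice:
    name: str
    input_per_million: float   # USD per 1M input tokens (hypothetical value)
    output_per_million: float  # USD per 1M output tokens (hypothetical value)


def request_cost(price: ModelPrice, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request for the given token counts."""
    total = (input_tokens * price.input_per_million
             + output_tokens * price.output_per_million)
    return total / 1_000_000


def rank_by_cost(prices: List[ModelPrice], input_tokens: int,
                 output_tokens: int) -> List[ModelPrice]:
    """Sort models from cheapest to most expensive for this workload."""
    return sorted(prices, key=lambda p: request_cost(p, input_tokens, output_tokens))


# Hypothetical price table; real prices change often and must be looked up.
PRICES = [
    ModelPrice("model-a", 3.00, 15.00),
    ModelPrice("model-b", 0.50, 1.50),
]

if __name__ == "__main__":
    for p in rank_by_cost(PRICES, input_tokens=2_000, output_tokens=500):
        print(p.name, round(request_cost(p, 2_000, 500), 6))
```

Ranking by the cost of a representative request, rather than by a single headline price, matters because input and output tokens are usually priced differently.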

English and Tagalog Grammar Checker

English and Tagalog Grammar Checker is a free online tool that checks your grammar and spelling. It can also help you improve your writing style and avoid common mistakes. The tool is easy to use and can be used by anyone, regardless of their level of English proficiency.

Is This Image NSFW?

This website provides a tool that allows users to check if an image is safe for work (SFW) or not. The tool uses Stable Diffusion's safety checker, which can be used with arbitrary images, not just AI-generated ones. Users can upload an image or drag and drop it onto the website to check if it is SFW.

PimEyes

PimEyes is an online face search engine that uses face recognition technology to find pictures containing given faces. It is a great tool to audit copyright infringement, protect your privacy, and find people.

Yesil Health AI Health Assistant

Yesil Health provides an AI-powered health assistant that offers evidence-based answers to health-related questions. It covers various topics such as health symptoms, diet and nutrition, skin care, mental health, lab results, chronic conditions, exercise, and women's health. The assistant utilizes artificial intelligence to analyze user input and generate personalized recommendations. It is designed to supplement the professional judgment of healthcare providers and assist users in making informed decisions about their health.

Trinka

Trinka is an AI-powered English grammar checker and language enhancement writing assistant designed for academic and technical writing. It corrects contextual spelling mistakes and advanced grammar errors by providing writing suggestions in real-time. Trinka helps professionals and academics ensure formal, concise, and engaging writing. Trinka's Enterprise solutions come with unlimited access and great customization options to all of Trinka's powerful capabilities.

PaperRater

PaperRater is a free online proofreader and plagiarism checker that uses AI to scan essays and papers for errors and assign them an automated score. It offers grammar checking, writing suggestions, and plagiarism detection. PaperRater is accessible, requiring no downloads or signups, and is used by thousands of students every day in over 140 countries.

Linguix

Linguix is a GPT-4 writing and productivity copilot for teams. It uses artificial intelligence to improve grammar, spelling, and style, and to help users write more clearly and effectively. Linguix is available as a browser extension and a web editor, and it can be used with a variety of online platforms, including Gmail, Google Docs, and OpenAI. Linguix is trusted by over 310,000 users. Its listed recognitions include Google Chrome Store Featured App, Edge Store Featured App, Product Hunt Top #1 writing assistant, G2 reviews website Top proofreading tool, and Linguix for Figma Featured App.

Veriff

Veriff.com is an AI-powered identity verification platform designed for fraud prevention, compliance, and enhancing customer trust. It offers a range of services such as document verification, proof of address, database checks, age validation, KYC onboarding, biometric authentication, AML screening, and more. Veriff combines AI technology with human verification to ensure accurate and efficient identity verification processes, helping businesses build trusted digital communities and drive growth.

EssayGrader.ai

EssayGrader.ai is the original AI essay grader, a leading AI platform designed for teachers to efficiently grade essays. It provides high-quality, specific, and accurate writing feedback, reducing the grading time from 10 minutes to just 30 seconds. The AI-powered tool analyzes essays for grammar, punctuation, spelling, coherence, clarity, and writing style errors. It offers features like bulk uploading, custom rubrics, summarizer, AI detector, and class organization. EssayGrader.ai aims to transform the grading experience for teachers and students by simplifying the grading process and enhancing writing skills.

Bibit AI

Bibit AI is a real estate marketing AI designed to enhance the efficiency and effectiveness of real estate marketing and sales. It can help create listings, descriptions, and property content, and offers a host of other features. Bibit AI describes itself as the world's first AI for real estate, aiming to transform the industry by boosting efficiency and simplifying tasks like listing creation and content generation.

NPI Lookup

NPI Lookup is an AI-powered platform that offers advanced search and validation services for National Provider Identifiers (NPI) in the United States healthcare system. The tool utilizes cutting-edge artificial intelligence technology, including Natural Language Processing (NLP) algorithms and GPT models, to provide comprehensive insights and answers related to NPI profiles. Users can easily search and validate NPI records of doctors, hospitals, and other healthcare providers using everyday language queries, eliminating the need for manual searches. The platform ensures real-time updates and synchronization with the latest NPPES NPI database, offering accurate and up-to-date information for informed decision-making in the healthcare industry.

LanguageTool

LanguageTool is an AI-based spelling, style, and grammar checker that helps correct or paraphrase texts across languages. It offers a range of features including grammar checking, paraphrasing, punctuation correction, style improvement, and more. LanguageTool is available as a browser extension, desktop app, and mobile app, and it supports over 30 languages. It is used by over 2000 organizations, including BMW Group, European Union, Spiegel Magazine, and Deutsche Presse-Agentur (dpa).

20 - Open Source AI Tools

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E... The README covers installation (Bundler, gem install) and usage, including: quickstart, configuration, custom timeout or base URI, extra headers per client, logging, errors, Faraday middleware, Azure, Ollama, counting tokens, models, and examples for chat, streaming chat, vision, JSON mode, functions, edits, embeddings, batches, files, finetunes, assistants, threads and messages, runs, runs involving function tools, image generation (DALL·E 2, DALL·E 3), image edit, image variations, moderations, and Whisper (translate, transcribe, speech). It closes with sections on development, release, contributing, license, and code of conduct.

RPG-DiffusionMaster

This repository contains the official implementation of RPG, a powerful training-free paradigm for text-to-image generation and editing. RPG utilizes proprietary or open-source MLLMs as prompt recaptioner and region planner with complementary regional diffusion. It achieves state-of-the-art results and can generate high-resolution images. The codebase supports diffusers and various diffusion backbones, including SDXL and SD v1.4/1.5. Users can reproduce results with GPT-4, Gemini-Pro, or local MLLMs like miniGPT-4. The repository provides tools for quick start, regional diffusion with GPT-4, and regional diffusion with local LLMs.

airdrop-checker

Airdrop-checker is a tool that helps you check whether you are eligible for any airdrops. It supports multiple airdrops, including Altlayer, Rabby points, Zetachain, Frame, Anoma, Dymension, and MEME. To use the tool, install it using npm and then add your wallet addresses to the files in the addresses folder. Once you have done this, you can run the tool using npm start.

sd-civitai-browser-plus

sd-civitai-browser-plus is an extension for Automatic1111's Stable Diffusion Web UI. It lets you browse models from CivitAI, check for updates, download specific model versions, assign tags to models, access model info quickly, and download models at high speed using Aria2. The extension offers a sleek and intuitive user interface and documents possible solutions for known issues such as frozen downloads. It is actively developed, with regular updates and bug fixes, and feature requests are welcome.

Awesome-LLM-Inference

Awesome-LLM-Inference: A curated list of 📙Awesome LLM Inference Papers with Codes, check 📖Contents for more details. This repo is still updated frequently ~ 👨💻 Welcome to star ⭐️ or submit a PR to this repo!

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

json_repair

This simple package can be used to fix an invalid JSON string. To see all the cases in which this package works, check out the unit tests. Inspired by https://github.com/josdejong/jsonrepair

Motivation: Some LLMs are a bit iffy when it comes to returning well-formed JSON data; sometimes they skip a parenthesis and sometimes they add some words to it, because that's what an LLM does. Luckily, the mistakes LLMs make are simple enough to be fixed without destroying the content. I searched for a lightweight Python package that was able to reliably fix this problem but couldn't find any. So I wrote one.

How to use: `from json_repair import repair_json`, then `good_json_string = repair_json(bad_json_string)`. If the string was super broken, this will return an empty string. You can use this library to completely replace `json.loads()`: `import json_repair`, then `decoded_object = json_repair.loads(json_string)`, or just `decoded_object = json_repair.repair_json(json_string, return_objects=True)`.

Read JSON from a file or file descriptor: JSON repair also provides a drop-in replacement for `json.load()`. Open the file with `file_descriptor = open(fname, 'rb')` inside a `try` / `except OSError` block, then, within `with file_descriptor:`, call `decoded_object = json_repair.load(file_descriptor)`. Another method reads from a file directly: `decoded_object = json_repair.from_file(json_file)`, wrapped in `try` / `except OSError` / `except IOError`. Keep in mind that the library will not catch any IO-related exception; those will need to be managed by you.

Performance considerations: If you find this library too slow because it is using `json.loads()`, you can skip that by passing `skip_json_loads=True` to `repair_json`, like `good_json_string = repair_json(bad_json_string, skip_json_loads=True)`. I made a choice of not using any fast JSON library to avoid having any external dependency, so that anybody can use it regardless of their stack. Some rules of thumb:
- Setting `return_objects=True` will always be faster because the parser returns an object already and it doesn't have to serialize that object to JSON
- `skip_json_loads` is faster only if you 100% know that the string is not valid JSON
- If you are having issues with escaping, pass the string as a **raw** string like: `r"string with escaping\""`

Adding to requirements: Please pin this library only on the major version! We use TDD and strict semantic versioning; there will be frequent updates and no breaking changes in minor and patch versions. To ensure that you only pin the major version of this library in your `requirements.txt`, specify the package name followed by the major version and a wildcard for minor and patch versions. For example: `json_repair==0.*`. In this example, any version that starts with `0.` will be acceptable, allowing for updates on minor and patch versions.

How it works: This module will parse the JSON file following the BNF definition:
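To make the kind of repair described in this entry concrete, here is a minimal standard-library sketch of one narrow case: closing a JSON string that was cut off mid-output. It is not the json_repair implementation, and `close_truncated_json` is a hypothetical helper name; it only closes open strings, arrays, and objects and drops a trailing comma, so inputs such as a dangling key with no value still fail to parse.

```python
import json


def close_truncated_json(text: str) -> str:
    """Append the quotes and brackets needed to close a truncated JSON string."""
    closers = []        # closing brackets still owed, innermost last
    in_string = False
    escaped = False
    for ch in text:
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == '"':
                in_string = False
        elif ch == '"':
            in_string = True
        elif ch in "{[":
            closers.append("}" if ch == "{" else "]")
        elif ch in "}]" and closers:
            closers.pop()

    repaired = text
    if in_string:
        if escaped:
            repaired = repaired[:-1]  # drop a dangling backslash
        repaired += '"'
    else:
        # A trailing comma before a closing bracket is invalid JSON.
        repaired = repaired.rstrip().rstrip(",")
    return repaired + "".join(reversed(closers))


if __name__ == "__main__":
    broken = '{"name": "Ada", "tags": ["math", "comp'
    print(json.loads(close_truncated_json(broken)))
```

A stack of pending closers is enough here because JSON nesting is strictly bracket-balanced; anything beyond truncation (stray prose, missing quotes around keys) needs a real parser like the one this package provides.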

imodels

Python package for concise, transparent, and accurate predictive modeling. All sklearn-compatible and easy to use. _For interpretability in NLP, check out our new package: imodelsX._

documentation

Vespa documentation is served using GitHub Project pages with Jekyll. To edit documentation, check out and work off the master branch in this repository. Documentation is written in HTML or Markdown. Use a single Jekyll template, `_layouts/default.html`, to add header, footer and layout. Install bundler, then run `bundle install` and `bundle exec jekyll serve --incremental --drafts --trace` to set up a local server at localhost:4000 to see the pages as they will look when served. If you get strange errors on `bundle install`, try:
* `export PATH="/usr/local/opt/[email protected]/bin:$PATH"`
* `export LDFLAGS="-L/usr/local/opt/[email protected]/lib"`
* `export CPPFLAGS="-I/usr/local/opt/[email protected]/include"`
* `export PKG_CONFIG_PATH="/usr/local/opt/[email protected]/lib/pkgconfig"`

The output will highlight rendering and other problems when the server starts. Alternatively, use the docker image `jekyll/jekyll` to run the local server on Mac: `docker run -ti --rm --name doc --publish 4000:4000 -e JEKYLL_UID=$UID -v $(pwd):/srv/jekyll jekyll/jekyll jekyll serve`, or on RHEL 8: `podman run -it --rm --name doc -p 4000:4000 -e JEKYLL_ROOTLESS=true -v "$PWD":/srv/jekyll:Z docker.io/jekyll/jekyll jekyll serve`. The layout is written in denali.design; see `_layouts/default.html` for usage. Please do not add custom style sheets, as they are harder to maintain.

WavCraft

WavCraft is an LLM-driven agent for audio content creation and editing. It uses an LLM to connect various audio expert models and DSP functions. With WavCraft, users can edit the content of given audio clip(s) conditioned on text input, create an audio clip from text input, prompt a script setting and let the model write the script and create the sound, and check whether an audio file was synthesized by WavCraft.

glm-free-api

GLM AI Free Service provides high-speed streaming output, multi-turn dialogue support, intelligent agent dialogue support, AI drawing support, online search support, long document interpretation support, and image parsing support. It offers zero-configuration deployment, multi-token support, and automatic cleanup of session traces. It is fully compatible with the ChatGPT interface. The repository also links to six other free APIs for services such as Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and checking whether a refresh token is still alive.

only_train_once

Only Train Once (OTO) is an automatic, architecture-agnostic DNN training and compression framework that allows users to train a general DNN from scratch or a pretrained checkpoint to achieve high performance and slimmer architecture simultaneously in a one-shot manner without fine-tuning. The framework includes features for automatic structured pruning and erasing operators, as well as hybrid structured sparse optimizers for efficient model compression. OTO provides tools for pruning zero-invariant group partitioning, constructing pruned models, and visualizing pruning and erasing dependency graphs. It supports the HESSO optimizer and offers a sanity check for compliance testing on various DNNs. The repository also includes publications, installation instructions, quick start guides, and a roadmap for future enhancements and collaborations.

femtoGPT

femtoGPT is a pure Rust implementation of a minimal Generative Pretrained Transformer. It can be used for both inference and training of GPT-style language models using CPUs and GPUs. The tool is implemented from scratch, including tensor processing logic and training/inference code of a minimal GPT architecture. It is a great start for those fascinated by LLMs and wanting to understand how these models work at deep levels. The tool uses random generation libraries, data-serialization libraries, and a parallel computing library. It is relatively fast on CPU and correctness of gradients is checked using the gradient-check method.
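The gradient-check method mentioned above compares a hand-written (analytic) gradient against a finite-difference estimate. Here is a minimal sketch in Python, assuming a central-difference estimate and a small toy loss; femtoGPT itself is written in Rust and its actual check may differ, so the function names below are illustrative only.

```python
from typing import Callable, List


def numerical_gradient(f: Callable[[List[float]], float],
                       x: List[float], eps: float = 1e-5) -> List[float]:
    """Estimate df/dx_i with central differences: (f(x+e) - f(x-e)) / (2*eps)."""
    grad = []
    for i in range(len(x)):
        plus, minus = list(x), list(x)
        plus[i] += eps
        minus[i] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad


def max_relative_error(analytic: List[float], numeric: List[float]) -> float:
    """Largest element-wise relative difference between two gradients."""
    return max(abs(a - n) / max(abs(a), abs(n), 1e-12)
               for a, n in zip(analytic, numeric))


def loss(w: List[float]) -> float:
    """Toy loss used only for this illustration."""
    return w[0] ** 2 + 3 * w[0] * w[1]


def loss_grad(w: List[float]) -> List[float]:
    """Hand-derived gradient of the toy loss."""
    return [2 * w[0] + 3 * w[1], 3 * w[0]]


if __name__ == "__main__":
    point = [1.5, -2.0]
    err = max_relative_error(loss_grad(point), numerical_gradient(loss, point))
    print("max relative error:", err)
```

A small relative error indicates the analytic gradient agrees with the numerical one; a large error usually points to a bug in the backward pass.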

open-webui

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our Open WebUI Documentation.

guardrails

Guardrails is a Python framework that helps build reliable AI applications by performing two key functions: 1. Guardrails runs Input/Output Guards in your application that detect, quantify and mitigate the presence of specific types of risks. To look at the full suite of risks, check out Guardrails Hub. 2. Guardrails helps you generate structured data from LLMs.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

deepdoctection

**deep**doctection is a Python library that orchestrates document extraction and document layout analysis tasks using deep learning models. It does not implement models but enables you to build pipelines using highly acknowledged libraries for object detection, OCR and selected NLP tasks, and provides an integrated framework for fine-tuning, evaluating and running models. For more specific text processing tasks, use one of the many other great NLP libraries. **deep**doctection focuses on applications and is made for those who want to solve real-world problems related to document extraction from PDFs or scans in various image formats. **deep**doctection provides model wrappers of supported libraries for various tasks to be integrated into pipelines. Its core function does not depend on any specific deep learning library. Selected models for the following tasks are currently supported:
* Document layout analysis including table recognition in Tensorflow with **Tensorpack**, or PyTorch with **Detectron2**,
* OCR with support of **Tesseract**, **DocTr** (Tensorflow and PyTorch implementations available) and a wrapper to an API for a commercial solution,
* Text mining for native PDFs with **pdfplumber**,
* Language detection with **fastText**,
* Deskewing and rotating images with **jdeskew**.
* Document and token classification with all LayoutLM models provided by the **Transformer library**. (Yes, you can use any LayoutLM model with any of the provided OCR or pdfplumber tools straight away!)
* Table detection and table structure recognition with **table-transformer**.
* There is a small dataset for token classification available and a lot of new tutorials showing how to train and evaluate this dataset using LayoutLMv1, LayoutLMv2, LayoutXLM and LayoutLMv3.
* Comprehensive configuration of the **analyzer**, like choosing different models, output parsing, and OCR selection. Check this notebook or the docs for more information.
* Document layout analysis and table recognition now run with **Torchscript** (CPU) as well, and **Detectron2** is no longer required for basic inference.
* [**new**] More angle predictors for determining the rotation of a document based on **Tesseract** and **DocTr** (not contained in the built-in Analyzer).
* [**new**] Token classification with **LiLT** via **transformers**. We have added a model wrapper for token classification with LiLT and added some LiLT models to the model catalog that look promising, especially if you want to train a model on non-English data. The training script for LayoutLM can be used for LiLT as well, and we will be providing a notebook on how to train a model on a custom dataset soon.

On top of that, **deep**doctection provides methods for pre-processing inputs to models, like cropping or resizing, and for post-processing results, like validating duplicate outputs, relating words to detected layout segments or ordering words into contiguous text. You will get an output in JSON format that you can customize even further yourself. Have a look at the **introduction notebook** in the notebook repo for an easy start. Check the **release notes** for recent updates. **deep**doctection or its support libraries provide pre-trained models that are in most cases available at the **Hugging Face Model Hub** or that will be automatically downloaded once requested. For instance, you can find pre-trained object detection models from the Tensorpack or Detectron2 framework for coarse layout analysis, table cell detection and table recognition. Training is a substantial part of getting pipelines ready for a specific domain, be it document layout analysis, document classification or NER. **deep**doctection provides training scripts for models that are based on trainers developed from the library that hosts the model code.

Moreover, **deep**doctection hosts code for some well-established datasets like **Publaynet**, which makes it easy to experiment. It also contains mappings from widely used data formats like COCO, and it has a dataset framework (akin to **datasets**) so that setting up training on a custom dataset becomes very easy. **This notebook** shows you how to do this. **deep**doctection comes equipped with a framework that allows you to evaluate predictions of a single model or multiple models in a pipeline against some ground truth. Check again **here** how it is done. Once a pipeline is set up, it takes a few lines of code to instantiate it, and after a for loop all pages will have been processed through the pipeline.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic, so start wherever you like! Lessons are labeled either "Learn" lessons explaining a Generative AI concept or "Build" lessons that explain a concept and provide code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**
* Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_
* Basic knowledge of Python or TypeScript is helpful - For absolute beginners, check out these Python and TypeScript courses.
* A GitHub account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you with setting up your development environment. Don't forget to star (🌟) this repo to find it easier later.

## 🧠 Ready to Deploy?
If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

## 🗣️ Meet Other Learners, Get Support
Join our official AI Discord server to meet and network with other learners taking this course and get support.

## 🚀 Building a Startup?
Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

## 🙏 Want to help?
Do you have suggestions or found spelling or code errors? Raise an issue or create a pull request.

## 📂 Each lesson includes:
* A short video introduction to the topic
* A written lesson located in the README
* Python and TypeScript code samples supporting Azure OpenAI and OpenAI API
* Links to extra resources to continue your learning

## 🗃️ Lessons
| | Lesson Link | Description | Additional Learning |
| :-: | :-: | :-: | --- |
| 00 | Course Setup | **Learn:** How to Setup Your Development Environment | Learn More |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. | Learn More |
| 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case | Learn More |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. | Learn More |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. | Learn More |
| 08 | Building Search Apps Vector Databases | **Build:** A search application that uses Embeddings to search for data. | Learn More |
| 09 | Building Image Generation Applications | **Build:** An image generation application | Learn More |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More |
| 11 | Integrating External Applications with Function Calling | **Build:** What is function calling and its use cases for applications | Learn More |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. | Learn More |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Database | Learn More |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs | Learn More |

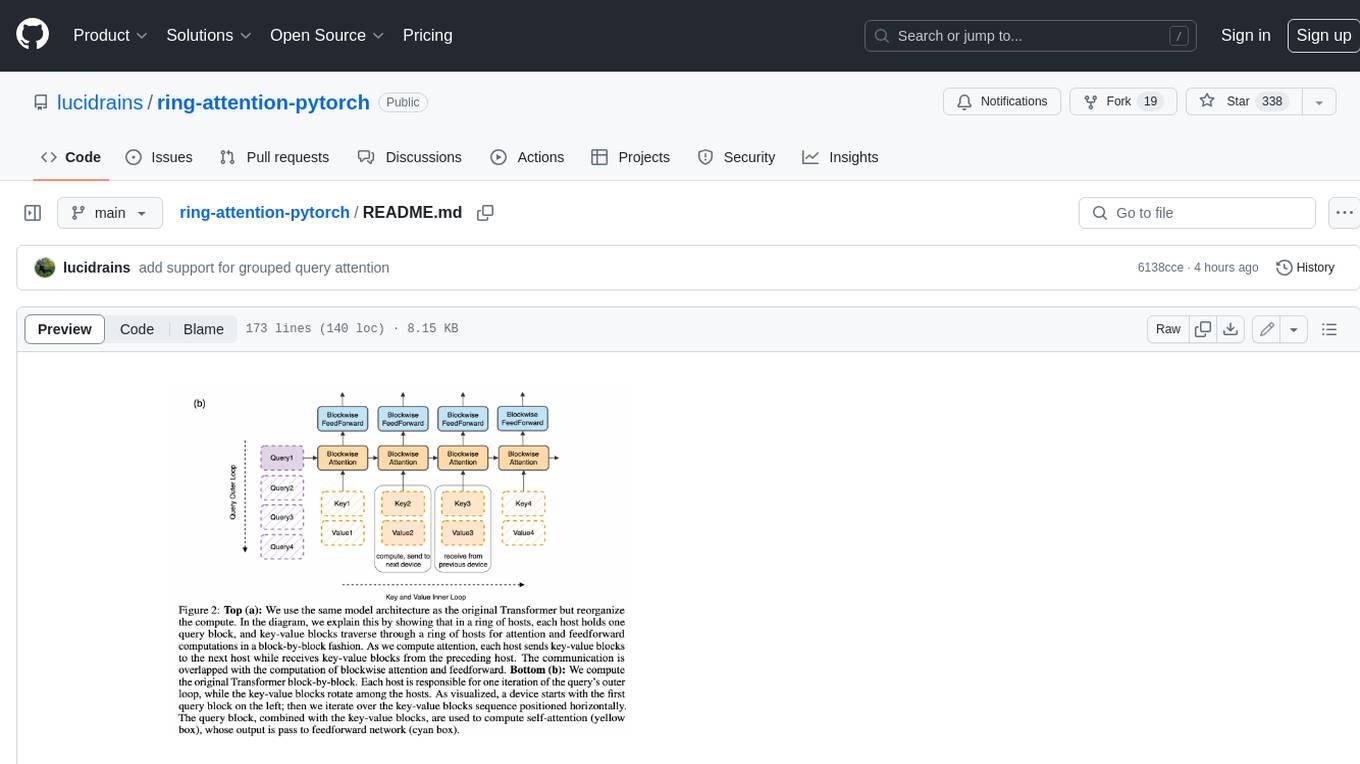

ring-attention-pytorch

This repository contains an implementation of Ring Attention, a technique for processing large sequences in transformers. Ring Attention splits the data across the sequence dimension and applies ring reduce to the processing of the tiles of the attention matrix, similar to flash attention. It also includes support for Striped Attention, a follow-up paper that permutes the sequence for better workload balancing for autoregressive transformers, and grouped query attention, which saves on communication costs during the ring reduce. The repository includes a CUDA version of the flash attention kernel, which is used for the forward and backward passes of the ring attention. It also includes logic for splitting the sequence evenly among ranks, either within the attention function or in the external ring transformer wrapper, and basic test cases with two processes to check for equivalent output and gradients.
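The tile-by-tile processing described above relies on a running ("online") softmax, so that attention over a long sequence can be accumulated one block of keys and values at a time. The sketch below shows that accumulation for a single query vector in plain Python. It is a single-process illustration under simplifying assumptions, not the repository's code: there is no ring communication, no CUDA kernel, and the blocks merely stand in for the sequence shards held by different ranks.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention_full(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Reference: softmax(q . k) weighted sum over all values at once."""
    scores = [dot(q, k) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(dim)]


def attention_blockwise(q: Vector, keys: List[Vector], values: List[Vector],
                        block_size: int) -> Vector:
    """Same result, but visiting keys/values one block at a time."""
    running_max = float("-inf")
    denom = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), block_size):
        k_block = keys[start:start + block_size]
        v_block = values[start:start + block_size]
        scores = [dot(q, k) for k in k_block]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated under the old maximum.
        scale = math.exp(running_max - new_max)
        denom *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, v_block):
            w = math.exp(s - new_max)
            denom += w
            acc = [a + w * x for a, x in zip(acc, v)]
        running_max = new_max
    return [a / denom for a in acc]


if __name__ == "__main__":
    q = [0.3, -0.2]
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -1.0]]
    values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
    print(attention_full(q, keys, values))
    print(attention_blockwise(q, keys, values, block_size=2))
```

Because only the running maximum, denominator, and weighted sum are carried between blocks, each block can be processed wherever its keys and values live, which is what makes passing blocks around a ring feasible.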

node_characterai

Node.js client for the unofficial Character AI API, an awesome website which brings characters to life with AI! This repository is inspired by RichardDorian's unofficial node API. However, I found that project hard to use and not very stable, and it has since been archived, so I remade it in JavaScript. This project is not affiliated with Character AI in any way! It is a community project. The purpose of this project is to help build projects powered by Character AI. If you like this project, please check out their website.

20 - OpenAI GPTs

Credit Score Check

Guides on checking and monitoring credit scores, with a financial and informative tone.

Backloger.ai - Requirements Health Check

Drop in any requirements; I'll reduce ambiguity using a requirements health check.

Website Worth Calculator - Check Website Value

Calculate website worth by analyzing monthly revenue, using industry-standard valuation methods to provide approximate, informative value estimates.

News Bias Corrector

Balances out bias and researches live reports to give you a more balanced view (Paste in the text you want to check)

Service Rater

Helps check and provide feedback on service providers like contractors and plumbers.

Are You Weather Dependent or Not?

A mental health self-check tool assessing weather dependency. Powered by WeatherMind

AI Essay Writer

ChatGPT Essay Writer helps you to write essays with OpenAI. Generate Professional Essays with Plagiarism Check, Formatting, Cost Estimation & More.

Biblical Insights Hub & Navigator

Provides in-depth insights based on familiarity with the historical & cultural context of biblical times including an understanding of theological concepts. It's a Bible Scholar in your pocket!!! Verify Before You Trust (VBYT): Always Double-Check ChatGPT's Insights!

A/B Test GPT

Calculate the results of your A/B test and check whether the result is statistically significant or due to chance.
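One common way to make the significance check described above is a two-proportion z-test. The sketch below assumes that test, a two-sided alternative, and a 0.05 threshold; the GPT's actual method is not stated in the listing, and the visitor and conversion counts are made-up illustration values.

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up counts: variant A converts 200 of 1000, variant B 260 of 1000.
    z, p = two_proportion_z_test(200, 1000, 260, 1000)
    verdict = "statistically significant" if p < 0.05 else "could be due to chance"
    print(f"z = {z:.3f}, p = {p:.4f}: {verdict}")
```

The normal approximation used here is reasonable for large samples; with very few conversions an exact test would be a safer choice.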

Anchorage Code Navigator

EXPERIMENT - Friendly guide for navigating Anchorage Municipal Code - Double Check info

Low FODMAP Chef

Expert in crafting personalized low FODMAP recipes with representative images. Cross check all ingredients & low FODMAP servings.

REI Mentor | Your Real Estate Investing Guide 🏦

A Mentor in Real Estate Investing. Check www.2060.us for more details.