Best AI tools for Cache Instructions

5 - AI tool Sites

Default Web Site Page

This site shows a default error page, indicating that the site is not accessible. The page lists possible causes for the website owner, such as IP address changes, DNS settings, server misconfigurations, or server migrations. It also suggests steps that may resolve the issue, including clearing the DNS cache and verifying Apache settings. The copyright notice at the bottom indicates the page is powered by cPanel, L.L.C.

ai2page.com

ai2page.com is a website that appears to be experiencing technical difficulties. The page does not load and shows an HTTP error 436 message. Users who encounter this issue are advised to reload the page and to contact the site owner if the problem persists.

DataGPT

DataGPT is a conversational AI data analyst that provides instant analysis and answers to data-related questions in everyday language. It connects to a data source and automatically defines and suggests the most relevant metrics and dimensions. DataGPT's core analytics engine runs detailed analysis against all of the data: checking every segment, identifying anomalies, detecting outliers, performing funnel analytics, and conducting comparative analysis. An AI-powered onboarding agent guides users through the setup process, and the Lightning Cache is described as boosting query speeds 100x over current data warehouses. The Data Navigator lets users explore any part of their data in a few clicks. DataGPT aims to replace specialized dashboards with an 'ask me anything' interface so that decision-makers can access essential insights on demand.

Application Error

The website is showing an application error, which indicates a technical issue with the application itself. The problem may be temporary and may need to be fixed by the website's developers. Application errors can have various causes, such as bugs in the code, server issues, or database problems. Users who encounter this error can try refreshing the page, clearing their cache, or contacting the website's support team.

imgix

imgix is an end-to-end visual media solution for creating, transforming, and optimizing images and videos. It simplifies complex visual media technology, improves web performance, and supports responsive design. Used by companies worldwide, imgix offers features such as easy cloud storage connection, intelligent compression, fast loading through a globally distributed CDN, over 150 image operations, video streaming, asset management, intuitive analytics, and SDKs and tools.

1 - Open Source AI Tools

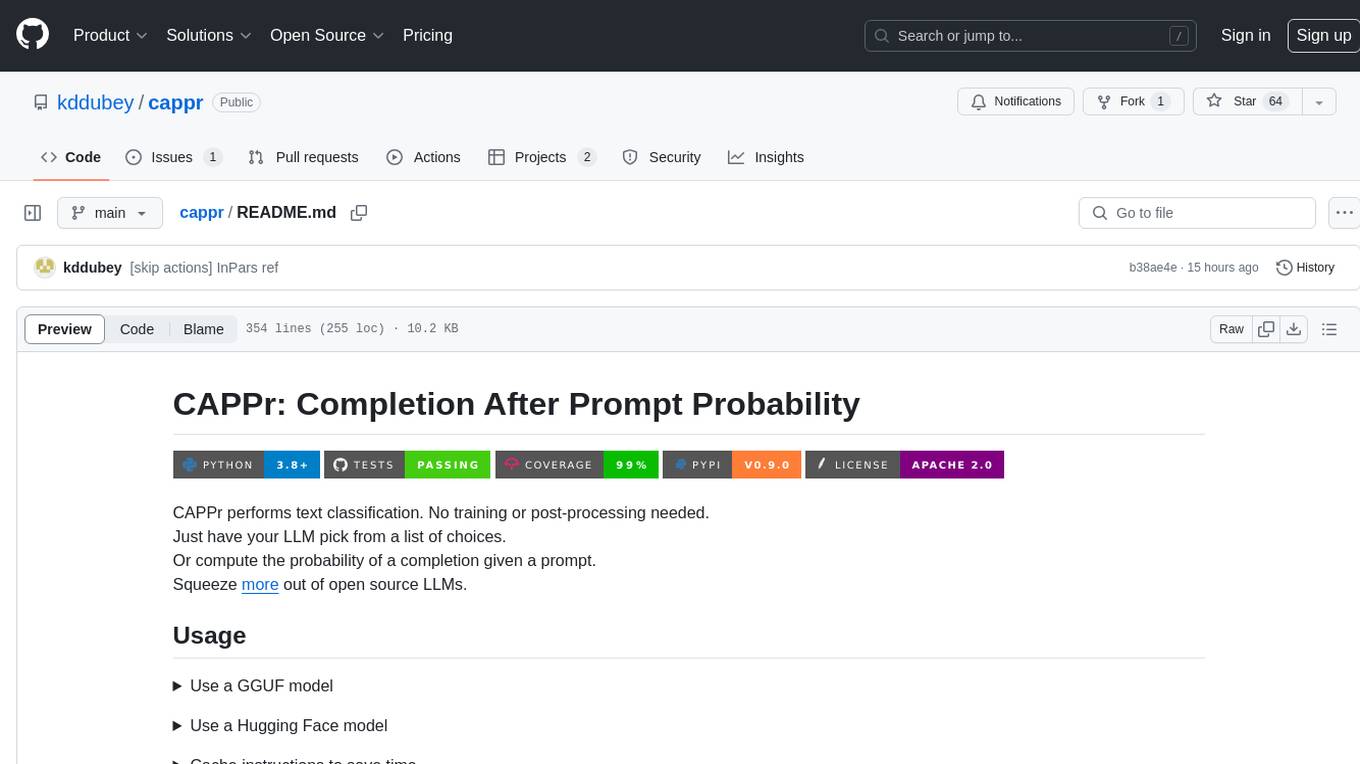

cappr

CAPPr is a tool for text classification that does not require training or post-processing. It lets users have their language models pick from a list of choices, or compute the probability of a completion given a prompt. The tool aims to help users get more out of open source language models by simplifying the text classification process. CAPPr works with GGUF models, Hugging Face models, and models from the OpenAI API. It also supports tasks such as caching instructions, extracting final answers from step-by-step completions, and running predictions in batches with different sets of completions.
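
The idea of picking a choice by computing the probability of each completion given a prompt can be sketched without a real model. The snippet below is a minimal illustration under stated assumptions and is not CAPPr's actual API: `toy_token_log_prob` is a hypothetical stand-in for a language model's token scorer, and the function names `completion_log_prob`, `predict_proba`, and `predict` are chosen only for this example.

```python
import math
from typing import Callable, List

# Hypothetical scorer type: returns log P(token | context tokens).
# A real setup would query a language model here instead.
TokenLogProb = Callable[[List[str], str], float]


def toy_token_log_prob(context: List[str], token: str) -> float:
    """Toy stand-in for a language model (an assumption for illustration)."""
    cue_to_label = {"loved": "positive", "hated": "negative"}
    favored = {cue_to_label[word] for word in context if word in cue_to_label}
    return math.log(0.8 if token in favored else 0.1)


def completion_log_prob(
    prompt: str, completion: str, token_log_prob: TokenLogProb
) -> float:
    """Sum token log-probabilities of the completion, conditioned on the prompt."""
    context = prompt.split()
    total = 0.0
    for token in completion.split():
        total += token_log_prob(context, token)
        context.append(token)  # later tokens also condition on earlier ones
    return total


def predict_proba(
    prompt: str, completions: List[str], token_log_prob: TokenLogProb
) -> List[float]:
    """Normalize completion scores into a distribution over the choices."""
    scores = [completion_log_prob(prompt, c, token_log_prob) for c in completions]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(score - top) for score in scores]
    normalizer = sum(weights)
    return [weight / normalizer for weight in weights]


def predict(
    prompt: str, completions: List[str], token_log_prob: TokenLogProb
) -> str:
    """Return the completion with the highest probability."""
    probs = predict_proba(prompt, completions, token_log_prob)
    best_index = max(range(len(probs)), key=probs.__getitem__)
    return completions[best_index]


prompt = "I loved this movie. Sentiment:"
choices = ["positive", "negative"]
probs = predict_proba(prompt, choices, toy_token_log_prob)
label = predict(prompt, choices, toy_token_log_prob)
```

Because the output is always one of the supplied choices, no parsing or post-processing of generated text is needed, which matches the motivation described above.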

2 - OpenAI GPTs

TYPO3 GPT

Specialist for technical and editorial TYPO3 support. // FEATURES: Optional browsing via an external API with 'web: search query' and optimized GitHub access.