Best AI Tools for Safety Consultants


20 AI Tool Sites

AI Safety Initiative

The AI Safety Initiative is a coalition of experts that develops AI guidance and tools to help organizations deploy safe, responsible, and compliant AI solutions. Drawing on vendor-neutral research, training programs, and global industry experts, the initiative publishes AI best practices and offers certifications, training, and resources to help organizations navigate AI governance, compliance, and security. Its focus areas are AI technology, risk, governance, compliance, controls, and organizational responsibilities.

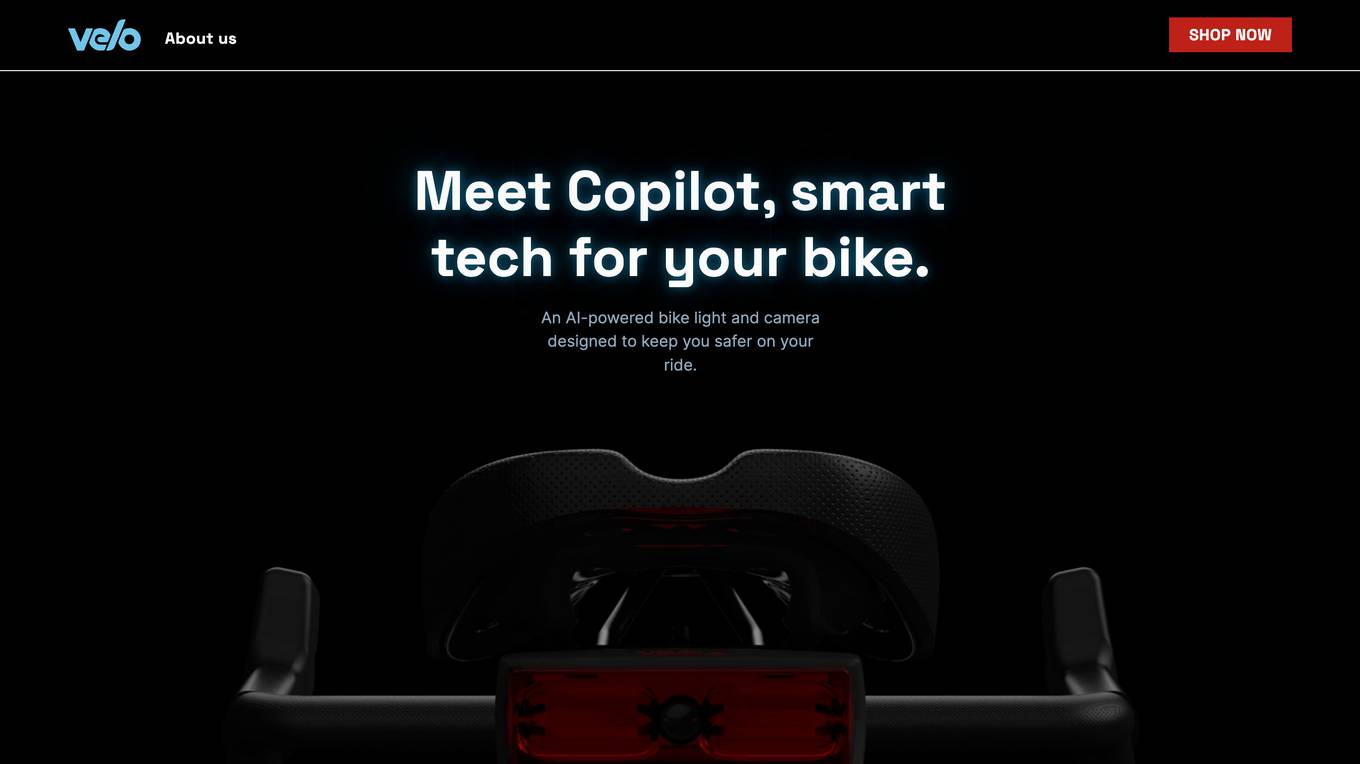

Copilot

Copilot is a smart safety application for cyclists. It uses artificial intelligence to continuously monitor the road behind the rider and issues audible and visual alerts about approaching vehicles and other potential dangers, with the aim of preventing crashes. Its Ultimate Protection System records rides as video evidence and adds customizable audio alerts and reactive light patterns that communicate with drivers. By anticipating hazards before they occur, Copilot aims to offer cyclists a comprehensive safety solution.

Kami Home

Kami Home is an AI-powered home security application. It offers smart alerts, secure cloud video storage, and a Pro Security Alarm system with 24/7 emergency response. Its AI vision detects humans, vehicles, and animals so that users receive custom alerts for relevant activity. With features such as Fall Detect for seniors living at home, Kami Home aims to protect families and provide peace of mind.

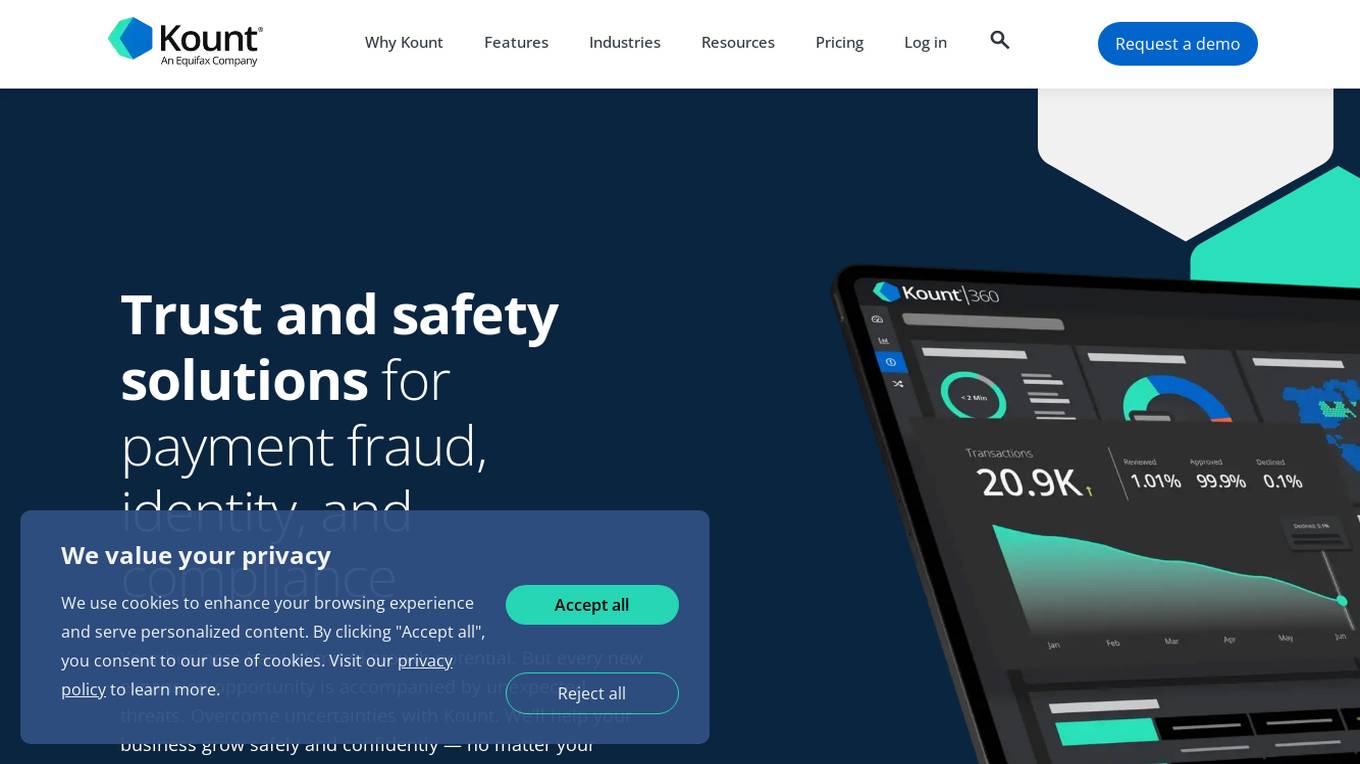

Kount

Kount is a trust and safety platform that offers fraud detection, chargeback management, identity verification, and compliance solutions. Using artificial intelligence and machine learning, Kount gives businesses data and customizable policies to protect against a range of threats. The platform serves industries such as e-commerce, healthcare, online learning, and gaming, and offers solutions tailored to each business's needs.

Turing AI

Turing AI is a cloud-based, AI-powered video security system. Its video surveillance products and solutions aim to improve safety, security, and operations, with smart video search, real-time alerts, instant video sharing, and hardware that works with a variety of cameras. With flexible licensing options and integration with third-party devices, Turing AI is trusted by customers across many industries.

DisplayGateGuard

DisplayGateGuard is an AI-powered brand safety and suitability provider that helps advertisers choose the right placements, isolate fraudulent websites, and enhance brand safety. By leveraging artificial intelligence, the platform offers curated inclusion and exclusion lists to provide deeper insights into the environments and contexts where ads are shown, ensuring campaigns reach the right audience effectively.

Aura

Aura is an all-in-one digital safety platform that uses artificial intelligence (AI) to protect your family online. It offers a wide range of features, including financial fraud protection, identity theft protection, VPN & online privacy, antivirus, password manager & smart vault, parental controls & safe gaming, and spam call protection. Aura is easy to use and affordable, and it comes with a 60-day money-back guarantee.

MarqVision

MarqVision is a modern brand protection platform that offers solutions for full marketplace coverage, anti-counterfeit measures, impersonation monitoring, offline services including revenue recovery, and content protection services such as pirated content detection and illegal listings removal. The platform also provides trademark management services and industry-specific solutions for beauty, fashion, auto, pharmaceuticals, food & beverage, network marketing, and natural health products.

Seventh Sense

Seventh Sense is an AI company that provides solutions for secure, private identity verification. Its technologies, including SenseCrypt, OpenCV FR, and SenseVantage, provide biometric verification, face recognition, and AI video analysis. With a mission to make self-sovereign identity accessible to all, Seventh Sense combines AI algorithms with cryptographic techniques to support privacy, security, and compliance.

Plus

Plus is an AI-based autonomous driving software company that develops driver-assist and autonomous driving technologies. Its suite of solutions, ranging from perception software to highly automated driving systems, is designed to integrate with various hardware platforms and vehicle types. Plus aims to transform the transportation industry by delivering high-performance, safe, and affordable autonomous driving at scale.

blog.biocomm.ai

blog.biocomm.ai is an AI safety blog that focuses on the existential threat posed by uncontrolled and uncontained AI technology. It curates and organizes information related to AI safety, including the risks and challenges associated with the proliferation of AI. The blog aims to educate and raise awareness about the importance of developing safe and regulated AI systems to ensure the survival of humanity.

Unitary Virtual Agents

Unitary Virtual Agents is an AI business process outsourcing (BPO) application that provides virtual agents for customer, marketplace, and safety operations. It replaces manual effort with a blend of AI agents and expert humans, aiming to deliver fast responses, lower costs, task automation, and human-level accuracy without requiring engineering integration, for businesses across a range of industries.

Creator Tools

Creator Tools is a website that offers tools to make life easier for YouTube creators and help them earn more money. Its tools include a video translator that can translate video data into 140 languages in two clicks and 15 seconds, and a voiceover tool that produces high-quality voiceovers in the most popular languages. Creator Tools says it has kept its prices unchanged for two years, offers growth and promotion tips, and guarantees the safety of users' channels.

AI Bot Eye

AI Bot Eye is an AI-based security system that integrates with existing CCTV systems to deliver intelligent insights. Its features include intrusion detection with real-time alerts, face recognition, fire and smoke detection, Speed Cam Mode, safety kit detection, heat map insights, foot traffic analysis, and number plate recognition. Advantages include real-time alerts, enhanced security, efficient traffic monitoring, worker compliance monitoring, and improved operational efficiency. Disadvantages include potential false alarms, initial setup complexity, and dependence on existing CCTV infrastructure. AI Bot Eye suits roles such as security guard, surveillance analyst, system integrator, security consultant, and safety officer, and can be used to monitor intrusions, analyze foot traffic, detect fires, recognize faces, and manage vehicle entry.

AI Alliance

The AI Alliance is a community dedicated to building and advancing open-source AI agents, data, models, evaluation, safety, applications, and advocacy so that everyone can benefit. Its focus areas are skills and education, trust and safety, applications and tools, hardware enablement, foundation models, and advocacy. The organization supports global AI skill-building, education, and exploratory research; creates benchmarks and tools for safe generative AI; builds tools for AI model builders and developers; fosters an AI hardware accelerator ecosystem; enables open foundation models and datasets; and advocates for regulatory policies that support a healthy AI ecosystem.

Loudfame

At the time of listing, Loudfame.com displayed a browser privacy error caused by an expired security certificate. The warning states that attackers may be trying to steal sensitive information such as passwords, messages, or credit card details, so users are advised to proceed with caution.

Lovi

Lovi is an AI-driven skincare application that offers science-backed cosmetology services. It provides personalized skin health analysis, helps users set skincare goals, tracks changes through face scanning, and helps ensure cosmetic safety. Lovi also offers expert skincare guidance, product recommendations, and answers to skincare questions, tailored to each user's skin needs as part of a self-care approach to a healthy lifestyle.

DeepTeam

DeepTeam by Confident AI is an AI-powered red-teaming framework that automatically detects over 40 LLM vulnerabilities. It implements state-of-the-art adversarial attacks, such as prompt injection and gray-box techniques, for jailbreaking LLMs. The framework covers the OWASP Top 10 for LLMs and NIST AI guidance, and its documentation guides users in evaluating and improving the safety of their models. DeepTeam supports an active red-teaming community through GitHub, Discord, and newsletters that keep users up to date on advances in AI security.

icetana AI

icetana AI is an AI video analytics software suite for security that offers real-time surveillance and turns data into actionable insights. It includes license plate recognition, facial recognition, and automated security workflows, detects unusual events such as loitering, fire, and smoke, and serves use cases such as safe cities, mall management, and retail security. icetana AI is known for its self-learning AI, real-time event detection, reduced false alarms, easy configuration, and scalability.

Metasoma Web Security

At the time of listing, www.metasoma.ai displayed a browser privacy error caused by an expired security certificate, warning users that their information could be at risk of theft. The site should strengthen its security measures to protect user data and prevent potential attacks.


20 OpenAI GPTs

Canadian Film Industry Safety Expert

Film studio safety expert offering guidance on regulations and practices.

oceansense

Expert on freediving techniques, safety, and OceanSense services. An online course is available.

Ready Advisor

Personal emergency preparedness advisor offering tailored advice and resources.

Travel Safety Advisor

Up-to-date travel safety advisor that uses web data and avoids subjective advice.

Product Recalls

Provides information about product recalls across various industries, with a focus on consumer safety.

DateMate

Your friendly AI assistant for voice-based dating, offering personalized tips, safety advice, and fun interactions.

Detective

Dedicated investigator resolving diverse crimes, ensuring justice and community safety.

Buildwell AI - UK Construction Regs Assistant

Provides construction support relating to planning permission, Building Regulations, the Party Wall Act, and fire safety in the UK. Get instant guidance for your construction project.