OverseasAI.list


Stars: 52

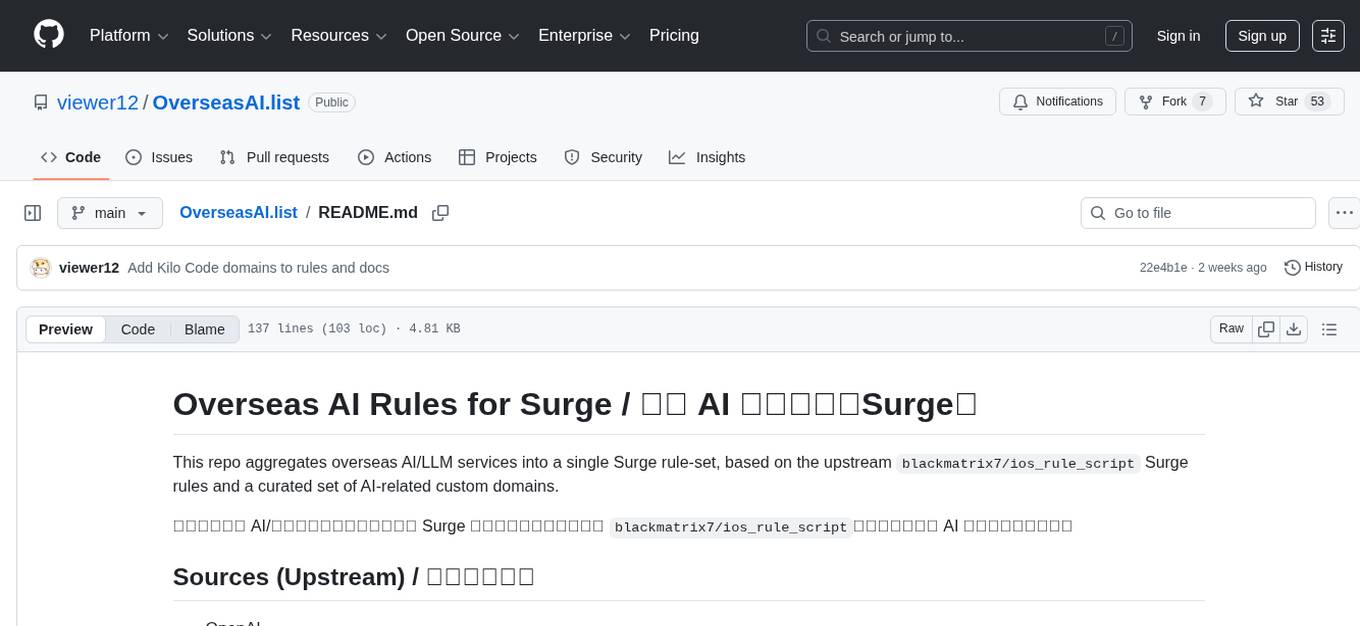

Overseas AI Rules for Surge aggregates overseas AI/LLM services into a single Surge rule-set. It is built from the upstream `blackmatrix7/ios_rule_script` rules plus a curated set of AI-related custom domains. It covers AI model vendors, platforms, apps, media tools, IDEs and coding tools, verification services, and payments. Custom domains are merged into the main list, and the rules are published in formats for several proxy clients. In Surge, you add a single RULE-SET mapped to a proxy policy. Automation handles daily upstream syncs, client-format rebuilds, and domain checks. The license derives from the upstream project (GPL-2.0).

README:

This repo aggregates overseas AI/LLM services into a single Surge rule-set, based on the upstream blackmatrix7/ios_rule_script Surge rules and a curated set of AI-related custom domains.


Upstream rule sets included:

- OpenAI
- Claude
- Anthropic
- Gemini
- BardAI
- Copilot
- Civitai
- Stripe
- PayPal

Model vendors, AI platforms, and apps are grouped below. The exact domains are in OverseasAI.list, and custom-only entries are in OverseasAI_Custom.list.


- Model vendors: OpenAI, Anthropic, Google Gemini, xAI, Meta, Cohere, Mistral, Groq, Cerebras, AI21, Inflection (Pi), Reka, NVIDIA (NIM/API), and more.
- AI platforms and developer infrastructure: OpenRouter, Hugging Face, Together, Fireworks, Replicate, Fal, LangChain, LlamaIndex, Pinecone, Weaviate, Qdrant, Milvus, Chroma, OpenCode (opencode.ai / opncd.ai / models.dev), and more.
- AI apps: Perplexity, Poe, Character.AI, You.com, Phind, Exa, Jasper, Copy.ai, Manus, Writesonic, Rytr, Sudowrite, Wordtune, Grammarly, QuillBot, Diabrowser, and more.
- Media (image, video, audio): Midjourney, Runway, Leonardo, Ideogram, Krea, Luma, Pika, Stability, DreamStudio, PlaygroundAI, Kaiber, Lovart, ElevenLabs, Suno, Udio, and more.
- IDEs and coding tools: GitHub Copilot, Cursor, Kilo Code, Windsurf, Codeium, Augment, Tabnine, Supermaven, Continue, AmpCode, Sourcegraph/Cody, Replit, Context7, Grep.app (MCP endpoints), and more.
- Verification and payments: SheerID, Stripe, PayPal, ID.me, Paddle, LemonSqueezy, Chargebee, FastSpring, Checkout.com, and more.

Custom domains are merged into the main list and recorded in `rule/Surge/OverseasAI/OverseasAI_Custom.list`.


If you want more custom domains added, send the list and I will extend OverseasAI_Custom.list and re-merge.

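The merge step can be pictured with a small Python sketch. It is not the repo's `scripts/sync_rules.py`. The function names `normalize` and `merge_rules`, the case-insensitive de-duplication, and the `#` comment convention are assumptions made for illustration. The sketch appends custom rules to the main list and drops any entry that is already present.

```python
from __future__ import annotations


def normalize(rule: str) -> str:
    """Hypothetical de-dup key: upper-case rule type, lower-case value."""
    parts = [p.strip() for p in rule.split(",")]
    parts[0] = parts[0].upper()
    if len(parts) > 1:
        parts[1] = parts[1].lower()
    return ",".join(parts)


def merge_rules(main: list[str], custom: list[str]) -> list[str]:
    """Append custom rules that are not already in main, keeping first-seen order."""
    seen: set[str] = set()
    merged: list[str] = []
    for line in main + custom:
        rule = line.strip()
        if not rule or rule.startswith("#"):
            continue  # skip blank lines and comments
        key = normalize(rule)
        if key not in seen:
            seen.add(key)
            merged.append(rule)
    return merged


if __name__ == "__main__":
    main_list = ["DOMAIN-SUFFIX,openai.com", "DOMAIN-SUFFIX,anthropic.com"]
    # Illustrative custom entries; example-ai.dev is a made-up domain.
    custom_list = ["# custom", "DOMAIN-SUFFIX,OpenAI.com", "DOMAIN-SUFFIX,example-ai.dev"]
    print("\n".join(merge_rules(main_list, custom_list)))
```

Keying on a normalized form means a custom entry that differs from a main-list entry only in letter case is treated as a duplicate.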

Files:

- `rule/Surge/OverseasAI/OverseasAI.list`
- `rule/Surge/OverseasAI/OverseasAI_Resolve.list` (same rules, but IP rules without `no-resolve`)
- `rule/Surge/OverseasAI/OverseasAI_Custom.list` (custom-only entries, already merged into the main list)
- `rule/Clash/OverseasAI/OverseasAI.list`
- `rule/Loon/OverseasAI/OverseasAI.list`
- `rule/Shadowrocket/OverseasAI/OverseasAI.list`
- `rule/QuantumultX/OverseasAI/OverseasAI.list`
- `rule/Quantumult/OverseasAI/OverseasAI.list` (alias of the QuantumultX format)
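One plausible way to derive the `_Resolve` variant is to strip the `no-resolve` option from IP rules. Without that option, Surge may resolve a hostname in order to test it against those rules. The sketch below assumes that only `IP-CIDR` and `IP-CIDR6` lines carry the option. The helper names are hypothetical, and this is not the repo's build script.

```python
from __future__ import annotations

# Assumed set of rule types that carry the no-resolve option in this sketch.
IP_RULE_TYPES = {"IP-CIDR", "IP-CIDR6"}


def strip_no_resolve(line: str) -> str:
    """Drop the no-resolve option from an IP rule; return other lines unchanged."""
    parts = [p.strip() for p in line.split(",")]
    if parts[0].upper() not in IP_RULE_TYPES:
        return line  # domain rules, comments and blank lines pass through as-is
    return ",".join(p for p in parts if p.lower() != "no-resolve")


def to_resolve_variant(lines: list[str]) -> list[str]:
    """Build hypothetical *_Resolve.list content from the main list's lines."""
    return [strip_no_resolve(line) for line in lines]


if __name__ == "__main__":
    main_list = [
        "DOMAIN-SUFFIX,openai.com",
        "IP-CIDR,192.0.2.0/24,no-resolve",     # documentation address range
        "IP-CIDR6,2001:db8::/32,no-resolve",   # documentation address range
    ]
    print("\n".join(to_resolve_variant(main_list)))
```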

Surge: add one RULE-SET pointing at the list you prefer and map it to a single proxy policy.

Example:

```ini
[Rule]
RULE-SET,OverseasAI,PROXY

[Rule Set]
OverseasAI = https://raw.githubusercontent.com/viewer12/OverseasAI.list/main/rule/Surge/OverseasAI/OverseasAI.list
```
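Once the RULE-SET is loaded, a request goes to PROXY if any rule in the set matches its hostname. The toy matcher below illustrates the usual meaning of the three domain rule types:

- `DOMAIN` is an exact match.
- `DOMAIN-SUFFIX` matches the domain itself or any subdomain.
- `DOMAIN-KEYWORD` is a substring match.

This is a simplified teaching sketch, not Surge's matching engine. It ignores IP rules and rule options, and the function names are hypothetical.

```python
from __future__ import annotations


def rule_matches(rule: str, host: str) -> bool:
    """Check one domain rule (e.g. "DOMAIN-SUFFIX,openai.com") against a hostname."""
    parts = [p.strip() for p in rule.split(",")]
    if len(parts) < 2:
        return False
    kind, value = parts[0].upper(), parts[1].lower()
    host = host.lower().rstrip(".")  # compare case-insensitively, ignore a trailing dot
    if kind == "DOMAIN":
        return host == value
    if kind == "DOMAIN-SUFFIX":
        # The domain itself or any subdomain, split on a label boundary.
        return host == value or host.endswith("." + value)
    if kind == "DOMAIN-KEYWORD":
        return value in host
    return False  # IP and other rule types are out of scope for this sketch


def ruleset_matches(rules: list[str], host: str) -> bool:
    """The RULE-SET fires if any non-comment rule in the set matches."""
    return any(
        rule_matches(r, host)
        for r in rules
        if r.strip() and not r.lstrip().startswith("#")
    )


if __name__ == "__main__":
    rules = ["DOMAIN-SUFFIX,openai.com", "DOMAIN,claude.ai"]
    for host in ["api.openai.com", "notopenai.com", "claude.ai", "www.claude.ai"]:
        policy = "PROXY" if ruleset_matches(rules, host) else "(next rule)"
        print(host, "->", policy)
```

The label-boundary check is why `DOMAIN-SUFFIX,openai.com` catches `api.openai.com` but not `notopenai.com`.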

Clash rule provider URL:

https://raw.githubusercontent.com/viewer12/OverseasAI.list/main/rule/Clash/OverseasAI/OverseasAI.list

Loon RULE-SET URL:

https://raw.githubusercontent.com/viewer12/OverseasAI.list/main/rule/Loon/OverseasAI/OverseasAI.list

Shadowrocket RULE-SET URL:

https://raw.githubusercontent.com/viewer12/OverseasAI.list/main/rule/Shadowrocket/OverseasAI/OverseasAI.list

Quantumult X filter URL (the policy name is embedded as OverseasAI):

https://raw.githubusercontent.com/viewer12/OverseasAI.list/main/rule/QuantumultX/OverseasAI/OverseasAI.list

Quantumult alias (same QuantumultX format):

https://raw.githubusercontent.com/viewer12/OverseasAI.list/main/rule/Quantumult/OverseasAI/OverseasAI.list
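The Quantumult X list embeds its policy name, which means each filter line carries its own policy field. Below is a hedged sketch of such a conversion from Surge syntax. The type mapping (`DOMAIN-SUFFIX` to `HOST-SUFFIX`, `IP-CIDR6` to `IP6-CIDR`, and so on) reflects common Quantumult X filter syntax but should be checked against its documentation. Dropping `no-resolve` and skipping unmapped rule types are assumptions about what the repo's `build_clients.py` does, not facts from it.

```python
from __future__ import annotations

# Assumed Surge -> Quantumult X rule-type mapping (verify against QX docs).
SURGE_TO_QX = {
    "DOMAIN": "HOST",
    "DOMAIN-SUFFIX": "HOST-SUFFIX",
    "DOMAIN-KEYWORD": "HOST-KEYWORD",
    "IP-CIDR": "IP-CIDR",
    "IP-CIDR6": "IP6-CIDR",
}


def to_quantumultx(rule: str, policy: str = "OverseasAI") -> str | None:
    """Convert one Surge rule to a QX filter line with an embedded policy."""
    parts = [p.strip() for p in rule.split(",")]
    qx_type = SURGE_TO_QX.get(parts[0].upper())
    if qx_type is None or len(parts) < 2:
        return None  # comments, blank lines and unmapped types are skipped
    # Only type and value are kept; options such as no-resolve are dropped here.
    return f"{qx_type},{parts[1]},{policy}"


def convert_list(rules: list[str], policy: str = "OverseasAI") -> list[str]:
    """Convert a whole Surge list, discarding lines that have no QX equivalent."""
    converted = (to_quantumultx(r, policy) for r in rules)
    return [c for c in converted if c is not None]


if __name__ == "__main__":
    surge_rules = [
        "DOMAIN-SUFFIX,openai.com",
        "DOMAIN,claude.ai",
        "IP-CIDR,192.0.2.0/24,no-resolve",
    ]
    print("\n".join(convert_list(surge_rules)))
```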

A daily GitHub Actions workflow syncs from upstream, rebuilds all client formats, and checks the domains for NXDOMAIN. Nothing is deleted automatically; the report is only for manual confirmation.

Artifacts and state:

- `reports/nxdomain_report.md`
- `reports/nxdomain_candidates.txt`
- `data/nxdomain_state.json`
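The separate state file suggests that NXDOMAIN results are tracked across runs before a domain is listed as a candidate. The sketch below models that idea with a per-domain counter of consecutive NXDOMAIN runs and a hypothetical threshold of 3. The state layout, the threshold, and the injected `is_nxdomain` callback are all assumptions, not the repo's format. In practice the callback could wrap dnspython's `dns.resolver.resolve`, which raises `dns.resolver.NXDOMAIN` for a nonexistent name. As in the repo, nothing is deleted; the output is only a candidate list for manual review.

```python
from __future__ import annotations

import json
from typing import Callable

# Hypothetical: flag a domain after this many consecutive NXDOMAIN runs.
CANDIDATE_THRESHOLD = 3


def update_state(
    state: dict[str, int],
    domains: list[str],
    is_nxdomain: Callable[[str], bool],
) -> dict[str, int]:
    """Return new per-domain counts of consecutive NXDOMAIN results."""
    new_state: dict[str, int] = {}
    for domain in domains:
        if is_nxdomain(domain):
            new_state[domain] = state.get(domain, 0) + 1
        # Domains that resolve again are left out, which resets their streak.
    return new_state


def find_candidates(state: dict[str, int], threshold: int = CANDIDATE_THRESHOLD) -> list[str]:
    """Domains whose streak reached the threshold: report only, never delete."""
    return sorted(d for d, count in state.items() if count >= threshold)


def fake_lookup(domain: str) -> bool:
    """Stand-in for a real DNS query: pretend every *.example name is gone."""
    return domain.endswith(".example")


if __name__ == "__main__":
    domains = ["api.openai.com", "retired-ai.example"]
    state: dict[str, int] = {}
    for _ in range(3):  # simulate three daily runs
        state = update_state(state, domains, fake_lookup)
    print(json.dumps(state, sort_keys=True))  # what a state file might hold
    print(find_candidates(state))
```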

Scripts (local usage):

```shell
python scripts/sync_rules.py --upstream /path/to/ios_rule_script
python scripts/build_clients.py
python scripts/check_domains.py
```

Derived from blackmatrix7/ios_rule_script (GPL-2.0). See LICENSE.


Alternative AI tools for OverseasAI.list

Similar Open Source Tools


ruby_llm

RubyLLM is a delightful Ruby tool for working with AI, providing a beautiful API for various AI providers like OpenAI, Anthropic, Gemini, and DeepSeek. It simplifies AI usage by offering a consistent format, minimal dependencies, and a joyful coding experience. Users can chat, analyze images, audio, and documents, generate images, create vector embeddings, and integrate AI with Ruby code effortlessly. The tool also supports Rails integration, streaming responses, and tool creation, making AI tasks seamless and enjoyable.

pandas-ai

PandaAI is a Python platform that enables users to interact with their data in natural language, catering to both non-technical and technical users. It simplifies data querying and analysis, offering conversational data analytics capabilities with minimal code. Users can ask questions, visualize charts, and compare dataframes effortlessly. The tool aims to streamline data exploration and decision-making processes by providing a user-friendly interface for data manipulation and analysis.

lionagi

LionAGI is a powerful intelligent workflow automation framework that introduces advanced ML models into existing workflows and data infrastructure. It can interact with almost any model, run interactions in parallel for most models, produce structured pydantic outputs with flexible usage, automate workflows via graph-based agents, use advanced prompting techniques, and more. LionAGI aims to provide a centralized, agent-managed framework for "ML-powered tools coordination" and to dramatically lower the barrier to entry for creating use-case- or domain-specific tools. It is designed to be asynchronous only and requires Python 3.10 or higher.

obsei

Obsei is an open-source, low-code, AI-powered automation tool. It consists of three parts:

- an Observer, which collects unstructured data from various sources;
- an Analyzer, which analyzes the collected data with various AI tasks;
- an Informer, which sends the analyzed data to various destinations.

The tool suits scheduled jobs or serverless applications, because all Observers can store their state in databases. Obsei is still in the alpha stage, so caution is advised when using it in production. Possible uses include social listening, alerting and notification, automatic customer issue creation, extracting deeper insights from feedback, market research, and dataset creation for various AI tasks, among others.

goai

Go AI is a golang API library for AI Engineering, providing high level features like Chat Completion and Embedding. It allows users to interact with AI models for various tasks such as text generation and analysis. The library simplifies the process of integrating AI capabilities into Go applications, making it easier for developers to leverage AI technologies in their projects.

fastserve-ai

FastServe-AI is a machine learning serving tool focused on GenAI & LLMs with simplicity as the top priority. It allows users to easily serve custom models by implementing the 'handle' method for 'FastServe'. The tool provides a FastAPI server for custom models and can be deployed using Lightning AI Studio. Users can install FastServe-AI via pip and run it to serve their own GPT-like LLM models in minutes.

Acontext

Acontext is a context data platform designed for production AI agents, offering unified storage, built-in context management, and observability features. It helps agents scale from local demos to production without the need to rebuild context infrastructure. The platform provides solutions for challenges like scattered context data, long-running agents requiring context management, and tracking states from multi-modal agents. Acontext offers core features such as context storage, session management, disk storage, agent skills management, and sandbox for code execution and analysis. Users can connect to Acontext, install SDKs, initialize clients, store and retrieve messages, perform context engineering, and utilize agent storage tools. The platform also supports building agents using end-to-end scripts in Python and Typescript, with various templates available. Acontext's architecture includes client layer, backend with API and core components, infrastructure with PostgreSQL, S3, Redis, and RabbitMQ, and a web dashboard. Join the Acontext community on Discord and follow updates on GitHub.

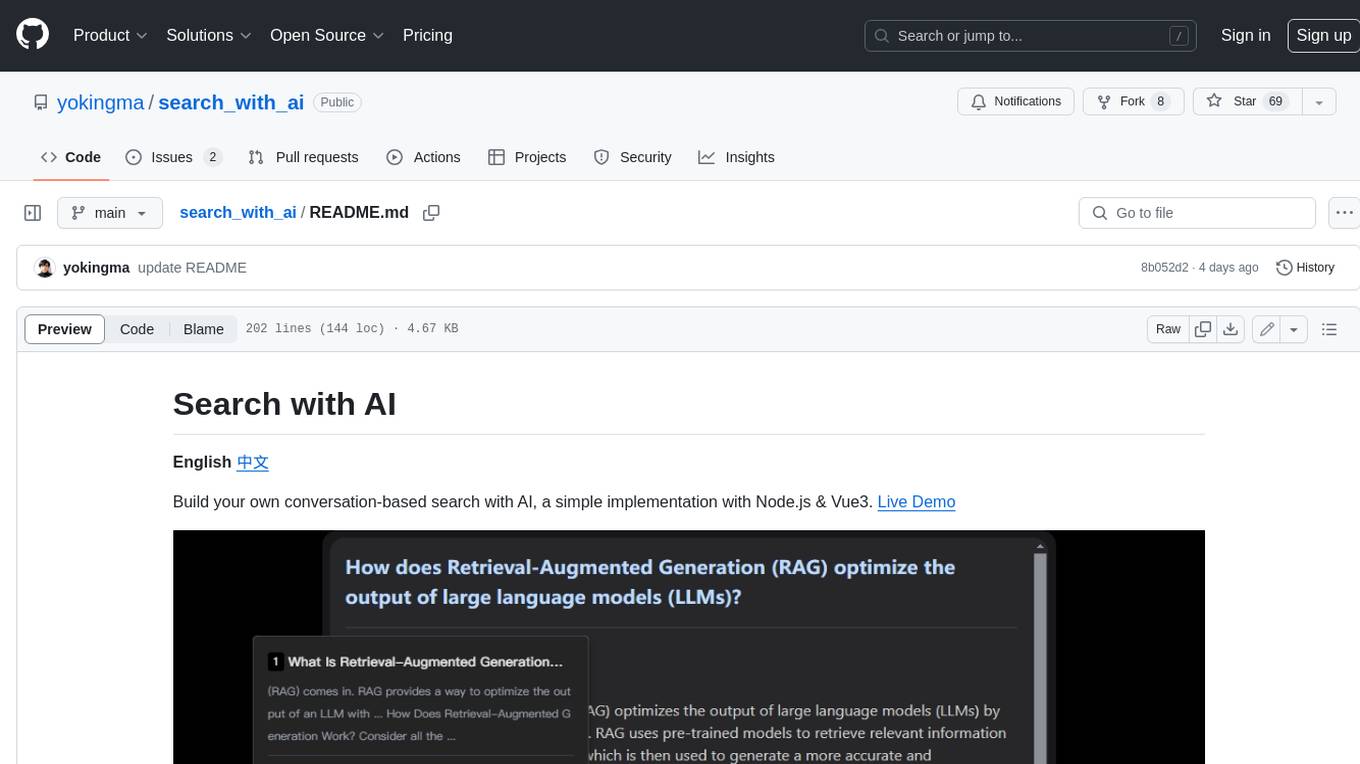

search_with_ai

Build your own conversation-based search with AI: a simple implementation using Node.js and Vue3. Features:

- Built-in LLM support: OpenAI, Google, Lepton, Ollama (free)
- Built-in search engine support: Bing, Sogou, Google, SearXNG (free)
- Customizable, polished UI
- Dark mode
- Mobile display
- Local LLMs via Ollama
- i18n
- Follow-up Q&A with conversation context

unitxt

Unitxt is a customizable library for textual data preparation and evaluation tailored to generative language models. It natively integrates with common libraries like HuggingFace and LM-eval-harness and deconstructs processing flows into modular components, enabling easy customization and sharing between practitioners. These components encompass model-specific formats, task prompts, and many other comprehensive dataset processing definitions. The Unitxt-Catalog centralizes these components, fostering collaboration and exploration in modern textual data workflows. Beyond being a tool, Unitxt is a community-driven platform, empowering users to build, share, and advance their pipelines collaboratively.

mirascope

Mirascope is an LLM toolkit for lightning-fast, high-quality development. Building with Mirascope feels like writing the Python code you’re already used to writing.

plexe

Plexe is a tool that allows users to create machine learning models by describing them in plain language. Users can explain their requirements, provide a dataset, and the AI-powered system will build a fully functional model through an automated agentic approach. It supports multiple AI agents and model building frameworks like XGBoost, CatBoost, and Keras. Plexe also provides Docker images with pre-configured environments, YAML configuration for customization, and support for multiple LiteLLM providers. Users can visualize experiment results using the built-in Streamlit dashboard and extend Plexe's functionality through custom integrations.

npcpy

npcpy is a core library of the NPC Toolkit that enhances natural language processing pipelines and agent tooling. It provides a flexible framework for building applications and conducting research with LLMs. The tool supports various functionalities such as getting responses for agents, setting up agent teams, orchestrating jinx workflows, obtaining LLM responses, generating images, videos, audio, and more. It also includes a Flask server for deploying NPC teams, supports LiteLLM integration, and simplifies the development of NLP-based applications. The tool is versatile, supporting multiple models and providers, and offers a graphical user interface through NPC Studio and a command-line interface via NPC Shell.

lionagi

LionAGI is a robust framework for orchestrating multi-step AI operations with precise control. It allows users to bring together multiple models, advanced reasoning, tool integrations, and custom validations in a single coherent pipeline. The framework is structured, expandable, controlled, and transparent, offering features like real-time logging, message introspection, and tool usage tracking. LionAGI supports advanced multi-step reasoning with ReAct, integrates with Anthropic's Model Context Protocol, and provides observability and debugging tools. Users can seamlessly orchestrate multiple models, integrate with Claude Code CLI SDK, and leverage a fan-out fan-in pattern for orchestration. The framework also offers optional dependencies for additional functionalities like reader tools, local inference support, rich output formatting, database support, and graph visualization.

chat

deco.chat is an open-source foundation for building AI-native software, providing developers, engineers, and AI enthusiasts with robust tools to rapidly prototype, develop, and deploy AI-powered applications. It empowers Vibecoders to prototype ideas and Agentic engineers to deploy scalable, secure, and sustainable production systems. The core capabilities include an open-source runtime for composing tools and workflows, MCP Mesh for secure integration of models and APIs, a unified TypeScript stack for backend logic and custom frontends, global modular infrastructure built on Cloudflare, and a visual workspace for building agents and orchestrating everything in code.

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage per user and per organization
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.