vast-python

Vast.ai python and cli api client

Stars: 106

This repository contains the open source python command line interface for vast.ai. The CLI has all the main functionality of the vast.ai website GUI and uses the same underlying REST API. The main functionality is self-contained in the script file vast.py, with additional invoice generating commands in vast_pdf.py. Users can interact with the vast.ai platform through the CLI to manage instances, create templates, manage teams, and perform various cloud-related tasks.

README:

This repository contains the open source Python command line interface for vast.ai. This CLI has all of the main functionality of the vast.ai website GUI and uses the same underlying REST API. Most of the functionality is self-contained in the single script file vast.py, although the invoice generating commands require installing a second script called vast_pdf.py.

You should probably create a subdirectory in which to put this script and related files if you haven't already. You can call it whatever you like, but I'll refer to it as "vid" for "Vast Install Directory". Enter mkdir vid to create the directory, then change your working directory to it with cd vid. After that, the quickest way to get started is to download the vast.py script using the wget command.

wget https://raw.githubusercontent.com/vast-ai/vast-python/master/vast.py; chmod +x vast.py;

You can verify that the script is working by running ./vast.py --help. You should see a list of the available commands. To proceed further you will need to log in to the vast.ai website and get your api-key. Go to https://vast.ai/console/cli/. Copy the command under the heading "Login / Set API Key" and run it. The command will be something like

./vast.py set api-key xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

where the xxxx... is your api-key (a long hexadecimal number). Note that if the script is named "vast" in the command on the website and your installed script is named "vast.py", you will need to change the name of the script in the command you run. The set api-key command saves your api-key in a hidden file in your home directory. Do not share your api-key with anyone, as it authenticates your other vast commands to your account.
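As a rough illustration of how a script of your own might pick up the saved key, the sketch below reads it from ~/.vast_api_key, the default location named in the --api-key help text later in this README. The helper name read_api_key is hypothetical and not part of vast.py, and the file location is an assumption that may differ between versions of the CLI.

```python
from pathlib import Path
from typing import Optional

# Default location taken from the --api-key help text; treat it as an
# assumption, since other CLI versions may store the key elsewhere.
DEFAULT_KEY_FILE = Path.home() / ".vast_api_key"


def read_api_key(path: Path = DEFAULT_KEY_FILE) -> Optional[str]:
    """Return the saved api-key without surrounding whitespace.

    Returns None if the file is missing or contains only whitespace.
    """
    try:
        key = path.read_text(encoding="utf-8").strip()
    except FileNotFoundError:
        return None
    return key or None


if __name__ == "__main__":
    key = read_api_key()
    # Never print the key itself; only report whether one was found.
    print("api-key found" if key else "no api-key saved yet")
```

The script deliberately reports only whether a key exists, in keeping with the advice above not to share it.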

To see how the API works, you can use it to find machines for rent with ./vast.py search offers. In this form the command shows all available offers. To get more specific results, try narrowing the search. There is a large help page on how to do this; bring it up with ./vast.py search offers --help. There are many parameters that can be used to filter the results. The search command supports all of the filters and sort options that the website GUI uses. To find instances whose GPUs have a compute capability above 7.0 (for example Turing, which is 7.5):

./vast.py search offers 'compute_cap > 700 '

To find instances with a reliability score above 0.99 and at least 4 GPUs, ordered by number of GPUs descending:

./vast.py search offers 'reliability > 0.99 num_gpus>=4' -o 'num_gpus-'
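The same kind of query can be assembled from a script. The following is a minimal sketch, not part of vast.py: build_search_argv and its parameters are hypothetical names, and it relies only on the query syntax and the -o option shown in the two examples above.

```python
import shlex
from typing import Iterable, List, Optional, Tuple

# (field, operator, value) triples, e.g. ("num_gpus", ">=", 4)
Filter = Tuple[str, str, object]


def build_search_argv(
    filters: Iterable[Filter],
    order: Optional[str] = None,
    script: str = "./vast.py",
) -> List[str]:
    """Build the argument list for a `search offers` call."""
    # Filters are joined with spaces into one query string, as in the examples.
    query = " ".join(f"{field} {op} {value}" for field, op, value in filters)
    argv = [script, "search", "offers"]
    if query:
        argv.append(query)
    if order:
        # A trailing "-" on the field name requests descending order.
        argv += ["-o", order]
    return argv


if __name__ == "__main__":
    argv = build_search_argv(
        [("reliability", ">", 0.99), ("num_gpus", ">=", 4)],
        order="num_gpus-",
    )
    # shlex.join quotes the query so the line can be pasted into a shell.
    print(shlex.join(argv))
```

Keeping the query as a single list element means it reaches the script as one argument, which is what the shell quotes in the examples achieve.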

The output of this command at the time of this writing is

ID CUDA Num Model PCIE_BW vCPUs RAM Storage $/hr DLPerf DLP/$ Nvidia Driver Version Net_up Net_down R Max_Days machine_id

1596177 11.4 10x GTX_1080 5.5 48.0 257.9 4628 2.0000 73.0 36.5 470.63.01 653.3 854.5 99.5 - 638

2459430 11.5 8x RTX_A5000 9.1 128.0 515.8 3094 4.0000 209.4 52.3 495.46 1844.2 2669.6 99.7 12.0 4384

2459380 11.4 8x RTX_3070 6.3 12.0 64.0 710 1.4200 67.2 47.3 470.86 0.0 0.0 99.8 - 4102

2456624 11.4 8x RTX_2080_Ti 10.7 32.0 257.9 1653 2.8000 126.4 45.2 470.82.00 14.6 214.2 99.8 28.7 3047

2456622 11.4 8x RTX_2080_Ti 10.8 32.0 128.9 1651 2.8000 127.1 45.4 470.82.00 14.9 214.7 99.1 28.7 1569

2456600 11.5 8x RTX_2080_Ti 10.9 48.0 256.6 1704 2.4000 125.5 52.3 495.29.05 169.0 169.8 99.7 25.7 4058

2455617 11.2 8x RTX_3090 21.7 64.0 515.8 6165 6.4000 261.1 40.8 460.67 477.6 707.2 99.8 28.7 2980

2454397 11.2 8x A100_SXM4 22.4 128.0 2064.1 21568 13.2000 300.1 22.7 460.106.00 708.7 1119.8 99.2 - 4762

2405590 11.4 8x RTX_2080_Ti 11.2 48.0 257.9 1629 3.8000 125.5 33.0 470.82.00 389.4 608.8 100.0 1.8 2776

2364579 11.4 8x A100_PCIE 18.5 128.0 515.8 4813 14.8000 278.8 18.8 470.74 472.4 699.0 99.9 28.7 3459

2281839 11.2 8x Tesla_V100 11.8 72.0 483.1 1171 5.6000 193.6 34.6 460.67 493.0 697.8 100.0 28.7 2744

2281832 11.2 8x A100_PCIE 17.7 64.0 515.9 5821 14.8000 276.7 18.7 460.91.03 478.2 655.5 99.9 28.7 2901

2452630 11.4 7x RTX_3090 6.3 28.0 64.0 61 3.5000 165.5 47.3 470.86 84.6 84.4 99.3 3.8 4420

2342561 11.4 7x RTX_3090 6.1 96.0 257.6 1664 4.5500 149.2 32.8 470.82.00 476.9 671.7 99.4 1.7 4202

2237983 11.4 7x RTX_3090 12.5 32.0 257.6 3228 3.1500 204.5 64.9 470.86 194.4 183.8 99.1 - 4207

2459511 11.4 6x RTX_3090 6.2 - 128.8 812 2.8200 150.2 53.2 470.94 374.4 271.4 99.0 6.7 3129

2448342 11.5 6x RTX_A6000 12.4 64.0 515.7 6695 3.6000 169.8 47.2 495.29.05 668.6 1082.6 99.6 - 3624

2437565 11.4 6x RTX_3090 23.0 16.0 128.8 1676 5.4000 196.8 36.5 470.94 34.1 131.5 99.4 - 4238

2332973 11.2 6x RTX_3090 11.9 48.0 193.3 1671 3.3000 180.3 54.6 460.84 582.1 737.6 99.9 25.6 3552

2459459 11.5 4x RTX_3090 23.1 32.0 257.8 1363 2.0000 131.2 65.6 495.46 1954.7 2725.8 99.6 12.0 3059

2459428 11.5 4x RTX_A5000 24.6 64.0 515.8 1547 2.0000 104.9 52.4 495.46 1844.2 2669.6 99.7 12.0 4384

2459368 11.4 4x RTX_3090 25.3 48.0 64.2 133 1.3967 130.5 93.4 470.86 0.0 0.0 99.4 - 4637

2458968 11.6 4x RTX_3090 11.7 16.0 128.5 752 1.4000 79.8 57.0 510.39.01 797.8 842.7 99.9 4.0 2555

2458878 11.6 4x RTX_3090 11.6 36.0 128.5 1531 1.4000 81.9 58.5 510.39.01 757.1 807.6 99.9 4.0 3646

2458845 11.6 4x RTX_3090 3.1 12.0 128.5 369 1.4000 92.4 66.0 510.39.01 725.7 852.2 99.8 4.0 700

2458838 11.6 4x RTX_3090 5.7 48.0 128.9 624 1.4000 85.3 60.9 510.39.01 574.9 731.7 99.8 4.0 2217

2454395 11.2 4x A100_SXM4 22.9 64.0 2064.1 10784 6.6000 150.0 22.7 460.106.00 708.7 1119.8 99.2 - 4762

2452632 11.4 4x RTX_3090 6.3 16.0 64.0 35 2.0000 123.5 61.8 470.86 84.6 84.4 99.3 3.8 4420

2450275 11.4 4x RTX_3080_Ti 12.5 32.0 128.7 817 1.8000 128.8 71.6 470.82.00 278.3 350.4 99.7 - 4260

2449210 11.5 4x RTX_3090 11.2 48.0 128.9 324 2.0000 89.7 44.9 495.29.05 688.3 775.4 99.8 - 2764

2445175 11.4 4x RTX_3090 11.9 32.0 257.6 1530 2.0000 135.4 67.7 470.86 868.6 887.1 99.7 25.9 3055

2444916 11.4 4x RTX_3090 11.9 16.0 128.7 1576 1.4000 131.8 94.2 470.82.00 39.4 402.3 99.9 - 3759

2437188 11.4 4x Tesla_P100 11.7 24.0 95.2 2945 0.7200 44.8 62.2 470.82.00 10.9 76.2 99.5 0.1 3969

2437179 11.4 4x Tesla_P100 11.7 32.0 192.1 3070 0.7200 44.8 62.3 470.82.00 11.1 66.0 99.2 0.0 4159

2431606 11.4 4x RTX_3090 17.9 32.0 110.7 330 1.8400 134.3 73.0 470.82.01 584.6 813.4 99.7 4.4 4079

2419191 11.4 4x RTX_2080_Ti 6.3 32.0 64.4 837 2.0000 64.7 32.4 470.63.01 40.5 205.9 99.7 - 162

2405589 11.4 4x RTX_2080_Ti 10.8 24.0 257.9 815 1.9000 62.8 33.0 470.82.00 389.4 608.8 100.0 1.8 2776

2392087 11.4 4x RTX_A6000 10.8 32.0 515.9 1247 1.8000 64.5 35.8 470.94 669.9 705.4 99.1 10.9 4782

2377227 11.2 4x RTX_3090 6.3 24.0 64.3 1638 2.0000 128.3 64.1 460.32.03 37.8 145.0 99.7 3.0 2672

2349173 11.4 4x RTX_3090 23.2 48.0 128.7 1475 2.0000 107.4 53.7 470.86 33.2 84.2 99.8 47.3 3949

2338635 11.4 4x RTX_3090 23.0 32.0 128.5 3151 1.6000 108.8 68.0 470.86 33.8 86.4 99.6 47.4 3948

2303959 11.2 4x RTX_3090 11.7 28.0 128.8 791 2.1200 131.3 61.9 460.32.03 519.7 570.7 99.5 - 3042

2281830 11.2 4x A100_PCIE 18.1 32.0 515.9 2910 7.4000 143.6 19.4 460.91.03 478.2 655.5 99.9 28.7 2901

2193726 11.4 4x RTX_3090 12.4 32.0 128.8 1646 3.6000 153.9 42.8 470.82.01 33.3 137.5 99.5 - 3434

1737692 11.2 4x RTX_3070 6.3 28.0 128.5 656 2.8000 37.5 13.4 460.91.03 452.6 703.2 99.6 - 3510
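When working from this text output, a row can be split on whitespace. The sketch below is an illustration only: parse_offer_row and the dictionary keys are hypothetical names, the column order is read off the header above, and the relation DLP/$ = DLPerf / ($/hr) is inferred from the sample rows rather than documented here. For scripting, the --raw option listed in the help output further down (machine-readable json) is likely the more robust route.

```python
from typing import Dict, List

# Column names in the order of the header above. "Nvidia Driver Version"
# is a single column, so the header itself cannot be split on spaces.
COLUMNS: List[str] = [
    "id", "cuda", "num", "model", "pcie_bw", "vcpus", "ram", "storage",
    "price_per_hour", "dlperf", "dlp_per_dollar", "driver", "net_up",
    "net_down", "reliability", "max_days", "machine_id",
]


def parse_offer_row(line: str) -> Dict[str, str]:
    """Split one output row into a dict keyed by column name."""
    fields = line.split()
    if len(fields) != len(COLUMNS):
        raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
    return dict(zip(COLUMNS, fields))


if __name__ == "__main__":
    row = parse_offer_row(
        "2459368 11.4 4x RTX_3090 25.3 48.0 64.2 133 1.3967 130.5 93.4 "
        "470.86 0.0 0.0 99.4 - 4637"
    )
    # Values stay as strings because missing entries are printed as "-";
    # convert to float only for the columns you need.
    ratio = float(row["dlperf"]) / float(row["price_per_hour"])
    print(row["id"], row["model"], f"{ratio:.1f}", row["dlp_per_dollar"])
```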

To create an instance of type 2459368 (using an ID from the search) with the vastai/tensorflow image and 32 GB of disk storage:

./vast.py create instance 2459368 --image vastai/tensorflow --disk 32

If you followed the instructions in Quickstart you have already installed the script that contains most of the CLI functionality. If you wish to print PDF format invoices you will need a few other things. First, you'll need the vast_pdf.py script, which can be found in the main directory of this repository. It should be placed in the same directory as the vast.py script. It makes use of a third party library called Borb to create the PDF invoices. To install it, run the command pip3 install borb
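Before running the invoice commands from a script, it can be handy to confirm that Borb is importable. This is a small sketch using only the Python standard library; module_available is a hypothetical helper name, and the import name borb is assumed to match the pip package name given above.

```python
import importlib.util


def module_available(name: str) -> bool:
    """Return True if a top-level module can be found, without importing it."""
    return importlib.util.find_spec(name) is not None


if __name__ == "__main__":
    if module_available("borb"):
        print("borb is installed; PDF invoices should be available")
    else:
        print("borb is missing; install it with: pip3 install borb")
```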

The CLI API is all contained in a python script called vast.py. This script can be called with various commands as arguments. Commands follow a simple "verb-object" pattern. As an example, consider "show machines". To run this command we type ./vast.py show machines
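To illustrate the verb-object pattern, the sketch below assembles and optionally runs such a command from Python. It is not part of vast.py: vast_argv and run_vast are hypothetical helpers, and the script path ./vast.py assumes the layout from the install steps above.

```python
import subprocess
from typing import List


def vast_argv(verb: str, obj: str, *args: str, script: str = "./vast.py") -> List[str]:
    """Build an argument list of the form: script verb object [args...]."""
    return [script, verb, obj, *args]


def run_vast(verb: str, obj: str, *args: str, script: str = "./vast.py") -> str:
    """Run the command and return its standard output as text."""
    result = subprocess.run(
        vast_argv(verb, obj, *args, script=script),
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit status
    )
    return result.stdout


if __name__ == "__main__":
    # Only prints the command; call run_vast("show", "machines") to execute it.
    print(" ".join(vast_argv("show", "machines")))
```

A few commands in the help listing, such as logs or copy, take no object and would need a slightly different helper.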

usage: vast.py [-h] [--url URL] [--retry RETRY] [--raw] [--explain] [--api-key API_KEY] command ...

positional arguments:

command command to run. one of:

help print this help message

attach ssh Attach an ssh key to an instance. This will allow you to connect to the instance with the ssh key.

cancel copy Cancel a remote copy in progress, specified by DST id

cancel sync Cancel a remote copy in progress, specified by DST id

change bid Change the bid price for a spot/interruptible instance

copy Copy directories between instances and/or local

cloud copy Copy files/folders to and from cloud providers

create api-key Create a new api-key with restricted permissions. Can be sent to other users and teammates

create ssh-key Create a new ssh-key

create autoscaler Create a new autoscale group

create endpoint Create a new endpoint group

create instance Create a new instance

create subaccount Create a subaccount

create team Create a new team

create team-role Add a new role to your

create template Create a new template

delete api-key Remove an api-key

delete ssh-key Remove an ssh-key

delete autoscaler Delete an autoscaler group

delete endpoint Delete an endpoint group

destroy instance Destroy an instance (irreversible, deletes data)

destroy instances Destroy a list of instances (irreversible, deletes data)

destroy team Destroy your team

detach ssh Detach an ssh key from an instance

execute Execute a (constrained) remote command on a machine

invite team-member Invite a team member

label instance Assign a string label to an instance

logs Get the logs for an instance

prepay instance Deposit credits into reserved instance.

reboot instance Reboot (stop/start) an instance

recycle instance Recycle (destroy/create) an instance

remove team-member Remove a team member

remove team-role Remove a role from your team

reports Get the user reports for a given machine

reset api-key Reset your api-key (get new key from website).

start instance Start a stopped instance

start instances Start a list of instances

stop instance Stop a running instance

stop instances Stop a list of instances

search benchmarks Search for benchmark results using custom query

search invoices Search for benchmark results using custom query

search offers Search for instance types using custom query

search templates Search for template results using custom query

set api-key Set api-key (get your api-key from the console/CLI)

set user Update user data from json file

ssh-url ssh url helper

scp-url scp url helper

show api-key Show an api-key

show api-keys List your api-keys associated with your account

show ssh-keys List your ssh keys associated with your account

show autoscalers Display user's current autoscaler groups

show endpoints Display user's current endpoint groups

show connections Displays user's cloud connections

show deposit Display reserve deposit info for an instance

show earnings Get machine earning history reports

show invoices Get billing history reports

show instance Display user's current instances

show instances Display user's current instances

show ipaddrs Display user's history of ip addresses

show user Get current user data

show subaccounts Get current subaccounts

show team-members Show your team members

show team-role Show your team role

show team-roles Show roles for a team

transfer credit Transfer credits to another account

update autoscaler Update an existing autoscale group

update endpoint Update an existing endpoint group

update team-role Update an existing team role

update ssh-key Update an existing ssh key

generate pdf-invoices

cleanup machine [Host] Remove all expired storage instances from the machine, freeing up space.

list machine [Host] list a machine for rent

list machines [Host] list machines for rent

remove defjob [Host] Delete default jobs

set defjob [Host] Create default jobs for a machine

set min-bid [Host] Set the minimum bid/rental price for a machine

schedule maint [Host] Schedule upcoming maint window

cancel maint [Host] Cancel maint window

show machines [Host] Show hosted machines

unlist machine [Host] Unlist a listed machine

launch instance Launch the top instance from the search offers based on the given parameters

options:

-h, --help show this help message and exit

--url URL server REST api url

--retry RETRY retry limit

--raw output machine-readable json

--explain output verbose explanation of mapping of CLI calls to HTTPS API endpoints

--api-key API_KEY api key. defaults to using the one stored in ~/.vast_api_key

Use 'vast COMMAND --help' for more info about a command


Similar Open Source Tools

vast-python

This repository contains the open source python command line interface for vast.ai. The CLI has all the main functionality of the vast.ai website GUI and uses the same underlying REST API. The main functionality is self-contained in the script file vast.py, with additional invoice generating commands in vast_pdf.py. Users can interact with the vast.ai platform through the CLI to manage instances, create templates, manage teams, and perform various cloud-related tasks.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

numerapi

Numerapi is a Python client to the Numerai API that allows users to automatically download and upload data for the Numerai machine learning competition. It provides functionalities for downloading training data, uploading predictions, and accessing user, submission, and competitions information for both the main competition and Numerai Signals competition. Users can interact with the API using Python modules or command line interface. Tokens are required for certain actions like uploading predictions or staking, which can be obtained from Numer.ai account settings. The tool also supports features like checking new rounds, getting leaderboards, and managing stakes.

leettools

LeetTools is an AI search assistant that can perform highly customizable search workflows and generate customized format results based on both web and local knowledge bases. It provides an automated document pipeline for data ingestion, indexing, and storage, allowing users to focus on implementing workflows without worrying about infrastructure. LeetTools can run with minimal resource requirements on the command line with configurable LLM settings and supports different databases for various functions. Users can configure different functions in the same workflow to use different LLM providers and models.

LARS

LARS is an application that enables users to run Large Language Models (LLMs) locally on their devices, upload their own documents, and engage in conversations where the LLM grounds its responses with the uploaded content. The application focuses on Retrieval Augmented Generation (RAG) to increase accuracy and reduce AI-generated inaccuracies. LARS provides advanced citations, supports various file formats, allows follow-up questions, provides full chat history, and offers customization options for LLM settings. Users can force enable or disable RAG, change system prompts, and tweak advanced LLM settings. The application also supports GPU-accelerated inferencing, multiple embedding models, and text extraction methods. LARS is open-source and aims to be the ultimate RAG-centric LLM application.

aws-lex-web-ui

The AWS Lex Web UI is a sample Amazon Lex web interface that provides a chatbot UI component for integration into websites. It supports voice and text interactions, Lex response cards, and programmable configuration using JavaScript. The interface can be used as a full-page chatbot UI or embedded as a widget. It offers mobile-ready responsive UI, seamless voice-text switching, and interactive messaging support. The project includes CloudFormation templates for easy deployment and customization. Users can modify configurations, integrate the UI into existing sites, and deploy using various methods like CloudFormation, pre-built libraries, or npm installation.

0chain

Züs is a high-performance cloud on a fast blockchain offering privacy and configurable uptime. It uses erasure code to distribute data between data and parity servers, allowing flexibility for IT managers to design for security and uptime. Users can easily share encrypted data with business partners through a proxy key sharing protocol. The ecosystem includes apps like Blimp for cloud migration, Vult for personal cloud storage, and Chalk for NFT artists. Other apps include Bolt for secure wallet and staking, Atlus for blockchain explorer, and Chimney for network participation. The QoS protocol challenges providers based on response time, while the privacy protocol enables secure data sharing. Züs supports hybrid and multi-cloud architectures, allowing users to improve regulatory compliance and security requirements.

InfiniStore

InfiniStore is an open-source high-performance KV store designed to support LLM Inference clusters. It provides high-performance and low-latency KV cache transfer and reuse among inference nodes. In addition to inference clusters, it can be used as a standalone KV store for integration with LLM training or inference services. InfiniStore is currently integrated with vLLM via LMCache and is in progress for integration with SGLang and other inference engines.

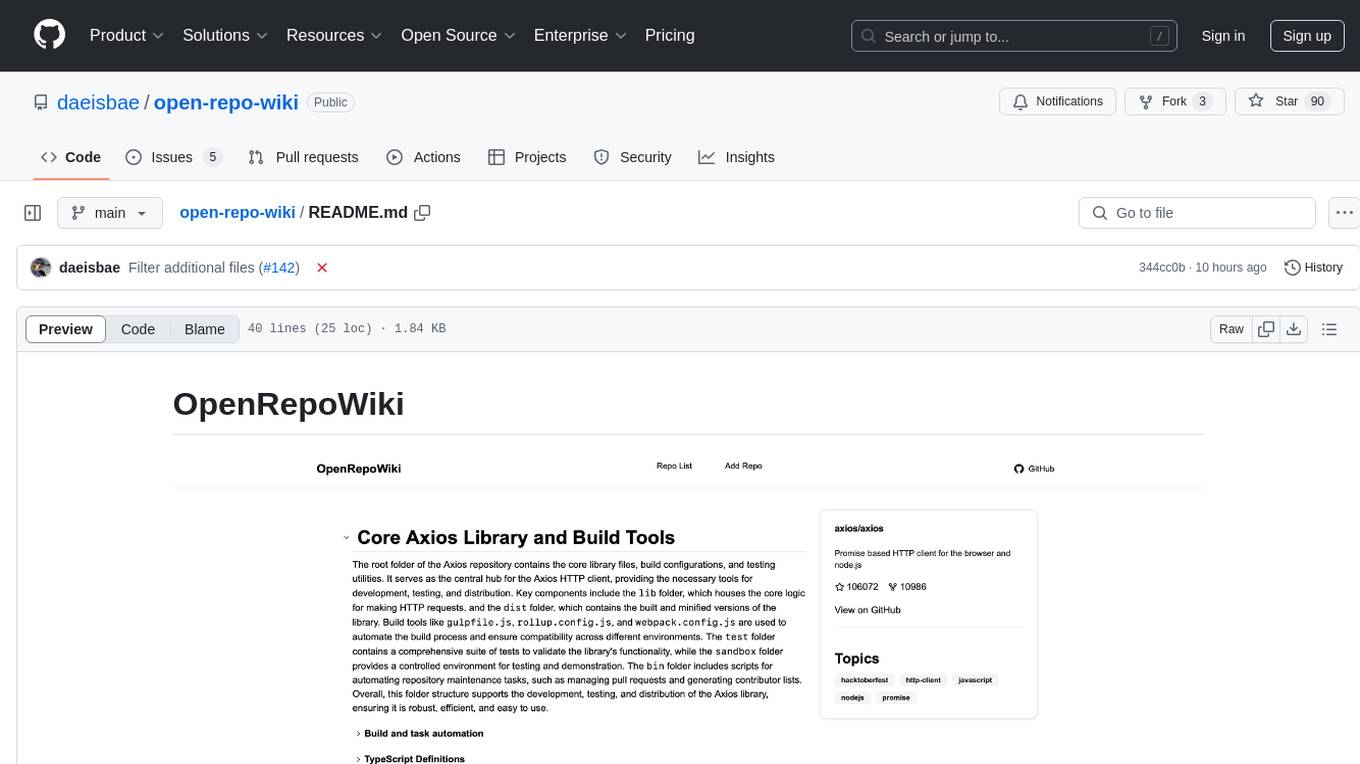

open-repo-wiki

OpenRepoWiki is a tool designed to automatically generate a comprehensive wiki page for any GitHub repository. It simplifies the process of understanding the purpose, functionality, and core components of a repository by analyzing its code structure, identifying key files and functions, and providing explanations. The tool aims to assist individuals who want to learn how to build various projects by providing a summarized overview of the repository's contents. OpenRepoWiki requires certain dependencies such as Google AI Studio or Deepseek API Key, PostgreSQL for storing repository information, Github API Key for accessing repository data, and Amazon S3 for optional usage. Users can configure the tool by setting up environment variables, installing dependencies, building the server, and running the application. It is recommended to consider the token usage and opt for cost-effective options when utilizing the tool.

Open_Data_QnA

Open Data QnA is a Python library that allows users to interact with their PostgreSQL or BigQuery databases in a conversational manner, without needing to write SQL queries. The library leverages Large Language Models (LLMs) to bridge the gap between human language and database queries, enabling users to ask questions in natural language and receive informative responses. It offers features such as conversational querying with multiturn support, table grouping, multi schema/dataset support, SQL generation, query refinement, natural language responses, visualizations, and extensibility. The library is built on a modular design and supports various components like Database Connectors, Vector Stores, and Agents for SQL generation, validation, debugging, descriptions, embeddings, responses, and visualizations.

bedrock-claude-chatbot

Bedrock Claude ChatBot is a Streamlit application that provides a conversational interface for users to interact with various Large Language Models (LLMs) on Amazon Bedrock. Users can ask questions, upload documents, and receive responses from the AI assistant. The app features conversational UI, document upload, caching, chat history storage, session management, model selection, cost tracking, logging, and advanced data analytics tool integration. It can be customized using a config file and is extensible for implementing specialized tools using Docker containers and AWS Lambda. The app requires access to Amazon Bedrock Anthropic Claude Model, S3 bucket, Amazon DynamoDB, Amazon Textract, and optionally Amazon Elastic Container Registry and Amazon Athena for advanced analytics features.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using a LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

sql-eval

This repository contains the code that Defog uses for the evaluation of generated SQL. It's based off the schema from the Spider, but with a new set of hand-selected questions and queries grouped by query category. The testing procedure involves generating a SQL query, running both the 'gold' query and the generated query on their respective database to obtain dataframes with the results, comparing the dataframes using an 'exact' and a 'subset' match, logging these alongside other metrics of interest, and aggregating the results for reporting. The repository provides comprehensive instructions for installing dependencies, starting a Postgres instance, importing data into Postgres, importing data into Snowflake, using private data, implementing a query generator, and running the test with different runners.

trinityX

TrinityX is an open-source HPC, AI, and cloud platform designed to provide all services required in a modern system, with full customization options. It includes default services like Luna node provisioner, OpenLDAP, SLURM or OpenPBS, Prometheus, Grafana, OpenOndemand, and more. TrinityX also sets up NFS-shared directories, OpenHPC applications, environment modules, HA, and more. Users can install TrinityX on Enterprise Linux, configure network interfaces, set up passwordless authentication, and customize the installation using Ansible playbooks. The platform supports HA, OpenHPC integration, and provides detailed documentation for users to contribute to the project.

olmocr

olmOCR is a toolkit designed for training language models to work with PDF documents in real-world scenarios. It includes various components such as a prompting strategy for natural text parsing, an evaluation toolkit for comparing pipeline versions, filtering by language and SEO spam removal, finetuning code for specific models, processing PDFs through a finetuned model, and viewing documents created from PDFs. The toolkit requires a recent NVIDIA GPU with at least 20 GB of RAM and 30GB of free disk space. Users can install dependencies, set up a conda environment, and utilize olmOCR for tasks like converting single or multiple PDFs, viewing extracted text, and running batch inference pipelines.

For similar tasks

vast-python

This repository contains the open source python command line interface for vast.ai. The CLI has all the main functionality of the vast.ai website GUI and uses the same underlying REST API. The main functionality is self-contained in the script file vast.py, with additional invoice generating commands in vast_pdf.py. Users can interact with the vast.ai platform through the CLI to manage instances, create templates, manage teams, and perform various cloud-related tasks.

hof

Hof is a CLI tool that unifies data models, schemas, code generation, and a task engine. It allows users to augment data, config, and schemas with CUE to improve consistency, generate multiple Yaml and JSON files, explore data or config with a TUI, and run workflows with automatic task dependency inference. The tool uses CUE to power the DX and implementation, providing a language for specifying schemas, configuration, and writing declarative code. Hof offers core features like code generation, data model management, task engine, CUE cmds, creators, modules, TUI, and chat for better, scalable results.

obsidian-systemsculpt-ai

SystemSculpt AI is a comprehensive AI-powered plugin for Obsidian, integrating advanced AI capabilities into note-taking, task management, knowledge organization, and content creation. It offers modules for brain integration, chat conversations, audio recording and transcription, note templates, and task generation and management. Users can customize settings, utilize AI services like OpenAI and Groq, and access documentation for detailed guidance. The plugin prioritizes data privacy by storing sensitive information locally and offering the option to use local AI models for enhanced privacy.

sdk

Smithery SDK is a tool that provides utilities to simplify the development and deployment of Model Context Protocols (MCPs) with Smithery. It offers functionalities for finding and connecting to MCP servers in the registry, building and deploying MCP servers, and creating fast MCP servers with Smithery session configuration support. Additionally, it includes a ready-to-use MCP server template. For more information and access to the MCP registry, visit https://smithery.ai/.

mushroom

MRCMS is a Java-based content management system that uses data model + template + plugin implementation, providing built-in article model publishing functionality. The goal is to quickly build small to medium websites.

flow-like

Flow-Like is an enterprise-grade workflow operating system built upon Rust for uncompromising performance, efficiency, and code safety. It offers a modular frontend for apps, a rich set of events, a node catalog, a powerful no-code workflow IDE, and tools to manage teams, templates, and projects within organizations. With typed workflows, users can create complex, large-scale workflows with clear data origins, transformations, and contracts. Flow-Like is designed to automate any process through seamless integration of LLM, ML-based, and deterministic decision-making instances.

ag2

Ag2 is a lightweight and efficient tool for generating automated reports from data sources. It simplifies the process of creating reports by allowing users to define templates and automate the data extraction and formatting. With Ag2, users can easily generate reports in various formats such as PDF, Excel, and CSV, saving time and effort in manual report generation tasks.

cedana-cli

Cedana is a framework for the democritization and commodification of compute. It leverages checkpoint/restore to migrate work across machines, clouds, and beyond. The repo contains a CLI tool for developers to experiment with the system.

For similar jobs

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

AI-in-a-Box

AI-in-a-Box is a curated collection of solution accelerators that can help engineers establish their AI/ML environments and solutions rapidly and with minimal friction, while maintaining the highest standards of quality and efficiency. It provides essential guidance on the responsible use of AI and LLM technologies, specific security guidance for Generative AI (GenAI) applications, and best practices for scaling OpenAI applications within Azure. The available accelerators include: Azure ML Operationalization in-a-box, Edge AI in-a-box, Doc Intelligence in-a-box, Image and Video Analysis in-a-box, Cognitive Services Landing Zone in-a-box, Semantic Kernel Bot in-a-box, NLP to SQL in-a-box, Assistants API in-a-box, and Assistants API Bot in-a-box.

awsome-distributed-training

This repository contains reference architectures and test cases for distributed model training with Amazon SageMaker Hyperpod, AWS ParallelCluster, AWS Batch, and Amazon EKS. The test cases cover different types and sizes of models as well as different frameworks and parallel optimizations (Pytorch DDP/FSDP, MegatronLM, NemoMegatron...).

generative-ai-cdk-constructs

The AWS Generative AI Constructs Library is an open-source extension of the AWS Cloud Development Kit (AWS CDK) that provides multi-service, well-architected patterns for quickly defining solutions in code to create predictable and repeatable infrastructure, called constructs. The goal of AWS Generative AI CDK Constructs is to help developers build generative AI solutions using pattern-based definitions for their architecture. The patterns defined in AWS Generative AI CDK Constructs are high level, multi-service abstractions of AWS CDK constructs that have default configurations based on well-architected best practices. The library is organized into logical modules using object-oriented techniques to create each architectural pattern model.

model_server

OpenVINO™ Model Server (OVMS) is a high-performance system for serving models. Implemented in C++ for scalability and optimized for deployment on Intel architectures, the model server uses the same architecture and API as TensorFlow Serving and KServe while applying OpenVINO for inference execution. Inference service is provided via gRPC or REST API, making deploying new algorithms and AI experiments easy.

dify-helm

Deploy langgenius/dify, an LLM based chat bot app on kubernetes with helm chart.