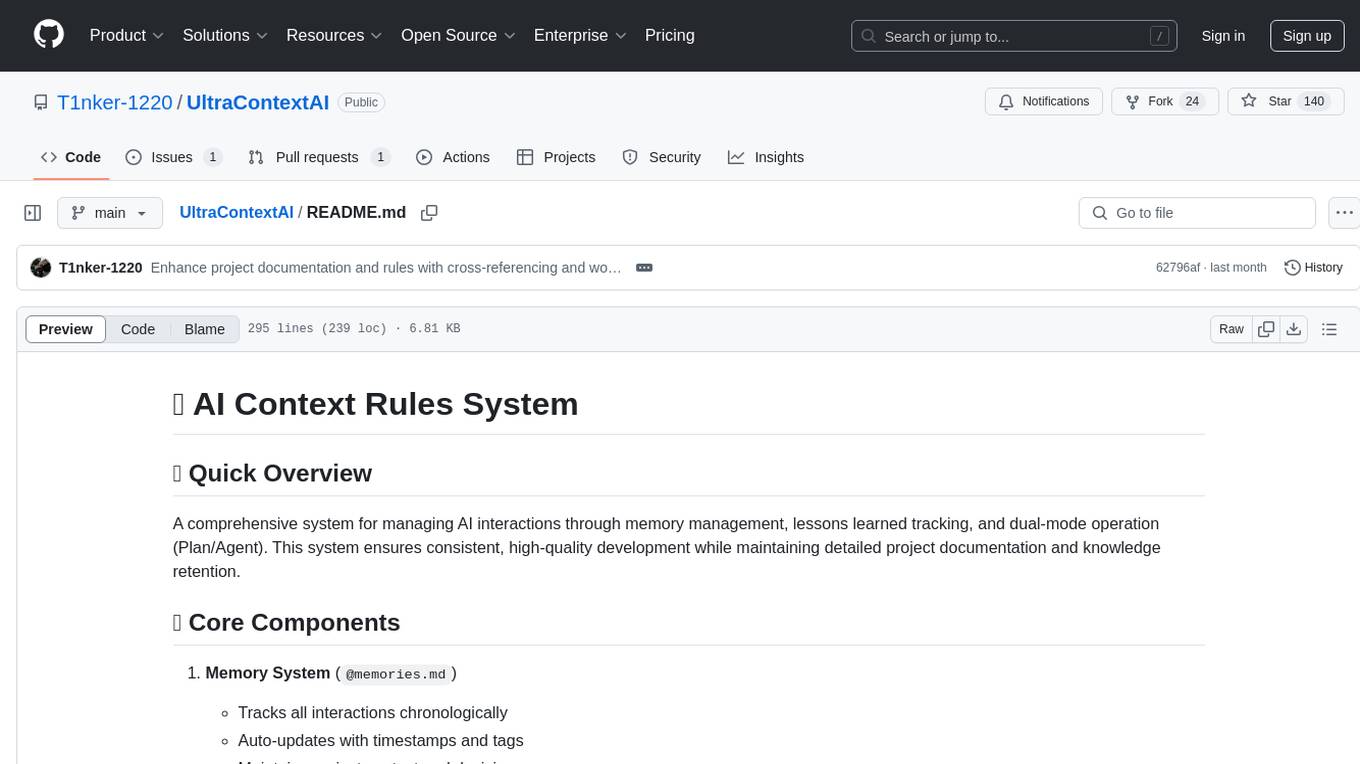

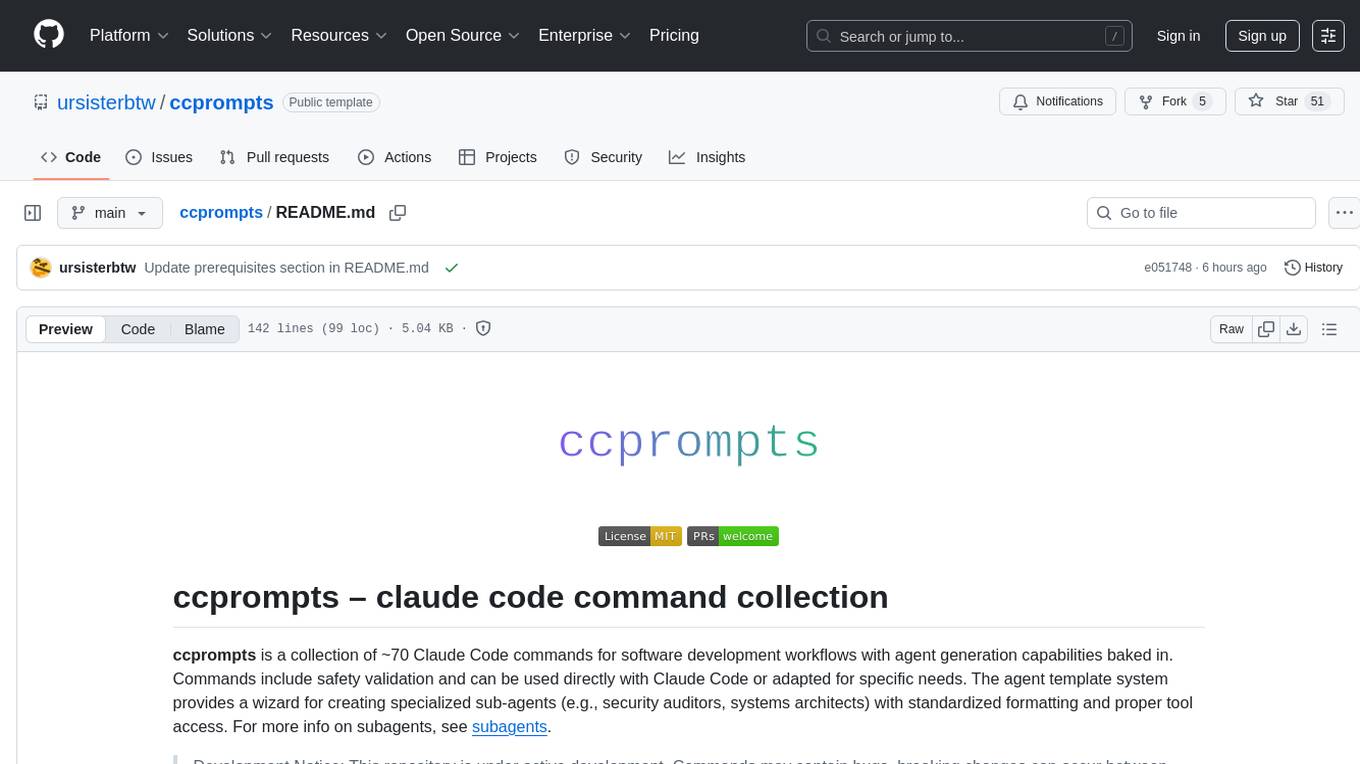

ccprompts

Practical Claude Code commands and subagents

Stars: 51

ccprompts is a collection of ~70 Claude Code commands for software development workflows with agent generation capabilities. It includes safety validation and can be used directly with Claude Code or adapted for specific needs. The agent template system provides a wizard for creating specialized sub-agents (e.g., security auditors, systems architects) with standardized formatting and proper tool access. The repository is under active development, so caution is advised when using it in production environments.

README:

ccprompts is a collection of ~70 Claude Code commands for software development workflows with agent generation capabilities baked in. Commands include safety validation and can be used directly with Claude Code or adapted for specific needs. The agent template system provides a wizard for creating specialized sub-agents (e.g., security auditors, systems architects) with standardized formatting and proper tool access. For more info on subagents, see subagents.

Development Notice: This repository is under active development. Commands may contain bugs, breaking changes can occur between versions, and the structure may evolve. Use with caution in production environments.

Install Dagger (not explicitly required, but recommended to enable containerized command execution):

Linux:

curl -fsSL https://dl.dagger.io/dagger/install.sh | BIN_DIR=$HOME/.local/bin sh

macOS:

brew install dagger/tap/dagger

Windows:

winget install Dagger.Cli

Clone the repository, install dependencies, and run the validator:

git clone https://github.com/ursisterbtw/ccprompts.git; and cd ccprompts; and bun i; and bun run validate

To add a new command:

- Copy an existing command from `.claude/commands/` to your desired phase folder.

- Update the structure and content for your specific use case.

- Include safety validation steps.

- Run `bun run validate` before committing.
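The copy step above can be sketched as a small shell helper. This is a minimal sketch, assuming each command is a single Markdown file inside a phase folder (as the `cat` examples in this README suggest); the function name `new_command` and the target names in the usage comment are hypothetical and not part of the repository.

```shell
# Hypothetical helper (not part of ccprompts): copy an existing command file
# into a phase folder under a new name, refusing to overwrite anything.
new_command() {
  src="$1"        # existing command file to start from
  phase_dir="$2"  # destination phase folder
  name="$3"       # new command name, without the .md extension
  dest="$phase_dir/$name.md"

  if [ ! -f "$src" ]; then
    echo "source command not found: $src" >&2
    return 1
  fi
  if [ -e "$dest" ]; then
    echo "refusing to overwrite: $dest" >&2
    return 1
  fi

  # Create the phase folder if needed, copy, and print the new path.
  mkdir -p "$phase_dir" && cp "$src" "$dest" && echo "$dest"
}

# Example usage (the target folder and name are illustrative):
#   new_command .claude/commands/09-agentic-capabilities/mcp-discover.md \
#     .claude/commands/07-utilities my-command
#   # edit the new file, then run: bun run validate
```

Refusing to overwrite keeps an accidental name clash from silently replacing an existing command.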

The repository includes an agent creation system using templates/SUB_AGENT_TEMPLATE.md:

- Use the `agent-template-wizard` agent to create new specialized agents

- Provide domain expertise and specific capabilities needed

- Wizard fills template placeholders with consistent structure

- Generated agents follow 7-step methodology with proper categorization

- Agents include examples, color coding, and validation approaches

The template system ensures consistent agent structure while allowing domain-specific customization.
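To illustrate what "filling template placeholders" can look like, here is a minimal sketch. It assumes a `{{KEY}}` placeholder syntax, which is a guess for illustration only; the actual markers in templates/SUB_AGENT_TEMPLATE.md may differ, and the function name `fill_placeholder` and the keys shown are hypothetical.

```shell
# Hypothetical sketch: replace every {{KEY}} marker in a template read from stdin.
# The {{KEY}} syntax is an assumption, not taken from SUB_AGENT_TEMPLATE.md.
fill_placeholder() {
  key="$1"
  value="$2"
  # Escape characters that are special in a sed replacement:
  # backslash, ampersand, and the | used as delimiter below.
  escaped=$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')
  sed "s|{{$key}}|$escaped|g"
}

# Example usage (keys are illustrative; single-line values only):
#   fill_placeholder AGENT_NAME 'security-auditor' < template.md \
#     | fill_placeholder DOMAIN 'application security' > security-auditor.md
```

Chaining one call per placeholder keeps every generated agent on the same structure while only the domain-specific values change.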

.claude/

├── commands/ # ~70 commands across 12 phases (00-11)

│ ├── 00-initial-workflow/ # Project analysis and workflow (2 commands)

│ ├── 01-project-setup/ # Documentation, learning, MCP (3 commands)

│ ├── 02-development/ # Backup, debug, optimize, refactor (5 commands)

│ ├── 03-security/ # Security auditing and compliance (4 commands)

│ ├── 04-testing/ # Testing and troubleshooting (2 commands)

│ ├── 05-deployment/ # Deployment and CI/CD (4 commands)

│ ├── 06-collaboration/ # Code review and team workflow (4 commands)

│ ├── 07-utilities/ # Productivity and management tools (10 commands)

│ ├── 08-extras/ # Health checks and modernization (4 commands)

│ ├── 09-agentic-capabilities/ # MCP and agent orchestration (12 commands)

│ ├── 10-ai-native-development/ # AI-powered development tools (10 commands)

│ └── 11-enterprise-scale/ # Governance and multi-repo (8 commands)

├── agents/ # Agent templates and configurations

├── workflows/ # Automated workflow definitions

├── README.md

├── settings.json

└── command-registry.json

scripts/ # Safety system + validation utilities

templates/ # Sub-agent template files

tests/ # Jest testing suite

docs/ # Multi-level documentation
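The per-phase counts in the tree above can be checked against a local checkout with a short script. This is a minimal sketch, assuming each command is one `.md` file placed directly inside its phase folder; the function name `count_commands` is hypothetical.

```shell
# Hypothetical helper: print "<phase-folder> <number of .md files>" for each
# phase folder under the given commands directory (default: .claude/commands).
count_commands() {
  root="${1:-.claude/commands}"
  for dir in "$root"/*/; do
    # Skip the unexpanded glob when the directory has no subfolders.
    [ -d "$dir" ] || continue
    # Count only Markdown files directly inside the phase folder.
    n=$(find "$dir" -maxdepth 1 -type f -name '*.md' | wc -l | tr -d ' ')
    printf '%s %s\n' "$(basename "$dir")" "$n"
  done
}

# Example usage from the repository root:
#   count_commands
```

Summing the counts listed in the tree gives 68 commands, consistent with the "~70" figure.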

cat .claude/commands/09-agentic-capabilities/mcp-discover.md

cat .claude/commands/10-ai-native-development/ai-pair-program.md

cat .claude/commands/11-enterprise-scale/governance.md

/mcp-discover install filesystem --path=/project/data

/ai-pair-program advanced typescript --context-aware

/governance policy organization soc2 --enforce-automatically

./scripts/safe-run.sh "command"

See SAFETY.md for full safety system documentation.

- Open an issue for bugs, feature requests, or questions

- Start a discussion by opening an issue for ideas and feedback

- See CONTRIBUTING.md for guidelines

Pull requests are welcome. Please:

- Run the validator and ensure no errors.

- Follow conventional commit messages (feat: …, fix: …, etc.).

- Keep commands clear and focused; avoid unnecessary complexity.
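A commit subject can be checked against the conventional format before pushing. This is a minimal sketch, not the repository's own validator: the function name `is_conventional` is hypothetical, and the list of types beyond `feat` and `fix` is an assumption borrowed from common commit-lint presets.

```shell
# Hypothetical check: succeed if the first line of a commit message looks like
# "type: description" or "type(scope): description", with an optional "!".
# Only feat and fix are named in this README; the other types are assumed.
is_conventional() {
  printf '%s\n' "$1" | head -n 1 | grep -Eq \
    '^(feat|fix|docs|style|refactor|perf|test|build|ci|chore|revert)(\([a-z0-9._-]+\))?!?: .+'
}

# Example usage:
#   is_conventional "feat: add mcp-discover dry-run mode" && echo ok
#   is_conventional "Added stuff" || echo "not conventional"
```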

MIT. See LICENSE for details.

Alternative AI tools for ccprompts

Similar Open Source Tools

OpenManus

OpenManus is an open-source project aiming to replicate the capabilities of the Manus AI agent, known for autonomously executing complex tasks like travel planning and stock analysis. The project provides a modular, containerized framework using Docker, Python, and JavaScript, allowing developers to build, deploy, and experiment with a multi-agent AI system. Features include collaborative AI agents, Dockerized environment, task execution support, tool integration, modular design, and community-driven development. Users can interact with OpenManus via CLI, API, or web UI, and the project welcomes contributions to enhance its capabilities.

oreilly_live_training_agents

This repository provides resources and notebooks for O'Reilly Live Training on getting started with LLM Agents using LangChain & LangGraph. It includes setup instructions, core learning paths, additional topics, repository structure, and additional resources for learning and deploying LangGraph agents.

RooFlow

RooFlow is a VS Code extension that enhances AI-assisted development by providing persistent project context and optimized mode interactions. It reduces token consumption and streamlines workflow by integrating Architect, Code, Test, Debug, and Ask modes. The tool simplifies setup, offers real-time updates, and provides clearer instructions through YAML-based rule files. It includes components like Memory Bank, System Prompts, VS Code Integration, and Real-time Updates. Users can install RooFlow by downloading specific files, placing them in the project structure, and running an insert-variables script. They can then start a chat, select a mode, interact with Roo, and use the 'Update Memory Bank' command for synchronization. The Memory Bank structure includes files for active context, decision log, product context, progress tracking, and system patterns. RooFlow features persistent context, real-time updates, mode collaboration, and reduced token consumption.

BIRD-CRITIC-1

BIRD-CRITIC 1.0 is a SQL benchmark designed to evaluate the capability of large language models (LLMs) in diagnosing and solving user issues within real-world database environments. It comprises 600 tasks for development and 200 held-out out-of-distribution tests across 4 prominent open-source SQL dialects. The benchmark expands beyond simple SELECT queries to cover a wider range of SQL operations, reflecting actual application scenarios. An optimized execution-based evaluation environment is included for rigorous and efficient validation.

Linly-Talker

Linly-Talker is an innovative digital human conversation system that integrates the latest artificial intelligence technologies, including Large Language Models (LLM) 🤖, Automatic Speech Recognition (ASR) 🎙️, Text-to-Speech (TTS) 🗣️, and voice cloning technology 🎤. This system offers an interactive web interface through the Gradio platform 🌐, allowing users to upload images 📷 and engage in personalized dialogues with AI 💬.

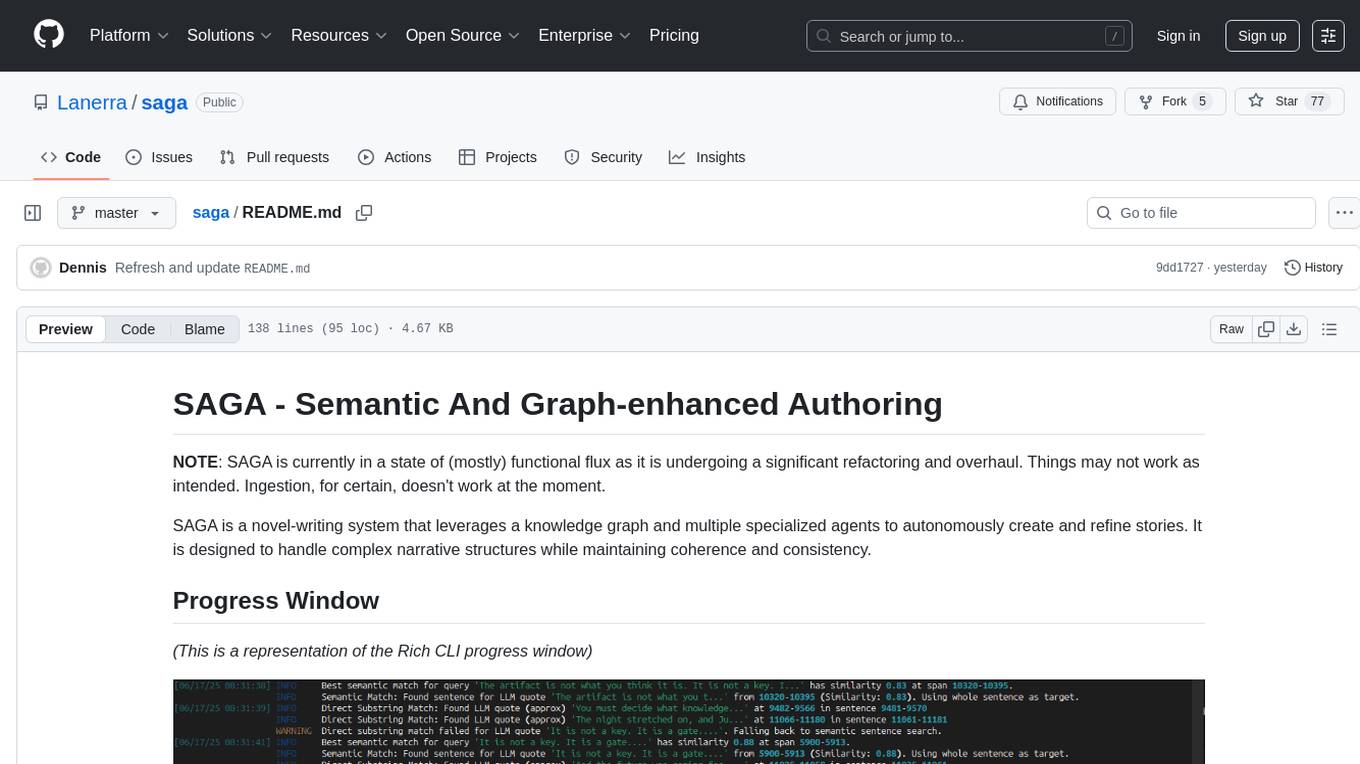

saga

SAGA is a novel-writing system that leverages a knowledge graph and specialized agents to autonomously create and refine stories. It handles complex narrative structures while maintaining coherence and consistency. Features include a Knowledge Graph using Neo4j, Modular Agent Architecture, LLM Integration, Configurable Generation Parameters, Robust Testing Framework, Code Quality enforcement, Vector Search, and Agentic Planning. The system structure includes components for specialized agents, core components, data access, documentation, initialization scripts, Pydantic models, output directory, orchestrator logic, text processing tools, UI components, utility functions, and more.

openkf

OpenKF (Open Knowledge Flow) is an online intelligent customer service system. It is an open-source customer service system based on OpenIM, supporting LLM (Local Knowledgebase) customer service and multi-channel customer service. It is easy to integrate with third-party systems, deploy, and perform secondary development. The system provides features like login page, config page, dashboard page, platform page, and session page. Users can quickly get started with OpenKF by following the installation and run instructions. The architecture follows MVC design with a standardized directory structure. The community encourages involvement through community meetings, contributions, and development. OpenKF is licensed under the Apache 2.0 license.

CodeRAG

CodeRAG is an AI-powered code retrieval and assistance tool that combines Retrieval-Augmented Generation (RAG) with AI to provide intelligent coding assistance. It indexes your entire codebase for contextual suggestions based on your complete project, offering real-time indexing, semantic code search, and contextual AI responses. The tool monitors your code directory, generates embeddings for Python files, stores them in a FAISS vector database, matches user queries against the code database, and sends retrieved code context to GPT models for intelligent responses. CodeRAG also features a Streamlit web interface with a chat-like experience for easy usage.

moling

MoLing is a computer-use and browser-use MCP Server that implements system interaction through operating system APIs, enabling file system operations such as reading, writing, merging, statistics, and aggregation, as well as the ability to execute system commands. It is a dependency-free local office automation assistant. Requiring no installation of any dependencies, MoLing can be run directly and is compatible with multiple operating systems, including Windows, Linux, and macOS. This eliminates the hassle of dealing with environment conflicts involving Node.js, Python, Docker, and other development environments. Command-line operations are dangerous and should be used with caution. MoLing supports features like file system operations, command-line terminal execution, browser control powered by 'github.com/chromedp/chromedp', and future plans for personal PC data organization, document writing assistance, schedule planning, and life assistant features. MoLing has been tested on macOS but may have issues on other operating systems.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

LLM-FuzzX

LLM-FuzzX is an open-source user-friendly fuzz testing tool for large language models (e.g., GPT, Claude, LLaMA), equipped with advanced task-aware mutation strategies, fine-grained evaluation, and jailbreak detection capabilities. It helps researchers and developers quickly discover potential security vulnerabilities and enhance model robustness. The tool features a user-friendly web interface for visual configuration and real-time monitoring, supports various advanced mutation methods, integrates RoBERTa model for real-time jailbreak detection and evaluation, supports multiple language models like GPT, Claude, LLaMA, provides visualization analysis with seed flowcharts and experiment data statistics, and offers detailed logging support for main, mutation, and jailbreak logs.

llmxcpg

LLMxCPG is a framework for vulnerability detection using Code Property Graphs (CPG) and Large Language Models (LLM). It involves a two-phase process: Slice Construction where an LLM generates queries for a CPG to extract a code slice, and Vulnerability Detection where another LLM classifies the code slice as vulnerable or safe. The repository includes implementations of baseline models, information on datasets, scripts for running models, prompt templates, query generation examples, and configurations for fine-tuning models.

UltraContextAI

UltraContextAI is a comprehensive system for managing AI interactions through memory management, lessons learned tracking, and dual-mode operation (Plan/Agent). It ensures consistent, high-quality development while maintaining detailed project documentation and knowledge retention. The system includes core components like Memory System, Lessons Learned, and Scratchpad. It operates in Plan Mode for information gathering and planning, and Agent Mode for execution. Users can create new features, fix bugs, set up projects, and update documentation using the system. Real-time updates, version control, and cross-referencing are key aspects of the system. Best practices include memory management, task tracking, and documentation standards. Tips and tricks are provided for handling AI and Cursor issues. Contributions to the system are welcome, and it is licensed under MIT License.

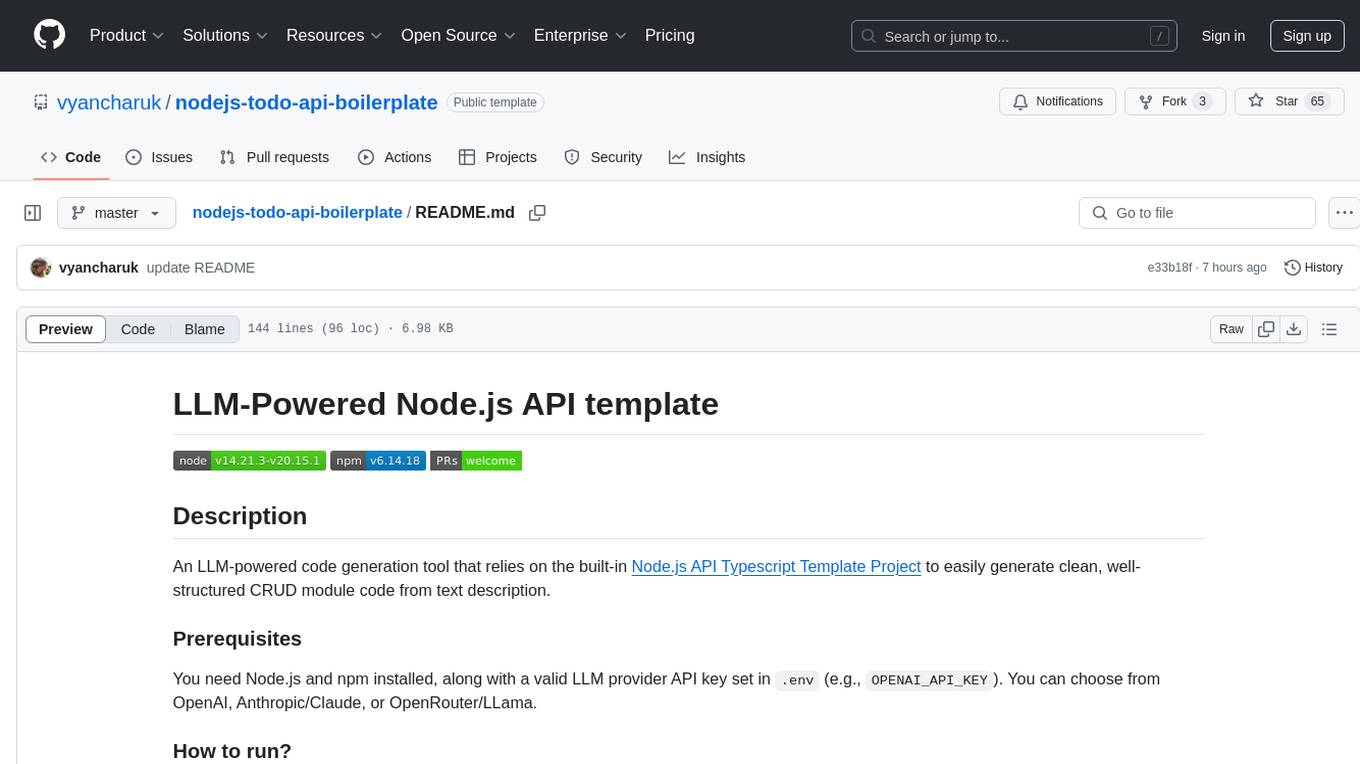

nodejs-todo-api-boilerplate

An LLM-powered code generation tool that relies on the built-in Node.js API Typescript Template Project to easily generate clean, well-structured CRUD module code from text description. It orchestrates 3 LLM micro-agents (`Developer`, `Troubleshooter` and `TestsFixer`) to generate code, fix compilation errors, and ensure passing E2E tests. The process includes module code generation, DB migration creation, seeding data, and running tests to validate output. By cycling through these steps, it guarantees consistent and production-ready CRUD code aligned with vertical slicing architecture.

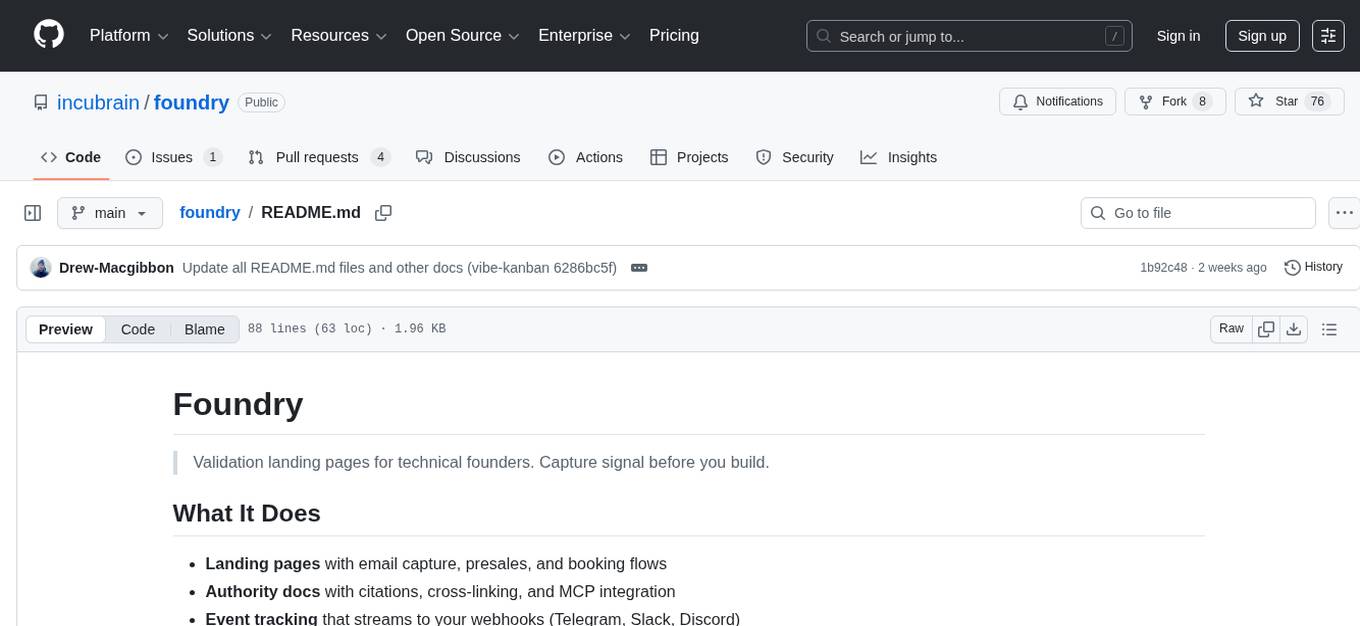

foundry

Foundry is a tool designed for technical founders to create validation landing pages. It allows users to capture email signals before building, with features such as landing pages, authority docs, event tracking, and zero lock-in. The tool is built using Nuxt 4, Tailwind v4, and TypeScript, and provides quick start options for easy setup. Foundry helps founders test interest, collect payments, and sell services before fully developing their products.

For similar tasks

pilottai

PilottAI is a Python framework for building autonomous multi-agent systems with advanced orchestration capabilities. It provides enterprise-ready features for building scalable AI applications. The framework includes hierarchical agent systems, production-ready features like asynchronous processing and fault tolerance, advanced memory management with semantic storage, and integrations with multiple LLM providers and custom tools. PilottAI offers specialized agents for various tasks such as customer service, document processing, email handling, knowledge acquisition, marketing, research analysis, sales, social media, and web search. The framework also provides documentation, example use cases, and advanced features like memory management, load balancing, and fault tolerance.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

bedrock-engineer

Bedrock Engineer is an autonomous software development agent application that utilizes Amazon Bedrock. It allows users to customize, create/edit files, execute commands, search the web, use a knowledge base, utilize multi-agents, generate images, and more. The tool provides an interactive chat interface with AI agents, file system operations, web search capabilities, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select and customize agents, choose from various tools like file system operations, web search, Amazon Bedrock integration, and system command execution. Additionally, the tool offers features for website generation, connecting to design system data sources, AWS Step Functions ASL definition generation, diagram creation using natural language descriptions, and multi-language support.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with GKE platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale

- Flexible integration with distributed computing and data processing frameworks

- Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

NVIDIA GPU exporter for Prometheus, using the `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.