NeuroSandboxWebUI

(Windows/Linux) Local WebUI for neural network models (Text, Image, Video, 3D, Audio), written in Python with a Gradio interface. Translated into 3 languages.

Stars: 53

A simple and convenient interface for using various neural network models. Users can interact with LLM using text, voice, and image input to generate images, videos, 3D objects, music, and audio. The tool supports a wide range of models for different tasks such as image generation, video generation, audio file separation, voice conversion, and more. Users can also view files from the outputs directory in a gallery, download models, change application settings, and check system sensors. The goal of the project is to create an easy-to-use application for utilizing neural network models.

README:

Features | Dependencies | SystemRequirements | Install | Wiki | Acknowledgment | Licenses

A simple and convenient interface for using various neural network models. You can communicate with LLMs using text, voice and image input; use StableDiffusion, Kandinsky, Flux, HunyuanDiT, Lumina-T2X, Kolors, AuraFlow, Würstchen, DeepFloydIF, PixArt and PlaygroundV2.5 to generate images; ModelScope, ZeroScope 2, CogVideoX and Latte to generate videos; StableFast3D, Shap-E, SV34D and Zero123Plus to generate 3D objects; StableAudioOpen, AudioCraft and AudioLDM 2 to generate music and audio; CoquiTTS, MMS and SunoBark for text-to-speech; OpenAI-Whisper and MMS for speech-to-text; Wav2Lip for lip-sync; LivePortrait to animate an image; Roop for face swapping; Rembg for background removal; CodeFormer for face restoration; PixelOE for image pixelization; DDColor for image colorization; LibreTranslate and SeamlessM4Tv2 for text translation; Demucs and UVR for audio file separation; and RVC for voice conversion. You can also view files from the outputs directory in the gallery, download LLM and StableDiffusion models, change the application settings inside the interface and check system sensors.

The goal of the project is to create the easiest possible application for using neural network models.

Features:

- Easy installation via Install.bat (Windows) or Install.sh (Linux)

- You can use the application from your mobile device on the local network (via IPv4) or from anywhere online (via Share)

- Flexible and optimized interface (By Gradio)

- Debug logging to logs from the Install and Update files

- Available in three languages

- Support for Transformers, BNB, GPTQ, AWQ, ExLlamaV2 and llama.cpp models (LLM)

- Support for diffusers and safetensors models (StableDiffusion) - txt2img, img2img, depth2img, marigold, pix2pix, controlnet, upscale (latent), upscale (SUPIR), refiner, inpaint, outpaint, gligen, diffedit, blip-diffusion, animatediff, hotshot-xl, video, ldm3d, sd3, cascade, t2i-ip-adapter, ip-adapter-faceid and riffusion tabs

- Support for stable-diffusion-cpp models for FLUX and Stable Diffusion

- Support of additional models for image generation: Kandinsky (txt2img, img2img, inpaint), Flux (txt2img with cpp quantize and LoRA support, img2img, inpaint, controlnet), HunyuanDiT (txt2img, controlnet), Lumina-T2X, Kolors (txt2img with LoRA support, img2img, ip-adapter-plus), AuraFlow (with LoRA and AuraSR support), Würstchen, DeepFloydIF (txt2img, img2img, inpaint), PixArt and PlaygroundV2.5

- Support Extras with Rembg, CodeFormer, PixelOE, DDColor, DownScale, Format changer, FaceSwap (Roop) and Upscale (Real-ESRGAN) models for image, video and audio

- Support StableAudio

- Support AudioCraft (Models: musicgen, audiogen and magnet)

- Support AudioLDM 2 (Models: audio and music)

- Supports TTS and Whisper models (For LLM and TTS-STT)

- Support MMS for text-to-speech and speech-to-text

- Supports Lora, Textual inversion (embedding), Vae, MagicPrompt, Img2img, Depth, Marigold, Pix2Pix, Controlnet, Upscalers (latent and SUPIR), Refiner, Inpaint, Outpaint, GLIGEN, DiffEdit, BLIP-Diffusion, AnimateDiff, HotShot-XL, Videos, LDM3D, SD3, Cascade, T2I-IP-ADAPTER, IP-Adapter-FaceID and Riffusion models (For StableDiffusion)

- Support Multiband Diffusion model (For AudioCraft)

- Support LibreTranslate (Local API) and SeamlessM4Tv2 for language translations

- Support ModelScope, ZeroScope 2, CogVideoX and Latte for video generation

- Support SunoBark

- Support Demucs and UVR for audio file separation

- Support RVC for voice conversion

- Support StableFast3D, Shap-E, SV34D and Zero123Plus for 3D generation

- Support Wav2Lip

- Support LivePortrait to animate an image

- Support Multimodal (Moondream 2, LLaVA-NeXT-Video, Qwen2-Audio), PDF-Parsing (OpenParse), TTS (CoquiTTS), STT (Whisper), LORA and WebSearch (with DuckDuckGo) for LLM

- MetaData-Info viewer for generated images, videos and audio

- Model settings inside the interface

- Online and offline Wiki

- Gallery

- ModelDownloader (For LLM and StableDiffusion)

- Application settings

- Ability to see system sensors
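The feature list mentions access from a mobile device via IPv4 or via Share. As a rough sketch of how a Gradio app is commonly exposed in those two ways (this is not taken from the project's source; `launch_options`, the mode names and the placeholder page are hypothetical), the relevant `launch()` parameters are `server_name` and `share`:

```python
def launch_options(mode: str) -> dict:
    """Return keyword arguments for Gradio's launch() for a given access mode.

    The mode names are illustrative, not settings of NeuroSandboxWebUI.
    """
    if mode == "local":
        # Reachable only from the same machine.
        return {"server_name": "127.0.0.1", "share": False}
    if mode == "lan":
        # Listen on all interfaces so a phone on the same network can
        # open http://<this machine's IPv4 address>:<port>.
        return {"server_name": "0.0.0.0", "share": False}
    if mode == "share":
        # Ask Gradio to create a temporary public link.
        return {"server_name": "127.0.0.1", "share": True}
    raise ValueError(f"unknown mode: {mode!r}")


def main(mode: str = "lan") -> None:
    """Start a placeholder Gradio page with the chosen access mode."""
    import gradio as gr  # imported lazily so the helper above has no dependencies

    with gr.Blocks() as demo:
        gr.Markdown("Placeholder page")
    demo.launch(**launch_options(mode))
```

Calling `main("share")` would print a public URL in the terminal, while `main("lan")` keeps the app on the local network.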

Required dependencies:

- C++ compiler

- Windows: VisualStudio, VisualStudioCode and Cmake

- Linux: GCC, VisualStudioCode and Cmake

System requirements:

- System: Windows or Linux

- GPU: 6GB+ or CPU: 8 cores, 3.6GHz

- RAM: 16GB+

- Disk space: 20GB+

- Internet for downloading models and installing
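As an illustration only, the thresholds above can be turned into a small pre-install check. The sketch below assumes that "GPU: 6GB+" refers to video memory and that either the GPU or the CPU condition is sufficient; the function names are hypothetical and the CPU clock speed is not checked.

```python
import os
import shutil

# Thresholds copied from the requirements list above.
MIN_RAM_GB = 16
MIN_DISK_GB = 20
MIN_GPU_GB = 6      # assumed to mean GPU memory
MIN_CPU_CORES = 8


def unmet_requirements(ram_gb, free_disk_gb, gpu_vram_gb=0.0, cpu_cores=0):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {ram_gb} GB < {MIN_RAM_GB} GB")
    if free_disk_gb < MIN_DISK_GB:
        problems.append(f"Disk: {free_disk_gb} GB < {MIN_DISK_GB} GB")
    # Either a large enough GPU or a large enough CPU is accepted.
    if gpu_vram_gb < MIN_GPU_GB and cpu_cores < MIN_CPU_CORES:
        problems.append("Compute: need a 6GB+ GPU or an 8-core CPU")
    return problems


def local_disk_and_cpu(path="."):
    """Read free disk space (GB) and logical CPU count using only the stdlib."""
    free_gb = shutil.disk_usage(path).free / 1024 ** 3
    return free_gb, os.cpu_count() or 0
```

RAM and GPU memory are passed in by hand here because the standard library has no portable way to query them.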

Install (Windows):

- First install all RequiredDependencies

- Git clone https://github.com/Dartvauder/NeuroSandboxWebUI.git to any location

- Run Install.bat and wait for installation

- After installation, run Start.bat

- Wait for the application to launch

- Now you can start generating!

To get updates, run Update.bat

To work with the virtual environment through the terminal, run Venv.bat

Install (Linux):

- First install all RequiredDependencies

- Git clone https://github.com/Dartvauder/NeuroSandboxWebUI.git to any location

- In the terminal, run ./Install.sh and wait for installation of all dependencies

- After installation, run ./Start.sh

- Wait for the application to launch

- Now you can start generating!

To get updates, run ./Update.sh

To work with the virtual environment through the terminal, run ./Venv.sh
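The Windows and Linux instructions differ only in the script extension. A minimal sketch of that mapping follows; the helper names `script_command` and `setup_steps` are hypothetical, and only the script names and the repository URL come from the steps above.

```python
import platform
from typing import List, Optional

REPO_URL = "https://github.com/Dartvauder/NeuroSandboxWebUI.git"

# Script base names used in the install steps.
ACTIONS = ("Install", "Start", "Update", "Venv")


def script_command(action: str, system: Optional[str] = None) -> str:
    """Return the script to run for an action on Windows or Linux."""
    system = system or platform.system()
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    if system == "Windows":
        return f"{action}.bat"
    if system == "Linux":
        return f"./{action}.sh"
    raise ValueError(f"unsupported system: {system!r}")


def setup_steps(system: Optional[str] = None) -> List[str]:
    """List the first-time setup commands in order.

    The two scripts are meant to be run from inside the cloned folder.
    """
    return [
        f"git clone {REPO_URL}",
        script_command("Install", system),
        script_command("Start", system),
    ]
```

For example, `setup_steps("Linux")` lists the clone command followed by `./Install.sh` and `./Start.sh`.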

Many thanks to these projects. Thanks to their applications and libraries, I was able to create my application:

First of all, I want to thank the developers of PyCharm and GitHub. With the help of their applications, I was able to create and share my code.

- gradio - https://github.com/gradio-app/gradio

- transformers - https://github.com/huggingface/transformers

- auto-gptq - https://github.com/AutoGPTQ/AutoGPTQ

- autoawq - https://github.com/casper-hansen/AutoAWQ

- exllamav2 - https://github.com/turboderp/exllamav2

- tts - https://github.com/coqui-ai/TTS

- openai-whisper - https://github.com/openai/whisper

- torch - https://github.com/pytorch/pytorch

- soundfile - https://github.com/bastibe/python-soundfile

- cuda-python - https://github.com/NVIDIA/cuda-python

- gitpython - https://github.com/gitpython-developers/GitPython

- diffusers - https://github.com/huggingface/diffusers

- llama.cpp-python - https://github.com/abetlen/llama-cpp-python

- stable-diffusion-cpp-python - https://github.com/william-murray1204/stable-diffusion-cpp-python

- audiocraft - https://github.com/facebookresearch/audiocraft

- AudioLDM2 - https://github.com/haoheliu/AudioLDM2

- xformers - https://github.com/facebookresearch/xformers

- demucs - https://github.com/facebookresearch/demucs

- libretranslate - https://github.com/LibreTranslate/LibreTranslate

- libretranslatepy - https://github.com/argosopentech/LibreTranslate-py

- rembg - https://github.com/danielgatis/rembg

- trimesh - https://github.com/mikedh/trimesh

- suno-bark - https://github.com/suno-ai/bark

- IP-Adapter - https://github.com/tencent-ailab/IP-Adapter

- PyNanoInstantMeshes - https://github.com/vork/PyNanoInstantMeshes

- CLIP - https://github.com/openai/CLIP

- rvc-python - https://github.com/daswer123/rvc-python

- audio-separator - https://github.com/nomadkaraoke/python-audio-separator

- pixeloe - https://github.com/KohakuBlueleaf/PixelOE

- k-diffusion - https://github.com/crowsonkb/k-diffusion

- open-parse - https://github.com/Filimoa/open-parse

- AudioSR - https://github.com/haoheliu/versatile_audio_super_resolution

Many models have their own licenses. Before using a model, I advise you to familiarize yourself with its license:

- Transformers

- AutoGPTQ

- AutoAWQ

- exllamav2

- llama.cpp

- stable-diffusion.cpp

- CoquiTTS

- OpenAI-Whisper

- LibreTranslate

- Diffusers

- StableDiffusion1.5

- StableDiffusion2

- StableDiffusion3

- StableDiffusionXL

- StableCascade

- LatentDiffusionModel3D

- StableVideoDiffusion

- I2VGen-XL

- Rembg

- Shap-E

- StableAudioOpen

- AudioCraft

- AudioLDM2

- Demucs

- SunoBark

- Moondream2

- LLaVA-NeXT-Video

- Qwen2-Audio

- ZeroScope2

- GLIGEN

- Wav2Lip

- Roop

- CodeFormer

- ControlNet

- AnimateDiff

- Pix2Pix

- Kandinsky 2.1; 2.2; 3

- Flux-schnell

- Flux-dev

- HunyuanDiT

- Lumina-T2X

- DeepFloydIF

- PixArt

- CogVideoX

- Latte

- Kolors

- AuraFlow

- Würstchen

- ModelScope

- StableFast3D

- SV34D

- Zero123Plus

- Real-ESRGAN

- Refiner

- PlaygroundV2.5

- AuraSR

- IP-Adapter-FaceID

- T2I-IP-Adapter

- MMS

- SeamlessM4Tv2

- HotShot-XL

- Riffusion

- MozillaCommonVoice17

- UVR-MDX

- RVC

- DDColor

- PixelOE

- LivePortrait

- SUPIR

- MagicPrompt

- Marigold

- BLIP-Diffusion

- Consistency-Decoder

- Tiny-AutoEncoder

Alternative AI tools for NeuroSandboxWebUI

Similar Open Source Tools

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

ollama

Ollama is a lightweight, extensible framework for building and running language models on the local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be easily used in a variety of applications. Ollama is designed to be easy to use and accessible to developers of all levels. It is open source and available for free on GitHub.

dotclaude

A sophisticated multi-agent configuration system for Claude Code that provides specialized agents and command templates to accelerate code review, refactoring, security audits, tech-lead-guidance, and UX evaluations. It offers essential commands, directory structure details, agent system overview, command templates, usage patterns, collaboration philosophy, sync management, advanced usage guidelines, and FAQ. The tool aims to streamline development workflows, enhance code quality, and facilitate collaboration between developers and AI agents.

BrowserAI

BrowserAI is a tool that allows users to run large language models (LLMs) directly in the browser, providing a simple, fast, and open-source solution. It prioritizes privacy by processing data locally, is cost-effective with no server costs, works offline after initial download, and offers WebGPU acceleration for high performance. It is developer-friendly with a simple API, supports multiple engines, and comes with pre-configured models for easy use. Ideal for web developers, companies needing privacy-conscious AI solutions, researchers experimenting with browser-based AI, and hobbyists exploring AI without infrastructure overhead.

pipecat

Pipecat is an open-source framework designed for building generative AI voice bots and multimodal assistants. It provides code building blocks for interacting with AI services, creating low-latency data pipelines, and transporting audio, video, and events over the Internet. Pipecat supports various AI services like speech-to-text, text-to-speech, image generation, and vision models. Users can implement new services and contribute to the framework. Pipecat aims to simplify the development of applications like personal coaches, meeting assistants, customer support bots, and more by providing a complete framework for integrating AI services.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

X-AnyLabeling

X-AnyLabeling is a robust annotation tool that seamlessly incorporates an AI inference engine alongside an array of sophisticated features. Tailored for practical applications, it is committed to delivering comprehensive, industrial-grade solutions for image data engineers. This tool excels in swiftly and automatically executing annotations across diverse and intricate tasks.

chat

deco.chat is an open-source foundation for building AI-native software, providing developers, engineers, and AI enthusiasts with robust tools to rapidly prototype, develop, and deploy AI-powered applications. It empowers Vibecoders to prototype ideas and Agentic engineers to deploy scalable, secure, and sustainable production systems. The core capabilities include an open-source runtime for composing tools and workflows, MCP Mesh for secure integration of models and APIs, a unified TypeScript stack for backend logic and custom frontends, global modular infrastructure built on Cloudflare, and a visual workspace for building agents and orchestrating everything in code.

nexa-sdk

Nexa SDK is a comprehensive toolkit supporting ONNX and GGML models for text generation, image generation, vision-language models (VLM), and text-to-speech (TTS) capabilities. It offers an OpenAI-compatible API server with JSON schema mode and streaming support, along with a user-friendly Streamlit UI. Users can run Nexa SDK on any device with Python environment, with GPU acceleration supported. The toolkit provides model support, conversion engine, inference engine for various tasks, and differentiating features from other tools.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. R2R is to LangChain/LlamaIndex what NextJS is to React. A JavaScript client for R2R deployments is also available.

Key features:

- 🚀 Deploy: Instantly launch production-ready RAG pipelines with streaming capabilities.

- 🧩 Customize: Tailor your pipeline with intuitive configuration files.

- 🔌 Extend: Enhance your pipeline with custom code integrations.

- ⚖️ Autoscale: Scale your pipeline effortlessly in the cloud using SciPhi.

- 🤖 OSS: Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

auto-subs

Auto-subs is a tool designed to automatically transcribe editing timelines using OpenAI Whisper and Stable-TS for extreme accuracy. It generates subtitles in a custom style, is completely free, and runs locally within Davinci Resolve. It works on Mac, Linux, and Windows, supporting both Free and Studio versions of Resolve. Users can jump to positions on the timeline using the Subtitle Navigator and translate from any language to English. The tool provides a user-friendly interface for creating and customizing subtitles for video content.

easy-dataset

Easy Dataset is a specialized application designed to streamline the creation of fine-tuning datasets for Large Language Models (LLMs). It offers an intuitive interface for uploading domain-specific files, intelligently splitting content, generating questions, and producing high-quality training data for model fine-tuning. With Easy Dataset, users can transform domain knowledge into structured datasets compatible with all OpenAI-format compatible LLM APIs, making the fine-tuning process accessible and efficient.

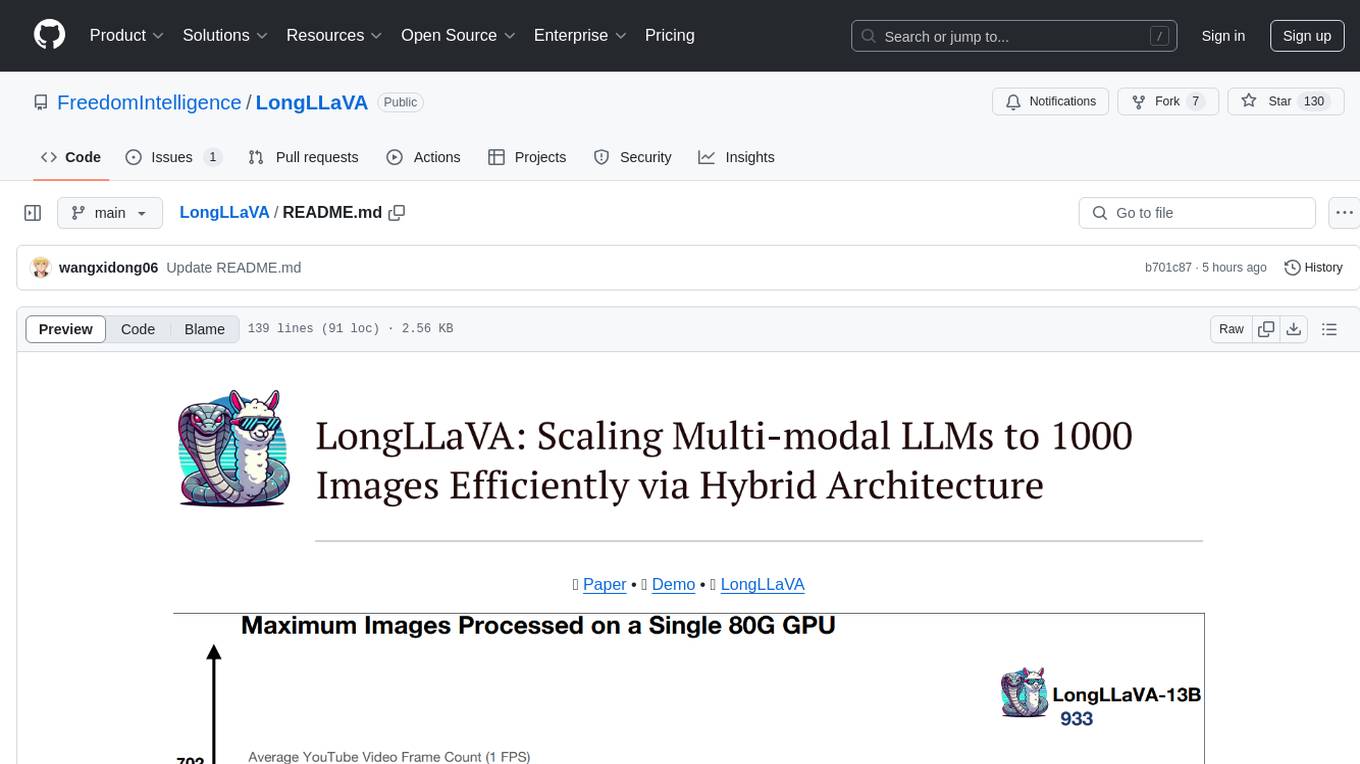

LongLLaVA

LongLLaVA is a tool for scaling multi-modal LLMs to 1000 images efficiently via hybrid architecture. It includes stages for single-image alignment, instruction-tuning, and multi-image instruction-tuning, with evaluation through a command line interface and model inference. The tool aims to achieve GPT-4V level capabilities and beyond, providing reproducibility of results and benchmarks for efficiency and performance.

pebble

Pebbling is an open-source protocol for agent-to-agent communication, enabling AI agents to collaborate securely using Decentralised Identifiers (DIDs) and mutual TLS (mTLS). It provides a lightweight communication protocol built on JSON-RPC 2.0, ensuring reliable and secure conversations between agents. Pebbling allows agents to exchange messages safely, connect seamlessly regardless of programming language, and communicate quickly and efficiently. It is designed to pave the way for the next generation of collaborative AI systems, promoting secure and effortless communication between agents across different environments.

For similar tasks

manga-image-translator

Translate texts in manga/images. Some manga/images will never be translated, therefore this project is born.

facefusion

FaceFusion is a next-generation face swapper and enhancer that allows users to seamlessly swap faces in images and videos, as well as enhance facial features for a more polished and refined look. With its advanced deep learning models, FaceFusion provides users with a wide range of options for customizing their face swaps and enhancements, making it an ideal tool for content creators, artists, and anyone looking to explore their creativity with facial manipulation.

aidea

AIdea is an app that integrates mainstream large language models and drawing models, developed using Flutter. The code is completely open-source and supports various functions such as GPT-3.5, GPT-4 from OpenAI, Claude instant, Claude 2.1 from Anthropic, Gemini Pro and visual language models from Google, as well as various Chinese and open-source models. It also supports features like text-to-image, super-resolution, coloring black and white images, artistic fonts, artistic QR codes, and more.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

Rewind-AI-Main

Rewind AI is a free and open-source AI-powered video editing tool that allows users to easily create and edit videos. It features a user-friendly interface, a wide range of editing tools, and support for a variety of video formats. Rewind AI is perfect for beginners and experienced video editors alike.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.