awesome-pi-agent

Awesome list of add-ons, hooks, tools, skills, and resources for the pi coding agent (pi-mono).

Stars: 114

awesome-pi-agent is a curated list of community resources for the pi coding agent (pi-mono). It collects extensions, skills, tools, prompt templates, themes, providers, and links into the official documentation, each as a one-line entry with a short description. It also includes contribution guidelines and a Discord scraper for discovering new resources.

README:

Concise, curated resources for extending and integrating the pi coding agent

(Yes, it was tempting to call it shitty-list).

- pi (pi-mono) — Official coding agent repository

Extensions are TypeScript/JavaScript modules that enhance pi-agent functionality by handling events, registering tools, or adding UI components. They were previously called "hooks" or "custom tools".
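To make the "handling events" idea concrete, here is a minimal sketch of a toy extension host. Everything in it (`ToyHost`, `onToolCall`, `dispatch`, `guardExtension`, the `Verdict` shape, the two patterns) is a hypothetical stand-in for illustration and is not pi's actual extension API; see the official extensions guide for the real interfaces.

```typescript
// Hypothetical, simplified model of an extension host; NOT pi's actual API.
type ToolCallEvent = { tool: string; input: string };
type Verdict = { block: true; reason: string } | { block: false };
type ToolCallHandler = (event: ToolCallEvent) => Verdict;

class ToyHost {
  private handlers: ToolCallHandler[] = [];

  // An extension subscribes to tool-call events.
  onToolCall(handler: ToolCallHandler): void {
    this.handlers.push(handler);
  }

  // The host asks every handler in order; the first blocking verdict wins.
  dispatch(event: ToolCallEvent): Verdict {
    for (const handler of this.handlers) {
      const verdict = handler(event);
      if (verdict.block) return verdict;
    }
    return { block: false };
  }
}

// In this toy model an "extension" is a function that receives the host
// and registers its handlers.
function guardExtension(host: ToyHost): void {
  // Illustrative patterns only; a real guard would need a far more careful list.
  const dangerous = [/\brm\s+-rf\b/, /\bgit\s+push\s+--force\b/];
  host.onToolCall((event): Verdict => {
    if (event.tool !== "bash") return { block: false };
    const hit = dangerous.find((pattern) => pattern.test(event.input));
    return hit
      ? { block: true, reason: `matched ${hit.source}` }
      : { block: false };
  });
}

const host = new ToyHost();
guardExtension(host);
const blocked = host.dispatch({ tool: "bash", input: "rm -rf build" });
const allowed = host.dispatch({ tool: "bash", input: "ls -la" });
```

Here `blocked.block` is `true` and `allowed.block` is `false`. Keeping the extension as a plain function over the host keeps each extension independent of the others.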

- agent-stuff (mitsupi) — Skills and extensions for pi (answer, review, loop, files, todos, codex-tuning, whimsical)

- cloud-research-agent — AI agent in cloud sandbox for researching GitHub repositories and libraries

- michalvavra/agents — User extensions and configuration examples

- filter-output — Redact sensitive data (API keys, tokens, passwords) from tool results before LLM sees them

- security — Block dangerous bash commands and protect sensitive paths from writes

- pi-extensions — Collection of debugging and utility extensions

- pi-agent-scip — SCIP code intelligence tools for pi agent

- pi-extensions — Collection of extensions for pi coding agent

- toolwatch — Tool call auditing and approval system with SQLite logging

- pi-hooks — Minimal reference extensions

- checkpoint — Git-based checkpoint system for restoring code state when branching conversations

- lsp — Language Server Protocol integration with auto-diagnostics and on-demand queries

- permission — Layered permission control with four levels (off, low, medium, high)

- pi-canvas — Interactive TUI canvases (calendar, document, flights) rendered inline using native pi TUI

- pi-cost-dashboard — Interactive web dashboard to monitor and analyze API costs

- pi-extensions — Collection of delightful extensions for pi agent

- agent-guidance — Agent behavior guidance and instructions

- arcade — Arcade-style interactions and games

- ralph-wiggum — Long-running agent loops for iterative development

- tab-status — Tab status indicators and management

- usage-extension — Usage statistics dashboard across sessions

- pi-interview-tool — Web-based form tool with keyboard navigation, themes, and image attachments

- pi-notification-extension — Telegram/bell alerts when the agent finishes and waits for input

- pi-notify-pp — Rich notification extension with tool stats, error tracking, and OSC 777 support

- pi-powerline-footer — Powerline-style status bar with git integration, context awareness, and token intelligence

- pi-prompt-template-model — Prompt templates with model/skill/thinking frontmatter and auto-restore

- pi-rewind-hook — Rewind file changes with git-based checkpoints and conversation branching

- pi-ssh-remote — Extension that redirects all file operations and commands to a remote host via SSH

- pi-extensions — Collection of extensions for pi coding agent

- files — Browse and open files mentioned in conversation

- skill-task — Route /skill: commands to the task tool when skills opt in

- task-tool — Run isolated pi subprocesses for single, chain, or parallel work

- rhubarb-pi — Collection of small extensions for pi agent

- background-notify — Notifications when tasks complete (audio beep, terminal focus)

- session-emoji — AI-powered emoji in footer representing conversation context

- session-color — Colored band in footer to visually distinguish sessions

- safe-git — Require approval before dangerous git operations

- ben-vargas/pi-packages — Packages for pi (extensions, skills, prompt templates, themes)

- ferologics/pi-notify — Native desktop notifications via OSC 777

- ogulcancelik/pi-ghostty-theme-sync — Sync Ghostty terminal theme with pi session

- ogulcancelik/pi-sketch — Quick sketch pad - draw in browser, send to models

- pi-dcp — Dynamic context pruning extension for intelligent conversation optimization

- pi-screenshots-picker — Screenshot picker extension for better screenshot selections

- pi-super-curl — Extension to empower curl requests with coding agent capabilities

- shitty-extensions — Community extensions collection

- cost-tracker — Session spending analysis from pi logs

- handoff — Transfer context to new focused sessions

- memory-mode — Save instructions to AGENTS.md with AI-assisted integration

- oracle — Get second opinion from alternative AI models without switching contexts

- plan-mode — Read-only exploration mode for safe code exploration

- status-widget — Persistent provider status indicator in footer

- ultrathink — Rainbow animated effect with Knight Rider shimmer

- usage-bar — AI provider usage statistics with status polling
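Some entries above, such as filter-output, redact secrets from tool results before the model sees them. The following is a minimal sketch of that general technique, not the code of any listed extension; the `redact` function, the pattern list, and the `[REDACTED]` marker are illustrative assumptions.

```typescript
// Illustrative patterns only; real secret formats vary and these will miss many.
const TOKEN_PATTERNS: RegExp[] = [
  /\bsk-[A-Za-z0-9]{20,}\b/g,        // keys shaped like "sk-..."
  /\bgh[pousr]_[A-Za-z0-9]{36,}\b/g, // GitHub-style tokens
];

// Matches "password=...", "token: ...", "api_key = ..." (case-insensitive).
const ASSIGNMENT_PATTERN = /\b(password|token|api_key)(\s*[=:]\s*)\S+/gi;

function redact(text: string): string {
  let out = text;
  // Replace whole tokens that match a known shape.
  for (const pattern of TOKEN_PATTERNS) {
    out = out.replace(pattern, "[REDACTED]");
  }
  // Keep the key name and separator, hide only the value.
  out = out.replace(ASSIGNMENT_PATTERN, "$1$2[REDACTED]");
  return out;
}

const sample = "export TOKEN=abc123 and sk-ABCDEFGHIJKLMNOPQRSTUVWX";
const redacted = redact(sample);
```

Pattern-based redaction is best-effort: it reduces accidental leakage but does not replace keeping secrets out of the agent's working directory in the first place.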

Skills are reusable workflows described in natural language (SKILL.md format) that guide the agent through complex tasks.

- agent-stuff (mitsupi) — Skills and extensions for pi (commit, changelog, GitHub, web browser, tmux, Sentry, and more)

- pi-amplike — Pi skills for web search and webpage extraction (Jina APIs)

- pi-skills — Community skills collection

- brave-search — Web search and content extraction via Brave Search API

- browser-tools — Interactive browser automation via Chrome DevTools Protocol

- gccli — Google Calendar CLI for events and availability

- gdcli — Google Drive CLI for file management and sharing

- gmcli — Gmail CLI for email, drafts, and labels

- transcribe — Speech-to-text transcription via Groq Whisper API

- vscode — VS Code integration for diffs and file comparison

- youtube-transcript — Fetch YouTube video transcripts

- CodexBar — macOS menu bar app for tracking AI coding tool usage (session + weekly limits, reset timers) — supports Codex, Claude, Cursor, Gemini, and more

- claude-code-ui — Real-time dashboard for monitoring Claude Code sessions with AI-powered summaries, PR tracking, and multi-repo support

- nono — Secure, kernel-enforced capability sandbox for AI agents (Landlock on Linux, Seatbelt on macOS) — blocks dangerous commands and enforces OS-level security primitives

- codemap — Compact, token-aware codebase maps for LLMs and coding agents (TypeScript/JavaScript symbol extraction, markdown structure)

- gondolin — Linux micro-VM sandbox with programmable network/filesystem and Pi integration

- gob — Process manager for AI agents with background job support and TUI interface

- PiSwarm — Parallel GitHub issue and PR processing using the pi agent and Git worktrees

- task-factory — Queue-first work orchestrator for Pi with planning, execution skills, and web UI

- pi-ds — TUI design system components for pi-mono extensions with TypeScript support

- pi-mobile — Android client for Pi coding agent with session management over Tailscale

- pi-stuffed — Collection of pi extensions including Reddit integration and more

- pi-sub — Monorepo for usage tracking extensions with shared core (sub-core, sub-bar UI widget)

Prompt templates (formerly "slash commands") let you create reusable prompt shortcuts with parameters.

No community prompt templates yet — contributions welcome!
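As a rough illustration of what "reusable prompt shortcuts with parameters" means, the sketch below fills named placeholders in a template string. The `{{name}}` placeholder syntax and the `renderTemplate` helper are assumptions made for this example and are not pi's actual template format; consult the official docs for the real syntax.

```typescript
// Hypothetical placeholder syntax ({{name}}); not pi's actual template format.
function renderTemplate(
  template: string,
  params: Record<string, string>
): string {
  return template.replace(/\{\{(\w+)\}\}/g, (match: string, name: string) => {
    // Leave unknown placeholders untouched so missing parameters stay visible.
    return Object.prototype.hasOwnProperty.call(params, name)
      ? params[name]
      : match;
  });
}

const prompt = renderTemplate("Review {{file}} for {{concern}} issues.", {
  file: "src/app.ts",
  concern: "security",
});
```

With these inputs `prompt` is `Review src/app.ts for security issues.`; a placeholder with no matching parameter is left as written.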

- pi-rose-pine — Rose Pine themes for pi coding agent (main, moon, dawn variants)

- pi-acp — ACP adapter for pi agent

- pi-config — Project config example

- pi-synthetic — Pi provider for Synthetic (open-source models via Anthropic-compatible API)

- crossjam/mpr — Context and writeups referencing the agent

- anthropics/claude-code — Official Anthropic agentic coding tool that lives in your terminal with natural language commands and git workflow support

- claude-plugins-official — Official Anthropic directory of Claude Code plugins with MCP servers, skills, and commands

- synthetic-lab/octofriend — Open-source coding assistant agent with friendly interactions and codebase understanding

Deep links into the official pi-mono repository:

- Extensions guide — Unified extensions API (hooks, tools, events, UI)

- Package README — High-level package README and quick start

- Docs directory — Full documentation (CLI, SDK, RPC, sessions, compaction, themes)

- Examples directory — Working examples for extensions, SDK usage, and more

- Theme guide — Theme schema, color tokens, and examples

- Migration guide — Upgrading from hooks/tools to extensions

- Web UI utilities — Provider dialogs and model discovery utilities

- Model registry — Core model/provider registry implementation

- Pods models.json — Example models.json for pods and local runners

When adding a new resource, ensure the following:

- [ ] Tool is actively maintained (commits within last year)

- [ ] Has documentation / README

- [ ] Description is concise and explains value

- [ ] Link works and goes to correct resource

- [ ] Not a duplicate

- [ ] Alphabetically ordered within section

Please add only one-line entries (short description + link). Maintainers may re-order or trim entries during review.

Fork, create a topic branch, add your entry to the appropriate section in this README (one-line entry, alphabetical), and open a Pull Request using the PR template.
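Before opening a Pull Request, the one-line, alphabetical, and no-duplicates rules can be checked mechanically. The sketch below assumes the plain `- name — description` shape used by the entries in this list (the real README may wrap names in links); `parseEntry` and `checkSection` are hypothetical helpers, not part of this repository.

```typescript
interface Entry {
  name: string;
  description: string;
}

// Parse "- name — description"; returns null for anything else.
function parseEntry(line: string): Entry | null {
  const match = /^- (.+?) — (.+)$/.exec(line.trim());
  return match ? { name: match[1], description: match[2] } : null;
}

// Report malformed, duplicate, and out-of-order entries in one section.
function checkSection(lines: string[]): string[] {
  const problems: string[] = [];
  const seen = new Set<string>();
  let previous: string | null = null;

  for (const line of lines) {
    const entry = parseEntry(line);
    if (entry === null) {
      problems.push(`not a one-line entry: ${line}`);
      continue;
    }
    const key = entry.name.toLowerCase();
    if (seen.has(key)) problems.push(`duplicate: ${entry.name}`);
    // Plain code-unit comparison on lowercased names keeps the check deterministic.
    if (previous !== null && key < previous) {
      problems.push(`out of order: ${entry.name}`);
    }
    seen.add(key);
    previous = key;
  }
  return problems;
}

const problems = checkSection([
  "- checkpoint — Git-based checkpoints",
  "- lsp — Language Server Protocol integration",
  "- arcade — Arcade-style games",
  "- lsp — Listed twice",
]);
```

For this input `problems` contains `out of order: arcade` and `duplicate: lsp`. An empty result means the section passes these three checks; link validity and maintenance status still need a manual look.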

This repository includes automated tools for discovering new pi-agent resources shared in Discord servers. See discord_scraping/ for:

- Puppeteer-based scraper with forum post support

- Incremental message tracker with state persistence

- GitHub link extraction from channels and forums

- Automatic filtering for pi-agent content

- Integration with awesome list checking

Run ./discord_scraping/run.sh to find new resources to add to this list.
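The link-extraction and filtering steps listed above can be sketched as follows. This is an illustration of the general idea only, not the code in discord_scraping/; `extractPiRepos`, the URL pattern, the "pi" name heuristic, and the example.com-style repository names are all assumptions.

```typescript
// Pull owner/repo pairs out of message text and keep the ones that look pi-related.
function extractPiRepos(messages: string[]): string[] {
  const urlPattern = /https?:\/\/github\.com\/([\w.-]+)\/([\w.-]+)/g;
  // Heuristic: "pi" as its own segment, e.g. "pi-notify" or "owner/pi".
  const looksLikePi = /(^|[-_./])pi([-_./]|$)/;
  const repos = new Set<string>();

  for (const message of messages) {
    let match: RegExpExecArray | null;
    while ((match = urlPattern.exec(message)) !== null) {
      const repoName = match[2]
        .replace(/\.+$/, "")    // drop sentence punctuation after the URL
        .replace(/\.git$/, ""); // drop a clone-URL suffix
      const repo = `${match[1]}/${repoName}`.toLowerCase();
      if (looksLikePi.test(repo)) repos.add(repo);
    }
  }
  // A Set removes duplicates; sorting makes the output stable between runs.
  return Array.from(repos).sort();
}

// Hypothetical messages and repository names, for illustration only.
const found = extractPiRepos([
  "check https://github.com/Example/pi-notify.git and https://github.com/example/unrelated",
  "again: https://github.com/example/pi-notify.",
]);
```

Here `found` contains the single entry `example/pi-notify`. Candidates produced this way would still need to be compared against the existing list and reviewed by hand before being added.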

Link-checker workflow: .github/workflows/check-links.yml (runs on push and PRs)

MIT — see LICENSE

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for awesome-pi-agent

Similar Open Source Tools

awesome-pi-agent

Awesome Pi Agent is a versatile and powerful tool for building intelligent agents on Raspberry Pi. It provides a framework for developing AI-powered applications that can interact with the physical world through sensors and actuators. With a focus on simplicity and extensibility, this tool enables users to create a wide range of smart devices, from home automation systems to robotics projects. The agent can be easily customized and integrated with various AI algorithms and libraries, making it suitable for both beginners and advanced users interested in exploring the intersection of AI and IoT technologies.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

simple-ai

Simple AI is a lightweight Python library for implementing basic artificial intelligence algorithms. It provides easy-to-use functions and classes for tasks such as machine learning, natural language processing, and computer vision. With Simple AI, users can quickly prototype and deploy AI solutions without the complexity of larger frameworks.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

sdk-python

Strands Agents is a lightweight and flexible SDK that takes a model-driven approach to building and running AI agents. It supports various model providers, offers advanced capabilities like multi-agent systems and streaming support, and comes with built-in MCP server support. Users can easily create tools using Python decorators, integrate MCP servers seamlessly, and leverage multiple model providers for different AI tasks. The SDK is designed to scale from simple conversational assistants to complex autonomous workflows, making it suitable for a wide range of AI development needs.

blurr

Panda is a proactive, on-device AI agent for Android that autonomously understands natural language commands and operates your phone's UI to achieve them. It acts as a personal operator, handling complex, multi-step tasks across different applications. With intelligent UI automation, high-quality voice, and personalized local memory, Panda simplifies interactions with technology. Built on Kotlin, Panda's architecture includes Eyes & Hands for physical device connection, The Brain for reasoning, and The Agent for execution. The project is a proof-of-concept aiming to become an indispensable assistant.

GEN-AI

GEN-AI is a versatile Python library for implementing various artificial intelligence algorithms and models. It provides a wide range of tools and functionalities to support machine learning, deep learning, natural language processing, computer vision, and reinforcement learning tasks. With GEN-AI, users can easily build, train, and deploy AI models for diverse applications such as image recognition, text classification, sentiment analysis, object detection, and game playing. The library is designed to be user-friendly, efficient, and scalable, making it suitable for both beginners and experienced AI practitioners.

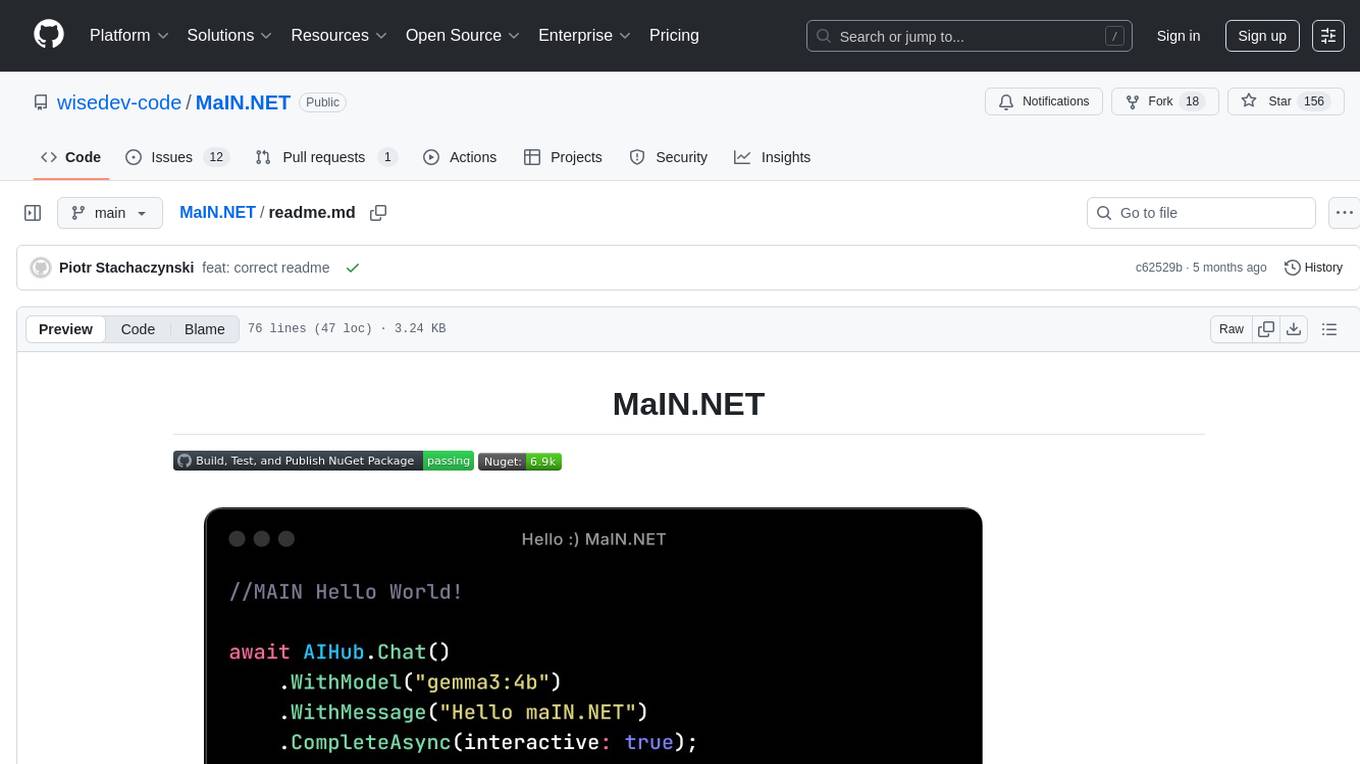

MaIN.NET

MaIN.NET (Modular Artificial Intelligence Network) is a versatile .NET package designed to streamline the integration of large language models (LLMs) into advanced AI workflows. It offers a flexible and robust foundation for developing chatbots, automating processes, and exploring innovative AI techniques. The package connects diverse AI methods into one unified ecosystem, empowering developers with a low-code philosophy to create powerful AI applications with ease.

AimRT

AimRT is a basic runtime framework for modern robotics, developed in modern C++ with lightweight and easy deployment. It integrates research and development for robot applications in various deployment scenarios, providing debugging tools and observability support. AimRT offers a plug-in development interface compatible with ROS2, HTTP, Grpc, and other ecosystems for progressive system upgrades.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

openvino_build_deploy

The OpenVINO Build and Deploy repository provides pre-built components and code samples to accelerate the development and deployment of production-grade AI applications across various industries. With the OpenVINO Toolkit from Intel, users can enhance the capabilities of both Intel and non-Intel hardware to meet specific needs. The repository includes AI reference kits, interactive demos, workshops, and step-by-step instructions for building AI applications. Additional resources such as Jupyter notebooks and a Medium blog are also available. The repository is maintained by the AI Evangelist team at Intel, who provide guidance on real-world use cases for the OpenVINO toolkit.

crewAI-tools

This repository provides a guide for setting up tools for crewAI agents to enhance functionality. It offers steps to equip agents with ready-to-use tools and create custom ones. Tools are expected to return strings for generating responses. Users can create tools by subclassing BaseTool or using the tool decorator. Contributions are welcome to enrich the toolset, and guidelines are provided for contributing. The development setup includes installing dependencies, activating virtual environment, setting up pre-commit hooks, running tests, static type checking, packaging, and local installation. The goal is to empower AI solutions through advanced tooling.

humanlayer

HumanLayer is a Python toolkit designed to enable AI agents to interact with humans in tool-based and asynchronous workflows. By incorporating humans-in-the-loop, agentic tools can access more powerful and meaningful tasks. The toolkit provides features like requiring human approval for function calls, human as a tool for contacting humans, omni-channel contact capabilities, granular routing, and support for various LLMs and orchestration frameworks. HumanLayer aims to ensure human oversight of high-stakes function calls, making AI agents more reliable and safe in executing impactful tasks.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

For similar tasks

reolink_aio

The 'reolink_aio' Python package is designed to integrate Reolink devices (NVR/cameras) into your application. It implements Reolink IP NVR and camera API, allowing users to subscribe to Reolink ONVIF SWN events for real-time event notifications via webhook. The package provides functionalities to obtain and cache NVR or camera settings, capabilities, and states, as well as enable features like infrared lights, spotlight, and siren. Users can also subscribe to events, renew timers, and disconnect from the host device.

ChatGPT-OpenAI-Smart-Speaker

ChatGPT Smart Speaker is a project that enables speech recognition and text-to-speech functionalities using OpenAI and Google Speech Recognition. It provides scripts for running on PC/Mac and Raspberry Pi, allowing users to interact with a smart speaker setup. The project includes detailed instructions for setting up the required hardware and software dependencies, along with customization options for the OpenAI model engine, language settings, and response randomness control. The Raspberry Pi setup involves utilizing the ReSpeaker hardware for voice feedback and light shows. The project aims to offer an advanced smart speaker experience with features like wake word detection and response generation using AI models.

aiohue

Aiohue is an asynchronous library designed to control Philips Hue lights. It requires Python 3.10+ and utilizes asyncio and aiohttp. The library supports both V1 and V2 APIs of the Hue Bridge, with V2 API offering event-based updates to eliminate the need for polling. The contribution guidelines emphasize matching object hierarchy and property/method names with the Philips Hue API.

aioshelly

Aioshelly is an asynchronous library designed to control Shelly devices. It is currently under development and requires Python version 3.11 or higher, along with dependencies like bluetooth-data-tools, aiohttp, and orjson. The library provides examples for interacting with Gen1 devices using CoAP protocol and Gen2/Gen3 devices using RPC and WebSocket protocols. Users can easily connect to Shelly devices, retrieve status information, and perform various actions through the provided APIs. The repository also includes example scripts for quick testing and usage guidelines for contributors to maintain consistency with the Shelly API.

awesome-pi-agent

Awesome Pi Agent is a versatile and powerful tool for building intelligent agents on Raspberry Pi. It provides a framework for developing AI-powered applications that can interact with the physical world through sensors and actuators. With a focus on simplicity and extensibility, this tool enables users to create a wide range of smart devices, from home automation systems to robotics projects. The agent can be easily customized and integrated with various AI algorithms and libraries, making it suitable for both beginners and advanced users interested in exploring the intersection of AI and IoT technologies.

AIOsense

AIOsense is an all-in-one sensor that is modular, affordable, and easy to solder. It is designed to be an alternative to commercially available sensors and focuses on upgradeability. AIOsense is cheaper and better than most commercial sensors and supports a variety of sensors and modules, including: - (RGB)-LED - Barometer - Breath VOC equivalent - Buzzer / Beeper - CO² equivalent - Humidity sensor - Light / Illumination sensor - PIR motion sensor - Temperature sensor - mmWave / Radar sensor Upcoming features include full voice assistant support, microphone, and speaker. All supported sensors & modules are listed in the documentation. AIOsense has a low power consumption, with an idle power consumption of 0.45W / 0.09A on a fully equipped board. Without a mmWave sensor, the idle power consumption is around 0.11W / 0.02A. To get started with AIOsense, you can refer to the documentation. If you have any questions, you can open an issue.

viseron

Viseron is a self-hosted, local-only NVR and AI computer vision software that provides features such as object detection, motion detection, and face recognition. It allows users to monitor their home, office, or any other place they want to keep an eye on. Getting started with Viseron is easy by spinning up a Docker container and editing the configuration file using the built-in web interface. The software's functionality is enabled by components, which can be explored using the Component Explorer. Contributors are welcome to help with implementing open feature requests, improving documentation, and answering questions in issues or discussions. Users can also sponsor Viseron or make a one-time donation.

ztachip

ztachip is a RISCV accelerator designed for vision and AI edge applications, offering up to 20-50x acceleration compared to non-accelerated RISCV implementations. It features an innovative tensor processor hardware to accelerate various vision tasks and TensorFlow AI models. ztachip introduces a new tensor programming paradigm for massive processing/data parallelism. The repository includes technical documentation, code structure, build procedures, and reference design examples for running vision/AI applications on FPGA devices. Users can build ztachip as a standalone executable or a micropython port, and run various AI/vision applications like image classification, object detection, edge detection, motion detection, and multi-tasking on supported hardware.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.