awesome-ai-security

A collection of awesome resources related to AI security

Stars: 548

Awesome AI Security is a curated list of frameworks, standards, learning resources, and open source tools related to AI security. It covers a wide range of topics including general reading material, technical material & labs, podcasts, governance frameworks and standards, offensive tools and frameworks, attacking Large Language Models (LLMs), AI for offensive cyber, defensive tools and frameworks, AI for defensive cyber, data security and governance, general AI/ML safety and robustness, MCP security, LLM guardrails, safety and sandboxing for agentic AI tools, detection & scanners, OpenClaw security, privacy and confidentiality, agentic AI skills, models for cybersecurity, and more.

README:

A curated list of awesome AI security related frameworks, standards, learning resources and open source tools.

If you want to contribute, create a PR or contact me @ottosulin.

- GenAI Security podcast

- OWASP ML TOP 10

- OWASP LLM TOP 10

- OWASP AI Security and Privacy Guide

- NIST AIRC - NIST Trustworthy & Responsible AI Resource Center

- The MLSecOps Top 10 by Institute for Ethical AI & Machine Learning

- OWASP Multi-Agentic System Threat Modeling

- OWASP: CheatSheet – A Practical Guide for Securely Using Third-Party MCP Servers 1.0

- Damn Vulnerable MCP Server - A deliberately vulnerable implementation of the Model Context Protocol (MCP) for educational purposes.

- OWASP WrongSecrets LLM exercise

- vulnerable-mcp-servers-lab - A collection of deliberately vulnerable servers for learning how to pentest MCP servers.

- FinBot Agentic AI Capture The Flag (CTF) Application - FinBot is an Agentic Security Capture The Flag (CTF) interactive platform that simulates real-world vulnerabilities in agentic AI systems using a simulated Financial Services-focused application.

- NIST AI Risk Management Framework

- ISO/IEC 42001 Artificial Intelligence Management System

- ISO/IEC 23894:2023 Information technology — Artificial intelligence — Guidance on risk management

- Google Secure AI Framework

- ENISA Multilayer Framework for Good Cybersecurity Practices for AI

- OWASP Artificial Intelligence Maturity Assessment

- CSA AI Model Risk Framework

- NIST AI 100-2e2023

- AVIDML

- MITRE ATLAS

- ISO/IEC 22989:2022 Information technology — Artificial intelligence — Artificial intelligence concepts and terminology

- MIT AI Risk Repository

- AI Incident Database

- NIST AI Glossary

- The Arcanum Prompt Injection Taxonomy

- CSA LLM Threats Taxonomy

- Malware Env for OpenAI Gym - makes it possible to write agents that learn to manipulate PE files (e.g., malware) to achieve some objective (e.g., bypass AV) based on a reward provided by taking specific manipulation actions

- Deep-pwning - a lightweight framework for experimenting with machine learning models with the goal of evaluating their robustness against a motivated adversary

- Counterfit - generic automation layer for assessing the security of machine learning systems

- DeepFool - A simple and accurate method to fool deep neural networks

- Snaike-MLFlow - MLflow red team toolsuite

- HackingBuddyGPT - An automatic pentester (+ corresponding [benchmark dataset](https://github.com/ipa-lab/hacking-benchmark))

- Charcuterie - code execution techniques for ML or ML adjacent libraries

- OffsecML Playbook - A collection of offensive and adversarial TTP's with proofs of concept

- BadDiffusion - Official repo to reproduce the paper "How to Backdoor Diffusion Models?" published at CVPR 2023

- Exploring the Space of Adversarial Images

- [Adversarial Machine Learning Library (Ad-lib)](https://github.com/vu-aml/adlib) - Game-theoretic adversarial machine learning library providing a set of learner and adversary modules

- Adversarial Robustness Toolkit - ART focuses on the threats of Evasion (change the model behavior with input modifications), Poisoning (control a model with training data modifications), Extraction (steal a model through queries) and Inference (attack the privacy of the training data)

- cleverhans - An adversarial example library for constructing attacks, building defenses, and benchmarking both

- foolbox - A Python toolbox to create adversarial examples that fool neural networks in PyTorch, TensorFlow, and JAX

- TextAttack - TextAttack 🐙 is a Python framework for adversarial attacks, data augmentation, and model training in NLP https://textattack.readthedocs.io/en/master/

- garak - security probing tool for LLMs

- agentic_security - Agentic LLM Vulnerability Scanner / AI red teaming kit

- Agentic Radar - Open-source CLI security scanner for agentic workflows.

- llamator - Framework for testing vulnerabilities of large language models (LLM).

- whistleblower - Whistleblower is an offensive security tool for testing against system prompt leakage and capability discovery of an AI application exposed through an API

- LLMFuzzer - 🧠 LLMFuzzer - Fuzzing Framework for Large Language Models 🧠 LLMFuzzer is the first open-source fuzzing framework specifically designed for Large Language Models (LLMs), especially for their integrations in applications via LLM APIs. 🚀💥

- vigil-llm - ⚡ Vigil ⚡ Detect prompt injections, jailbreaks, and other potentially risky Large Language Model (LLM) inputs

- FuzzyAI - A powerful tool for automated LLM fuzzing. It is designed to help developers and security researchers identify and mitigate potential jailbreaks in their LLM APIs.

- EasyJailbreak - An easy-to-use Python framework to generate adversarial jailbreak prompts.

- promptmap - a prompt injection scanner for custom LLM applications

- PyRIT - The Python Risk Identification Tool for generative AI (PyRIT) is an open source framework built to empower security professionals and engineers to proactively identify risks in generative AI systems.

- PurpleLlama - Set of tools to assess and improve LLM security.

- Giskard-AI - 🐢 Open-Source Evaluation & Testing for AI & LLM systems

- promptfoo - Test your prompts, agents, and RAGs. Red teaming, pentesting, and vulnerability scanning for LLMs. Compare performance of GPT, Claude, Gemini, Llama, and more. Simple declarative configs with command line and CI/CD integration.

- HouYi - The automated prompt injection framework for LLM-integrated applications.

- llm-attacks - Universal and Transferable Attacks on Aligned Language Models

- Dropbox llm-security - Dropbox LLM Security research code and results

- llm-security - New ways of breaking app-integrated LLMs

- OpenPromptInjection - This repository provides a benchmark for prompt Injection attacks and defenses

- Plexiglass - A toolkit for detecting and protecting against vulnerabilities in Large Language Models (LLMs).

- ps-fuzz - Make your GenAI Apps Safe & Secure 🚀 Test & harden your system prompt

- EasyEdit - Modify an LLM's ground truths

- spikee - Simple Prompt Injection Kit for Evaluation and Exploitation

- Prompt Hacking Resources - A list of curated resources for people interested in AI Red Teaming, Jailbreaking, and Prompt Injection

- mcp-injection-experiments - Code snippets to reproduce MCP tool poisoning attacks.

- gptfuzz - Official repo for GPTFUZZER : Red Teaming Large Language Models with Auto-Generated Jailbreak Prompts

- AgentDojo - A Dynamic Environment to Evaluate Attacks and Defenses for LLM Agents.

- jailbreakbench - JailbreakBench: An Open Robustness Benchmark for Jailbreaking Language Models [NeurIPS 2024 Datasets and Benchmarks Track]

- giskard - 🐢 Open-Source Evaluation & Testing library for LLM Agents

- TrustGate - Generative Application Firewall (GAF) that detects, prevents, and blocks attacks against GenAI applications

- blackice - BlackIce is an open-source containerized toolkit designed for red teaming AI models, including Large Language Models (LLMs) and classical machine learning (ML) models. Inspired by the convenience and standardization of Kali Linux in traditional penetration testing, BlackIce simplifies AI security assessments by providing a reproducible container image preconfigured with specialized evaluation tools.

- augustus - LLM security testing framework for detecting prompt injection, jailbreaks, and adversarial attacks. 190+ probes, 28 providers, single Go binary. Production-ready with concurrent scanning, rate limiting, and retry logic.

- guardian-cli - AI-Powered Security Testing & Vulnerability Scanner. Guardian CLI is an intelligent security testing tool that leverages AI to automate penetration testing, vulnerability assessment, and security auditing.

- AI-Red-Teaming-Playground-Labs - AI Red Teaming playground labs to run AI Red Teaming trainings including infrastructure.

- HackGPT - A tool using ChatGPT for hacking

- mcp-for-security - A collection of Model Context Protocol servers for popular security tools like SQLMap, FFUF, NMAP, Masscan and more. Integrate security testing and penetration testing into AI workflows.

- cai - Cybersecurity AI (CAI), an open Bug Bounty-ready Artificial Intelligence (paper)

- AIRTBench - Code Repository for: AIRTBench: Measuring Autonomous AI Red Teaming Capabilities in Language Models

- PentestGPT - A GPT-empowered penetration testing tool

- HackingBuddyGPT - Helping Ethical Hackers use LLMs in 50 Lines of Code or less.

- HexStrikeAI - HexStrike AI MCP Agents is an advanced MCP server that lets AI agents (Claude, GPT, Copilot, etc.) autonomously run 150+ cybersecurity tools for automated pentesting, vulnerability discovery, bug bounty automation, and security research. Seamlessly bridge LLMs with real-world offensive security capabilities.

- Burp MCP Server - MCP Server for Burp

- burpgpt - A Burp Suite extension that integrates OpenAI's GPT to perform an additional passive scan for discovering highly bespoke vulnerabilities and enables running traffic-based analysis of any type.

- AI-Infra-Guard - A comprehensive, intelligent, and easy-to-use AI Red Teaming platform developed by Tencent Zhuque Lab. It integrates modules for Infra Scan, MCP Scan, and Jailbreak Evaluation, providing a one-click web UI, REST APIs, and Docker-based deployment for comprehensive AI security evaluation.

- strix - Strix are autonomous AI agents that act just like real hackers - they run your code dynamically, find vulnerabilities, and validate them through actual proof-of-concepts

- mcp-security-hub - A growing collection of MCP servers bringing offensive security tools to AI assistants. Nmap, Ghidra, Nuclei, SQLMap, Hashcat and more.

- AutoPentestX - AutoPentestX – Linux Automated Pentesting & Vulnerability Reporting Tool

- CyberStrikeAI - AI-native security testing platform built in Go. Integrates 100+ security tools with an intelligent orchestration engine, role-based testing with predefined security roles, skills system, and comprehensive lifecycle management. Uses MCP protocol and AI agents for end-to-end automation from conversational commands to vulnerability discovery.

- redamon - AI-powered agentic red team framework that automates offensive security operations from reconnaissance to exploitation to post-exploitation with zero human intervention.

- OWASP LLM and Generative AI Security Center of Excellence Guide

- OWASP Agentic AI – Threats and Mitigations

- OWASP AI Security Solutions Landscape

- OWASP GenAI Incident Response Guide

- OWASP LLM and GenAI Data Security Best Practices

- OWASP Securing Agentic AI Applications

- CSA Maestro AI Threat Modeling Framework

- Claude Code Security Review - An AI-powered security review GitHub Action using Claude to analyze code changes for security vulnerabilities.

- GhidraGPT - Integrates GPT models into Ghidra for automated code analysis, variable renaming, vulnerability detection, and explanation generation.

- datasig - Dataset fingerprinting for AIBOM

- OWASP AIBOM - AI Bill of Materials

- secml-torch - SecML-Torch: A Library for Robustness Evaluation of Deep Learning Models

- awesome-ai-safety

- MCP-Security-Checklist - A comprehensive security checklist for MCP-based AI tools. Built by SlowMist to safeguard LLM plugin ecosystems.

- Awesome-MCP-Security - Everything you need to know about Model Context Protocol (MCP) security.

- secure-mcp-gateway - This Secure MCP Gateway is built with authentication, automatic tool discovery, caching, and guardrail enforcement.

- mcp-context-protector - context-protector is a security wrapper for MCP servers that addresses risks associated with running untrusted MCP servers, including line jumping, unexpected server configuration changes, and other prompt injection attacks

- mcp-guardian - MCP Guardian manages your LLM assistant's access to MCP servers, handing you realtime control of your LLM's activity.

- MCP Audit VSCode Extension - Centrally audit and log all GitHub Copilot MCP tool calls in VSCode with ease.

- Guardrail.ai - Guardrails is a Python package that lets a user add structure, type and quality guarantees to the outputs of large language models (LLMs)

- CodeGate - An open-source, privacy-focused project that acts as a layer of security within a developer's code generation AI workflow

- LlamaFirewall - LlamaFirewall is a framework designed to detect and mitigate AI centric security risks, supporting multiple layers of inputs and outputs, such as typical LLM chat and more advanced multi-step agentic operations.

- ZenGuard AI - The fastest Trust Layer for AI Agents

- llm-guard - LLM Guard by Protect AI is a comprehensive tool designed to fortify the security of Large Language Models (LLMs).

- vibraniumdome - Full-blown, end-to-end LLM WAF for agents, giving security teams governance, auditing, and policy-driven control over agents' usage of language models.

- LocalMod - Self-hosted content moderation API with prompt injection detection, toxicity filtering, PII detection, and NSFW classification. Runs 100% offline.

- NeMo-GuardRails - NeMo Guardrails is an open-source toolkit for easily adding programmable guardrails to LLM-based conversational systems.

- DynaGuard - A Dynamic Guardrail Model With User-Defined Policies

- AprielGuard - 8B parameter safety–security safeguard model

- Safe Zone - Safe Zone is an open-source PII detection and guardrails engine that prevents sensitive data from leaking to LLMs and third-party APIs.

- superagent - Superagent provides purpose-trained guardrails that make AI-agents secure and compliant.

- mcp-context-protector - mcp-context-protector is a security wrapper for MCP servers that addresses risks associated with running untrusted MCP servers

- vibekit - Run Claude Code, Gemini, Codex — or any coding agent — in a clean, isolated sandbox with sensitive data redaction and observability baked in.

- claude-code-safety-net - A Claude Code plugin that acts as a safety net, catching destructive git and filesystem commands before they execute

- leash - Leash wraps AI coding agents in containers and monitors their activity.

- skill-scanner - A security scanner for AI Agent Skills that detects prompt injection, data exfiltration, and malicious code patterns. Combines pattern-based detection (YAML + YARA), LLM-as-a-judge, and behavioral dataflow analysis for comprehensive threat detection.

- Project CodeGuard - CoSAI Open Source Project for securing AI-assisted development workflows. CodeGuard provides security controls and guardrails for AI coding assistants to prevent vulnerabilities from being introduced during AI-generated code development.

- modelscan - ModelScan is an open source project from Protect AI that scans models to determine if they contain unsafe code.

- rebuff - Prompt Injection Detector

- langkit - LangKit is an open-source text metrics toolkit for monitoring language models. The toolkit provides various security-related metrics that can be used to detect attacks

- MCP-Scan - A security scanning tool for MCP servers

- picklescan - Security scanner detecting Python Pickle files performing suspicious actions

- fickling - A Python pickling decompiler and static analyzer

- a2a-scanner - Scan A2A agents for potential threats and security issues

- medusa - AI-first security scanner with 74+ analyzers, 180+ AI agent security rules, intelligent false positive reduction. Supports all languages. CVE detection for React2Shell, mcp-remote RCE.

- julius - LLM service fingerprinting tool for security professionals. Detects 32+ AI services (Ollama, vLLM, LiteLLM, Hugging Face TGI, etc.) during penetration tests and attack surface discovery. Uses HTTP-based service fingerprinting to identify server infrastructure.

- openclaw-shield - Security plugin for OpenClaw agents - prevents secret leaks, PII exposure, and destructive command execution

- clawsec - Security scanner and hardening tool for OpenClaw deployments. Provides security assessments, configuration auditing, and vulnerability detection specifically for OpenClaw gateway and agent configurations.

- Python Differential Privacy Library

- Diffprivlib - The IBM Differential Privacy Library

- PLOT4ai - Privacy Library Of Threats 4 Artificial Intelligence. A threat modeling library to help you build responsible AI

- TenSEAL - A library for doing homomorphic encryption operations on tensors

- SyMPC - A Secure Multiparty Computation companion library for Syft

- PyVertical - Privacy Preserving Vertical Federated Learning

- Cloaked AI - Open source property-preserving encryption for vector embeddings

- dstack - Open-source confidential AI framework for secure ML/LLM deployment with hardware-enforced isolation and data privacy

- PrivacyRaven - privacy testing library for deep learning systems

- claude-secure-coding-rules - Open-source security rules that guide Claude Code to generate secure code by default.

- tm_skills - Agent skills to help with Continuous Threat Modeling

- Trail of Bits Skills Marketplace - Trail of Bits Claude Code skills for security research, vulnerability detection, and audit workflows

- Semgrep Skills - Official Semgrep skills for Claude Code and other AI coding assistants. Provides security scanning, code analysis, and vulnerability detection capabilities directly in your AI-assisted development workflow.

- VulnLLM-R-7B - Specialized reasoning LLM for vulnerability detection. Uses Chain-of-Thought reasoning to analyze data flow, control flow, and security context. Outperforms Claude-3.7-Sonnet and CodeQL on vulnerability detection benchmarks. Only 7B parameters making it efficient and fast.

- Foundation-Sec-8B-Reasoning - Llama-3.1-FoundationAI-SecurityLLM-8B-Reasoning (Foundation-Sec-8B-Reasoning) is an open-weight, 8-billion parameter instruction-tuned language model specialized for cybersecurity applications. It extends the Foundation-Sec-8B base model with instruction-following and reasoning capabilities. It leverages prior training to understand security concepts, terminology, and practices across multiple security domains

- AgentDoG - AgentDoG is a risk-aware evaluation and guarding framework for autonomous agents. It focuses on trajectory-level risk assessment, aiming to determine whether an agent’s execution trajectory contains safety risks under diverse application scenarios.

Alternative AI tools for awesome-ai-security

Similar Open Source Tools

AI-Gateway

The AI-Gateway repository explores the AI Gateway pattern through a series of experimental labs, focusing on Azure API Management for handling AI services APIs. The labs provide step-by-step instructions using Jupyter notebooks with Python scripts, Bicep files, and APIM policies. The goal is to accelerate experimentation of advanced use cases and pave the way for further innovation in the rapidly evolving field of AI. The repository also includes a Mock Server to mimic the behavior of the OpenAI API for testing and development purposes.

ai-platform-engineering

The AI Platform Engineering repository provides a collection of tools and resources for building and deploying AI models. It includes libraries for data preprocessing, model training, and model serving. The repository also contains example code and tutorials to help users get started with AI development. Whether you are a beginner or an experienced AI engineer, this repository offers valuable insights and best practices to streamline your AI projects.

FATE-LLM

FATE-LLM is a framework supporting federated learning for large and small language models. It promotes training efficiency of federated LLMs using Parameter-Efficient methods, protects the IP of LLMs using FedIPR, and ensures data privacy during training and inference through privacy-preserving mechanisms.

adk-ts

ADK-TS is a comprehensive TypeScript framework for building sophisticated AI agents with multi-LLM support, advanced tools, and flexible conversation flows. It is production-ready and enables developers to create intelligent, autonomous systems that can handle complex multi-step tasks. The framework provides features such as multi-provider LLM support, extensible tool system, advanced agent reasoning, real-time streaming, flexible authentication, persistent memory systems, multi-agent orchestration, built-in telemetry, and prebuilt MCP servers for easy deployment and management of agents.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

llm-on-ray

LLM-on-Ray is a comprehensive solution for building, customizing, and deploying Large Language Models (LLMs). It simplifies complex processes into manageable steps by leveraging the power of Ray for distributed computing. The tool supports pretraining, finetuning, and serving LLMs across various hardware setups, incorporating industry and Intel optimizations for performance. It offers modular workflows with intuitive configurations, robust fault tolerance, and scalability. Additionally, it provides an Interactive Web UI for enhanced usability, including a chatbot application for testing and refining models.

thecodersgig

TheCodersGig is an AI-powered open-source social network platform for developers, facilitating seamless connection and collaboration. It features an integrated utility marketplace for creating plugins easily, automating backend development with scalable code. The user-friendly interface supports API integration, data models, databases, authentication, and authorization. The platform's architecture includes frontend, backend, AI services, database, marketplace, security, and DevOps layers, enabling customization and diverse integrations. Key components encompass technologies like React.js, Node.js, Python-based AI frameworks, SQL/NoSQL databases, payment gateways, security protocols, and DevOps tools for automation and scalability.

magic

Magic is an open-source all-in-one AI productivity platform designed to help enterprises quickly build and deploy AI applications, aiming for a 100x increase in productivity. It consists of various AI products and infrastructure tools, such as Super Magic, Magic IM, Magic Flow, and more. Super Magic is a general-purpose AI Agent for complex task scenarios, while Magic Flow is a visual AI workflow orchestration system. Magic IM is an enterprise-grade AI Agent conversation system for internal knowledge management. Teamshare OS is a collaborative office platform integrating AI capabilities. The platform provides cloud services, enterprise solutions, and a self-hosted community edition for users to leverage its features.

refly

Refly.AI is an open-source AI-native creation engine that empowers users to transform ideas into production-ready content. It features a free-form canvas interface with multi-threaded conversations, knowledge base integration, contextual memory, intelligent search, WYSIWYG AI editor, and more. Users can leverage AI-powered capabilities, context memory, knowledge base integration, quotes, and AI document editing to enhance their content creation process. Refly offers both cloud and self-hosting options, making it suitable for individuals, enterprises, and organizations. The tool is designed to facilitate human-AI collaboration and streamline content creation workflows.

nextpy

Nextpy is a cutting-edge software development framework optimized for AI-based code generation. It provides guardrails for defining AI system boundaries, structured outputs for prompt engineering, a powerful prompt engine for efficient processing, better AI generations with precise output control, modularity for multiplatform and extensible usage, developer-first approach for transferable knowledge, and containerized & scalable deployment options. It offers 4-10x faster performance compared to Streamlit apps, with a focus on cooperation within the open-source community and integration of key components from various projects.

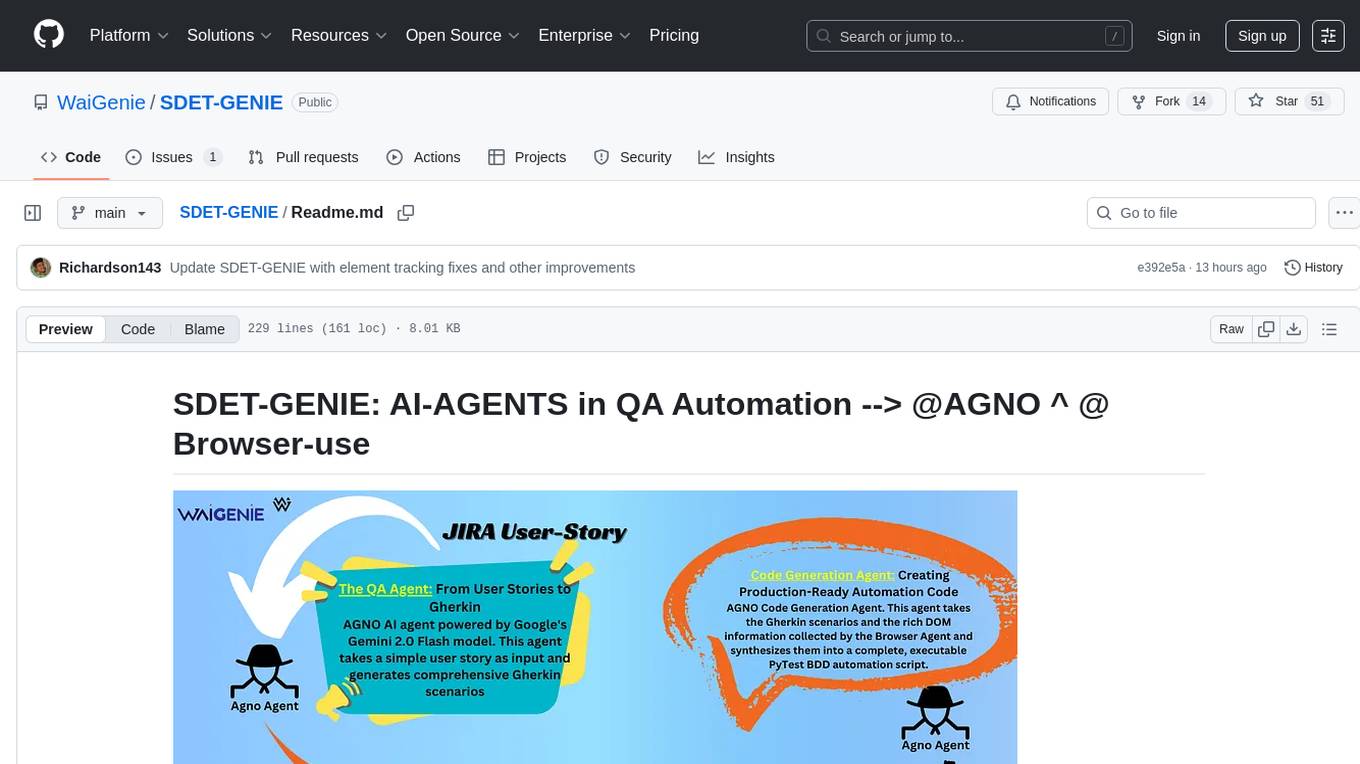

SDET-GENIE

SDET-GENIE is a cutting-edge, AI-powered Quality Assurance (QA) automation framework that revolutionizes the software testing process. Leveraging a suite of specialized AI agents, SDET-GENIE transforms rough user stories into comprehensive, executable test automation code through a seamless end-to-end process. The framework integrates five powerful AI agents working in sequence: User Story Enhancement Agent, Manual Test Case Agent, Gherkin Scenario Agent, Browser Agent, and Code Generation Agent. It supports multiple testing frameworks and provides advanced browser automation capabilities with AI features.

higress

Higress is an open-source cloud-native API gateway built on the core of Istio and Envoy, based on Alibaba's internal practice of Envoy Gateway. It is designed for AI-native API gateway, serving AI businesses such as Tongyi Qianwen APP, Bailian Big Model API, and Machine Learning PAI platform. Higress provides capabilities to interface with LLM model vendors, AI observability, multi-model load balancing/fallback, AI token flow control, and AI caching. It offers features for AI gateway, Kubernetes Ingress gateway, microservices gateway, and security protection gateway, with advantages in production-level scalability, stream processing, extensibility, and ease of use.

trustgraph

TrustGraph is a tool that deploys private GraphRAG pipelines to build an RDF-style knowledge graph from data, enabling accurate and secure `RAG` requests compatible with cloud LLMs and open-source SLMs. It showcases the reliability and efficiencies of GraphRAG algorithms, capturing contextual language flags missed in conventional RAG approaches. The tool offers features like PDF decoding, text chunking, inference of various LMs, RDF-aligned Knowledge Graph extraction, and more. TrustGraph is designed to be modular, supporting multiple Language Models and environments, with a plug'n'play architecture for easy customization.

cosdata

Cosdata is a cutting-edge AI data platform designed to power the next generation search pipelines. It features immutability, version control, and excels in semantic search, structured knowledge graphs, hybrid search capabilities, real-time search at scale, and ML pipeline integration. The platform is customizable, scalable, efficient, enterprise-grade, easy to use, and can manage multi-modal data. It offers high performance, indexing, low latency, and high requests per second. Cosdata is designed to meet the demands of modern search applications, empowering businesses to harness the full potential of their data.

For similar tasks

watchtower

AIShield Watchtower is a tool designed to fortify the security of AI/ML models and Jupyter notebooks by automating model and notebook discoveries, conducting vulnerability scans, and categorizing risks into 'low,' 'medium,' 'high,' and 'critical' levels. It supports scanning of public GitHub repositories, Hugging Face repositories, AWS S3 buckets, and local systems. The tool generates comprehensive reports, offers a user-friendly interface, and aligns with industry standards like OWASP, MITRE, and CWE. It aims to address the security blind spots surrounding Jupyter notebooks and AI models, providing organizations with a tailored approach to enhancing their security efforts.

LLM-PLSE-paper

LLM-PLSE-paper is a repository focused on the applications of Large Language Models (LLMs) in Programming Language and Software Engineering (PL/SE) domains. It covers a wide range of topics including bug detection, specification inference and verification, code generation, fuzzing and testing, code model and reasoning, code understanding, IDE technologies, prompting for reasoning tasks, and agent/tool usage and planning. The repository provides a comprehensive collection of research papers, benchmarks, empirical studies, and frameworks related to the capabilities of LLMs in various PL/SE tasks.

invariant

Invariant Analyzer is an open-source scanner designed for LLM-based AI agents to find bugs, vulnerabilities, and security threats. It scans agent execution traces to identify issues like looping behavior, data leaks, prompt injections, and unsafe code execution. The tool offers a library of built-in checkers, an expressive policy language, data flow analysis, real-time monitoring, and extensible architecture for custom checkers. It helps developers debug AI agents, scan for security violations, and prevent security issues and data breaches during runtime. The analyzer leverages deep contextual understanding and a purpose-built rule matching engine for security policy enforcement.

OpenRedTeaming

OpenRedTeaming is a repository focused on red teaming for generative models, specifically large language models (LLMs). It provides a comprehensive survey of potential attacks on GenAI and robust safeguards, covering taxonomies, attack strategies, risks, evaluation metrics, benchmarks, and defensive approaches. The repository also implements over 30 auto red teaming methods. The goal is to understand vulnerabilities and develop defenses against adversarial attacks on LLMs.

Awesome-LLM4Cybersecurity

The repository 'Awesome-LLM4Cybersecurity' provides a comprehensive overview of the applications of Large Language Models (LLMs) in cybersecurity. It includes a systematic literature review covering topics such as constructing cybersecurity-oriented domain LLMs, potential applications of LLMs in cybersecurity, and research directions in the field. The repository analyzes various benchmarks, datasets, and applications of LLMs in cybersecurity tasks like threat intelligence, fuzzing, vulnerability detection, insecure code generation, program repair, anomaly detection, and LLM-assisted attacks.

quark-engine

Quark Engine is an AI-powered tool designed for analyzing Android APK files. It focuses on auto-suggestion for the detection process, enabling users to create detection workflows without coding. The tool offers an intuitive drag-and-drop interface for adjusting and updating workflows. Quark Agent, the core component, generates Quark Script code based on natural language input and feedback. The project is committed to providing a user-friendly experience for designing detection workflows through textual and visual methods. Various features are still under development and will be rolled out gradually.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis of common vulnerabilities and exposures (CVEs) at enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires an NVAIE developer license and API keys for vulnerability databases, search engines, and LLM services. Hardware requirements include an L40 GPU for pipeline operation, plus optional LLM NIM and Embedding NIM. The workflow is an LLM pipeline for CVE impact analysis that uses LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and the Morpheus Cybersecurity AI SDK for vulnerability analysis.

For similar jobs

ai-goat

AI Goat is a tool designed to help users learn about AI security through a series of vulnerable LLM CTF challenges. It allows users to run everything locally on their system without the need for sign-ups or cloud fees. The tool focuses on exploring security risks associated with large language models (LLMs) like ChatGPT, providing practical experience for security researchers to understand vulnerabilities and exploitation techniques. AI Goat uses the Vicuna LLM, derived from Meta's LLaMA and ChatGPT's response data, to create challenges that involve prompt injections, insecure output handling, and other LLM security threats. The tool also includes a prebuilt Docker image, ai-base, containing all necessary libraries to run the LLM and challenges, along with an optional CTFd container for challenge management and flag submission.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

PurpleLlama

Purple Llama is an umbrella project that aims to provide tools and evaluations to support responsible development and usage of generative AI models. It encompasses components for cybersecurity and input/output safeguards, with plans to expand in the future. The project emphasizes a collaborative approach, borrowing the concept of purple teaming from cybersecurity, to address potential risks and challenges posed by generative AI. Components within Purple Llama are licensed permissively to foster community collaboration and standardize the development of trust and safety tools for generative AI.

vpnfast.github.io

VPNFast is a lightweight and fast VPN service provider that offers secure and private internet access. With VPNFast, users can protect their online privacy, bypass geo-restrictions, and secure their internet connection from hackers and snoopers. The service provides high-speed servers in multiple locations worldwide, ensuring a reliable and seamless VPN experience for users. VPNFast is easy to use, with a user-friendly interface and simple setup process. Whether you're browsing the web, streaming content, or accessing sensitive information, VPNFast helps you stay safe and anonymous online.

taranis-ai

Taranis AI is an advanced Open-Source Intelligence (OSINT) tool that leverages Artificial Intelligence to revolutionize information gathering and situational analysis. It navigates through diverse data sources like websites to collect unstructured news articles, utilizing Natural Language Processing and Artificial Intelligence to enhance content quality. Analysts then refine these AI-augmented articles into structured reports that serve as the foundation for deliverables such as PDF files, which are ultimately published.

NightshadeAntidote

Nightshade Antidote is an image forensics tool used to analyze digital images for signs of manipulation or forgery. It implements several common techniques used in image forensics including metadata analysis, copy-move forgery detection, frequency domain analysis, and JPEG compression artifacts analysis. The tool takes an input image, performs analysis using the above techniques, and outputs a report summarizing the findings.
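
As an illustration of one of the techniques named above, the following is a minimal sketch of copy-move forgery detection by exact block matching. It is not taken from Nightshade Antidote. The function name `find_duplicate_blocks`, the list-of-lists grayscale image format, and the tiny example image are assumptions made for this sketch. It slides a fixed-size window over the image and groups positions whose pixel blocks are identical, skipping flat regions that would match trivially.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Image = List[List[int]]  # grayscale pixel values, row-major (assumed format)
Position = Tuple[int, int]


def find_duplicate_blocks(img: Image, size: int = 2) -> List[List[Position]]:
    """Group top-left (row, col) positions whose size x size blocks are identical."""
    rows, cols = len(img), len(img[0])
    seen: Dict[tuple, List[Position]] = defaultdict(list)
    for r in range(rows - size + 1):
        for c in range(cols - size + 1):
            block = tuple(tuple(img[r + dr][c:c + size]) for dr in range(size))
            # Skip uniform blocks: flat background matches everywhere.
            if len({value for row in block for value in row}) > 1:
                seen[block].append((r, c))
    return [positions for positions in seen.values() if len(positions) > 1]


# Made-up 5x6 image in which the top-left 2x2 patch was copied to the bottom right.
example_img: Image = [
    [1, 2, 0, 0, 0, 0],
    [3, 4, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 2],
    [0, 0, 0, 0, 3, 4],
]

print(find_duplicate_blocks(example_img))
```

Exact matching only catches pixel-perfect copies. A practical detector would typically compare more robust block features so that matches survive recompression or small edits.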

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources, curated and maintained by Omar Santos. It provides supplemental material for several books, video courses, and live training sessions created by Omar Santos. It includes over 10,000 references that help both offensive and defensive security professionals hone their skills.