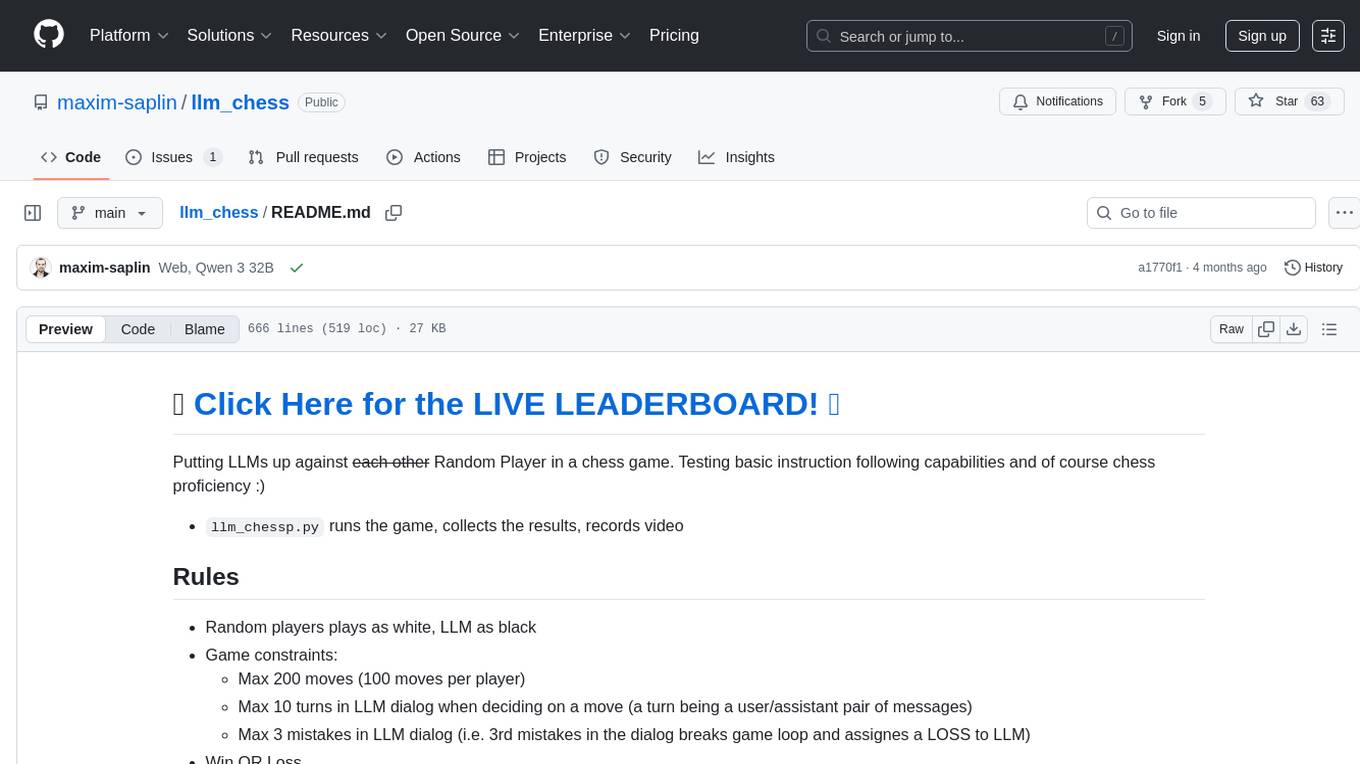

llm_chess

LLM Chess - Large Language Models Competing in Chess

Stars: 64

llm_chess is a tool designed to put Large Language Models (LLMs) against a Random Player in a chess game to test basic instruction following capabilities and chess proficiency. The tool sets constraints for the game, determines win/loss conditions, and handles exceptions/execution errors. Users can run games between LLMs and random players, configure player types, and analyze game results. The tool also supports running multiple games, processing logs, and preparing data for web visualization. It provides insights into player performance, model behavior, and future ideas for validation and benchmarking.

README:

Putting LLMs up against each other or a Random Player in a chess game. Testing basic instruction following capabilities and, of course, chess proficiency :)

- `llm_chess.py` runs the game, collects the results, records video

- Random player plays as white, LLM as black

- Game constraints:

- Max 200 moves (100 moves per player)

- Max 10 turns in LLM dialog when deciding on a move (a turn being a user/assistant pair of messages)

- Max 3 mistakes in LLM dialog (i.e. the 3rd mistake in the dialog breaks the game loop and assigns a LOSS to the LLM)

- Win OR Loss

- In LLM dialog, if max turns is reached OR 3 mistakes are made by the LLM, the Random Player gets a WIN and the LLM gets a LOSS

- If max moves is reached a DRAW is given

- Chess engine evaluates the board after each move and can give the corresponding player a WIN in case of a checkmate or stop the game and give a DRAW for the following reasons: Stalemate, Insufficient Material, Seventy-five Moves, or Fivefold Repetition.

- Exceptions/Execution Errors:

- If the execution is halted due to a programmatic error, the game is stopped and a DRAW is scored.

- MANUAL inspection of logs is required:

- If the error happens to be a connectivity or throttle limit error from the API, the game is to be discarded

- If the error is a model error (e.g. "The model produced invalid content. Consider modifying your prompt if you are seeing this error persistently." by OpenAI, or 400 or 500 errors by Local Models), the draw is to be changed to a LOSS for the LLM
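The per-move dialog limits above (max turns, max mistakes) can be sketched roughly as follows. This is a minimal illustration, not the code from `llm_chess.py`: the function name `run_move_dialog`, the `ask` callable, and the returned outcome strings are all hypothetical, and exact-match parsing is assumed for simplicity.

```python
from typing import Callable, List


def run_move_dialog(
    ask: Callable[[], str],
    legal_moves: List[str],
    max_llm_turns: int = 10,
    max_failed_attempts: int = 3,
) -> str:
    """Run one move's dialog; `ask` returns the player's next reply (hypothetical)."""
    mistakes = 0
    for _ in range(max_llm_turns):
        parts = ask().strip().split()
        if parts in (["get_current_board"], ["get_legal_moves"]):
            continue  # informational action: uses up a turn, not counted as a mistake
        if len(parts) == 2 and parts[0] == "make_move" and parts[1] in legal_moves:
            return "MOVE_MADE"
        mistakes += 1  # unknown action or a move that is not legal
        if mistakes >= max_failed_attempts:
            return "LLM_LOSS_TOO_MANY_MISTAKES"  # the 3rd mistake ends the game
    return "LLM_LOSS_MAX_TURNS"  # turn budget exhausted without a move


replies = iter(["get_legal_moves", "make_move e7e5"])
print(run_move_dialog(replies.__next__, ["e7e5", "d7d5"]))
```

Either loss outcome would translate into a WIN for the opposing player, as described in the list above.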

- Decide if you would like to put one LLM against the other OR a random player (chaos monkey picking random move out of a list of legal moves provided to it)

- Set `use_random_player` to True to make the random player play white and the LLM play black

- Set LLM params in the .env file (API key, etc.) for both white and black player agents

- Azure OpenAI is used by default; modify `utils.py` to use a different provider that is supported by Autogen 0.2

- Check configs (see next)

- Run `pip install -r requirements.txt` (optionally inside a VENV)

- Run `llm_chess.py`

Adjust the global configs at llm_chess.py.

- `white_player_type`: Determines the type of player controlling the white pieces. Options include `RANDOM_PLAYER`, `LLM_WHITE`, `LLM_BLACK`, `CHESS_ENGINE_SUNFISH`, and `CHESS_ENGINE_STOCKFISH`.

- `black_player_type`: Determines the type of player controlling the black pieces. Options include `RANDOM_PLAYER`, `LLM_WHITE`, `LLM_BLACK`, `CHESS_ENGINE_SUNFISH`, and `CHESS_ENGINE_STOCKFISH`.

- `enable_reflection`: Whether to offer the LLM time to think and evaluate moves by giving it an extra "reflect" action.

- `use_fen_board`: A boolean indicating whether to use the FEN format for board representation. The default is `False`.

- `max_game_moves`: An integer specifying the maximum number of moves allowed in a game before it is automatically terminated. The default is 200.

- Constraints for a single move (LLM dialogs if an LLM agent is used):

- `max_llm_turns`: An integer indicating the maximum number of conversation turns (pairs of user/assistant messages) an LLM can take while deciding on and making a move. The default is 10.

- `max_failed_attempts`: An integer that sets the number of incorrect replies or actions a player agent can make before the game is halted and the player is declared the loser. E.g. if a model returns an action name not in the requested format OR asks to make a move that is not possible, an internal counter grows and the model is asked to self-correct. If `max_failed_attempts` is reached, the game is interrupted and a WIN is given to the opposite player. The default value is 3.

- `throttle_delay_moves`: A delay in seconds between moves to prevent throttling by LLM providers due to frequent API requests. The default is 1 second.

These settings configure the game environment and control the flow of the chess match between the agents.
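For orientation, the settings above can be pictured as one group of values with the documented defaults. This is only a sketch: the README describes them as global configs in `llm_chess.py`, so the `GameConfig` dataclass and `PlayerType` enum here are hypothetical groupings, and the defaults marked "assumed" are not stated in the text.

```python
from dataclasses import dataclass
from enum import Enum


class PlayerType(Enum):
    RANDOM_PLAYER = "RANDOM_PLAYER"
    LLM_WHITE = "LLM_WHITE"
    LLM_BLACK = "LLM_BLACK"
    CHESS_ENGINE_SUNFISH = "CHESS_ENGINE_SUNFISH"
    CHESS_ENGINE_STOCKFISH = "CHESS_ENGINE_STOCKFISH"


@dataclass
class GameConfig:
    white_player_type: PlayerType = PlayerType.RANDOM_PLAYER  # assumed default
    black_player_type: PlayerType = PlayerType.LLM_BLACK      # assumed default
    enable_reflection: bool = False      # assumed default, not stated in the README
    use_fen_board: bool = False          # documented default
    max_game_moves: int = 200            # documented default
    max_llm_turns: int = 10              # documented default
    max_failed_attempts: int = 3         # documented default
    throttle_delay_moves: float = 1.0    # seconds, documented default


config = GameConfig(enable_reflection=True)
print(config)
```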

The run_multiple_games.py script allows you to execute multiple chess games between different agents and aggregate the results.

To run multiple games:

- Adjust the `NUM_REPETITIONS` variable to set the number of games you want to simulate.

- The results, including win/loss statistics and material counts, are aggregated and can be analyzed to understand the strengths and weaknesses of each player type.

- Aggregate logs and logs for individual games (if `STORE_INDIVIDUAL_LOGS` is set to True) can be stored in the specified `LOG_FOLDER` for further inspection.

This feature is used to compare different kinds of players and generalize the findings. For LLM players 10 games were used, for random/chess engine players 1000 games; some stats are provided below.

When running multiple games individual logs are collected in {date_time}.json files (e.g. 2024.10.11_17:34.json) which are collected in a directory. After the games are finished summary results are collected in aggregate_results.csv.
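The `{date_time}.json` naming can be reproduced with a standard `strftime` pattern. The pattern below is inferred from the example file name (and from other log names in this README such as `2024.10.22_23:18`), so treat it as an assumption; `log_file_name` is a hypothetical helper, not a function from the repository.

```python
from datetime import datetime


def log_file_name(finished_at: datetime) -> str:
    """Build a log file name in the year.month.day_hour:minute style (assumed pattern)."""
    return finished_at.strftime("%Y.%m.%d_%H:%M") + ".json"


print(log_file_name(datetime(2024, 10, 11, 17, 34)))  # 2024.10.11_17:34.json
```

Note that a colon is not a valid file name character on Windows, so a different separator would be needed there.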

Notes:

- "wrong_moves" and "wrong_actions" are the numbers of erroneous replies by the player (e.g. not following convention) made in "inner" dialogs between the proxy and player. To get the total number of erroneous replies add up the two metrics (i.e. `wrong_moves` do not include `wrong_actions`)

- A single game is finished if any of the players reaches `max_failed_attempts` in the inner dialogs (i.e. play continues only while `wrong_moves` + `wrong_actions` < `max_failed_attempts`, see the config above)

- `data_processing/aggregate_logs_to_csv.py` can process individual game logs within a given folder and produce a CSV grouping logs by model name and aggregating stats. Change the default params to point the script to the correct folder and output CSV file.

Run `data_processing/get_refined.py` to produce refined.csv ready for index.html embedding. Manually copy the needed rows to the index.html const in JS - the data will be used in the Web Leaderboard.

Why copy manually? Because you are likely updating a leaderboard and some of the models on the Web might have different names, not the ones used in the logs.

- LLM Player Agent (instantiated from Autogen's standard ConversableAgent) is used to evaluate the board and make moves on behalf of one of the players (white or black)

- Random Player Agent (custom RandomPlayerAgent) requests a list of legal moves, randomly picks one, and then makes a move. Always plays as white

- Proxy Agent (custom AutoReplyAgent) is used to kick off conversation with a player agent and provides a list of available actions (get_current_board, get_legal_moves, make_move)

- Chess Engine Players:

- Sunfish: A simple Python engine.

- Stockfish: A powerful engine requiring separate installation.
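The random player's behavior described above (request legal moves, pick one at random, make the move) comes down to a few lines. This is a sketch of the idea only, not the actual `RandomPlayerAgent`; `pick_random_move` is a hypothetical helper that works on the comma-separated UCI list the proxy returns.

```python
import random


def pick_random_move(legal_moves_reply: str, rng: random.Random) -> str:
    """Pick one move from a comma-separated UCI list such as 'f8h8,f8g8,b8a8'."""
    moves = [m.strip() for m in legal_moves_reply.split(",") if m.strip()]
    return rng.choice(moves)


reply = "f8h8,f8g8,b8a8,g7h8,g7h6"
action = "make_move " + pick_random_move(reply, random.Random())
print(action)
```

Because the move always comes from the list of legal moves, such a player never produces a wrong move or wrong action.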

GPT-4o-Mini

Proxy (to Player_Black):

You are a professional chess player and you play as black. Now is your turn to make a move. Before making a move you can pick one of 3 actions:

- 'get_current_board' to get the schema and current status of the board

- 'get_legal_moves' to get a UCI formatted list of available moves

- 'make_move <UCI formatted move>' when you are ready to complete your turn (e.g., 'make_move e2e4')

Respond with the action.

--------------------------------------------------------------------------------

Player_Black (to Proxy):

get_current_board

--------------------------------------------------------------------------------

Proxy (to Player_Black):

⭘ ♜ ♝ ♛ ♚ ♜ ⭘ ⭘

♟ ♟ ♟ ♟ ♟ ♟ ♝ ♟

⭘ ⭘ ♞ ⭘ ⭘ ♞ ⭘ ⭘

⭘ ⭘ ⭘ ⭘ ⭘ ⭘ ⭘ ♕

♙ ♙ ⭘ ⭘ ♙ ⭘ ⭘ ⭘

⭘ ⭘ ⭘ ♙ ♔ ⭘ ⭘ ⭘

⭘ ⭘ ♙ ⭘ ⭘ ♙ ♙ ♙

♖ ♘ ♗ ⭘ ⭘ ♗ ⭘ ♖

--------------------------------------------------------------------------------

Player_Black (to Proxy):

get_legal_moves

--------------------------------------------------------------------------------

Proxy (to Player_Black):

f8h8,f8g8,b8a8,g7h8,g7h6,f6g8,f6h5,f6d5,f6g4,f6e4,c6e5,c6a5,c6d4,c6b4,h7h6,e7e6,d7d6,b7b6,a7a6,e7e5,d7d5,b7b5,a7a5

--------------------------------------------------------------------------------

Player_Black (to Proxy):

make_move f8g8

--------------------------------------------------------------------------------

Proxy (to Player_Black):

Move made, switching player

Game run results are stored under the _logs folder.

- Logs before 08.10.2024 (first 8) are more strict with wrong moves stats

- Logs before 16.10.2024 had ambiguous "Unknown issue, Player_Black failed to make a move" reason which often meant that a single dialog took more than 10 turns (20 total messages) and execution was halted, changed to include a more specific reason "Max turns in single dialog"

- Different configs could be used, directory folder

- Log `_15.10.2024_gpt-4o-anthropic.claude-v3-5-sonnet_reflectio` had a timeout error, aggregate only had 9 out of 10 consistent runs

- After 19.10.2024 setting default hyperparams ("temperature": 0.3, "top_p": 1.0, "frequency_penalty": 0.0, "presence_penalty": 0.0,)

- 19.10.2024, Gemini-1.5-flash-001 consistently failed to follow the instructions:

- 22.10.2024, slightly updated common prompt removing excessive tabs

- 05.11.2024, fixed bug with wrong action counting (not global per game but per move), set temperature to 0.7, re-ran no_reflection

- 14.01.2025, changed logs and switched DRAWS to LLM losses due to model error for Gemini-1.5-pro-preview-0409 (8 logs from November, no reflection), qwq-32b-preview@q4_k_m (1 log from January 9, no reflection), sky-t1-32b-preview@q4_0 (1 log from January 14, no reflection), o1-mini-2024-09-12 (1 log from December 22, no reflection), gpt-4-turbo-2024-04-09 (4 logs from November, reflection)

- 31.01.2025 - changing from weighted average to simple average for material count, wrong moves, wrong actions

- 08.03.2025 - thinking models with think tags, changing `ignore_text` to `remove_text`, ensuring that thinking tags are not included in subsequent messages shared with the model (i.e. removing previously returned think sections from the next messages in multi-turn dialogs; less clutter, recommended by QwQ and, I guess, assumed by any thinking model, as they don't need to see past thinking trails)

- 12.03.2025 - adding PGN history to logs

- 16.03.2025 - wrong actions and wrong moves stats have been wrongly collected (underreporting) in all prior logs, the mistakes metric for all prior logs is invalid

- 16.04.2024 - removing "frequency_penalty": 0.0, "presence_penalty": 0.0 as not relevant and breaking LLM config validations with some models
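The 08.03.2025 change (dropping think sections from messages that are sent back to the model) can be illustrated with a small regular expression. This is a sketch under assumptions: the literal `<think>...</think>` tag and the `remove_thinking` helper are illustrative and may differ from what the repository does.

```python
import re

# Non-greedy match so several think blocks in one message are removed separately.
THINK_BLOCK = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def remove_thinking(message: str) -> str:
    """Strip think sections so they are not replayed in later dialog turns."""
    return THINK_BLOCK.sub("", message).strip()


raw = "<think>The knight on g8 can go to f6.</think>\nmake_move g8f6"
print(remove_thinking(raw))  # make_move g8f6
```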

I have conducted a number of games putting LLM (playing with black) against a Random Player (as white), typically 10 games with a cap of 200 moves.

!NOTE, not touching prompts, changing parsing logic to be more relaxed, logs before 08.10.2024 (first 8) are more strict with wrong moves stats

Original kick-off prompt:

You are a professional chess player and you play as black. Now is your turn to make a move. Before making a move you can pick one of 3 actions:

- 'get_current_board' to get the schema and current status of the board

- 'get_legal_moves' to get a UCI formatted list of available moves

- 'make_move <UCI formatted move>' when you are ready to complete your turn (e.g., 'make_move e2e4')

Respond with the action.

And the failure message:

Invalid action. Pick one, reply exactly with the name and space delimited argument: "get_current_board, get_legal_moves, make_move <UCI formatted move>
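The difference between exact-match parsing and the more relaxed parsing mentioned in the note above can be sketched as two small functions. Both are hypothetical illustrations, not the repository's parsing logic; the UCI pattern covers plain moves and promotions only.

```python
import re
from typing import Optional, Tuple

UCI_MOVE = r"[a-h][1-8][a-h][1-8][qrbn]?"
Action = Tuple[str, Optional[str]]


def parse_strict(reply: str) -> Optional[Action]:
    """Accept only a reply that is exactly one action and nothing else."""
    text = reply.strip()
    if text in ("get_current_board", "get_legal_moves"):
        return (text, None)
    match = re.fullmatch(rf"make_move ({UCI_MOVE})", text)
    return ("make_move", match.group(1)) if match else None


def parse_relaxed(reply: str) -> Optional[Action]:
    """Find an action anywhere in a verbose reply; make_move takes priority."""
    match = re.search(rf"make_move\s+({UCI_MOVE})\b", reply)
    if match:
        return ("make_move", match.group(1))
    for action in ("get_current_board", "get_legal_moves"):
        if action in reply:
            return (action, None)
    return None


verbose = "Let me provide the correct format for the move I want to make:\n\nmake_move c7c5"
print(parse_strict(verbose))   # None
print(parse_relaxed(verbose))  # ('make_move', 'c7c5')
```

With strict parsing a verbose reply counts as a wrong action, while the relaxed variant still extracts the intended move.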

- GPT-4o, GPT-4o mini, gemini-1.5-pro-preview-0409 worked fine with the original prompts and exact match logic for make_move

- Claude 3.5 Sonnet failed to reply with a simple string always adding verbosity

Proxy (to Player_Black):

Invalid action. Pick one, reply exactly with the name and space delimited argument: get_current_board, get_legal_moves, make_move <UCI formatted move>

--------------------------------------------------------------------------------

Player_Black (to Proxy):

I apologize for the continued confusion. Let me provide the correct format for the move I want to make:

make_move c7c5

- Gemini-1.5-flash-001 always wrapped answers in JSON code blocks (while not asked)

- lama-3.1-nemotron-70b-instruct-hf 4 bit was too verbose failing to make any actions (2024.10.22_23:18_lama-3.1-nemotron-70b-instruct-hf.md)

- gpt-35-turbo-0125 failed to make any moves by not complying with the instructions and reply format (see _23.10.2024_gpt-35-turbo-0125/fail.md)

Proxy (to Player_Black):

You are a professional chess player and you play as black. Now is your turn to make a move. Before making a move you can pick one of 3 actions:

- 'get_current_board' to get the schema and current status of the board

- 'get_legal_moves' to get a UCI formatted list of available moves

- 'make_move <UCI formatted move>' when you are ready to complete your turn (e.g., 'make_move e2e4')

Respond with the action.

--------------------------------------------------------------------------------

Player_Black (to Proxy):

get_current_board

--------------------------------------------------------------------------------

Proxy (to Player_Black):

♜ ♞ ♝ ♛ ♚ ♝ ⭘ ⭘

⭘ ♟ ♟ ♟ ♟ ⭘ ⭘ ♜

♟ ⭘ ⭘ ⭘ ⭘ ♟ ♟ ♟

♙ ⭘ ⭘ ⭘ ⭘ ⭘ ⭘ ♞

⭘ ⭘ ⭘ ♙ ⭘ ♙ ⭘ ⭘

⭘ ♙ ♘ ⭘ ⭘ ♘ ⭘ ⭘

♖ ⭘ ♙ ⭘ ♙ ⭘ ♙ ♙

⭘ ⭘ ♗ ♕ ♔ ♗ ⭘ ♖

--------------------------------------------------------------------------------

Player_Black (to Proxy):

get_legal_moves

--------------------------------------------------------------------------------

Proxy (to Player_Black):

f8g7,e8f7,b8c6,a8a7,h7h8,h7g7,h7f7,h5g7,h5f4,h5g3,e7e6,d7d6,c7c6,b7b6,g6g5,f6f5,e7e5,d7d5,c7c5,b7b5

--------------------------------------------------------------------------------

Player_Black (to Proxy):

make_move h7h6

--------------------------------------------------------------------------------

Proxy (to Player_Black):

Failed to make move: illegal uci: 'h7h6' in rnbqkb2/1pppp2r/p4ppp/P6n/3P1P2/1PN2N2/R1P1P1PP/2BQKB1R b Kq - 2 8
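In the dialog above the model asked for the legal moves and then chose one that was not on the list. A simple membership test against the returned list is enough to see that; the snippet is only an illustration using the moves from this dialog, and `is_listed` is a hypothetical helper.

```python
# Legal moves exactly as returned by the proxy in the dialog above.
legal_moves_reply = (
    "f8g7,e8f7,b8c6,a8a7,h7h8,h7g7,h7f7,h5g7,h5f4,h5g3,"
    "e7e6,d7d6,c7c6,b7b6,g6g5,f6f5,e7e5,d7d5,c7c5,b7b5"
)
legal_moves = set(legal_moves_reply.split(","))


def is_listed(move: str) -> bool:
    """True if the UCI move appears in the list of legal moves."""
    return move in legal_moves


print(is_listed("h7h6"))  # False
print(is_listed("h7h8"))  # True
```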

- Azure OpenAI:

- gpt-4.1-2025-04-14

- gpt-4.5-preview-2025-02-27

- o3-mini-2025-01-31-medium

- o3-mini-2025-01-31-low

- o1-preview-2024-09-12

- o1-mini-2024-09-12

- gpt-4o-2024-11-20

- gpt-4o-2024-08-06

- gpt-4o-2024-05-13

- gpt-4o-mini-2024-07-18

- gpt-35-turbo-0125

- gpt-35-turbo-0301

- gpt-35-turbo-0613

- gpt-35-turbo-1106

- gpt-4-turbo-2024-04-09

- gpt-4-0613

- gpt-4-32k-0613

- OpenAI

- gpt-4.1-2025-04-14

- gpt-4.1-mini-2025-04-14

- gpt-4.1-nano-2025-04-14

- gpt-4.5-preview-2025-02-27

- AWS:

- anthropic.claude-v3-5-sonnet-v1

- anthropic.claude-v3-5-sonnet-v2

- anthropic.claude-v3-opus

- anthropic.claude-v3-haiku

- amazon.nova-pro-v1

- amazon.nova-lite-v1

- Google:

- gemini-1.5-flash-001

- gemini-1.5-pro-preview-0409

- gemini-2.0-flash-exp

- gemini-2.0-flash-001

- gemini-2.0-flash-lite-001

- gemini-2.0-flash-lite-preview-02-05

- gemini-2.0-flash-thinking-exp-1219

- gemini-2.0-flash-thinking-exp-01-21

- chat-bison-32k@002 (Palm 2)

- x.ai

- grok-2-1212

- Groq:

- llama-3.2-90b-vision-preview

- llama-3.3-70b-versatile

- llama-3.3-70b-specdec

- llama3-70b-8192

- llama-3.1-8b-instant

- llama3-8b-8192

- mixtral-8x7b-32768

- gemma2-9b-it

- Cerebras:

- llama-4-scout-17b-16e-instruct

- llama-3.3-70b

- llama3.1-8b

- qwen3-32b

- Deepseek:

- deepseek-chat-v3

- deepseek-reasoner-r1

- Alibaba:

- qwen-max-2025-01-25

- qwen-turbo-2024-11-01

- qwen-plus-2025-01-25

- qwen2.5-72b-instruct

- qwen2.5-14b-instruct-1m

- qwen2.5-7b-instruct-1m

- Mercury

- mercury-coder-small

- Local (up to date llama.cpp/LM Studio as of time of testing, RTX4090 24GB, default settings - 8k context, 100% GPU off-load when possible):

- llama-2-7b-chat@q8_0

- llama-3-70b-instruct-awq

- meta-llama-3.1-8b-instruct@fp16

- meta-llama-3.1-8b-instruct@q8_0

- meta-llama-3.1-8b-instruct@q4_k_m

- gemma-2-27b-it@q6_k_l

- gemma-2-9b-it-8bit

- gemma-2-9b-it@iq4_xs

- granite-3.1-8b-instruct@q8_0

- internlm3-8b-instruct@q8_0

- ministral-8b-instruct-2410@q8_0

- mistral-nemo-12b-instruct-2407@q8_0

- mistral-small-instruct-2409

- phi-4@q8

- qwen2.5-14b-instruct@q8_0

- qwq-32b-preview@q4_k_m

- sky-t1-32b-preview@q4_0

- deephermes-3-llama-3-8b-preview@q8

- qwen3-14b@iq4_xs

- Random players request all legal moves and randomly pick one thus always making valid moves

- Chess engines

- Simple, no-dependency Python engine Sunfish (https://github.com/thomasahle/sunfish)

- Powerful Stockfish

- Requires separate installation and properly defining path with python-chess

- On macOS, I installed it via `brew install stockfish`

- Path to Stockfish is identified via `where stockfish`, yielding `/opt/homebrew/bin/stockfish`

- Set the path in `llm_chess.py`.
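Locating the Stockfish binary can also be done from Python. The sketch below uses the standard library's `shutil.which` for the lookup and python-chess's `SimpleEngine.popen_uci` to start the engine; the helper names `find_stockfish` and `open_engine` are hypothetical, and how `llm_chess.py` actually stores the path is not shown here.

```python
import shutil
from typing import Optional


def find_stockfish() -> Optional[str]:
    """Return the Stockfish binary found on PATH, or None if it is not installed."""
    return shutil.which("stockfish")


def open_engine(path: str):
    """Start Stockfish through python-chess (needs the python-chess package)."""
    import chess.engine  # imported lazily so the lookup above works without it

    return chess.engine.SimpleEngine.popen_uci(path)


path = find_stockfish()
print(path or "stockfish not found on PATH")
```

An engine opened this way should be closed with `engine.quit()` once the games are finished.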

Below are some stats from simulating many games (1000) to get an idea of a baseline for LLM players playing against a random player

{

"total_games": 1000,

"white_wins": 0,

"black_wins": 1000,

"draws": 0,

"total_moves": 57922,

"reasons": {

"Checkmate": 1000

},

"player_white": {

"name": "Random_Player",

"model": "",

"total_material": 19125,

"wrong_moves": 0,

"wrong_actions": 0,

"reflections_used": 0,

"reflections_used_before_board": 0,

"avg_material": 19.125,

"std_dev_material": 10.335966679861503

},

"player_black": {

"name": "Chess_Engine_Stockfish_Black",

"model": "",

"total_material": 35113,

"wrong_moves": 0,

"wrong_actions": 0,

"reflections_used": 0,

"reflections_used_before_board": 0,

"avg_material": 35.113,

"std_dev_material": 5.572188105249165

},

"average_moves": 57.922,

"std_dev_moves": 30.961727092404658

}

{

"total_games": 1000,

"white_wins": 105,

"black_wins": 0,

"draws": 895,

"total_moves": 190073,

"reasons": {

"Max moves reached": 886,

"Checkmate": 105,

"Stalemate": 9

},

"player_white": {

"name": "Random_Player",

"model": "",

"total_material": 10555,

"wrong_moves": 0,

"wrong_actions": 0,

"avg_material": 10.555,

"std_dev_material": 7.228224001940049

},

"player_black": {

"name": "Random_Player",

"model": "",

"total_material": 11076,

"wrong_moves": 0,

"wrong_actions": 0,

"avg_material": 11.076,

"std_dev_material": 7.491217680880882

},

"average_moves": 190.073,

"std_dev_moves": 32.05006829755403

}

If enabled (`enable_reflection = True`) an extra action is added to the list of available options, offering the LLM a chance to reflect on the best move, generating more tokens and thinking through the best next move.

Sample dialog:

Proxy (to Player_Black):

You are a professional chess player and you play as black. Now is your turn to make a move. Before making a move you can pick one of 3 actions:

- 'get_current_board' to get the schema and current status of the board

- 'get_legal_moves' to get a UCI formatted list of available moves

- 'do_reflection' to take a moment to think about your strategy

- 'make_move <UCI formatted move>' when you are ready to complete your turn (e.g., 'make_move e2e4')

--------------------------------------------------------------------------------

Player_Black (to Proxy):

I'll start by checking the current state of the board.

Action: `get_current_board`

--------------------------------------------------------------------------------

Proxy (to Player_Black):

♜ ♞ ♝ ♛ ♚ ♝ ♞ ♜

♟ ♟ ♟ ⭘ ⭘ ♟ ♟ ♟

⭘ ⭘ ⭘ ⭘ ⭘ ⭘ ⭘ ⭘

⭘ ⭘ ⭘ ♟ ♟ ⭘ ⭘ ⭘

⭘ ⭘ ⭘ ⭘ ⭘ ⭘ ⭘ ⭘

♙ ⭘ ⭘ ♙ ♙ ⭘ ⭘ ⭘

⭘ ♙ ♙ ⭘ ⭘ ♙ ♙ ♙

♖ ♘ ♗ ♕ ♔ ♗ ♘ ♖

--------------------------------------------------------------------------------

Player_Black (to Proxy):

Let's analyze the board further by checking possible moves.

Action: `get_legal_moves`

--------------------------------------------------------------------------------

Proxy (to Player_Black):

g8e7,g8h6,g8f6,f8e7,f8d6,f8c5,f8b4,f8a3,e8e7,e8d7,d8e7,d8d7,d8f6,d8d6,d8g5,d8h4,c8d7,c8e6,c8f5,c8g4,c8h3,b8d7,b8c6,b8a6,h7h6,g7g6,f7f6,c7c6,b7b6,a7a6,e5e4,d5d4,h7h5,g7g5,f7f5,c7c5,b7b5,a7a5

--------------------------------------------------------------------------------

Player_Black (to Proxy):

I'll think about the best strategy given these options.

Action: `do_reflection`

--------------------------------------------------------------------------------

Proxy (to Player_Black):

Before deciding on the next move you can reflect on your current situation, write down notes, and evaluate.

Here are a few recommendations that you can follow to make a better move decision:

- Shortlist the most valuable next moves

- Consider how they affect the situation

- What could be the next moves from your opponent in each case

- Is there any strategy fitting the situation and your choice of moves

- Rerank the shortlisted moves based on the previous steps

--------------------------------------------------------------------------------

Player_Black (to Proxy):

**Reflection:**

1. **Shortlist Valuable Moves:**

- **g8f6**: Develops the knight, controls the center, and targets e4.

- **h7h6**: Prepares to fianchetto the bishop or prevents a knight pin on g5.

- **c7c5**: Challenges the center and prepares to expand on the queenside.

2. **Immediate Effects:**

- **g8f6**: Develops a piece, and brings the knight nearer to the center.

- **h7h6**: Solidifies kingside but is more of a precautionary move.

- **c7c5**: Direct action against the center, preparing for future breaks.

3. **Opponent's Responses:**

- **g8f6**: White might continue developing with Nc3 or push e4.

- **h7h6**: White might ignore it and proceed with normal development.

- **c7c5**: White could opt for dxc5 or develop with Nc3.

4. **Strategy Considerations:**

- Aim to control the center and set up a solid defensive position.

- Balance between development and maintaining structural integrity.

5. **Rerank Shortlisted Moves:**

- **1. g8f6**: Best for development and central control.

- **2. c7c5**: Strong challenge to the center.

- **3. h7h6**: Defensive but adds limited value immediately.

With that in mind, I’ll proceed with the development strategy.

Action: `make_move g8f6`

--------------------------------------------------------------------------------

Proxy (to Player_Black):

Move made, switching player

Prompt:

Please name chess pieces and their colors.

♕ ♔ ♗ ♘ ♖ ♙

♛ ♚ ♝ ♞ ♜ ♟

Here are the chess pieces and their colors:

White pieces: ♕ Queen ♔ King ♗ Bishop ♘ Knight ♖ Rook ♙ Pawn

Black pieces: ♛ Queen ♚ King ♝ Bishop ♞ Knight ♜ Rook ♟ Pawn

In a standard chess set, one player uses the white pieces, and the other player uses the black pieces. Each side starts with 16 pieces: 1 king, 1 queen, 2 rooks, 2 knights, 2 bishops, and 8 pawns.

Here are the chess pieces and their colors:

**White Pieces:**

* ♕ Queen

* ♔ King

* ♗ Bishop

* ♘ Knight

* ♖ Rook

* ♙ Pawn

**Black Pieces:**

* ♛ Queen

* ♚ King

* ♝ Bishop

* ♞ Knight

* ♜ Rook

* ♟ Pawn

White Pieces

♕: White Queen

♔: White King

♗: White Bishop

♘: White Knight

♖: White Rook

♙: White Pawn

Black Pieces

♛: Black Queen

♚: Black King

♝: Black Bishop

♞: Black Knight

♜: Black Rook

♟: Black Pawn

In chess, the pieces are typically divided into two colors: white and black. Here’s a breakdown of the pieces and their corresponding colors:

White Pieces:

♕ - White Queen

♔ - White King

♗ - White Bishop

♘ - White Knight

♖ - White Rook

♙ - White Pawn

Black Pieces:

♛ - Black Queen

♚ - Black King

♝ - Black Bishop

♞ - Black Knight

♜ - Black Rook

♟ - Black Pawn

Each player controls one color and aims to checkmate the opponent's king.

- Validate that Win/Draw rates are representative of reasoning abilities

- Currently there's a threshold of 0 wins which was reliably beaten only by OpenAI o1 models and Deepseek R1 - the models generally well accepted by the public as special and overperforming models

- This supports that chess proficiency is a good indicator of reasoning, the binary behavior - either you step over the threshold or stay behind it, either you do some reasoning or keep generating text converting input to output.

- Cross-check with other benchmarks

- I see alignment of instruction following evaluation with other benches

- E.g. ComplexFuncBench has this distinctive split between OS and Closed models with the latter doing much better complying with the prompt

ComplexFuncBench is designed to test complex function calling evaluation with over 1,000 real-world scenarios from five aspects. Function calling with: 1️⃣ multiple-step in single-turn 2️⃣ User-provided constraints 3️⃣ Parameter value reasoning from implicit information 4️⃣ Long parameter values that exceed 500 tokens 5️⃣ 128k long-context length. Insights: 🏆 Claude 3.5 61%; OpenAI 4o 60.5%; Qwen 2.5 72B 40%; Llama 3.1 70B 2.7% 📉 Open LLMs underperform compared to closed models ⛔ Value Errors are a major error type 🛑 Early stop in multi-step calls, failing to complete all necessary functions. 🧩 keys like 'filter' and 'legs' are more challenging Paper: https://lnkd.in/eTmf4RPc Github: https://lnkd.in/eKaSG6KR

- Rerun AIW Benchmark (https://github.com/LAION-AI/AIW?tab=readme-ov-file) for more recent models and cross-check results - a brainteaser question that led to a breakdown of older Claude 3 and GPT-4

- Correlate with TAU-Bench (https://github.com/sierra-research/tau-bench)

- Better study and describe stochasticity. Randomization of games makes the bench unique, no memorization can happen, every game is different

- Something similar to GSM Symbolic observations, adding mutations to a well-known math bench demonstrates how models struggle and pass rate drops: https://machinelearning.apple.com/research/gsm-symbolic

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llm_chess

Similar Open Source Tools

llm_chess

llm_chess is a tool designed to put Large Language Models (LLMs) against a Random Player in a chess game to test basic instruction following capabilities and chess proficiency. The tool sets constraints for the game, determines win/loss conditions, and handles exceptions/execution errors. Users can run games between LLMs and random players, configure player types, and analyze game results. The tool also supports running multiple games, processing logs, and preparing data for web visualization. It provides insights into player performance, model behavior, and future ideas for validation and benchmarking.

seahorse

A handy package for kickstarting AI contests. This Python framework simplifies the creation of an environment for adversarial agents, offering various functionalities for game setup, playing against remote agents, data generation, and contest organization. The package is user-friendly and provides easy-to-use features out of the box. Developed by an enthusiastic team of M.Sc candidates at Polytechnique Montréal, 'seahorse' is distributed under the 3-Clause BSD License.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

GEN-AI

GEN-AI is a versatile Python library for implementing various artificial intelligence algorithms and models. It provides a wide range of tools and functionalities to support machine learning, deep learning, natural language processing, computer vision, and reinforcement learning tasks. With GEN-AI, users can easily build, train, and deploy AI models for diverse applications such as image recognition, text classification, sentiment analysis, object detection, and game playing. The library is designed to be user-friendly, efficient, and scalable, making it suitable for both beginners and experienced AI practitioners.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

pdr_ai_v2

pdr_ai_v2 is a Python library for implementing machine learning algorithms and models. It provides a wide range of tools and functionalities for data preprocessing, model training, evaluation, and deployment. The library is designed to be user-friendly and efficient, making it suitable for both beginners and experienced data scientists. With pdr_ai_v2, users can easily build and deploy machine learning models for various applications, such as classification, regression, clustering, and more.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

RAGElo

RAGElo is a streamlined toolkit for evaluating Retrieval Augmented Generation (RAG)-powered Large Language Models (LLMs) question answering agents using the Elo rating system. It simplifies the process of comparing different outputs from multiple prompt and pipeline variations to a 'gold standard' by allowing a powerful LLM to judge between pairs of answers and questions. RAGElo conducts tournament-style Elo ranking of LLM outputs, providing insights into the effectiveness of different settings.

build-your-own-x-machine-learning

This repository provides a step-by-step guide for building your own machine learning models from scratch. It covers various machine learning algorithms and techniques, including linear regression, logistic regression, decision trees, and neural networks. The code examples are written in Python and include detailed explanations to help beginners understand the concepts behind machine learning. By following the tutorials in this repository, you can gain a deeper understanding of how machine learning works and develop your own models for different applications.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

BrowserGym

BrowserGym is an open, easy-to-use, and extensible framework designed to accelerate web agent research. It provides benchmarks like MiniWoB, WebArena, VisualWebArena, WorkArena, AssistantBench, and WebLINX. Users can design new web benchmarks by inheriting the AbstractBrowserTask class. The tool allows users to install different packages for core functionalities, experiments, and specific benchmarks. It supports the development setup and offers boilerplate code for running agents on various tasks. BrowserGym is not a consumer product and should be used with caution.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

MaiBot

MaiBot is an intelligent QQ group chat bot based on a large language model. It is built on the nonebot2 framework, with an LLM providing conversational ability, MongoDB providing data persistence, and NapCat serving as the QQ protocol endpoint. The project is under active development, with features such as chat, emoji, schedule management, memory, knowledge base, and relationship functions planned for future updates. It aims to create a 'life form' that is active in QQ group chats, focusing on companionship and a more human-like presence rather than on being a perfect assistant. Because the application generates content from AI models, users are advised to assess that content carefully and not to use it for illegal purposes.

simple-ai

Simple AI is a lightweight Python library for implementing basic artificial intelligence algorithms. It provides easy-to-use functions and classes for tasks such as machine learning, natural language processing, and computer vision. With Simple AI, users can quickly prototype and deploy AI solutions without the complexity of larger frameworks.

God-Level-AI

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This repository is designed for individuals aiming to excel in the field of Data and AI, providing video sessions and text content for learning. It caters to those in leadership positions, professionals, and students, emphasizing the need for dedicated effort to achieve excellence in the tech field. The content covers various topics with a focus on practical application.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.