pyllms

Minimal Python library to connect to LLMs (OpenAI, Anthropic, Google, Groq, Reka, Together, AI21, Cohere, Aleph Alpha, HuggingfaceHub), with a built-in model performance benchmark.

Stars: 734

PyLLMs is a minimal Python library for connecting to large language models (LLMs) from providers such as OpenAI, Anthropic, Google, AI21, Cohere, Aleph Alpha, and HuggingfaceHub. It includes a built-in model performance benchmark for fast prototyping and for comparing models. Users can connect to top LLMs in a few lines of code, get completions from multiple models simultaneously, and evaluate models on quality, speed, and cost. The library supports asynchronous completion, streaming from compatible models, and multi-model initialization for testing and comparison. It also supports passing chat history and system messages, and counting tokens.

README:

PyLLMs is a minimal Python library to connect to various Language Models (LLMs) with a built-in model performance benchmark.

- Features

- Installation

- Quick Start

- Usage

- Configuration

- Model Benchmarks

- Supported Models

- Advanced Usage

- Contributing

- License

Features:

- Connect to top LLMs in a few lines of code

- Response meta includes tokens processed, cost, and latency standardized across models

- Multi-model support: Get completions from different models simultaneously

- LLM benchmark: Evaluate models on quality, speed, and cost

- Async and streaming support for compatible models
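As a generic illustration of the multi-model idea in the list above, the sketch below sends one prompt to several models concurrently and records a per-model latency. It does not use PyLLMs and is not the library's implementation: `fake_model`, `complete_all`, and the model names are hypothetical stand-ins that only sleep and echo the prompt.

```python
import asyncio
import time


async def fake_model(name: str, delay: float, prompt: str) -> str:
    """Hypothetical stand-in for a provider call; it only sleeps and echoes."""
    await asyncio.sleep(delay)
    return f"{name}: echo of {prompt!r}"


async def complete_all(prompt: str, models: dict[str, float]) -> list[dict]:
    """Send one prompt to every stand-in model concurrently."""

    async def timed(name: str, delay: float) -> dict:
        start = time.perf_counter()
        text = await fake_model(name, delay, prompt)
        return {"model": name, "text": text, "latency": time.perf_counter() - start}

    # gather() returns results in the same order as its arguments
    return await asyncio.gather(*(timed(n, d) for n, d in models.items()))


# Model names and delays are made up for the example.
results = asyncio.run(complete_all("What is 5+5?", {"model-a": 0.02, "model-b": 0.01}))
for r in results:
    print(r["model"], round(r["latency"], 3))
```

Because the calls run concurrently, the total wall time is roughly that of the slowest model rather than the sum of all of them.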

Install the package using pip:

pip install pyllms

Quick start:

import llms

model = llms.init('gpt-4o')

result = model.complete("What is 5+5?")

print(result.text)

Basic usage with generation parameters:

import llms

model = llms.init('gpt-4o')

result = model.complete(

"What is the capital of the country where Mozart was born?",

temperature=0.1,

max_tokens=200

)

print(result.text)

print(result.meta)

To get completions from several models at once, pass a list of model names:

models = llms.init(model=['gpt-3.5-turbo', 'claude-instant-v1'])

result = models.complete('What is the capital of the country where Mozart was born?')

print(result.text)

print(result.meta)

Async completion (call from within an async function):

result = await model.acomplete("What is the capital of the country where Mozart was born?")

Streaming from compatible models:

model = llms.init('claude-v1')

result = model.complete_stream("Write an essay on the Civil War")

for chunk in result.stream:

    if chunk is not None:

        print(chunk, end='')

Chat history and system messages:

history = []

history.append({"role": "user", "content": user_input})

history.append({"role": "assistant", "content": result.text})

model.complete(prompt=prompt, history=history)

# For OpenAI chat models

model.complete(prompt=prompt, system_message=system, history=history)

Counting tokens:

count = model.count_tokens('The quick brown fox jumped over the lazy dog')

PyLLMs will attempt to read API keys and the default model from environment variables. You can set them like this:

export OPENAI_API_KEY="your_api_key_here"

export ANTHROPIC_API_KEY="your_api_key_here"

export AI21_API_KEY="your_api_key_here"

export COHERE_API_KEY="your_api_key_here"

export ALEPHALPHA_API_KEY="your_api_key_here"

export HUGGINFACEHUB_API_KEY="your_api_key_here"

export GOOGLE_API_KEY="your_api_key_here"

export MISTRAL_API_KEY="your_api_key_here"

export REKA_API_KEY="your_api_key_here"

export TOGETHER_API_KEY="your_api_key_here"

export GROQ_API_KEY="your_api_key_here"

export DEEPSEEK_API_KEY="your_api_key_here"

export LLMS_DEFAULT_MODEL="gpt-3.5-turbo"

Alternatively, you can pass initialization values to the init() method, either as a dict:

model = llms.init(model={'gpt-4': {'api_key': 'your_api_key_here'}})

Or as a tuple:

model = llms.init(model=('gpt-4', {'api_key': 'your_api_key_here'}))

If you want multiple models, add more dict keys or use a list of tuples:

model = llms.init(model={'gpt-4': {'api_key': 'your_api_key_here'}, 'deepseek-chat': {'api_key': 'your_api_key_here'}})

# or

model = llms.init(model=[('gpt-4', {'api_key': 'your_api_key_here'}), ('deepseek-chat', {'api_key': 'your_api_key_here'})])

PyLLMs includes an automated benchmark system. Model quality is evaluated by a powerful model (e.g., GPT-4) on a range of predefined questions, or you can supply your own.

models = llms.init(model=['claude-3-haiku-20240307', 'gpt-4o-mini', 'claude-3-5-sonnet-20240620', 'gpt-4o', 'mistral-large-latest', 'open-mistral-nemo', 'gpt-4', 'gpt-3.5-turbo', 'deepseek-coder', 'deepseek-chat', 'llama-3.1-8b-instant', 'llama-3.1-70b-versatile'])

gpt4 = llms.init('gpt-4o')

models.benchmark(evaluator=gpt4)

Check the Kagi LLM Benchmarking Project for the latest benchmarks!
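To make the quality, speed, and cost comparison concrete, here is a minimal sketch of ranking benchmark rows once they have been collected. It is independent of PyLLMs: the `BenchmarkRow` fields, the `rank` helper, the model names, and all numbers are hypothetical placeholders, not measured results or the library's output format.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkRow:
    model: str
    quality: float  # fraction of answers judged correct, 0..1
    latency: float  # seconds per completion
    cost: float     # dollars for the whole run


def rank(rows: list[BenchmarkRow]) -> list[BenchmarkRow]:
    """Order rows by quality (high first), then latency and cost (low first)."""
    return sorted(rows, key=lambda r: (-r.quality, r.latency, r.cost))


# Placeholder numbers for illustration only; not measured results.
rows = [
    BenchmarkRow("model-a", quality=0.5, latency=2.0, cost=0.02),
    BenchmarkRow("model-b", quality=1.0, latency=3.0, cost=0.05),
    BenchmarkRow("model-c", quality=1.0, latency=1.0, cost=0.01),
]
for row in rank(rows):
    print(row.model, row.quality, row.latency, row.cost)
```

Sorting on a tuple keeps the priority explicit: quality decides first, and latency and cost only break ties. A weighted score would be a reasonable alternative if the three criteria should trade off against each other.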

To evaluate models on your own prompts:

models.benchmark(prompts=[("What is the capital of Finland?", "Helsinki")], evaluator=gpt4)

To get a full list of supported models:

model = llms.init()

model.list() # list all models

model.list("gpt")  # list only models with 'gpt' in the model or provider name

Currently supported models (may be outdated):

| Provider | Models |
|---|---|
| OpenAIProvider | gpt-3.5-turbo, gpt-3.5-turbo-1106, gpt-3.5-turbo-instruct, gpt-4, gpt-4-1106-preview, gpt-4-turbo-preview, gpt-4-turbo, gpt-4o, gpt-4o-mini, gpt-4o-2024-08-06, o1-preview, o1-mini, o1 |
| AnthropicProvider | claude-instant-v1.1, claude-instant-v1, claude-v1, claude-v1-100k, claude-instant-1, claude-instant-1.2, claude-2.1, claude-3-haiku-20240307, claude-3-sonnet-20240229, claude-3-opus-20240229, claude-3-5-sonnet-20240620, claude-3-5-sonnet-20241022 |
| BedrockAnthropicProvider | anthropic.claude-instant-v1, anthropic.claude-v1, anthropic.claude-v2, anthropic.claude-3-haiku-20240307-v1:0, anthropic.claude-3-sonnet-20240229-v1:0, anthropic.claude-3-5-sonnet-20240620-v1:0 |
| AI21Provider | j2-grande-instruct, j2-jumbo-instruct |
| CohereProvider | command, command-nightly |
| AlephAlphaProvider | luminous-base, luminous-extended, luminous-supreme, luminous-supreme-control |
| HuggingfaceHubProvider | hf_pythia, hf_falcon40b, hf_falcon7b, hf_mptinstruct, hf_mptchat, hf_llava, hf_dolly, hf_vicuna |
| GoogleGenAIProvider | chat-bison-genai, text-bison-genai, gemini-1.5-pro, gemini-1.5-pro-latest, gemini-1.5-flash, gemini-1.5-flash-latest, gemini-1.5-pro-exp-0801 |
| GoogleProvider | chat-bison, text-bison, text-bison-32k, code-bison, code-bison-32k, codechat-bison, codechat-bison-32k, gemini-pro, gemini-1.5-pro-preview-0514, gemini-1.5-flash-preview-0514 |
| OllamaProvider | vanilj/Phi-4:latest, falcon3:10b, smollm2:latest, llama3.2:3b-instruct-q8_0, qwen2:1.5b, mistral:7b-instruct-v0.2-q4_K_S, phi3:latest, phi3:3.8b, phi:latest, tinyllama:latest, magicoder:latest, deepseek-coder:6.7b, deepseek-coder:latest, dolphin-phi:latest, stablelm-zephyr:latest |
| DeepSeekProvider | deepseek-chat, deepseek-coder |
| GroqProvider | llama-3.1-405b-reasoning, llama-3.1-70b-versatile, llama-3.1-8b-instant, gemma2-9b-it |
| RekaProvider | reka-edge, reka-flash, reka-core |
| TogetherProvider | meta-llama/Meta-Llama-3.1-405B-Instruct-Turbo |
| OpenRouterProvider | nvidia/llama-3.1-nemotron-70b-instruct, x-ai/grok-2, nousresearch/hermes-3-llama-3.1-405b:free, google/gemini-flash-1.5-exp, liquid/lfm-40b, mistralai/ministral-8b, qwen/qwen-2.5-72b-instruct |
| MistralProvider | mistral-tiny, open-mistral-7b, mistral-small, open-mixtral-8x7b, mistral-small-latest, mistral-medium-latest, mistral-large-latest, open-mistral-nemo |
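The substring filter described for `model.list("gpt")` can be pictured with a small standalone sketch. This is not the library's code: `CATALOG` is a hand-copied excerpt of the table above, and `find_models` is a hypothetical helper that matches a query against both the model name and the provider name, case-insensitively.

```python
# Excerpt of the provider table above, copied by hand for illustration.
CATALOG = {
    "OpenAIProvider": ["gpt-4o", "gpt-4o-mini"],
    "DeepSeekProvider": ["deepseek-chat", "deepseek-coder"],
    "RekaProvider": ["reka-edge", "reka-flash", "reka-core"],
}


def find_models(catalog: dict[str, list[str]], query: str = "") -> list[tuple[str, str]]:
    """Return (provider, model) pairs whose model or provider name contains query."""
    q = query.lower()
    return [
        (provider, model)
        for provider, models in catalog.items()
        for model in models
        if q in model.lower() or q in provider.lower()
    ]


print(find_models(CATALOG, "gpt"))     # matches on the model name
print(find_models(CATALOG, "openai"))  # matches on the provider name
print(len(find_models(CATALOG)))       # an empty query matches everything
```

Matching on the provider name as well means a query such as "openai" finds models whose own names never mention the provider.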

To use the OpenAI API on Azure:

import llms

AZURE_API_BASE = "{insert here}"

AZURE_API_KEY = "{insert here}"

model = llms.init('gpt-4')

azure_args = {

    "engine": "gpt-4",  # Azure deployment_id

    "api_base": AZURE_API_BASE,

    "api_type": "azure",

    "api_version": "2023-05-15",

    "api_key": AZURE_API_KEY,

}

azure_result = model.complete("What is 5+5?", **azure_args)

To use Google Vertex AI models:

- Set up a GCP account and create a project

- Enable Vertex AI APIs in your GCP project

- Install gcloud CLI tool

- Set up Application Default Credentials

Then:

model = llms.init('chat-bison')

result = model.complete("Hello!")

To use local Ollama models:

- Ensure Ollama is running and you've pulled the desired model

- Get the name of the LLM you want to use

- Initialize PyLLMs:

model = llms.init("tinyllama:latest")

result = model.complete("Hello!")

Contributions are welcome! Please feel free to submit a Pull Request.

This project is licensed under the MIT License. See the LICENSE file for details.

Alternative AI tools for pyllms

Similar Open Source Tools

AnglE

AnglE is a library for training state-of-the-art BERT/LLM-based sentence embeddings with just a few lines of code. It also serves as a general sentence embedding inference framework, allowing for inferring a variety of transformer-based sentence embeddings. The library supports various loss functions such as AnglE loss, Contrastive loss, CoSENT loss, and Espresso loss. It provides backbones like BERT-based models, LLM-based models, and Bi-directional LLM-based models for training on single or multi-GPU setups. AnglE has achieved significant performance on various benchmarks and offers official pretrained models for both BERT-based and LLM-based models.

candle-vllm

Candle-vllm is an efficient and easy-to-use platform designed for inference and serving local LLMs, featuring an OpenAI compatible API server. It offers a highly extensible trait-based system for rapid implementation of new module pipelines, streaming support in generation, efficient management of key-value cache with PagedAttention, and continuous batching. The tool supports chat serving for various models and provides a seamless experience for users to interact with LLMs through different interfaces.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

ai

The react-native-ai repository allows users to run Large Language Models (LLM) locally in a React Native app using the Universal MLC LLM Engine with compatibility for Vercel AI SDK. Please note that this project is experimental and not ready for production. The repository is licensed under MIT and was created with create-react-native-library.

Q-Bench

Q-Bench is a benchmark for general-purpose foundation models on low-level vision, focusing on multi-modality LLMs performance. It includes three realms for low-level vision: perception, description, and assessment. The benchmark datasets LLVisionQA and LLDescribe are collected for perception and description tasks, with open submission-based evaluation. An abstract evaluation code is provided for assessment using public datasets. The tool can be used with the datasets API for single images and image pairs, allowing for automatic download and usage. Various tasks and evaluations are available for testing MLLMs on low-level vision tasks.

browser-ai

Browser AI is a TypeScript library that provides access to in-browser AI model providers with seamless fallback to server-side models. It offers different packages for Chrome/Edge built-in browser AI models, open-source models via WebLLM, and 🤗 Transformers.js models. The library simplifies the process of integrating AI models into web applications by handling the complexities of custom hooks, UI components, state management, and compatibility with server-side models.

ai-gradio

ai-gradio is a Python package that simplifies the creation of machine learning apps using various models like OpenAI, Google's Gemini, Anthropic's Claude, LumaAI, CrewAI, XAI's Grok, and Hyperbolic. It provides easy installation with support for different providers and offers features like text chat, voice chat, video chat, code generation interfaces, and AI agent teams. Users can set API keys for different providers and customize interfaces for specific tasks.

cellseg_models.pytorch

cellseg-models.pytorch is a Python library built upon PyTorch for 2D cell/nuclei instance segmentation models. It provides multi-task encoder-decoder architectures and post-processing methods for segmenting cell/nuclei instances. The library offers high-level API to define segmentation models, open-source datasets for training, flexibility to modify model components, sliding window inference, multi-GPU inference, benchmarking utilities, regularization techniques, and example notebooks for training and finetuning models with different backbones.

ruby_llm-agents

RubyLLM::Agents is a production-ready Rails engine for building, managing, and monitoring LLM-powered AI agents. It seamlessly integrates with Rails apps, providing features like automatic execution tracking, cost analytics, budget controls, and a real-time dashboard. Users can build intelligent AI agents in Ruby using a clean DSL and support various LLM providers like OpenAI GPT-4, Anthropic Claude, and Google Gemini. The engine offers features such as agent DSL configuration, execution tracking, cost analytics, reliability with retries and fallbacks, budget controls, multi-tenancy support, async execution with Ruby fibers, real-time dashboard, streaming, conversation history, image operations, alerts, and more.

rank_llm

RankLLM is a suite of prompt-decoders compatible with open source LLMs like Vicuna and Zephyr. It allows users to create custom ranking models for various NLP tasks, such as document reranking, question answering, and summarization. The tool offers a variety of features, including the ability to fine-tune models on custom datasets, use different retrieval methods, and control the context size and variable passages. RankLLM is easy to use and can be integrated into existing NLP pipelines.

UMbreLLa

UMbreLLa is a tool designed for deploying Large Language Models (LLMs) for personal agents. It combines offloading, speculative decoding, and quantization to optimize single-user LLM deployment scenarios. With UMbreLLa, 70B-level models can achieve performance comparable to human reading speed on an RTX 4070Ti, delivering exceptional efficiency and responsiveness, especially for coding tasks. The tool supports deploying models on various GPUs and offers features like code completion and CLI/Gradio chatbots. Users can configure the LLM engine for optimal performance based on their hardware setup.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

TempCompass

TempCompass is a benchmark designed to evaluate the temporal perception ability of Video LLMs. It encompasses a diverse set of temporal aspects and task formats to comprehensively assess the capability of Video LLMs in understanding videos. The benchmark includes conflicting videos to prevent models from relying on single-frame bias and language priors. Users can clone the repository, install required packages, prepare data, run inference using examples like Video-LLaVA and Gemini, and evaluate the performance of their models across different tasks such as Multi-Choice QA, Yes/No QA, Caption Matching, and Caption Generation.

functionary

Functionary is a language model that interprets and executes functions/plugins. It determines when to execute functions, whether in parallel or serially, and understands their outputs. Function definitions are given as JSON Schema Objects, similar to OpenAI GPT function calls. It offers documentation and examples on functionary.meetkai.com. The newest model, meetkai/functionary-medium-v3.1, is ranked 2nd in the Berkeley Function-Calling Leaderboard. Functionary supports models with different context lengths and capabilities for function calling and code interpretation. It also provides grammar sampling for accurate function and parameter names. Users can deploy Functionary models serverlessly using Modal.com.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is in open beta. Key features include:

- Session replays in 3 lines of code: initialize the AgentOps client and automatically get analytics on every LLM call.

- Time travel debugging (coming soon)

- Agent Arena (coming soon)

- Callback handlers: AgentOps works seamlessly with applications built using Langchain and LlamaIndex.

For similar tasks

pyllms

PyLLMs is a minimal Python library designed to connect to various Language Model Models (LLMs) such as OpenAI, Anthropic, Google, AI21, Cohere, Aleph Alpha, and HuggingfaceHub. It provides a built-in model performance benchmark for fast prototyping and evaluating different models. Users can easily connect to top LLMs, get completions from multiple models simultaneously, and evaluate models on quality, speed, and cost. The library supports asynchronous completion, streaming from compatible models, and multi-model initialization for testing and comparison. Additionally, it offers features like passing chat history, system messages, counting tokens, and benchmarking models based on quality, speed, and cost.

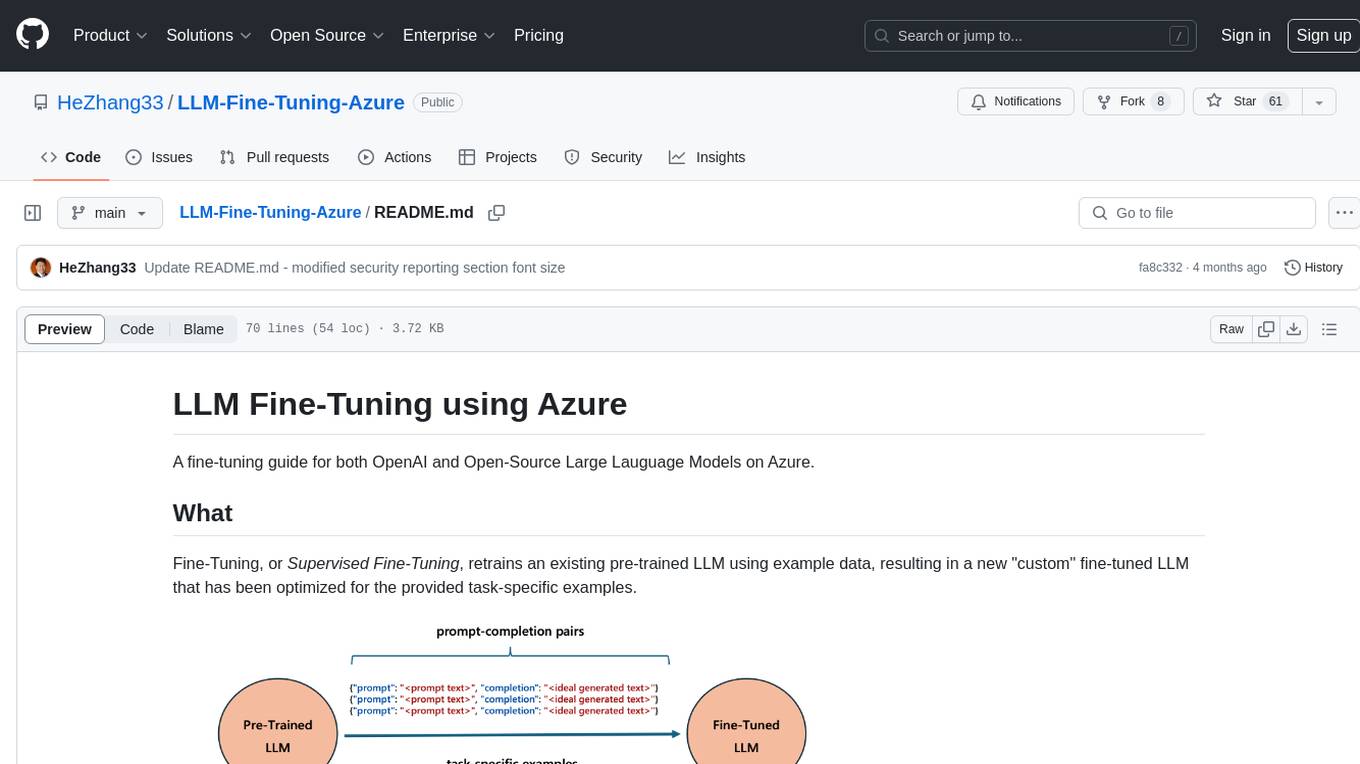

LLM-Fine-Tuning-Azure

A fine-tuning guide for both OpenAI and Open-Source Large Language Models on Azure. Fine-Tuning retrains an existing pre-trained LLM using example data, resulting in a new 'custom' fine-tuned LLM optimized for task-specific examples. Use cases include improving LLM performance on specific tasks and introducing information not well represented by the base LLM model. Suitable for cases where latency is critical, high accuracy is required, and clear evaluation metrics are available. Learning path includes labs for fine-tuning GPT and Llama2 models via Dashboards and Python SDK.

cellseg_models.pytorch

cellseg-models.pytorch is a Python library built upon PyTorch for 2D cell/nuclei instance segmentation models. It provides multi-task encoder-decoder architectures and post-processing methods for segmenting cell/nuclei instances. The library offers high-level API to define segmentation models, open-source datasets for training, flexibility to modify model components, sliding window inference, multi-GPU inference, benchmarking utilities, regularization techniques, and example notebooks for training and finetuning models with different backbones.

awesome-mobile-llm

Awesome Mobile LLMs is a curated list of Large Language Models (LLMs) and related studies focused on mobile and embedded hardware. The repository includes information on various LLM models, deployment frameworks, benchmarking efforts, applications, multimodal LLMs, surveys on efficient LLMs, training LLMs on device, mobile-related use-cases, industry announcements, and related repositories. It aims to be a valuable resource for researchers, engineers, and practitioners interested in mobile LLMs.

Medical_Image_Analysis

The Medical_Image_Analysis repository focuses on X-ray image-based medical report generation using large language models. It provides pre-trained models and benchmarks for CheXpert Plus dataset, context sample retrieval for X-ray report generation, and pre-training on high-definition X-ray images. The goal is to enhance diagnostic accuracy and reduce patient wait times by improving X-ray report generation through advanced AI techniques.

AngelSlim

AngelSlim is a comprehensive and efficient large model compression toolkit designed to be user-friendly. It integrates mainstream compression algorithms for easy one-click access, continuously innovates compression algorithms, and optimizes end-to-end performance in model compression and deployment. It supports various models for quantization and speculative sampling, with a focus on performance optimization and ease of use.

speculators

Speculators is a unified library for building, training, and storing speculative decoding algorithms for large language model (LLM) inference. It speeds up LLM inference by using a smaller, faster draft model (the speculator) to propose tokens, which are then verified by the larger base model, reducing latency without compromising output quality. Trained models can seamlessly run in vLLM, enabling the deployment of speculative decoding in production-grade inference servers.

multilspy

Multilspy is a Python library developed for research purposes to facilitate the creation of language server clients for querying and obtaining results of static analyses from various language servers. It simplifies the process by handling server setup, communication, and configuration parameters, providing a common interface for different languages. The library supports features like finding function/class definitions, callers, completions, hover information, and document symbols. It is designed to work with AI systems like Large Language Models (LLMs) for tasks such as Monitor-Guided Decoding to ensure code generation correctness and boost compilability.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.