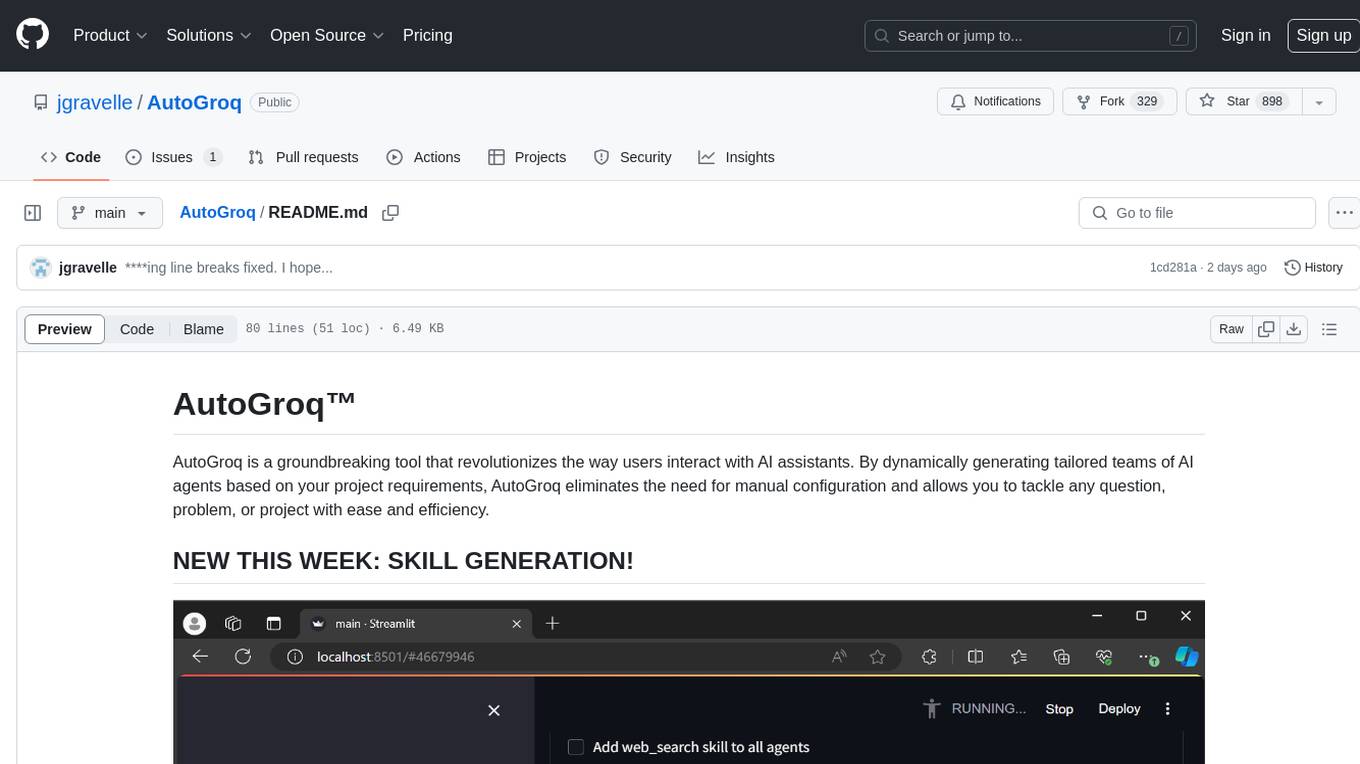

AutoGroq

AutoGroq is a groundbreaking tool that revolutionizes the way users interact with Autogen™ and other AI assistants. By dynamically generating tailored teams of AI agents based on your project requirements, AutoGroq eliminates the need for manual configuration and allows you to tackle any question, problem, or project with ease and efficiency.

Stars: 1191

AutoGroq is a revolutionary tool that dynamically generates tailored teams of AI agents based on project requirements, eliminating manual configuration. It enables users to effortlessly tackle questions, problems, and projects by creating expert agents, workflows, and skillsets with ease and efficiency. With features like natural conversation flow, code snippet extraction, and support for multiple language models, AutoGroq offers a seamless and intuitive AI assistant experience for developers and users.

README:

AutoGroq is a groundbreaking tool that revolutionizes the way users interact with AI assistants. By dynamically generating tailored teams of AI agents based on your project requirements, AutoGroq eliminates the need for manual configuration and allows you to tackle any question, problem, or project with ease and efficiency.

AutoGroq was born out of the realization that the traditional approach to building AI agents was backwards. Instead of creating agents in anticipation of problems, AutoGroq uses the syntax of the users' needs as the basis for constructing the perfect AI team. It's how we wished Autogen worked from the very beginning.

With AutoGroq, a fully configured workflow, team of agents, and skillset are just a few clicks and a couple of minutes away, without any programming necessary. Our rapidly growing user base of nearly 8000 developers is a testament to the power and effectiveness of AutoGroq.

- Dynamic Expert Agent Generation: AutoGroq automatically creates expert agents specialized in various domains or topics, ensuring you receive the most relevant support for your inquiries.

- Dynamic Workflow Generation: With AutoGroq, you're just minutes away from having a custom team of experts working on your project. Watch our video tutorial to see it in action!

- Natural Conversation Flow: Engage in intuitive and contextually aware conversations with AutoGroq's expert agents, facilitating a seamless exchange of information.

- Code Snippet Extraction: AutoGroq intelligently extracts and presents code snippets within a dedicated "Whiteboard" section, making it convenient to reference, copy, or modify code during your interaction.

- Flexible Agent Management: Customize your panel of expert agents according to your evolving project needs. Add new agents, modify their expertise, or remove them as required.

- Advanced Prompt Rephrasing: AutoGroq employs sophisticated natural language processing techniques to rephrase user inputs, enhancing clarity and ensuring accurate responses from expert agents.

- Bulk File Upload to Autogen: With AutoGroq, you can import multiple agents, skills, and workflows into Autogen with a single click, saving you time and effort.

- Support for Multiple LLMs: AutoGroq supports Groq, ChatGPT, Ollama, and more, making it compatible with a wide range of language models. You can even create your own provider model to integrate with your preferred LLM.

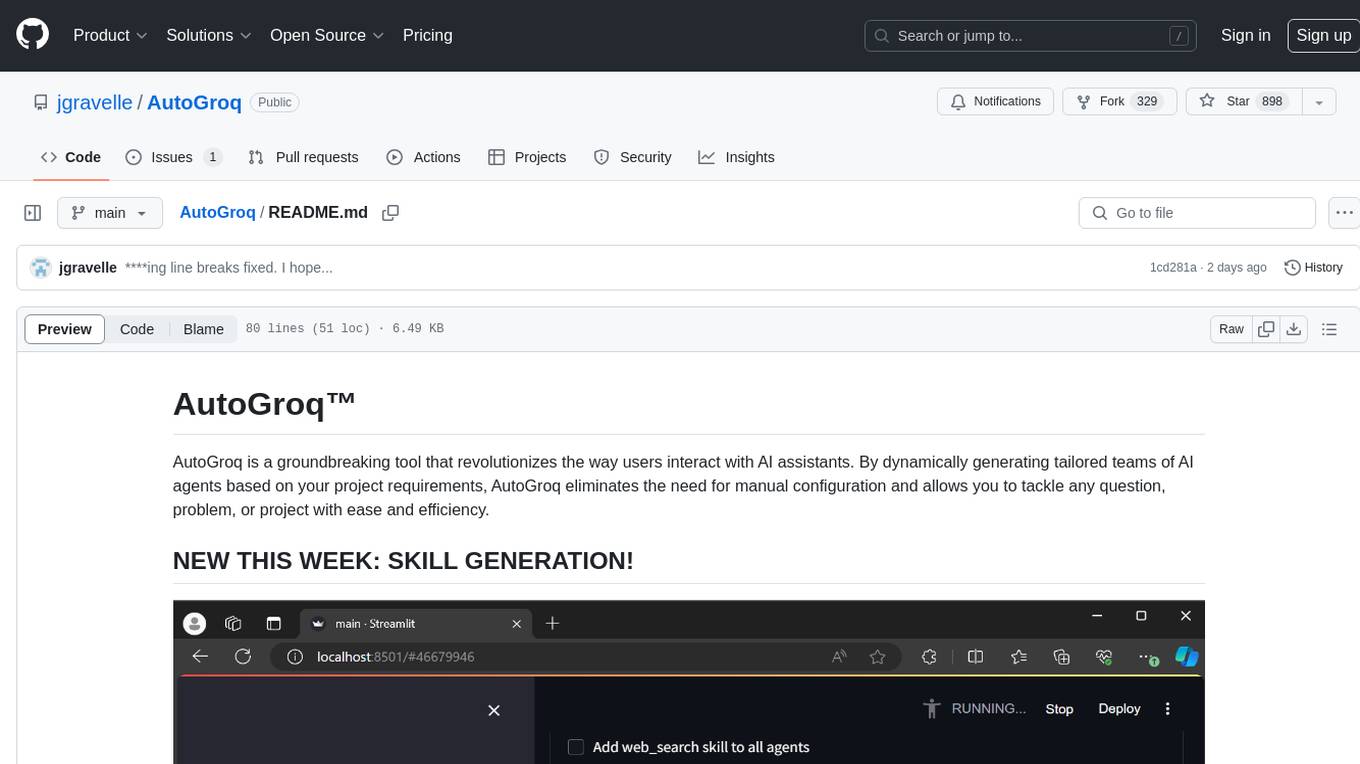

- Skill Integration: Extend your agents' capabilities by adding custom skills. Simply drop a valid skill file into the skills folder, and it will be automatically available for your agents to use.
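The exact contract a skill file must satisfy is not spelled out here. As a rough sketch, assuming a skill is a single Python module that exposes one documented function, a file dropped into the skills folder might look like this. The file name `count_words.py` and the function itself are hypothetical illustrations, not part of AutoGroq.

```python
# skills/count_words.py  (hypothetical file name and location)
# Assumption: a "valid skill file" is a plain Python module exposing
# one self-contained, documented function that an agent can call.


def count_words(text: str) -> dict:
    """Return basic statistics about a piece of text.

    The keys of the returned dictionary are illustrative only.
    """
    words = text.split()
    return {
        "words": len(words),
        "characters": len(text),
        # Case-insensitive count of distinct words.
        "unique_words": len({w.lower() for w in words}),
    }


if __name__ == "__main__":
    print(count_words("the quick The"))
```

Keeping a skill free of side effects and third-party imports, as in this sketch, makes it easy to test on its own before handing it to an agent.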

To get started with AutoGroq, follow these steps:

- Install Autogen following Matt Berman's instructions: https://www.youtube.com/watch?v=mUEFwUU0IfE

- Install Miniconda: https://docs.anaconda.com/free/miniconda/miniconda-install/

- Open a command prompt and run the following commands:

md c:\AutoGroq

cd c:\AutoGroq

conda create -n AutoGroq python=3.11

conda activate AutoGroq

git clone https://github.com/jgravelle/AutoGroq.git

cd AutoGroq

pip install -r requirements.txt

streamlit run c:\AutoGroq\AutoGroq\main.py
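If the app fails to start, a quick check of the active conda environment can narrow down the cause. The following is a minimal sketch and not part of AutoGroq: it only verifies the Python version named in the commands above (3.11) and that the `streamlit` package is importable. The function name `check_environment` is a hypothetical helper.

```python
import importlib.util
import sys


def check_environment(required=("streamlit",), min_version=(3, 11)):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    # Compare the running interpreter against the minimum expected version.
    if sys.version_info < min_version:
        found = ".".join(str(part) for part in sys.version_info[:3])
        wanted = ".".join(str(part) for part in min_version)
        problems.append(f"Python {wanted}+ expected, found {found}")
    # find_spec returns None when a top-level package cannot be located.
    for name in required:
        if importlib.util.find_spec(name) is None:
            problems.append(f"missing package: {name}")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("Environment looks ready." if not issues else "\n".join(issues))
```

Run it with the AutoGroq environment activated; any line it prints points at a step that needs repeating.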

To customize the configurations for your local environment, follow these steps:

- Create a new file called config_local.py in the same directory as config.py.

- Copy the contents of config_local.py.example into config_local.py.

- Modify the values in config_local.py according to your specific setup, such as API keys and URLs.

- Save the config_local.py file.

Note: The config_local.py file is not tracked by Git, so your customizations will not be overwritten when pulling updates from the repository.
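How config.py picks up the values in config_local.py is not shown here. One common pattern, assumed in the sketch below, is to start from tracked defaults and overlay any upper-case names found in an optional local module. The setting names `API_URL` and `API_KEY`, the example URL, and the `load_settings` helper are all hypothetical.

```python
import importlib

# Hypothetical defaults standing in for the values tracked in config.py.
DEFAULTS = {
    "API_URL": "https://api.example.com/v1",
    "API_KEY": None,
}


def load_settings(local_module_name="config_local"):
    """Return the defaults, overridden by an optional local module."""
    settings = dict(DEFAULTS)
    try:
        local = importlib.import_module(local_module_name)
    except ImportError:
        # No local overrides present: fall back to the tracked defaults.
        return settings
    for name in dir(local):
        # Treat only UPPER_CASE names as settings; skip helpers and dunders.
        if name.isupper():
            settings[name] = getattr(local, name)
    return settings


if __name__ == "__main__":
    print(sorted(load_settings()))
```

With this layout, a config_local.py containing a single line such as `API_KEY = "your-key"` would override only that value and leave every other default untouched.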

- Initiation: Begin by entering your query or request in the designated input area.

- Engagement: Click the "Begin" button to initiate the interaction. AutoGroq will rephrase your request and generate the appropriate expert agents.

- Interaction: Select an expert agent to receive specialized assistance tailored to your needs.

- Dialogue: Continue the conversation by providing additional input or context as required, guiding the flow of information.

- Review: The "Discussion" section will display your dialogue history, while the "Whiteboard" section will showcase any extracted code snippets.

- Reset: Use the "Reset" button to clear the current conversation and start a new one whenever needed.
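AutoGroq's own extraction logic for the "Whiteboard" is not shown in this README. As an illustration only, and assuming agents return code inside Markdown-style fenced blocks, snippets could be pulled out of a reply with a regular expression along these lines. The function name `extract_code_blocks` is hypothetical.

```python
import re

# Build the fence marker programmatically so this example can itself
# be embedded in a fenced block without confusing a Markdown parser.
FENCE = "`" * 3

# Optional language tag, a newline, then the shortest body up to the
# next fence. DOTALL lets "." span multiple lines.
CODE_BLOCK = re.compile(FENCE + r"(\w*)\n(.*?)" + FENCE, re.DOTALL)


def extract_code_blocks(text):
    """Return a list of (language, code) tuples found in text."""
    return [
        (lang or "text", body.strip())
        for lang, body in CODE_BLOCK.findall(text)
    ]


if __name__ == "__main__":
    reply = "Here you go:\n" + FENCE + "python\nprint('hi')\n" + FENCE + "\nDone."
    for lang, code in extract_code_blocks(reply):
        print(f"[{lang}] {code}")
```

A pattern like this ignores inline code and unterminated fences, which is usually acceptable for a display-only panel.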

Experience AutoGroq's capabilities firsthand by accessing our online beta version: AutoGroq Live Demo

For a step-by-step guide on using AutoGroq, watch our updated video tutorials: AutoGroq Video Tutorials

We value your feedback and contributions in shaping the future of AutoGroq. If you encounter any issues or have ideas for new features, please share them with us on our GitHub repository.

AutoGroq is proudly open-source and released under the MIT License.

Thank you for choosing AutoGroq as your AI-powered conversational assistant. We are committed to redefining the boundaries of what AI can achieve and empowering you to tackle any question, problem, or project with ease and efficiency.

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

1. The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

2. Any modifications made to the Software must clearly indicate that they are derived from the original work, and the name of the original author (J. Gravelle) must remain intact.

3. Redistributions of the Software in source code form must also include a prominent notice that the code has been modified from the original.

THE SOFTWARE IS PROVIDED "AS IS," WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES, OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT, OR OTHERWISE, ARISING FROM, OUT OF, OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Similar Open Source Tools

AIlice

AIlice is a fully autonomous, general-purpose AI agent that aims to create a standalone artificial intelligence assistant, similar to JARVIS, based on open-source LLMs. AIlice achieves this goal by building a "text computer" that uses a Large Language Model (LLM) as its core processor. Currently, AIlice demonstrates proficiency in a range of tasks, including thematic research, coding, system management, literature reviews, and complex hybrid tasks that go beyond these basic capabilities. AIlice has reached near-perfect performance in everyday tasks using GPT-4 and is making strides towards practical application with the latest open-source models. We will ultimately achieve self-evolution of AI agents. That is, AI agents will autonomously build their own feature expansions and new types of agents, unleashing LLMs' knowledge and reasoning capabilities into the real world seamlessly.

motleycrew

Motleycrew is an ultimate framework for building multi-agent AI systems, allowing users to mix and match AI agents and tools from popular frameworks, design advanced workflows, and leverage dynamic knowledge graphs with simplicity and elegance. It acts as a conductor orchestrating a symphony of AI agents and tools, providing building blocks for creating AI systems and enabling users to focus on high-level design while taking care of the rest. The framework offers integration with various tools, flexibility in providing agents with tools or other agents, advanced flow design capabilities, and built-in observability and caching features.

Build-your-own-AI-Assistant-Solution-Accelerator

Build-your-own-AI-Assistant-Solution-Accelerator is a pre-release and preview solution that helps users create their own AI assistants. It leverages Azure OpenAI Service, Azure AI Search, and Microsoft Fabric to identify, summarize, and categorize unstructured information. Users can easily find relevant articles and grants, generate grant applications, and export them as PDF or Word documents. The solution accelerator provides reusable architecture and code snippets for building AI assistants with enterprise data. It is designed for researchers looking to explore flu vaccine studies and grants to accelerate grant proposal submissions.

obsidian-Smart2Brain

Your Smart Second Brain is a free and open-source Obsidian plugin that serves as your personal assistant, powered by large language models like ChatGPT or Llama2. It can directly access and process your notes, eliminating the need for manual prompt editing, and it can operate completely offline, ensuring your data remains private and secure.

aide

Aide is an Open Source AI-native code editor that combines the powerful features of VS Code with advanced AI capabilities. It provides a combined chat + edit flow, proactive agents for fixing errors, inline editing widget, intelligent code completion, and AST navigation. Aide is designed to be an intelligent coding companion, helping users write better code faster while maintaining control over the development process.

mastra

Mastra is an opinionated TypeScript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers and offers tools for building language models and workflows and for accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

ChainForge

ChainForge is a visual programming environment for battle-testing prompts to LLMs. It is geared towards early-stage, quick-and-dirty exploration of prompts, chat responses, and response quality that goes beyond ad-hoc chatting with individual LLMs. With ChainForge, you can:

- Query multiple LLMs at once to test prompt ideas and variations quickly and effectively.

- Compare response quality across prompt permutations, across models, and across model settings to choose the best prompt and model for your use case.

- Set up evaluation metrics (scoring functions) and immediately visualize results across prompts, prompt parameters, models, and model settings.

- Hold multiple conversations at once across template parameters and chat models. Template not just prompts, but follow-up chat messages, and inspect and evaluate outputs at each turn of a chat conversation.

ChainForge comes with a number of example evaluation flows to give you a sense of what's possible, including 188 example flows generated from benchmarks in OpenAI evals. This is an open beta of ChainForge. We support model providers OpenAI, HuggingFace, Anthropic, Google PaLM2, Azure OpenAI endpoints, and Dalai-hosted models Alpaca and Llama. You can change the exact model and individual model settings. Visualization nodes support numeric and boolean evaluation metrics. ChainForge is built on ReactFlow and Flask.

auth0-assistant0

Assistant0 is an AI personal assistant that consolidates digital life by accessing multiple tools to help users stay organized and efficient. It integrates with Gmail for email summaries, manages calendars, retrieves user information, enables online shopping with human-in-the-loop authorizations, uploads and retrieves documents, lists GitHub repositories and events, and soon provides Slack notifications and Google Drive access. With tool-calling capabilities, it acts as a digital personal secretary, enhancing efficiency and ushering in intelligent automation. Security challenges are addressed by using Auth0 for secure tool calling with scoped access tokens, ensuring user data protection.

chat-with-your-data-solution-accelerator

Chat with your data using OpenAI and AI Search. This solution accelerator uses an Azure OpenAI GPT model and an Azure AI Search index generated from your data, which is integrated into a web application to provide a natural language interface, including speech-to-text functionality, for search queries. Users can drag and drop files, point to storage, and take care of technical setup to transform documents. There is a web app that users can create in their own subscription with security and authentication.

get-started-with-ai-agents

The 'Getting Started with Agents Using Azure AI Foundry' repository provides a solution that deploys a web-based chat application with an AI agent running in Azure Container App. The agent leverages Azure AI services for knowledge retrieval from uploaded files, enabling it to generate responses with citations. The solution includes built-in monitoring capabilities for easier troubleshooting and optimized performance. Users can deploy AI models, customize the agent, and evaluate its performance. The repository offers flexible deployment options through GitHub Codespaces, VS Code Dev Containers, or local environments.

Customer-Service-Conversational-Insights-with-Azure-OpenAI-Services

This solution accelerator is built on Azure Cognitive Search Service and Azure OpenAI Service to synthesize post-contact center transcripts for intelligent contact center scenarios. It converts raw transcripts into customer call summaries to extract insights around product and service performance. Key features include conversation summarization, key phrase extraction, speech-to-text transcription, sensitive information extraction, sentiment analysis, and opinion mining. The tool enables data professionals to quickly analyze call logs for improvement in contact center operations.

ai2apps

AI2Apps is a visual IDE for building LLM-based AI agent applications, enabling developers to efficiently create AI agents through drag-and-drop, with features like design-to-development for rapid prototyping, direct packaging of agents into apps, powerful debugging capabilities, enhanced user interaction, efficient team collaboration, flexible deployment, multilingual support, simplified product maintenance, and extensibility through plugins.

AppAgent

AppAgent is a novel LLM-based multimodal agent framework designed to operate smartphone applications. Our framework enables the agent to operate smartphone applications through a simplified action space, mimicking human-like interactions such as tapping and swiping. This novel approach bypasses the need for system back-end access, thereby broadening its applicability across diverse apps. Central to our agent's functionality is its innovative learning method. The agent learns to navigate and use new apps either through autonomous exploration or by observing human demonstrations. This process generates a knowledge base that the agent refers to for executing complex tasks across different applications.

mahilo

Mahilo is a flexible framework for creating multi-agent systems that can interact with humans while sharing context internally. It allows developers to set up complex agent networks for various applications, from customer service to emergency response simulations. Agents can communicate with each other and with humans, making the system efficient by handling context from multiple agents and helping humans stay focused on specific problems. The system supports Realtime API for voice interactions, WebSocket-based communication, flexible communication patterns, session management, and easy agent definition.

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

For similar tasks

forms-flow-ai

formsflow.ai is a Free, Open-Source, Low Code Development Platform for rapidly building powerful business applications. It combines leading Open-Source applications including form.io forms, Camunda’s workflow engine, Keycloak’s security, and Redash’s data analytics into a seamless, integrated platform. Check out the installation documentation for installation instructions and features documentation to explore features and capabilities in detail.

ComfyUIMini

ComfyUI Mini is a lightweight and mobile-friendly frontend designed to run ComfyUI workflows. It allows users to save workflows locally on their device or PC, easily import workflows, and view generation progress information. The tool requires ComfyUI to be installed on the PC and a modern browser with WebSocket support on the mobile device. Users can access the WebUI by running the app and connecting to the local address of the PC. ComfyUI Mini provides a simple and efficient way to manage workflows on mobile devices.

Visionatrix

Visionatrix is a project aimed at providing easy use of ComfyUI workflows. It offers simplified setup and update processes, a minimalistic UI for daily workflow use, stable workflows with versioning and update support, scalability for multiple instances and task workers, multiple user support with integration of different user backends, LLM power for integration with Ollama/Gemini, and seamless integration as a service with backend endpoints and webhook support. The project is approaching version 1.0 release and welcomes new ideas for further implementation.

flowdeer-dist

FlowDeer Tree is an AI tool designed for managing complex workflows and facilitating deep thoughts. It provides features such as displaying thinking chains, assigning tasks to AI members, utilizing task conclusions as context, copying and importing AI members in JSON format, adjusting node sequences, calling external APIs as plugins, and customizing default task splitting, execution, summarization, and output rewriting prompts. The tool aims to streamline workflow processes and enhance productivity by leveraging artificial intelligence capabilities.

Archon

Archon is an AI meta-agent designed to autonomously build, refine, and optimize other AI agents. It serves as a practical tool for developers and an educational framework showcasing the evolution of agentic systems. Through iterative development, Archon demonstrates the power of planning, feedback loops, and domain-specific knowledge in creating robust AI agents.

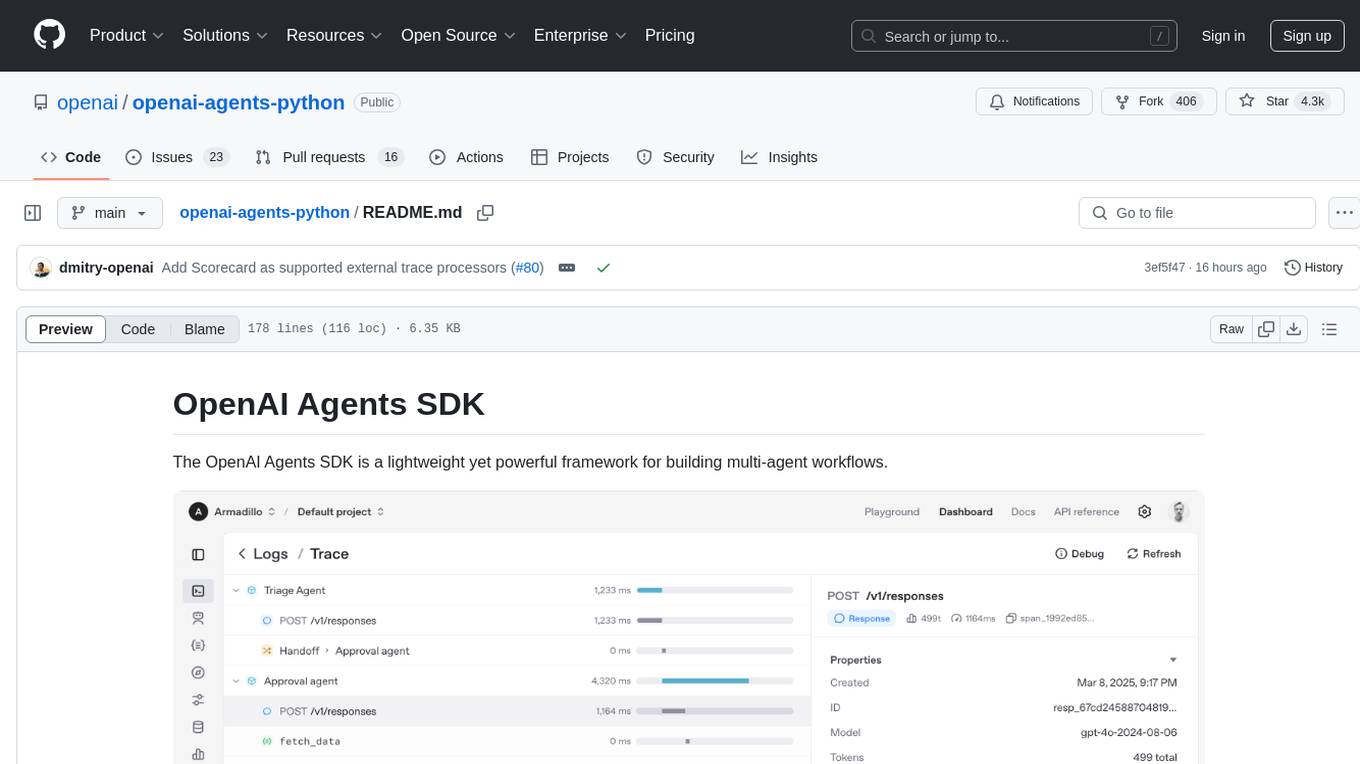

openai-agents-python

The OpenAI Agents SDK is a lightweight framework for building multi-agent workflows. It includes concepts like Agents, Handoffs, Guardrails, and Tracing to facilitate the creation and management of agents. The SDK is compatible with any model providers supporting the OpenAI Chat Completions API format. It offers flexibility in modeling various LLM workflows and provides automatic tracing for easy tracking and debugging of agent behavior. The SDK is designed for developers to create deterministic flows, iterative loops, and more complex workflows.

flow-like

Flow-Like is an enterprise-grade workflow operating system built upon Rust for uncompromising performance, efficiency, and code safety. It offers a modular frontend for apps, a rich set of events, a node catalog, a powerful no-code workflow IDE, and tools to manage teams, templates, and projects within organizations. With typed workflows, users can create complex, large-scale workflows with clear data origins, transformations, and contracts. Flow-Like is designed to automate any process through seamless integration of LLM, ML-based, and deterministic decision-making instances.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.