ai-comic-factory

Generate comic panels using an LLM + SDXL. Powered by Hugging Face 🤗

Stars: 1012

The AI Comic Factory is a tool that allows you to create your own AI comics with a single prompt. It uses a large language model (LLM) to generate the story and dialogue, and a rendering API to generate the panel images. The AI Comic Factory is open-source and can be run on your own website or computer. It is a great tool for anyone who wants to create their own comics, or for anyone who is interested in the potential of AI for storytelling.

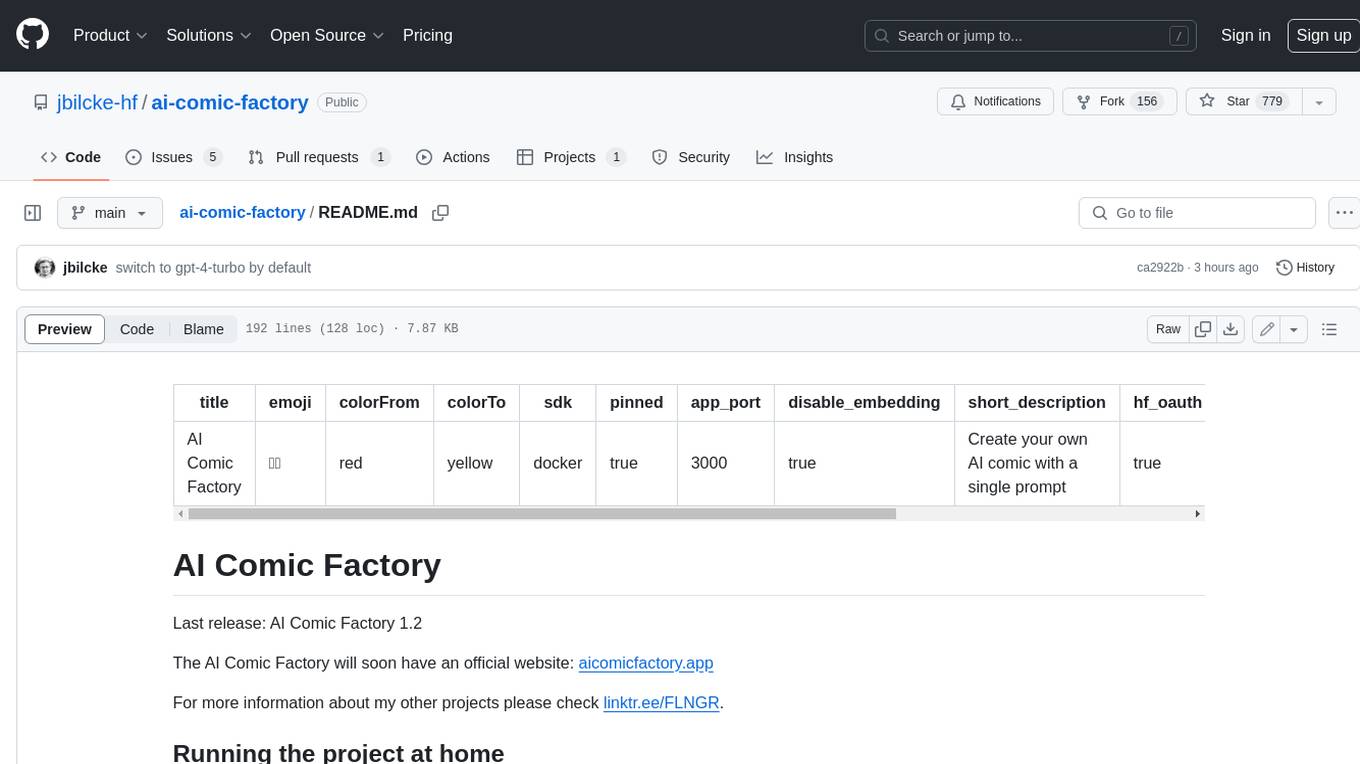

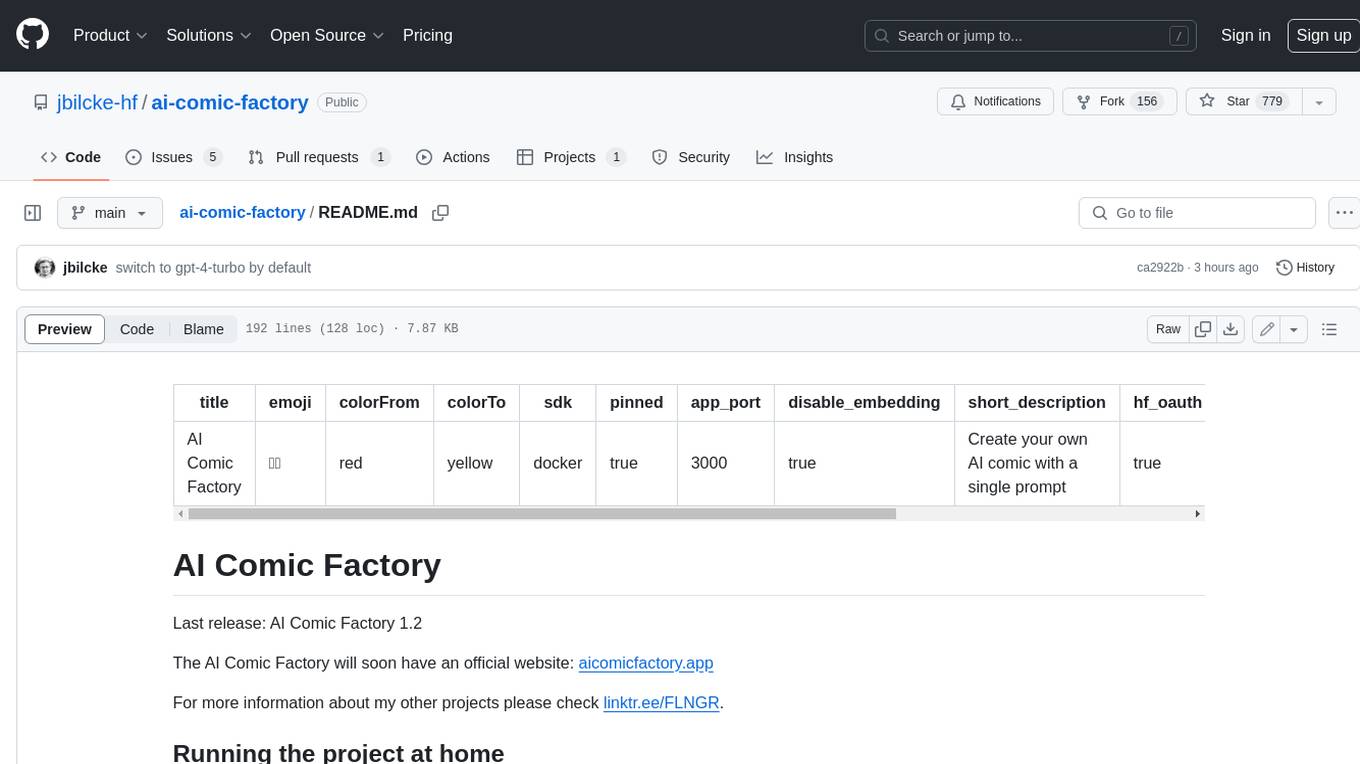

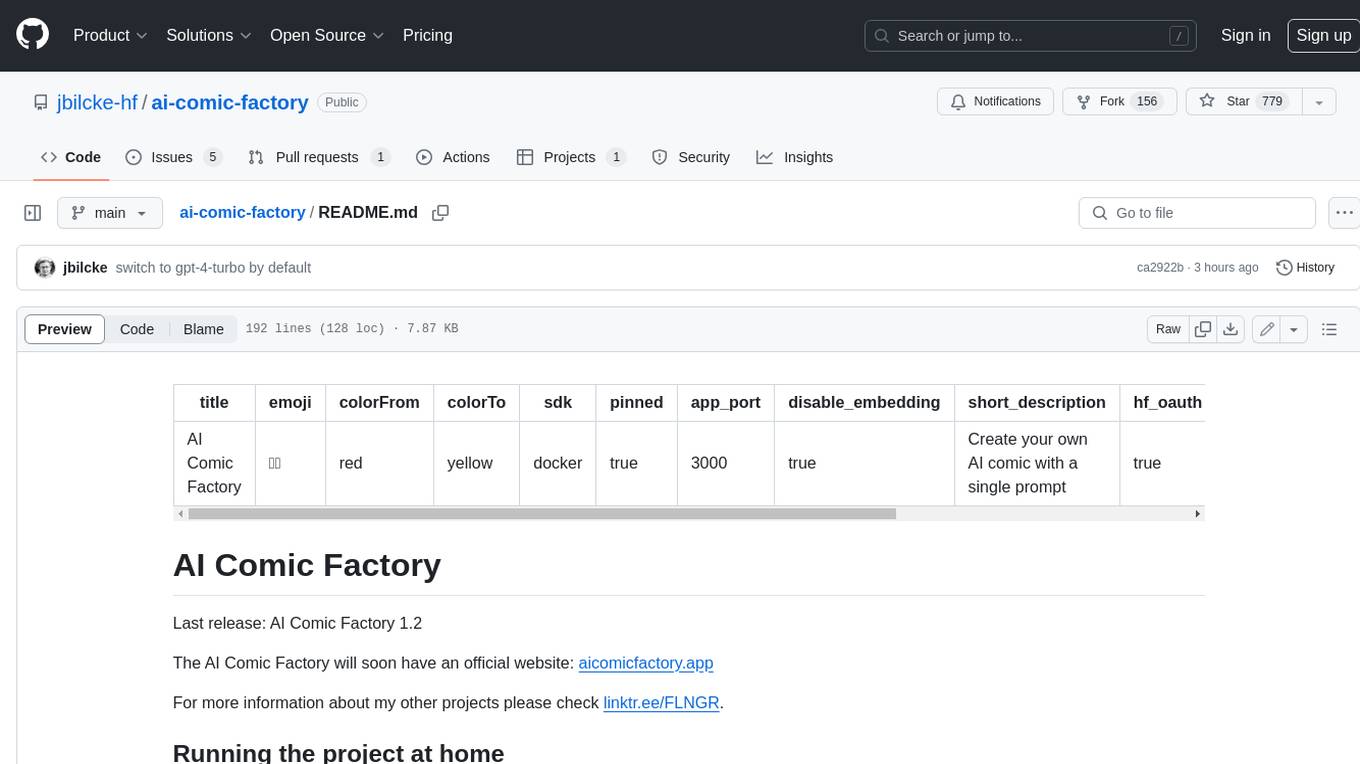

README:

title: AI Comic Factory
emoji: 👩🎨
colorFrom: red
colorTo: yellow
sdk: docker
pinned: true
app_port: 3000
disable_embedding: false
short_description: Create your own AI comic with a single prompt
hf_oauth: true
hf_oauth_expiration_minutes: 43200
hf_oauth_scopes: [inference-api]

Last release: AI Comic Factory 1.2

The AI Comic Factory will soon have an official website: aicomicfactory.app

For more information about my other projects please check linktr.ee/FLNGR.

First, I would like to highlight that everything is open-source (see here, here, here, here).

However, the project isn't a monolithic Space that can be duplicated and run immediately: it requires various components to run for the frontend, backend, LLM, SDXL etc.

If you try to duplicate the project and open the .env file, you will see it requires some variables.

Provider config:

- LLM_ENGINE: can be one of "INFERENCE_API", "INFERENCE_ENDPOINT", "OPENAI", "GROQ", "ANTHROPIC"
- RENDERING_ENGINE: can be one of "INFERENCE_API", "INFERENCE_ENDPOINT", "REPLICATE", "VIDEOCHAIN", "OPENAI" for now, unless you code your custom solution

Auth config:

- AUTH_HF_API_TOKEN: if you decide to use Hugging Face for the LLM engine (inference api model or a custom inference endpoint)
- AUTH_OPENAI_API_KEY: to use OpenAI for the LLM engine
- AUTH_GROQ_API_KEY: to use Groq for the LLM engine
- AUTH_ANTHROPIC_API_KEY: to use Anthropic (Claude) for the LLM engine
- AUTH_VIDEOCHAIN_API_TOKEN: secret token to access the VideoChain API server
- AUTH_REPLICATE_API_TOKEN: in case you want to use Replicate.com

Rendering config:

- RENDERING_HF_INFERENCE_ENDPOINT_URL: necessary if you decide to use a custom inference endpoint; otherwise optional, defaults to nothing
- RENDERING_VIDEOCHAIN_API_URL: url to the VideoChain API server
- RENDERING_HF_INFERENCE_API_BASE_MODEL: optional, defaults to "stabilityai/stable-diffusion-xl-base-1.0"
- RENDERING_HF_INFERENCE_API_REFINER_MODEL: optional, defaults to "stabilityai/stable-diffusion-xl-refiner-1.0"
- RENDERING_REPLICATE_API_MODEL: optional, defaults to "stabilityai/sdxl"
- RENDERING_REPLICATE_API_MODEL_VERSION: optional, in case you want to change the version

Language model config (depending on the LLM engine you decide to use):

- LLM_HF_INFERENCE_ENDPOINT_URL: ""
- LLM_HF_INFERENCE_API_MODEL: "HuggingFaceH4/zephyr-7b-beta"
- LLM_OPENAI_API_BASE_URL: "https://api.openai.com/v1"
- LLM_OPENAI_API_MODEL: "gpt-4-turbo"
- LLM_GROQ_API_MODEL: "mixtral-8x7b-32768"
- LLM_ANTHROPIC_API_MODEL: "claude-3-opus-20240229"

In addition, there are some community sharing variables that you can just ignore. Those variables are not required to run the AI Comic Factory on your own website or computer (they are meant to create a connection with the Hugging Face community, and thus only make sense for official Hugging Face apps):

- NEXT_PUBLIC_ENABLE_COMMUNITY_SHARING: you don't need this
- COMMUNITY_API_URL: you don't need this
- COMMUNITY_API_TOKEN: you don't need this
- COMMUNITY_API_ID: you don't need this

Please read the .env default config file for more information.

To customise a variable locally, you should create a .env.local file (do not commit this file as it will contain your secrets).
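To make the relationship between the engine choice and the auth variables concrete, here is a minimal TypeScript sketch that checks the LLM part of a configuration. The helper `checkLlmConfig` and the `Env` type are hypothetical and not part of the AI Comic Factory codebase; the variable names come from the lists above, and treating the endpoint URL as mandatory for INFERENCE_ENDPOINT is an assumption.

```typescript
// Hypothetical helper, not part of the AI Comic Factory codebase.
type Env = Record<string, string | undefined>;

// Auth variable needed by each LLM engine, as described in the lists above.
const LLM_AUTH_VARIABLE = new Map<string, string>([
  ["INFERENCE_API", "AUTH_HF_API_TOKEN"],
  ["INFERENCE_ENDPOINT", "AUTH_HF_API_TOKEN"],
  ["OPENAI", "AUTH_OPENAI_API_KEY"],
  ["GROQ", "AUTH_GROQ_API_KEY"],
  ["ANTHROPIC", "AUTH_ANTHROPIC_API_KEY"],
]);

// Returns a list of human-readable problems; an empty list means the
// LLM part of the configuration looks complete.
function checkLlmConfig(env: Env): string[] {
  const problems: string[] = [];
  const engine = env["LLM_ENGINE"] ?? "";
  const authVariable = LLM_AUTH_VARIABLE.get(engine);

  if (authVariable === undefined) {
    const allowed = Array.from(LLM_AUTH_VARIABLE.keys()).join(", ");
    problems.push(`LLM_ENGINE must be one of: ${allowed}`);
    return problems;
  }
  if (!env[authVariable]) {
    problems.push(`${authVariable} is required when LLM_ENGINE is ${engine}`);
  }
  // Assumption: a custom endpoint cannot work without its URL.
  if (engine === "INFERENCE_ENDPOINT" && !env["LLM_HF_INFERENCE_ENDPOINT_URL"]) {
    problems.push("LLM_HF_INFERENCE_ENDPOINT_URL is required when LLM_ENGINE is INFERENCE_ENDPOINT");
  }
  return problems;
}
```

In a Node.js process this could be called as `checkLlmConfig(process.env)` at startup, so a missing key is reported before the first request reaches the LLM.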

-> If you intend to run it with local, cloud-hosted and/or proprietary models you are going to need to code 👨💻.

Currently the AI Comic Factory uses zephyr-7b-beta through an Inference Endpoint.

You have multiple options:

This is a new option added recently, where you can use one of the models from the Hugging Face Hub. By default we suggest using zephyr-7b-beta.

To activate it, create a .env.local configuration file:

LLM_ENGINE="INFERENCE_API"

AUTH_HF_API_TOKEN="Your Hugging Face token"

# "HuggingFaceH4/zephyr-7b-beta" is used by default, but you can change this

# note: You should use a model able to generate JSON responses,

# so it is strongly suggested to use at least the 34b model

LLM_HF_INFERENCE_API_MODEL="HuggingFaceH4/zephyr-7b-beta"

If you would like to run the AI Comic Factory on a private LLM running on the Hugging Face Inference Endpoint service, create a .env.local configuration file:

LLM_ENGINE="INFERENCE_ENDPOINT"

AUTH_HF_API_TOKEN="Your Hugging Face token"

LLM_HF_INFERENCE_ENDPOINT_URL="path to your inference endpoint url"

To run this kind of LLM locally, you can use TGI (please read this post for more information about the licensing).
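The configuration comments above note that the model should be able to generate JSON responses. As an illustration of why that matters, here is a minimal TypeScript sketch of how a JSON array of panels could be extracted from raw model output that may contain extra text around it. The `Panel` shape and the `parsePanels` function are hypothetical; the real project may use different field names and a different parsing strategy.

```typescript
// Hypothetical shape: the real project may use different field names.
interface Panel {
  caption: string;
  instructions: string;
}

// Extracts the outermost JSON array from the model output and validates
// that each entry has the two string fields we expect.
function parsePanels(llmOutput: string): Panel[] {
  const start = llmOutput.indexOf("[");
  const end = llmOutput.lastIndexOf("]");
  if (start === -1 || end <= start) {
    throw new Error("no JSON array found in the model output");
  }

  // JSON.parse throws a SyntaxError if the extracted text is not valid JSON.
  const parsed: unknown = JSON.parse(llmOutput.slice(start, end + 1));
  if (!Array.isArray(parsed)) {
    throw new Error("expected a JSON array");
  }

  return parsed.map((item, index) => {
    const candidate = item as Partial<Panel> | null;
    if (
      !candidate ||
      typeof candidate.caption !== "string" ||
      typeof candidate.instructions !== "string"
    ) {
      throw new Error(`panel ${index} is missing "caption" or "instructions"`);
    }
    return { caption: candidate.caption, instructions: candidate.instructions };
  });
}
```

Smaller models tend to wrap or truncate their JSON more often, which is one reason a stricter check like this is useful before handing the panels to the rendering step.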

This is a new option added recently, where you can use the OpenAI API with an OpenAI API key.

To activate it, create a .env.local configuration file:

LLM_ENGINE="OPENAI"

# default openai api base url is: https://api.openai.com/v1

LLM_OPENAI_API_BASE_URL="A custom OpenAI API Base URL if you have some special privileges"

LLM_OPENAI_API_MODEL="gpt-4-turbo"

AUTH_OPENAI_API_KEY="Your own OpenAI API Key"

To use Groq, create a .env.local configuration file:

LLM_ENGINE="GROQ"

LLM_GROQ_API_MODEL="mixtral-8x7b-32768"

AUTH_GROQ_API_KEY="Your own GROQ API Key"

To use Anthropic (Claude), create a .env.local configuration file:

LLM_ENGINE="ANTHROPIC"

LLM_ANTHROPIC_API_MODEL="claude-3-opus-20240229"

AUTH_ANTHROPIC_API_KEY="Your own ANTHROPIC API Key"

Another option could be to disable the LLM completely and replace it with another LLM protocol and/or provider (e.g. Claude, Replicate), or a human-generated story instead (by returning mock or static data).
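As a rough illustration of the "mock or static data" route, here is a minimal TypeScript sketch of a story provider that returns hand-written panels instead of calling an LLM. Everything in it is hypothetical: the `Panel` shape, the `buildMockStory` and `mockStoryProvider` names, and the idea that the app expects an async function of this form are assumptions made for the example, not the project's actual interface.

```typescript
// Hypothetical shape: the real project may use different field names.
interface Panel {
  caption: string;
  instructions: string;
}

// A human-written story used in place of LLM output.
const STATIC_STORY: Panel[] = [
  { caption: "A quiet morning.", instructions: "wide shot of a sleepy town at sunrise" },
  { caption: "Something stirs.", instructions: "close-up of a cat staring at a glowing box" },
  { caption: "The box opens!", instructions: "bright light bursting out of an open box" },
  { caption: "A new friend.", instructions: "a small robot waving at the cat" },
];

// Builds the requested number of panels by cycling through the static story.
// The user prompt is appended to the drawing instructions so the rendering
// step still reflects what was asked for.
function buildMockStory(prompt: string, panelCount: number): Panel[] {
  const panels: Panel[] = [];
  for (let i = 0; i < panelCount; i++) {
    const base = STATIC_STORY[i % STATIC_STORY.length];
    panels.push({
      caption: base.caption,
      instructions: `${base.instructions}, ${prompt}`,
    });
  }
  return panels;
}

// Async wrapper with the same shape a real LLM call would likely have.
async function mockStoryProvider(prompt: string, panelCount: number): Promise<Panel[]> {
  return buildMockStory(prompt, panelCount);
}
```

Keeping the mock behind the same async signature as a real provider means it could be swapped in during development without touching the rest of the pipeline.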

I may modify the AI Comic Factory to make this easier in the future (e.g. add support for Claude or Replicate).

The rendering API is used to generate the panel images. It is an API I created for my various projects at Hugging Face.

I haven't written documentation for it yet, but basically it is "just a wrapper ™" around other existing APIs:

- The hysts/SD-XL Space by @hysts

- And other APIs for making videos, adding audio, etc., but you won't need them for the AI Comic Factory

You will have to clone the source code.

Unfortunately, I haven't had the time to write the documentation for VideoChain yet. (When I do I will update this document to point to the VideoChain's README)

To use Replicate, create a .env.local configuration file:

RENDERING_ENGINE="REPLICATE"

RENDERING_REPLICATE_API_MODEL="stabilityai/sdxl"

RENDERING_REPLICATE_API_MODEL_VERSION="da77bc59ee60423279fd632efb4795ab731d9e3ca9705ef3341091fb989b7eaf"

AUTH_REPLICATE_API_TOKEN="Your Replicate token"

If you fork the project you will be able to modify the code to use the Stable Diffusion technology of your choice (local, open-source, proprietary, your custom HF Space etc).

It could even be something else, such as DALL-E.
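For readers who fork the project, here is a minimal TypeScript sketch of one way a custom rendering backend could be plugged in alongside the existing engines. The `Renderer` type, the registry functions, the `MY_CUSTOM_ENGINE` name, and the placeholder URL are all hypothetical; the actual rendering code in the project may be organised quite differently.

```typescript
// Hypothetical type: a renderer turns a prompt into an image URL.
type Renderer = (prompt: string) => Promise<string>;

// Registry keyed by the RENDERING_ENGINE value.
const renderers = new Map<string, Renderer>();

function registerRenderer(engine: string, renderer: Renderer): void {
  renderers.set(engine, renderer);
}

// Looks up the renderer for the configured engine and fails loudly if
// nothing is registered under that name.
function selectRenderer(engine: string | undefined): Renderer {
  const renderer = engine === undefined ? undefined : renderers.get(engine);
  if (renderer === undefined) {
    throw new Error(`no renderer registered for RENDERING_ENGINE="${engine ?? ""}"`);
  }
  return renderer;
}

// Example: a custom backend with a placeholder implementation. A real one
// would call your own Stable Diffusion server, HF Space, or another image API.
registerRenderer("MY_CUSTOM_ENGINE", async (prompt) =>
  `https://example.invalid/render?prompt=${encodeURIComponent(prompt)}`
);
```

With a registry like this, switching backends would only require changing the RENDERING_ENGINE value, as long as a renderer has been registered under that name.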


Alternative AI tools for ai-comic-factory

Similar Open Source Tools


smartcat

Smartcat is a CLI interface that brings language models into the Unix ecosystem, allowing power users to leverage the capabilities of LLMs in their daily workflows. It features a minimalist design, seamless integration with terminal and editor workflows, and customizable prompts for specific tasks. Smartcat currently supports OpenAI, Mistral AI, and Anthropic APIs, providing access to a range of language models. With its ability to manipulate file and text streams, integrate with editors, and offer configurable settings, Smartcat empowers users to automate tasks, enhance code quality, and explore creative possibilities.

openai-cf-workers-ai

OpenAI for Workers AI is a simple, quick, and dirty implementation of OpenAI's API on Cloudflare's new Workers AI platform. It allows developers to use the OpenAI SDKs with the new LLMs without having to rewrite all of their code. The API currently supports completions, chat completions, audio transcription, embeddings, audio translation, and image generation. It is not production ready but will be semi-regularly updated with new features as they roll out to Workers AI.

vectorflow

VectorFlow is an open source, high throughput, fault tolerant vector embedding pipeline. It provides a simple API endpoint for ingesting large volumes of raw data, processing, and storing or returning the vectors quickly and reliably. The tool supports text-based files like TXT, PDF, HTML, and DOCX, and can be run locally with Kubernetes in production. VectorFlow offers functionalities like embedding documents, running chunking schemas, custom chunking, and integrating with vector databases like Pinecone, Qdrant, and Weaviate. It enforces a standardized schema for uploading data to a vector store and supports features like raw embeddings webhook, chunk validation webhook, S3 endpoint, and telemetry. The tool can be used with the Python client and provides detailed instructions for running and testing the functionalities.

ray-llm

RayLLM (formerly known as Aviary) is an LLM serving solution that makes it easy to deploy and manage a variety of open source LLMs, built on Ray Serve. It provides an extensive suite of pre-configured open source LLMs, with defaults that work out of the box. RayLLM supports Transformer models hosted on Hugging Face Hub or present on local disk. It simplifies the deployment of multiple LLMs, the addition of new LLMs, and offers unique autoscaling support, including scale-to-zero. RayLLM fully supports multi-GPU & multi-node model deployments and offers high performance features like continuous batching, quantization and streaming. It provides a REST API that is similar to OpenAI's to make it easy to migrate and cross test them. RayLLM supports multiple LLM backends out of the box, including vLLM and TensorRT-LLM.

vectara-answer

Vectara Answer is a sample app for Vectara-powered Summarized Semantic Search (or question-answering) with advanced configuration options. For examples of what you can build with Vectara Answer, check out Ask News, LegalAid, or any of the other demo applications.

AlwaysReddy

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for: - Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note. - Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called. - Have AlwaysReddy proofread the text in your clipboard before you send it. - Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?" - Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shutdown the computer for the day.

call-gpt

Call GPT is a voice application that utilizes Deepgram for Speech to Text, elevenlabs for Text to Speech, and OpenAI for GPT prompt completion. It allows users to chat with ChatGPT on the phone, providing better transcription, understanding, and speaking capabilities than traditional IVR systems. The app returns responses with low latency, allows user interruptions, maintains chat history, and enables GPT to call external tools. It coordinates data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams, enhancing voice interactions.

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

Tiny-Predictive-Text

Tiny-Predictive-Text is a demonstration of predictive text without an LLM, using permy.link. It provides a detailed description of the tool, including its features, benefits, and how to use it. The tool is suitable for a variety of jobs, including content writers, editors, and researchers. It can be used to perform a variety of tasks, such as generating text, completing sentences, and correcting errors.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

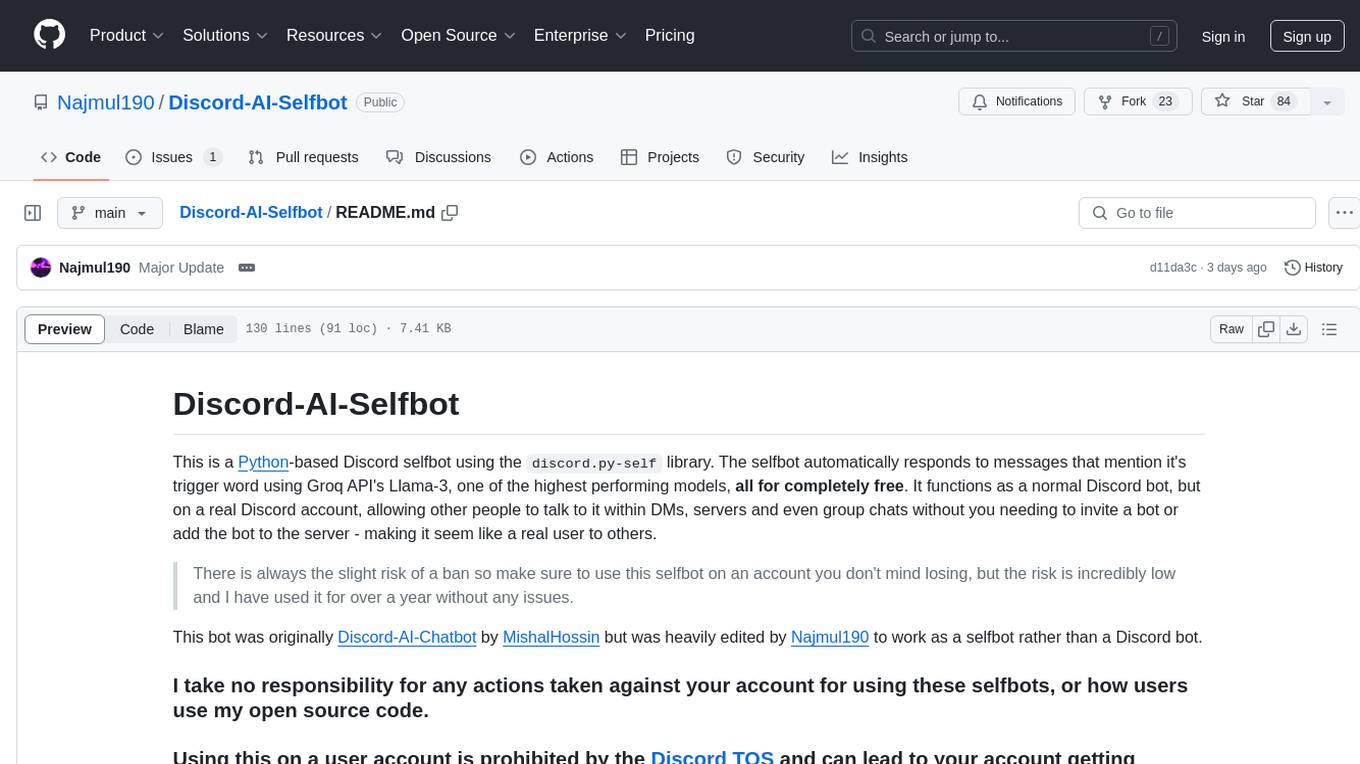

Discord-AI-Selfbot

Discord-AI-Selfbot is a Python-based Discord selfbot that uses the `discord.py-self` library to automatically respond to messages mentioning its trigger word using Groq API's Llama-3 model. It functions as a normal Discord bot on a real Discord account, enabling interactions in DMs, servers, and group chats without needing to invite a bot. The selfbot comes with features like custom AI instructions, free LLM model usage, mention and reply recognition, message handling, channel-specific responses, and a psychoanalysis command to analyze user messages for insights on personality.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

For similar tasks


agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

LLaMa2lang

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language (that isn't English). The rationale behind this is that LLaMa3 is trained on primarily English data and while it works to some extent for other languages, its performance is poor compared to English.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

For similar jobs


daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

ap-plugin

AP-PLUGIN is an AI drawing plugin for the Yunzai series robot framework, allowing you to have a convenient AI drawing experience in the input box. It uses the open source Stable Diffusion web UI as the backend, deploys it for free, and generates a variety of images with richer functions.

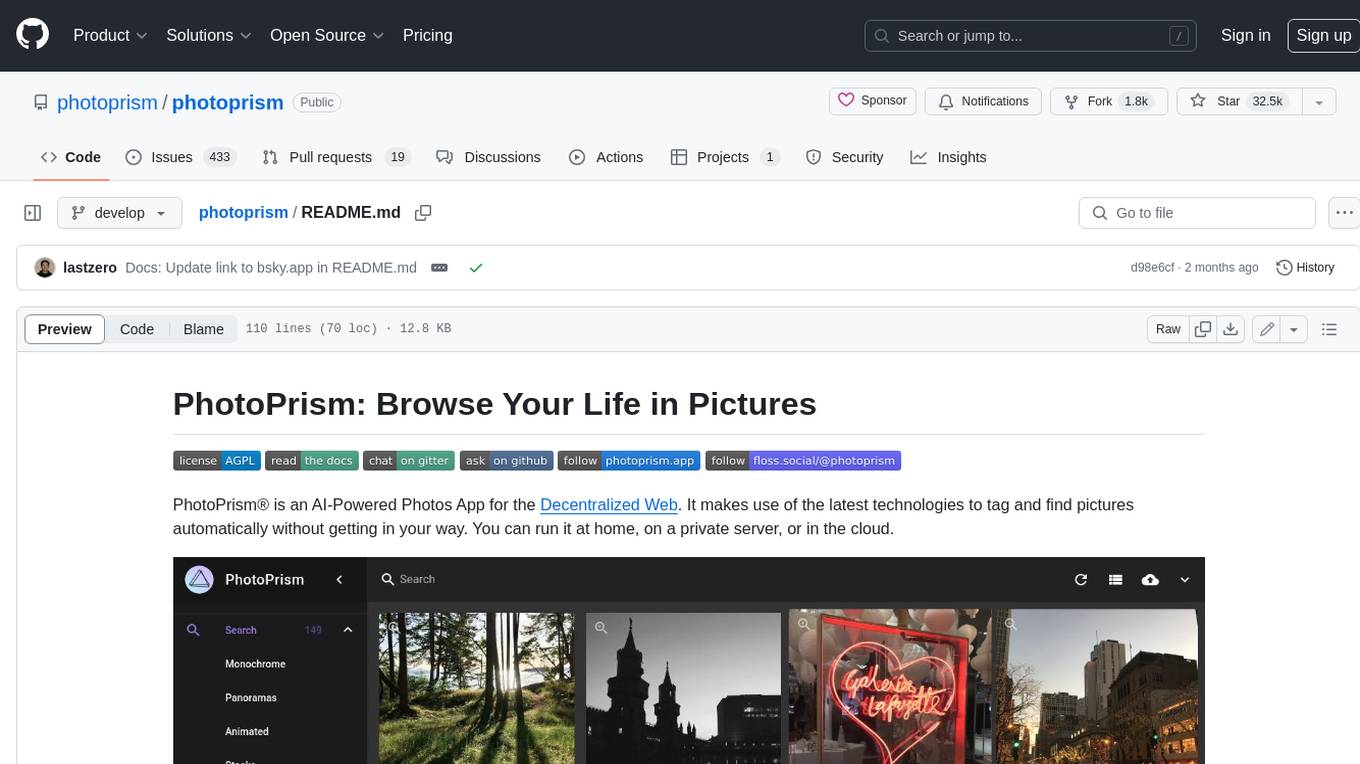

photoprism

PhotoPrism is an AI-powered photos app for the decentralized web. It uses the latest technologies to tag and find pictures automatically without getting in your way. You can run it at home, on a private server, or in the cloud.

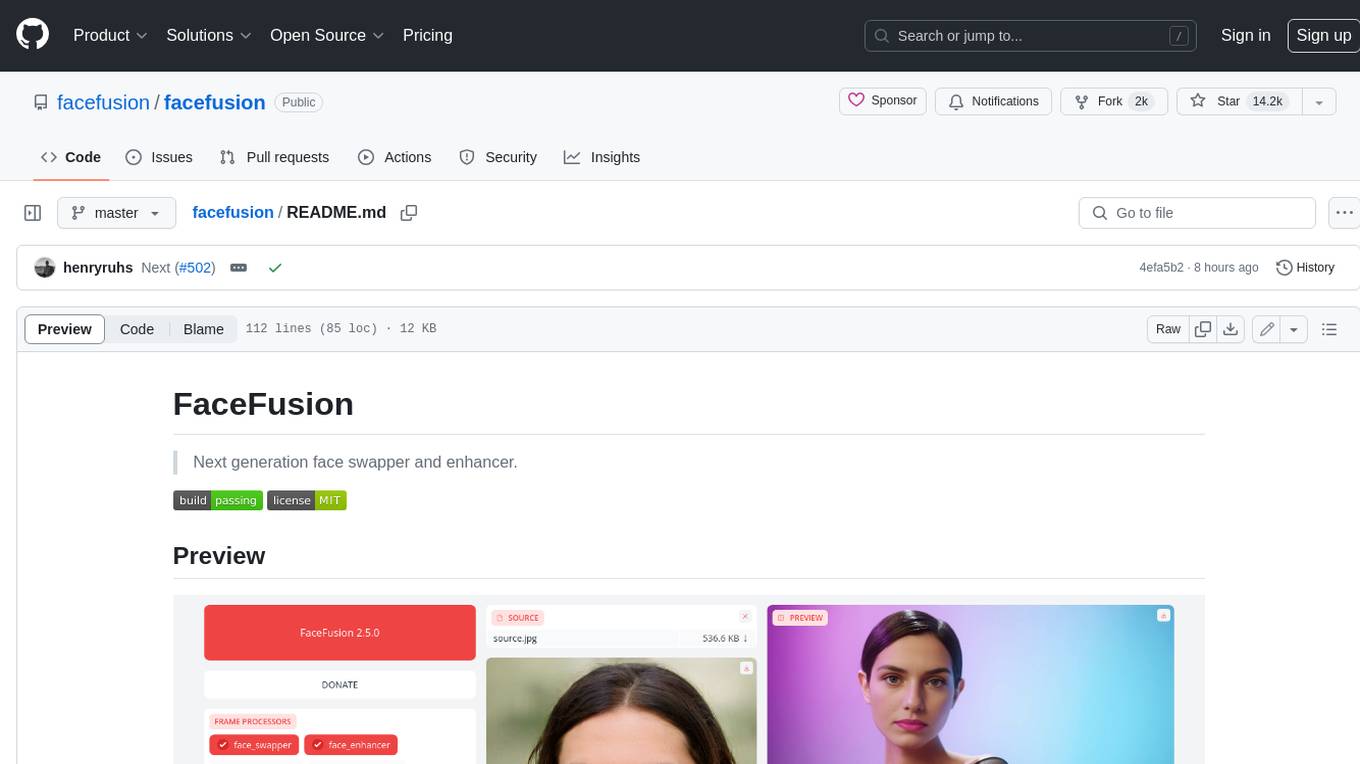

facefusion

FaceFusion is a next-generation face swapper and enhancer that allows users to seamlessly swap faces in images and videos, as well as enhance facial features for a more polished and refined look. With its advanced deep learning models, FaceFusion provides users with a wide range of options for customizing their face swaps and enhancements, making it an ideal tool for content creators, artists, and anyone looking to explore their creativity with facial manipulation.

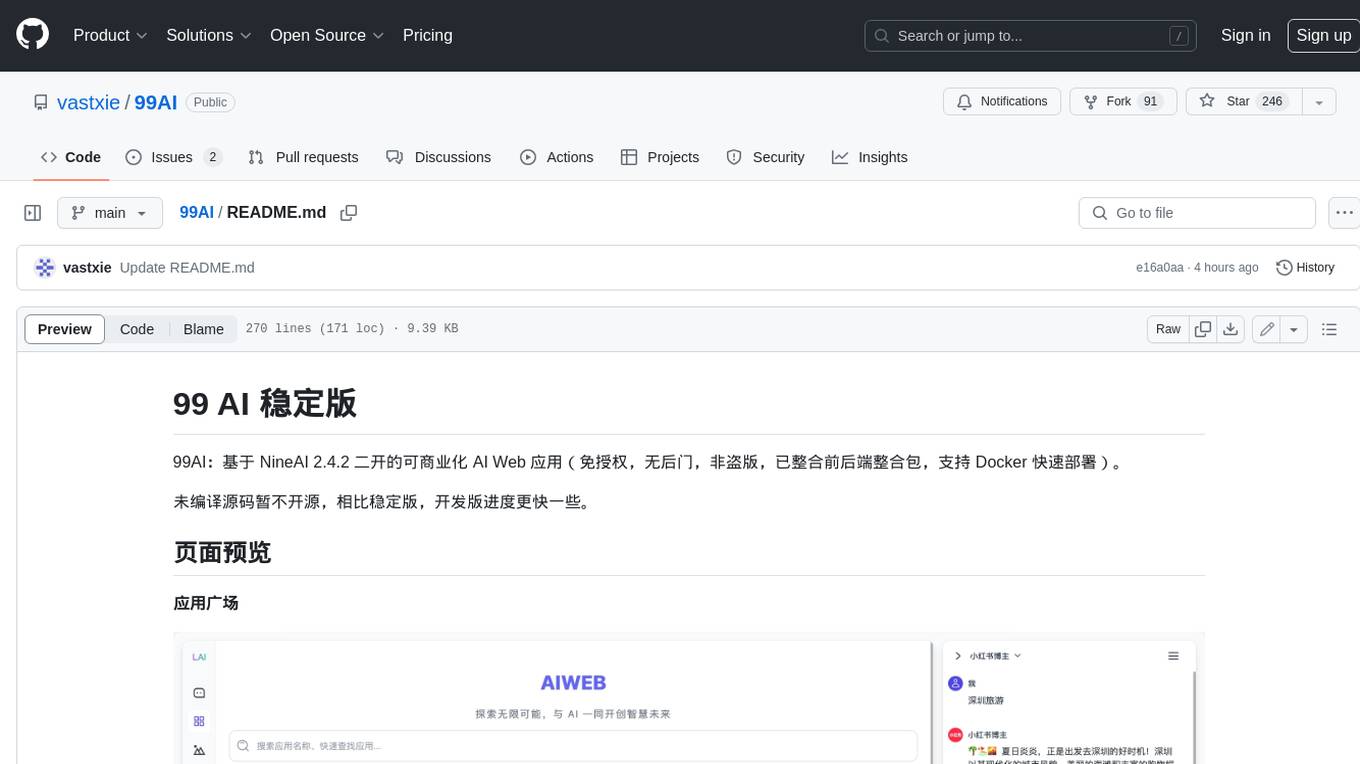

99AI

99AI is a commercializable AI web application based on NineAI 2.4.2 (no authorization, no backdoors, no piracy, integrated front-end and back-end integration packages, supports Docker rapid deployment). The uncompiled source code is temporarily closed. Compared with the stable version, the development version is faster.

wunjo.wladradchenko.ru

Wunjo AI is a comprehensive tool that empowers users to explore the realm of speech synthesis, deepfake animations, video-to-video transformations, and more. Its user-friendly interface and privacy-first approach make it accessible to both beginners and professionals alike. With Wunjo AI, you can effortlessly convert text into human-like speech, clone voices from audio files, create multi-dialogues with distinct voice profiles, and perform real-time speech recognition. Additionally, you can animate faces using just one photo combined with audio, swap faces in videos, GIFs, and photos, and even remove unwanted objects or enhance the quality of your deepfakes using the AI Retouch Tool. Wunjo AI is an all-in-one solution for your voice and visual AI needs, offering endless possibilities for creativity and expression.