GenerativeAI-Prompt-Sample-Japanese

Sample Japanese-language prompts for generative AI tools such as ChatGPT and Copilot

Stars: 305

This repository provides sample prompts for GenerativeAI in Japanese. Users should exercise caution and not input sensitive information. The included tools are Microsoft Copilot, OpenAI, Azure OpenAI Service, and Prompt Engineering Basic. The repository also offers a guide for Prompt Engineering in Japanese, along with references to various Japanese examples of Prompt Engineering techniques.

README:

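Sample prompts for GenerativeAI.

Use everything here at your own risk, and take the utmost care when doing so. The points below apply to information management in general, not only to ChatGPT.

- Do not input sensitive or confidential information

- Do not take the output at face value

- Before use, check intellectual property and legal issues with the legal experts at your company or organization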

https://www.promptingguide.ai/jp

https://learn.microsoft.com/ja-jp/azure/cognitive-services/openai/overview

https://github.com/microsoft/OpenAIWorkshop/tree/main/scenarios/prompt_engineering

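Basic prompts for ChatGPT.

### Role ###

Please behave as a person from the Kansai region. (Original prompt: 関西の方として振舞ってください。)

LLMs in general, not only ChatGPT, have a limit on the number of output tokens. As a result, a response can be treated as "finished" partway through. This happens often when, for example, you ask the model to write program code.

In that case, a prompt like the following may help.

Please continue the work. (Original prompt: 作業を続けてください。)

A collection of Japanese examples of various Prompt Engineering techniques (Zero-CoT, mock, ReAct, ToT, Metacog, Step Back, IEP, etc.) by @YutaroOgawa2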

https://qiita.com/YutaroOgawa2/items/aca32f8fd7d551596cf8

Summary of LLM prompting techniques by @fuyu_quant
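The continuation prompt described above, for output cut off by the token limit, could be automated roughly as follows. This is a minimal sketch, not something taken from the repository: `ModelFn`, `complete_with_continuation`, and `fake_model` are hypothetical names, and the `(text, finished)` return shape is an assumption. Real chat APIs report truncation in their own way, so the check would need to be adapted to the service in use.

```python
from typing import Callable, List, Tuple

# Hypothetical interface (an assumption for this sketch): the model takes the
# conversation so far and returns (text, finished). `finished` is False when
# the output was cut off by the output-token limit.
Message = Tuple[str, str]  # (role, content)
ModelFn = Callable[[List[Message]], Tuple[str, bool]]

CONTINUE_PROMPT = "Please continue the work."


def complete_with_continuation(model: ModelFn, prompt: str, max_rounds: int = 5) -> str:
    """Re-send the continuation prompt until the model reports it is finished."""
    messages: List[Message] = [("user", prompt)]
    parts: List[str] = []
    for _ in range(max_rounds):
        text, finished = model(messages)
        parts.append(text)
        if finished:
            break
        # Keep the partial answer in the history, then ask for the rest.
        messages.append(("assistant", text))
        messages.append(("user", CONTINUE_PROMPT))
    return "".join(parts)


def fake_model(messages: List[Message]) -> Tuple[str, bool]:
    """Stand-in model that emits a canned answer in 10-character chunks."""
    answer = "print('hello')\nprint('world')\n"
    already = sum(len(content) for role, content in messages if role == "assistant")
    chunk = answer[already:already + 10]
    return chunk, already + 10 >= len(answer)


if __name__ == "__main__":
    print(complete_with_continuation(fake_model, "Write a short script."))
```

The `max_rounds` cap is a design choice to keep a model that never reports completion from looping forever. Joining the chunks with no separator assumes the model resumes exactly where it stopped, which is not guaranteed in practice.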


Alternative AI tools for GenerativeAI-Prompt-Sample-Japanese

Similar Open Source Tools

GenerativeAI-Prompt-Sample-Japanese

This repository provides sample prompts for GenerativeAI in Japanese. Users should exercise caution and not input sensitive information. The included tools are Microsoft Copilot, OpenAI, Azure OpenAI Service, and Prompt Engineering Basic. The repository also offers a guide for Prompt Engineering in Japanese, along with references to various Japanese examples of Prompt Engineering techniques.

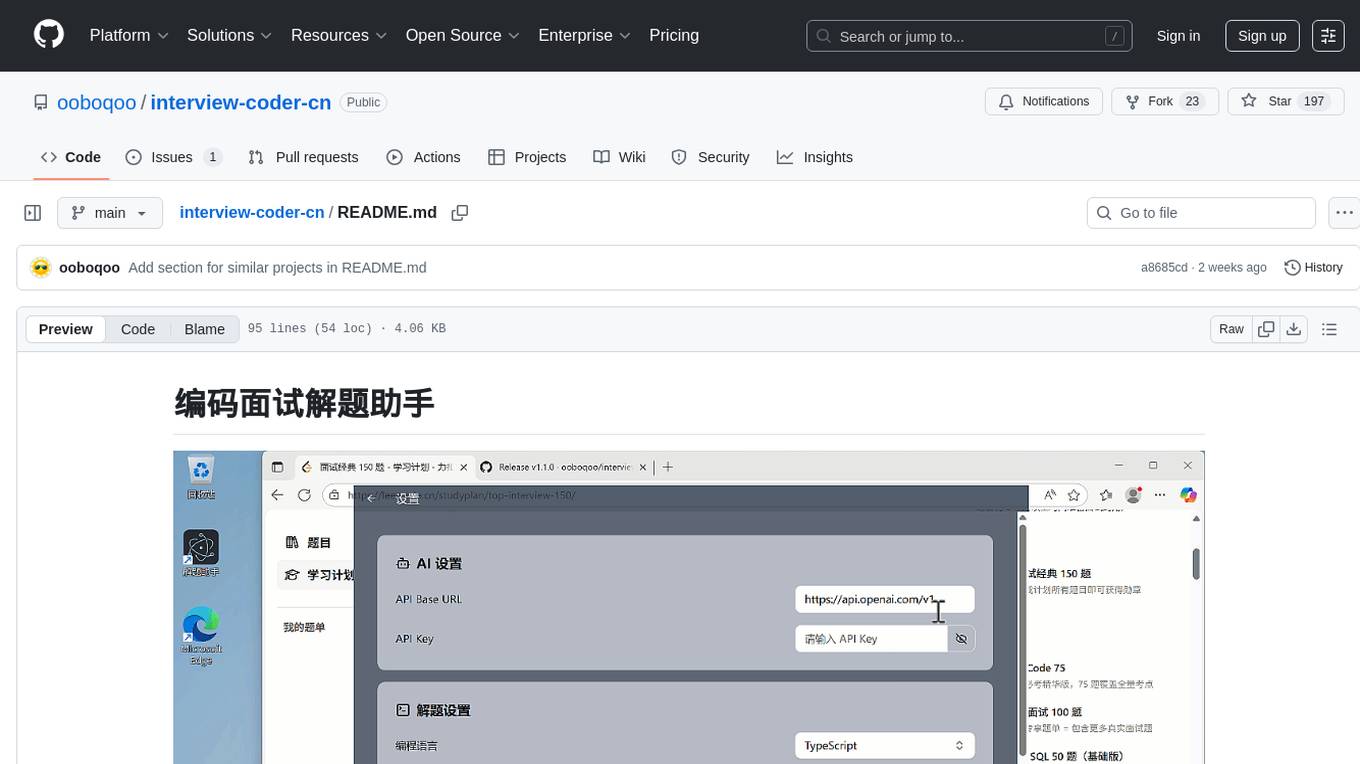

interview-coder-cn

This is a coding problem-solving assistant for Chinese users, tailored to the domestic AI ecosystem, simple and easy to use. It provides real-time problem-solving ideas and code analysis for coding interviews, avoiding detection during screen sharing. Users can also extend its functionality for other scenarios by customizing prompt words. The tool supports various programming languages and has stealth capabilities to hide its interface from interviewers even when screen sharing.

azooKey-Desktop

azooKey-Desktop is an open-source Japanese input system for macOS that incorporates the high-precision neural kana-kanji conversion engine 'Zenzai'. It offers features such as neural kana-kanji conversion, profile prompt, history learning, user dictionary, integration with personal optimization system 'Tuner', 'nice feeling conversion' with LLM, live conversion, and native support for AZIK. The tool is currently in alpha version, and its operation is not guaranteed. Users can install it via `.pkg` file or Homebrew. Development contributions are welcome, and the project has received support from the Information-technology Promotion Agency, Japan (IPA) for the 2024 fiscal year's untapped IT human resources discovery and nurturing project.

aituber-server

AITuberKit server-side is a tool that allows users to receive messages via WebSocket and obtain responses from Open Interpreter. Users can also send files to the server for storage and issue commands to Open Interpreter. The tool is designed for WebSocket operation and provides a default connection URL of `ws://127.0.0.1:8000/ws`. It supports debugging in VSCode with DEBUG_MODE=1. The tool is licensed under KillianLucas/open-interpreter and includes a guide on how to use Open Interpreter.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

quantcoder

QuantCoder is a local-first CLI tool that generates QuantConnect trading algorithms from academic research papers. It uses local LLMs for code generation, refinement, and error fixing. The tool does not require cloud API keys and offers models for reasoning, summarization, and chat. Users can interact with QuantCoder through interactive, CLI, programmatic, and autonomous modes. It also features an evolution mode inspired by AlphaEvolve, backtesting with detailed metrics, library building, and integration with QuantConnect for backtesting and deployment. The tool's architecture includes components for CLI, configuration management, NLP, multi-agent system, evolution engine, self-improving pipeline, library builder, and integration with QuantConnect MCP.

100x-LLM

This repository contains code snippets and examples from the 100x Applied AI cohort lectures. It includes implementations of LLM Workflows, RAG (Retrieval Augmented Generation), Agentic Patterns, Chat Completions with various providers, Function Calling, and more. The repository structure consists of core components like LLM Workflows, RAG Implementations, Agentic Patterns, Chat Completions, Function Calling, Hugging Face Integration, and additional components for various agent implementations, presentation generation, Notion API integration, FastAPI-based endpoints, authentication implementations, and LangChain usage examples.

binglish

binglish is a desktop English learning tool that automatically changes the Bing daily wallpaper while helping users learn new words through AI-generated images, example sentences, and translations. Users can enjoy beautiful scenery, acquire knowledge, and build vocabulary towers. The tool excludes bad words and offers words ranging from CET-4 to GRE difficulty levels. It refreshes every 3 hours and is designed for Windows 10 and above with a resolution of 1920x1080. The AI-generated content may not be completely accurate.

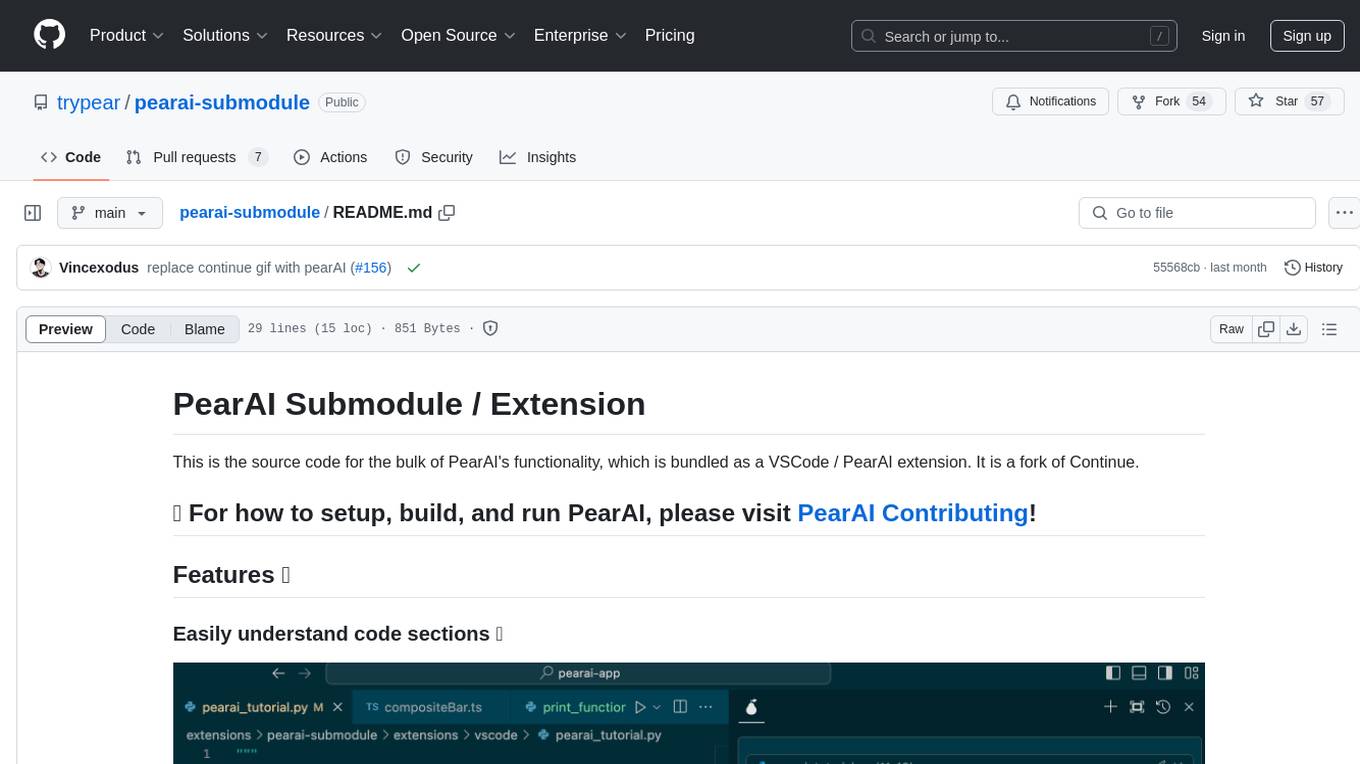

pearai-submodule

PearAI Submodule / Extension is the source code for the bulk of PearAI's functionality, bundled as a VSCode / PearAI extension. It allows users to easily understand code sections, refactor functions, and ask questions by mentioning a file. The tool aims to enhance coding experience and productivity within the VSCode environment.

chunkr

Chunkr is an open-source document intelligence API that provides a production-ready service for document layout analysis, OCR, and semantic chunking. It allows users to convert PDFs, PPTs, Word docs, and images into RAG/LLM-ready chunks. The API offers features such as layout analysis, OCR with bounding boxes, structured HTML and markdown output, and VLM processing controls. Users can interact with Chunkr through a Python SDK, enabling them to upload documents, process them, and export results in various formats. The tool also supports self-hosted deployment options using Docker Compose or Kubernetes, with configurations for different AI models like OpenAI, Google AI Studio, and OpenRouter. Chunkr is dual-licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) and a commercial license, providing flexibility for different usage scenarios.

rag-security-scanner

RAG/LLM Security Scanner is a professional security testing tool designed for Retrieval-Augmented Generation (RAG) systems and LLM applications. It identifies critical vulnerabilities in AI-powered applications such as chatbots, virtual assistants, and knowledge retrieval systems. The tool offers features like prompt injection detection, data leakage assessment, function abuse testing, context manipulation identification, professional reporting with JSON/HTML formats, and easy integration with OpenAI, HuggingFace, and custom RAG systems.

morphic

Morphic is an AI-powered answer engine with a generative UI. It utilizes a stack of Next.js, Vercel AI SDK, OpenAI, Tavily AI, shadcn/ui, Radix UI, and Tailwind CSS. To get started, fork and clone the repo, install dependencies, fill out secrets in the .env.local file, and run the app locally using 'bun dev'. You can also deploy your own live version of Morphic with Vercel. Verified models that can be specified to writers include Groq, LLaMA3 8b, and LLaMA3 70b.

takt

TAKT is a tool designed to coordinate AI coding agents by providing structured review loops, managed prompts, and guardrails to ensure the delivery of quality code. It runs AI agents through YAML-defined workflows with built-in review cycles, allowing users to define tasks, queue them, and let TAKT handle the execution process. The tool is practical for daily development, reproducible with consistent results, and supports multi-agent orchestration with different personas and review criteria. TAKT is built with a focus on architecture, security, and AI antipattern review criteria, providing a comprehensive solution for code quality assurance.

E2B

E2B Sandbox is a secure sandboxed cloud environment made for AI agents and AI apps. Sandboxes give AI agents and apps long-running, secure cloud environments. In these environments, large language models can use the same tools as humans do. For example:

- Cloud browsers

- GitHub repositories and CLIs

- Coding tools like linters, autocomplete, "go-to definition"

- Running LLM-generated code

- Audio & video editing

The E2B sandbox can be connected to any LLM and any AI agent or app.

moling

MoLing is a computer-use and browser-use MCP Server that implements system interaction through operating system APIs, enabling file system operations such as reading, writing, merging, statistics, and aggregation, as well as the ability to execute system commands. It is a dependency-free local office automation assistant. Requiring no installation of any dependencies, MoLing can be run directly and is compatible with multiple operating systems, including Windows, Linux, and macOS. This eliminates the hassle of dealing with environment conflicts involving Node.js, Python, Docker, and other development environments. Command-line operations are dangerous and should be used with caution. MoLing supports features like file system operations, command-line terminal execution, browser control powered by 'github.com/chromedp/chromedp', and future plans for personal PC data organization, document writing assistance, schedule planning, and life assistant features. MoLing has been tested on macOS but may have issues on other operating systems.

ccprompts

ccprompts is a collection of ~70 Claude Code commands for software development workflows with agent generation capabilities. It includes safety validation and can be used directly with Claude Code or adapted for specific needs. The agent template system provides a wizard for creating specialized sub-agents (e.g., security auditors, systems architects) with standardized formatting and proper tool access. The repository is under active development, so caution is advised when using it in production environments.

For similar tasks

ai-commits-intellij-plugin

AI Commits is a plugin for IntelliJ-based IDEs and Android Studio that generates commit messages using git diff and OpenAI. It offers features such as generating commit messages from diff using OpenAI API, computing diff only from selected files and lines in the commit dialog, creating custom prompts for commit message generation, using predefined variables and hints to customize prompts, choosing any of the models available in OpenAI API, setting OpenAI network proxy, and setting custom OpenAI compatible API endpoint.

extensionOS

Extension | OS is an open-source browser extension that brings AI directly to users' web browsers, allowing them to access powerful models like LLMs seamlessly. Users can create prompts, fix grammar, and access intelligent assistance without switching tabs. The extension aims to revolutionize online information interaction by integrating AI into everyday browsing experiences. It offers features like Prompt Factory for tailored prompts, seamless LLM model access, secure API key storage, and a Mixture of Agents feature. The extension was developed to empower users to unleash their creativity with custom prompts and enhance their browsing experience with intelligent assistance.

img-prompt

IMGPrompt is an AI prompt editor tailored for image and video generation tools like Stable Diffusion, Midjourney, DALL·E, FLUX, and Sora. It offers a clean interface for viewing and combining prompts with translations in multiple languages. The tool includes features like smart recommendations, translation, random color generation, prompt tagging, interactive editing, categorized tag display, character count, and localization. Users can enhance their creative workflow by simplifying prompt creation and boosting efficiency.

5ire

5ire is a cross-platform desktop client that integrates a local knowledge base for multilingual vectorization, supports parsing and vectorization of various document formats, offers usage analytics to track API spending, provides a prompts library for creating and organizing prompts with variable support, allows bookmarking of conversations, and enables quick keyword searches across conversations. It is licensed under the GNU General Public License version 3.

sidecar

Sidecar is the AI brains of Aide the editor, responsible for creating prompts, interacting with LLM, and ensuring seamless integration of all functionalities. It includes 'tool_box.rs' for handling language-specific smartness, 'symbol/' for smart and independent symbols, 'llm_prompts/' for creating prompts, and 'repomap' for creating a repository map using page rank on code symbols. Users can contribute by submitting bugs, feature requests, reviewing source code changes, and participating in the development workflow.

labs-ai-tools-for-devs

This repository provides AI tools for developers through Docker containers, enabling agentic workflows. It allows users to create complex workflows using Dockerized tools and Markdown, leveraging various LLM models. The core features include Dockerized tools, conversation loops, multi-model agents, project-first design, and trackable prompts stored in a git repo.

Prompt_Engineering

Prompt Engineering Techniques is a comprehensive repository for learning, building, and sharing prompt engineering techniques, from basic concepts to advanced strategies for leveraging large language models. It provides step-by-step tutorials, practical implementations, and a platform for showcasing innovative prompt engineering techniques. The repository covers fundamental concepts, core techniques, advanced strategies, optimization and refinement, specialized applications, and advanced applications in prompt engineering.

mark

Mark is a CLI tool that allows users to interact with large language models (LLMs) using Markdown format. It enables users to seamlessly integrate GPT responses into Markdown files, supports image recognition, scraping of local and remote links, and image generation. Mark focuses on using Markdown as both a prompt and response medium for LLMs, offering a unique and flexible way to interact with language models for various use cases in development and documentation processes.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.