extensionOS

Imagine a world where everyone can access powerful AI models—LLMs, generative image models, and speech recognition—directly in their web browser. Integrating AI into daily browsing will revolutionise online interactions, offering instant, intelligent assistance tailored to individual needs.

Stars: 73

Extension | OS is an open-source browser extension that brings AI directly to users' web browsers, allowing them to access powerful models like LLMs seamlessly. Users can create prompts, fix grammar, and access intelligent assistance without switching tabs. The extension aims to revolutionize online information interaction by integrating AI into everyday browsing experiences. It offers features like Prompt Factory for tailored prompts, seamless LLM model access, secure API key storage, and a Mixture of Agents feature. The extension was developed to empower users to unleash their creativity with custom prompts and enhance their browsing experience with intelligent assistance.

README:

⭐️ Welcome to Extension | OS

Tired of the endless back-and-forth with ChatGPT, Claude, and other AI tools just to repeat the same task over and over?

You're not alone! I felt the same frustration, so I built a solution: Extension | OS—an open-source browser extension that makes AI accessible directly where you need it.

Imagine: You create a prompt like "Fix the grammar for this text," right-click, and job done—no more switching tabs, no more wasted time.

Imagine a world where every user has access to powerful models (LLMs and more) directly within their web browser. By integrating AI into everyday internet browsing, we can revolutionise the way people interact with information online, providing them with instant, intelligent assistance tailored to their needs.

Join an exclusive group of up to 100 early adopters and be among the first to experience the future of AI-powered browsing!

Select, right-click and select the functionality—it's that easy!

Pick your favorite provider and select the model that excites you the most.

Customize your look and feel, and unleash your creativity with your own prompts!

Mixture of Agents (pre-release)

Use my affiliate code when you sign up on VAPI: https://vapi.ai/?aff=extension-os

- Clone the extension or download the latest release.

- Open the Chrome browser and navigate to chrome://extensions.

- Enable the developer mode by clicking the toggle switch in the top right corner of the page.

- Unpack/unzip the chrome-mv3-prod.zip file.

- Click on the "Load unpacked" button and select the folder you just unzipped.

- The options page opens automatically; insert your API keys.

- Prompt Factory: Effortlessly Tailor Every Prompt to Your Needs with Our Standard Installation.

- Prompt Factory: Choose the Functionality for Every Prompt: From Copy-Pasting to Opening a New Sidebar.

- Seamless Integration: Effortlessly access any LLM model directly from your favorite website.

- Secure Storage: Your API key is securely stored in the browser's local storage, ensuring it never leaves your device.

- [Beta] Mixture of Agents: Experience the innovative Mixture Of Agents feature.
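To illustrate the secure-storage point above, here is a minimal TypeScript sketch of saving and reading a per-provider API key through a small async key-value interface. It is not the extension's actual code: `StorageAreaLike`, `MemoryStorage`, `saveApiKey`, `loadApiKey`, and the `apiKey:<provider>` naming scheme are all hypothetical. In a Manifest V3 extension, the promise-based `chrome.storage.local` should fit this interface, but the real extension may use a different wrapper.

```typescript
// Minimal async key-value interface (hypothetical name).
// Assumption: a promise-based browser storage area can be passed in here.
interface StorageAreaLike {
  get(key: string): Promise<Record<string, unknown>>;
  set(items: Record<string, unknown>): Promise<void>;
}

// In-memory stand-in so the sketch also runs outside a browser.
class MemoryStorage implements StorageAreaLike {
  private data = new Map<string, unknown>();

  async get(key: string): Promise<Record<string, unknown>> {
    return this.data.has(key) ? { [key]: this.data.get(key) } : {};
  }

  async set(items: Record<string, unknown>): Promise<void> {
    for (const [k, v] of Object.entries(items)) {
      this.data.set(k, v);
    }
  }
}

// Hypothetical naming scheme: one storage entry per provider.
function keyFor(provider: string): string {
  return `apiKey:${provider}`;
}

// Store the key locally, trimming accidental whitespace from copy-paste.
async function saveApiKey(
  store: StorageAreaLike,
  provider: string,
  apiKey: string
): Promise<void> {
  await store.set({ [keyFor(provider)]: apiKey.trim() });
}

// Return the stored key, or undefined when nothing usable is stored.
async function loadApiKey(
  store: StorageAreaLike,
  provider: string
): Promise<string | undefined> {
  const result = await store.get(keyFor(provider));
  const value = result[keyFor(provider)];
  return typeof value === "string" && value.length > 0 ? value : undefined;
}
```

Keeping the storage area behind an interface means the same functions can be exercised in tests with `MemoryStorage` and wired to the browser's storage at runtime.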

On the morning of July 27th, 2024, I began an exciting journey by joining the SF Hackathon x Build Club. After months of refining the concept in my mind, I decided it was time to bring it to life. I worked on enhancing my idea, updating what I had already created, and empowering everyone to unleash their creativity with custom prompts.

All your data is stored locally on your hard drive.

/Users/<your-username>/Library/Application Support/Google/Chrome/Default/Sync Extension Settings/

To utilize the localhost option and perform LLM inference, you must set up a local Ollama server. You can download and install Ollama along with the CLI here.

Example:

ollama pull llama3.1

Example:

OLLAMA_ORIGINS=chrome-extension://* ollama serve

Important: You need to configure the environment variable OLLAMA_ORIGINS to chrome-extension://* to permit requests from the Chrome extension. If OLLAMA_ORIGINS is not correctly configured, you will encounter an error in the Chrome extension.
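To show where the origin check comes into play, here is a minimal TypeScript sketch of a non-streaming call to a local Ollama server. It assumes the default address `http://localhost:11434` and Ollama's `/api/generate` endpoint, which returns the generated text in a `response` field. `FetchLike` and `generateWithOllama` are hypothetical names, and this is not necessarily how the extension itself issues requests.

```typescript
// Narrow description of the parts of fetch this sketch relies on (hypothetical type).
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Send one prompt to Ollama and return the generated text.
// Assumption: the server listens on the default port 11434.
async function generateWithOllama(
  fetchFn: FetchLike,
  model: string,
  prompt: string,
  baseUrl: string = "http://localhost:11434"
): Promise<string> {
  const res = await fetchFn(`${baseUrl}/api/generate`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // stream: false asks for a single JSON object instead of a stream of chunks.
    body: JSON.stringify({ model, prompt, stream: false }),
  });

  if (!res.ok) {
    // A rejected origin is one possible cause of a non-2xx status here.
    throw new Error(
      `Ollama request failed with HTTP ${res.status}; check that OLLAMA_ORIGINS allows this extension`
    );
  }

  const data = (await res.json()) as { response?: unknown };
  if (typeof data.response !== "string") {
    throw new Error("Unexpected Ollama response shape");
  }
  return data.response;
}
```

In a browser or extension context, the global `fetch` can be passed as `fetchFn`, for example `generateWithOllama(fetch, "llama3.1", "Fix the grammar for this text: ...")`.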

Security: the * in chrome-extension://* should be replaced with the extension ID. If you installed Extension | OS from the Chrome Store, use chrome-extension://bahjnakiionbepnlbogdkojcehaeefnp

Run launchctl setenv to set OLLAMA_ORIGINS.

launchctl setenv OLLAMA_ORIGINS "chrome-extension://bahjnakiionbepnlbogdkojcehaeefnp"
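For a different extension ID (for example, an unpacked development build), the value to put in OLLAMA_ORIGINS can be derived as in the sketch below. It relies on the fact that Chrome extension IDs are 32 characters drawn from the letters a to p. `ollamaOriginFor` is a hypothetical helper, not part of the extension.

```typescript
// Chrome extension IDs are 32 lowercase characters in the range a to p.
const EXTENSION_ID_PATTERN = /^[a-p]{32}$/;

// Build the exact origin string for OLLAMA_ORIGINS (hypothetical helper).
// Rejects wildcards and malformed IDs so an over-permissive value is not produced by accident.
function ollamaOriginFor(extensionId: string): string {
  if (!EXTENSION_ID_PATTERN.test(extensionId)) {
    throw new Error(`Not a valid Chrome extension ID: ${extensionId}`);
  }
  return `chrome-extension://${extensionId}`;
}

// Example with the ID quoted above.
const storeOrigin = ollamaOriginFor("bahjnakiionbepnlbogdkojcehaeefnp");
console.log(storeOrigin);
```

Inside the extension itself, `chrome.runtime.id` holds the current ID; it is also shown on the chrome://extensions page when developer mode is enabled.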

Setting environment variables on Mac (Ollama)

The Ollama server can also be run in a Docker container. The container should have the OLLAMA_ORIGINS environment variable set to chrome-extension://*.

Run docker run with the -e flag to set the OLLAMA_ORIGINS environment variable:

docker run -e OLLAMA_ORIGINS="chrome-extension://bahjnakiionbepnlbogdkojcehaeefnp" -d -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama

Move it somewhere else ASAP:

- https://github.com/rowyio/roadmap?tab=readme-ov-file#step-1-setup-backend-template

- https://canny.io

- https://sleekplan.com/

- [ ] Logging: Determine a location to store log files.

- [ ] Prompt Factory: Add the ability to create custom prompts.

- [ ] Add the ability to chat within the browser.

- [ ] Encryption of keys: they are stored locally; nonetheless, as this is my first Chrome extension, I need to research more about how they can be accessed.

- [ ] Automated Testing

- [ ] Investigate if Playwright supports Chrome extension testing.

- [ ] Automated Tagging / Release

- [ ] Locale

- [ ] UI for the Prompt Factory is not intuitive and the "save all" button UX is cr@p.

- [ ] The sidebar API doesn't work after the storage API is called (User Interaction must be done)

- [ ] Move files to a /src folder to improve organization.

- [ ] Strategically organize the codebase structure.

- [ ] Decide on a package manager: npm, pnpm, or yarn.

- [ ] Workflow to update the models automatically.

- [ ] Prompt Factory: Add the ability to build workflows.

- [ ] Prompt Factory: Add the option to select which LLM to use for each prompt.

- [ ] Remove all the silly comments, maybe one day....

- ElevenLabs

- Build Club -> Hackathon Organiser

- Leonardo.ai -> Icon generated with the phoenix model

- Canva -> The other images not generated with AI

- ShadCn -> All the UI?

- Plasmo -> The Framework

- Groq -> Extra credits

- Icons -> icons8

- https://shadcnui-expansions.typeart.cc/

- Adding the ability to specify a custom URL

- Adding the uninstall hook to understand what we can improve.

- Fixed the X,Y positioning on pages like LinkedIn, Reddit, and so on.

- The declarativeNetRequest has been removed to enhance the release lifecycle in light of Chrome Store authorization requirements. Ollama continues to be fully supported, and detailed configuration instructions can be found in the README.

- Changed the introductory GIF demonstrating how to use the Extension | OS.

- PromptFactory: Implemented a notification to inform users that any selected text will be automatically appended to the end of the prompt.

- Settings: Using Switch vs CheckBoxes

- Implemented optional (disabled by default) anonymous tracking to monitor usage patterns, including the most frequently used models and vendors.

- SelectionMenu: Now accessible on Reddit as well! (Consider prefixing all Tailwind classes for consistency)

- PromptSelector: Resolved all React warnings for a smoother experience

- Verified that pre-selection functions correctly (Thanks to E2E testing)

- Added more instructions for Ollama

- localhost: Add the ability to specify the model by input text (vs select box)

- Fixed a useEffect bug

- SelectionMenu: Now you can choose to enable/disable

- SelectionMenu: When a key is pressed (e.g. backspace to remove, or CTRL/CMD + C to copy), the menu automatically disappears

- Development: Integrated Playwright for testing and added a suite of automated tests

- SelectionMenu: Fixed a bug that caused the menu to vanish unexpectedly after the onMouseUp event, leading to confusion regarding item selection for users.

- SelectionMenu: Adjusted the visual gap to provide more space to the user.

- UI: Eliminated the conflicting success/loading state for a clearer user experience.

- SelectionMenu: Refined the triggering mechanism for improved responsiveness.

- SelectionMenu: Reduced the size for a more compact design.

- SelectionMenu: Automatically refreshes items immediately after the user updates the prompts.

- Fixed grammar issues, thanks to Luca.

- Introduced a new menu, courtesy of Denis.

- The new menu currently does not support phone calls (feature coming soon).

- Enhanced UI (tooltips are now more noticeable) thanks to Juanjo (We Move Experience) and Agostina (PepperStudio)

- Prompt Factory: Utilizing AutoTextArea for improved prompt display

- Prompt Factory: Removed the ID to improve user experience (non-tech users)

- System: Split the systemPrompt from the userPrompt.

- UX: Small improvements and removed the complicated items

- General: Free tier exhaustion. We haven't got a sponsor (yet) to support our community users.

- Google: Added identity, identity.email to enable automatic log-in using your google credentials.

- General: Introduced a FREE Tier for users to explore the Extension | OS without needing to understand API Keys.

- Development: Implemented the CRX Public Key to maintain a consistent extension ID across re-installations during development.

- Development: Integrated OAUTH for user authentication when accessing the FREE tier.

- Permissions: Added identity permissions to facilitate user identity retrieval.

- Showcase: Updated images for improved visual presentation.

- Prompt Factory: Set Extension | OS as the default model, enabling users to utilize the extension without prior knowledge of API Key setup.

- Context Menu: Added a new right-click option for seamless access to configuration settings.

- Context Menu: Improved the layout and organization of the context menu for enhanced user experience.

- Prompt Factory: Introduced a comprehensive sheet that details the context and functionality of each feature.

- Prompt Factory: Implemented a clickable icon to indicate that the tooltip contains additional information when clicked.

- Bug fixes

- Clean up codebase

- UX for the functionality improved

- Removed an unnecessary dependency to comply with Chrome Store publication guidelines.

- Introduced a new icon.

- Implemented a loading state.

- Fixed an issue where Reddit visibility was broken.

- Adding missing models from together.ai

- Adding missing models from groq

- Updated About page

- MoA: Add the ability to use a custom prompt.

- Popup: UI revamped

- Popup: New Presentation image and slogan

- Options: Unified fonts

- Options: Minor UI updates

- Content: Better error handling and UX (users get redirected to the options page when the API key is missing)

- Fix for together.ai (it was using a non-chat model)

- Vapi affiliate link (help me maintain this extension, sign up with the link)

- Vapi Enhancements: Prompts now support selecting a specific phone number to call.

- Vapi Enhancements: Prompts can now include a custom initial message for the conversation.

- Vapi Enhancements: Now every prompt can be customised using the

- UI: Section for specific configurations

- Hotfix: declarativeNetRequest was intercepting every localhost request.

- Added github branch protection.

- Changed the data structure to achieve a clearer and more abstract way to call functions

- Function to clean the data structure to adapt to chrome.contextMenus.CreateProperties

- use "side_" as hack to open the sidebar. WHY: The sidebar.open doesn't work after we call the storage.get

- Allowing to change the default prompts

- chrome.runtime.openOptionsPage() opens only in production environment (onInstalled)

- Improved UI (switched to dark theme)

- Allowing to change the functionality; the "side_" bug is annoying as it is overcomplicating the codebase.

- How to install and start polishing the repository

- Check the demo video

- Ensure that the open.sidePanel is always initialized before the Plasmo Storage.

- We currently have two menus that function similarly but not identically; we need to implement a more efficient solution to consolidate them into one.

- The Plasmo handler may stop functioning unexpectedly, without errors, if a response is not returned; always return a response to prevent this issue.
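One way to guard against the missing-response problem in the last note is to wrap handler logic so that a reply is sent on both the success and the failure path. The TypeScript sketch below uses local stand-in types and does not import Plasmo. It assumes a handler receives `(req, res)` and replies through `res.send(...)`; `alwaysRespond`, `Reply`, `HandlerRequest`, and `HandlerResponse` are hypothetical names.

```typescript
// Local stand-ins shaped like a request/response message handler.
// Assumption: the real framework passes (req, res) and expects res.send(...) to be called.
interface HandlerRequest<T> {
  body?: T;
}
interface HandlerResponse<R> {
  send(body: R): void;
}
type Handler<T, R> = (
  req: HandlerRequest<T>,
  res: HandlerResponse<R>
) => Promise<void>;

// Envelope so the caller can tell success from failure.
type Reply<R> = { ok: true; data: R } | { ok: false; error: string };

// Hypothetical helper: run the business logic, then send exactly one reply,
// whether the logic resolved or threw.
function alwaysRespond<T, R>(
  logic: (body: T | undefined) => Promise<R>
): Handler<T, Reply<R>> {
  return async (req, res) => {
    let reply: Reply<R>;
    try {
      reply = { ok: true, data: await logic(req.body) };
    } catch (err) {
      reply = {
        ok: false,
        error: err instanceof Error ? err.message : String(err),
      };
    }
    res.send(reply);
  };
}
```

With this shape, the business logic only returns a value or throws, and the wrapper owns the single `res.send` call, so a forgotten reply in one branch cannot leave the caller waiting.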

Alternative AI tools for extensionOS

Similar Open Source Tools

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

GPThemes

GPThemes is a GitHub repository that provides a collection of customizable themes for various programming languages and text editors. It offers a wide range of color schemes and styling options to enhance the visual appearance of code editors and terminals. Users can easily browse through the available themes and apply them to their preferred development environment to personalize the coding experience. With GPThemes, developers can quickly switch between different themes to find the one that best suits their preferences and workflow, making coding more enjoyable and visually appealing.

ComfyUIMini

ComfyUI Mini is a lightweight and mobile-friendly frontend designed to run ComfyUI workflows. It allows users to save workflows locally on their device or PC, easily import workflows, and view generation progress information. The tool requires ComfyUI to be installed on the PC and a modern browser with WebSocket support on the mobile device. Users can access the WebUI by running the app and connecting to the local address of the PC. ComfyUI Mini provides a simple and efficient way to manage workflows on mobile devices.

Local-Multimodal-AI-Chat

Local Multimodal AI Chat is a multimodal chat application that integrates various AI models to manage audio, images, and PDFs seamlessly within a single interface. It offers local model processing with Ollama for data privacy, integration with OpenAI API for broader AI capabilities, audio chatting with Whisper AI for accurate voice interpretation, and PDF chatting with Chroma DB for efficient PDF interactions. The application is designed for AI enthusiasts and developers seeking a comprehensive solution for multimodal AI technologies.

AIOLists

AIOLists is a stateless open source list management addon for Stremio that allows users to import and manage lists from various sources in one place. It offers unified search, metadata customization, Trakt integration, MDBList integration, external lists import, list sorting, customization options, watchlist updates, RPDB support, genre filtering, discovery lists, and shareable configurations. The addon aims to enhance the list management experience for Stremio users by providing a comprehensive set of features and functionalities.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

burpference

Burpference is an open-source extension designed to capture in-scope HTTP requests and responses from Burp's proxy history and send them to a remote LLM API in JSON format. It automates response capture, integrates with APIs, optimizes resource usage, provides color-coded findings visualization, offers comprehensive logging, supports native Burp reporting, and allows flexible configuration. Users can customize system prompts, API keys, and remote hosts, and host models locally to prevent high inference costs. The tool is ideal for offensive web application engagements to surface findings and vulnerabilities.

nextjs-ollama-llm-ui

This web interface provides a user-friendly and feature-rich platform for interacting with Ollama Large Language Models (LLMs). It offers a beautiful and intuitive UI inspired by ChatGPT, making it easy for users to get started with LLMs. The interface is fully local, storing chats in local storage for convenience, and fully responsive, allowing users to chat on their phones with the same ease as on a desktop. It features easy setup, code syntax highlighting, and the ability to easily copy codeblocks. Users can also download, pull, and delete models directly from the interface, and switch between models quickly. Chat history is saved and easily accessible, and users can choose between light and dark mode. To use the web interface, users must have Ollama downloaded and running, and Node.js (18+) and npm installed. Installation instructions are provided for running the interface locally. Upcoming features include the ability to send images in prompts, regenerate responses, import and export chats, and add voice input support.

DevDocs

DevDocs is a platform designed to simplify the process of digesting technical documentation for software engineers and developers. It automates the extraction and conversion of web content into markdown format, making it easier for users to access and understand the information. By crawling through child pages of a given URL, DevDocs provides a streamlined approach to gathering relevant data and integrating it into various tools for software development. The tool aims to save time and effort by eliminating the need for manual research and content extraction, ultimately enhancing productivity and efficiency in the development process.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

For similar tasks

ai-commits-intellij-plugin

AI Commits is a plugin for IntelliJ-based IDEs and Android Studio that generates commit messages using git diff and OpenAI. It offers features such as generating commit messages from diff using OpenAI API, computing diff only from selected files and lines in the commit dialog, creating custom prompts for commit message generation, using predefined variables and hints to customize prompts, choosing any of the models available in OpenAI API, setting OpenAI network proxy, and setting custom OpenAI compatible API endpoint.

img-prompt

IMGPrompt is an AI prompt editor tailored for image and video generation tools like Stable Diffusion, Midjourney, DALL·E, FLUX, and Sora. It offers a clean interface for viewing and combining prompts with translations in multiple languages. The tool includes features like smart recommendations, translation, random color generation, prompt tagging, interactive editing, categorized tag display, character count, and localization. Users can enhance their creative workflow by simplifying prompt creation and boosting efficiency.

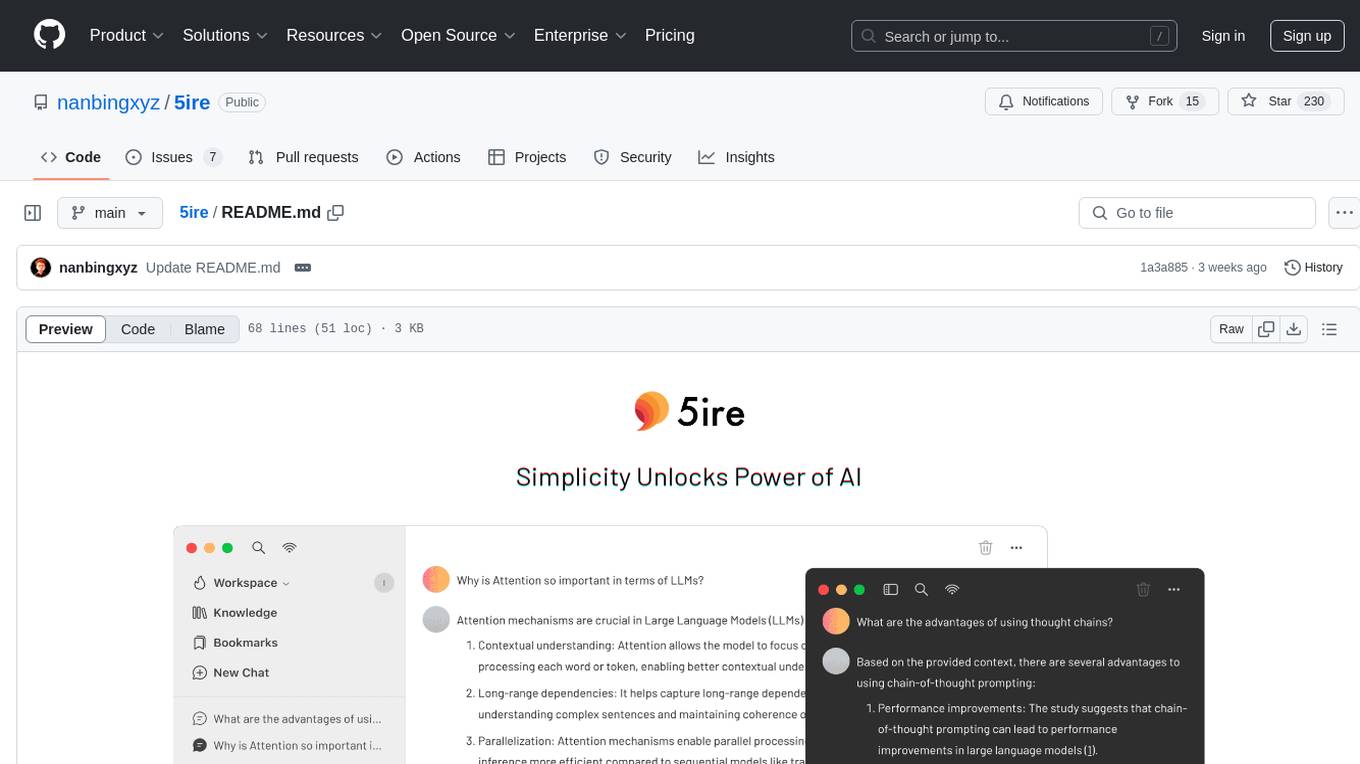

5ire

5ire is a cross-platform desktop client that integrates a local knowledge base for multilingual vectorization, supports parsing and vectorization of various document formats, offers usage analytics to track API spending, provides a prompts library for creating and organizing prompts with variable support, allows bookmarking of conversations, and enables quick keyword searches across conversations. It is licensed under the GNU General Public License version 3.

sidecar

Sidecar is the AI brains of Aide the editor, responsible for creating prompts, interacting with LLM, and ensuring seamless integration of all functionalities. It includes 'tool_box.rs' for handling language-specific smartness, 'symbol/' for smart and independent symbols, 'llm_prompts/' for creating prompts, and 'repomap' for creating a repository map using page rank on code symbols. Users can contribute by submitting bugs, feature requests, reviewing source code changes, and participating in the development workflow.

labs-ai-tools-for-devs

This repository provides AI tools for developers through Docker containers, enabling agentic workflows. It allows users to create complex workflows using Dockerized tools and Markdown, leveraging various LLM models. The core features include Dockerized tools, conversation loops, multi-model agents, project-first design, and trackable prompts stored in a git repo.

Prompt_Engineering

Prompt Engineering Techniques is a comprehensive repository for learning, building, and sharing prompt engineering techniques, from basic concepts to advanced strategies for leveraging large language models. It provides step-by-step tutorials, practical implementations, and a platform for showcasing innovative prompt engineering techniques. The repository covers fundamental concepts, core techniques, advanced strategies, optimization and refinement, specialized applications, and advanced applications in prompt engineering.

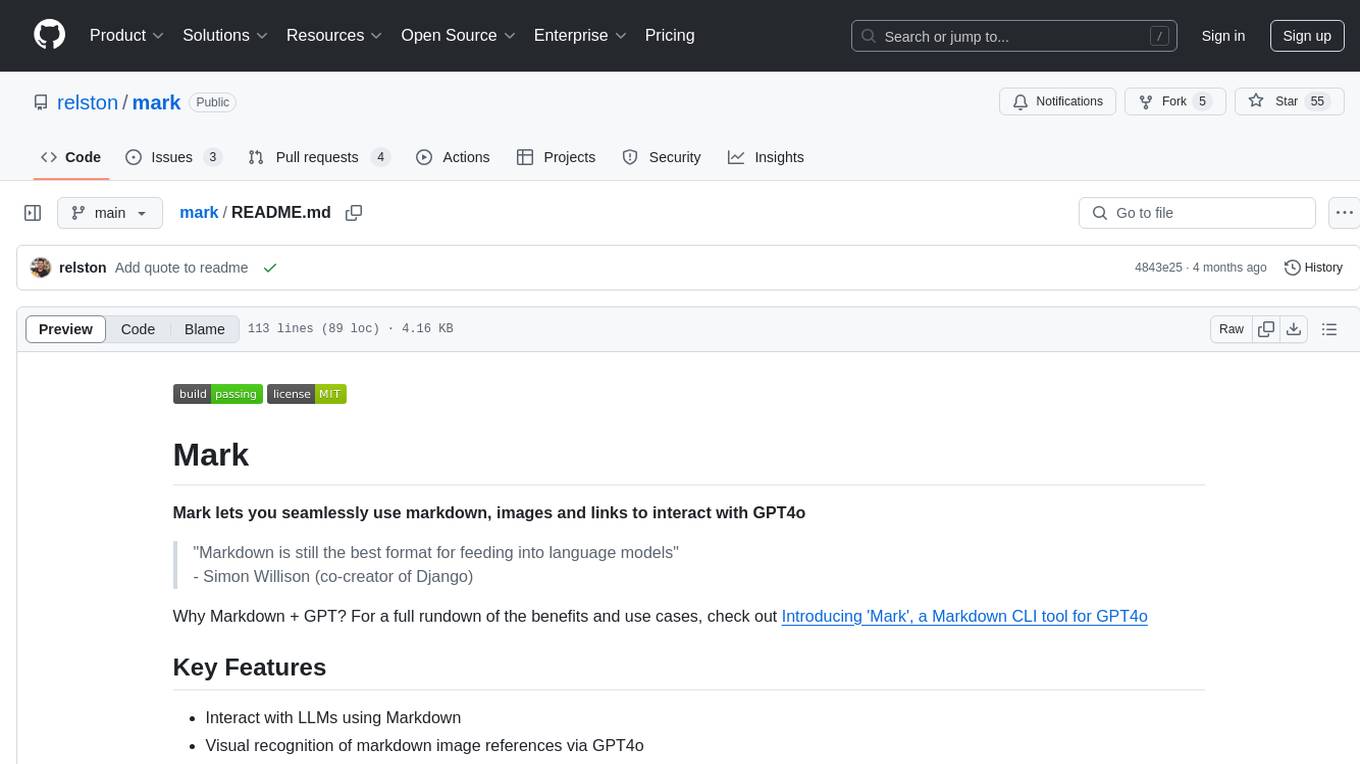

mark

Mark is a CLI tool that allows users to interact with large language models (LLMs) using Markdown format. It enables users to seamlessly integrate GPT responses into Markdown files, supports image recognition, scraping of local and remote links, and image generation. Mark focuses on using Markdown as both a prompt and response medium for LLMs, offering a unique and flexible way to interact with language models for various use cases in development and documentation processes.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.