ai

None

Stars: 715

This repository contains various packages and demo apps related to consuming Cloudflare's AI offerings on the client-side. It is a monorepo powered by Nx and Changesets. The repository provides custom providers for enabling Workers AI's models and AI Gateway Provider for Vercel AI SDK. It also includes guidelines for local development, testing, linting, creating new demo apps, contributing, and the release process.

README:

This repository contains various packages and demo apps related consuming Cloudflare's AI offerings on the client-side. It is a monorepo powered by Nx and Changesets.

-

workers-ai-provider: A custom provider that enables Workers AI's models for the Vercel AI SDK. -

ai-gateway-provider: AI Gateway Provider for Vercel AI SDK.

-

Clone the repository.

git clone [email protected]:cloudflare/ai.git

-

Install Dependencies.

From the root directory, run:

cd ai pnpm install -

Develop.

To start a development server for a specific app (for instance,

tool-calling):pnpm nx dev tool-calling

Ideally all commands should be executed from the repository root with the

pnpm nxprefix. This will ensure that the dependency graph is managed correctly, e.g. if one package relies on the output of an other. -

Testing and Linting.

-

To execute your continuous integration tests for a specific project (e.g.,

workers-ai-provider):pnpm nx test:ci workers-ai-provider

-

To lint a specific project:

pnpm nx lint my-project

-

To run a more comprehensive sweep of tasks (lint, tests, type checks, build) against one or more projects:

pnpm nx run-many -t lint test:ci type-check build -p "my-project other-project"

- Other Nx Tasks.

-

build: Compiles a project or a set of projects. -

test: Runs project tests in watch mode. -

test:ci: Runs tests in CI mode (no watch). -

test:smoke: Runs smoke tests. -

type-check: Performs TypeScript type checks.

In order to scaffold a new demo app, you can use the create-demo script. This script will create a new demo app in the demos directory.

pnpm create-demo <demo-name>After creating the app, pnpm install will be run to install the dependencies, and pnpm nx cf-typegen <demo-name> will be run to generate the types for the demo app. Then it's simply a case of starting the app with:

pnpm nx dev <demo-name>We appreciate contributions and encourage pull requests. Please follow these guidelines:

- Project Setup: After forking or cloning, install dependencies with

pnpm install. - Branching: Create a new branch for your feature or fix.

- Making Changes:

- Add or update relevant tests.

- On pushing your changes, automated tasks will be run (courtesy of a Husky pre-push hook).

- Changesets: If your changes affect a published package, run

pnpm changesetto create a changeset. Provide a concise summary of your changes in the changeset prompt. - Pull Request: Submit a pull request to the

mainbranch. The team will review it and merge if everything is in order.

This repository uses Changesets to manage versioning and publication:

-

Changeset Creation: Whenever a change is made that warrants a new release (e.g., bug fixes, new features), run:

pnpm changeset

Provide a clear description of the changes.

-

Merging: Once the changeset is merged into

main, our GitHub Actions workflows will:

- Detect the changed packages, and create a Version Packages PR.

- Increment versions automatically (via Changesets).

- Publish any package that has a version number to npm. (Demos and other internal items do not require versioning.)

-

Publication: The release workflow (

.github/workflows/release.yml) will run on every push tomain. It ensures each published package is tagged and released on npm. Any package with a version field in itspackage.jsonwill be included in this process.

For any queries or guidance, kindly open an issue or submit a pull request. We hope this structure and process help you to contribute effectively.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai

Similar Open Source Tools

ai

This repository contains various packages and demo apps related to consuming Cloudflare's AI offerings on the client-side. It is a monorepo powered by Nx and Changesets. The repository provides custom providers for enabling Workers AI's models and AI Gateway Provider for Vercel AI SDK. It also includes guidelines for local development, testing, linting, creating new demo apps, contributing, and the release process.

temporal-ai-agent

Temporal AI Agent is a demo showcasing a multi-turn conversation with an AI agent running inside a Temporal workflow. The agent collects information towards a goal using a simple DSL input. It is currently set up to search for events, book flights around those events, and create an invoice for those flights. The AI agent responds with clarifications and prompts for missing information. Users can configure the agent to use ChatGPT 4o or a local LLM via Ollama. The tool requires Rapidapi key for sky-scrapper to find flights and a Stripe key for creating invoices. Users can customize the agent by modifying tool and goal definitions in the codebase.

dravid

Dravid (DRD) is an advanced, AI-powered CLI coding framework designed to follow user instructions until the job is completed, including fixing errors. It can generate code, fix errors, handle image queries, manage file operations, integrate with external APIs, and provide a development server with error handling. Dravid is extensible and requires Python 3.7+ and CLAUDE_API_KEY. Users can interact with Dravid through CLI commands for various tasks like creating projects, asking questions, generating content, handling metadata, and file-specific queries. It supports use cases like Next.js project development, working with existing projects, exploring new languages, Ruby on Rails project development, and Python project development. Dravid's project structure includes directories for source code, CLI modules, API interaction, utility functions, AI prompt templates, metadata management, and tests. Contributions are welcome, and development setup involves cloning the repository, installing dependencies with Poetry, setting up environment variables, and using Dravid for project enhancements.

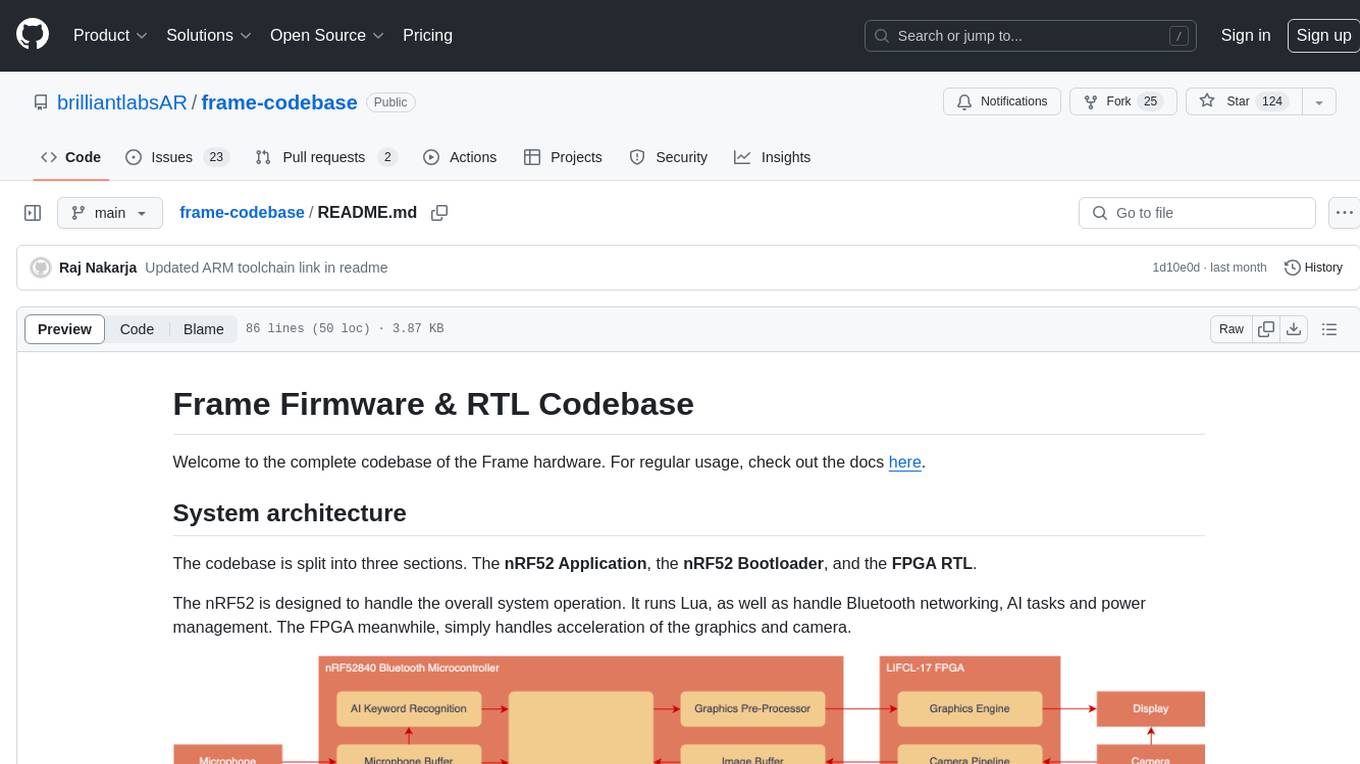

frame-codebase

The Frame Firmware & RTL Codebase is a comprehensive repository containing code for the Frame hardware system architecture. It includes sections for nRF52 Application, nRF52 Bootloader, and FPGA RTL. The nRF52 handles system operation, Lua scripting, Bluetooth networking, AI tasks, and power management, while the FPGA accelerates graphics and camera processing. The repository provides instructions for firmware development, debugging in VSCode, and FPGA development using tools like ARM GCC Toolchain, nRF Command Line Tools, Yosys, Project Oxide, and nextpnr. Users can build and flash projects for nRF52840 DK, modify FPGA RTL, and access pre-built accelerators bundled in the repo.

ai-deadlines

AI Deadlines is a web app that displays submission deadlines for top AI conferences like NeurIPS and ICLR. It helps researchers know when to submit their papers. The data is fetched from a GitHub repository and updated automatically using a CRON job. The project is based on an existing repository and features a new UI. Users can contribute by updating conference deadlines in the provided YAML file. The app can be run locally with Node.js and npm or deployed using Docker. It is built with Vite, TypeScript, React, shadcn-ui, and Tailwind CSS. The project is licensed under MIT.

quivr-mobile

Quivr-Mobile is a React Native mobile application that allows users to upload files and engage in chat conversations using the Quivr backend API. It supports features like file upload and chatting with a language model about uploaded data. The project uses technologies like React Native, React Native Paper, and React Native Navigation. Users can follow the installation steps to set up the client and contribute to the project by opening issues or submitting pull requests following the existing coding style.

grafana-llm-app

This repository contains separate packages for Grafana LLM Plugin and the @grafana/llm package for interfacing with it. The packages are tightly coupled and developed together with identical dependencies. The repository provides instructions for developing the packages, including backend and frontend development, testing, and release processes.

leptonai

A Pythonic framework to simplify AI service building. The LeptonAI Python library allows you to build an AI service from Python code with ease. Key features include a Pythonic abstraction Photon, simple abstractions to launch models like those on HuggingFace, prebuilt examples for common models, AI tailored batteries, a client to automatically call your service like native Python functions, and Pythonic configuration specs to be readily shipped in a cloud environment.

ai-digest

ai-digest is a CLI tool designed to aggregate your codebase into a single Markdown file for use with Claude Projects or custom ChatGPTs. It aggregates all files in the specified directory and subdirectories, ignores common build artifacts and configuration files, and provides options for whitespace removal and custom ignore patterns. The tool is useful for preparing codebases for AI analysis and assistance.

LLM-Merging

LLM-Merging is a repository containing starter code for the LLM-Merging competition. It provides a platform for efficiently building LLMs through merging methods. Users can develop new merging methods by creating new files in the specified directory and extending existing classes. The repository includes instructions for setting up the environment, developing new merging methods, testing the methods on specific datasets, and submitting solutions for evaluation. It aims to facilitate the development and evaluation of merging methods for LLMs.

PrAIvateSearch

PrAIvateSearch is a NextJS web application that aims to implement similar features to SearchGPT in an open-source, local, and private way. It allows users to search the web using their own AI model. The application provides a user-friendly interface for interacting with the AI model and accessing search results. PrAIvateSearch is designed to be easy to install and use, with detailed instructions provided in the readme file. The project is in beta stage and welcomes contributions from the community to improve and enhance its functionality. Users are encouraged to support the project through funding to help it grow and continue to be maintained as an open-source tool under the MIT license.

redbox

Redbox is a retrieval augmented generation (RAG) app that uses GenAI to chat with and summarise civil service documents. It increases organisational memory by indexing documents and can summarise reports read months ago, supplement them with current work, and produce a first draft that lets civil servants focus on what they do best. The project uses a microservice architecture with each microservice running in its own container defined by a Dockerfile. Dependencies are managed using Python Poetry. Contributions are welcome, and the project is licensed under the MIT License. Security measures are in place to ensure user data privacy and considerations are being made to make the core-api secure.

TypeGPT

TypeGPT is a Python application that enables users to interact with ChatGPT or Google Gemini from any text field in their operating system using keyboard shortcuts. It provides global accessibility, keyboard shortcuts for communication, and clipboard integration for larger text inputs. Users need to have Python 3.x installed along with specific packages and API keys from OpenAI for ChatGPT access. The tool allows users to run the program normally or in the background, manage processes, and stop the program. Users can use keyboard shortcuts like `/ask`, `/see`, `/stop`, `/chatgpt`, `/gemini`, `/check`, and `Shift + Cmd + Enter` to interact with the application in any text field. Customization options are available by modifying files like `keys.txt` and `system_prompt.txt`. Contributions are welcome, and future plans include adding support for other APIs and a user-friendly GUI.

ai-models

The `ai-models` command is a tool used to run AI-based weather forecasting models. It provides functionalities to install, run, and manage different AI models for weather forecasting. Users can easily install and run various models, customize model settings, download assets, and manage input data from different sources such as ECMWF, CDS, and GRIB files. The tool is designed to optimize performance by running on GPUs and provides options for better organization of assets and output files. It offers a range of command line options for users to interact with the models and customize their forecasting tasks.

PolyMind

PolyMind is a multimodal, function calling powered LLM webui designed for various tasks such as internet searching, image generation, port scanning, Wolfram Alpha integration, Python interpretation, and semantic search. It offers a plugin system for adding extra functions and supports different models and endpoints. The tool allows users to interact via function calling and provides features like image input, image generation, and text file search. The application's configuration is stored in a `config.json` file with options for backend selection, compatibility mode, IP address settings, API key, and enabled features.

0chain

Züs is a high-performance cloud on a fast blockchain offering privacy and configurable uptime. It uses erasure code to distribute data between data and parity servers, allowing flexibility for IT managers to design for security and uptime. Users can easily share encrypted data with business partners through a proxy key sharing protocol. The ecosystem includes apps like Blimp for cloud migration, Vult for personal cloud storage, and Chalk for NFT artists. Other apps include Bolt for secure wallet and staking, Atlus for blockchain explorer, and Chimney for network participation. The QoS protocol challenges providers based on response time, while the privacy protocol enables secure data sharing. Züs supports hybrid and multi-cloud architectures, allowing users to improve regulatory compliance and security requirements.

For similar tasks

ai

This repository contains various packages and demo apps related to consuming Cloudflare's AI offerings on the client-side. It is a monorepo powered by Nx and Changesets. The repository provides custom providers for enabling Workers AI's models and AI Gateway Provider for Vercel AI SDK. It also includes guidelines for local development, testing, linting, creating new demo apps, contributing, and the release process.

companion-vscode

Quack Companion is a VSCode extension that provides smart linting, code chat, and coding guideline curation for developers. It aims to enhance the coding experience by offering a new tab with features like curating software insights with the team, code chat similar to ChatGPT, smart linting, and upcoming code completion. The extension focuses on creating a smooth contribution experience for developers by turning contribution guidelines into a live pair coding experience, helping developers find starter contribution opportunities, and ensuring alignment between contribution goals and project priorities. Quack collects limited telemetry data to improve its services and products for developers, with options for anonymization and disabling telemetry available to users.

langchain-google

LangChain Google is a repository containing three packages with Google integrations: langchain-google-genai for Google Generative AI models, langchain-google-vertexai for Google Cloud Generative AI on Vertex AI, and langchain-google-community for other Google product integrations. The repository is organized as a monorepo with a structure including libs for different packages, and files like pyproject.toml and Makefile for building, linting, and testing. It provides guidelines for contributing, local development dependencies installation, formatting, linting, working with optional dependencies, and testing with unit and integration tests. The focus is on maintaining unit test coverage and avoiding excessive integration tests, with annotations for GCP infrastructure-dependent tests.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.