basic-memory

AI conversations that actually remember. Never re-explain your project to your AI again. Join our Discord: https://discord.gg/tyvKNccgqN

Stars: 2506

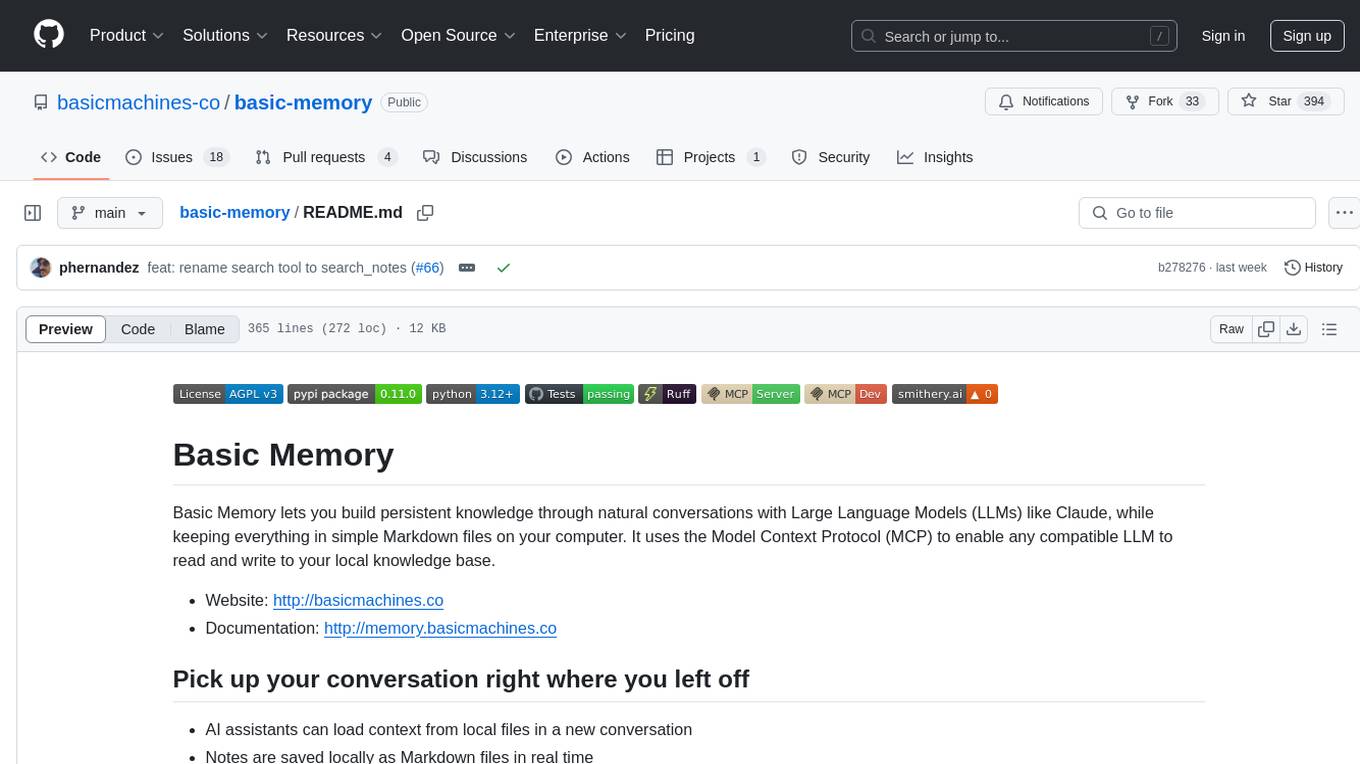

Basic Memory is a tool that enables users to build persistent knowledge through natural conversations with Large Language Models (LLMs) like Claude. It uses the Model Context Protocol (MCP) to allow compatible LLMs to read and write to a local knowledge base stored in simple Markdown files on the user's computer. The tool facilitates creating structured notes during conversations, maintaining a semantic knowledge graph, and keeping all data local and under user control. Basic Memory aims to address the limitations of ephemeral LLM interactions by providing a structured, bi-directional, and locally stored knowledge management solution.

README:

- Cross-device and multi-platform support is here. Your knowledge graph now works on desktop, web, and mobile.

- Cloud is optional. The local-first open-source workflow continues as always.

- OSS discount: use code {{OSS_DISCOUNT_CODE}} for 20% off for 3 months.

Basic Memory Cloud is available with a 7-day free trial.

Basic Memory lets you build persistent knowledge through natural conversations with Large Language Models (LLMs) like Claude, while keeping everything in simple Markdown files on your computer. It uses the Model Context Protocol (MCP) to enable any compatible LLM to read and write to your local knowledge base.

- Website: https://basicmemory.com

- Documentation: https://docs.basicmemory.com

- AI assistants can load context from local files in a new conversation

- Notes are saved locally as Markdown files in real time

- No project knowledge or special prompting required

https://github.com/user-attachments/assets/a55d8238-8dd0-454a-be4c-8860dbbd0ddc

```bash
# Install with uv (recommended)
uv tool install basic-memory

# Configure Claude Desktop (edit ~/Library/Application Support/Claude/claude_desktop_config.json)
# Add this to your config:
{
  "mcpServers": {
    "basic-memory": {
      "command": "uvx",
      "args": [
        "basic-memory",
        "mcp"
      ]
    }
  }
}
# Now in Claude Desktop, you can:
# - Write notes with "Create a note about coffee brewing methods"
# - Read notes with "What do I know about pour over coffee?"
# - Search with "Find information about Ethiopian beans"
```

You can view shared context via files in ~/basic-memory (default directory location).
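You can also script the merge instead of editing `claude_desktop_config.json` by hand. The Python below is a minimal sketch and is not part of Basic Memory:

- The helper names `add_basic_memory_server` and `update_config_file` are hypothetical.
- It assumes the config is plain JSON with a top-level `mcpServers` object, as shown above.
- Other servers and settings already in the file are preserved.
- The optional `project` argument appends the `--project` arguments used when pinning a specific project.

```python
import json
from pathlib import Path
from typing import Optional


def add_basic_memory_server(config: dict, project: Optional[str] = None) -> dict:
    """Return a copy of `config` with a `basic-memory` entry under `mcpServers`.

    Hypothetical helper: other servers and top-level keys are left untouched.
    """
    args = ["basic-memory", "mcp"]
    if project is not None:
        # Pin the server to one project, as in the `--project` config variant
        args += ["--project", project]
    merged = json.loads(json.dumps(config))  # deep copy of plain JSON data
    merged.setdefault("mcpServers", {})["basic-memory"] = {
        "command": "uvx",
        "args": args,
    }
    return merged


def update_config_file(path: Path, project: Optional[str] = None) -> None:
    """Merge the entry into the JSON file at `path`, creating the file if missing."""
    config = json.loads(path.read_text()) if path.exists() else {}
    updated = add_basic_memory_server(config, project)
    path.write_text(json.dumps(updated, indent=2) + "\n")
```

On macOS you would call `update_config_file(Path.home() / "Library/Application Support/Claude/claude_desktop_config.json")`. Back up the file first, then restart Claude Desktop so it reloads its MCP configuration.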

Most LLM interactions are ephemeral - you ask a question, get an answer, and everything is forgotten. Each conversation starts fresh, without the context or knowledge from previous ones. Current workarounds have limitations:

- Chat histories capture conversations but aren't structured knowledge

- RAG systems can query documents but don't let LLMs write back

- Vector databases require complex setups and often live in the cloud

- Knowledge graphs typically need specialized tools to maintain

Basic Memory addresses these problems with a simple approach: structured Markdown files that both humans and LLMs can read and write to. The key advantages:

- Local-first: All knowledge stays in files you control

- Bi-directional: Both you and the LLM read and write to the same files

- Structured yet simple: Uses familiar Markdown with semantic patterns

- Traversable knowledge graph: LLMs can follow links between topics

- Standard formats: Works with existing editors like Obsidian

- Lightweight infrastructure: Just local files indexed in a local SQLite database

With Basic Memory, you can:

- Have conversations that build on previous knowledge

- Create structured notes during natural conversations

- Have conversations with LLMs that remember what you've discussed before

- Navigate your knowledge graph semantically

- Keep everything local and under your control

- Use familiar tools like Obsidian to view and edit notes

- Build a personal knowledge base that grows over time

- Sync your knowledge to the cloud with bidirectional synchronization

- Authenticate and manage cloud projects with subscription validation

- Mount cloud storage for direct file access

Let's say you're exploring coffee brewing methods and want to capture your knowledge. Here's how it works:

- Start by chatting normally:

```
I've been experimenting with different coffee brewing methods. Key things I've learned:

- Pour over gives more clarity in flavor than French press
- Water temperature is critical - around 205°F seems best
- Freshly ground beans make a huge difference
```

... continue conversation.

- Ask the LLM to help structure this knowledge:

"Let's write a note about coffee brewing methods."

- The LLM creates a new Markdown file on your system (which you can see instantly in Obsidian or your editor):

```markdown
---
title: Coffee Brewing Methods
permalink: coffee-brewing-methods
tags:
- coffee
- brewing
---

# Coffee Brewing Methods

## Observations
- [method] Pour over provides more clarity and highlights subtle flavors
- [technique] Water temperature at 205°F (96°C) extracts optimal compounds
- [principle] Freshly ground beans preserve aromatics and flavor

## Relations
- relates_to [[Coffee Bean Origins]]
- requires [[Proper Grinding Technique]]
- affects [[Flavor Extraction]]
```

The note embeds semantic content and links to other topics via simple Markdown formatting.

- You see this file on your computer in real time in the current project directory (default `~/basic-memory`).

- Realtime sync can be enabled by running `basic-memory sync --watch`.

- In a chat with the LLM, you can reference a topic:

Look at `coffee-brewing-methods` for context about pour over coffee

The LLM can now build rich context from the knowledge graph. For example:

Following relation 'relates_to [[Coffee Bean Origins]]':

- Found information about Ethiopian Yirgacheffe

- Notes on Colombian beans' nutty profile

- Altitude effects on bean characteristics

Following relation 'requires [[Proper Grinding Technique]]':

- Burr vs. blade grinder comparisons

- Grind size recommendations for different methods

- Impact of consistent particle size on extraction

Each related document can lead to more context, building a rich semantic understanding of your knowledge base.
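The traversal described above is essentially a breadth-first walk over relations with a hop limit. The sketch below illustrates the idea on an in-memory dictionary. It is not Basic Memory's implementation: the `walk_relations` name, the graph shape and the `depth` semantics are assumptions made for illustration.

```python
from collections import deque
from typing import Dict, List, Tuple

# Hypothetical graph shape: note title -> [(relation_type, target title), ...]
Graph = Dict[str, List[Tuple[str, str]]]


def walk_relations(graph: Graph, start: str, depth: int = 1) -> List[Tuple[str, str, str, int]]:
    """Breadth-first walk of relations, at most `depth` hops from `start`.

    Returns (source, relation_type, target, hop) tuples in visiting order.
    """
    seen = {start}
    queue = deque([(start, 0)])
    edges: List[Tuple[str, str, str, int]] = []
    while queue:
        node, hop = queue.popleft()
        if hop >= depth:
            continue  # hop limit reached; don't expand this note further
        for relation_type, target in graph.get(node, []):
            edges.append((node, relation_type, target, hop + 1))
            if target not in seen:  # skip notes already queued (handles cycles)
                seen.add(target)
                queue.append((target, hop + 1))
    return edges
```

How `depth` changes the result:

- `depth=1` returns only the starting note's direct relations, such as `relates_to [[Coffee Bean Origins]]`.
- A higher `depth` also follows relations of those related notes.
- The `seen` set stops two notes that link to each other from looping forever.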

This creates a two-way flow where:

- Humans write and edit Markdown files

- LLMs read and write through the MCP protocol

- Sync keeps everything consistent

- All knowledge stays in local files.

Under the hood, Basic Memory:

- Stores everything in Markdown files

- Uses a SQLite database for searching and indexing

- Extracts semantic meaning from simple Markdown patterns

  - Files become `Entity` objects

  - Each `Entity` can have `Observations`, or facts associated with it

  - `Relations` connect entities together to form the knowledge graph

- Maintains the local knowledge graph derived from the files

- Provides bidirectional synchronization between files and the knowledge graph

- Implements the Model Context Protocol (MCP) for AI integration

- Exposes tools that let AI assistants traverse and manipulate the knowledge graph

- Uses memory:// URLs to reference entities across tools and conversations
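The entity, observation and relation concepts map naturally onto a few small record types. The dataclasses below are a hypothetical sketch for orientation only. The field names are illustrative, not Basic Memory's actual models. The `memory_url` property assumes a `memory://` URL is formed from an entity's permalink.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Observation:
    category: str                     # e.g. "method", from "[method]"
    content: str
    tags: List[str] = field(default_factory=list)
    context: Optional[str] = None     # trailing "(...)" text, if any


@dataclass
class Relation:
    relation_type: str                # e.g. "relates_to"
    target: str                       # title inside [[...]]
    context: Optional[str] = None


@dataclass
class Entity:
    title: str
    permalink: str
    observations: List[Observation] = field(default_factory=list)
    relations: List[Relation] = field(default_factory=list)

    @property
    def memory_url(self) -> str:
        # Assumption: a memory:// URL addresses an entity by its permalink
        return f"memory://{self.permalink}"
```

For the coffee note, `Entity("Coffee Brewing Methods", "coffee-brewing-methods")` would hold three observations and three relations. Its `memory_url` would be `memory://coffee-brewing-methods`.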

The file format is just Markdown with some simple markup:

Each Markdown file starts with frontmatter:

```markdown
title: <Entity title>
type: <The type of Entity> (e.g. note)
permalink: <a uri slug>

- <optional metadata> (such as tags)
```

Observations are facts about a topic. They can be added by creating a Markdown list with a special format that can reference a category, tags using a "#" character, and an optional context.

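The observation syntax (`- [category] content #tag (optional context)`) is regular enough to parse with a regular expression. This is a minimal sketch, not Basic Memory's parser. Its assumptions:

- The `parse_observation` helper and its regexes are hypothetical.
- Tags are a `#` followed by word characters or hyphens.
- Only a single trailing parenthesized group is treated as context.

```python
import re
from typing import Optional

# Hypothetical pattern for "- [category] content #tag (optional context)"
OBSERVATION_RE = re.compile(
    r"^\s*-\s+\[(?P<category>[^\]]+)\]\s+"   # "- [category] "
    r"(?P<content>.*?)"                      # free text, matched lazily
    r"(?:\s+\((?P<context>[^()]*)\))?\s*$"   # optional trailing "(context)"
)
TAG_RE = re.compile(r"#([\w-]+)")


def parse_observation(line: str) -> Optional[dict]:
    """Split one observation line into category, content, tags, and context."""
    match = OBSERVATION_RE.match(line)
    if match is None:
        return None  # not an observation (e.g. a relation or plain bullet)
    raw = match.group("content")
    tags = TAG_RE.findall(raw)
    # Drop the #tags from the content text and collapse leftover whitespace
    content = " ".join(TAG_RE.sub("", raw).split())
    return {
        "category": match.group("category").strip(),
        "content": content,
        "tags": tags,
        "context": match.group("context"),
    }
```

For example, `- [tip] Grind size should be medium-fine for pour over #brewing` yields category `tip`, tag `brewing` and no context. A Markdown task item like `- [ ] todo` would also match this simplified pattern, so a real parser needs extra rules.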
Observation Markdown format:

```markdown
- [category] content #tag (optional context)
```

Examples of observations:

```markdown
- [method] Pour over extracts more floral notes than French press
- [tip] Grind size should be medium-fine for pour over #brewing
- [preference] Ethiopian beans have bright, fruity flavors (especially from Yirgacheffe)
- [fact] Lighter roasts generally contain more caffeine than dark roasts
- [experiment] Tried 1:15 coffee-to-water ratio with good results
- [resource] James Hoffman's V60 technique on YouTube is excellent
- [question] Does water temperature affect extraction of different compounds differently?
- [note] My favorite local shop uses a 30-second bloom time
```

Relations are links to other topics. They define how entities connect in the knowledge graph.
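Relation lines follow the pattern `- relation_type [[WikiLink]] (optional context)` and can be extracted the same way. This too is a hypothetical sketch: `parse_relation` and `relations_in` are illustrative names. It assumes relation types are single words made of letters, digits and underscores, as in the examples.

```python
import re
from typing import List, NamedTuple, Optional


class ParsedRelation(NamedTuple):
    relation_type: str
    target: str                # text inside [[...]]
    context: Optional[str]     # text inside the trailing (...), if any


# Hypothetical pattern for "- relation_type [[WikiLink]] (optional context)"
RELATION_RE = re.compile(
    r"^\s*-\s+(?P<type>\w+)\s+"             # "- relation_type "
    r"\[\[(?P<target>[^\]]+)\]\]"           # "[[WikiLink]]"
    r"(?:\s+\((?P<context>[^()]*)\))?\s*$"  # optional "(context)"
)


def parse_relation(line: str) -> Optional[ParsedRelation]:
    match = RELATION_RE.match(line)
    if match is None:
        return None  # observations and other bullets don't match
    return ParsedRelation(match["type"], match["target"], match["context"])


def relations_in(markdown: str) -> List[ParsedRelation]:
    """Collect every relation line in a note body."""
    return [r for r in map(parse_relation, markdown.splitlines()) if r is not None]
```

Because an observation line starts with `[` rather than a word, the two sketches do not overlap.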

Markdown format:

```markdown
- relation_type [[WikiLink]] (optional context)
```

Examples of relations:

```markdown
- pairs_well_with [[Chocolate Desserts]]
- grown_in [[Ethiopia]]
- contrasts_with [[Tea Brewing Methods]]
- requires [[Burr Grinder]]
- improves_with [[Fresh Beans]]
- relates_to [[Morning Routine]]
- inspired_by [[Japanese Coffee Culture]]
- documented_in [[Coffee Journal]]
```

Add the following JSON block to your User Settings (JSON) file in VS Code. You can do this by pressing `Ctrl + Shift + P` and typing `Preferences: Open User Settings (JSON)`.

```json
{
  "mcp": {
    "servers": {
      "basic-memory": {
        "command": "uvx",
        "args": ["basic-memory", "mcp"]
      }
    }
  }
}
```

Optionally, you can add it to a file called `.vscode/mcp.json` in your workspace. This lets you share the configuration with others.

```json
{
  "servers": {
    "basic-memory": {
      "command": "uvx",
      "args": ["basic-memory", "mcp"]
    }
  }
}
```

You can use Basic Memory with VS Code to easily retrieve and store information while coding.
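For a workspace you share with others, a script can generate the `.vscode/mcp.json` file above. This minimal sketch uses only the Python standard library. `write_workspace_mcp_config` is a hypothetical helper. It assumes the top-level `servers` object shown above and keeps any other servers already in the file.

```python
import json
from pathlib import Path


def write_workspace_mcp_config(workspace: Path) -> Path:
    """Add a basic-memory entry to <workspace>/.vscode/mcp.json and return its path."""
    target = workspace / ".vscode" / "mcp.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    config = json.loads(target.read_text()) if target.exists() else {}
    # Workspace files use a top-level "servers" key (no "mcp" wrapper)
    config.setdefault("servers", {})["basic-memory"] = {
        "command": "uvx",
        "args": ["basic-memory", "mcp"],
    }
    target.write_text(json.dumps(config, indent=2) + "\n")
    return target
```

Commit the resulting file so collaborators pick up the same server entry.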

Basic Memory is built using the MCP (Model Context Protocol) and works with the Claude desktop app (https://claude.ai/):

- Configure Claude Desktop to use Basic Memory:

Edit your MCP configuration file (usually located at `~/Library/Application Support/Claude/claude_desktop_config.json` on macOS):

```json
{
  "mcpServers": {
    "basic-memory": {
      "command": "uvx",
      "args": [
        "basic-memory",
        "mcp"
      ]
    }
  }
}
```

If you want to use a specific project (see "Managing multiple Projects" in the documentation), update your Claude Desktop config:

```json
{
  "mcpServers": {
    "basic-memory": {
      "command": "uvx",
      "args": [
        "basic-memory",
        "mcp",
        "--project",
        "your-project-name"
      ]
    }
  }
}
```

- Sync your knowledge:

```bash
# One-time sync of local knowledge updates
basic-memory sync

# Run realtime sync process (recommended)
basic-memory sync --watch
```

- Cloud features (optional, requires subscription):

```bash
# Authenticate with cloud (global cloud mode via OAuth)
basic-memory cloud login

# Bidirectional sync with cloud
basic-memory cloud sync

# Verify cloud integrity
basic-memory cloud check

# Mount cloud storage
basic-memory cloud mount
```

Per-Project Cloud Routing (API key based):

Individual projects can be routed through the cloud while others stay local. This uses an API key instead of OAuth:

```bash
# Save an API key (create one in the web app or via CLI)
basic-memory cloud set-key bmc_abc123...

# Or create one via CLI (requires OAuth login first)
basic-memory cloud create-key "my-laptop"

# Set a project to route through cloud
basic-memory project set-cloud research

# Revert a project to local mode
basic-memory project set-local research

# List projects with mode column (local/cloud)
basic-memory project list
```

Routing Flags (for users with global cloud mode):

When global cloud mode is enabled, CLI commands communicate with the cloud API by default. Use routing flags to override this:

```bash
# Force local routing (useful for local MCP server while cloud mode is enabled)
basic-memory status --local
basic-memory project list --local

# Force cloud routing (when cloud mode is disabled but you want cloud access)
basic-memory status --cloud
basic-memory project info my-project --cloud
```

The local MCP server (`basic-memory mcp`) automatically uses local routing, so you can use both local Claude Desktop and cloud-based clients simultaneously.

- In Claude Desktop, the LLM can now use these tools:

Content Management:

write_note(title, content, folder, tags) - Create or update notes

read_note(identifier, page, page_size) - Read notes by title or permalink

read_content(path) - Read raw file content (text, images, binaries)

view_note(identifier) - View notes as formatted artifacts

edit_note(identifier, operation, content) - Edit notes incrementally

move_note(identifier, destination_path) - Move notes with database consistency

delete_note(identifier) - Delete notes from knowledge base

Knowledge Graph Navigation:

build_context(url, depth, timeframe) - Navigate knowledge graph via memory:// URLs

recent_activity(type, depth, timeframe) - Find recently updated information

list_directory(dir_name, depth) - Browse directory contents with filtering

Search & Discovery:

search(query, page, page_size) - Search across your knowledge base

search_notes(query, page, page_size, search_type, types, entity_types, after_date, metadata_filters, tags, status, project) - Search with filters

search_by_metadata(filters, limit, offset, project) - Structured frontmatter search

Project Management:

list_memory_projects() - List all available projects

create_memory_project(project_name, project_path) - Create new projects

get_current_project() - Show current project stats

sync_status() - Check synchronization status

Cloud Discovery (opt-in):

cloud_info() - Show optional Cloud overview and setup guidance

release_notes() - Show latest release notes

Visualization:

canvas(nodes, edges, title, folder) - Generate knowledge visualizations

- Example prompts to try:

"Create a note about our project architecture decisions"

"Find information about JWT authentication in my notes"

"Create a canvas visualization of my project components"

"Read my notes on the authentication system"

"What have I been working on in the past week?"

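Tools such as `build_context` take `memory://` URLs. Here is a hypothetical sketch of how such a URL might be resolved against a set of note permalinks. The `resolve_memory_url` helper is illustrative. Glob-style wildcard support (via Python's `fnmatch`) is an assumption, not documented Basic Memory behavior.

```python
from fnmatch import fnmatchcase
from typing import Iterable, List

PREFIX = "memory://"


def resolve_memory_url(url: str, permalinks: Iterable[str]) -> List[str]:
    """Map a memory:// URL to the permalinks it refers to (hypothetical rules)."""
    if not url.startswith(PREFIX):
        raise ValueError(f"not a memory:// URL: {url!r}")
    path = url[len(PREFIX):].strip("/")
    links = list(permalinks)
    if any(ch in path for ch in "*?["):
        # Glob pattern; note that fnmatch's "*" also matches across "/"
        return sorted(p for p in links if fnmatchcase(p, path))
    # Plain URL: exact permalink match or nothing
    return [path] if path in links else []
```

With permalinks such as `coffee-brewing-methods` and `coffee/grinding`, the two URL forms behave differently:

- `memory://coffee-brewing-methods` resolves to that single note.
- `memory://coffee/*` selects every permalink under the `coffee/` prefix.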
See the Documentation for more info, including:

- Complete User Guide

- CLI tools

- Cloud CLI and Sync

- Managing multiple Projects

- Importing data from OpenAI/Claude Projects

Basic Memory uses Loguru for logging. The logging behavior varies by entry point:

| Entry Point | Default Behavior | Use Case |

|---|---|---|

| CLI commands | File only | Prevents log output from interfering with command output |

| MCP server | File only | Stdout would corrupt the JSON-RPC protocol |

| API server | File (local) or stdout (cloud) | Docker/cloud deployments use stdout |

Log file location: ~/.basic-memory/basic-memory.log (10MB rotation, 10 days retention)

| Variable | Default | Description |
|---|---|---|
| `BASIC_MEMORY_LOG_LEVEL` | `INFO` | Log level: DEBUG, INFO, WARNING, ERROR |
| `BASIC_MEMORY_CLOUD_MODE` | `false` | When true, API logs to stdout with structured context |
| `BASIC_MEMORY_FORCE_LOCAL` | `false` | When true, forces local API routing (ignores cloud mode) |
| `BASIC_MEMORY_ENV` | `dev` | Set to `test` for test mode (stderr only) |
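The defaults in the two tables above can be summarized as a small decision function. This is a hypothetical sketch of the documented behavior, not Basic Memory's logging setup. `choose_log_sink` and `log_level` are illustrative names. Treating `BASIC_MEMORY_ENV=test` as taking precedence over cloud mode is an assumption.

```python
import os
from typing import Mapping, Optional


def _env(env: Optional[Mapping[str, str]]) -> Mapping[str, str]:
    return os.environ if env is None else env


def choose_log_sink(entry_point: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Return "file", "stdout", or "stderr" for entry_point "cli", "mcp", or "api"."""
    env = _env(env)
    if env.get("BASIC_MEMORY_ENV", "dev") == "test":
        return "stderr"  # test mode: stderr only (assumed to win over cloud mode)
    cloud_mode = env.get("BASIC_MEMORY_CLOUD_MODE", "false").strip().lower() == "true"
    if entry_point == "api" and cloud_mode:
        return "stdout"  # Docker/cloud deployments log to stdout
    # CLI and MCP server log to file; stdout would corrupt MCP's JSON-RPC stream
    return "file"


def log_level(env: Optional[Mapping[str, str]] = None) -> str:
    return _env(env).get("BASIC_MEMORY_LOG_LEVEL", "INFO").upper()
```

In a Loguru-based setup, a `"file"` result would typically correspond to something like `logger.add(path, rotation="10 MB", retention="10 days", level=log_level())`. That call matches the rotation and retention listed above.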

```bash
# Enable debug logging
BASIC_MEMORY_LOG_LEVEL=DEBUG basic-memory sync

# View logs
tail -f ~/.basic-memory/basic-memory.log

# Cloud/Docker mode (stdout logging with structured context)
BASIC_MEMORY_CLOUD_MODE=true uvicorn basic_memory.api.app:app
```

Basic Memory supports dual database backends (SQLite and Postgres). By default, tests run against SQLite. Set `BASIC_MEMORY_TEST_POSTGRES=1` to run against Postgres (uses testcontainers; Docker required).

Quick Start:

```bash
# Run all tests against SQLite (default, fast)
just test-sqlite

# Run all tests against Postgres (uses testcontainers)
just test-postgres

# Run both SQLite and Postgres tests
just test
```

Available Test Commands:

- `just test` - Run all tests against both SQLite and Postgres

- `just test-sqlite` - Run all tests against SQLite (fast, no Docker needed)

- `just test-postgres` - Run all tests against Postgres (uses testcontainers)

- `just test-unit-sqlite` - Run unit tests against SQLite

- `just test-unit-postgres` - Run unit tests against Postgres

- `just test-int-sqlite` - Run integration tests against SQLite

- `just test-int-postgres` - Run integration tests against Postgres

- `just test-windows` - Run Windows-specific tests (auto-skips on other platforms)

- `just test-benchmark` - Run performance benchmark tests

- `just testmon` - Run tests impacted by recent changes (pytest-testmon)

- `just test-smoke` - Run fast MCP end-to-end smoke test

- `just fast-check` - Run fix/format/typecheck + impacted tests + smoke test

- `just doctor` - Run local file <-> DB consistency checks with temp config

Postgres Testing:

Postgres tests use testcontainers which automatically spins up a Postgres instance in Docker. No manual database setup required - just have Docker running.

Testmon Note: When no files have changed, just testmon may collect 0 tests. That's expected and means no impacted tests were detected.

Test Markers:

Tests use pytest markers for selective execution:

- `windows` - Windows-specific database optimizations

- `benchmark` - Performance tests (excluded from default runs)

- `smoke` - Fast MCP end-to-end smoke tests

Other Development Commands:

```bash
just install          # Install with dev dependencies
just lint             # Run linting checks
just typecheck        # Run type checking
just format           # Format code with ruff
just fast-check       # Fast local loop (fix/format/typecheck + testmon + smoke)
just doctor           # Local consistency check (temp config)
just check            # Run all quality checks
just migration "msg"  # Create database migration
```

Local Consistency Check:

```bash
basic-memory doctor  # Verifies file <-> database sync in a temp project
```

See the justfile for the complete list of development commands.

AGPL-3.0

Contributions are welcome. See the Contributing guide for info about setting up the project locally and submitting PRs.


Alternative AI tools for basic-memory

Similar Open Source Tools


Memori

Memori is a memory fabric designed for enterprise AI that seamlessly integrates into existing software and infrastructure. It is agnostic to LLM, datastore, and framework, providing support for major foundational models and databases. With features like vectorized memories, in-memory semantic search, and a knowledge graph, Memori simplifies the process of attributing LLM interactions and managing sessions. It offers Advanced Augmentation for enhancing memories at different levels and supports various platforms, frameworks, database integrations, and datastores. Memori is designed to reduce development overhead and provide efficient memory management for AI applications.

bedrock-claude-chat

This repository is a sample chatbot using the Anthropic company's LLM Claude, one of the foundational models provided by Amazon Bedrock for generative AI. It allows users to have basic conversations with the chatbot, personalize it with their own instructions and external knowledge, and analyze usage for each user/bot on the administrator dashboard. The chatbot supports various languages, including English, Japanese, Korean, Chinese, French, German, and Spanish. Deployment is straightforward and can be done via the command line or by using AWS CDK. The architecture is built on AWS managed services, eliminating the need for infrastructure management and ensuring scalability, reliability, and security.

refact-lsp

Refact Agent is a small executable written in Rust as part of the Refact Agent project. It lives inside your IDE to keep AST and VecDB indexes up to date, supporting connection graphs between definitions and usages in popular programming languages. It functions as an LSP server, offering code completion, chat functionality, and integration with various tools like browsers, databases, and debuggers. Users can interact with it through a Text UI in the command line.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

llama_index

LlamaIndex is a data framework for building LLM applications. It provides tools for ingesting, structuring, and querying data, as well as integrating with LLMs and other tools. LlamaIndex is designed to be easy to use for both beginner and advanced users, and it provides a comprehensive set of features for building LLM applications.

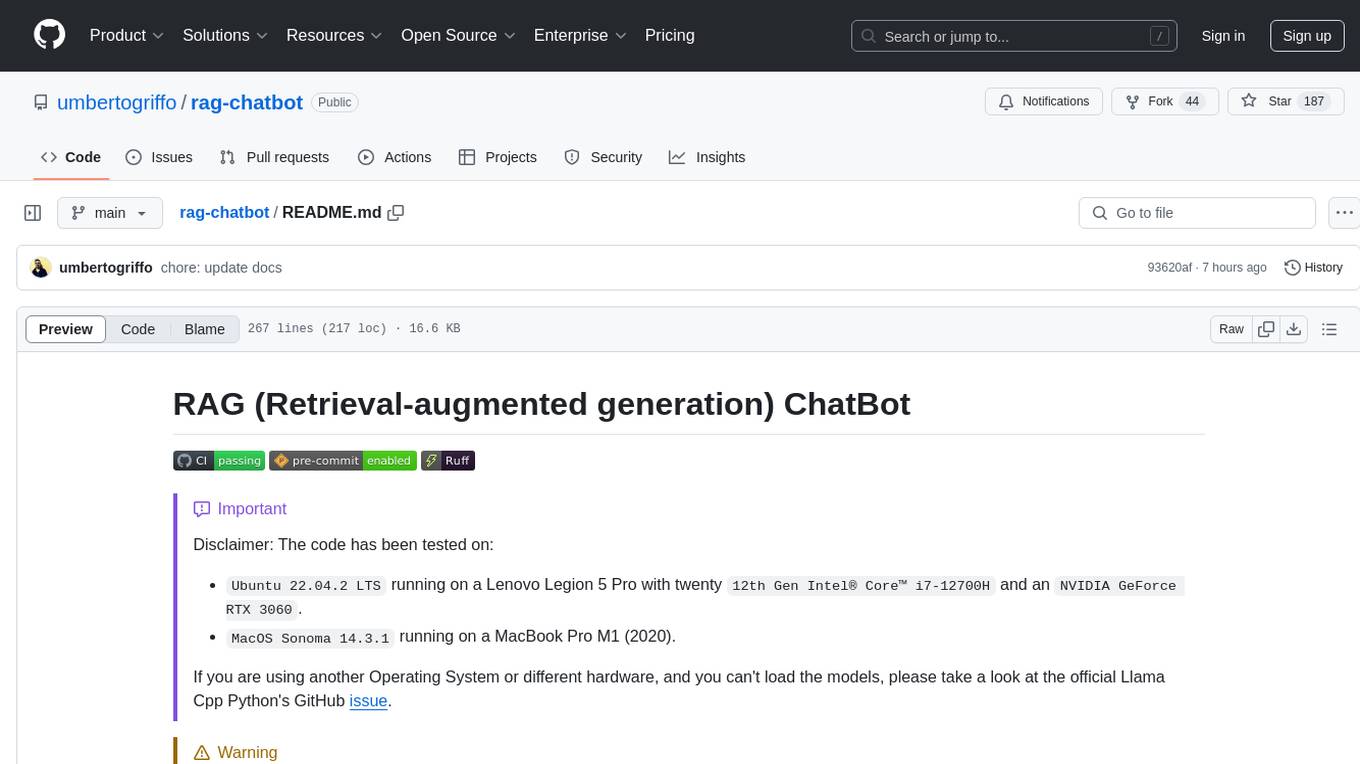

rag-chatbot

The RAG ChatBot project combines Lama.cpp, Chroma, and Streamlit to build a Conversation-aware Chatbot and a Retrieval-augmented generation (RAG) ChatBot. The RAG Chatbot works by taking a collection of Markdown files as input and provides answers based on the context provided by those files. It utilizes a Memory Builder component to load Markdown pages, divide them into sections, calculate embeddings, and save them in an embedding database. The chatbot retrieves relevant sections from the database, rewrites questions for optimal retrieval, and generates answers using a local language model. It also remembers previous interactions for more accurate responses. Various strategies are implemented to deal with context overflows, including creating and refining context, hierarchical summarization, and async hierarchical summarization.

uLoopMCP

uLoopMCP is a Unity integration tool designed to let AI drive your Unity project forward with minimal human intervention. It provides a 'self-hosted development loop' where an AI can compile, run tests, inspect logs, and fix issues using tools like compile, run-tests, get-logs, and clear-console. It also allows AI to operate the Unity Editor itself—creating objects, calling menu items, inspecting scenes, and refining UI layouts from screenshots via tools like execute-dynamic-code, execute-menu-item, and capture-window. The tool enables AI-driven development loops to run autonomously inside existing Unity projects.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

bigcodebench

BigCodeBench is an easy-to-use benchmark for code generation with practical and challenging programming tasks. It aims to evaluate the true programming capabilities of large language models (LLMs) in a more realistic setting. The benchmark is designed for HumanEval-like function-level code generation tasks, but with much more complex instructions and diverse function calls. BigCodeBench focuses on the evaluation of LLM4Code with diverse function calls and complex instructions, providing precise evaluation & ranking and pre-generated samples to accelerate code intelligence research. It inherits the design of the EvalPlus framework but differs in terms of execution environment and test evaluation.

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

iloom-cli

iloom is a tool designed to streamline AI-assisted development by focusing on maintaining alignment between human developers and AI agents. It treats context as a first-class concern, persisting AI reasoning in issue comments rather than temporary chats. The tool allows users to collaborate with AI agents in an isolated environment, switch between complex features without losing context, document AI decisions publicly, and capture key insights and lessons learned from AI sessions. iloom is not just a tool for managing git worktrees, but a control plane for maintaining alignment between users and their AI assistants.

For similar tasks

NaLLM

The NaLLM project repository explores the synergies between Neo4j and Large Language Models (LLMs) through three primary use cases: Natural Language Interface to a Knowledge Graph, Creating a Knowledge Graph from Unstructured Data, and Generating a Report using static and LLM data. The repository contains backend and frontend code organized for easy navigation. It includes blog posts, a demo database, instructions for running demos, and guidelines for contributing. The project aims to showcase the potential of Neo4j and LLMs in various applications.

kweaver

KWeaver is an open-source cognitive intelligence development framework that provides data scientists, application developers, and domain experts with the ability for rapid development, comprehensive openness, and high-performance knowledge network generation and cognitive intelligence large model framework. It offers features such as automated and visual knowledge graph construction, visualization and analysis of knowledge graph data, knowledge graph integration, knowledge graph resource management, large model prompt engineering and debugging, and visual configuration for large model access.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAPI models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely-related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

trustgraph

TrustGraph is a tool that deploys private GraphRAG pipelines to build a RDF style knowledge graph from data, enabling accurate and secure `RAG` requests compatible with cloud LLMs and open-source SLMs. It showcases the reliability and efficiencies of GraphRAG algorithms, capturing contextual language flags missed in conventional RAG approaches. The tool offers features like PDF decoding, text chunking, inference of various LMs, RDF-aligned Knowledge Graph extraction, and more. TrustGraph is designed to be modular, supporting multiple Language Models and environments, with a plug'n'play architecture for easy customization.


helix-db

HelixDB is a database designed specifically for AI applications, providing a single platform to manage all components needed for AI applications. It supports graph + vector data model and also KV, documents, and relational data. Key features include built-in tools for MCP, embeddings, knowledge graphs, RAG, security, logical isolation, and ultra-low latency. Users can interact with HelixDB using the Helix CLI tool and SDKs in TypeScript and Python. The roadmap includes features like organizational auth, server code improvements, 3rd party integrations, educational content, and binary quantisation for better performance. Long term projects involve developing in-house tools for knowledge graph ingestion, graph-vector storage engine, and network protocol & serdes libraries.

strwythura

Strwythura is a library and tutorial focused on constructing a knowledge graph from unstructured data sources using state-of-the-art models for named entity recognition. It implements an enhanced GraphRAG approach and curates semantics for optimizing AI application outcomes within a specific domain. The tutorial emphasizes the use of sophisticated NLP pipelines based on spaCy, GLiNER, TextRank, and related libraries to provide better/faster/cheaper results with more control over the intentional arrangement of the knowledge graph. It leverages neurosymbolic AI methods and combines practices from natural language processing, graph data science, entity resolution, ontology pipeline, context engineering, and human-in-the-loop processes.

ApeRAG

ApeRAG is a production-ready platform for Retrieval-Augmented Generation (RAG) that combines Graph RAG, vector search, and full-text search with advanced AI agents. It is designed for building knowledge graphs, context engineering, and deploying intelligent AI agents that search and reason autonomously across knowledge bases. Features include advanced index types, intelligent AI agents with MCP support and integration, enhanced Graph RAG with entity normalization, multimodal processing, a hybrid retrieval engine, MinerU integration for document parsing, production-grade deployment on Kubernetes, enterprise management features, and developer-friendly tools for customization and contribution.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily-poetry-image publishes a classical Chinese poem each day alongside an AI-generated image created with Bing's DALL-E 3. A GitHub Action triggers the process automatically, the poetry is provided by the Today's Poem API, and the website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and on Android phones) that lets you interact with text generation AIs and chat or roleplay with characters that you or the community create. SillyTavern is a fork of TavernAI 1.2.8 that has been under more active development and has added many major features. At this point, the two can be thought of as completely independent programs.

Twitter-Insight-LLM

This project lets you fetch your liked tweets from Twitter (using Selenium), save them to JSON and Excel files, and perform initial data analysis and image captioning. It is one of the first steps in a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent customer service chatbot built on large language models. It supports platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional accounts, Xiaohongshu, and Zhihu. You can choose GPT-3.5, GPT-4.0, or Lazy Treasure Box as the model (more options will be supported in the future). It can process text, voice, and images, can access external resources such as the operating system and the Internet through plug-ins, and supports enterprise AI applications customized with their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that transcribes speech into text and performs various language processing functions on that text using AI / LLMs (Large Language Models). It is privacy-first, with all data staying on your machine, and it requires no GPU, incurs no cloud costs, needs no network connection, and has no downtime.