code-cli

Autohand Code CLI - Ultra fast coding agent that runs in your terminal

Stars: 64

Autohand Code CLI is an autonomous coding agent in CLI form that uses the ReAct pattern to understand, plan, and execute code changes. It is designed for a seamless coding experience without context switching or copy-pasting. The tool is fast, intuitive, and extensible with modular skills. It can be used to automate coding tasks, enforce code quality, and speed up development. Autohand can be integrated into team workflows and CI/CD pipelines to enhance productivity and efficiency.

README:

An agentic coding CLI that reads, reasons, and writes code across your entire project. No context switching. No copy-paste. No "You're absolutely right!".

Autohand Code CLI is an autonomous LLM-powered coding agent that lives in your terminal. It uses the ReAct (Reason + Act) pattern to understand your codebase, plan changes, and execute them with your approval. It's blazing fast, intuitive, and extensible with a modular skill system.
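As a rough illustration of the ReAct (Reason + Act) pattern mentioned above, the sketch below alternates between a reasoning step and a tool call until the reasoner decides it is finished. The types `Step`, `Reasoner`, and `Tools`, the function `runReact`, and the scripted reasoner are all hypothetical names invented for this example; this is not Autohand's actual implementation, and the approval step is left out for brevity.

```typescript
// Hypothetical types for illustration; not Autohand's actual internals.
type Step =
  | { kind: "act"; tool: string; input: string } // the reasoner wants to call a tool
  | { kind: "finish"; answer: string };          // the reasoner is done

type Reasoner = (history: string[]) => Step;
type Tools = { [name: string]: (input: string) => string };

// Alternate between reasoning (pick the next step) and acting (run a tool),
// feeding each observation back into the history until the reasoner finishes.
function runReact(reason: Reasoner, tools: Tools, task: string, maxSteps = 10): string {
  const history: string[] = [`task: ${task}`];
  for (let i = 0; i < maxSteps; i++) {
    const step = reason(history);
    if (step.kind === "finish") return step.answer;
    const tool = tools[step.tool];
    const observation = tool ? tool(step.input) : `unknown tool: ${step.tool}`;
    history.push(`action: ${step.tool}(${step.input})`, `observation: ${observation}`);
  }
  return "stopped: step limit reached";
}

// Scripted stand-in for an LLM: read one file, then finish.
const scriptedReasoner: Reasoner = (history) =>
  history.length === 1
    ? { kind: "act", tool: "read_file", input: "README.md" }
    : { kind: "finish", answer: `done after ${(history.length - 1) / 2} tool call(s)` };

const demoTools: Tools = { read_file: (path) => `contents of ${path}` };
const reactResult = runReact(scriptedReasoner, demoTools, "summarize the README");
```

In a real agent the reasoner would be an LLM call and each tool would touch the workspace; the step limit guards against a loop that never finishes.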

We built it with a minimalist design philosophy to keep the focus on coding. Just install, run autohand, and start giving instructions in natural language. Autohand handles the rest.

Scale Autohand across your team and CI/CD pipelines to automate repetitive coding tasks, enforce code quality, and accelerate development velocity.

curl -fsSL https://autohand.ai/install.sh | bash

# Clone and build

git clone https://github.com/autohandai/cli.git

cd cli

bun install

bun run build

# Install globally

bun add -g .

- Bun ≥1.0 (`curl -fsSL https://bun.sh/install | bash`)

- Git (for version control features)

- ripgrep (optional, for faster search)

# Interactive mode - start a coding session

autohand

# Command mode - run a single instruction

autohand -p "add a dark mode toggle to the settings page"

# With auto-confirmation

autohand -p "fix the TypeScript errors" -y

# Auto-commit changes after task completion

autohand -p "refactor the auth module" -c

Use Autohand directly in your favorite editor:

Install the extension from the VS Code Marketplace or via command line:

code --install-extension AutohandAI.vscode-autohand

Install from the Zed Extensions marketplace.

Launch without arguments for a full REPL experience:

autohand

Features:

- Type `/` for slash command suggestions

- Type `@` for file autocomplete (e.g., `@src/index.ts`)

- Type `!` to run terminal commands (e.g., `! git status`, `! ls -la`)

- Smart Paste: Paste any amount of code (5+ lines shows compact indicator, full content sent to LLM)

- Press `ESC` to cancel in-flight requests

- Press `Ctrl+C` twice to exit
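The prefix conventions in the list above can be pictured as a small input classifier. The sketch below is only a guess at the behaviour described: `ReplInput`, `classifyInput`, and `pasteLabel` are hypothetical names, and the `[pasted N lines]` label format is invented for illustration.

```typescript
// Hypothetical classification of one line of REPL input by its leading character.
type ReplInput =
  | { kind: "slash"; command: string }                  // e.g. "/help"
  | { kind: "shell"; command: string }                  // e.g. "! git status"
  | { kind: "prompt"; text: string; files: string[] };  // natural language with @file mentions

function classifyInput(line: string): ReplInput {
  const trimmed = line.trim();
  if (trimmed.charAt(0) === "/") return { kind: "slash", command: trimmed.slice(1) };
  if (trimmed.charAt(0) === "!") return { kind: "shell", command: trimmed.slice(1).trim() };
  // Collect every @path mention anywhere in the prompt.
  const files: string[] = [];
  const mention = /@(\S+)/g;
  let match = mention.exec(trimmed);
  while (match !== null) {
    files.push(match[1]);
    match = mention.exec(trimmed);
  }
  return { kind: "prompt", text: trimmed, files };
}

// Smart Paste: show a compact label for pastes of 5+ lines.
// Only the display changes; the caller would still send the full text to the LLM.
function pasteLabel(pasted: string): string {
  const lineCount = pasted.split("\n").length;
  return lineCount >= 5 ? `[pasted ${lineCount} lines]` : pasted;
}
```

For example, `classifyInput("! git status")` yields a `shell` input with the command `git status`, while `classifyInput("explain @src/index.ts")` yields a `prompt` input that mentions one file.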

Run single instructions for CI/CD, scripts, or quick tasks:

# Basic usage

autohand --prompt "add tests for the user service"

# Short form

autohand -p "fix linting errors"

# With options

autohand -p "update dependencies" --yes --auto-commit

# Dry run (preview changes without applying)

autohand -p "refactor database queries" --dry-run

| Option | Short | Description |
|---|---|---|
| `--prompt <text>` | `-p` | Run a single instruction in command mode |
| `--yes` | `-y` | Auto-confirm risky actions |
| `--auto-commit` | `-c` | Auto-commit changes after completing tasks |
| `--dry-run` | | Preview actions without applying mutations |
| `--model <model>` | | Override the configured LLM model |
| `--path <path>` | | Workspace path to operate in |
| `--auto-skill` | | Auto-generate skills based on project analysis |
| `--unrestricted` | | Run without approval prompts (use with caution) |
| `--restricted` | | Deny all dangerous operations automatically |
| `--config <path>` | | Path to config file |
| `--temperature <value>` | | Sampling temperature for LLM |
| `--login` | | Sign in to your Autohand account |
| `--logout` | | Sign out of your Autohand account |
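When command mode is driven from a script or CI job, it can help to assemble the argument list programmatically. The sketch below uses only flags listed in the options table; the `RunOptions` interface and the `buildArgs` helper are hypothetical and exist purely for this example.

```typescript
// Options mirror a subset of the documented flags; the interface itself is hypothetical.
interface RunOptions {
  prompt: string;
  yes?: boolean;
  autoCommit?: boolean;
  dryRun?: boolean;
  model?: string;
  path?: string;
}

// Translate the options object into the argument array for an `autohand` invocation.
function buildArgs(opts: RunOptions): string[] {
  const args = ["--prompt", opts.prompt];
  if (opts.yes) args.push("--yes");
  if (opts.autoCommit) args.push("--auto-commit");
  if (opts.dryRun) args.push("--dry-run");
  if (opts.model !== undefined) args.push("--model", opts.model);
  if (opts.path !== undefined) args.push("--path", opts.path);
  return args;
}

// Preview a lint fix in a sub-package without applying it.
const ciArgs = buildArgs({ prompt: "fix linting errors", dryRun: true, path: "./packages/web" });
```

Passing the prompt as a single array element, rather than building one shell string, avoids quoting problems when the instruction contains spaces or quotes.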

Skills are modular instruction packages that extend Autohand with specialized workflows. They work like on-demand AGENTS.md files for specific tasks.

# List available skills

/skills

# Activate a skill

/skills use changelog-generator

# Create a new skill interactively

/skills new

# Auto-generate project-specific skills

autohand --auto-skill

Analyze your project and generate tailored skills automatically:

$ autohand --auto-skill

Analyzing project structure...

Detected: typescript, react, nextjs, testing

Platform: darwin

Generating skills...

✓ nextjs-component-creator

✓ typescript-test-generator

✓ changelog-generator

✓ Generated 3 skills in .autohand/skills

Skills are discovered from:

- `~/.autohand/skills/` - User-level skills

- `<project>/.autohand/skills/` - Project-level skills

- Compatible with Codex and Claude skill formats

See Agent Skills Documentation for creating custom skills.
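One way to picture discovery across the two skill locations is a merge keyed by skill name. The sketch below makes two assumptions that the README does not state: each skill is a named entry directly under a skills directory, and a project-level skill overrides a user-level skill of the same name. `Skill` and `resolveSkills` are hypothetical names.

```typescript
// Hypothetical model: a skill is identified by its name and where it was found.
interface Skill {
  name: string;
  source: string;
}

// Merge skills from both locations.
// Assumption: project-level entries replace user-level entries with the same name.
function resolveSkills(userSkills: string[], projectSkills: string[], projectRoot: string): Skill[] {
  const byName: { [name: string]: Skill } = {};
  for (const name of userSkills) {
    byName[name] = { name, source: "~/.autohand/skills/" + name };
  }
  for (const name of projectSkills) {
    byName[name] = { name, source: projectRoot + "/.autohand/skills/" + name };
  }
  // Sort by name so the listing is stable.
  return Object.keys(byName).sort().map((name) => byName[name]);
}
```

With `resolveSkills(["changelog-generator", "notes"], ["changelog-generator"], "/repo")`, the project copy of `changelog-generator` would win and `notes` would still come from the user directory.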

| Command | Description |
|---|---|
| `/help` | Display available commands |
| `/quit` | Exit the session |
| `/model` | Switch LLM models |
| `/new` | Start fresh conversation |
| `/undo` | Revert last changes |
| `/session` | Show current session details |
| `/sessions` | List past sessions |
| `/resume` | Resume a previous session |
| `/memory` | View/manage stored memories |
| `/init` | Create AGENTS.md file |
| `/agents` | List sub-agents |
| `/agents-new` | Create new agent via wizard |
| `/skills` | List and manage skills |
| `/skills new` | Create a new skill |
| `/feedback` | Send feedback |
| `/formatters` | List code formatters |
| `/lint` | List code linters |
| `/completion` | Generate shell completion scripts |
| `/export` | Export session to markdown/JSON/HTML |
| `/status` | Show workspace status |
| `/login` | Authenticate with Autohand API |
| `/logout` | Sign out |
| `/permissions` | Manage tool permissions |

Autohand includes 40+ tools for autonomous coding:

read_file, write_file, append_file, apply_patch, search, search_replace, semantic_search, list_tree, create_directory, delete_path, rename_path, copy_path, multi_file_edit

git_status, git_diff, git_commit, git_add, git_branch, git_switch, git_merge, git_rebase, git_cherry_pick, git_stash, git_fetch, git_pull, git_push, auto_commit

run_command, custom_command, add_dependency, remove_dependency

plan, todo_write, save_memory, recall_memory

Create ~/.autohand/config.json:

{
  "provider": "openrouter",
  "openrouter": {
    "apiKey": "sk-or-...",
    "model": "anthropic/claude-sonnet-4-20250514"
  },
  "workspace": {
    "defaultRoot": ".",
    "allowDangerousOps": false
  },
  "ui": {
    "theme": "dark",
    "autoConfirm": false
  }
}

| Provider | Config Key | Notes |
|---|---|---|
| OpenRouter | `openrouter` | Access to Claude, GPT-4, Grok, etc. |
| Anthropic | `anthropic` | Direct Claude API access |
| OpenAI | `openai` | GPT-4 and other models |
| Ollama | `ollama` | Local models |
| llama.cpp | `llamacpp` | Local inference |
| MLX | `mlx` | Apple Silicon optimized |
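A quick sanity check on a parsed config can catch a mistyped provider before the CLI runs. The sketch below takes the provider keys from the table above; the `checkConfig` helper is hypothetical, and the rule that the selected provider needs a section of the same name is an assumption inferred from the example config, not documented behaviour.

```typescript
// Config keys taken from the provider table; everything else is a hypothetical sketch.
const PROVIDER_KEYS = ["openrouter", "anthropic", "openai", "ollama", "llamacpp", "mlx"];

// Return a list of problems; an empty list means the basic shape looks right.
function checkConfig(config: { [key: string]: unknown }): string[] {
  const problems: string[] = [];
  const provider = config["provider"];
  if (typeof provider !== "string" || PROVIDER_KEYS.indexOf(provider) === -1) {
    problems.push("provider must be one of: " + PROVIDER_KEYS.join(", "));
    return problems;
  }
  // Assumption: the selected provider has a settings section under its own key.
  const section = config[provider];
  if (typeof section !== "object" || section === null) {
    problems.push('missing "' + provider + '" section for the selected provider');
  }
  return problems;
}
```

Calling `checkConfig` on the result of `JSON.parse` for the example config returns no problems, while a config that names a provider outside the table is flagged.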

Sessions are auto-saved to ~/.autohand/sessions/:

# Resume via command

autohand resume <session-id>

# Or in interactive mode

/resume

Autohand includes a permission system for sensitive operations:

- Interactive (default): Prompts for confirmation on risky actions

- Unrestricted (`--unrestricted`): No approval prompts

- Restricted (`--restricted`): Denies all dangerous operations
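The three modes above can be read as a small decision function. This is a sketch under the assumption that operations not considered dangerous always run; the `Mode` and `Decision` types and the `decide` function are hypothetical names for illustration.

```typescript
type Mode = "interactive" | "unrestricted" | "restricted";
type Decision = "allow" | "prompt" | "deny";

// Assumption: safe operations always run; the mode only changes how dangerous ones are handled.
function decide(mode: Mode, dangerous: boolean): Decision {
  if (!dangerous) return "allow";
  switch (mode) {
    case "unrestricted":
      return "allow"; // no approval prompts
    case "restricted":
      return "deny"; // dangerous operations are refused outright
    default:
      return "prompt"; // interactive: ask the user first
  }
}
```

Restricted mode suits unattended runs such as CI, where nobody is present to answer a prompt and a refusal is safer than a silent approval.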

Configure granular permissions in ~/.autohand/config.json:

{
  "permissions": {
    "whitelist": ["run_command:npm *", "run_command:bun *"],
    "blacklist": ["run_command:rm -rf *", "run_command:sudo *"]
  }
}

- macOS

- Linux

- Windows
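Returning to the whitelist and blacklist entries in the permissions config: the README does not spell out how patterns such as `run_command:npm *` are matched. The sketch below assumes that `*` matches any run of characters, that everything else is literal, and that the blacklist is checked first. `patternToRegExp`, `matchesAny`, and `evaluate` are hypothetical helpers.

```typescript
// Convert a pattern such as "run_command:npm *" into a regular expression,
// treating "*" as "any characters" and every other character literally.
function patternToRegExp(pattern: string): RegExp {
  const escaped = pattern.replace(/[.+?^${}()|[\]\\]/g, "\\$&");
  return new RegExp("^" + escaped.replace(/\*/g, ".*") + "$");
}

function matchesAny(patterns: string[], action: string): boolean {
  for (const p of patterns) {
    if (patternToRegExp(p).test(action)) return true;
  }
  return false;
}

type Verdict = "allow" | "deny" | "ask";

// Assumption: the blacklist is checked first, so a deny always wins over an allow.
function evaluate(action: string, whitelist: string[], blacklist: string[]): Verdict {
  if (matchesAny(blacklist, action)) return "deny";
  if (matchesAny(whitelist, action)) return "allow";
  return "ask"; // fall back to the interactive prompt
}

const whitelist = ["run_command:npm *", "run_command:bun *"];
const blacklist = ["run_command:rm -rf *", "run_command:sudo *"];
```

Under these assumptions, `evaluate("run_command:npm test", whitelist, blacklist)` is allowed, `run_command:sudo npm install` is denied, and `run_command:git push` falls through to a prompt.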

Telemetry is disabled by default. Opt in to help improve Autohand:

{
  "telemetry": {
    "enabled": true
  }
}

When enabled, Autohand collects anonymous usage data (no PII, no code content). See Telemetry Documentation for details.

The backend API is available at: https://github.com/autohandai/api

# Install dependencies

bun install

# Development mode

bun run dev

# Build

bun run build

# Type check

bun run typecheck

# Run tests

bun test

FROM oven/bun:1

WORKDIR /app

COPY . .

RUN bun install && bun run build

CMD ["./dist/cli.js"]

docker build -t autohand .

docker run -it autohand

- Playbook - 20 use cases for the software development lifecycle

- Features - Complete feature list

- Agent Skills - Skills system guide

- Configuration Reference - All config options

Apache License 2.0 - Free for individuals, non-profits, educational institutions, open source projects, and companies with ARR under $5M. See LICENSE and COMMERCIAL.md for details.

- Website: https://autohand.ai

- CLI Install: https://autohand.ai/cli/

- GitHub: https://github.com/autohandai/cli

- API Backend: https://github.com/autohandai/api


Similar Open Source Tools


tunacode

TunaCode CLI is an AI-powered coding assistant that provides a command-line interface for developers to enhance their coding experience. It offers features like model selection, parallel execution for faster file operations, and various commands for code management. The tool aims to improve coding efficiency and provide a seamless coding environment for developers.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can query multiple AI providers simultaneously to generate git commit messages without waiting for all of the responses. The tool runs 'git diff' to grab code changes, sends them to the configured AI, and returns the AI-generated commit message. Users can set API keys or cookies for different providers and configure options like locale, number of generated messages, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers

* Track LLM usage on a per-user and per-organization basis

* Block or redact requests containing PII

* Improve LLM reliability with failovers, retries and caching

* Distribute API keys with rate limits and cost limits for internal development/production use cases

* Distribute API keys with rate limits and cost limits for students

atlas-mcp-server

ATLAS (Adaptive Task & Logic Automation System) is a high-performance Model Context Protocol server designed for LLMs to manage complex task hierarchies. Built with TypeScript, it features ACID-compliant storage, efficient task tracking, and intelligent template management. ATLAS provides LLM Agents task management through a clean, flexible tool interface. The server implements the Model Context Protocol (MCP) for standardized communication between LLMs and external systems, offering hierarchical task organization, task state management, smart templates, enterprise features, and performance optimization.

worker-vllm

The worker-vLLM repository provides a serverless endpoint for deploying OpenAI-compatible vLLM models with blazing-fast performance. It supports deploying various model architectures, such as Aquila, Baichuan, BLOOM, ChatGLM, Command-R, DBRX, DeciLM, Falcon, Gemma, GPT-2, GPT BigCode, GPT-J, GPT-NeoX, InternLM, Jais, LLaMA, MiniCPM, Mistral, Mixtral, MPT, OLMo, OPT, Orion, Phi, Phi-3, Qwen, Qwen2, Qwen2MoE, StableLM, Starcoder2, Xverse, and Yi. Users can deploy models using pre-built Docker images or build custom images with specified arguments. The repository also supports OpenAI compatibility for chat completions, completions, and models, with customizable input parameters. Users can modify their OpenAI codebase to use the deployed vLLM worker and access a list of available models for deployment.

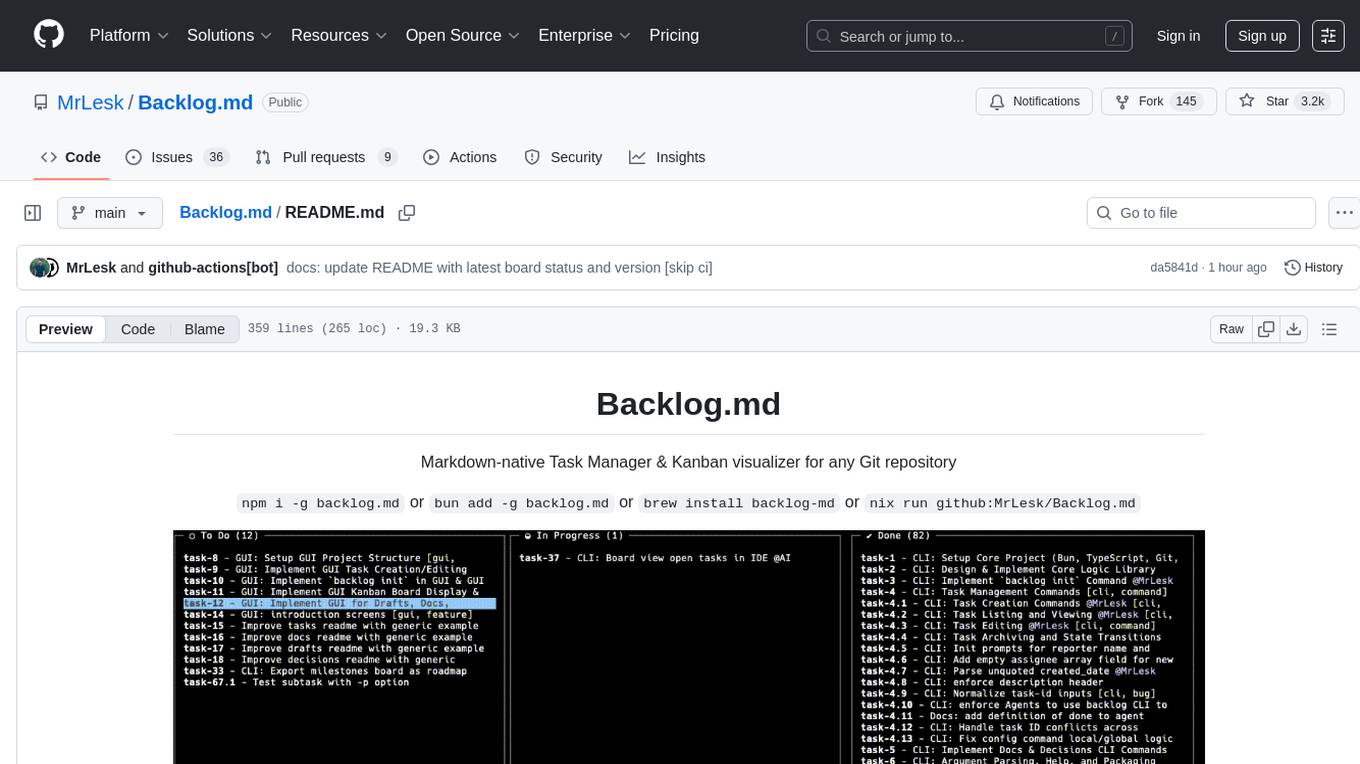

Backlog.md

Backlog.md is a Markdown-native Task Manager & Kanban visualizer for any Git repository. It turns any folder with a Git repo into a self-contained project board powered by plain Markdown files and a zero-config CLI. Features include managing tasks as plain .md files, private & offline usage, instant terminal Kanban visualization, board export, modern web interface, AI-ready CLI, rich query commands, cross-platform support, and MIT-licensed open-source. Users can create tasks, view board, assign tasks to AI, manage documentation, make decisions, and configure settings easily.

ruler

Ruler is a tool designed to centralize AI coding assistant instructions, providing a single source of truth for managing instructions across multiple AI coding tools. It helps in avoiding inconsistent guidance, duplicated effort, context drift, onboarding friction, and complex project structures by automatically distributing instructions to the right configuration files. With support for nested rule loading, Ruler can handle complex project structures with context-specific instructions for different components. It offers features like centralised rule management, nested rule loading, automatic distribution, targeted agent configuration, MCP server propagation, .gitignore automation, and a simple CLI for easy configuration management.

snapai

SnapAI is a tool that leverages AI-powered image generation models to create professional app icons for React Native & Expo developers. It offers lightning-fast icon generation, iOS optimized icons, privacy-first approach with local API key storage, multiple sizes and HD quality icons. The tool is developer-friendly with a simple CLI for easy integration into CI/CD pipelines.

mcp-devtools

MCP DevTools is a high-performance server written in Go that replaces multiple Node.js and Python-based servers. It provides access to essential developer tools through a unified, modular interface. The server is efficient, with minimal memory footprint and fast response times. It offers a comprehensive tool suite for agentic coding, including 20+ essential developer agent tools. The tool registry allows for easy addition of new tools. The server supports multiple transport modes, including STDIO, HTTP, and SSE. It includes a security framework for multi-layered protection and a plugin system for adding new tools.

paperbanana

PaperBanana is an automated academic illustration tool designed for AI scientists. It implements an agentic framework for generating publication-quality academic diagrams and statistical plots from text descriptions. The tool utilizes a two-phase multi-agent pipeline with iterative refinement, Gemini-based VLM planning, and image generation. It offers a CLI, Python API, and MCP server for IDE integration, along with Claude Code skills for generating diagrams, plots, and evaluating diagrams. PaperBanana is not affiliated with or endorsed by the original authors or Google Research, and it may differ from the original system described in the paper.

gpt-load

GPT-Load is a high-performance, enterprise-grade AI API transparent proxy service designed for enterprises and developers needing to integrate multiple AI services. Built with Go, it features intelligent key management, load balancing, and comprehensive monitoring capabilities for high-concurrency production environments. The tool serves as a transparent proxy service, preserving native API formats of various AI service providers like OpenAI, Google Gemini, and Anthropic Claude. It supports dynamic configuration, distributed leader-follower deployment, and a Vue 3-based web management interface. GPT-Load is production-ready with features like dual authentication, graceful shutdown, and error recovery.

pup

Pup is a Go-based command-line wrapper designed for easy interaction with Datadog APIs. It provides a fast, cross-platform binary with support for OAuth2 authentication and traditional API key authentication. The tool offers simple commands for common Datadog operations, structured JSON output for parsing and automation, and dynamic client registration with unique OAuth credentials per installation. Pup currently implements 38 out of 85+ available Datadog APIs, covering core observability, monitoring & alerting, security & compliance, infrastructure & cloud, incident & operations, CI/CD & development, organization & access, and platform & configuration domains. Users can easily install Pup via Homebrew, Go Install, or manual download, and authenticate using OAuth2 or API key methods. The tool supports various commands for tasks such as testing connection, managing monitors, querying metrics, handling dashboards, working with SLOs, and handling incidents.

google_workspace_mcp

The Google Workspace MCP Server is a production-ready server that integrates major Google Workspace services with AI assistants. It supports single-user and multi-user authentication via OAuth 2.1, making it a powerful backend for custom applications. Built with FastMCP for optimal performance, it features advanced authentication handling, service caching, and streamlined development patterns. The server provides full natural language control over Google Calendar, Drive, Gmail, Docs, Sheets, Slides, Forms, Tasks, and Chat through all MCP clients, AI assistants, and developer tools. It supports free Google accounts and Google Workspace plans with expanded app options like Chat & Spaces. The server also offers private cloud instance options.

aiosmb

aiosmb is a fully asynchronous SMB library written in pure Python, supporting Python 3.7 and above. It offers various authentication methods such as Kerberos, NTLM, SSPI, and NEGOEX. The library supports connections over TCP and QUIC protocols, with proxy support for SOCKS4 and SOCKS5. Users can specify an SMB connection using a URL format, making it easier to authenticate and connect to SMB hosts. The project aims to implement DCERPC features, VSS mountpoint operations, and other enhancements in the future. It is inspired by Impacket and AzureADJoinedMachinePTC projects.

kubectl-mcp-server

Control your entire Kubernetes infrastructure through natural language conversations with AI. Talk to your clusters like you talk to a DevOps expert. Debug crashed pods, optimize costs, deploy applications, audit security, manage Helm charts, and visualize dashboards—all through natural language. The tool provides 253 powerful tools, 8 workflow prompts, 8 data resources, and works with all major AI assistants. It offers AI-powered diagnostics, built-in cost optimization, enterprise-ready features, zero learning curve, universal compatibility, visual insights, and production-grade deployment options. From debugging crashed pods to optimizing cluster costs, kubectl-mcp-server is your AI-powered DevOps companion.

For similar tasks

claude-coder

Claude Coder is an AI-powered coding companion in the form of a VS Code extension that helps users transform ideas into code, convert designs into applications, debug intuitively, accelerate development with automation, and improve coding skills. It aims to bridge the gap between imagination and implementation, making coding accessible and efficient for developers of all skill levels.


elysium

Elysium is an Emacs package that allows users to automatically apply AI-generated changes while coding. By calling `elysium-query`, users can request a set of changes that will be merged into the code buffer. The tool supports making queries on a specific region without leaving the code buffer. It uses the `gptel` backend and currently recommends using the Claude 3-5 Sonnet model for generating code. Users can customize the window size and style of the Elysium buffer. The tool also provides functions to keep or discard AI-suggested changes and navigate conflicting hunks with `smerge-mode`.

For similar jobs

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

askui

AskUI is a reliable, automated end-to-end automation tool that only depends on what is shown on your screen instead of the technology or platform you are running on.

bots

The 'bots' repository is a collection of guides, tools, and example bots for programming bots to play video games. It provides resources on running bots live, installing the BotLab client, debugging bots, testing bots in simulated environments, and more. The repository also includes example bots for games like EVE Online, Tribal Wars 2, and Elvenar. Users can learn about developing bots for specific games, syntax of the Elm programming language, and tools for memory reading development. Additionally, there are guides on bot programming, contributing to BotLab, and exploring Elm syntax and core library.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

LaVague

LaVague is an open-source Large Action Model framework that uses advanced AI techniques to compile natural language instructions into browser automation code. It leverages Selenium or Playwright for browser actions. Users can interact with LaVague through an interactive Gradio interface to automate web interactions. The tool requires an OpenAI API key for default examples and offers a Playwright integration guide. Contributors can help by working on outlined tasks, submitting PRs, and engaging with the community on Discord. The project roadmap is available to track progress, but users should exercise caution when executing LLM-generated code using 'exec'.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

Open-Interface

Open Interface is self-driving software that automates computer tasks by sending user requests to a language model backend (e.g., GPT-4V) and simulating keyboard and mouse inputs to execute the steps. It course-corrects by sending current screenshots to the language models. The tool supports macOS, Linux, and Windows, and requires setting up the OpenAI API key for access to GPT-4V. It can automate tasks like creating meal plans, setting up custom language model backends, and more. Open Interface is currently not efficient in accurate spatial reasoning, tracking itself in tabular contexts, and navigating complex GUI-rich applications. Future improvements aim to enhance the tool's capabilities with better models trained on video walkthroughs. The tool is cost-effective, with user requests priced between $0.05 and $0.20, and offers features like interrupting the app and primary display visibility in multi-monitor setups.

AI-Case-Sorter-CS7.1

AI-Case-Sorter-CS7.1 is a project focused on building a case sorter using machine vision and machine learning AI to sort cases by headstamp. The repository includes Arduino code and 3D models necessary for the project.