appwrite

Appwrite® - complete cloud infrastructure for your web, mobile and AI apps. Including Auth, Databases, Storage, Functions, Messaging, Hosting, Realtime and more

Stars: 54721

Appwrite is a best-in-class, developer-first platform that provides everything needed to create scalable, stable, and production-ready software quickly. It is an end-to-end platform for building Web, Mobile, Native, or Backend apps, packaged as Docker microservices. Appwrite abstracts the complexity of building modern apps and allows users to build secure, full-stack applications faster. It offers features like user authentication, database management, storage, file management, image manipulation, Cloud Functions, messaging, and more services.

README:

We just announced DB operators for Appwrite Databases - Learn more

Appwrite Cloud is now Generally Available - Learn more

Appwrite is a best-in-class, developer-first platform that gives builders everything they need to create scalable, stable, and production-ready software, fast.

English | 简体中文

Appwrite is an end-to-end platform for building Web, Mobile, Native, or Backend apps, packaged as a set of Docker microservices. It includes both a backend server and a fully integrated hosting solution for deploying static and server-side rendered frontends. Appwrite abstracts the complexity and repetitiveness required to build modern apps from scratch and allows you to build secure, full-stack applications faster.

Using Appwrite, you can easily integrate your app with user authentication and multiple sign-in methods, a database for storing and querying users and team data, storage and file management, image manipulation, Cloud Functions, messaging, and more services.

Find out more at: https://appwrite.io.

Table of Contents:

- Installation & Setup

- Self-Hosting

- One-Click Setups

- Getting Started

- Architecture

- Contributing

- Security

- Follow Us

- License

The easiest way to get started with Appwrite is by signing up for Appwrite Cloud. While Appwrite Cloud is in public beta, you can build with Appwrite completely free, and we won't collect your credit card information.

Appwrite is designed to run in a containerized environment. Running your server is as easy as running one command from your terminal. You can either run Appwrite on your localhost using docker-compose or on any other container orchestration tool, such as Kubernetes, Docker Swarm, or Rancher.

Before running the installation command, make sure you have Docker installed on your machine:

docker run -it --rm \

--volume /var/run/docker.sock:/var/run/docker.sock \

--volume "$(pwd)"/appwrite:/usr/src/code/appwrite:rw \

--entrypoint="install" \

appwrite/appwrite:1.8.0docker run -it --rm ^

--volume //var/run/docker.sock:/var/run/docker.sock ^

--volume "%cd%"/appwrite:/usr/src/code/appwrite:rw ^

--entrypoint="install" ^

appwrite/appwrite:1.8.0docker run -it --rm `

--volume /var/run/docker.sock:/var/run/docker.sock `

--volume ${pwd}/appwrite:/usr/src/code/appwrite:rw `

--entrypoint="install" `

appwrite/appwrite:1.8.0Once the Docker installation is complete, go to http://localhost to access the Appwrite console from your browser. Please note that on non-Linux native hosts, the server might take a few minutes to start after completing the installation.

For advanced production and custom installation, check out our Docker environment variables docs. You can also use our public docker-compose.yml and .env files to manually set up an environment.

If you are upgrading your Appwrite server from an older version, you should use the Appwrite migration tool once your setup is completed. For more information regarding this, check out the Installation Docs.

In addition to running Appwrite locally, you can also launch Appwrite using a pre-configured setup. This allows you to get up and running quickly with Appwrite without installing Docker on your local machine.

Choose from one of the providers below:

|

DigitalOcean |

Akamai Compute |

AWS Marketplace |

Getting started with Appwrite is as easy as creating a new project, choosing your platform, and integrating its SDK into your code. You can easily get started with your platform of choice by reading one of our Getting Started tutorials.

- Account - Manage current user authentication and account. Track and manage the user sessions, devices, sign-in methods, and security logs.

- Users - Manage and list all project users when building backend integrations with Server SDKs.

- Teams - Manage and group users in teams. Manage memberships, invites, and user roles within a team.

- Databases - Manage databases, collections, and documents. Read, create, update, and delete documents and filter lists of document collections using advanced filters.

- Storage - Manage storage files. Read, create, delete, and preview files. Manipulate the preview of your files to perfectly fit your app. All files are scanned by ClamAV and stored in a secure and encrypted way.

- Functions - Customize your Appwrite project by executing your custom code in a secure, isolated environment. You can trigger your code on any Appwrite system event either manually or using a CRON schedule.

- Messaging - Communicate with your users through push notifications, emails, and SMS text messages using Appwrite Messaging.

- Realtime - Listen to real-time events for any of your Appwrite services including users, storage, functions, databases, and more.

- Locale - Track your user's location and manage your app locale-based data.

- Avatars - Manage your users' avatars, countries' flags, browser icons, and credit card symbols. Generate QR codes from links or plaintext strings.

- MCP - Use Appwrite's Model Context Protocol (MCP) server to allow LLMs and AI tools like Claude Desktop, Cursor, and Windsurf Editor to directly interact with your Appwrite project through natural language.

- Sites - Develop, deploy, and scale your web applications directly from Appwrite, alongside your backend.

For the complete API documentation, visit https://appwrite.io/docs. For more tutorials, news and announcements check out our blog and Discord Server.

Below is a list of currently supported platforms and languages. If you would like to help us add support to your platform of choice, you can go over to our SDK Generator project and view our contribution guide.

- ✅ Web (Maintained by the Appwrite Team)

- ✅ Flutter (Maintained by the Appwrite Team)

- ✅ Apple (Maintained by the Appwrite Team)

- ✅ Android (Maintained by the Appwrite Team)

- ✅ React Native - Beta (Maintained by the Appwrite Team)

- ✅ NodeJS (Maintained by the Appwrite Team)

- ✅ PHP (Maintained by the Appwrite Team)

- ✅ Dart (Maintained by the Appwrite Team)

- ✅ Deno (Maintained by the Appwrite Team)

- ✅ Ruby (Maintained by the Appwrite Team)

- ✅ Python (Maintained by the Appwrite Team)

- ✅ Kotlin (Maintained by the Appwrite Team)

- ✅ Swift (Maintained by the Appwrite Team)

- ✅ .NET - Beta (Maintained by the Appwrite Team)

- ✅ Appcelerator Titanium (Maintained by Michael Gangolf)

- ✅ Godot Engine (Maintained by fenix-hub @GodotNuts)

Looking for more SDKs? - Help us by contributing a pull request to our SDK Generator!

Appwrite uses a microservices architecture that was designed for easy scaling and delegation of responsibilities. In addition, Appwrite supports multiple APIs, such as REST, WebSocket, and GraphQL to allow you to interact with your resources by leveraging your existing knowledge and protocols of choice.

The Appwrite API layer was designed to be extremely fast by leveraging in-memory caching and delegating any heavy-lifting tasks to the Appwrite background workers. The background workers also allow you to precisely control your compute capacity and costs using a message queue to handle the load. You can learn more about our architecture in the contribution guide.

All code contributions, including those of people having commit access, must go through a pull request and be approved by a core developer before being merged. This is to ensure a proper review of all the code.

We truly ❤️ pull requests! If you wish to help, you can learn more about how you can contribute to this project in the contribution guide.

For security issues, kindly email us at [email protected] instead of posting a public issue on GitHub.

Join our growing community around the world! Check out our official Blog. Follow us on X, LinkedIn, Dev Community or join our live Discord server for more help, ideas, and discussions.

This repository is available under the BSD 3-Clause License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for appwrite

Similar Open Source Tools

appwrite

Appwrite is a best-in-class, developer-first platform that provides everything needed to create scalable, stable, and production-ready software quickly. It is an end-to-end platform for building Web, Mobile, Native, or Backend apps, packaged as Docker microservices. Appwrite abstracts the complexity of building modern apps and allows users to build secure, full-stack applications faster. It offers features like user authentication, database management, storage, file management, image manipulation, Cloud Functions, messaging, and more services.

kubesphere

KubeSphere is a distributed operating system for cloud-native application management, using Kubernetes as its kernel. It provides a plug-and-play architecture, allowing third-party applications to be seamlessly integrated into its ecosystem. KubeSphere is also a multi-tenant container platform with full-stack automated IT operation and streamlined DevOps workflows. It provides developer-friendly wizard web UI, helping enterprises to build out a more robust and feature-rich platform, which includes most common functionalities needed for enterprise Kubernetes strategy.

swirl-search

Swirl is an open-source software that allows users to simultaneously search multiple content sources and receive AI-ranked results. It connects to various data sources, including databases, public data services, and enterprise sources, and utilizes AI and LLMs to generate insights and answers based on the user's data. Swirl is easy to use, requiring only the download of a YML file, starting in Docker, and searching with Swirl. Users can add credentials to preloaded SearchProviders to access more sources. Swirl also offers integration with ChatGPT as a configured AI model. It adapts and distributes user queries to anything with a search API, re-ranking the unified results using Large Language Models without extracting or indexing anything. Swirl includes five Google Programmable Search Engines (PSEs) to get users up and running quickly. Key features of Swirl include Microsoft 365 integration, SearchProvider configurations, query adaptation, synchronous or asynchronous search federation, optional subscribe feature, pipelining of Processor stages, results stored in SQLite3 or PostgreSQL, built-in Query Transformation support, matching on word stems and handling of stopwords, duplicate detection, re-ranking of unified results using Cosine Vector Similarity, result mixers, page through all results requested, sample data sets, optional spell correction, optional search/result expiration service, easily extensible Connector and Mixer objects, and a welcoming community for collaboration and support.

dify

Dify is an open-source LLM app development platform that combines AI workflow, RAG pipeline, agent capabilities, model management, observability features, and more. It allows users to quickly go from prototype to production. Key features include: 1. Workflow: Build and test powerful AI workflows on a visual canvas. 2. Comprehensive model support: Seamless integration with hundreds of proprietary / open-source LLMs from dozens of inference providers and self-hosted solutions. 3. Prompt IDE: Intuitive interface for crafting prompts, comparing model performance, and adding additional features. 4. RAG Pipeline: Extensive RAG capabilities that cover everything from document ingestion to retrieval. 5. Agent capabilities: Define agents based on LLM Function Calling or ReAct, and add pre-built or custom tools. 6. LLMOps: Monitor and analyze application logs and performance over time. 7. Backend-as-a-Service: All of Dify's offerings come with corresponding APIs for easy integration into your own business logic.

SurfSense

SurfSense is a tool designed to help users save and organize content from the internet into a personal Knowledge Graph. It allows users to capture web browsing sessions and webpage content using a Chrome extension, enabling easy retrieval and recall of saved information. SurfSense offers features like powerful search capabilities, natural language interaction with saved content, self-hosting options, and integration with GraphRAG for meaningful content relations. The tool eliminates the need for web scraping by directly reading data from the DOM, making it a convenient solution for managing online information.

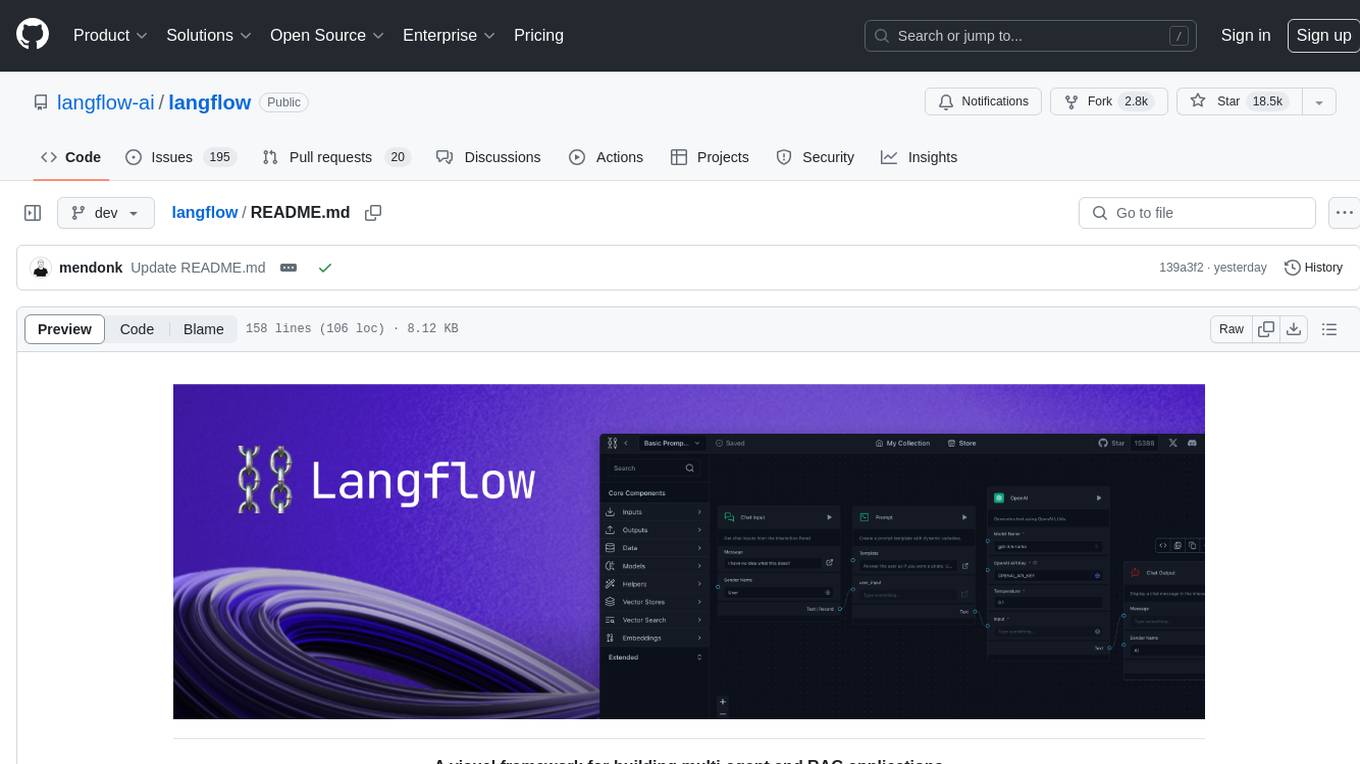

langflow

Langflow is an open-source Python-powered visual framework designed for building multi-agent and RAG applications. It is fully customizable, language model agnostic, and vector store agnostic. Users can easily create flows by dragging components onto the canvas, connect them, and export the flow as a JSON file. Langflow also provides a command-line interface (CLI) for easy management and configuration, allowing users to customize the behavior of Langflow for development or specialized deployment scenarios. The tool can be deployed on various platforms such as Google Cloud Platform, Railway, and Render. Contributors are welcome to enhance the project on GitHub by following the contributing guidelines.

tuff

Tuff is a local-first, AI-native, and infinitely extensible desktop command center designed to enhance workflow efficiency. It offers a seamless integration of core utilities, AI-powered search, contextual intelligence, and extensibility through custom plugins. With a beautiful UI design, rich functionality, simple operations, and a focus on security and reliability, Tuff provides users with a cross-platform desktop software that is easy to use and offers a good user experience.

ToolJet

ToolJet is an open-source platform for building and deploying internal tools, workflows, and AI agents. It offers a visual builder with drag-and-drop UI, integrations with databases, APIs, SaaS apps, and object storage. The community edition includes features like a visual app builder, ToolJet database, multi-page apps, collaboration tools, extensibility with plugins, code execution, and security measures. ToolJet AI, the enterprise version, adds AI capabilities for app generation, query building, debugging, agent creation, security compliance, user management, environment management, GitSync, branding, access control, embedded apps, and enterprise support.

superduper

superduper.io is a Python framework that integrates AI models, APIs, and vector search engines directly with existing databases. It allows hosting of models, streaming inference, and scalable model training/fine-tuning. Key features include integration of AI with data infrastructure, inference via change-data-capture, scalable model training, model chaining, simple Python interface, Python-first approach, working with difficult data types, feature storing, and vector search capabilities. The tool enables users to turn their existing databases into centralized repositories for managing AI model inputs and outputs, as well as conducting vector searches without the need for specialized databases.

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

kilocode

Kilo Code is an open-source VS Code AI agent that allows users to generate code from natural language, check its own work, run terminal commands, automate the browser, and utilize the latest AI models. It offers features like task automation, automated refactoring, and integration with MCP servers. Users can access 400+ AI models and benefit from transparent pricing. Kilo Code is a fork of Roo Code and Cline, with improvements and unique features developed independently.

ocular

Ocular is a set of modules and tools that allow you to build rich, reliable, and performant Generative AI-Powered Search Platforms without the need to reinvent Search Architecture. We help you build you spin up customized internal search in days not months.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

pyqt-openai

VividNode is a cross-platform AI desktop chatbot application for LLM such as GPT, Claude, Gemini, Llama chatbot interaction and image generation. It offers customizable features, local chat history, and enhanced performance without requiring a browser. The application is powered by GPT4Free and allows users to interact with chatbots and generate images seamlessly. VividNode supports Windows, Mac, and Linux, securely stores chat history locally, and provides features like chat interface customization, image generation, focus and accessibility modes, and extensive customization options with keyboard shortcuts for efficient operations.

super-agent-party

A 3D AI desktop companion with endless possibilities! This repository provides a platform for enhancing the LLM API without code modification, supporting seamless integration of various functionalities such as knowledge bases, real-time networking, multimodal capabilities, automation, and deep thinking control. It offers one-click deployment to multiple terminals, ecological tool interconnection, standardized interface opening, and compatibility across all platforms. Users can deploy the tool on Windows, macOS, Linux, or Docker, and access features like intelligent agent deployment, VRM desktop pets, Tavern character cards, QQ bot deployment, and developer-friendly interfaces. The tool supports multi-service providers, extensive tool integration, and ComfyUI workflows. Hardware requirements are minimal, making it suitable for various deployment scenarios.

For similar tasks

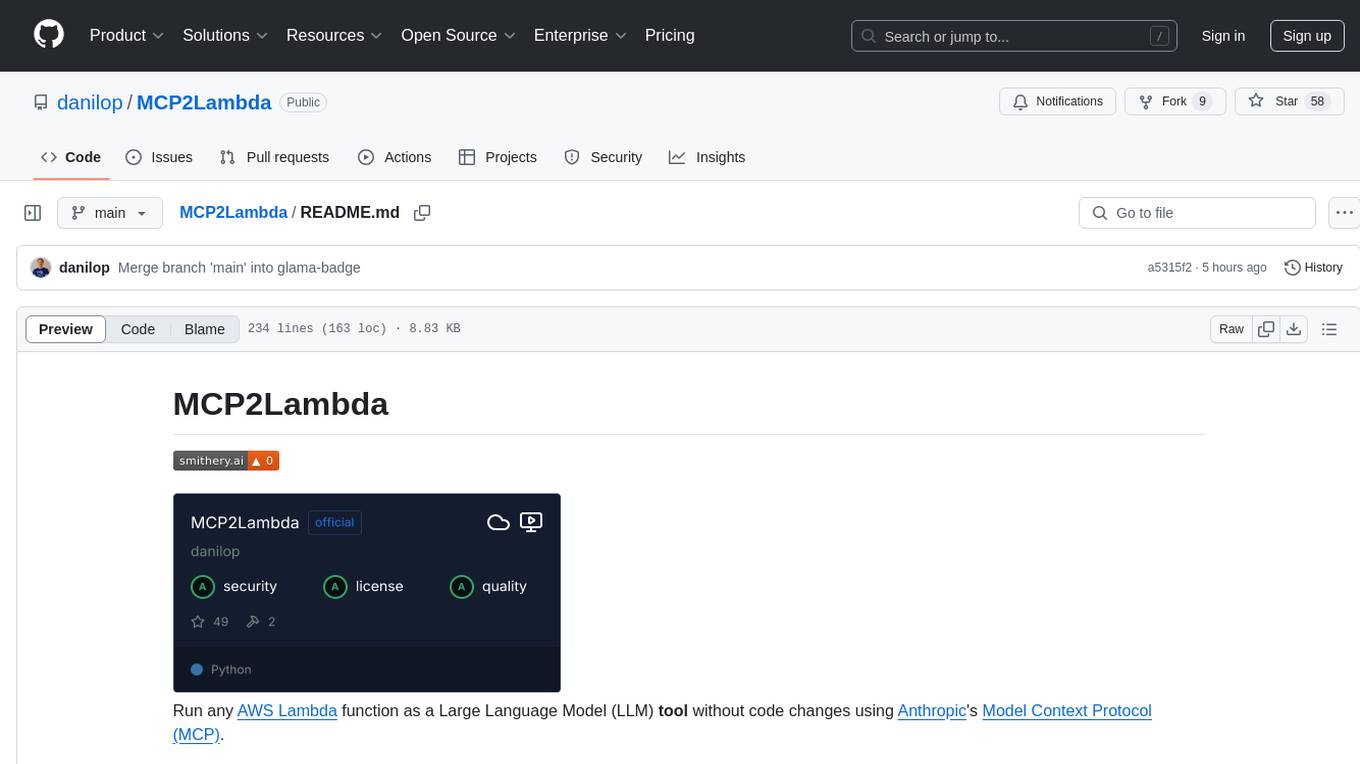

MCP2Lambda

MCP2Lambda is a server that acts as a bridge between MCP clients and AWS Lambda functions, allowing generative AI models to access and run Lambda functions as tools. It enables Large Language Models (LLMs) to interact with Lambda functions without code changes, providing access to private resources, AWS services, private networks, and the public internet. The server supports autodiscovery of Lambda functions and their invocation by name with parameters. It standardizes AI model access to external tools using the MCP protocol.

appwrite

Appwrite is a best-in-class, developer-first platform that provides everything needed to create scalable, stable, and production-ready software quickly. It is an end-to-end platform for building Web, Mobile, Native, or Backend apps, packaged as Docker microservices. Appwrite abstracts the complexity of building modern apps and allows users to build secure, full-stack applications faster. It offers features like user authentication, database management, storage, file management, image manipulation, Cloud Functions, messaging, and more services.

echo

Echo is a tool that simplifies the integration of AI SDKs into applications, providing instant OAuth, user accounts, and usage billing in just 5 lines of code. It eliminates the need to manage API keys, build authentication flows, or set up payment processing, allowing developers to go live in minutes. Users benefit from a universal balance that works across all Echo-powered apps, with revenue directly hitting the developer's GitHub account. Echo offers various SDKs for different frameworks like Next.js and React, along with templates to quickly start projects with Echo integration.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.