ansari-backend

Ansari is an AI assistant to help Muslims practice more effectively and non-Muslims to understand Islam

Stars: 87

Ansari is an experimental open source project that utilizes large language models to assist Muslims in enhancing their practice of Islam and non-Muslims in gaining a precise understanding of Islamic teachings. It employs carefully crafted prompts and multiple sources accessed through retrieval augmented generation. The tool can be installed from PyPI and offers a command-line interface for interactive and direct input modes. Users can also run Ansari as a backend service or on the command line. Additionally, the project includes CLI tools for interacting with the Ansari API and exploring individual search tools.

README:

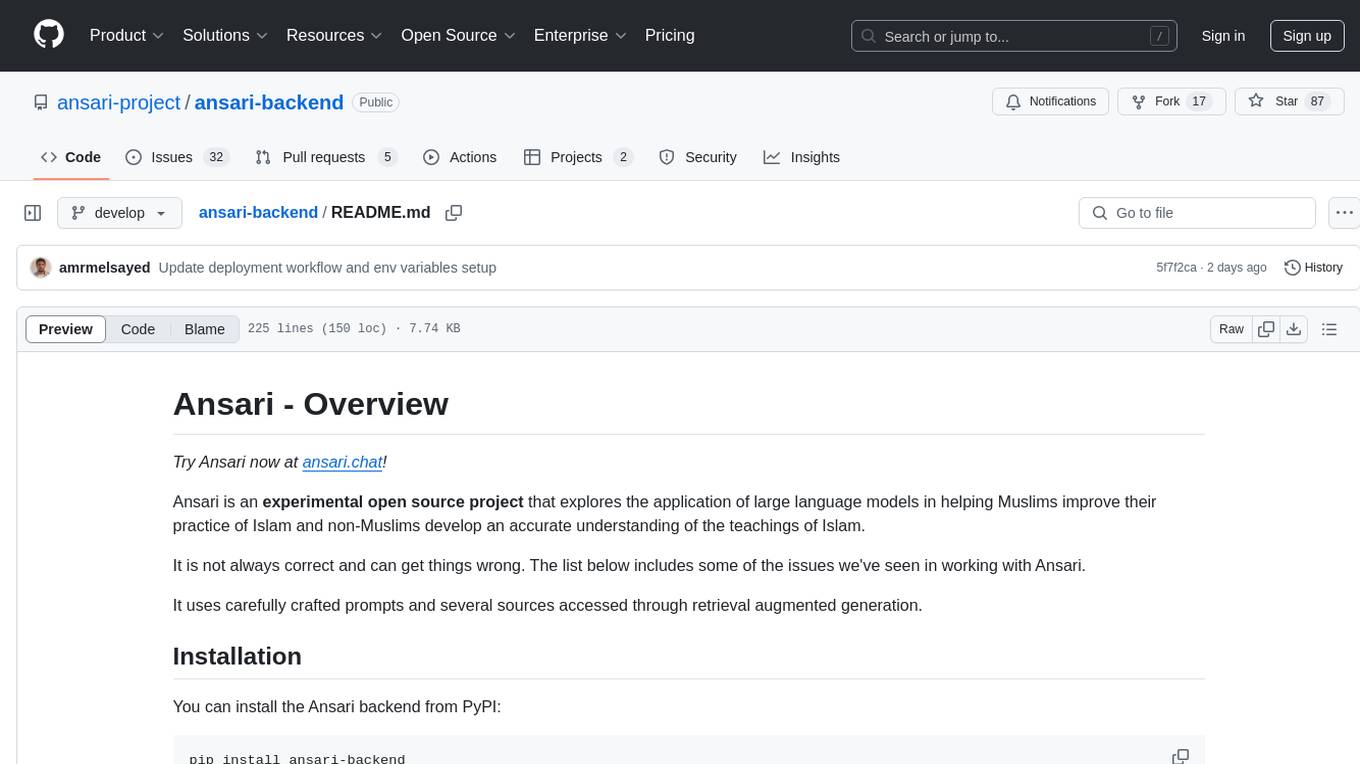

Try Ansari now at ansari.chat!

Ansari is an experimental open source project that explores the application of large language models in helping Muslims improve their practice of Islam and non-Muslims develop an accurate understanding of the teachings of Islam.

It is not always correct and can get things wrong. The list below includes some of the issues we've seen in working with Ansari.

It uses carefully crafted prompts and several sources accessed through retrieval augmented generation.
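As a rough illustration of what retrieval augmented generation means in general, the sketch below picks the passages that best match a question and places them in the prompt ahead of it. It is a toy under stated assumptions: the function names, the word-overlap scoring, the placeholder passages, and the prompt wording are all hypothetical and are not Ansari's actual sources, prompts, or code.

```python
# Toy illustration of retrieval augmented generation (RAG).
# Everything here is hypothetical: the passages, the scoring, and the prompt
# wording are placeholders, not Ansari's actual sources or prompts.


def score(query: str, passage: str) -> int:
    """Count how many distinct query words also appear in the passage."""
    query_words = set(query.lower().split())
    passage_words = set(passage.lower().split())
    return len(query_words & passage_words)


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Return the k passages that share the most words with the query."""
    ranked = sorted(passages, key=lambda p: score(query, p), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages ahead of the question in the prompt."""
    sources = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the numbered sources below and cite them.\n"
        f"Sources:\n{sources}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    corpus = [
        "placeholder passage about fasting rules",
        "placeholder passage about charity",
        "placeholder passage about prayer times",
    ]
    question = "what are the rules of fasting"
    print(build_prompt(question, retrieve(question, corpus, k=1)))
```

A real system would replace the word-overlap scoring with a proper search service and send the resulting prompt to a language model.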

You can install the Ansari backend from PyPI:

pip install ansari-backend

Once installed, you can use the command-line interface:

# Interactive mode

ansari

# With direct input

ansari -i "your question here"

# Using Claude agent

ansari -a AnsariClaude

TOC

- Ansari - Overview

- How can you help?

- What can Ansari do?

- What is logged with Ansari?

- Getting the Ansari Backend running on your local machine

- The Roadmap

- Acknowledgements

How can you help?

- Try Ansari out and let us know your experiences – mail us at [email protected].

- Help us implement the feature roadmap below.

What can Ansari do?

A complete list of what Ansari can do can be found here.

What is logged with Ansari?

- Only the conversations are logged. Nothing incidental about your identity is currently retained: no IP address, no accounts, etc. If you share personal details with Ansari as part of a conversation (e.g. "my name is Mohammed Ali"), that will be logged.

- If you shared something that shouldn't have been shared, drop us an e-mail at [email protected] and we can delete any logs.

Getting the Ansari Backend running on your local machine

Ansari will quite comfortably run on your local machine -- almost all heavy lifting is done using other services. The only complexities are that it needs a lot of environment variables to be set and that you need to run postgres.

You can also run it on Heroku, using the Procfile and runtime.txt files to deploy it directly.

Follow the instructions here to install postgres.

Run % python -V to check that you have Python 3 installed. If you get an error or the version starts with a 2, follow the instructions here.

% git clone [email protected]:waleedkadous/ansari-backend.git

% cd ansari-backend

% python -m venv .venv

% source .venv/bin/activate

% pip install -r requirements.txt

You can have a look at .env.example, and save it as .env.

You need at a minimum:

- OPENAI_API_KEY (for the language processing)

- KALEMAT_API_KEY (for Qur'an and Hadith search)

- DATABASE_URL=postgresql://user:password@localhost:5432/ansari_db. Replace 'user' and 'password' with whatever you used to set up your own database.

Optional environment variable(s):

- SENDGRID_API_KEY: Sendgrid is the system we use for sending password reset emails. If it's not set, the e-mails that would have been sent are printed instead.
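Before starting the service, it can help to confirm that the minimum variables listed above are actually present. The following is a minimal sketch, assuming the .env file consists of simple KEY=VALUE lines (optionally prefixed with `export`); the real layout of .env.example may differ, and the helper names `parse_env`, `missing_keys`, and `describe_database` are hypothetical, not part of the Ansari codebase.

```python
from urllib.parse import urlparse

# Variable names taken from the "minimum" list in this README.
REQUIRED = ("OPENAI_API_KEY", "KALEMAT_API_KEY", "DATABASE_URL")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments.

    Assumption: no multi-line values or variable interpolation.
    """
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("export "):
            line = line[len("export "):].strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip().strip("'\"")
    return env


def missing_keys(env: dict[str, str]) -> list[str]:
    """Return the required variables that are absent or empty."""
    return [name for name in REQUIRED if not env.get(name)]


def describe_database(url: str) -> dict[str, object]:
    """Split a DATABASE_URL into its parts, leaving credentials out."""
    parts = urlparse(url)
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,
        "port": parts.port,
        "database": parts.path.lstrip("/"),
    }
```

For example, `missing_keys(parse_env(open(".env").read()))` returns an empty list when all three variables are set.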

You can run the following command to create missing tables in your database.

% python setup_database.py

We use uvicorn to run the service.

% # Make sure to load environment variables:

% source .env

% uvicorn main_api:app --reload # --reload automatically reloads Python files as you edit.

This starts a service on port 8000. You can check it's up by visiting http://localhost:8000/docs, which gives you a nice interface to the API.
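The same check can be scripted. This is a minimal sketch using only the Python standard library; the URL is the one given above, while the function name `service_is_up` and its `opener` parameter (there only to make the function easy to test without a running server) are hypothetical.

```python
from urllib.request import urlopen

DOCS_URL = "http://localhost:8000/docs"  # address given in this README


def service_is_up(url: str = DOCS_URL, timeout: float = 2.0, opener=urlopen) -> bool:
    """Return True if the URL answers with HTTP 200, False if it cannot be reached.

    urllib raises URLError/HTTPError (both subclasses of OSError) for refused
    connections, timeouts, and HTTP error statuses, so OSError covers them all.
    """
    try:
        with opener(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        return False
```

Calling `service_is_up()` with no arguments while uvicorn is running should return True; if nothing is listening on port 8000 it returns False.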

If you just want to run Ansari on the command line such that it takes input from stdin and outputs on stdout (e.g. for debugging or to feed it text) you can just do:

% python src/ansari/app/main_stdio.py

The Ansari project includes several command-line tools in the src/ansari/cli directory:

The query_api.py tool allows you to interact with the Ansari API directly from the command line:

# Interactive mode with user login

% python src/ansari/cli/query_api.py

# Guest mode (no account needed)

% python src/ansari/cli/query_api.py --guest

# Single query mode

% python src/ansari/cli/query_api.py --guest --input "What does the Quran say about kindness?"

The use_tools.py tool provides direct access to Ansari's individual search tools. This is particularly useful for:

- Debugging search functionality

- Understanding how new tools work

- Testing tool responses without running the full Ansari system

- Developing and refining new search tools

Examples:

# Search the Quran

% python src/ansari/cli/use_tools.py "mercy" --tool quran

# Search hadith collections

% python src/ansari/cli/use_tools.py "fasting" --tool hadith

# Output format options for easier debugging

% python src/ansari/cli/use_tools.py "zakat" --tool mawsuah --format raw # Raw API response

% python src/ansari/cli/use_tools.py "zakat" --tool mawsuah --format list # Formatted results list

Side note: If you're contributing to the main project, you should style your code with ruff.
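When comparing several search tools on the same query, the use_tools.py invocations shown earlier can be assembled in a small script. This is a sketch only: it uses just the script path and the `--tool` and `--format` flags that appear in the examples, and the helper name `use_tools_command` is hypothetical.

```python
import sys

TOOL_SCRIPT = "src/ansari/cli/use_tools.py"  # path given in this README


def use_tools_command(query, tool, output_format=None):
    """Build the argument list for one use_tools.py invocation."""
    cmd = [sys.executable, TOOL_SCRIPT, query, "--tool", tool]
    if output_format is not None:
        cmd += ["--format", output_format]
    return cmd


if __name__ == "__main__":
    # Print the commands rather than running them, so this works anywhere.
    for tool in ("quran", "hadith", "mawsuah"):
        print(" ".join(use_tools_command("mercy", tool)))
```

To actually execute a command, pass the returned list to `subprocess.run(cmd, check=True)` from the repository root with the virtual environment active.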

The Roadmap

This roadmap is preliminary, but it gives you an idea of where Ansari is heading. Please contact us at [email protected] if you'd like to help with these.

- Add feedback buttons to the UI (thumbs up, thumbs down, explanation)

- ~~Add "share" button to the UI that captures a conversation and gives you a URL for it to share with friends.~~

- Add "share Ansari" web site.

- Improve logging of chats – move away from PromptLayer.

- ~~Add Hadith Search.~~

- Improve source citation.

- Add prayer times.

- Add login support

- Add personalization – remembers who you are, what scholars you turn to etc.

- Separate frontend from backend in preparation for mobile app.

- ~~Replace Gradio interface with Flutter.~~

- Ship Android and iOS versions of Ansari.

- Add notifications (e.g. around prayer times).

- Add more sources including:

  - Videos by prominent scholars (transcribed into English)

  - Islamic question and answer web sites.

- Turn into a platform so different Islamic organizations can customize Ansari to their needs.

Acknowledgements

- Amin Ahmad: general advice on LLMs, integration with vector databases.

- Hossam Hassan: vector database backend for Qur'an search.

- Saifeldeen Hadid: testing and identifying issues.

- Iman Sadreddin: Identifying output issues.

- Wael Hamza: Characterizing output.
