story-flicks

Generate high-definition story short videos with one click using AI large models.

Stars: 1337

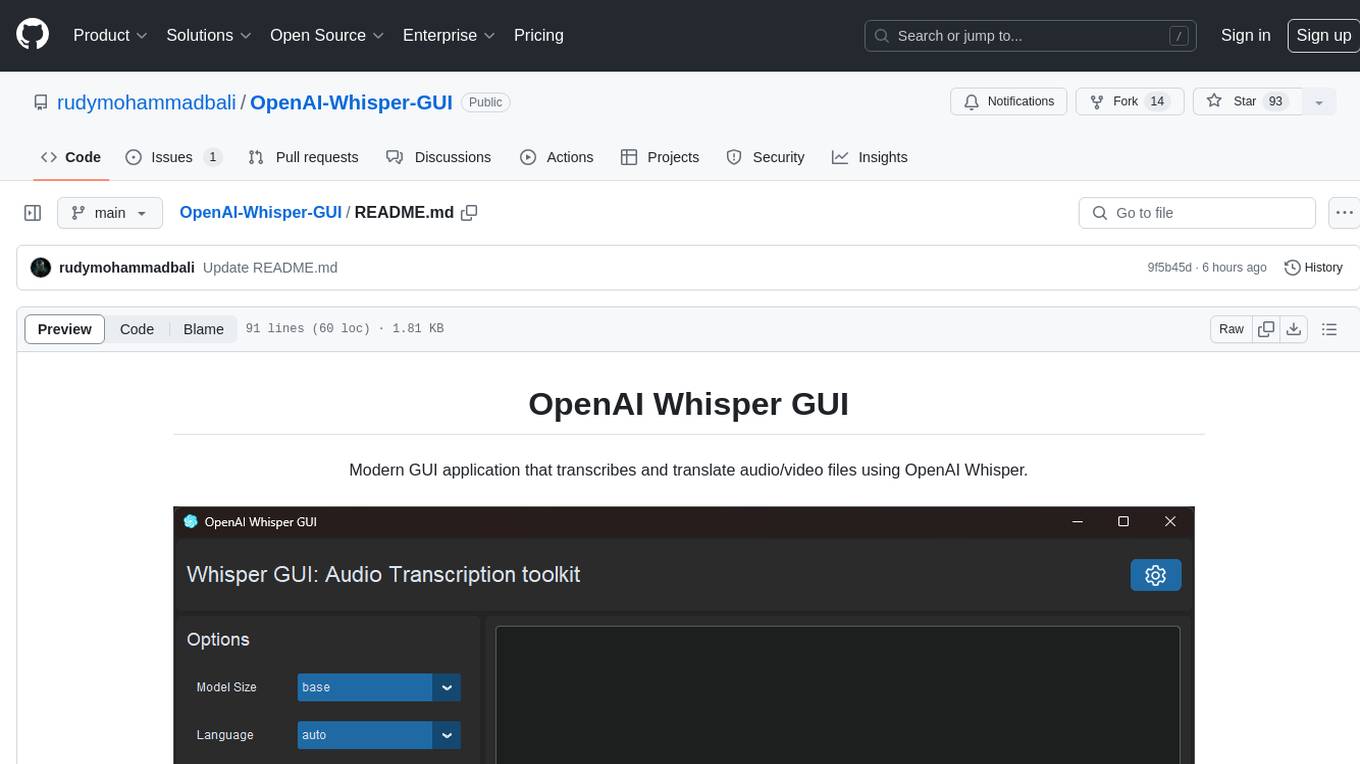

This project creates story videos from a user-supplied story theme, using AI models to generate the story content, images, audio, and subtitles. The backend is built with Python and FastAPI, while the frontend uses React, Ant Design, and Vite.

README:

This project allows users to input a story theme and generates a story video using a large language model. The video includes AI-generated images, story content, audio, and subtitles.

The backend is built with Python and the FastAPI framework, while the frontend is built with React, Ant Design, and Vite.

git clone https://github.com/alecm20/story-flicks.git

# First, switch to the project's backend directory.

cd backend

cp .env.example .env

text_provider = "openai" # Provider of the text generation model. Currently supports openai, aliyun, deepseek, ollama, and siliconflow.

# Aliyun documentation: https://www.aliyun.com/product/bailian

image_provider = "aliyun" # Provider of the image generation model. Currently supports openai, aliyun, and siliconflow.

openai_base_url="https://api.openai.com/v1" # The base URL for OpenAI

aliyun_base_url="https://dashscope.aliyuncs.com/compatible-mode/v1" # The base URL for Aliyun

deepseek_base_url="https://api.deepseek.com/v1" # The base URL for DeepSeek

ollama_base_url="http://localhost:11434/v1" # The base URL for Ollama

siliconflow_base_url="https://api.siliconflow.cn/v1" # The base URL for SiliconFlow

openai_api_key= # The API key for OpenAI, only one key needs to be provided

aliyun_api_key= # The API key for Aliyun Bailian, only one key needs to be provided

deepseek_api_key= # The API key for DeepSeek, currently only text generation is supported

ollama_api_key= # If you use Ollama, set this API key to "ollama". Ollama currently supports text generation only and cannot be used with models that have too few parameters. qwen2.5:14b or a larger model is recommended.

siliconflow_api_key= # The API key for SiliconFlow. SiliconFlow's text models currently must be large models compatible with the OpenAI format, such as Qwen/Qwen2.5-7B-Instruct. The image model has only been tested with black-forest-labs/FLUX.1-dev.

text_llm_model=gpt-4o # If text_provider is set to openai, only OpenAI models can be used, such as gpt-4o. If aliyun is selected, Aliyun models like qwen-plus or qwen-max can be used. With ollama, models that have too few parameters cannot be used; qwen2.5:14b or a larger model is recommended.

image_llm_model=flux-dev # If image_provider is set to openai, only OpenAI models can be used, such as dall-e-3. If aliyun is selected, Aliyun models like flux-dev are recommended, which are currently available for free trial. More details: https://help.aliyun.com/zh/model-studio/getting-started/models#a1a9f05a675m4.
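The settings above pair each provider name with a `<provider>_base_url` and a `<provider>_api_key`. As a rough illustration of how such a lookup could work, here is a minimal Python sketch. The helper `resolve_provider` and the two provider sets are hypothetical and are not taken from the story-flicks code; only the key names and provider lists come from the configuration shown above.

```python
import os

# Providers listed in the configuration comments above.
TEXT_PROVIDERS = {"openai", "aliyun", "deepseek", "ollama", "siliconflow"}
IMAGE_PROVIDERS = {"openai", "aliyun", "siliconflow"}


def resolve_provider(provider, allowed, env=None):
    """Return (base_url, api_key) for a provider, or raise ValueError.

    Hypothetical helper for illustration only; not part of story-flicks.
    """
    env = os.environ if env is None else env
    if provider not in allowed:
        raise ValueError(f"unsupported provider: {provider!r}")
    # Key names follow the .env convention: <provider>_base_url / <provider>_api_key.
    base_url = env.get(f"{provider}_base_url", "").strip()
    api_key = env.get(f"{provider}_api_key", "").strip()
    if not base_url:
        raise ValueError(f"{provider}_base_url is not set")
    if not api_key:
        raise ValueError(f"{provider}_api_key is not set")
    return base_url, api_key


if __name__ == "__main__":
    # Sample values copied from the configuration above.
    sample = {
        "text_provider": "ollama",
        "ollama_base_url": "http://localhost:11434/v1",
        "ollama_api_key": "ollama",
    }
    print(resolve_provider(sample["text_provider"], TEXT_PROVIDERS, sample))
```

Passing a plain dictionary as `env` keeps the sketch easy to try without touching real environment variables. It also shows why selecting `deepseek` or `ollama` as the image provider would be rejected: the configuration lists them for text generation only.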

Start the backend project

# From the project root directory, switch to the backend directory

cd backend

conda create -n story-flicks python=3.10 # Using conda, other virtual environments can also be used

conda activate story-flicks

pip install -r requirements.txt

uvicorn main:app --reload

If the project starts successfully, the following output will appear:

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

INFO: Started reloader process [78259] using StatReload

INFO: Started server process [78261]

INFO: Waiting for application startup.

INFO: Application startup complete.
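If you prefer to confirm the startup from a script instead of reading the log, a plain TCP connection check is usually enough. The sketch below assumes the default address from the output above (`127.0.0.1:8000`). The function name `backend_is_listening` is made up for this example, and it does not call any story-flicks API endpoint.

```python
import socket


def backend_is_listening(host="127.0.0.1", port=8000, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds, else False."""
    try:
        # create_connection raises OSError (e.g. connection refused, timeout)
        # when nothing accepts connections on the given address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("backend reachable:", backend_is_listening())
```

This only shows that something is accepting connections on the port; it does not verify that the application itself finished starting.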

Start the frontend project

# From the project root directory, switch to the frontend directory

cd frontend

npm install

npm run dev

# After successful startup, open: http://localhost:5173/

When successfully started, the following output will appear:

VITE v6.0.7 ready in 199 ms

➜ Local: http://localhost:5173/

➜ Network: use --host to expose

➜ press h + enter to show help

In the project root directory, run:

docker-compose up --build

Once successful, open the frontend project at: http://localhost:5173/

In the interface, select the text generation model provider, image generation model provider, text model, image model, video language, voice, story theme, and number of story segments. Then click "Generate" to create the video. One image is generated per segment, so the number of images matches the number of segments, and more segments mean a longer generation time. Once generation succeeds, the video is displayed on the frontend page.
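The relationship between form fields and generated images can be sketched as a small data structure. All names here (`StoryRequest` and its fields) are hypothetical and simply mirror the form fields described above; the project's real request format may differ.

```python
from dataclasses import dataclass


@dataclass
class StoryRequest:
    # Hypothetical field names mirroring the form fields in the interface.
    text_provider: str
    image_provider: str
    text_model: str
    image_model: str
    language: str
    voice: str
    theme: str
    segments: int

    def validate(self):
        """Reject obviously unusable input before starting generation."""
        if not self.theme.strip():
            raise ValueError("story theme must not be empty")
        if self.segments < 1:
            raise ValueError("segments must be at least 1")

    def expected_image_count(self):
        # One image is generated per story segment.
        return self.segments


if __name__ == "__main__":
    request = StoryRequest(
        text_provider="openai",
        image_provider="aliyun",
        text_model="gpt-4o",
        image_model="flux-dev",
        language="en",            # placeholder value
        voice="example-voice",    # placeholder value
        theme="A fox who learns to share",
        segments=3,
    )
    request.validate()
    print(request.expected_image_count())
```

Since each segment adds one image generation call, keeping the segment count low is the simplest way to shorten generation time while testing a setup.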

Alternative AI tools for story-flicks

Similar Open Source Tools

album-ai

Album AI is an experimental project that uses GPT-4o-mini to automatically identify metadata from image files in the album. It leverages RAG technology to enable conversations with the album, serving as a photo album or image knowledge base to assist in content generation. The tool provides APIs for search and chat functionalities, supports one-click deployment to platforms like Render, and allows for integration and modification under a permissive open-source license.

comfyui_LLM_party

COMFYUI LLM PARTY is a node library designed for LLM workflow development in ComfyUI, an extremely minimalist UI interface primarily used for AI drawing and SD model-based workflows. The project aims to provide a complete set of nodes for constructing LLM workflows, enabling users to easily integrate them into existing SD workflows. It features various functionalities such as API integration, local large model integration, RAG support, code interpreters, online queries, conditional statements, looping links for large models, persona mask attachment, and tool invocations for weather lookup, time lookup, knowledge base, code execution, web search, and single-page search. Users can rapidly develop web applications using API + Streamlit and utilize LLM as a tool node. Additionally, the project includes an omnipotent interpreter node that allows the large model to perform any task, with recommendations to use the 'show_text' node for display output.

vscode-reborn-ai

VSCode Reborn AI is a tool that allows users to write, refactor, and improve code in Visual Studio Code using artificial intelligence. Users can work offline with AI using a local LLM. The tool provides enhanced support for OpenRouter.ai API and ollama. It also offers compatibility with various local LLMs and alternative APIs. Additionally, it includes features such as internationalization, development setup instructions, testing in VS Code, packaging for VS Code, tech stack details, and licensing information.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

langchainjs-quickstart-demo

Discover the journey of building a generative AI application using LangChain.js and Azure. This demo explores the development process from idea to production, using a RAG-based approach for a Q&A system based on YouTube video transcripts. The application lets users ask text-based questions about a YouTube video and uses the transcript of the video to generate responses. The code comes in two versions: a local prototype using FAISS and Ollama with the LLaMa3 model for completion and all-minilm-l6-v2 for embeddings, and an Azure cloud version using Azure AI Search and the GPT-4 Turbo model for completion and text-embedding-3-large for embeddings. Either version can be run as an API using the Azure Functions runtime.

Ollama-SwiftUI

Ollama-SwiftUI is a user-friendly interface for Ollama.ai created in Swift. It allows seamless chatting with local Large Language Models on Mac. Users can change models mid-conversation, restart conversations, send system prompts, and use multimodal models with image + text. The app supports managing models, including downloading, deleting, and duplicating them. It offers light and dark mode, multiple conversation tabs, and a localized interface in English and Arabic.

vector_companion

Vector Companion is an AI tool designed to act as a virtual companion on your computer. It consists of two personalities, Axiom and Axis, who can engage in conversations based on what is happening on the screen. The tool can transcribe audio output and user microphone input, take screenshots, and read text via OCR to create lifelike interactions. It requires specific prerequisites to run on Windows and uses VB Cable to capture audio. Users can interact with Axiom and Axis by running the main script after installation and configuration.

OrionChat

Orion is a web-based chat interface that simplifies interactions with multiple AI model providers. It provides a unified platform for chatting and exploring various large language models (LLMs) such as Ollama, OpenAI (GPT model), Cohere (Command-r models), Google (Gemini models), Anthropic (Claude models), Groq Inc., Cerebras, and SambaNova. Users can easily navigate and assess different AI models through an intuitive, user-friendly interface. Orion offers features like browser-based access, code execution with Google Gemini, text-to-speech (TTS), speech-to-text (STT), seamless integration with multiple AI models, customizable system prompts, language translation tasks, document uploads for analysis, and more. API keys are stored locally, and requests are sent directly to official providers' APIs without external proxies.

PrAIvateSearch

PrAIvateSearch is a NextJS web application that aims to implement similar features to SearchGPT in an open-source, local, and private way. It allows users to search the web using their own AI model. The application provides a user-friendly interface for interacting with the AI model and accessing search results. PrAIvateSearch is designed to be easy to install and use, with detailed instructions provided in the readme file. The project is in beta stage and welcomes contributions from the community to improve and enhance its functionality. Users are encouraged to support the project through funding to help it grow and continue to be maintained as an open-source tool under the MIT license.

sdkit

sdkit (stable diffusion kit) is an easy-to-use library for utilizing Stable Diffusion in AI Art projects. It includes features like ControlNets, LoRAs, Textual Inversion Embeddings, GFPGAN, CodeFormer for face restoration, RealESRGAN for upscaling, k-samplers, support for custom VAEs, NSFW filter, model-downloader, parallel GPU support, and more. It offers a model database, auto-scanning for malicious models, and various optimizations. The API consists of modules for loading models, generating images, filters, model merging, and utilities, all managed through the sdkit.Context object.

Kuebiko

Kuebiko is a Twitch Chat Bot that reads twitch chat and generates text-to-speech responses using Google Cloud API and OpenAI's GPT-3 text completion model. It allows users to set up their own VTuber AI similar to 'Neuro-Sama'. The project is built with Python and requires setting up various API keys and configurations to enable the bot functionality. Users can customize the voice of their VTuber and route audio using VBAudio Cable. Kuebiko provides a unique way to interact with viewers through chat responses and captions in OBS.

chatdev

ChatDev IDE is a tool for building your AI agent. Whether it's NPCs in games or powerful agent tools, you can design what you want on this platform. It accelerates prompt engineering through **JavaScript Support** that allows implementing complex prompting techniques.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

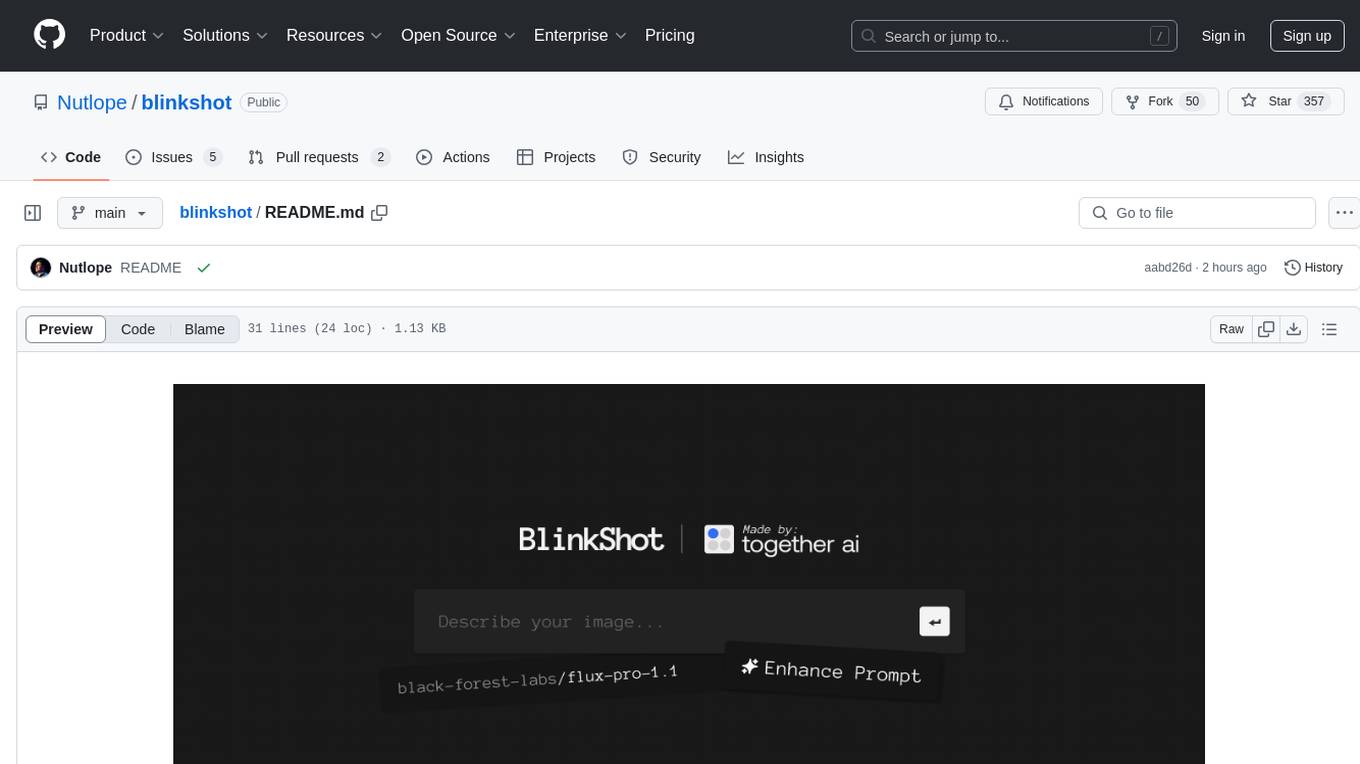

blinkshot

BlinkShot is an open source real-time AI image generator powered by Flux through Together.ai. It utilizes Flux Schnell from BFL for the image model, Together AI for inference, Next.js app router with Tailwind for the frontend, Helicone for observability, and Plausible for website analytics. Users can clone the repository, add their Together AI API key, and run the app locally to generate AI images. Future tasks include adding a call-to-action to fork the code on GitHub, implementing a download button on hover, allowing users to adjust resolutions and steps, adding an app description to the footer, and introducing themes.

vidai

vidai is a CLI tool for RunwayML that generates videos using AI. It supports Gen3 and Gen3 Turbo models, allowing users to create videos directly from the command line using text or image prompts. Users can also extend videos, edit videos, and explore unlimited generations. The tool requires a RunwayML account and ffmpeg for extended videos.

For similar tasks

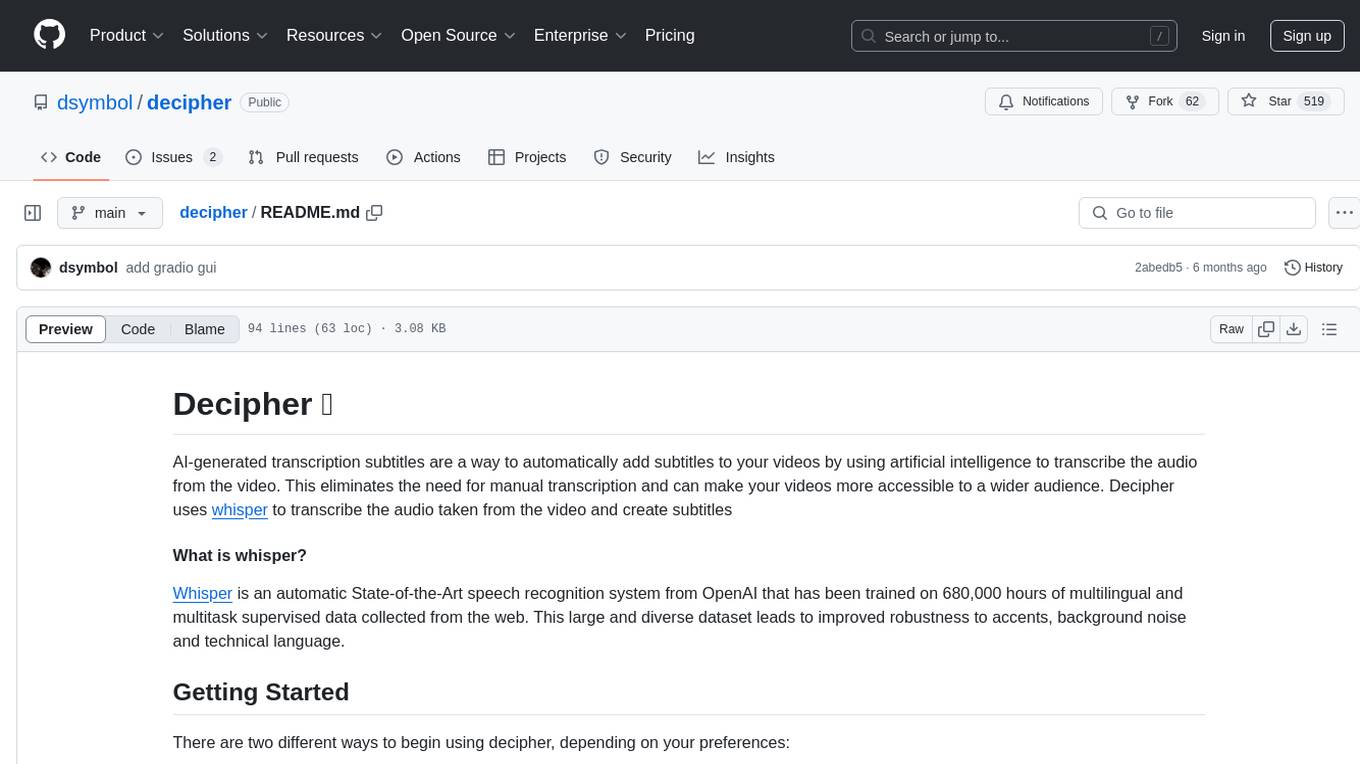

OpenAI-Whisper-GUI

OpenAI Whisper GUI is a modern GUI application designed to transcribe and translate audio/video files using OpenAI Whisper. It features a modern UI with light/dark mode, the ability to export transcribed text, add subtitles to videos, and more. The latest version includes updates to widgets, layouts, and themes, as well as new features such as a config handler, GPU info retrieval, a new app logo, settings interface, and bug fixes like code refactoring and fixing Cuda not found warning message. Users can easily install the tool by cloning the GitHub repository and running setup.py and main.py scripts. For more information, users can visit the OpenAI Whisper GitHub repository.

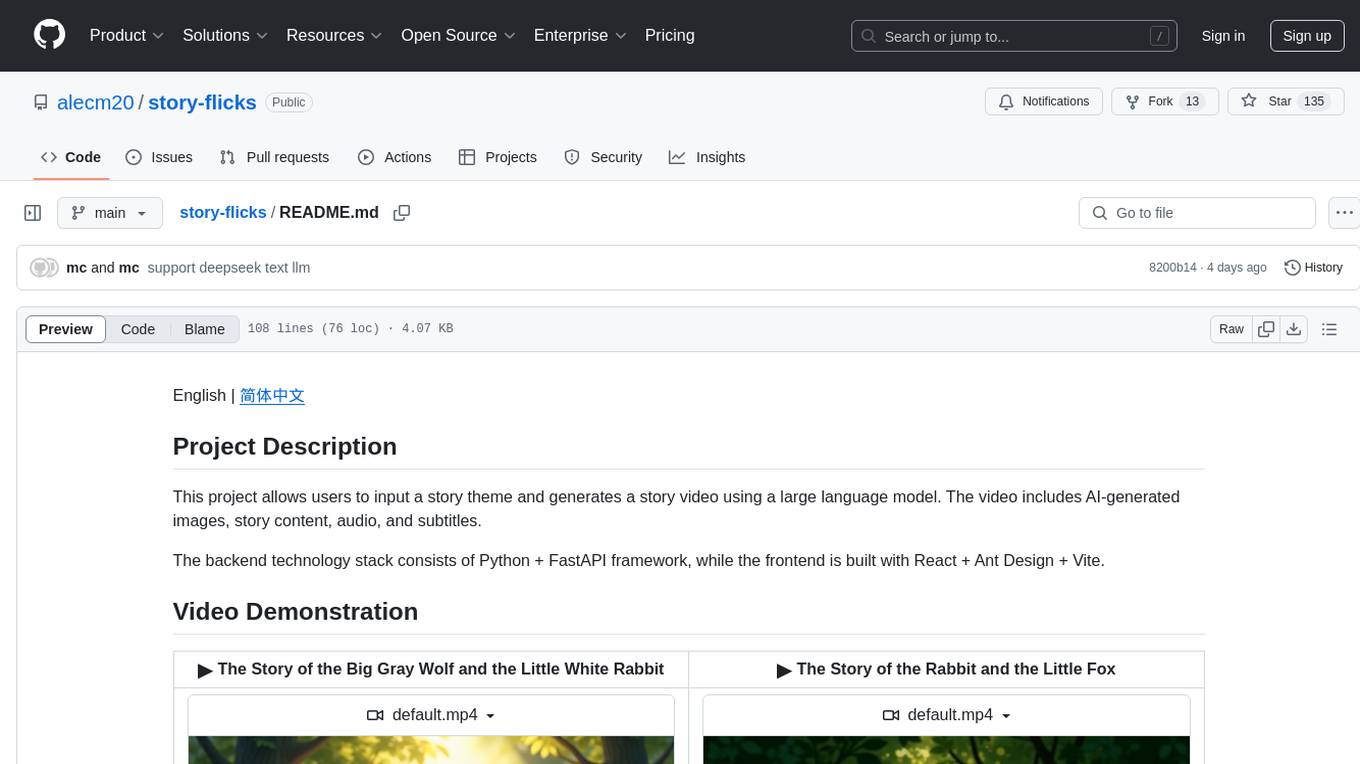

decipher

Decipher is a tool that utilizes AI-generated transcription subtitles to automatically add subtitles to videos. It eliminates the need for manual transcription, making videos more accessible. The tool uses OpenAI's Whisper, a State-of-the-Art speech recognition system trained on a large dataset for improved robustness to accents, background noise, and technical language.

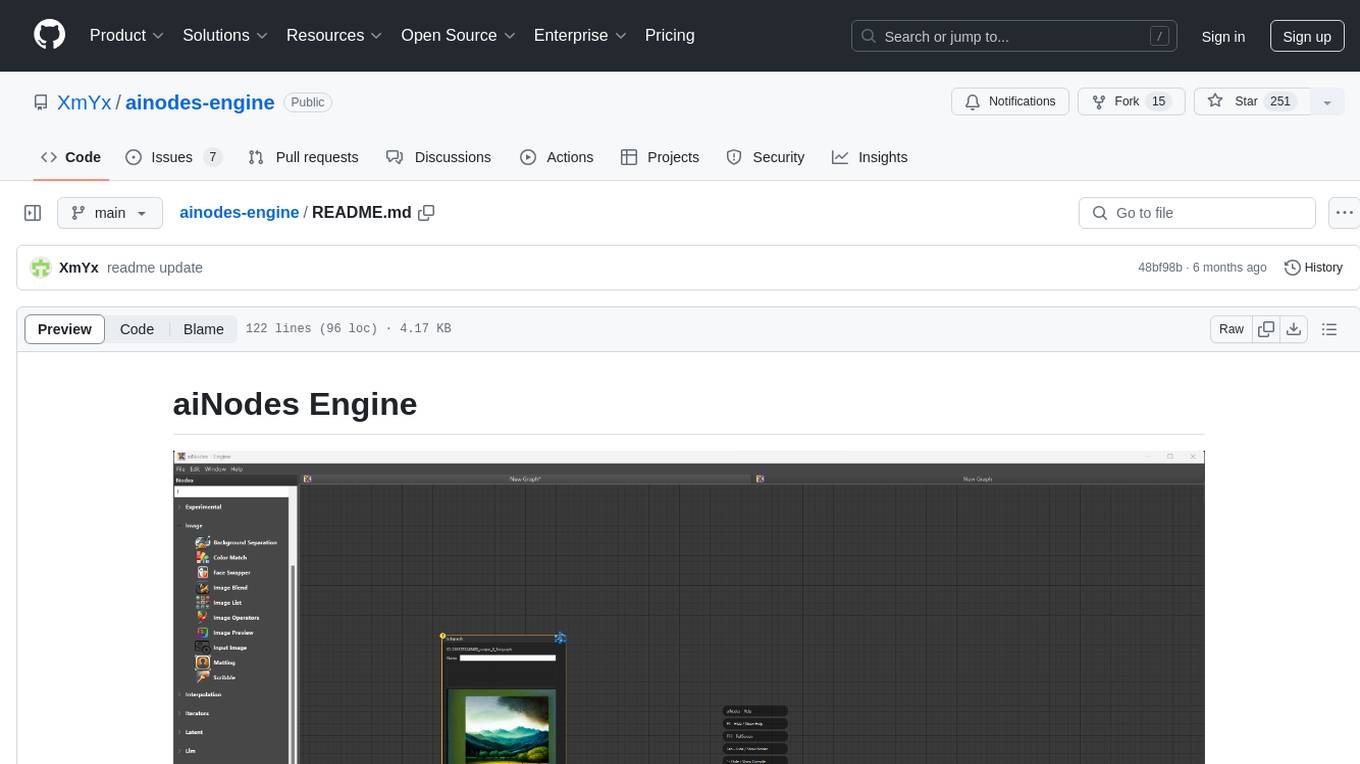

ainodes-engine

aiNodes Engine is a Python-based AI image/motion picture generator node engine with a live execution chain, python code editor node, and plug-in support. It offers full modularity, colored background drop, and easy node creation with IDE annotations. The project is officially supported by Deforum and incorporates various open-source projects like ComfyUI. It is designed to be flexible, with an Unreal-like execution chain, supporting features such as Deforum, Stable Diffusion, Upscalers, Kandinsky, ControlNet, and more. The engine allows for background separation, human matting/masking, compositing, drag and drop, subgraphs, and graph saving/loading from image metadata. It aims to provide a unique, controllable manner of working with a strict user-declared execution chain.

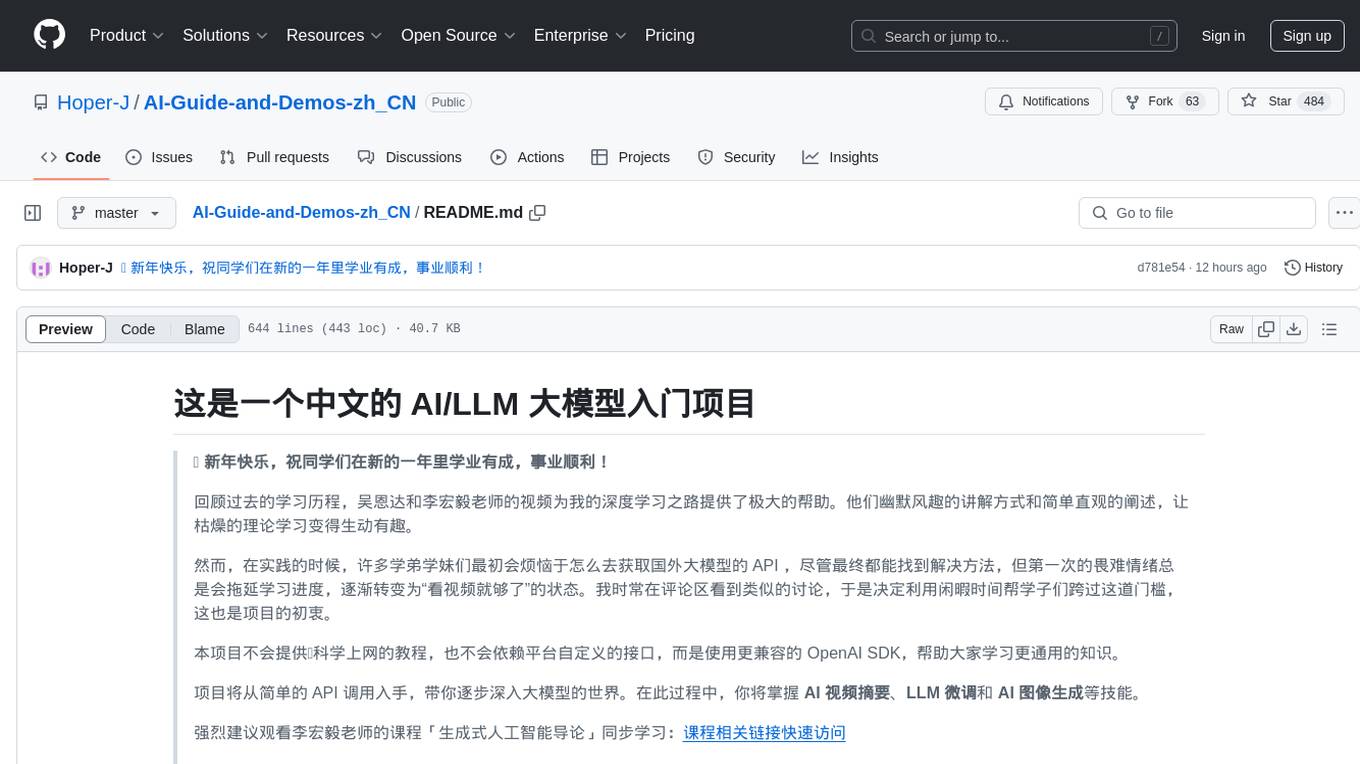

AI-Guide-and-Demos-zh_CN

This is a Chinese AI/LLM introductory project that aims to help students overcome the initial difficulties of accessing foreign large models' APIs. The project uses the OpenAI SDK to provide a more compatible learning experience. It covers topics such as AI video summarization, LLM fine-tuning, and AI image generation. The project also offers a CodePlayground for easy setup and one-line script execution to experience the charm of AI. It includes guides on API usage, LLM configuration, building AI applications with Gradio, customizing prompts for better model performance, understanding LoRA, and more.

swift-chat

SwiftChat is a fast and responsive AI chat application developed with React Native and powered by Amazon Bedrock. It offers real-time streaming conversations, AI image generation, multimodal support, conversation history management, and cross-platform compatibility across Android, iOS, and macOS. The app supports multiple AI models like Amazon Bedrock, Ollama, DeepSeek, and OpenAI, and features a customizable system prompt assistant. With a minimalist design philosophy and robust privacy protection, SwiftChat delivers a seamless chat experience with various features like rich Markdown support, comprehensive multimodal analysis, creative image suite, and quick access tools. The app prioritizes speed in launch, request, render, and storage, ensuring a fast and efficient user experience. SwiftChat also emphasizes app privacy and security by encrypting API key storage, minimal permission requirements, local-only data storage, and a privacy-first approach.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.