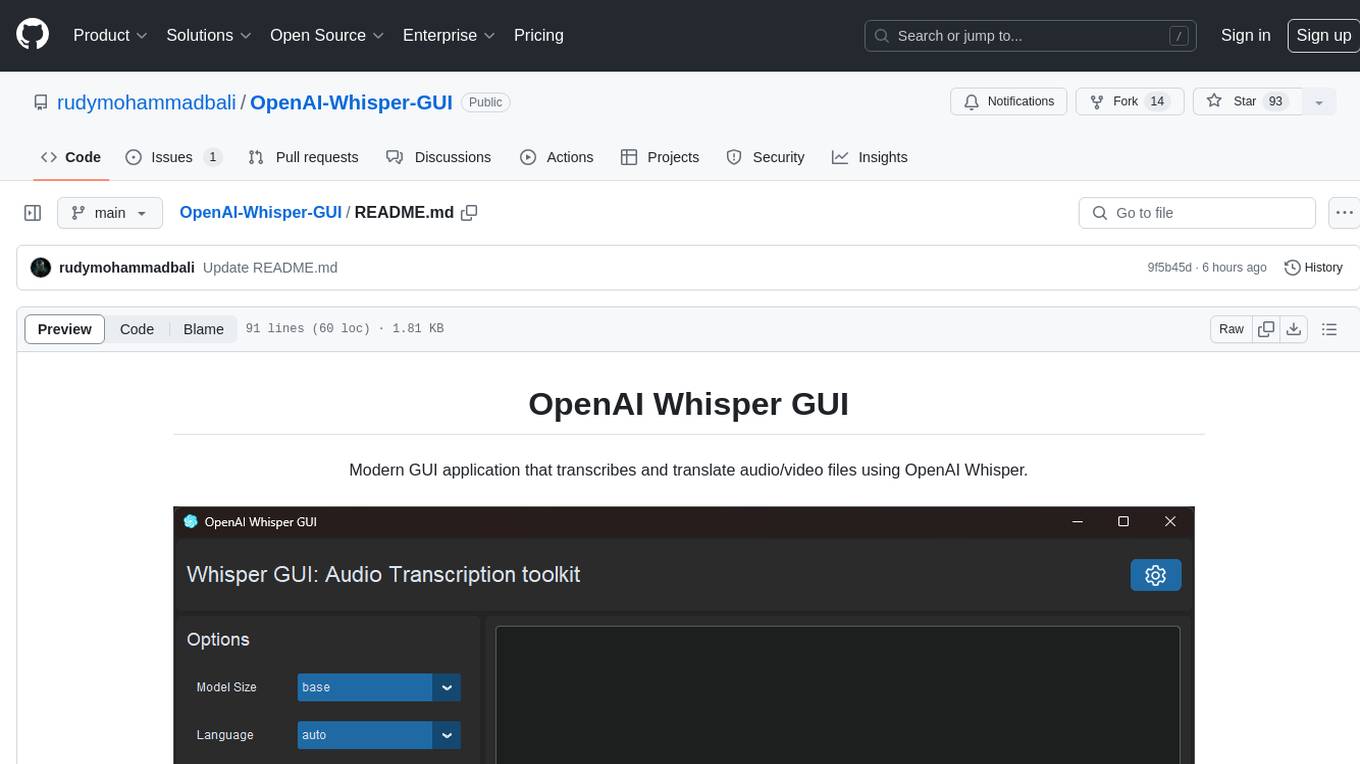

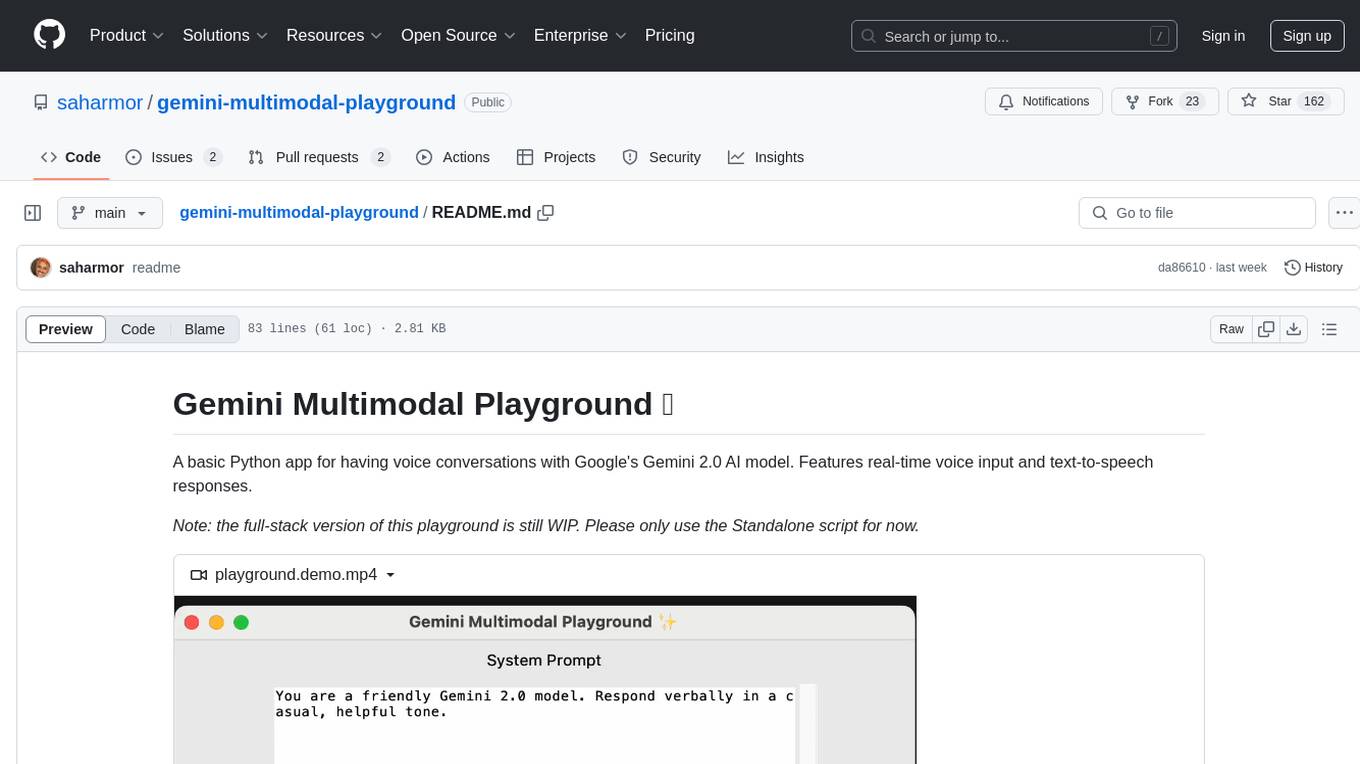

OpenAI-Whisper-GUI

Modern GUI application that transcribes and translate audio files using OpenAI Whisper.

Stars: 93

OpenAI Whisper GUI is a modern GUI application designed to transcribe and translate audio/video files using OpenAI Whisper. It features a modern UI with light/dark mode, the ability to export transcribed text, add subtitles to videos, and more. The latest version includes updates to widgets, layouts, and themes, as well as new features such as a config handler, GPU info retrieval, a new app logo, settings interface, and bug fixes like code refactoring and fixing Cuda not found warning message. Users can easily install the tool by cloning the GitHub repository and running setup.py and main.py scripts. For more information, users can visit the OpenAI Whisper GitHub repository.

README:

Modern GUI application that transcribes and translate audio/video files using OpenAI Whisper.

- Main Update

- Update to widgets, layouts and theme

- Removed Show Timestamps option, which is not necessary

- New Features

- Config handler: Save, load and reset config

- Get GPU info and automatically set available models

- New app logo

- Settings Interface

- Remember last used options

- Bug Fixes

- Code refactoring and optimization

- Fix Cuda not found warning message

- Modern UI

- Light/dark mode

- Export transcribed text

- Add subtitle to video

- and more...

Python version 3.9 or newer

Torch with Cuda (Works on CPU but slower)

ffmpeg

git clone https://github.com/rudymohammadbali/OpenAI-Whisper-GUI.git

cd OpenAI-Whisper-GUI

python setup.py

python main.py

For more information please visit OpenAI Whisper GitHub: https://github.com/openai/whisper

If you'd like to support my ongoing efforts in sharing fantastic open-source projects, you can contribute by making a donation via PayPal.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for OpenAI-Whisper-GUI

Similar Open Source Tools

OpenAI-Whisper-GUI

OpenAI Whisper GUI is a modern GUI application designed to transcribe and translate audio/video files using OpenAI Whisper. It features a modern UI with light/dark mode, the ability to export transcribed text, add subtitles to videos, and more. The latest version includes updates to widgets, layouts, and themes, as well as new features such as a config handler, GPU info retrieval, a new app logo, settings interface, and bug fixes like code refactoring and fixing Cuda not found warning message. Users can easily install the tool by cloning the GitHub repository and running setup.py and main.py scripts. For more information, users can visit the OpenAI Whisper GitHub repository.

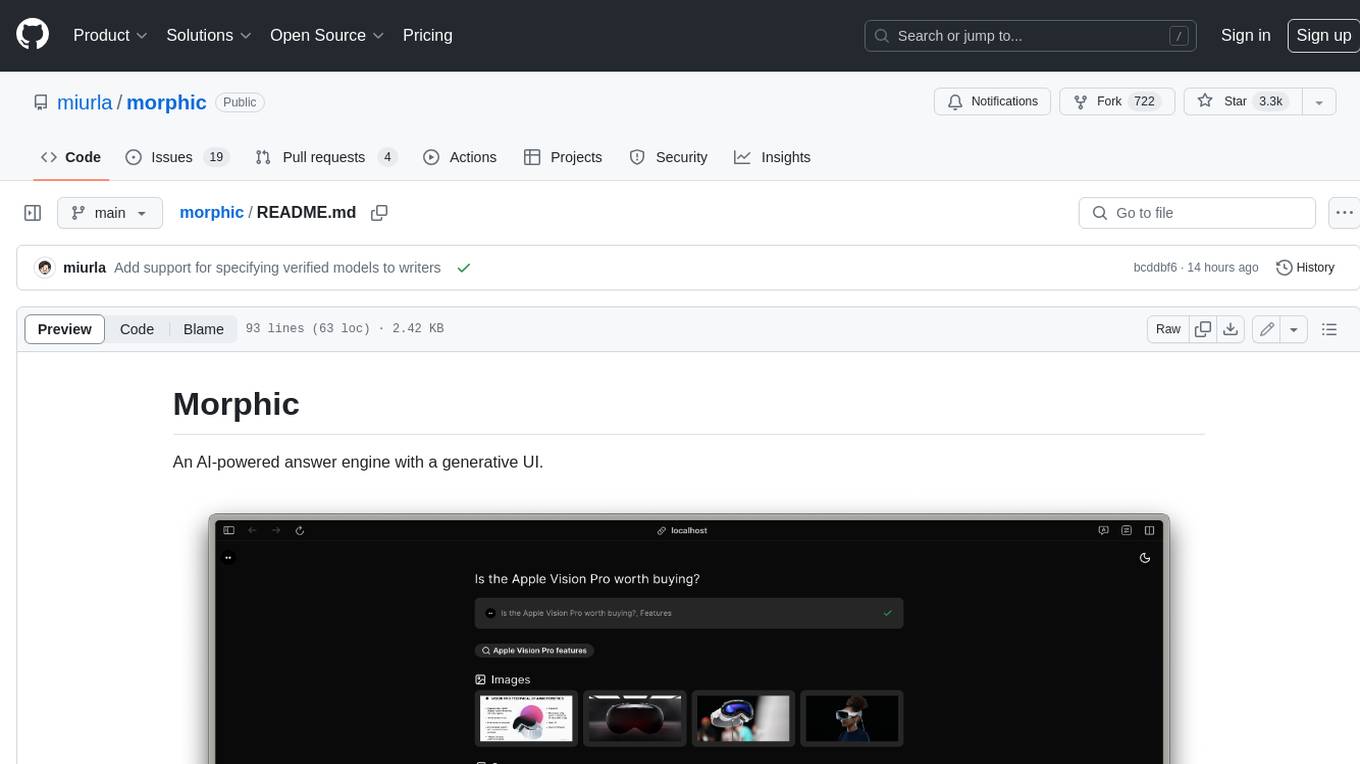

morphic

Morphic is an AI-powered answer engine with a generative UI. It utilizes a stack of Next.js, Vercel AI SDK, OpenAI, Tavily AI, shadcn/ui, Radix UI, and Tailwind CSS. To get started, fork and clone the repo, install dependencies, fill out secrets in the .env.local file, and run the app locally using 'bun dev'. You can also deploy your own live version of Morphic with Vercel. Verified models that can be specified to writers include Groq, LLaMA3 8b, and LLaMA3 70b.

qwen-code

Qwen Code is an open-source AI agent optimized for Qwen3-Coder, designed to help users understand large codebases, automate tedious work, and expedite the shipping process. It offers an agentic workflow with rich built-in tools, a terminal-first approach with optional IDE integration, and supports both OpenAI-compatible API and Qwen OAuth authentication methods. Users can interact with Qwen Code in interactive mode, headless mode, IDE integration, and through a TypeScript SDK. The tool can be configured via settings.json, environment variables, and CLI flags, and offers benchmark results for performance evaluation. Qwen Code is part of an ecosystem that includes AionUi and Gemini CLI Desktop for graphical interfaces, and troubleshooting guides are available for issue resolution.

ray-so

ray.so is a collection of tools built by Raycast, including code images creation, icon making for Raycast extensions, AI prompt exploration, AI preset exploration, Raycast snippet browsing, and Raycast theme browsing. Users can contribute new presets, prompts, snippets, themes, and bug fixes to enhance the tool's functionality.

OmniSteward

OmniSteward is an AI-powered steward system based on large language models that can interact with users through voice or text to help control smart home devices and computer programs. It supports multi-turn dialogue, tool calling for complex tasks, multiple LLM models, voice recognition, smart home control, computer program management, online information retrieval, command line operations, and file management. The system is highly extensible, allowing users to customize and share their own tools.

AudioNotes

AudioNotes is a system built on FunASR and Qwen2 that can quickly extract content from audio and video, and organize it using large models into structured markdown notes for easy reading. Users can interact with the audio and video content, install Ollama, pull models, and deploy services using Docker or locally with a PostgreSQL database. The system provides a seamless way to convert audio and video into structured notes for efficient consumption.

CrewAI-Studio

CrewAI Studio is an application with a user-friendly interface for interacting with CrewAI, offering support for multiple platforms and various backend providers. It allows users to run crews in the background, export single-page apps, and use custom tools for APIs and file writing. The roadmap includes features like better import/export, human input, chat functionality, automatic crew creation, and multiuser environment support.

moling

MoLing is a computer-use and browser-use MCP Server that implements system interaction through operating system APIs, enabling file system operations such as reading, writing, merging, statistics, and aggregation, as well as the ability to execute system commands. It is a dependency-free local office automation assistant. Requiring no installation of any dependencies, MoLing can be run directly and is compatible with multiple operating systems, including Windows, Linux, and macOS. This eliminates the hassle of dealing with environment conflicts involving Node.js, Python, Docker, and other development environments. Command-line operations are dangerous and should be used with caution. MoLing supports features like file system operations, command-line terminal execution, browser control powered by 'github.com/chromedp/chromedp', and future plans for personal PC data organization, document writing assistance, schedule planning, and life assistant features. MoLing has been tested on macOS but may have issues on other operating systems.

azooKey-Desktop

azooKey-Desktop is an open-source Japanese input system for macOS that incorporates the high-precision neural kana-kanji conversion engine 'Zenzai'. It offers features such as neural kana-kanji conversion, profile prompt, history learning, user dictionary, integration with personal optimization system 'Tuner', 'nice feeling conversion' with LLM, live conversion, and native support for AZIK. The tool is currently in alpha version, and its operation is not guaranteed. Users can install it via `.pkg` file or Homebrew. Development contributions are welcome, and the project has received support from the Information-technology Promotion Agency, Japan (IPA) for the 2024 fiscal year's untapped IT human resources discovery and nurturing project.

qwery-core

Qwery is a platform for querying and visualizing data using natural language without technical knowledge. It seamlessly integrates with various datasources, generates optimized queries, and delivers outcomes like result sets, dashboards, and APIs. Features include natural language querying, multi-database support, AI-powered agents, visual data apps, desktop & cloud options, template library, and extensibility through plugins. The project is under active development and not yet suitable for production use.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

growi

GROWI is a collaborative wiki platform that allows users to create hierarchical pages with markdown, edit simultaneously with multiple people, and support authentication with LDAP/Active Directory, OAuth, and SAML. It also integrates with Slack/Mattermost, IFTTT, and allows for plugin customization. GROWI is Docker and Docker Compose ready, supports multiple sites, HTTPS with Let's Encrypt proxy integration, and offers migration guides for on-premise installations. The tool is built with Node.js, npm, pnpm, Turborepo, and requires MongoDB, with optional dependencies on Redis and ElasticSearch for full-text search functionality.

falkordb-browser

FalkorDB Browser is a user-friendly web application for browsing and managing databases. It provides an intuitive interface for users to interact with their databases, allowing them to view, edit, and query data easily. With FalkorDB Browser, users can perform various database operations without the need for complex commands or scripts, making database management more accessible and efficient.

genassist

GenAssist is an AI-powered platform for managing and leveraging various AI workflows, focusing on conversation management, analytics, and agent-based interactions. It provides user management, AI agents configuration, knowledge base management, analytics, conversation management, and audit logging features. The platform is built with React, TypeScript, Vite, Tailwind CSS, FastAPI, SQLAlchemy ORM, and PostgreSQL database. GenAssist offers integration options for React, JavaScript Widget, and iOS, along with UI test automation and backend testing capabilities.

openchamber

OpenChamber is a web and desktop interface for the OpenCode AI coding agent, designed to work alongside the OpenCode TUI. The project was built entirely with AI coding agents under supervision, serving as a proof of concept that AI agents can create usable software. It offers features like integrated terminal, Git operations with AI commit message generation, smart tool visualization, permission management, multi-agent runs, task tracker UI, model selection UX, UI scaling controls, session auto-cleanup, and memory optimizations. OpenChamber provides cross-device continuity, remote access, and a visual alternative for developers preferring GUI workflows.

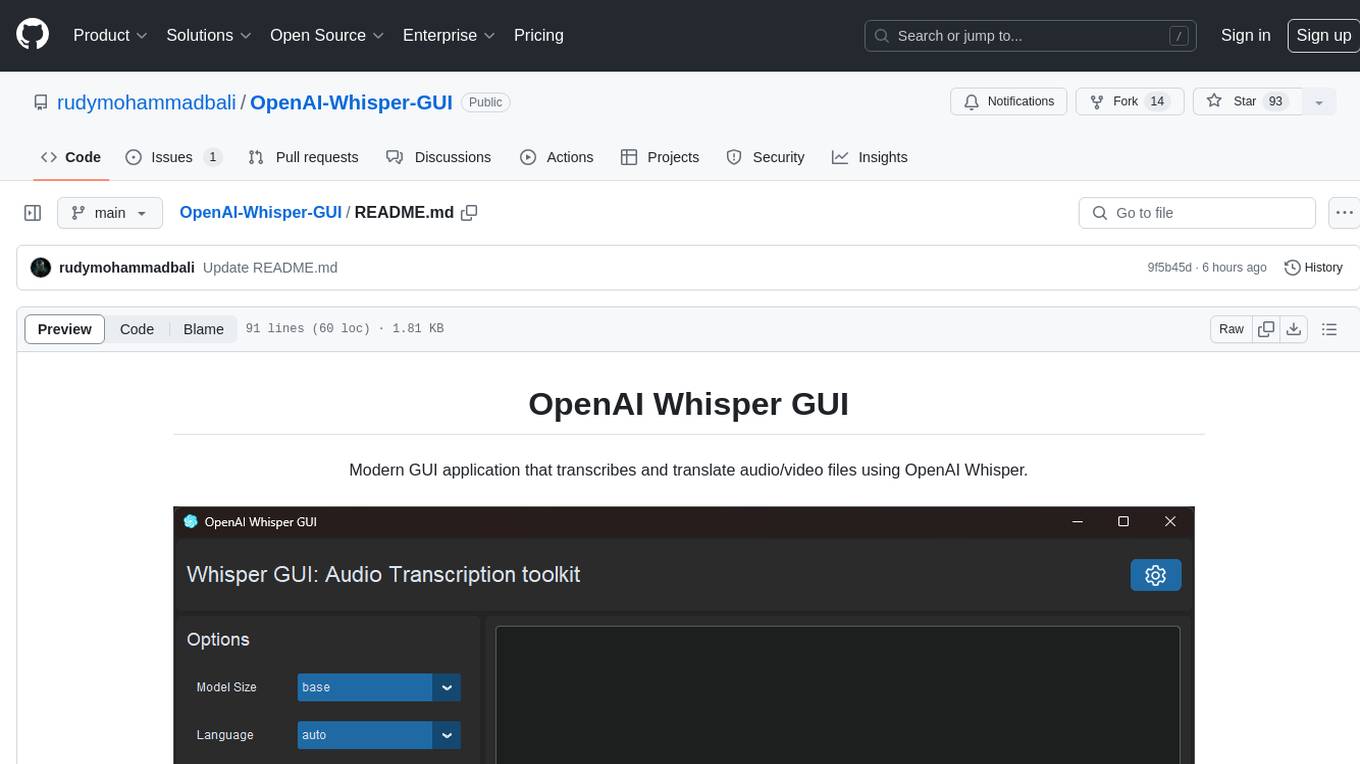

gemini-multimodal-playground

Gemini Multimodal Playground is a basic Python app for voice conversations with Google's Gemini 2.0 AI model. It features real-time voice input and text-to-speech responses. Users can configure settings through the GUI and interact with Gemini by speaking into the microphone. The application provides options for voice selection, system prompt customization, and enabling Google search. Troubleshooting tips are available for handling audio feedback loop issues that may occur during interactions.

For similar tasks

OpenAI-Whisper-GUI

OpenAI Whisper GUI is a modern GUI application designed to transcribe and translate audio/video files using OpenAI Whisper. It features a modern UI with light/dark mode, the ability to export transcribed text, add subtitles to videos, and more. The latest version includes updates to widgets, layouts, and themes, as well as new features such as a config handler, GPU info retrieval, a new app logo, settings interface, and bug fixes like code refactoring and fixing Cuda not found warning message. Users can easily install the tool by cloning the GitHub repository and running setup.py and main.py scripts. For more information, users can visit the OpenAI Whisper GitHub repository.

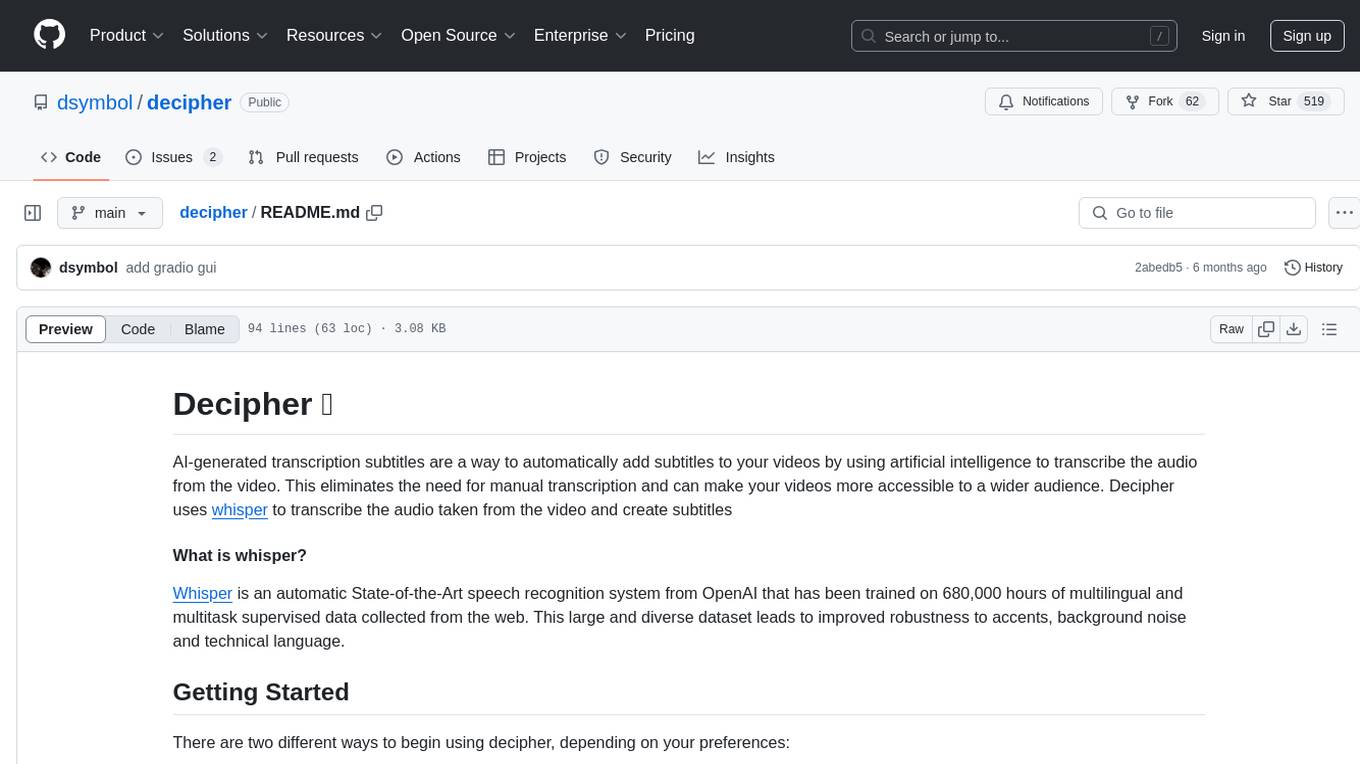

decipher

Decipher is a tool that utilizes AI-generated transcription subtitles to automatically add subtitles to videos. It eliminates the need for manual transcription, making videos more accessible. The tool uses OpenAI's Whisper, a State-of-the-Art speech recognition system trained on a large dataset for improved robustness to accents, background noise, and technical language.

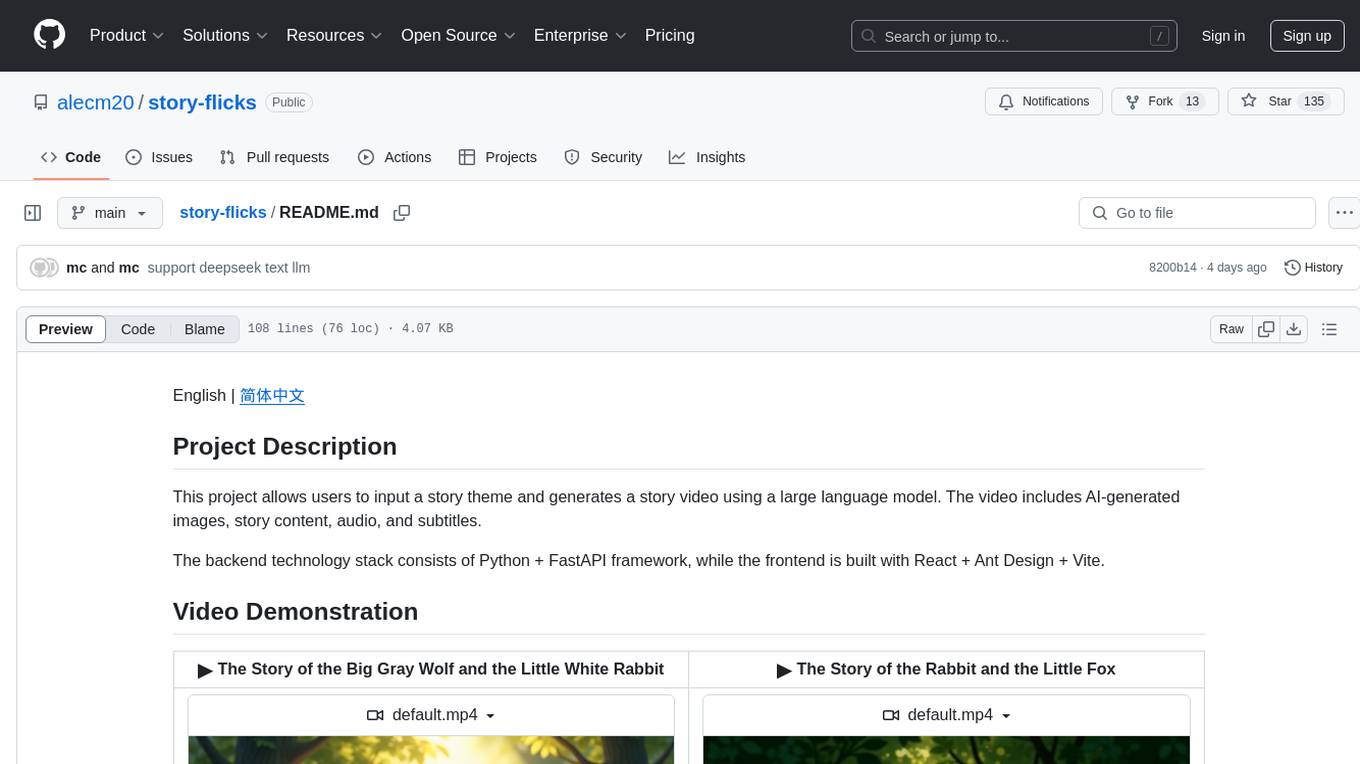

story-flicks

This project enables users to create story videos by inputting a story theme, utilizing a large language model to generate AI-generated images, story content, audio, and subtitles. The backend is built with Python and FastAPI, while the frontend utilizes React, Ant Design, and Vite.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

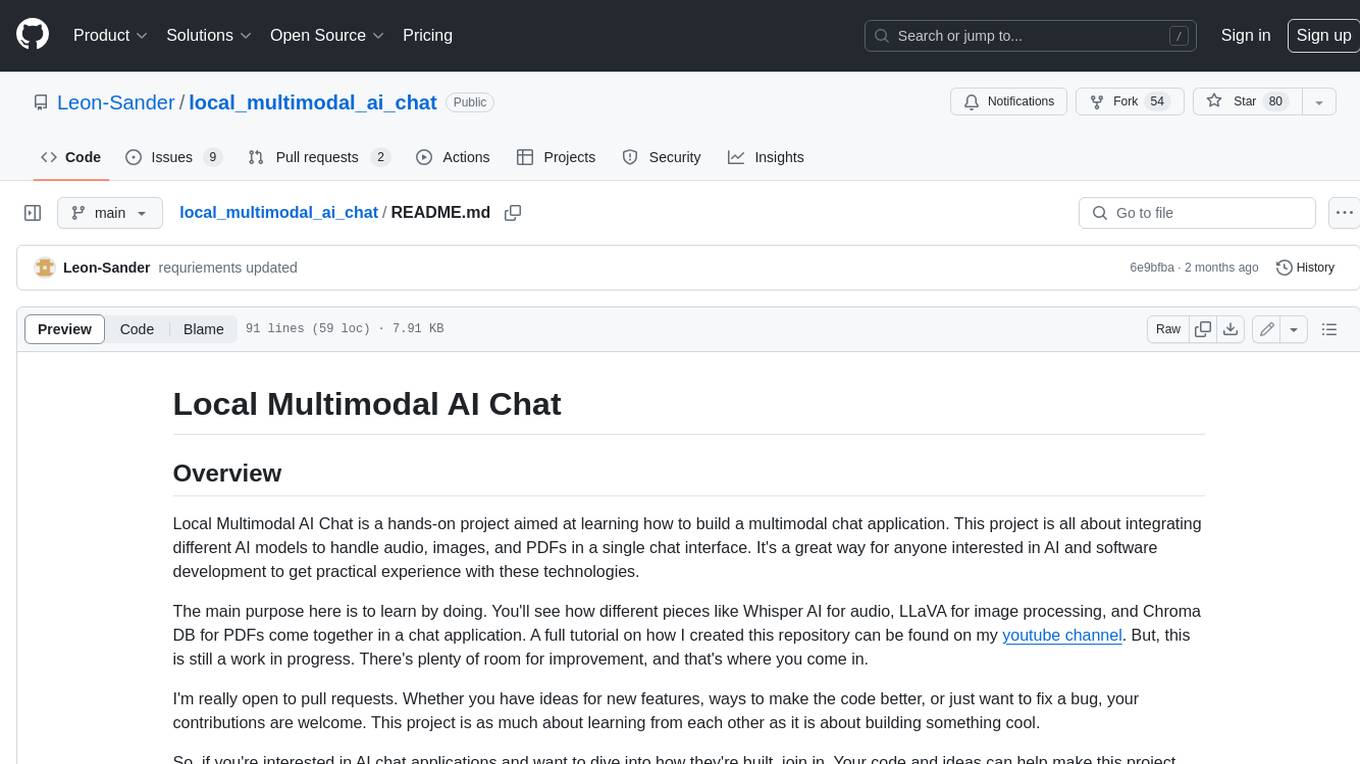

local_multimodal_ai_chat

Local Multimodal AI Chat is a hands-on project that teaches you how to build a multimodal chat application. It integrates different AI models to handle audio, images, and PDFs in a single chat interface. This project is perfect for anyone interested in AI and software development who wants to gain practical experience with these technologies.

openai-cf-workers-ai

OpenAI for Workers AI is a simple, quick, and dirty implementation of OpenAI's API on Cloudflare's new Workers AI platform. It allows developers to use the OpenAI SDKs with the new LLMs without having to rewrite all of their code. The API currently supports completions, chat completions, audio transcription, embeddings, audio translation, and image generation. It is not production ready but will be semi-regularly updated with new features as they roll out to Workers AI.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E... Hire me | 🎮 Ruby AI Builders Discord | 🐦 Twitter | 🧠 Anthropic Gem | 🚂 Midjourney Gem ## Table of Contents * Ruby OpenAI * Table of Contents * Installation * Bundler * Gem install * Usage * Quickstart * With Config * Custom timeout or base URI * Extra Headers per Client * Logging * Errors * Faraday middleware * Azure * Ollama * Counting Tokens * Models * Examples * Chat * Streaming Chat * Vision * JSON Mode * Functions * Edits * Embeddings * Batches * Files * Finetunes * Assistants * Threads and Messages * Runs * Runs involving function tools * Image Generation * DALL·E 2 * DALL·E 3 * Image Edit * Image Variations * Moderations * Whisper * Translate * Transcribe * Speech * Errors * Development * Release * Contributing * License * Code of Conduct

deepgram-js-sdk

Deepgram JavaScript SDK. Power your apps with world-class speech and Language AI models.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.