AIW

Alice in Wonderland code base for experiments and raw experiments data

Stars: 110

AIW is the code base and raw experimental data for the paper "Alice in Wonderland: Simple Tasks Showing Complete Reasoning Breakdown in State-Of-the-Art Large Language Models". Users can collect experimental data through LiteLLM, TogetherAI, or the LMSYS Chatbot Arena, and plot the data using the provided scripts. Experiments can be run for different prompt types (STANDARD, THINKING, RESTRICTED) and AIW variations. The README also includes acknowledgments and a citation for reference.

README:

🎩🐇 Alice in Wonderland: Simple Tasks Showing Complete Reasoning Breakdown in State-Of-the-Art Large Language Models

Homepage | Paper | ArXiv | Dataset explorer

Install requirements:

pip install -r requirements.txt

Collect using LiteLLM:

Refer to the LiteLLM Docs on how to set up your account and API keys.

Workflow init:

export SHARED_MINICONDA=/path/to/miniconda_install

export CONDA_ENV=/path/to/conda_env

export AIW_REPO_PATH=/path/to_local_cloned_AIW_repo

source ${SHARED_MINICONDA}/bin/activate ${CONDA_ENV}

export PYTHONPATH=$PYTHONPATH:$AIW_REPO_PATH

# export your API keys

export TOGETHERAI_API_KEY=

export OPENAI_API_KEY=

export ANTHROPIC_API_KEY=

export MISTRAL_API_KEY=

export GEMINI_API_KEY=

export COHERE_API_KEY=

cd $AIW_REPO_PATH

# LiteLLM based experiments; 30 trials for STANDARD prompt type, AIW Variation 1 (Prompt ID 55 in prompts.json)

python examples/example_litellm.py --prompt_id=55 --n_trials=30 --n_sessions=1 --prompts_json=lmsys_tools/prompts.json --models_json=lmsys_tools/models_plot_set.json --exp_name=model_set_STANDARD_run-1

# 30 trials for THINKING prompt type, AIW Variation 2 (Prompt ID 58 in prompts.json)

python examples/example_litellm.py --prompt_id=58 --n_trials=30 --n_sessions=1 --prompts_json=lmsys_tools/prompts.json --models_json=lmsys_tools/models_plot_set.json --exp_name=model_set_THINKING_run-1

# 30 trials for RESTRICTED prompt type, AIW Variation 1 (Prompt ID 53 in prompts.json)

python examples/example_litellm.py --prompt_id=53 --n_trials=30 --n_sessions=1 --prompts_json=lmsys_tools/prompts.json --models_json=lmsys_tools/models_plot_set.json --exp_name=model_set_RESTRICTED_run-1

# Same for LMSys based experiments

python examples/example_lmsys.py --prompt_id=53 --n_trials=30 --n_sessions=1 --prompts_json=lmsys_tools/prompts.json --models_json=lmsys_tools/models_plot_set.json --exp_name=model_set_RESTRICTED_run-1

Hint: n_sessions is now a dummy argument and can be left at 1; only the number of trials (n_trials) matters.
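Before starting the runs above, it can help to confirm that the API keys for the providers you plan to query are actually set. The following is a minimal sketch, not part of the repository: `check_api_keys` is a hypothetical helper name, and only the variable names are taken from the export lines above.

```shell
# Hypothetical helper (not shipped with AIW): prints "missing: NAME" for every
# listed environment variable that is unset or empty, and returns non-zero
# if at least one is missing.
check_api_keys() {
    missing=0
    for name in "$@"; do
        # POSIX-compatible indirect lookup of the variable named by $name.
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name"
            missing=1
        fi
    done
    unset value
    return "$missing"
}

# Usage: list only the providers you intend to use, for example
# check_api_keys TOGETHERAI_API_KEY OPENAI_API_KEY || echo "export the keys first"
```

Checking only the keys you need avoids false alarms, since a given model set may not touch every provider.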

Execution example for a whole range of prompt IDs:

Hint: the script file names referenced inside these wrapper scripts follow the authors' own local naming and have to be adapted before use.

# Execute experiments over LiteLLM: 30 trials, start with run counter set to 1, perform 2 rounds; for AIW Variations 1-2, prompt types STANDARD, THINKING, RESTRICTED (as defined in prompt ID)

source execute_litellm_data_gathering.sh 30 1 2 models_plot_set.json "55 56 57 58 53 54" "model_set_STANDARD model_set_STANDARD model_set_THINKING model_set_THINKING model_set_RESTRICTED model_set_RESTRICTED"

# Do the same for AIW Variation 3, prompt types STANDARD, THINKING, RESTRICTED (as defined in prompt ID)

source execute_litellm_data_gathering.sh 30 1 2 models_plot_set.json "63 64 65" "model_set_AIW-VAR-3_STANDARD model_set_AIW-VAR-3_THINKING model_set_AIW-VAR-3_RESTRICTED"

# Execute experiments over lmsys: 7 trials, start with run counter set to 1, perform 2 rounds

source execute_lmsys_data_gathering.sh 7 1 2 models_plot_set.json "55 56 57 58 53 54" "model_set_STANDARD model_set_STANDARD model_set_THINKING model_set_THINKING model_set_RESTRICTED model_set_RESTRICTED"

source execute_lmsys_data_gathering.sh 7 1 2 models_plot_set.json "63 64 65" "model_set_EASY_STANDARD model_set_EASY_THINKING model_set_EASY_RESTRICTED"

- Usage for the script call:

source execute_litellm_data_gathering.sh NUM_TRIALS RUN_ID_START NUM_ROUNDS models_plot_set.json "PROMPT_ID_1 PROMPT_ID_2 PROMPT_ID_3" "EXP_NAME_1 EXP_NAME_2 EXP_NAME_3"

source execute_lmsys_data_gathering.sh NUM_TRIALS RUN_ID_START NUM_ROUNDS models_plot_set.json "PROMPT_ID_1 PROMPT_ID_2 PROMPT_ID_3" "EXP_NAME_1 EXP_NAME_2 EXP_NAME_3"

where

- NUM_TRIALS: number of trials to conduct in each round

- RUN_ID_START: run ID to start from (applied to the run-ID part of the file name)

- NUM_ROUNDS: number of rounds to perform; each round runs NUM_TRIALS trials and gets its own incremented run ID

- "PROMPT_ID_X ...": list of IDs pointing to the corresponding entries defined in the prompts.json file

- "EXP_NAME_X ...": list of experiment names, one per prompt ID, that can be chosen freely and are appended to the name of the file with the saved data
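How these arguments might combine can be sketched as a dry run that only prints commands. This is an illustration under stated assumptions, not the actual content of execute_litellm_data_gathering.sh: `plan_runs` is a hypothetical name, and the loop order, the `lmsys_tools/` prefix and the `_run-ID` suffix are inferred from the single-run examples earlier in this README.

```shell
# Sketch only: prints the example_litellm.py calls that a wrapper with the
# interface described above might issue. The real wrapper script may differ.
plan_runs() {
    num_trials=$1
    run_id_start=$2
    num_rounds=$3
    models_json=$4
    prompt_ids=$5
    exp_names=$6

    round=0
    while [ "$round" -lt "$num_rounds" ]; do
        # Each round gets its own incremented run ID.
        run_id=$((run_id_start + round))
        # Walk the two space-separated lists in parallel.
        set -- $exp_names
        for prompt_id in $prompt_ids; do
            if [ "$#" -eq 0 ]; then
                echo "error: fewer experiment names than prompt IDs" >&2
                return 1
            fi
            exp_name=$1
            shift
            echo "python examples/example_litellm.py --prompt_id=$prompt_id" \
                "--n_trials=$num_trials --n_sessions=1" \
                "--prompts_json=lmsys_tools/prompts.json" \
                "--models_json=lmsys_tools/$models_json" \
                "--exp_name=${exp_name}_run-$run_id"
        done
        round=$((round + 1))
    done
}

# Usage: plan_runs 30 1 2 models_plot_set.json "55 58" "model_set_STANDARD model_set_THINKING"
```

With 2 prompt IDs and 2 rounds this prints 4 command lines, which matches the description of NUM_ROUNDS rounds of NUM_TRIALS trials per prompt ID.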

- Example for collecting data for a full plot (Fig. 1) in the paper:

# Reading models from models_plot_set_reference.json and prompt IDs from prompts.json; full experiment set over all main AIW variations 1-4 and prompt types STANDARD, THINKING, RESTRICTED; doing 30 trials starting with run counter 1, for 2 rounds, aiming at 60 trials in total for each model and prompt ID (that is, a given combination of a prompt type and AIW variation)

source execute_litellm_data_gathering.sh 30 1 2 models_plot_set_reference.json "55 56 57 58 53 54 63 64 65 69 70 71" "model_set_reference_AIW-VAR-1_STANDARD model_set_reference_AIW-VAR-2_STANDARD model_set_reference_AIW-VAR-1_THINKING model_set_reference_AIW-VAR-2_THINKING model_set_reference_AIW-VAR-1_RESTRICTED model_set_reference_AIW-VAR-2_RESTRICTED model_set_reference_AIW-VAR-3_STANDARD model_set_reference_AIW-VAR-3_THINKING model_set_reference_AIW-VAR-3_RESTRICTED model_set_reference_AIW-VAR-4_STANDARD model_set_reference_AIW-VAR-4_THINKING model_set_reference_AIW-VAR-4_RESTRICTED"
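The two long quoted lists in the command above have to stay aligned position by position, which is easy to get wrong by hand. One possible way to generate them from a single table is sketched below. The prompt ID to variation and prompt type pairs are read off the command above; the function names are hypothetical and not part of the repository.

```shell
# One row per prompt ID: "PROMPT_ID AIW_VARIATION PROMPT_TYPE",
# in the same order as in the Fig. 1 command above.
aiw_fig1_table() {
    printf '%s\n' \
        "55 1 STANDARD" \
        "56 2 STANDARD" \
        "57 1 THINKING" \
        "58 2 THINKING" \
        "53 1 RESTRICTED" \
        "54 2 RESTRICTED" \
        "63 3 STANDARD" \
        "64 3 THINKING" \
        "65 3 RESTRICTED" \
        "69 4 STANDARD" \
        "70 4 THINKING" \
        "71 4 RESTRICTED"
}

# Space-separated prompt IDs, in table order.
aiw_prompt_ids() {
    aiw_fig1_table | awk '{ printf "%s%s", sep, $1; sep = " " } END { print "" }'
}

# Space-separated experiment names of the form PREFIX_AIW-VAR-N_TYPE.
aiw_exp_names() {
    aiw_fig1_table | awk -v prefix="$1" \
        '{ printf "%s%s_AIW-VAR-%s_%s", sep, prefix, $2, $3; sep = " " } END { print "" }'
}

# Usage (left commented out because it would start real API calls):
# source execute_litellm_data_gathering.sh 30 1 2 models_plot_set_reference.json \
#     "$(aiw_prompt_ids)" "$(aiw_exp_names model_set_reference)"
```

Keeping ID, variation and prompt type in one row means a new variation only needs one added line, and the two lists cannot drift out of step.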

Refer to this bash script to see how to use litellm to gather model responses.

Collect using TogetherAI:

Refer to the TogetherAI Docs on how to set up your account and API keys.

Refer to this Python script to see how to use TogetherAI to gather model responses.

Collect by scraping LMSYS Chatbot Arena:

Note: This method is not recommended, since it is of limited use for automated model evaluation; the platform is gated by Cloudflare.

Refer to this bash script to see how to use litellm to gather model responses.

Run script to generate plots from the paper (by default plots will be saved in the working directory):

bash scripts/plot.sh

We would like to express gratitude to all the people who are working on making code, models and data publicly available, advancing community based research and making research more reproducible. Specifically, we would like to thank all the members of the LAION Discord server community and Open-Ψ (Open-Sci) Collective for providing fruitful ground for scientific exchange and open-source development.

Marianna Nezhurina acknowledges funding by the Federal Ministry of Education and Research of Germany under grant no. 01IS22094B WestAI - AI Service Center West.

Lucia Cipolina-Kun acknowledges the Helmholtz Information & Data Science Academy (HIDA) for providing financial support enabling a short-term research stay at Juelich Supercomputing Center (JSC), Research Center Juelich (FZJ) to conduct research on foundation models.

If you like this work, please cite:

@article{nezhurina2024alice,

title={Alice in Wonderland: Simple Tasks Showing Complete Reasoning Breakdown in State-Of-the-Art Large Language Models},

author={Marianna Nezhurina and Lucia Cipolina-Kun and Mehdi Cherti and Jenia Jitsev},

year={2024},

journal={arXiv preprint arXiv:2406.02061},

eprint={2406.02061},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

Copyright 2024 Marianna Nezhurina, Lucia Cipolina-Kun, Mehdi Cherti, Jenia Jitsev

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.


Similar Open Source Tools

AIW

AIW is a code base for experiments and raw data related to Alice in Wonderland, showcasing complete reasoning breakdown in state-of-the-art large language models. Users can collect experiments data using LiteLLM and TogetherAI, and plot the data using provided scripts. The tool allows for executing experiments over LiteLLM and lmsys, with options for different prompt types and AIW variations. The project also includes acknowledgments and a citation for reference.

tensorrtllm_backend

The TensorRT-LLM Backend is a Triton backend designed to serve TensorRT-LLM models with Triton Inference Server. It supports features like inflight batching, paged attention, and more. Users can access the backend through pre-built Docker containers or build it using scripts provided in the repository. The backend can be used to create models for tasks like tokenizing, inferencing, de-tokenizing, ensemble modeling, and more. Users can interact with the backend using provided client scripts and query the server for metrics related to request handling, memory usage, KV cache blocks, and more. Testing for the backend can be done following the instructions in the 'ci/README.md' file.

codellm-devkit

Codellm-devkit (CLDK) is a Python library that serves as a multilingual program analysis framework bridging traditional static analysis tools and Large Language Models (LLMs) specialized for code (CodeLLMs). It simplifies the process of analyzing codebases across multiple programming languages, enabling the extraction of meaningful insights and facilitating LLM-based code analysis. The library provides a unified interface for integrating outputs from various analysis tools and preparing them for effective use by CodeLLMs. Codellm-devkit aims to enable the development and experimentation of robust analysis pipelines that combine traditional program analysis tools and CodeLLMs, reducing friction in multi-language code analysis and ensuring compatibility across different tools and LLM platforms. It is designed to seamlessly integrate with popular analysis tools like WALA, Tree-sitter, LLVM, and CodeQL, acting as a crucial intermediary layer for efficient communication between these tools and CodeLLMs. The project is continuously evolving to include new tools and frameworks, maintaining its versatility for code analysis and LLM integration.

verifAI

VerifAI is a document-based question-answering system that addresses hallucinations in generative large language models and search engines. It retrieves relevant documents, generates answers with references, and verifies answers for accuracy. The engine uses generative search technology and a verification model to ensure no misinformation. VerifAI supports various document formats and offers user registration with a React.js interface. It is open-source and designed to be user-friendly, making it accessible for anyone to use.

aimo-progress-prize

This repository contains the training and inference code needed to replicate the winning solution to the AI Mathematical Olympiad - Progress Prize 1. It consists of fine-tuning DeepSeekMath-Base 7B, high-quality training datasets, a self-consistency decoding algorithm, and carefully chosen validation sets. The training methodology involves Chain of Thought (CoT) and Tool Integrated Reasoning (TIR) training stages. Two datasets, NuminaMath-CoT and NuminaMath-TIR, were used to fine-tune the models. The models were trained using open-source libraries like TRL, PyTorch, vLLM, and DeepSpeed. Post-training quantization to 8-bit precision was done to improve performance on Kaggle's T4 GPUs. The project structure includes scripts for training, quantization, and inference, along with necessary installation instructions and hardware/software specifications.

cuvs

cuVS is a library that contains state-of-the-art implementations of several algorithms for running approximate nearest neighbors and clustering on the GPU. It can be used directly or through the various databases and other libraries that have integrated it. The primary goal of cuVS is to simplify the use of GPUs for vector similarity search and clustering.

kafka-ml

Kafka-ML is a framework designed to manage the pipeline of Tensorflow/Keras and PyTorch machine learning models on Kubernetes. It enables the design, training, and inference of ML models with datasets fed through Apache Kafka, connecting them directly to data streams like those from IoT devices. The Web UI allows easy definition of ML models without external libraries, catering to both experts and non-experts in ML/AI.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

monitors4codegen

This repository hosts the official code and data artifact for the paper 'Monitor-Guided Decoding of Code LMs with Static Analysis of Repository Context'. It introduces Monitor-Guided Decoding (MGD) for code generation using Language Models, where a monitor uses static analysis to guide the decoding. The repository contains datasets, evaluation scripts, inference results, a language server client 'multilspy' for static analyses, and implementation of various monitors monitoring for different properties in 3 programming languages. The monitors guide Language Models to adhere to properties like valid identifier dereferences, correct number of arguments to method calls, typestate validity of method call sequences, and more.

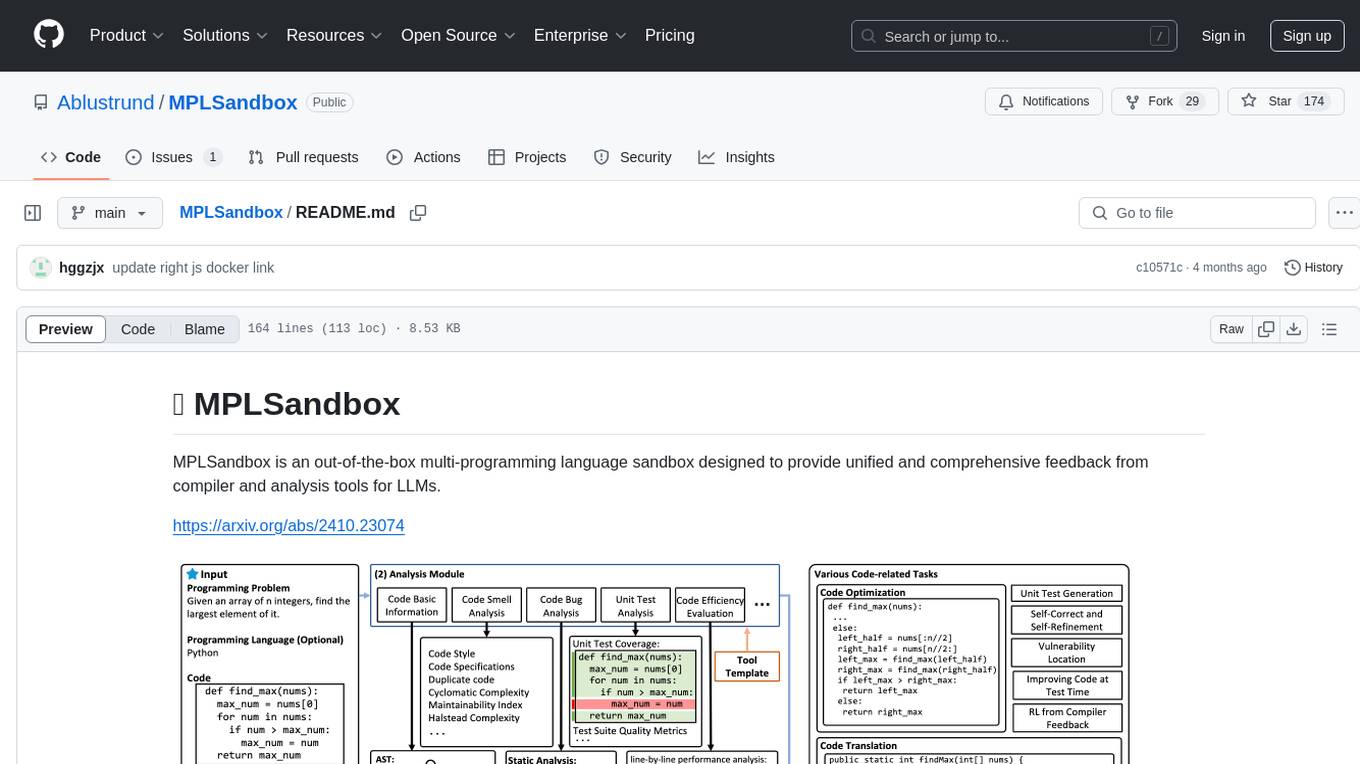

MPLSandbox

MPLSandbox is an out-of-the-box multi-programming language sandbox designed to provide unified and comprehensive feedback from compiler and analysis tools for LLMs. It simplifies code analysis for researchers and can be seamlessly integrated into LLM training and application processes to enhance performance in a range of code-related tasks. The sandbox environment ensures safe code execution, the code analysis module offers comprehensive analysis reports, and the information integration module combines compilation feedback and analysis results for complex code-related tasks.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

multilspy

Multilspy is a Python library developed for research purposes to facilitate the creation of language server clients for querying and obtaining results of static analyses from various language servers. It simplifies the process by handling server setup, communication, and configuration parameters, providing a common interface for different languages. The library supports features like finding function/class definitions, callers, completions, hover information, and document symbols. It is designed to work with AI systems like Large Language Models (LLMs) for tasks such as Monitor-Guided Decoding to ensure code generation correctness and boost compilability.

gepa

GEPA (Genetic-Pareto) is a framework for optimizing arbitrary systems composed of text components like AI prompts, code snippets, or textual specs against any evaluation metric. It employs LLMs to reflect on system behavior, using feedback from execution and evaluation traces to drive targeted improvements. Through iterative mutation, reflection, and Pareto-aware candidate selection, GEPA evolves robust, high-performing variants with minimal evaluations, co-evolving multiple components in modular systems for domain-specific gains. The repository provides the official implementation of the GEPA algorithm as proposed in the paper titled 'GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning'.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

OnAIR

The On-board Artificial Intelligence Research (OnAIR) Platform is a framework that enables AI algorithms written in Python to interact with NASA's cFS. It is intended to explore research concepts in autonomous operations in a simulated environment. The platform provides tools for generating environments, handling telemetry data through Redis, running unit tests, and contributing to the repository. Users can set up a conda environment, configure telemetry and Redis examples, run simulations, and conduct unit tests to ensure the functionality of their AI algorithms. The platform also includes guidelines for licensing, copyright, and contributions to the repository.

DBCopilot

The development of Natural Language Interfaces to Databases (NLIDBs) has been greatly advanced by the advent of large language models (LLMs), which provide an intuitive way to translate natural language (NL) questions into Structured Query Language (SQL) queries. DBCopilot is a framework that addresses challenges in real-world scenarios of natural language querying over massive databases by employing a compact and flexible copilot model for routing. It decouples schema-agnostic NL2SQL into schema routing and SQL generation, utilizing a lightweight differentiable search index for semantic mappings and relation-aware joint retrieval. DBCopilot introduces a reverse schema-to-question generation paradigm for automatic learning and adaptation over massive databases, providing a scalable and effective solution for schema-agnostic NL2SQL.

For similar tasks

AIW

AIW is a code base for experiments and raw data related to Alice in Wonderland, showcasing complete reasoning breakdown in state-of-the-art large language models. Users can collect experiments data using LiteLLM and TogetherAI, and plot the data using provided scripts. The tool allows for executing experiments over LiteLLM and lmsys, with options for different prompt types and AIW variations. The project also includes acknowledgments and a citation for reference.

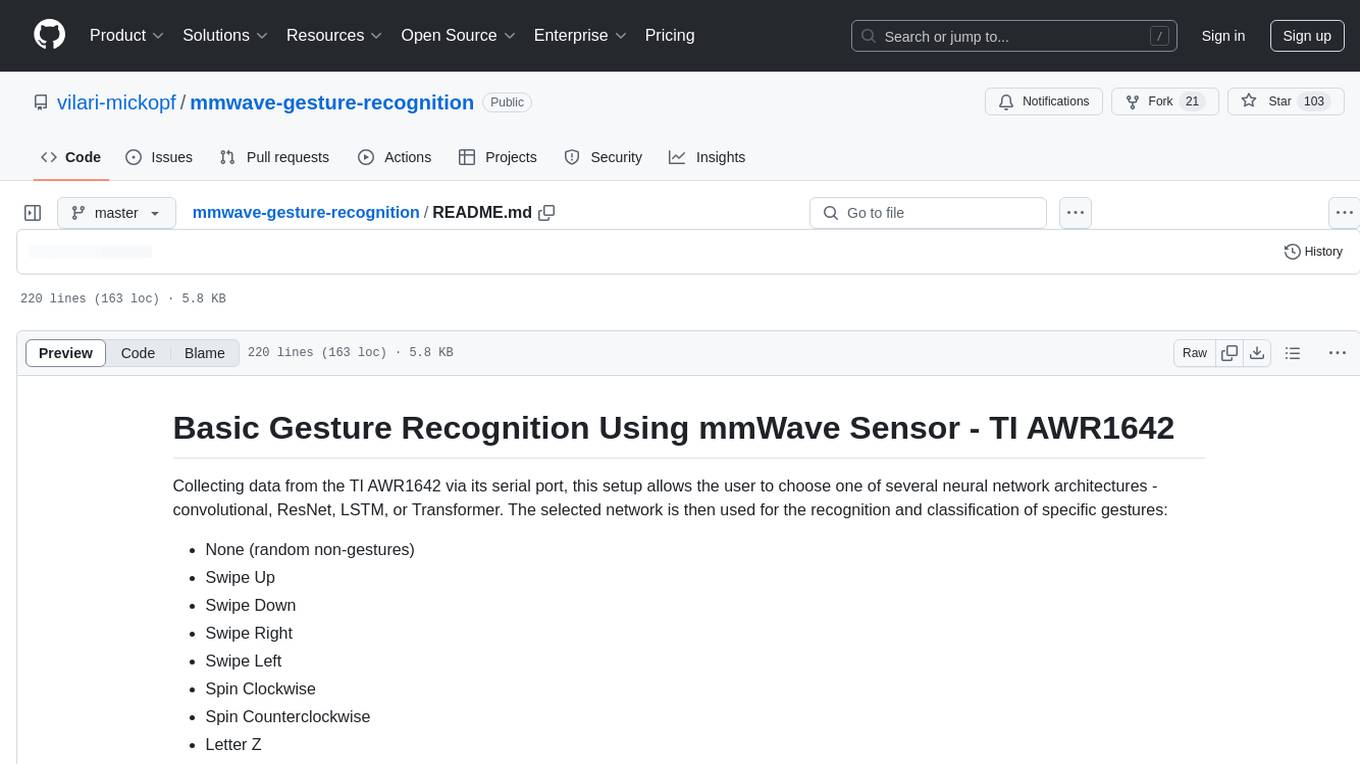

mmwave-gesture-recognition

This repository provides a setup for basic gesture recognition using the TI AWR1642 mmWave sensor. Users can collect data from the sensor and choose from various neural network architectures for gesture recognition. The supported gestures include Swipe Up, Swipe Down, Swipe Right, Swipe Left, Spin Clockwise, Spin Counterclockwise, Letter Z, Letter S, and Letter X. The repository includes data and models for training and inference, along with instructions for installation, serial permissions setup, flashing firmware, running the system, collecting data, training models, selecting different models, and accessing help documentation. The project is developed using Python and TensorFlow 2.15.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.