all-rag-techniques

Implementation of all RAG techniques in a simpler way

Stars: 504

This repository provides a hands-on approach to Retrieval-Augmented Generation (RAG) techniques, simplifying advanced concepts into understandable implementations using Python libraries like openai, numpy, and matplotlib. It offers a collection of Jupyter Notebooks with concise explanations, step-by-step implementations, code examples, evaluations, and visualizations for various RAG techniques. The goal is to make RAG more accessible and demystify its workings for educational purposes.

README:

This repository takes a clear, hands-on approach to Retrieval-Augmented Generation (RAG), breaking down advanced techniques into straightforward, understandable implementations. Instead of relying on frameworks and libraries like LangChain or FAISS, everything here is built with familiar Python libraries: openai, numpy, matplotlib, and a few others.

The goal is simple: provide code that is readable, modifiable, and educational. By focusing on the fundamentals, this project helps demystify RAG and makes it easier to understand how it really works.

- (20-Mar-2025) Added a new notebook on RAG with Reinforcement Learning.

- (07-Mar-2025) Added 20 RAG techniques to the repository.

This repository contains a collection of Jupyter Notebooks, each focusing on a specific RAG technique. Each notebook provides:

- A concise explanation of the technique.

- A step-by-step implementation from scratch.

- Clear code examples with inline comments.

- Evaluations and comparisons to demonstrate the technique's effectiveness.

- Visualizations of the results.

Here's a glimpse of the techniques covered:

| Notebook | Description |
|---|---|
| 1. Simple RAG | A basic RAG implementation. A great starting point! |
| 2. Semantic Chunking | Splits text based on semantic similarity for more meaningful chunks. |
| 3. Chunk Size Selector | Explores the impact of different chunk sizes on retrieval performance. |
| 4. Context Enriched RAG | Retrieves neighboring chunks to provide more context. |
| 5. Contextual Chunk Headers | Prepends descriptive headers to each chunk before embedding. |
| 6. Document Augmentation RAG | Generates questions from text chunks to augment the retrieval process. |
| 7. Query Transform | Rewrites, expands, or decomposes queries to improve retrieval. Includes Step-back Prompting and Sub-query Decomposition. |
| 8. Reranker | Re-ranks initially retrieved results using an LLM for better relevance. |
| 9. RSE | Relevant Segment Extraction: identifies and reconstructs continuous segments of text, preserving context. |
| 10. Contextual Compression | Filters and compresses retrieved chunks to maximize relevant information. |
| 11. Feedback Loop RAG | Incorporates user feedback to learn and improve the RAG system over time. |
| 12. Adaptive RAG | Dynamically selects the best retrieval strategy based on query type. |
| 13. Self RAG | Implements Self-RAG: dynamically decides when and how to retrieve, evaluates relevance, and assesses support and utility. |
| 14. Proposition Chunking | Breaks down documents into atomic, factual statements for precise retrieval. |
| 15. Multi-Modal RAG | Combines text and images for retrieval, generating captions for images using LLaVA. |
| 16. Fusion RAG | Combines vector search with keyword-based (BM25) retrieval for improved results. |
| 17. Graph RAG | Organizes knowledge as a graph, enabling traversal of related concepts. |
| 18. Hierarchy RAG | Builds hierarchical indices (summaries + detailed chunks) for efficient retrieval. |
| 19. HyDE RAG | Uses Hypothetical Document Embeddings to improve semantic matching. |
| 20. CRAG | Corrective RAG: dynamically evaluates retrieval quality and uses web search as a fallback. |
| 21. RAG with RL | Maximizes the reward of the RAG model using Reinforcement Learning. |
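To make one row of the table concrete, the idea behind Context Enriched RAG (returning the neighbors of a retrieved chunk along with the chunk itself) can be sketched in a few lines. This is a minimal illustration, not the notebook's code: the function name `with_neighbors`, the `window` parameter, and the sample chunks are assumptions made for this example.

```python
def with_neighbors(chunks, hit_index, window=1):
    """Return the retrieved chunk plus up to `window` chunks on each side.

    Hypothetical helper: `hit_index` is the position of the best-matching
    chunk, as returned by whatever similarity search is in use.
    """
    if not 0 <= hit_index < len(chunks):
        raise IndexError("hit_index is outside the chunk list")
    start = max(0, hit_index - window)                # clamp at the first chunk
    end = min(len(chunks), hit_index + window + 1)    # clamp at the last chunk
    return chunks[start:end]                          # document order is preserved


# Illustrative chunks; in practice these come from splitting a document.
chunks = [
    "Intro to RAG.",
    "Embeddings map text to vectors.",
    "Cosine similarity ranks chunks.",
    "The LLM writes the answer.",
]

# Suppose the search picked chunk 2; pass it to the LLM with its neighbors.
context = with_neighbors(chunks, hit_index=2, window=1)
print(context)
```

Because the slice is clamped at both ends, a hit on the first or last chunk simply returns fewer neighbors instead of failing.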

fareedkhan-dev-all-rag-techniques/
├── README.md <- You are here!
├── 1_simple_rag.ipynb
├── 2_semantic_chunking.ipynb
├── 3_chunk_size_selector.ipynb
├── 4_context_enriched_rag.ipynb
├── 5_contextual_chunk_headers_rag.ipynb
├── 6_doc_augmentation_rag.ipynb
├── 7_query_transform.ipynb
├── 8_reranker.ipynb
├── 9_rse.ipynb
├── 10_contextual_compression.ipynb
├── 11_feedback_loop_rag.ipynb
├── 12_adaptive_rag.ipynb
├── 13_self_rag.ipynb
├── 14_proposition_chunking.ipynb
├── 15_multimodel_rag.ipynb
├── 16_fusion_rag.ipynb
├── 17_graph_rag.ipynb
├── 18_hierarchy_rag.ipynb
├── 19_HyDE_rag.ipynb
├── 20_crag.ipynb
├── 21_rag_with_rl.ipynb
├── requirements.txt <- Python dependencies
└── data/
    ├── val.json <- Sample validation data (queries and answers)
    ├── AI_information.pdf <- A sample PDF document for testing.
    └── attention_is_all_you_need.pdf <- A sample PDF document for testing (for Multi-Modal RAG).

- Clone the repository:

      git clone https://github.com/FareedKhan-dev/all-rag-techniques.git
      cd all-rag-techniques

- Install dependencies:

      pip install -r requirements.txt

- Set up your API key (a Nebius AI key, stored in the `OPENAI_API_KEY` variable):

  - Obtain an API key from Nebius AI.

  - Set the API key as an environment variable:

        export OPENAI_API_KEY='YOUR_NEBIUS_AI_API_KEY'

    or, on Windows:

        setx OPENAI_API_KEY "YOUR_NEBIUS_AI_API_KEY"

    or, within your Python script/notebook:

        import os
        os.environ["OPENAI_API_KEY"] = "YOUR_NEBIUS_AI_API_KEY"

- Run the notebooks:

  Open any of the Jupyter Notebooks (`.ipynb` files) using Jupyter Notebook or JupyterLab. Each notebook is self-contained and can be run independently. Within a notebook, run the cells in order.

  Note: The `data/AI_information.pdf` file provides a sample document for testing. You can replace it with your own PDF. The `data/val.json` file contains sample queries and ideal answers for evaluation. The `data/attention_is_all_you_need.pdf` file is for testing the Multi-Modal RAG notebook.
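Before running a notebook, it can help to confirm that the key is actually visible to Python. The check below is a small sketch under stated assumptions: the helper name `require_api_key` is hypothetical and not part of the repository, and it only inspects the `OPENAI_API_KEY` variable described above without contacting any API.

```python
import os

# The placeholder string used in the setup instructions above.
PLACEHOLDER = "YOUR_NEBIUS_AI_API_KEY"


def require_api_key(var_name="OPENAI_API_KEY"):
    """Return the key stored in `var_name`, or raise if it is missing.

    Hypothetical pre-flight helper: it treats an empty value or the
    unedited placeholder as "not configured".
    """
    key = os.environ.get(var_name, "").strip()
    if not key or key == PLACEHOLDER:
        raise RuntimeError(f"{var_name} is not set; set it before running the notebooks.")
    return key


# Report the result without printing the key itself.
try:
    key = require_api_key()
    print("API key found, length:", len(key))
except RuntimeError as err:
    print(err)
```

Note that `setx` on Windows only affects new terminal sessions, so a check like this may still fail in the window where the command was run.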

- Embeddings: Numerical representations of text that capture semantic meaning. We use Nebius AI's embedding API and, in many notebooks, also the `BAAI/bge-en-icl` embedding model.

- Vector Store: A simple database to store and search embeddings. We create our own `SimpleVectorStore` class using NumPy for efficient similarity calculations.

- Cosine Similarity: A measure of similarity between two vectors. Higher values indicate greater similarity.

- Chunking: Dividing text into smaller, manageable pieces. We explore various chunking strategies.

- Retrieval: The process of finding the most relevant text chunks for a given query.

- Generation: Using a Large Language Model (LLM) to create a response based on the retrieved context and the user's query. We use the `meta-llama/Llama-3.2-3B-Instruct` model via Nebius AI's API.

- Evaluation: Assessing the quality of the RAG system's responses, often by comparing them to a reference answer or using an LLM to score relevance.
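The vector store, cosine similarity, and retrieval concepts fit together as in the sketch below. It is an illustration under loud assumptions, not the repository's `SimpleVectorStore`: the class `TinyVectorStore` and the function `toy_embed` are hypothetical names, the word-count "embedding" is a stand-in for a real embedding API, and plain Python lists replace NumPy so the example runs without dependencies.

```python
import math
import re
from collections import Counter


def toy_embed(text, vocabulary):
    """Stand-in for an embedding API: count each vocabulary word in `text`."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    return [float(counts[word]) for word in vocabulary]


def cosine_similarity(a, b):
    """Cosine of the angle between a and b; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Keeps (text, vector) pairs in memory and ranks them by cosine similarity."""

    def __init__(self):
        self.items = []

    def add(self, text, vector):
        self.items.append((text, vector))

    def search(self, query_vector, k=2):
        """Return the k highest-scoring (score, text) pairs, best first."""
        scored = [(cosine_similarity(query_vector, vec), text) for text, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


# Illustrative chunks; a real pipeline would produce these by chunking a document.
chunks = [
    "Embeddings turn text into vectors.",
    "Chunking splits a document into smaller pieces.",
    "The language model writes the final answer.",
]
vocabulary = sorted({w for c in chunks for w in re.findall(r"[a-z]+", c.lower())})

store = TinyVectorStore()
for chunk in chunks:
    store.add(chunk, toy_embed(chunk, vocabulary))

# Retrieval: embed the query the same way, then take the closest chunk.
query = "How does chunking split a document?"
results = store.search(toy_embed(query, vocabulary), k=1)
print(results[0][1])  # prints "Chunking splits a document into smaller pieces."
```

In a full pipeline, the retrieved text would then be placed in the LLM prompt together with the query for the generation step.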

Contributions are welcome!


Similar Open Source Tools


basiclingua-LLM-Based-NLP

BasicLingua is a Python library that provides functionalities for linguistic tasks such as tokenization, stemming, lemmatization, and many others. It is based on the Gemini Language Model, which has demonstrated promising results in dealing with text data. BasicLingua can be used as an API or through a web demo. It is available under the MIT license and can be used in various projects.

FuzzyAI

The FuzzyAI Fuzzer is a powerful tool for automated LLM fuzzing, designed to help developers and security researchers identify jailbreaks and mitigate potential security vulnerabilities in their LLM APIs. It supports various fuzzing techniques, provides input generation capabilities, can be easily integrated into existing workflows, and offers an extensible architecture for customization and extension. The tool includes attacks like ArtPrompt, Taxonomy-based paraphrasing, Many-shot jailbreaking, Genetic algorithm, Hallucinations, DAN (Do Anything Now), WordGame, Crescendo, ActorAttack, Back To The Past, Please, Thought Experiment, and Default. It supports models from providers like Anthropic, OpenAI, Gemini, Azure, Bedrock, AI21, and Ollama, with the ability to add support for newer models. The tool also supports various cloud APIs and datasets for testing and experimentation.

BIRD-CRITIC-1

BIRD-CRITIC 1.0 is a SQL benchmark designed to evaluate the capability of large language models (LLMs) in diagnosing and solving user issues within real-world database environments. It comprises 600 tasks for development and 200 held-out out-of-distribution tests across 4 prominent open-source SQL dialects. The benchmark expands beyond simple SELECT queries to cover a wider range of SQL operations, reflecting actual application scenarios. An optimized execution-based evaluation environment is included for rigorous and efficient validation.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

FinRobot

FinRobot is an open-source AI agent platform designed for financial applications using large language models. It transcends the scope of FinGPT, offering a comprehensive solution that integrates a diverse array of AI technologies. The platform's versatility and adaptability cater to the multifaceted needs of the financial industry. FinRobot's ecosystem is organized into four layers, including Financial AI Agents Layer, Financial LLMs Algorithms Layer, LLMOps and DataOps Layers, and Multi-source LLM Foundation Models Layer. The platform's agent workflow involves Perception, Brain, and Action modules to capture, process, and execute financial data and insights. The Smart Scheduler optimizes model diversity and selection for tasks, managed by components like Director Agent, Agent Registration, Agent Adaptor, and Task Manager. The tool provides a structured file organization with subfolders for agents, data sources, and functional modules, along with installation instructions and hands-on tutorials.

guidellm

GuideLLM is a platform for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM enables users to assess the performance, resource requirements, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. The tool provides features for performance evaluation, resource optimization, cost estimation, and scalability testing.

atropos

Atropos is a robust and scalable framework for Reinforcement Learning Environments with Large Language Models (LLMs). It provides a flexible platform to accelerate LLM-based RL research across diverse interactive settings. Atropos supports multi-turn and asynchronous RL interactions, integrates with various inference APIs, offers a standardized training interface for experimenting with different RL algorithms, and allows for easy scalability by launching more environment instances. The framework manages diverse environment types concurrently for heterogeneous, multi-modal training.

sec-code-bench

SecCodeBench is a benchmark suite for evaluating the security of AI-generated code, specifically designed for modern Agentic Coding Tools. It addresses challenges in existing security benchmarks by ensuring test case quality, employing precise evaluation methods, and covering Agentic Coding Tools. The suite includes 98 test cases across 5 programming languages, focusing on functionality-first evaluation and dynamic execution-based validation. It offers a highly extensible testing framework for end-to-end automated evaluation of agentic coding tools, generating comprehensive reports and logs for analysis and improvement.

SheetCopilot

SheetCopilot is an assistant agent that manipulates spreadsheets by following user commands. It leverages Large Language Models (LLMs) to interact with spreadsheets like a human expert, enabling non-expert users to complete tasks on complex software such as Google Sheets and Excel via a language interface. The tool observes spreadsheet states, polishes generated solutions based on external action documents and error feedback, and aims to improve success rate and efficiency. SheetCopilot offers a dataset with diverse task categories and operations, supporting operations like entry & manipulation, management, formatting, charts, and pivot tables. Users can interact with SheetCopilot in Excel or Google Sheets, executing tasks like calculating revenue, creating pivot tables, and plotting charts. The tool's evaluation includes performance comparisons with leading LLMs and VBA-based methods on specific datasets, showcasing its capabilities in controlling various aspects of a spreadsheet.

helix-db

HelixDB is a database designed specifically for AI applications, providing a single platform to manage all components needed for AI applications. It supports graph + vector data model and also KV, documents, and relational data. Key features include built-in tools for MCP, embeddings, knowledge graphs, RAG, security, logical isolation, and ultra-low latency. Users can interact with HelixDB using the Helix CLI tool and SDKs in TypeScript and Python. The roadmap includes features like organizational auth, server code improvements, 3rd party integrations, educational content, and binary quantisation for better performance. Long term projects involve developing in-house tools for knowledge graph ingestion, graph-vector storage engine, and network protocol & serdes libraries.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

sec-parser

The `sec-parser` project simplifies extracting meaningful information from SEC EDGAR HTML documents by organizing them into semantic elements and a tree structure. It helps in parsing SEC filings for financial and regulatory analysis, analytics and data science, AI and machine learning, causal AI, and large language models. The tool is especially beneficial for AI, ML, and LLM applications by streamlining data pre-processing and feature extraction.

Advanced-QA-and-RAG-Series

This repository contains advanced LLM-based chatbots for Retrieval Augmented Generation (RAG) and Q&A with different databases. It provides guides on using AzureOpenAI and OpenAI API for each project. The projects include Q&A and RAG with SQL and Tabular Data, and KnowledgeGraph Q&A and RAG with Tabular Data. Key notes emphasize the importance of good column names, read-only database access, and familiarity with query languages. The chatbots allow users to interact with SQL databases, CSV, XLSX files, and graph databases using natural language.

gpt-rag-ingestion

The GPT-RAG Data Ingestion service automates processing of diverse document types for indexing in Azure AI Search. It uses intelligent chunking strategies tailored to each format, generates text and image embeddings, and enables rich, multimodal retrieval experiences for agent-based RAG applications. Supported data sources include Blob Storage, NL2SQL Metadata, and SharePoint. The service selects chunkers based on file extension, such as DocAnalysisChunker for PDF files, OCR for image files, LangChainChunker for text-based files, TranscriptionChunker for video transcripts, and SpreadsheetChunker for spreadsheets. Deployment requires provisioning infrastructure and assigning specific roles to the user or service principal.

Vitron

Vitron is a unified pixel-level vision LLM designed for comprehensive understanding, generating, segmenting, and editing static images and dynamic videos. It addresses challenges in existing vision LLMs such as superficial instance-level understanding, lack of unified support for images and videos, and insufficient coverage across various vision tasks. The tool requires Python >= 3.8, Pytorch == 2.1.0, and CUDA Version >= 11.8 for installation. Users can deploy Gradio demo locally and fine-tune their models for specific tasks.

For similar tasks

all-rag-techniques

This repository provides a hands-on approach to Retrieval-Augmented Generation (RAG) techniques, simplifying advanced concepts into understandable implementations using Python libraries like openai, numpy, and matplotlib. It offers a collection of Jupyter Notebooks with concise explanations, step-by-step implementations, code examples, evaluations, and visualizations for various RAG techniques. The goal is to make RAG more accessible and demystify its workings for educational purposes.

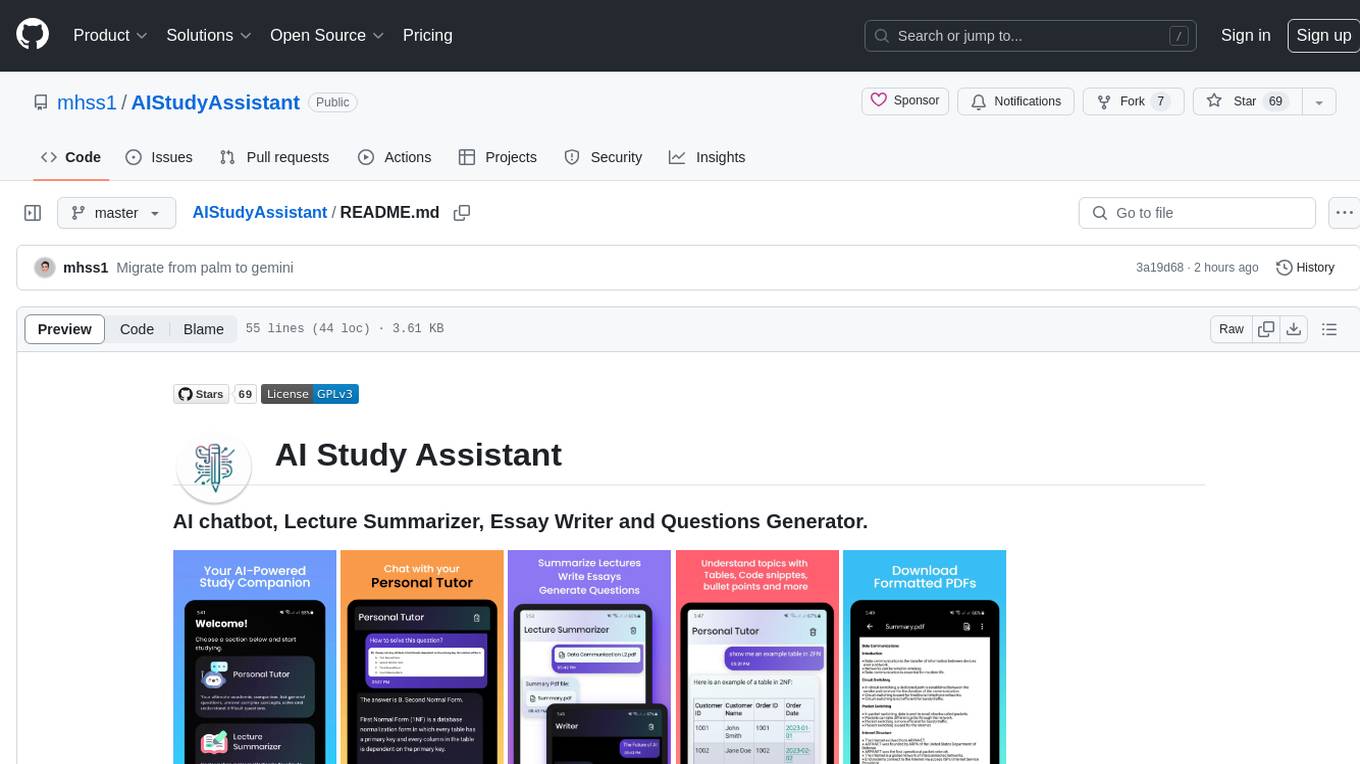

AIStudyAssistant

AI Study Assistant is an app designed to enhance learning experience and boost academic performance. It serves as a personal tutor, lecture summarizer, writer, and question generator powered by Google PaLM 2. Features include interacting with an AI chatbot, summarizing lectures, generating essays, and creating practice questions. The app is built using 100% Kotlin, Jetpack Compose, Clean Architecture, and MVVM design pattern, with technologies like Ktor, Room DB, Hilt, and Kotlin coroutines. AI Study Assistant aims to provide comprehensive AI-powered assistance for students in various academic tasks.

turboseek

TurboSeek is an open source AI search engine powered by Together.ai. It utilizes Next.js with Tailwind for the app router, Together AI for LLM inference, Mixtral 8x7B & Llama-3 for the LLMs, Bing for the search API, Helicone for observability, and Plausible for website analytics. The tool takes a user's question, queries the Bing search API for top results, scrapes text from the links, sends the question and context to Mixtral-8x7B, and generates follow-up questions using Llama-3-8B. Future tasks include optimizing source parsing, ignoring video links, adding regeneration option, ensuring proper citations, enabling sharing, implementing scrolling during answers, fixing hard refresh, adding caching with upstash redis, incorporating advanced RAG techniques, and adding authentication with Clerk and postgres/prisma.

diffbot-kg-chatbot

This project is an end-to-end pipeline for constructing knowledge graphs from news articles using Neo4j and Diffbot. It also utilizes OpenAI LLMs to generate questions based on the knowledge graph. The application offers news monitoring capabilities, data extraction from text, and organization/personal information enrichment. Users can interact with the chatbot interface to ask questions and receive answers based on the knowledge graph.

easy-dataset

Easy Dataset is a specialized application designed to streamline the creation of fine-tuning datasets for Large Language Models (LLMs). It offers an intuitive interface for uploading domain-specific files, intelligently splitting content, generating questions, and producing high-quality training data for model fine-tuning. With Easy Dataset, users can transform domain knowledge into structured datasets compatible with all OpenAI-format compatible LLM APIs, making the fine-tuning process accessible and efficient.

DeepTutor

DeepTutor is an AI-powered personalized learning assistant that offers a suite of modules for massive document knowledge Q&A, interactive learning visualization, knowledge reinforcement with practice exercise generation, deep research, and idea generation. The tool supports multi-agent collaboration, dynamic topic queues, and structured outputs for various tasks. It provides a unified system entry for activity tracking, knowledge base management, and system status monitoring. DeepTutor is designed to streamline learning and research processes by leveraging AI technologies and interactive features.

godoos

GodoOS is an efficient intranet office operating system that includes various office tools such as word/excel/ppt/pdf/internal chat/whiteboard/mind map, with native file storage support. The platform interface mimics the Windows style, making it easy to operate while maintaining low resource consumption and high performance. It automatically connects to intranet users without registration, enabling instant communication and file sharing. The flexible and highly configurable app store allows for unlimited expansion.

dxos

DXOS is an open-source platform that offers Composer, an extensible app platform for developers to organize and sync their knowledge across devices. It enables real-time or offline collaboration with others, emphasizing a local-first and private approach. The DXOS SDK facilitates peer-to-peer collaboration for local-first apps without relying on central sync servers.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it has an OpenAPI interface that is easy to integrate with existing infrastructure (e.g., Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.