AI-Makerspace

AI Makerspace: Blueprints for developing AI applications with state-of-the-art technologies.

Stars: 63

AI Makerspace is a repository supporting rapid prototyping of user-centered AI applications. It provides blueprint templates for using state-of-the-art technologies in AI use cases. The repository guides innovation teams through AI Design Thinking/Engineering, Lean AI Engineering, Explainable AI, Responsible and Ethical AI, and User Experience of machine learning products. It also focuses on developing scalable coaching solutions through the Agentic AI Coaching project.

README:

Advances in innovation of AI tools and technologies enable us to solve problems faster, easier, and more effectively. As the technologies grow in complexity and diversity, we experience a growing need for guiding innovation teams through rapid prototyping and user-centred development.

AI Makerspace repository is a hub of blueprint templates for using state-of-the-art technologies in the rapid prototyping of AI use cases. Working closely with cross-functional innovation teams at Digital Product School of UnternehmerTUM, we guide the teams through AI Design Thinking/Engineering, Lean AI Engineering, Explainable AI (XAI), Responsible and Ethical AI, as well as User Experience (UX) of machine learning products.

In addition, we are on an exciting journey to develop scalable coaching solutions through our 🚀 Agentic AI Coaching project 🚀, and we warmly invite you to contribute and be a part of this initiative.

![]() Each folder of our GitHub repository is self-explanatory for using the blueprint templates of selected technologies and AI use cases. Here is the list of some of the tools, platforms, and applications that we have explored:

Each folder of our GitHub repository is self-explanatory for using the blueprint templates of selected technologies and AI use cases. Here is the list of some of the tools, platforms, and applications that we have explored:

- HuggingFace

- GKE-Autopilot

- Heroku

- CloudRun

- VertexAI

- FastAPI

- AWS SageMaker

- Tableau

- CrewAI for building multi-agent systems

- Open Source LLM

- OpenAI GPT

- Streamlit

- Gradio

- PyCaret

- Data Version Control

- Docker

- Assembly AI

- PyTorch

- TensorFlow

- Flask

- Microservices

- Agno for building multi-modal agents

- Agentic AI Coaching

- Rapid Prototyping Agent: Tool Research and Use Case Coding Assistant

- Retrieval-Augmented Generation (RAG)

- 3D Maps, AR & 3D Geo AI interactive applications

- Chatbot

- Emotion detection

- Offensive speech detection

- Keyword extraction

- PDF question-answering

- Text translation

- Text auto-completion

- Text to Speech

- Summarization

- Speech recognition

- Image Generation

- Image Caption Generation

- Neural style transfer

- Synthetic data generation

- Accident prediction

💥 In addition, you can find our curated list of resources to help AI prototyping on our Wiki page: Curated AI Resources

📫 For any questions and if you are interested in receiving our exclusive community offers, fill out this application form.

Enjoy & Rock!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-Makerspace

Similar Open Source Tools

AI-Makerspace

AI Makerspace is a repository supporting rapid prototyping of user-centered AI applications. It provides blueprint templates for using state-of-the-art technologies in AI use cases. The repository guides innovation teams through AI Design Thinking/Engineering, Lean AI Engineering, Explainable AI, Responsible and Ethical AI, and User Experience of machine learning products. It also focuses on developing scalable coaching solutions through the Agentic AI Coaching project.

AI-Engineer-Headquarters

AI Engineer Headquarters is a comprehensive learning resource designed to help individuals master scientific methods, processes, algorithms, and systems to build stories and models in the field of Data and AI. The repository provides in-depth content through video sessions and text materials, catering to individuals aspiring to be in the top 1% of Data and AI experts. It covers various topics such as AI engineering foundations, large language models, retrieval-augmented generation, fine-tuning LLMs, reinforcement learning, ethical AI, agentic workflows, and career acceleration. The learning approach emphasizes action-oriented drills and routines, encouraging consistent effort and dedication to excel in the AI field.

awesome-generative-ai-guide

This repository serves as a comprehensive hub for updates on generative AI research, interview materials, notebooks, and more. It includes monthly best GenAI papers list, interview resources, free courses, and code repositories/notebooks for developing generative AI applications. The repository is regularly updated with the latest additions to keep users informed and engaged in the field of generative AI.

applied-ai-engineering-samples

The Google Cloud Applied AI Engineering repository provides reference guides, blueprints, code samples, and hands-on labs developed by the Google Cloud Applied AI Engineering team. It contains resources for Generative AI on Vertex AI, including code samples and hands-on labs demonstrating the use of Generative AI models and tools in Vertex AI. Additionally, it offers reference guides and blueprints that compile best practices and prescriptive guidance for running large-scale AI/ML workloads on Google Cloud AI/ML infrastructure.

learn-applied-generative-ai-fundamentals

This repository is part of the Certified Cloud Native Applied Generative AI Engineer program, focusing on Applied Generative AI Fundamentals. It covers prompt engineering, developing custom GPTs, and Multi AI Agent Systems. The course helps in building a strong understanding of generative AI, applying Large Language Models (LLMs) and diffusion models practically. It introduces principles of prompt engineering to work efficiently with AI, creating custom AI models and GPTs using OpenAI, Azure, and Google technologies. It also utilizes open source libraries like LangChain, CrewAI, and LangGraph to automate tasks and business processes.

awesome-LLM-resourses

A comprehensive repository of resources for Chinese large language models (LLMs), including data processing tools, fine-tuning frameworks, inference libraries, evaluation platforms, RAG engines, agent frameworks, books, courses, tutorials, and tips. The repository covers a wide range of tools and resources for working with LLMs, from data labeling and processing to model fine-tuning, inference, evaluation, and application development. It also includes resources for learning about LLMs through books, courses, and tutorials, as well as insights and strategies from building with LLMs.

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

self-learn-llms

Self Learn LLMs is a repository containing resources for self-learning about Large Language Models. It includes theoretical and practical hands-on resources to facilitate learning. The repository aims to provide a clear roadmap with milestones for proper understanding of LLMs. The owner plans to refactor the repository to remove irrelevant content, organize model zoo better, and enhance the learning experience by adding contributors and hosting notes, tutorials, and open discussions.

awesome-ai

Awesome AI is a curated list of artificial intelligence resources including courses, tools, apps, and open-source projects. It covers a wide range of topics such as machine learning, deep learning, natural language processing, robotics, conversational interfaces, data science, and more. The repository serves as a comprehensive guide for individuals interested in exploring the field of artificial intelligence and its applications across various domains.

ianvs

Ianvs is a distributed synergy AI benchmarking project incubated in KubeEdge SIG AI. It aims to test the performance of distributed synergy AI solutions following recognized standards, providing end-to-end benchmark toolkits, test environment management tools, test case control tools, and benchmark presentation tools. It also collaborates with other organizations to establish comprehensive benchmarks and related applications. The architecture includes critical components like Test Environment Manager, Test Case Controller, Generation Assistant, Simulation Controller, and Story Manager. Ianvs documentation covers quick start, guides, dataset descriptions, algorithms, user interfaces, stories, and roadmap.

awesome-transformer-nlp

This repository contains a hand-curated list of great machine (deep) learning resources for Natural Language Processing (NLP) with a focus on Generative Pre-trained Transformer (GPT), Bidirectional Encoder Representations from Transformers (BERT), attention mechanism, Transformer architectures/networks, Chatbot, and transfer learning in NLP.

awesome-llms-fine-tuning

This repository is a curated collection of resources for fine-tuning Large Language Models (LLMs) like GPT, BERT, RoBERTa, and their variants. It includes tutorials, papers, tools, frameworks, and best practices to aid researchers, data scientists, and machine learning practitioners in adapting pre-trained models to specific tasks and domains. The resources cover a wide range of topics related to fine-tuning LLMs, providing valuable insights and guidelines to streamline the process and enhance model performance.

Sarvadnya

Sarvadnya is a repository focused on interfacing custom data using Large Language Models (LLMs) through Proof-of-Concepts (PoCs) like Retrieval Augmented Generation (RAG) and Fine-Tuning. It aims to enable domain adaptation for LLMs to answer on user-specific corpora. The repository also covers topics such as Indic-languages models, 3D World Simulations, Knowledge Graphs Generation, Signal Processing, Drones, UAV Image Processing, and Floor Plan Segmentation. It provides insights into building chatbots of various modalities, preparing videos, and creating content for different platforms like Medium, LinkedIn, and YouTube. The tech stacks involved range from enterprise solutions like Google Doc AI and Microsoft Azure Language AI Services to open-source tools like Langchain and HuggingFace.

amazon-sagemaker-generativeai

Repository for training and deploying Generative AI models, including text-text, text-to-image generation, prompt engineering playground and chain of thought examples using SageMaker Studio. The tool provides a platform for users to experiment with generative AI techniques, enabling them to create text and image outputs based on input data. It offers a range of functionalities for training and deploying models, as well as exploring different generative AI applications.

awesome-generative-ai

A curated list of Generative AI projects, tools, artworks, and models

oreilly-retrieval-augmented-gen-ai

This repository focuses on Retrieval-Augmented Generation (RAG) and Large Language Models (LLMs). It provides code and resources to augment LLMs with real-time data for dynamic, context-aware applications. The content covers topics such as semantic search, fine-tuning embeddings, building RAG chatbots, evaluating LLMs, and using knowledge graphs in RAG. Prerequisites include Python skills, knowledge of machine learning and LLMs, and introductory experience with NLP and AI models.

For similar tasks

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

nlux

nlux is an open-source Javascript and React JS library that makes it super simple to integrate powerful large language models (LLMs) like ChatGPT into your web app or website. With just a few lines of code, you can add conversational AI capabilities and interact with your favourite LLM.

generative-ai-go

The Google AI Go SDK enables developers to use Google's state-of-the-art generative AI models (like Gemini) to build AI-powered features and applications. It supports use cases like generating text from text-only input, generating text from text-and-images input (multimodal), building multi-turn conversations (chat), and embedding.

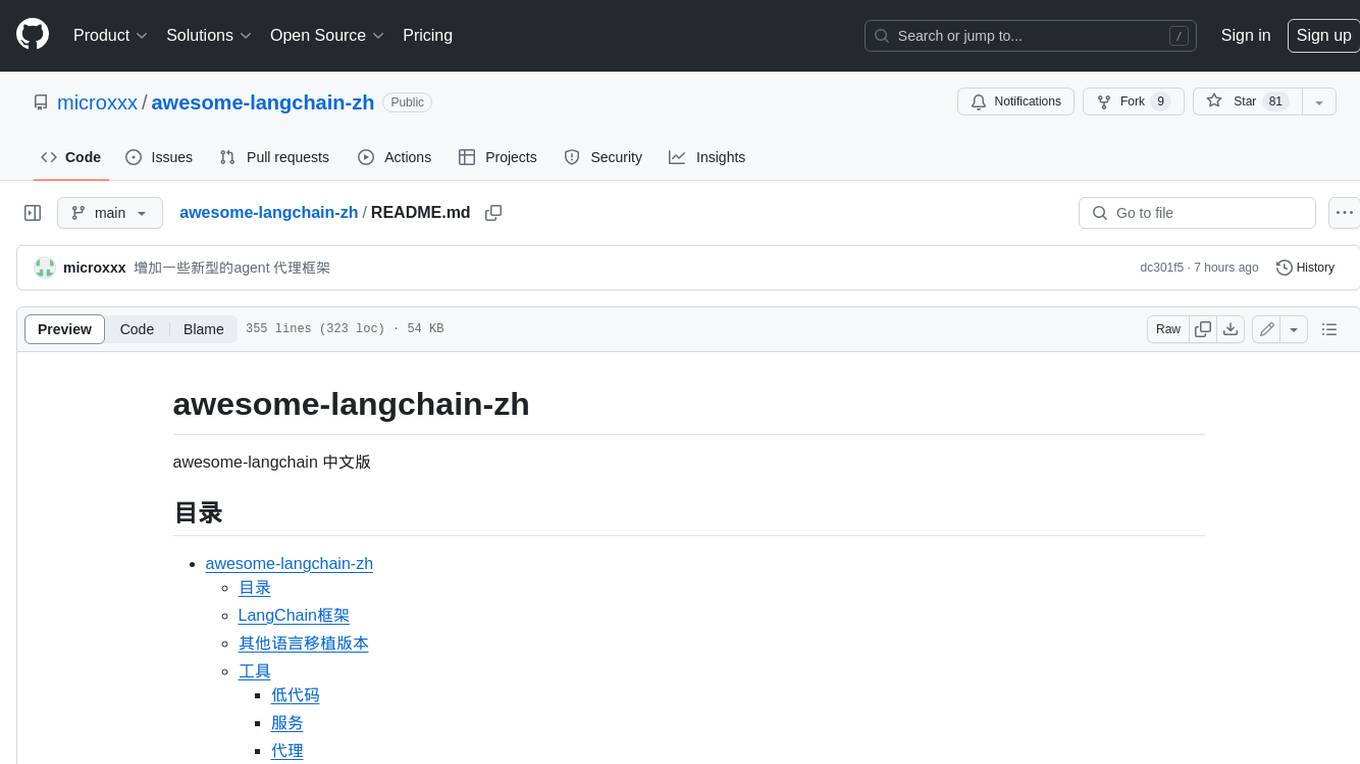

awesome-langchain-zh

The awesome-langchain-zh repository is a collection of resources related to LangChain, a framework for building AI applications using large language models (LLMs). The repository includes sections on the LangChain framework itself, other language ports of LangChain, tools for low-code development, services, agents, templates, platforms, open-source projects related to knowledge management and chatbots, as well as learning resources such as notebooks, videos, and articles. It also covers other LLM frameworks and provides additional resources for exploring and working with LLMs. The repository serves as a comprehensive guide for developers and AI enthusiasts interested in leveraging LangChain and LLMs for various applications.

Large-Language-Model-Notebooks-Course

This practical free hands-on course focuses on Large Language models and their applications, providing a hands-on experience using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as Chatbots, Code Generation, Vector databases, LangChain, Fine Tuning, PEFT Fine Tuning, Soft Prompt tuning, LoRA, QLoRA, Evaluate Models, Knowledge Distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

awesome-local-llms

The 'awesome-local-llms' repository is a curated list of open-source tools for local Large Language Model (LLM) inference, covering both proprietary and open weights LLMs. The repository categorizes these tools into LLM inference backend engines, LLM front end UIs, and all-in-one desktop applications. It collects GitHub repository metrics as proxies for popularity and active maintenance. Contributions are encouraged, and users can suggest additional open-source repositories through the Issues section or by running a provided script to update the README and make a pull request. The repository aims to provide a comprehensive resource for exploring and utilizing local LLM tools.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.