Legacy-Modernization-Agents

AI-powered COBOL to Java Quarkus and C# .NET modernization agents built on Microsoft Agent Framework. Automates legacy mainframe code modernization with intelligent agents for analysis, conversion, and dependency mapping.


This open source migration framework was developed to demonstrate the capabilities of AI agents for converting legacy code such as COBOL to Java or C# .NET. Each agent has a persona that can be edited depending on the desired outcome. The migration uses Microsoft Agent Framework with a dual-API architecture (Responses API + Chat Completions API) to analyze COBOL code and its dependencies, then converts it to either Java Quarkus or C# .NET (user's choice).

The web portal provides real-time visualization of migration progress, dependency graphs, and AI-powered Q&A.

[!TIP] Two ways to use this framework:

| Command | What it does |
|---|---|
| ./doctor.sh run | Run a full migration: analyze COBOL, convert to Java/C#, generate reports, and launch the portal |
| ./doctor.sh portal | Open the portal only: browse previous migration results, dependency graphs, and chat with your codebase at http://localhost:5028 |

Both commands handle all configuration, dependency checks, and service startup automatically.

- Quick Start

- Usage: doctor.sh

- Reverse Engineering Reports

- Folder Structure

- Customizing Agent Behavior

- File Splitting & Naming

- Architecture

- Smart Chunking & Token Strategy

- Build & Run

| Requirement | Version | Notes |
|---|---|---|
| .NET SDK | 10.0+ | Download |
| Docker Desktop | Latest | Must be running for Neo4j |
| AI Endpoint | N/A | Endpoint + API key, or az login (see below) |

This project supports two Azure OpenAI API types with specific models:

| API Type | Model Example | Used For | Interface |
|---|---|---|---|
| Responses API | gpt-5.1-codex-mini | Code generation (agents) | ResponsesApiClient |
| Chat Completions API | gpt-5.1-chat | Reports, portal chat | IChatClient |

⚠️ Want to use different models? You can swap models, but you may need to update the API calls:

- Codex models → Responses API (ResponsesApiClient)
- Chat models → Chat Completions API (IChatClient)

See Agents/Infrastructure/ for the API client implementations.

[!IMPORTANT] Azure OpenAI Quota Recommendation: 1M+ TPM

For optimal performance, we recommend setting your Azure OpenAI model quota to 1,000,000 tokens per minute (TPM) or higher.

| Quota | Experience |
|---|---|
| 300K TPM | Works, but slower with throttling pauses |
| 1M TPM | Recommended: smooth parallel processing |

Higher quota = faster migration. The tool processes multiple files and chunks in parallel, so more TPM means less waiting.

To increase quota: Azure Portal → Your OpenAI Resource → Model deployments → Edit → Tokens per Minute

To avoid throttling (429 errors), use this formula to calculate safe parallel job limits:

$$
\text{MaxParallelJobs} = \frac{\text{TPM} \times \text{SafetyFactor}}{\text{TokensPerRequest} \times \text{RequestsPerMinute}}
$$

Where:

- TPM = your Azure quota (tokens per minute)
- SafetyFactor = 0.7 (recommended, see below)
- TokensPerRequest = input + output tokens (~30,000 for code conversion)
- RequestsPerMinute = 60 / SecondsPerRequest (requests a single job issues per minute)

Understanding SafetyFactor (0.7 = 70%):

The SafetyFactor reserves headroom below your quota limit to handle:

| Why You Need Headroom | What Happens Without It |
|---|---|
| Token estimation variance | AI responses vary in length: a 25K estimate might actually be 35K |
| Burst protection | Multiple requests completing simultaneously can spike token usage |
| Retry overhead | Failed requests that retry consume additional tokens |
| Shared quota | Other applications using the same Azure deployment |

| SafetyFactor | Use Case |
|---|---|
| 0.5 (50%) | Shared deployment, conservative, many retries expected |
| 0.7 (70%) | Recommended: good balance of speed and safety |
| 0.85 (85%) | Dedicated deployment, stable workloads |
| 0.95+ | |

Example Calculation:

| Your Quota | Tokens/Request | Request Time | Safe Parallel Jobs |
|---|---|---|---|
| 300K TPM | 30K | 30 sec | (300,000 × 0.7) / (30,000 × 2) = 3-4 jobs |
| 1M TPM | 30K | 30 sec | (1,000,000 × 0.7) / (30,000 × 2) = 11-12 jobs |
| 2M TPM | 30K | 30 sec | (2,000,000 × 0.7) / (30,000 × 2) = 23 jobs |
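To sanity-check the table, the formula can be evaluated directly. This is a standalone Python sketch; max_parallel_jobs is a hypothetical helper, not part of this project, and it assumes each job issues requests back to back at a fixed latency.

```python
def max_parallel_jobs(tpm: int, safety_factor: float,
                      tokens_per_request: int, seconds_per_request: float) -> float:
    """(TPM x SafetyFactor) / (TokensPerRequest x RequestsPerMinute)."""
    requests_per_minute = 60 / seconds_per_request  # requests one job issues per minute
    tokens_per_job_per_minute = tokens_per_request * requests_per_minute
    return (tpm * safety_factor) / tokens_per_job_per_minute


for quota in (300_000, 1_000_000, 2_000_000):
    jobs = max_parallel_jobs(quota, 0.7, 30_000, 30)
    # Round down so the configured value never exceeds the safe budget.
    print(f"{quota:>9,} TPM -> {jobs:.2f} jobs (configure {int(jobs)})")
```

Rounding down is a deliberate choice in this sketch: rounding up would push steady-state usage past the headroom that SafetyFactor is meant to reserve.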

Configure in appsettings.json:

```jsonc
{
  "ChunkingSettings": {
    "MaxParallelChunks": 6,          // Parallel code conversion jobs
    "MaxParallelAnalysis": 6,        // Parallel analysis jobs
    "RateLimitSafetyFactor": 0.7,    // 70% of quota
    "TokenBudgetPerMinute": 300000   // Match your Azure TPM quota
  }
}
```

💡 Rule of thumb: With 1M TPM, use MaxParallelChunks: 6 for safe operation. Scale proportionally with your quota.

This project uses Microsoft Agent Framework (Microsoft.Agents.AI.*), not Semantic Kernel.

```xml
<!-- From CobolToQuarkusMigration.csproj -->
<PackageReference Include="Microsoft.Agents.AI.AzureAI" Version="1.0.0-preview.*" />
<PackageReference Include="Microsoft.Agents.AI.OpenAI" Version="1.0.0-preview.*" />
<PackageReference Include="Microsoft.Extensions.AI" Version="10.0.1" />
```

Why Agent Framework over Semantic Kernel?

- Simpler IChatClient abstraction
- Native support for both the Responses API and the Chat Completions API, which helps keep the project future-proof as LLM APIs evolve
- Better streaming and async patterns
- Lighter dependency footprint

```bash
# 1. Clone and enter
git clone https://github.com/Azure-Samples/Legacy-Modernization-Agents.git
cd Legacy-Modernization-Agents

# 2. Configure Azure OpenAI
cp Config/ai-config.local.env.example Config/ai-config.local.env
# Edit: _MAIN_ENDPOINT (required), _CODE_MODEL / _CHAT_MODEL (optional)
# Auth: use 'az login' (recommended) OR set _MAIN_API_KEY
# See azlogin-auth-guide.md for Entra ID setup details

# 3. Start Neo4j (dependency graph storage)
docker-compose up -d neo4j

# 4. Build
dotnet build

# 5. Run the migration through doctor.sh (see the next section);
#    use ./doctor.sh portal instead to only load the portal
./doctor.sh run
```

Always use ./doctor.sh run to run migrations, not dotnet run directly.

```bash
./doctor.sh run           # Full migration: analyze → convert → launch portal
./doctor.sh portal        # Launch web portal only (http://localhost:5028)
./doctor.sh reverse-eng   # Extract business logic docs (no code conversion)
./doctor.sh convert-only  # Code conversion only (skip analysis)
```

When you run ./doctor.sh run, you'll be prompted:

```text
╔══════════════════════════════════════════════════════════════╗
║ COBOL Migration - Target Language Selection ║
╚══════════════════════════════════════════════════════════════╝
Select target language:
  [1] Java Quarkus
  [2] C# .NET
Enter choice (1-2):
```

After migration completes:

```text
Migration complete! Generate report? (Y/n): Y
Launch web portal? (Y/n): Y
```

After selecting your action and target language, doctor.sh prompts for a speed profile that controls how much reasoning effort the AI model spends per file. This applies to migrations, reverse engineering, and conversion-only runs.

```text
Speed Profile
======================================
  1) TURBO
  2) FAST
  3) BALANCED (default)
  4) THOROUGH
Enter choice (1-4) [default: 3]:
```

| Profile | Reasoning Effort | Max Output Tokens | Best For |
|---|---|---|---|
| TURBO | Low on ALL files, no exceptions | 65,536 | Testing, smoke runs. Speed from low reasoning effort, not token starvation. |
| FAST | Low on most, medium on complex | 32,768 | Quick iterations, proof-of-concept runs. Good balance of speed and quality. |
| BALANCED | Content-aware (low/medium/high based on file complexity) | 100,000 | Production migrations. Simple files get low effort, complex files get high effort. |
| THOROUGH | Medium-to-high on all files | 100,000 | Critical codebases where accuracy matters more than speed. Highest token cost. |

The speed profile works by setting environment variables that override the three-tier content-aware reasoning system configured in appsettings.json. No C# code changes are needed — the existing Program.cs environment variable override mechanism handles everything at startup.
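The profile table can be read as a lookup from (profile, complexity tier) to reasoning effort. The Python sketch below encodes that reading for illustration only: PROFILES and reasoning_effort are hypothetical, the real profiles are applied by doctor.sh through environment variables not named here, and treating "complex" as the HIGH tier (FAST) and "medium-to-high" as medium for LOW files (THOROUGH) are assumptions.

```python
from typing import Dict, NamedTuple


class Profile(NamedTuple):
    max_output_tokens: int
    effort_by_tier: Dict[str, str]  # complexity tier -> reasoning effort


# Hypothetical encoding of the speed-profile table (tier mappings are assumptions).
PROFILES = {
    "TURBO": Profile(65_536, {"low": "low", "medium": "low", "high": "low"}),
    "FAST": Profile(32_768, {"low": "low", "medium": "low", "high": "medium"}),
    "BALANCED": Profile(100_000, {"low": "low", "medium": "medium", "high": "high"}),
    "THOROUGH": Profile(100_000, {"low": "medium", "medium": "high", "high": "high"}),
}


def reasoning_effort(profile: str, tier: str) -> str:
    """Reasoning effort a profile would assign to a file of the given complexity tier."""
    return PROFILES[profile.upper()].effort_by_tier[tier.lower()]


for name in PROFILES:
    print(name, [reasoning_effort(name, t) for t in ("low", "medium", "high")])
```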

```bash
./doctor.sh                  # Health check - verify configuration
./doctor.sh test             # Run system tests
./doctor.sh setup            # Interactive setup wizard
./doctor.sh chunking-health  # Check smart chunking configuration
```

Reverse Engineering (RE) extracts business knowledge from COBOL code before any conversion happens. This is the "understand first" phase.

The BusinessLogicExtractorAgent analyzes COBOL source code and produces human-readable documentation that captures:

| Output | Description | Example |
|---|---|---|
| Business Purpose | What problem does this program solve? | "Processes monthly customer billing statements" |
| Use Cases | CRUD operations identified | CREATE customer, UPDATE balance, VALIDATE account |
| Business Rules | Validation logic as requirements | "Account number must be 10 digits" |
| Data Dictionary | Field meanings in business terms | WS-CUST-BAL → "Customer Current Balance" |
| Dependencies | What other programs/copybooks it needs | CALLS: PAYMENT.cbl, COPIES: COMMON.cpy |

| Benefit | How |
|---|---|
| Knowledge Preservation | Documents tribal knowledge before COBOL experts retire |
| Migration Planning | Understand complexity before estimating conversion effort |
| Validation | Business team can verify extracted rules match expectations |
| Onboarding | New developers understand legacy systems without reading COBOL |
| Compliance | Audit trail of business rules for regulatory requirements |

```bash
./doctor.sh reverse-eng  # Extract business logic (no code conversion)
```

This generates output/reverse-engineering-details.md containing all extracted business knowledge.

```markdown
# Reverse Engineering Report: CUSTOMER.cbl

## Business Purpose
Manages customer account lifecycle including creation,
balance updates, and account closure with audit trail.

## Use Cases

### Use Case 1: Create Customer Account
**Trigger:** New customer registration request
**Key Steps:**
1. Validate customer data (name, address, tax ID)
2. Generate unique account number
3. Initialize balance to zero
4. Write audit record

### Use Case 2: Update Balance
**Trigger:** Transaction posted to account
**Business Rules:**
- Balance cannot go negative without overdraft flag
- Transactions > $10,000 require manager approval code

## Business Rules
| Rule ID | Description | Field |
|---------|-------------|-------|
| BR-001 | Account number must be exactly 10 digits | WS-ACCT-NUM |
| BR-002 | Customer name is required (non-blank) | WS-CUST-NAME |
```

Add business terms to Data/glossary.json for better translations:

```json
{
  "terms": [
    { "term": "WS-CUST-BAL", "translation": "Customer Current Balance" },
    { "term": "CALC-INT-RT", "translation": "Calculate Interest Rate" },
    { "term": "PRCS-PMT", "translation": "Process Payment" }
  ]
}
```

The extractor uses these translations to produce more readable reports.
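How the extractor consumes the glossary internally is not described here. As an illustration only, the lookup can be pictured as a case-insensitive dictionary keyed by COBOL name; load_glossary and describe are hypothetical helpers, and the JSON string stands in for the contents of Data/glossary.json.

```python
import json

# Stand-in for the contents of Data/glossary.json (same shape as the example above).
GLOSSARY_JSON = """
{
  "terms": [
    { "term": "WS-CUST-BAL", "translation": "Customer Current Balance" },
    { "term": "CALC-INT-RT", "translation": "Calculate Interest Rate" },
    { "term": "PRCS-PMT", "translation": "Process Payment" }
  ]
}
"""


def load_glossary(text: str) -> dict:
    """Index glossary translations by upper-cased COBOL name."""
    return {entry["term"].upper(): entry["translation"] for entry in json.loads(text)["terms"]}


def describe(field: str, glossary: dict) -> str:
    """Business name for a field, falling back to the raw COBOL name."""
    return glossary.get(field.upper(), field)


glossary = load_glossary(GLOSSARY_JSON)
print(describe("ws-cust-bal", glossary))  # Customer Current Balance
print(describe("WS-ACCT-NUM", glossary))  # WS-ACCT-NUM (no glossary entry)
```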

```text
Legacy-Modernization-Agents/
├── source/          # ⬅️ DROP YOUR COBOL FILES HERE
│   ├── CUSTOMER.cbl
│   ├── PAYMENT.cbl
│   └── COMMON.cpy
│
├── output/          # ⬅️ GENERATED CODE APPEARS HERE
│   ├── java/        # Java Quarkus output
│   │   └── com/example/generated/
│   └── csharp/      # C# .NET output
│       └── Generated/
│
├── Agents/          # AI agent implementations
├── Config/          # Configuration files
├── Data/            # SQLite database (migration.db)
└── Logs/            # Execution logs
```

Workflow:

1. Drop COBOL files (.cbl, .cpy) into source/
2. Run ./doctor.sh run
3. Choose target language (Java or C#)
4. Collect generated code from output/java/ or output/csharp/

Each agent has a system prompt that defines its behavior. To customize output (e.g., DDD patterns, specific frameworks), edit these files:

| Agent | File | Line | What It Does |
|---|---|---|---|
| CobolAnalyzerAgent | Agents/CobolAnalyzerAgent.cs | ~116 | Extracts structure, variables, paragraphs, SQL |
| BusinessLogicExtractorAgent | Agents/BusinessLogicExtractorAgent.cs | ~44 | Extracts user stories, features, business rules |
| JavaConverterAgent | Agents/JavaConverterAgent.cs | ~66 | Converts to Java Quarkus |
| CSharpConverterAgent | Agents/CSharpConverterAgent.cs | ~64 | Converts to C# .NET |
| DependencyMapperAgent | Agents/DependencyMapperAgent.cs | ~129 | Maps CALL/COPY/PERFORM relationships |
| ChunkAwareJavaConverter | Agents/ChunkAwareJavaConverter.cs | ~268 | Large file chunked conversion (Java) |
| ChunkAwareCSharpConverter | Agents/ChunkAwareCSharpConverter.cs | ~269 | Large file chunked conversion (C#) |

To make the Java converter generate Domain-Driven Design code, edit Agents/JavaConverterAgent.cs around line 66:

```csharp
var systemPrompt = @"
You are an expert in converting COBOL programs to Java with Quarkus framework.

DOMAIN-DRIVEN DESIGN REQUIREMENTS:
- Identify bounded contexts from COBOL program sections
- Create Aggregate Roots for main business entities
- Use Value Objects for immutable data (PIC X fields)
- Implement Repository pattern for data access
- Create Domain Events for state changes
- Separate Application Services from Domain Services

OUTPUT STRUCTURE:
- domain/         → Entities, Value Objects, Aggregates
- application/    → Application Services, DTOs
- infrastructure/ → Repositories, External Services
- ports/          → Interfaces (Ports & Adapters)

...existing prompt content...
";
```

Similarly for C#, edit Agents/CSharpConverterAgent.cs.

File splitting is controlled in Config/appsettings.json:

```json
{
  "AssemblySettings": {
    "SplitStrategy": "ClassPerFile",
    "Java": {
      "PackagePrefix": "com.example.generated",
      "ServiceSuffix": "Service"
    },
    "CSharp": {
      "NamespacePrefix": "Generated",
      "ServiceSuffix": "Service"
    }
  }
}
```

| Strategy | Output |
|---|---|
| SingleFile | One large file with all classes |
| ClassPerFile | Default: one file per class (recommended) |
| FilePerChunk | One file per processing chunk |
| LayeredArchitecture | Organized into Services/, Repositories/, Models/ |
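To make the ClassPerFile and LayeredArchitecture rows concrete, the sketch below maps a generated C# class name to an output path. It is a hypothetical helper, not the logic in Models/AssemblySettings.cs: it assumes NamespacePrefix segments become folders under output/csharp/, and it assigns layers purely by class-name suffix (Service, Repository, everything else as a model).

```python
from pathlib import PurePosixPath


def csharp_output_path(class_name: str, strategy: str,
                       namespace_prefix: str = "Generated") -> str:
    """Hypothetical mapping from a generated C# class to its output file path."""
    base = PurePosixPath("output/csharp", *namespace_prefix.split("."))
    if strategy == "LayeredArchitecture":
        # Assumption: the layer is inferred from the class-name suffix.
        if class_name.endswith("Service"):
            base = base / "Services"
        elif class_name.endswith("Repository"):
            base = base / "Repositories"
        else:
            base = base / "Models"
    elif strategy != "ClassPerFile":
        raise ValueError(f"sketch only covers ClassPerFile and LayeredArchitecture, not {strategy!r}")
    return str(base / f"{class_name}.cs")


print(csharp_output_path("PaymentProcessor", "ClassPerFile"))
print(csharp_output_path("CustomerService", "LayeredArchitecture"))
```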

The split logic is in Models/AssemblySettings.cs:

```csharp
public enum FileSplitStrategy
{
    SingleFile,          // All code in one file
    ClassPerFile,        // One file per class (DEFAULT)
    FilePerChunk,        // Preserves chunk boundaries
    LayeredArchitecture  // Service/Repository/Model folders
}
```

Naming strategies are configured in ConversionSettings:

```json
{
  "ConversionSettings": {
    "NamingStrategy": "Hybrid",
    "PreserveLegacyNamesAsComments": true
  }
}
```

| Strategy | Input | Output |
|---|---|---|
| Hybrid | CALCULATE-TOTAL | Business-meaningful name |
| PascalCase | CALCULATE-TOTAL | CalculateTotal |
| camelCase | CALCULATE-TOTAL | calculateTotal |
| Preserve | CALCULATE-TOTAL | CALCULATE_TOTAL |
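The three deterministic strategies in the table are simple string transforms; Hybrid relies on the AI model to choose a business-meaningful name and is not reproduced here. This is an illustrative Python sketch, not the converter's own implementation, and it assumes COBOL names are split only on hyphens.

```python
def to_pascal_case(cobol_name: str) -> str:
    """CALCULATE-TOTAL -> CalculateTotal"""
    return "".join(part.capitalize() for part in cobol_name.split("-") if part)


def to_camel_case(cobol_name: str) -> str:
    """CALCULATE-TOTAL -> calculateTotal"""
    pascal = to_pascal_case(cobol_name)
    return pascal[:1].lower() + pascal[1:]


def to_preserve(cobol_name: str) -> str:
    """CALCULATE-TOTAL -> CALCULATE_TOTAL (hyphens are not legal in Java/C# identifiers)"""
    return cobol_name.replace("-", "_")


for convert in (to_pascal_case, to_camel_case, to_preserve):
    print(f"{convert.__name__}: {convert('CALCULATE-TOTAL')}")
```

With PreserveLegacyNamesAsComments enabled, the original COBOL name would additionally be kept in a comment next to the converted identifier.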

This project uses a dual-database approach for optimal performance, enhanced with Regex-based deep analysis:

```mermaid
flowchart TB
    subgraph INPUT["📁 Input"]
        COBOL["COBOL Files<br/>source/*.cbl, *.cpy"]
    end
    subgraph PROCESS["⚙️ Processing Pipeline"]
        REGEX["Regex / Syntax Parsing<br/>(Deep SQL/Variable Extraction)"]
        AGENTS["🤖 AI Agents<br/>(MS Agent Framework)"]
        ANALYZER["CobolAnalyzerAgent"]
        EXTRACTOR["BusinessLogicExtractor"]
        CONVERTER["Java/C# Converter"]
        MAPPER["DependencyMapper"]
    end
    subgraph STORAGE["💾 Hybrid Storage"]
        SQLITE[("SQLite<br/>Data/migration.db<br/><br/>• Run metadata<br/>• File content<br/>• Raw AI analysis<br/>• Generated code")]
        NEO4J[("Neo4j<br/>bolt://localhost:7687<br/><br/>• Dependencies<br/>• Relationship Graph<br/>• Impact Analysis")]
    end
    subgraph OUTPUT["📦 Output"]
        CODE["Java/C# Code<br/>output/java or output/csharp"]
        PORTAL["Web Portal & MCP Server<br/>localhost:5028"]
    end
    COBOL --> REGEX
    REGEX --> AGENTS
    AGENTS --> ANALYZER
    AGENTS --> EXTRACTOR
    AGENTS --> CONVERTER
    AGENTS --> MAPPER
    ANALYZER --> SQLITE
    EXTRACTOR --> SQLITE
    CONVERTER --> SQLITE
    CONVERTER --> CODE
    MAPPER --> NEO4J
    SQLITE --> PORTAL
    NEO4J --> PORTAL
```

| Aspect | SQLite | Neo4j |
|---|---|---|
| Purpose | Document storage | Relationship mapping |
| Strength | Fast queries, simple setup | Graph traversal, visualization |
| Use Case | "What's in this file?" | "What depends on this file?" |
| Query Style | SQL SELECT | Cypher graph queries |

Together: Fast metadata access + Powerful dependency insights 🚀

The Neo4j dependency graph enables:

- Impact Analysis - "If I change CUSTOMER.cbl, what else breaks?"

- Circular Dependency Detection - Find problematic CALL/COPY cycles

- Critical File Identification - Most-connected files = highest risk

- Migration Planning - Convert files in dependency order

- Visual Understanding - See relationships at a glance in the portal
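In the project these questions are answered with Cypher queries against Neo4j. The logic behind impact analysis and circular dependency detection can still be sketched in memory: impact analysis is reverse reachability over CALL/COPY edges, and cycle detection is a depth-first search that looks for back edges. The edge list and BILLING.cbl are hypothetical sample data, and neither function is part of the project.

```python
from collections import defaultdict, deque

# Hypothetical sample edges: (dependent file, file it CALLs or COPYs).
EDGES = [
    ("CUSTOMER.cbl", "COMMON.cpy"),
    ("PAYMENT.cbl", "COMMON.cpy"),
    ("BILLING.cbl", "CUSTOMER.cbl"),
]


def impacted_by(changed: str, edges) -> set:
    """Impact analysis: every file that directly or transitively depends on `changed`."""
    dependents = defaultdict(set)
    for src, dst in edges:
        dependents[dst].add(src)
    seen, queue = set(), deque([changed])
    while queue:
        for parent in dependents[queue.popleft()]:
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


def has_cycle(edges) -> bool:
    """Cycle detection: DFS that reports an edge back to a node still on the stack."""
    graph = defaultdict(list)
    for src, dst in edges:
        graph[src].append(dst)
    WHITE, GREY, BLACK = 0, 1, 2
    color = defaultdict(int)  # every node starts WHITE

    def visit(node) -> bool:
        color[node] = GREY
        for nxt in graph[node]:
            if color[nxt] == GREY or (color[nxt] == WHITE and visit(nxt)):
                return True
        color[node] = BLACK
        return False

    return any(color[n] == WHITE and visit(n) for n in list(graph))


print(sorted(impacted_by("COMMON.cpy", EDGES)))
print(has_cycle(EDGES), has_cycle(EDGES + [("COMMON.cpy", "BILLING.cbl")]))
```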

The migration follows a strict Deep Code Analysis pipeline:

```mermaid
sequenceDiagram
    participant U as User
    participant O as Orchestrator
    participant AA as Analyzer Agent
    participant DA as Dependency Agent
    participant SQ as SQLite
    participant CA as Converter Agent
    U->>O: Run "analyze" (Step 1)
    rect rgb(240, 248, 255)
        Note over O, SQ: 1. Deep Analysis Phase
        O->>O: Determine File Type<br/>(Program vs Copybook)
        O->>O: Regex Parse (SQL, Variables)
        O->>SQ: Store raw metadata
        O->>AA: Analyze Structure & Logic
        AA->>SQ: Save Analysis Result
    end
    rect rgb(255, 240, 245)
        Note over O, SQ: 2. Dependency Phase
        U->>O: Run "dependencies" (Step 2)
        O->>DA: Resolve Calls/Includes
        DA->>SQ: Read definitions
        DA->>SQ: Write graph nodes
    end
    rect rgb(240, 255, 240)
        Note over O, SQ: 3. Conversion Phase
        U->>O: Run "convert" (Step 3)
        O->>SQ: Fetch analysis & deps
        O->>CA: Generate Modern Code
        CA->>SQ: Save generated code
    end
```

Portal Features:

- ✅ Dark theme with modern UI

- ✅ Three-panel layout (resources/chat/graph)

- ✅ AI-powered chat interface

- ✅ Suggestion chips for common queries

- ✅ Interactive dependency graph (zoom/pan/filter)

- ✅ Multi-run queries and comparisons

- ✅ File content analysis with line counts

- ✅ Comprehensive data retrieval guide

- ✅ Enhanced dependency tracking (CALL, COPY, PERFORM, EXEC, READ, WRITE, OPEN, CLOSE)

- ✅ Migration report generation per run

- ✅ Mermaid diagram rendering in documentation

- ✅ Collapsible filter sections for cleaner UI

- ✅ Edge type filtering with color-coded visualization

- ✅ Line number context for all dependencies

Large COBOL files (>3,000 lines or >150K characters) are automatically split at semantic boundaries (DIVISION → SECTION → paragraph) and processed with content-aware reasoning effort. A three-tier complexity scoring system analyzes each file's COBOL patterns (EXEC SQL, CICS, REDEFINES, etc.) to dynamically allocate reasoning effort and output tokens — simple files get fast processing while complex files get thorough analysis.
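The size check, token estimate, tier thresholds, and output clamp in the diagram can be sketched as plain arithmetic. This Python sketch takes the complexity score as an input because the indicator weights are documented in smart-chunking-architecture.md; the function names, the rounding, and the codex/chat switch are assumptions, not the project's TokenHelper code.

```python
import math

# (exclusive upper bound on score, tier, reasoning effort, output multiplier)
TIERS = [
    (5, "LOW", "low", 1.5),
    (15, "MEDIUM", "medium", 2.5),
    (math.inf, "HIGH", "high", 3.5),
]
OUTPUT_LIMITS = {"codex": (32_768, 100_000), "chat": (16_384, 65_536)}


def needs_chunking(source: str) -> bool:
    """Files above 3,000 lines or 150,000 characters go through smart chunking."""
    return len(source.splitlines()) > 3_000 or len(source) > 150_000


def estimate_cobol_tokens(source: str) -> int:
    """COBOL heuristic from the diagram: about 3 characters per token."""
    return math.ceil(len(source) / 3.0)


def plan_output(source: str, complexity_score: float, api: str = "codex"):
    """Return (tier, reasoning effort, clamped max output tokens)."""
    input_tokens = estimate_cobol_tokens(source)
    for upper, tier, effort, multiplier in TIERS:
        if complexity_score < upper:
            break  # the last bound is infinite, so a tier is always chosen
    low, high = OUTPUT_LIMITS[api]
    estimated = int(input_tokens * multiplier)
    return tier, effort, min(max(estimated, low), high)


print(plan_output("X" * 30_000, complexity_score=3))   # ('LOW', 'low', 32768)
print(plan_output("X" * 60_000, complexity_score=20))  # ('HIGH', 'high', 70000)
```

Note how the minimum clamp dominates for small files: a simple 30,000-character file would only need about 15,000 output tokens, but the Codex floor raises it to 32,768.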

```mermaid
flowchart TD
    subgraph INPUT["📥 FILE INTAKE"]
        A[COBOL Source File] --> B{File Size Check}
        B -->|"≤ 3,000 lines<br>≤ 150,000 chars"| C[Single-File Processing]
        B -->|"> 3,000 lines<br>> 150,000 chars"| D[Smart Chunking Required]
    end
    subgraph TOKEN_EST["🔢 TOKEN ESTIMATION"]
        C --> E[TokenHelper.EstimateCobolTokens]
        D --> E
        E -->|"COBOL: chars ÷ 3.0"| F[Estimated Input Tokens]
        E -->|"General: chars ÷ 3.5"| F
    end
    subgraph COMPLEXITY["🎯 THREE-TIER COMPLEXITY SCORING"]
        F --> G[Complexity Score Calculation]
        G -->|"Σ regex×weight + density bonuses"| H{Score Threshold}
        H -->|"< 5"| I["🟢 LOW<br>effort: low<br>multiplier: 1.5×"]
        H -->|"5 – 14"| J["🟡 MEDIUM<br>effort: medium<br>multiplier: 2.5×"]
        H -->|"≥ 15"| K["🔴 HIGH<br>effort: high<br>multiplier: 3.5×"]
    end
    subgraph OUTPUT_CALC["📐 OUTPUT TOKEN CALCULATION"]
        I --> L[estimatedOutput = input × multiplier]
        J --> L
        K --> L
        L --> M["clamp(estimated, minTokens, maxTokens)"]
        M -->|"Codex: 32,768 – 100,000"| N[Final maxOutputTokens]
        M -->|"Chat: 16,384 – 65,536"| N
    end
    subgraph CHUNKING["✂️ SMART CHUNKING"]
        D --> O[CobolAdapter.IdentifySemanticUnits]
        O --> P[Divisions / Sections / Paragraphs]
        P --> Q[SemanticUnitChunker.ChunkFileAsync]
        Q --> R{Chunking Decision}
        R -->|"≤ MaxLinesPerChunk"| S[Single Chunk]
        R -->|"Semantic units found"| T["Semantic Boundary Split<br>Priority: DIVISION > SECTION > Paragraph"]
        R -->|"No units / oversized units"| U["Line-Based Fallback<br>overlap: 300 lines"]
    end
    subgraph CONTEXT["📋 CONTEXT WINDOW MANAGEMENT"]
        T --> V[ChunkContextManager]
        U --> V
        S --> V
        V --> W["Full Detail Window<br>(last 3 chunks)"]
        V --> X["Compressed History<br>(older → 30% size)"]
        V --> Y["Cross-Chunk State<br>signatures + type mappings"]
        W --> Z[ChunkContext]
        X --> Z
        Y --> Z
    end
    subgraph RATE_LIMIT["⏱️ DUAL RATE LIMITING"]
        direction TB
        Z --> AA["System A: RateLimiter<br>(Token Bucket + Semaphore)"]
        Z --> AB["System B: RateLimitTracker<br>(Sliding Window TPM/RPM)"]
        AA --> AC{Capacity Check}
        AB --> AC
        AC -->|"Budget: 300K TPM × 0.7"| AD[Wait / Proceed]
        AC -->|"Concurrency: max 3 parallel"| AD
        AC -->|"Stagger: 2,000ms between workers"| AD
    end
    subgraph API_CALL["🤖 API CALL + ESCALATION"]
        AD --> AE[Azure OpenAI Responses API]
        AE --> AF{Response Status}
        AF -->|"Complete"| AG[✅ Success]
        AF -->|"Reasoning Exhaustion<br>reasoning ≥ 90% of output"| AH["Escalation Loop<br>① Double maxTokens<br>② Promote effort<br>③ Thrash guard"]
        AH -->|"Max 2 retries"| AE
        AH -->|"All retries failed"| AI["Adaptive Re-Chunking<br>Split at semantic midpoint<br>50-line overlap"]
        AI --> AE
        AF -->|"429 Rate Limited"| AJ["Exponential Backoff<br>5s → 60s max<br>up to 5 retries"]
        AJ --> AE
    end
    subgraph RECONCILE["🔗 RECONCILIATION"]
        AG --> AK[Record Chunk Result]
        AK --> AL[Validate Chunk Output]
        AL --> AM{More Chunks?}
        AM -->|Yes| V
        AM -->|No| AN[Reconciliation Pass]
        AN --> AO["Merge Results<br>Resolve forward references<br>Deduplicate imports"]
    end
    subgraph FINAL["📤 FINAL OUTPUT"]
        AO --> AP[Converted Java/C# Code]
        AP --> AQ[Write to Output Directory]
    end
    classDef low fill:#d4edda,stroke:#28a745,color:#000
    classDef medium fill:#fff3cd,stroke:#ffc107,color:#000
    classDef high fill:#f8d7da,stroke:#dc3545,color:#000
    classDef process fill:#d1ecf1,stroke:#17a2b8,color:#000
    classDef rate fill:#e2d5f1,stroke:#6f42c1,color:#000
    class I low
    class J medium
    class K high
    class AA,AB,AC,AD rate
    class AE,AF,AG,AH,AI,AJ process
```

For detailed ASCII diagrams, constants reference tables, and complexity scoring indicator weights, see smart-chunking-architecture.md.
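Two of the retry paths in the chunking diagram reduce to small rules: a 429 triggers exponential backoff from 5 s up to a 60 s cap for at most five retries, and a response counts as reasoning-exhausted when reasoning tokens reach 90% of output tokens, which doubles the token budget. The Python sketch below is an illustrative reading of those diagram labels only; the doubling base of 2, the absence of jitter, and the 100,000-token escalation ceiling are assumptions, and effort promotion and the thrash guard are omitted.

```python
def backoff_delays(base: float = 5.0, cap: float = 60.0, retries: int = 5) -> list:
    """Delays (seconds) before each retry after a 429: doubling from base, capped."""
    return [min(base * 2 ** attempt, cap) for attempt in range(retries)]


def is_reasoning_exhausted(reasoning_tokens: int, output_tokens: int) -> bool:
    """Reasoning consumed at least 90% of the output, leaving little room for code."""
    return output_tokens > 0 and reasoning_tokens >= 0.9 * output_tokens


def escalate_max_tokens(max_tokens: int, ceiling: int = 100_000) -> int:
    """First escalation step: double the output budget, but never past the ceiling."""
    return min(max_tokens * 2, ceiling)


print(backoff_delays())                         # [5.0, 10.0, 20.0, 40.0, 60.0]
print(is_reasoning_exhausted(29_500, 32_768))   # True
print(escalate_max_tokens(65_536))              # 100000
```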

```mermaid
flowchart TD
    CLI[["CLI / doctor.sh\n- Loads AI config\n- Selects target language"]]
    subgraph ANALYZE_PHASE["PHASE 1: Deep Analysis"]
        REGEX["Regex Parsing\n(Fast SQL/Variable Extraction)"]
        ANALYZER["CobolAnalyzerAgent\n(Structure & Logic)"]
        SQLITE[("SQLite Storage")]
    end
    subgraph DEPENDENCY_PHASE["PHASE 2: Dependencies"]
        MAPPER["DependencyMapperAgent\n(Builds Graph)"]
        NEO4J[("Neo4j Graph DB")]
    end
    subgraph CONVERT_PHASE["PHASE 3: Conversion"]
        FETCHER["Context Fetcher\n(Aggregates Dependencies)"]
        CONVERTER["CodeConverterAgent\n(Java/C# Generation)"]
        OUTPUT["Output Files"]
    end
    CLI --> REGEX
    REGEX --> SQLITE
    REGEX --> ANALYZE_PHASE
    ANALYZER --> SQLITE
    SQLITE --> MAPPER
    MAPPER --> NEO4J
    SQLITE --> FETCHER
    NEO4J --> FETCHER
    FETCHER --> CONVERTER
    CONVERTER --> OUTPUT
```

```mermaid
sequenceDiagram
    participant User as 🧑 User / doctor.sh
    participant CLI as CLI Runner
    participant RE as ReverseEngineeringProcess
    participant Analyzer as CobolAnalyzerAgent
    participant BizLogic as BusinessLogicExtractorAgent
    participant Migration as MigrationProcess
    participant DepMap as DependencyMapperAgent
    participant Converter as CodeConverterAgent (Java/C#)
    participant Repo as HybridMigrationRepository
    participant Portal as MCP Server & McpChatWeb
    User->>CLI: select target language, concurrency flags
    CLI->>RE: start reverse engineering
    RE->>Analyzer: analyze COBOL files (parallel up to max-parallel)
    Analyzer-->>RE: CobolAnalysis[]
    RE->>BizLogic: extract business logic summaries
    BizLogic-->>RE: BusinessLogic[]
    RE->>Repo: persist analyses + documentation
    RE-->>CLI: ReverseEngineeringResult
    CLI->>Migration: start migration run with latest analyses
    Migration->>Analyzer: reuse or refresh CobolAnalysis
    Migration->>DepMap: build dependency graph (CALL/COPY/...)
    DepMap-->>Migration: DependencyMap
    Migration->>Converter: convert to Java/C# (AI-limited concurrency)
    Converter-->>Migration: CodeFile artifacts
    Migration->>Repo: persist run metadata, graph edges, code files
    Repo-->>Portal: expose MCP resources + REST APIs
    Portal-->>User: portal UI (chat, graph, reports)
```

CobolAnalyzerAgent:

- Purpose: Deep structural analysis of COBOL files (divisions, paragraphs, copybooks, metrics).

- Inputs: COBOL text from FileHelper or cached content.
- Outputs: CobolAnalysis objects consumed by:
  - ReverseEngineeringProcess (for documentation & glossary mapping)
  - DependencyMapperAgent (seed data for relationships)
  - CodeConverterAgent (guides translation prompts)
- Interactions:
  - Uses Azure OpenAI via ResponsesApiClient / IChatClient with a concurrency guard.
  - Results persisted by SqliteMigrationRepository.

BusinessLogicExtractorAgent:

- Purpose: Convert technical analyses into business language (use cases, user stories, glossary).
- Inputs: Output from CobolAnalyzerAgent + optional glossary.
- Outputs: BusinessLogic records and Markdown sections used in reverse-engineering-details.md.
- Interactions:
  - Runs in parallel with analyzer results.
  - Writes documentation via FileHelper and logs via EnhancedLogger.

DependencyMapperAgent:

- Purpose: Identify CALL/COPY/PERFORM/IO relationships and build graph metadata.
- Inputs: COBOL files + analyses (line numbers, paragraphs).
- Outputs: DependencyMap with nodes/edges stored in both SQLite and Neo4j.
- Interactions:
  - Feeds the McpChatWeb graph panel and run-selector APIs.
  - Enables multi-run queries (e.g., "show me CALL tree for run 42").

CodeConverterAgent:

- Variants: JavaConverterAgent or CSharpConverterAgent (selected via TargetLanguage).
- Purpose: Generate target-language code from COBOL analyses and dependency context.
- Inputs:
  - CobolAnalysis per file
  - Target language settings (Quarkus vs. .NET)
  - Migration run metadata (for logging & metrics)
- Outputs: CodeFile records saved under output/java/ or output/csharp/.
- Interactions:
  - Concurrency guards (pipeline slots vs. AI calls) ensure Azure OpenAI limits are respected.
  - Results are pushed to the portal via repositories for browsing/download.

Concurrency:

- Pipeline concurrency (--max-parallel) controls how many files/chunks run simultaneously (e.g., 8).
- AI concurrency (--max-ai-parallel) caps concurrent Azure OpenAI calls (e.g., 3) to avoid throttling.
- Both values can be surfaced via CLI flags or environment variables so that doctor.sh can tune the runtime.
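The two-level limit can be pictured as two nested semaphores: a wide one for pipeline slots and a narrow one around each model call. The asyncio sketch below is illustrative only; run_pipeline, the defaults of 8 and 3 (taken from the examples above), and the sleep standing in for an Azure OpenAI request are assumptions, not the project's C# implementation.

```python
import asyncio


async def run_pipeline(files, max_parallel: int = 8, max_ai_parallel: int = 3):
    pipeline_slots = asyncio.Semaphore(max_parallel)   # files/chunks in flight (--max-parallel)
    ai_slots = asyncio.Semaphore(max_ai_parallel)      # concurrent model calls (--max-ai-parallel)
    in_flight = peak = 0

    async def call_model(name: str) -> str:
        nonlocal in_flight, peak
        async with ai_slots:
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(0.01)  # stand-in for an Azure OpenAI request
            in_flight -= 1
        return f"converted:{name}"

    async def process(name: str) -> str:
        async with pipeline_slots:
            # Non-AI work (reading, parsing, building context) would happen here
            # while holding only a pipeline slot, not an AI slot.
            return await call_model(name)

    results = await asyncio.gather(*(process(f) for f in files))
    return results, peak


results, peak = asyncio.run(run_pipeline([f"FILE{i}.cbl" for i in range(20)]))
print(len(results), peak)
```

Keeping the AI semaphore narrower than the pipeline semaphore lets local work overlap with model calls while the number of in-flight Azure OpenAI requests never exceeds the AI limit.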

End-to-end flow:

1. doctor.sh run → load configs → choose target language
2. Source scanning: reads all .cbl/.cpy files from source/
3. Analysis: CobolAnalyzerAgent extracts structure; BusinessLogicExtractorAgent generates documentation
4. Dependencies: DependencyMapperAgent maps CALL/COPY/PERFORM relationships → Neo4j
5. Conversion: JavaConverterAgent or CSharpConverterAgent generates target code → output/
6. Storage: HybridMigrationRepository writes metadata to SQLite, graph edges to Neo4j
7. Portal: McpChatWeb surfaces chat, graphs, and reports at http://localhost:5028

```text
┌─────────────────┬───────────────────────────┬─────────────────────┐
│ 📋 Resources    │ 💬 AI Chat                │ 📊 Graph            │
│                 │                           │                     │
│ MCP Resources   │ Ask about your COBOL:     │ Interactive         │
│ • Run summary   │ "What does CUSTOMER.cbl   │ dependency graph    │
│ • File lists    │ do?"                      │                     │
│ • Dependencies  │                           │ • Zoom/pan          │
│ • Analyses      │ AI responses with         │ • Filter by type    │
│                 │ SQLite + Neo4j data       │ • Click nodes       │
└─────────────────┴───────────────────────────┴─────────────────────┘
```

Portal URL: http://localhost:5028

```bash
dotnet build
./doctor.sh run     # Interactive - prompts for language choice
```

⚠️ Do not use dotnet run directly: it bypasses the interactive menu and configuration checks.

```bash
./doctor.sh portal  # Opens http://localhost:5028
```

This project uses a layered configuration system where .env files can override appsettings.json values.

| File | Purpose | Git Tracked? |
|---|---|---|
| Config/appsettings.json | All settings: models, chunking, Neo4j, output paths | ✅ Yes |
| Config/ai-config.env | Template defaults | ✅ Yes |
| Config/ai-config.local.env | Your secrets: API keys, endpoints | ❌ No (gitignored) |

appsettings.json → Non-secret settings (chunking, Neo4j, file paths)

ai-config.local.env → Secrets (API keys, endpoints) - NEVER commit!

When you run ./doctor.sh run, configuration loads in this order:

```mermaid
flowchart LR
    A["1. appsettings.json<br/>(base config)"] --> B["2. ai-config.env<br/>(template defaults)"]
    B --> C["3. ai-config.local.env<br/>(your overrides)"]
    C --> D["4. Environment vars<br/>(highest priority)"]
    style C fill:#90EE90
    style D fill:#FFD700
```

Later values override earlier ones. This means:

- ai-config.local.env overrides appsettings.json
- Environment variables override everything

```bash
# Inside doctor.sh:
source "$REPO_ROOT/Config/load-config.sh"  # Loads the loader
load_ai_config                             # Executes loading
```

The load-config.sh script:

- Reads ai-config.local.env first (your secrets)
- Falls back to ai-config.env for any unset values
- Exports all values as environment variables
- The .NET app reads these env vars, which override appsettings.json
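The precedence rules can be sketched as a sequence of dictionary updates. This Python sketch is hypothetical: it is neither load-config.sh nor Program.cs, it maps only the four AI settings listed in the table that follows, and its .env parser is deliberately minimal (KEY="value" lines, full-line # comments, and a trailing " #" comment; no escapes or multi-line values). The deployment name in the usage example is a placeholder.

```python
ENV_TO_SETTING = {  # .env variable -> appsettings.json key
    "_MAIN_ENDPOINT": "AISettings.Endpoint",
    "_MAIN_API_KEY": "AISettings.ApiKey",
    "_CODE_MODEL": "AISettings.ModelId",
    "_CHAT_MODEL": "AISettings.ChatModelId",
}


def parse_env_file(text: str) -> dict:
    """Parse KEY="value" lines, skipping blanks and comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.split(" #", 1)[0].strip()  # drop a trailing comment
        values[key.strip()] = value.strip('"').strip("'")
    return values


def resolve_settings(appsettings: dict, template_env: str,
                     local_env: str, environ: dict) -> dict:
    env = parse_env_file(template_env)     # 2. ai-config.env (template defaults)
    env.update(parse_env_file(local_env))  # 3. ai-config.local.env (your overrides)
    env.update({k: v for k, v in environ.items() if k in ENV_TO_SETTING})  # 4. environment
    merged = dict(appsettings)             # 1. appsettings.json (base config)
    for var, setting in ENV_TO_SETTING.items():
        if var in env:
            merged[setting] = env[var]
    return merged


settings = resolve_settings(
    {"AISettings.ModelId": "gpt-5.1-codex-mini", "AISettings.ChatModelId": "gpt-5.1-chat"},
    template_env='_CHAT_MODEL="template-chat"',
    local_env='_MAIN_ENDPOINT="https://YOUR-RESOURCE.openai.azure.com/"\n'
              '_CHAT_MODEL="gpt-5.2-chat"  # For Portal Q&A',
    environ={"_CODE_MODEL": "my-codex-deployment"},
)
print(settings)
```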

| Setting | appsettings.json Location | .env Override |
|---|---|---|
| Codex model | AISettings.ModelId | _CODE_MODEL |
| Chat model | AISettings.ChatModelId | _CHAT_MODEL |
| API endpoint | AISettings.Endpoint | _MAIN_ENDPOINT |
| API key | AISettings.ApiKey | _MAIN_API_KEY |
| Neo4j enabled | ApplicationSettings.Neo4j.Enabled | (none) |
| Chunking | ChunkingSettings.* | (none) |

💡 Best Practice: Keep secrets in ai-config.local.env and everything else in appsettings.json.

In Config/ai-config.local.env:

```bash
# Master Configuration
_MAIN_ENDPOINT="https://YOUR-RESOURCE.openai.azure.com/"
_MAIN_API_KEY="your key"          # Leave empty to use 'az login' (Entra ID) instead

# Model Selection (override appsettings.json)
_CHAT_MODEL="gpt-5.2-chat"        # For Portal Q&A
_CODE_MODEL="gpt-5.1-codex-mini"  # For Code Conversion
```

💡 Prefer keyless auth? Run az login and leave _MAIN_API_KEY empty. You need the "Cognitive Services OpenAI User" role on your Azure OpenAI resource. See Azure AD / Entra ID Authentication Guide for full instructions.

In Config/appsettings.json:

```json
{
  "ApplicationSettings": {
    "Neo4j": {
      "Enabled": true,
      "Uri": "bolt://localhost:7687",
      "Username": "neo4j",
      "Password": "cobol-migration-2025"
    }
  }
}
```

Start with: docker-compose up -d neo4j

See Parallel Jobs Formula for chunking configuration details.

| Input | Output |
|---|---|
| source/CUSTOMER.cbl | output/java/com/example/generated/CustomerService.java |
| source/PAYMENT.cbl | output/csharp/Generated/PaymentProcessor.cs |
| Analysis | output/reverse-engineering-details.md |
| Report | output/migration_report_run_X.md |

```bash
./doctor.sh                  # Check configuration
./doctor.sh test             # Run system tests
./doctor.sh chunking-health  # Check chunking setup
```

| Issue | Solution |
|---|---|
| Neo4j connection refused | docker-compose up -d neo4j |
| Azure API error | Check Config/ai-config.local.env credentials or run az login |
| No output generated | Ensure COBOL files are in source/ |
| Portal won't start | lsof -ti :5028 \| xargs kill -9, then retry |

- Smart Chunking & Token Architecture - Full diagrams, constants reference, and complexity scoring details

- Smart Chunking Guide - Deep technical details

- Architecture Documentation - System design

- Changelog - Version history

Collaboration between Microsoft's Global Black Belt team and Bankdata. See blog post.

MIT License - Copyright (c) Microsoft Corporation.


oh-my-pi

oh-my-pi is an AI coding agent for the terminal, providing tools for interactive coding, AI-powered git commits, Python code execution, LSP integration, time-traveling streamed rules, interactive code review, task management, interactive questioning, custom TypeScript slash commands, universal config discovery, MCP & plugin system, web search & fetch, SSH tool, Cursor provider integration, multi-credential support, image generation, TUI overhaul, edit fuzzy matching, and more. It offers a modern terminal interface with smart session management, supports multiple AI providers, and includes various tools for coding, task management, code review, and interactive questioning.

tokscale

Tokscale is a high-performance CLI tool and visualization dashboard for tracking token usage and costs across multiple AI coding agents. It helps monitor and analyze token consumption from various AI coding tools, providing real-time pricing calculations using LiteLLM's pricing data. Inspired by the Kardashev scale, Tokscale measures token consumption as users scale the ranks of AI-augmented development. It offers interactive TUI mode, multi-platform support, real-time pricing, detailed breakdowns, web visualization, flexible filtering, and social platform features.

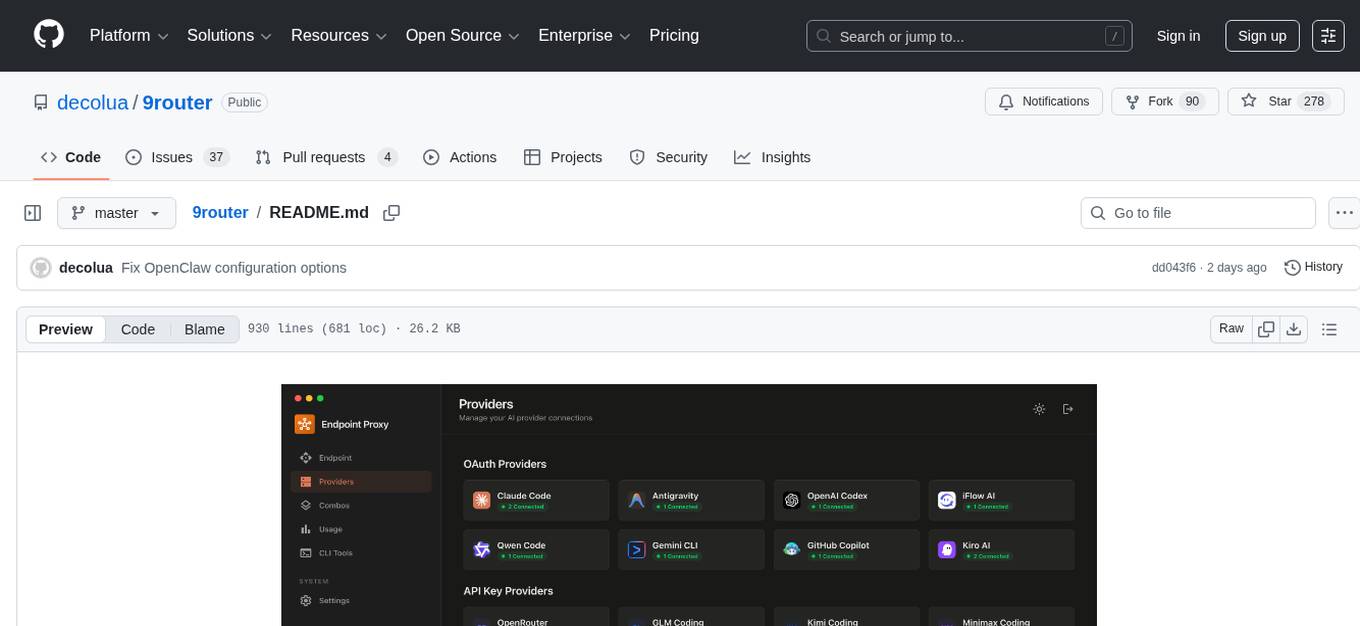

9router

9Router is a free AI router tool designed to help developers maximize their AI subscriptions, auto-route to free and cheap AI models with smart fallback, and avoid hitting limits and wasting money. It offers features like real-time quota tracking, format translation between OpenAI, Claude, and Gemini, multi-account support, auto token refresh, custom model combinations, request logging, cloud sync, usage analytics, and flexible deployment options. The tool supports various providers like Claude Code, Codex, Gemini CLI, GitHub Copilot, GLM, MiniMax, iFlow, Qwen, and Kiro, and allows users to create combos for different scenarios. Users can connect to the tool via CLI tools like Cursor, Claude Code, Codex, OpenClaw, and Cline, and deploy it on VPS, Docker, or Cloudflare Workers.

Automodel

Automodel is a Python library for automating the process of building and evaluating machine learning models. It provides a set of tools and utilities to streamline the model development workflow, from data preprocessing to model selection and evaluation. With Automodel, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to find the best model for their dataset. The library is designed to be user-friendly and customizable, allowing users to define their own pipelines and workflows. Automodel is suitable for data scientists, machine learning engineers, and anyone looking to quickly build and test machine learning models without the need for manual intervention.

headroom

Headroom is a tool designed to optimize the context layer for Large Language Models (LLMs) applications by compressing redundant boilerplate outputs. It intercepts context from tool outputs, logs, search results, and intermediate agent steps, stabilizes dynamic content like timestamps and UUIDs, removes low-signal content, and preserves original data for retrieval only when needed by the LLM. It ensures provider caching works efficiently by aligning prompts for cache hits. The tool works as a transparent proxy with zero code changes, offering significant savings in token count and enabling reversible compression for various types of content like code, logs, JSON, and images. Headroom integrates seamlessly with frameworks like LangChain, Agno, and MCP, supporting features like memory, retrievers, agents, and more.

multi-agent-ralph-loop

Multi-agent RALPH (Reinforcement Learning with Probabilistic Hierarchies) Loop is a framework for multi-agent reinforcement learning research. It provides a flexible and extensible platform for developing and testing multi-agent reinforcement learning algorithms. The framework supports various environments, including grid-world environments, and allows users to easily define custom environments. Multi-agent RALPH Loop is designed to facilitate research in the field of multi-agent reinforcement learning by providing a set of tools and utilities for experimenting with different algorithms and scenarios.

rtk

RTK is a lightweight and flexible tool for real-time kinematic positioning. It provides accurate positioning data by combining data from GPS satellites with a reference station. RTK is commonly used in surveying, agriculture, construction, and drone navigation. The tool offers real-time corrections to improve the accuracy of GPS data, making it ideal for applications requiring precise location information. With RTK, users can achieve centimeter-level accuracy in their positioning data, enabling them to perform tasks that demand high precision and reliability.

mcp-context-forge

MCP Context Forge is a powerful tool for generating context-aware data for machine learning models. It provides functionalities to create diverse datasets with contextual information, enhancing the performance of AI algorithms. The tool supports various data formats and allows users to customize the context generation process easily. With MCP Context Forge, users can efficiently prepare training data for tasks requiring contextual understanding, such as sentiment analysis, recommendation systems, and natural language processing.

DeepMCPAgent

DeepMCPAgent is a model-agnostic tool that enables the creation of LangChain/LangGraph agents powered by MCP tools over HTTP/SSE. It allows for dynamic discovery of tools, connection to remote MCP servers, and integration with any LangChain chat model instance. The tool provides a deep agent loop for enhanced functionality and supports typed tool arguments for validated calls. DeepMCPAgent emphasizes the importance of MCP-first approach, where agents dynamically discover and call tools rather than hardcoding them.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

Edit-Banana

Edit Banana is a universal content re-editor that allows users to transform fixed content into fully manipulatable assets. Powered by SAM 3 and multimodal large models, it enables high-fidelity reconstruction while preserving original diagram details and logical relationships. The platform offers advanced segmentation, fixed multi-round VLM scanning, high-quality OCR, user system with credits, multi-user concurrency, and a web interface. Users can upload images or PDFs to get editable DrawIO (XML) or PPTX files in seconds. The project structure includes components for segmentation, text extraction, frontend, models, and scripts, with detailed installation and setup instructions provided. The tool is open-source under the Apache License 2.0, allowing commercial use and secondary development.

For similar tasks

Legacy-Modernization-Agents

Legacy Modernization Agents is an open source migration framework developed to demonstrate AI Agents capabilities for converting legacy COBOL code to Java or C# .NET. The framework uses Microsoft Agent Framework with a dual-API architecture to analyze COBOL code and dependencies, then convert to either Java Quarkus or C# .NET. The web portal provides real-time visualization of migration progress, dependency graphs, and AI-powered Q&A.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.