Best AI tools for Pull Models

20 - AI tool Sites

Elessar

Elessar is an AI-powered platform designed to enhance engineering productivity by providing automatic documentation, reporting, and visibility for development teams. It integrates with existing ecosystems, generates pull request changelogs, automates Notion documentation, offers Slack bot functionality, provides a VS Code extension for easy code understanding, and links with Linear for issue tracking. Elessar protects data privacy and security by following SOC 2-compliant policies and encrypting data at rest and in transit. It does not use data for training AI models. With Elessar, organizations can streamline communication, improve visibility, and boost productivity.

Frugal

Frugal is an intelligent application cost engineering platform that optimizes code to reduce cloud costs automatically. It is the first AI-powered cost optimization platform built for engineers, empowering them to find and fix inefficiencies in code that drain cloud budgets. The platform aims to reinvent cost engineering by enabling developers to reduce application costs and improve cloud efficiency through automated identification and resolution of wasteful practices.

What The Diff

What The Diff is an AI-powered code review assistant that helps you to write pull request descriptions, send out summarized notifications, and refactor minor issues during the review. It uses natural language processing to understand the changes in your code and generate clear and concise descriptions. What The Diff also provides rich summary notifications that are easy for non-technical stakeholders to understand, and it can generate beautiful changelogs that you can share with your team or the public.

TextUnbox

TextUnbox is an AI-powered tool that allows users to extract text from images, generate images from text descriptions, translate text, remove image backgrounds, and more. It supports over 20 languages and can be used in the browser or integrated into custom solutions using its REST API.

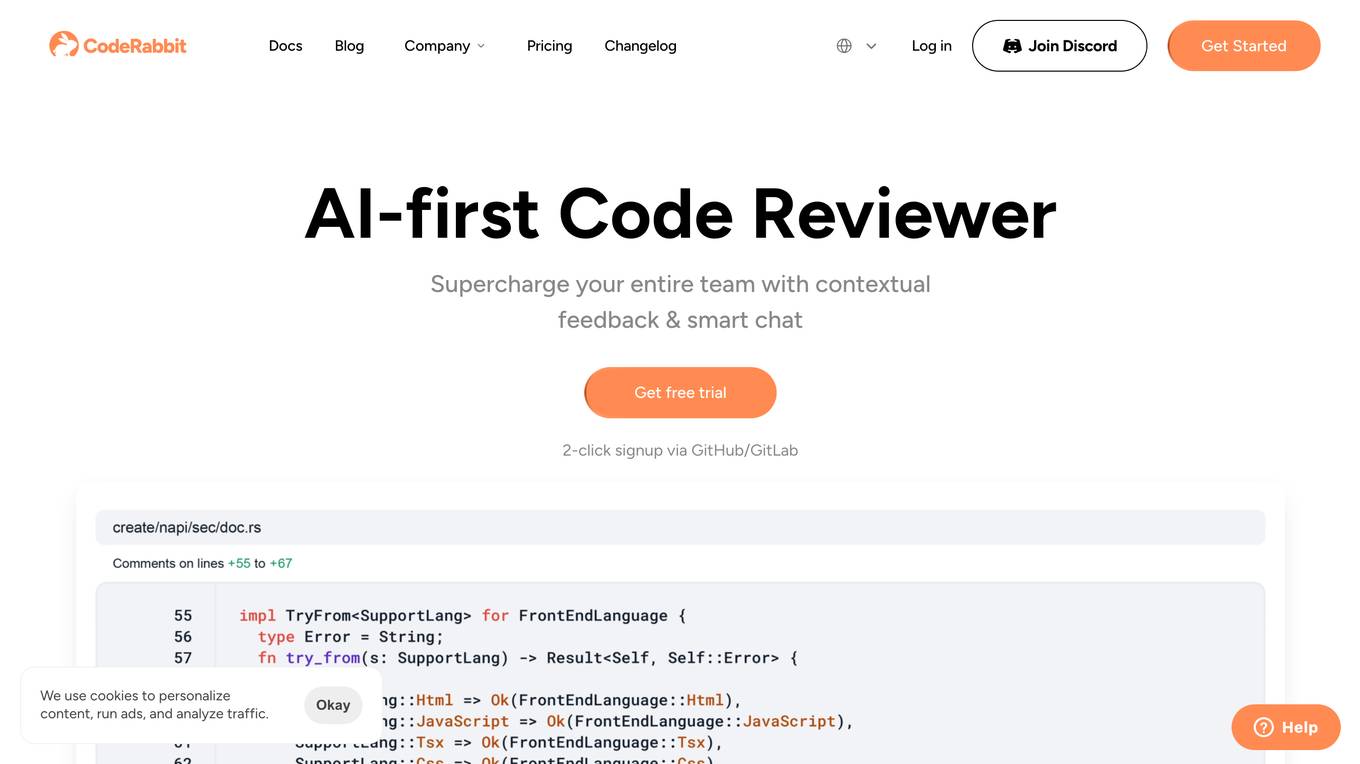

CodeRabbit

CodeRabbit is an innovative AI code review platform that streamlines and enhances the development process. By automating reviews, it dramatically improves code quality while saving valuable time for developers. The system offers detailed, line-by-line analysis, providing actionable insights and suggestions to optimize code efficiency and reliability. Trusted by hundreds of organizations and thousands of developers daily, CodeRabbit has processed millions of pull requests. Backed by CRV, CodeRabbit continues to revolutionize the landscape of AI-assisted software development.

Tusk

Tusk is an AI-powered automated testing platform that helps engineering teams generate high-quality unit and integration tests with codebase and business context. It runs on pull requests to suggest verified test cases, enabling faster and safer code shipping. Tusk offers features like shift-left testing, autonomous test generation, self-healing tests, and seamless integration with CI/CD pipelines. Trusted by engineering leaders at fast-growing companies, Tusk aims to improve test coverage and code quality while reducing the release cycle time.

PYQ

PYQ is an AI-powered platform that helps businesses automate document-related tasks, such as data extraction, form filling, and system integration. It uses natural language processing (NLP) and machine learning (ML) to understand the content of documents and perform tasks accordingly. PYQ's platform is designed to be easy to use, with pre-built automations for common use cases. It also offers custom automation development services for more complex needs.

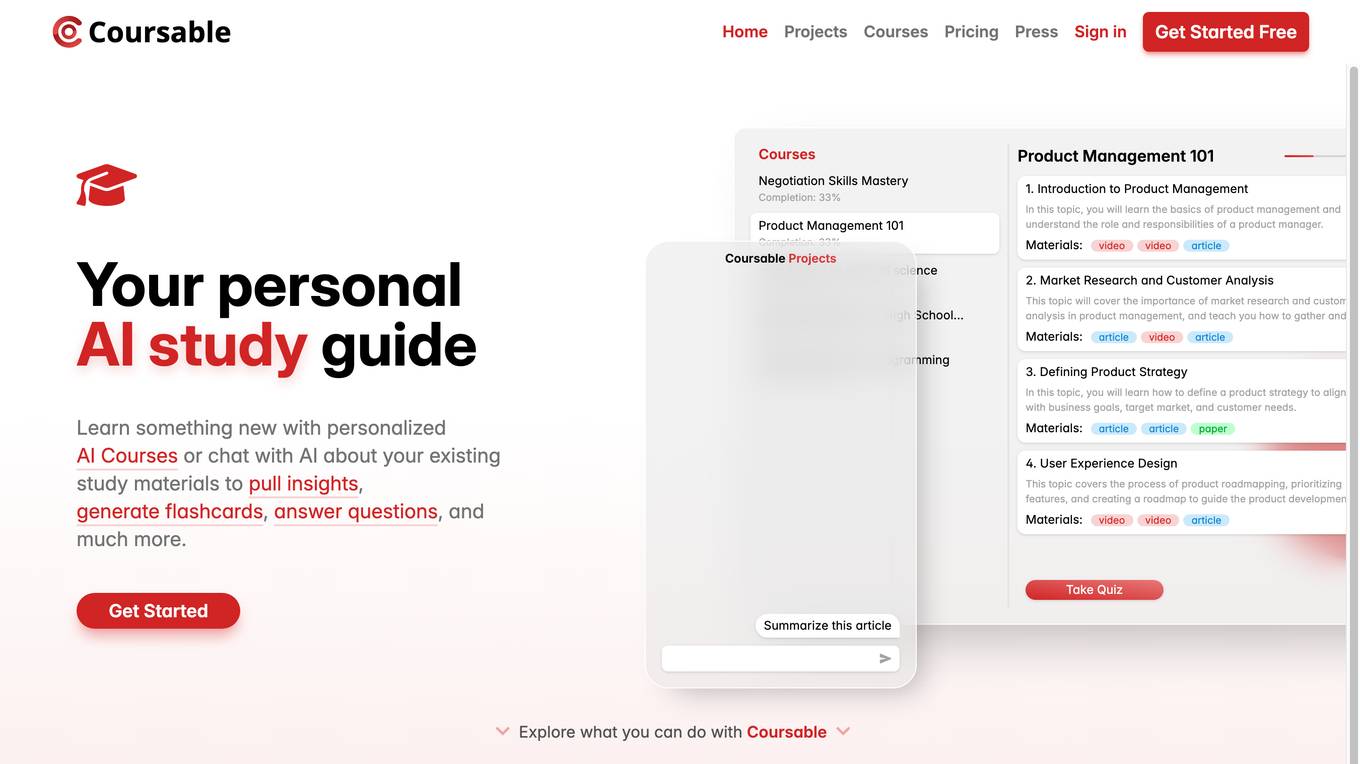

Coursable

Coursable is an AI-powered student workspace designed to enhance learning experiences. It leverages artificial intelligence to provide personalized study recommendations, track progress, and offer interactive learning tools. With Coursable, students can access a virtual study companion that adapts to their learning style and pace, making studying more efficient and engaging. The platform aims to revolutionize traditional learning methods by incorporating AI technology to support students in achieving their academic goals.

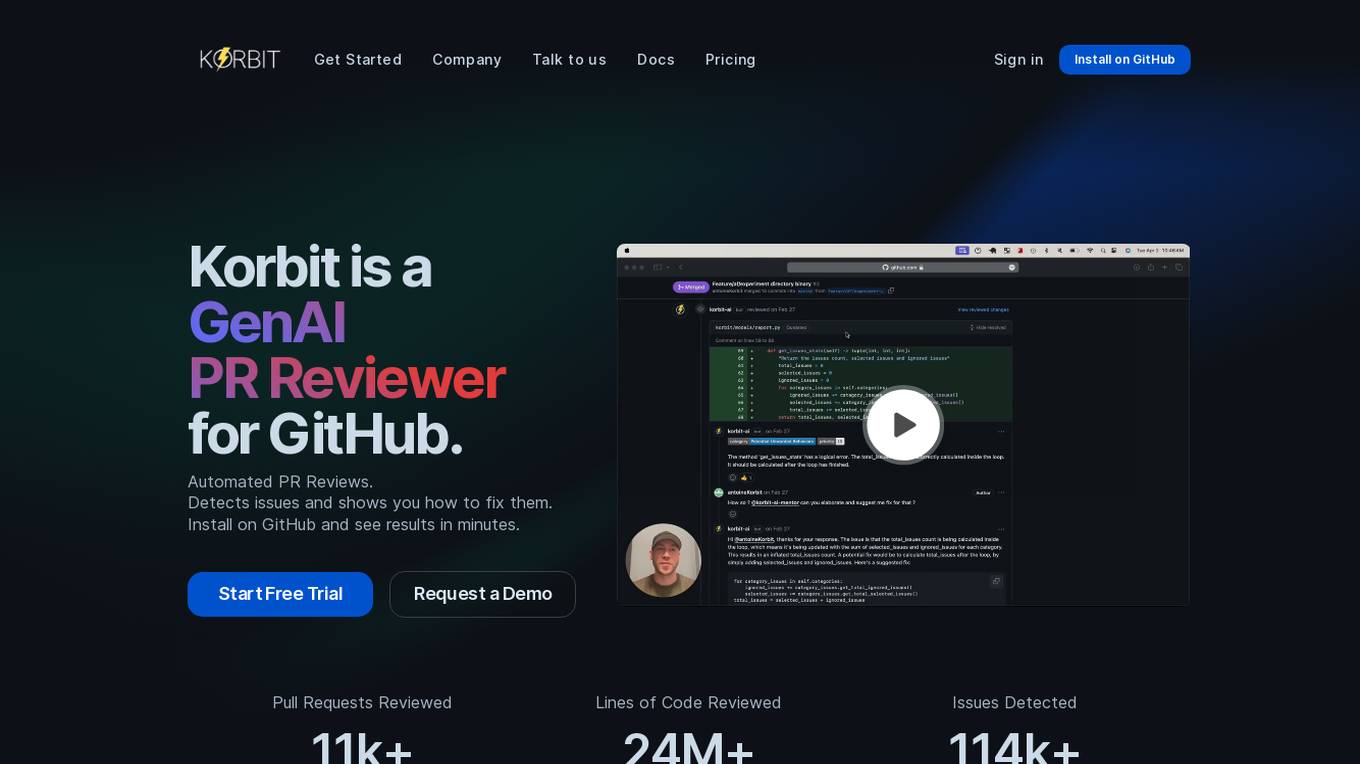

Korbit

Korbit is an AI-powered code review tool that helps developers write better code, faster. It integrates directly into your GitHub PR workflow and provides instant feedback on your code, identifying issues and providing actionable recommendations. Korbit also provides valuable insights into code quality, project status, and developer performance, helping you to boost your productivity and elevate your code.

Superjoin

Superjoin is an AI-powered tool that allows users to automatically pull data from various tools into Google Sheets without the need for writing any code. It offers features like one-click connectors, auto-refresh schedules, data preview, and the ability to send report screenshots to Slack and Email. Superjoin is loved by thousands of users across hundreds of companies for its efficiency in automating workflows and data management.

Gitya

Gitya is an AI-powered GitHub assistant designed to streamline your GitHub workflow by automating minor tasks and enhancing productivity. With features like GitHub App integration, AI-enhanced automation, PR management, and ticket automation, Gitya aims to help users spend less time on busywork and more time on high-impact engineering tasks. Users have praised Gitya for its ability to reduce time spent on bug fixes and PR management, ultimately leading to increased project efficiency and success.

Weekly Github Insights

Weekly Github Insights is an AI-powered platform that provides users with a comprehensive summary of their latest GitHub activities from the past 7 days. It aims to keep users informed and motivated by compiling their weekly GitHub journey. The platform is built by @rohan_2502 using @aceternitylabs, @github APIs, and @OpenAI.

Gitlights

Gitlights is a powerful Git analytics tool that leverages AI and NLP algorithms to provide enriched insights on commits, pull requests, and developer skills. It empowers teams with advanced analytics and insights, revolutionizing the development process. Gitlights offers features such as insightful commits and pull requests dashboard, advanced developer skills analysis, strategic investment balance monitoring, collaborative developers map, and benchmarking comparison with other teams. With Gitlights, users can stay ahead with comparative data, receive smart notifications, and make informed decisions based on precise and detailed data. The tool aims to provide a holistic view of a development team's activity, driving strategic decision-making, continuous improvement, and excellence in collaboration.

Bifrost

Bifrost is an AI-powered tool that converts Figma designs into clean React code automatically. It eliminates the need to write frontend code from scratch, making it ideal for developers at every stage of the development process. With Bifrost, you can effortlessly create component sets from Figma, scale your projects with finesse, and iterate on design changes seamlessly. The tool aims to cut engineering time, empower designers, and revolutionize the way design and engineering teams collaborate.

GPTConsole

GPTConsole is an AI-powered platform that helps developers build production-ready applications faster and more efficiently. Its AI agents can generate code for a variety of applications, including web applications, AI applications, and landing pages. GPTConsole also offers a range of features to help developers build and maintain their applications, including an AI agent that can learn your entire codebase and answer your questions, and a CLI tool for accessing agents directly from the command line.

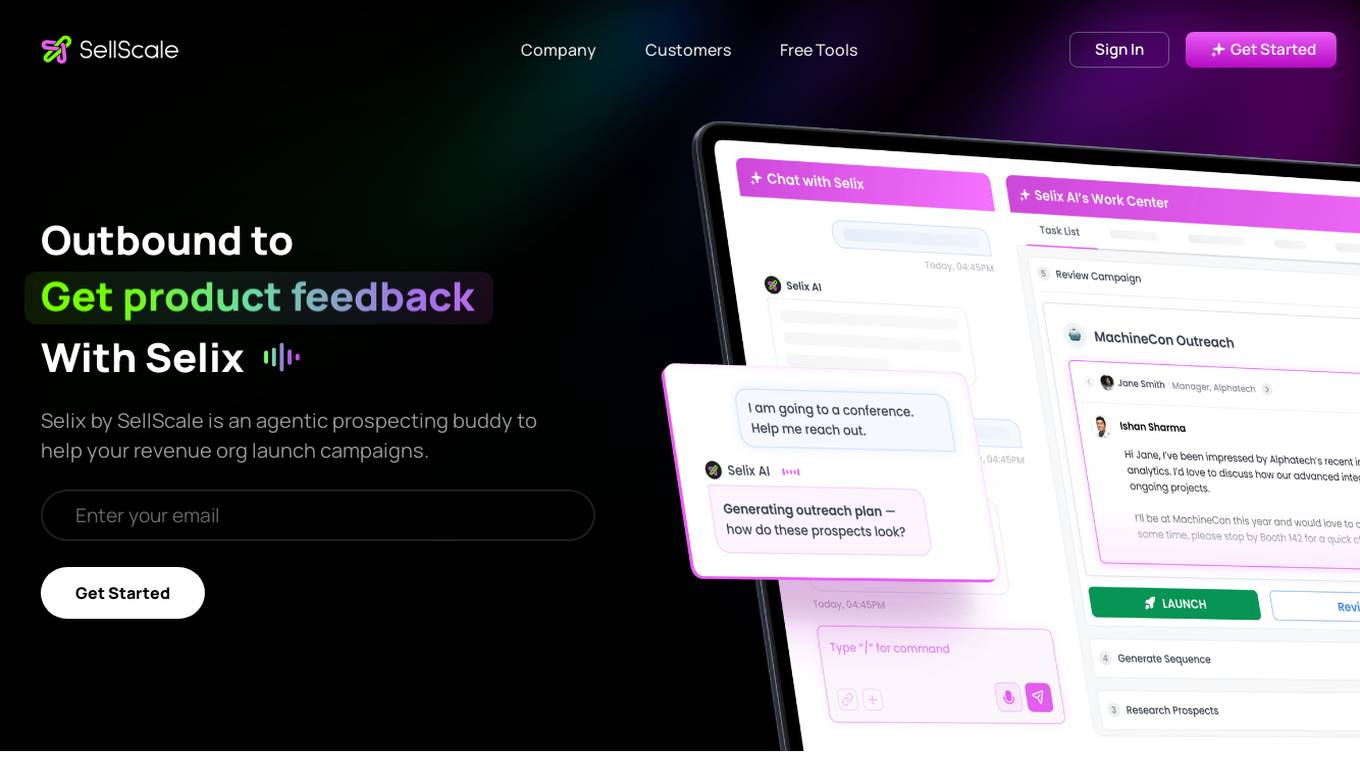

SellScale

SellScale is an AI-powered prospecting tool designed to assist revenue organizations in launching campaigns, engaging with prospects, and obtaining feedback on products. It helps users set up conference meetings, launch new products, and reach out to potential customers effectively. The tool is built with design partners and customers, offering features such as quickly launching campaigns based on recent news, gearing up for product launches, getting feedback from early adopters, and personalizing outreach messages using AI technology. SellScale aims to ensure proper deliverability of emails, enable users to pull contacts from various data providers, and enhance messaging to resonate with the target audience.

Trag

Trag is an AI-powered tool designed to review pull requests in minutes, empowering engineering teams to save time and focus on building products. With Trag, users can create custom patterns for code review, ensuring best practices are followed and bugs are caught early. The tool offers features like autofix with AI, monitoring progress, connecting multiple repositories, pull request review, analytics, and team workspaces. Trag stands out from traditional linters by providing complex code understanding, semantic code analysis, predictive bug detection, and refactoring suggestions. It aims to streamline code reviews and help teams ship faster with AI-powered reviews.

OSS Insight

OSS Insight is an AI tool that provides deep insight into developers and repositories on GitHub, offering information about stars, pull requests, issues, pushes, comments, and reviews. It utilizes artificial intelligence to analyze data and provide valuable insights to users. The tool ranks repositories, tracks trending repositories, and offers real-time information about GitHub events. Additionally, it offers features like data exploration, collections, live blog, API integration, and widgets.

Maige

Maige is an open-source infrastructure designed to run natural language workflows on your codebase. It allows users to connect their repository, define rules for handling issues and pull requests, and monitor the workflow execution through a dashboard. Maige leverages AI capabilities to label, assign, comment, review code, and execute simple code snippets, all while being customizable and flexible with the GitHub API.

Greptile

Greptile is an AI tool designed to assist developers in code review processes. It integrates with GitHub to review pull requests and identify bugs, antipatterns, and other issues in the codebase. By leveraging AI technology, Greptile aims to streamline the code review process and improve code quality.

1 - Open Source AI Tools

OllamaSharp

OllamaSharp is a .NET binding for the Ollama API, providing an intuitive API client to interact with Ollama. It offers support for all Ollama API endpoints, real-time streaming, progress reporting, and an API console for remote management. Users can easily set up the client, list models, pull models with progress feedback, stream completions, and build interactive chats. The project includes a demo console for exploring and managing the Ollama host.
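As a rough illustration of what "pull models with progress feedback" involves, the sketch below parses a streamed pull response into status and percentage pairs. It is written in Python, not .NET, and does not show OllamaSharp's own API. It assumes the Ollama pull endpoint streams newline-delimited JSON objects carrying a `status` field and, while downloading, `total` and `completed` byte counts. The function name `pull_progress` and the sample lines are hypothetical.

```python
import json
from typing import Iterable, Iterator, Optional, Tuple


def pull_progress(lines: Iterable[str]) -> Iterator[Tuple[str, Optional[float]]]:
    """Yield (status, percent) for each JSON line of a streamed pull response.

    Assumes each line is a JSON object with a "status" field and, during
    downloads, "total" and "completed" byte counts (an assumption about the
    response shape, not taken from the listing above).
    """
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        event = json.loads(raw)
        total = event.get("total")
        completed = event.get("completed", 0)
        # Percent is only meaningful when the server reports a total size.
        percent = round(100 * completed / total, 1) if total else None
        yield event.get("status", ""), percent


# Hypothetical sample of a streamed response body, used in place of a live server.
sample = [
    '{"status": "pulling manifest"}',
    '{"status": "downloading", "total": 200, "completed": 50}',
    '{"status": "success"}',
]

for status, percent in pull_progress(sample):
    print(status, "" if percent is None else f"{percent}%")
```

Against a running Ollama host, the same function could be fed the lines of a streaming HTTP response instead of the sample list. A client library such as OllamaSharp wraps this kind of stream behind its own progress-reporting interface.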

7 - OpenAI GPTs

CodeGPT

This GPT can generate code for you. For now, it creates full-stack apps using TypeScript. Just describe the feature you want and you will get a link to the GitHub pull request and the live deployed app.

REPO MASTER

Expert at fetching repository information from GitHub, Hugging Face, and your local repositories.