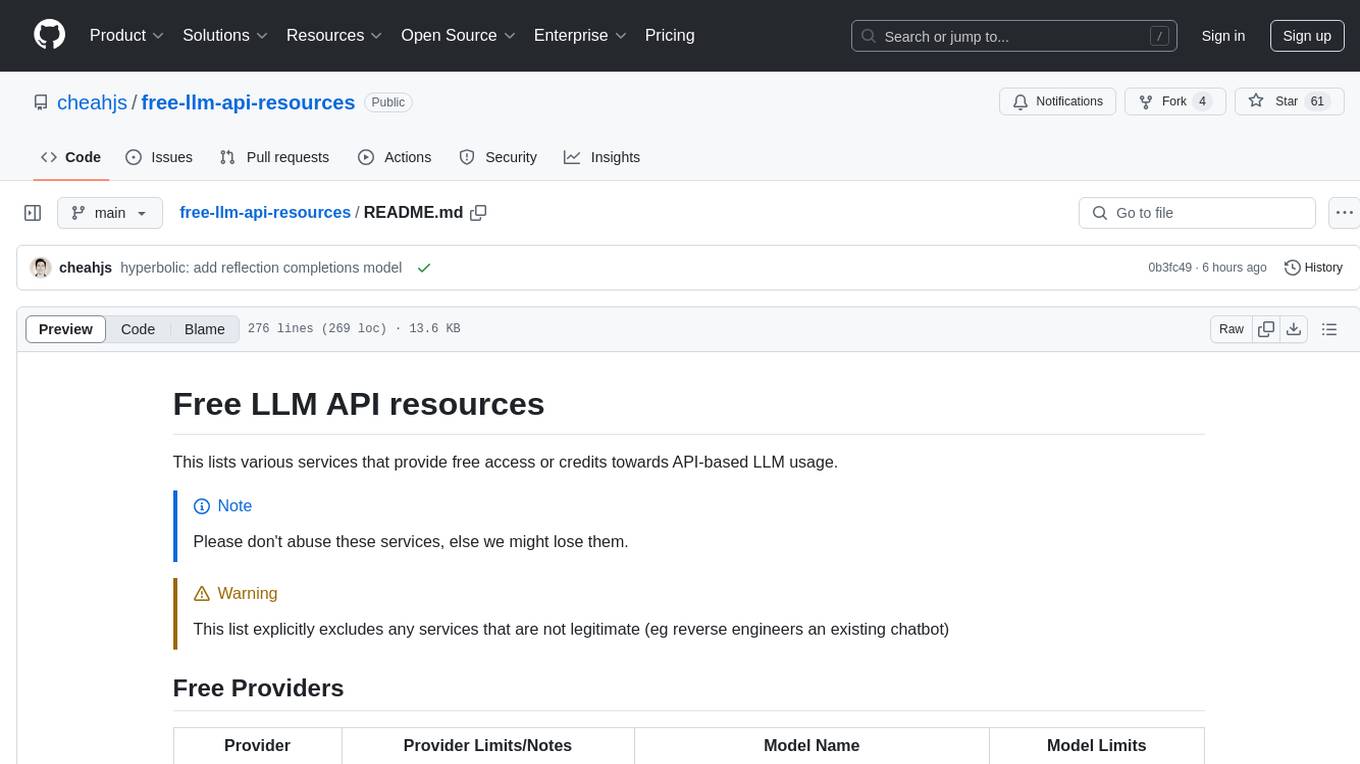

free-llm-api-resources

A list of free LLM inference resources accessible via API.

Stars: 5798

The 'Free LLM API resources' repository provides a comprehensive list of services offering free access or credits for API-based LLM usage. It includes various providers with details on model names, limits, and notes. Users can find information on legitimate services and their respective usage restrictions to leverage LLM capabilities without incurring costs. The repository aims to assist developers and researchers in accessing AI models for experimentation, development, and learning purposes.

README:

This lists various services that provide free access or credits towards API-based LLM usage.

> [!NOTE]
> Please don't abuse these services, or we might lose them.

> [!WARNING]
> This list explicitly excludes any services that are not legitimate (e.g., services that reverse-engineer an existing chatbot).

Limits:

- 20 requests/minute

- 50 requests/day

- 1,000 requests/day with a $10 lifetime top-up

Models share a common quota.
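Because every model on the account draws from one quota, a client-side limiter has to count requests across all models rather than per model. The following is a minimal sketch, assuming the limits are enforced over rolling 60-second and 24-hour windows (the provider may instead reset its daily count at a fixed time); the class name `SharedQuota` and its `clock` parameter are illustrative, not part of any provider SDK.

```python
import time
from collections import deque
from typing import Callable


class SharedQuota:
    """Client-side limiter shared by every model on one account.

    Assumes rolling windows; the provider may reset its counters differently.
    """

    def __init__(self, per_minute: int = 20, per_day: int = 50,
                 clock: Callable[[], float] = time.monotonic) -> None:
        # (window length in seconds, maximum requests allowed in that window)
        self.limits = [(60.0, per_minute), (86_400.0, per_day)]
        self.horizon = max(window for window, _ in self.limits)
        self.clock = clock
        self.sent: deque = deque()  # times of accepted requests, oldest first

    def try_acquire(self) -> bool:
        """Record and allow a request if every window has room; otherwise refuse it."""
        now = self.clock()
        # Forget requests older than the longest window; they no longer count.
        while self.sent and now - self.sent[0] >= self.horizon:
            self.sent.popleft()
        for window, limit in self.limits:
            if sum(1 for t in self.sent if now - t < window) >= limit:
                return False
        self.sent.append(now)
        return True


# One limiter for the whole account, whichever model is called.
quota = SharedQuota()  # pass per_day=1000 after the $10 lifetime top-up
print(quota.try_acquire())  # True: first request on a fresh limiter
```

Keeping a single instance for all calls is what makes the quota "shared": a request to any model consumes the same per-minute and per-day budget.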

- DeepCoder 14B Preview

- DeepHermes 3 Llama 3 8B Preview

- DeepSeek R1

- DeepSeek R1 Distill Llama 70B

- DeepSeek V3 0324

- Dolphin 3.0 Mistral 24B

- Dolphin 3.0 R1 Mistral 24B

- Gemma 2 9B Instruct

- Gemma 3 12B Instruct

- Gemma 3 27B Instruct

- Gemma 3 4B Instruct

- Kimi VL A3B Thinking

- Llama 3.2 3B Instruct

- Llama 3.3 70B Instruct

- Llama 4 Maverick

- Llama 4 Scout

- Mistral 7B Instruct

- Mistral Nemo

- Mistral Small 24B Instruct 2501

- Mistral Small 3.1 24B Instruct

- QwQ 32B ArliAI RpR v1

- Qwen 2.5 72B Instruct

- Qwen 2.5 VL 32B Instruct

- Qwen2.5 Coder 32B Instruct

- Qwen2.5 VL 72B Instruct

- Shisa V2 Llama 3.3 70B

- cognitivecomputations/dolphin-mistral-24b-venice-edition:free

- deepseek/deepseek-chat-v3.1:free

- deepseek/deepseek-r1-0528-qwen3-8b:free

- deepseek/deepseek-r1-0528:free

- google/gemma-3n-e2b-it:free

- google/gemma-3n-e4b-it:free

- meta-llama/llama-3.3-8b-instruct:free

- microsoft/mai-ds-r1:free

- mistralai/devstral-small-2505:free

- mistralai/mistral-small-3.2-24b-instruct:free

- moonshotai/kimi-dev-72b:free

- moonshotai/kimi-k2:free

- nvidia/nemotron-nano-9b-v2:free

- openai/gpt-oss-120b:free

- openai/gpt-oss-20b:free

- qwen/qwen3-14b:free

- qwen/qwen3-235b-a22b:free

- qwen/qwen3-30b-a3b:free

- qwen/qwen3-4b:free

- qwen/qwen3-8b:free

- qwen/qwen3-coder:free

- tencent/hunyuan-a13b-instruct:free

- tngtech/deepseek-r1t-chimera:free

- tngtech/deepseek-r1t2-chimera:free

- x-ai/grok-4-fast:free

- z-ai/glm-4.5-air:free

Data is used for training when used outside of the UK/CH/EEA/EU.

| Model Name | Model Limits |
|---|---|
| Gemini 2.5 Pro | 3,000,000 tokens/day; 125,000 tokens/minute; 50 requests/day; 2 requests/minute |
| Gemini 2.5 Flash | 250,000 tokens/minute; 250 requests/day; 10 requests/minute |
| Gemini 2.5 Flash-Lite | 250,000 tokens/minute; 1,000 requests/day; 15 requests/minute |
| Gemini 2.0 Flash | 1,000,000 tokens/minute; 200 requests/day; 15 requests/minute |
| Gemini 2.0 Flash-Lite | 1,000,000 tokens/minute; 200 requests/day; 30 requests/minute |
| Gemini 2.0 Flash (Experimental) | 250,000 tokens/minute; 50 requests/day; 10 requests/minute |
| LearnLM 2.0 Flash (Experimental) | 1,500 requests/day; 15 requests/minute |
| Gemma 3 27B Instruct | 15,000 tokens/minute; 14,400 requests/day; 30 requests/minute |
| Gemma 3 12B Instruct | 15,000 tokens/minute; 14,400 requests/day; 30 requests/minute |
| Gemma 3 4B Instruct | 15,000 tokens/minute; 14,400 requests/day; 30 requests/minute |
| Gemma 3 1B Instruct | 15,000 tokens/minute; 14,400 requests/day; 30 requests/minute |
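Comparing each model's requests/minute and requests/day limits shows which one governs sustained use. The sketch below, using hypothetical helpers `spacing_seconds` and `minutes_to_exhaust_daily`, computes the minimum gap between requests that respects the per-minute limit and the gap that spreads the daily quota evenly over 24 hours. It considers request limits only; the token limits in the table apply separately.

```python
# Request limits copied from the table above: (requests/minute, requests/day).
REQUEST_LIMITS = {
    "Gemini 2.5 Pro": (2, 50),
    "Gemini 2.5 Flash": (10, 250),
    "Gemini 2.5 Flash-Lite": (15, 1_000),
    "Gemma 3 27B Instruct": (30, 14_400),
}


def spacing_seconds(rpm: int, rpd: int) -> tuple:
    """Return (minimum gap for the per-minute limit, gap that spreads the daily limit over 24 h)."""
    return 60 / rpm, 86_400 / rpd


def minutes_to_exhaust_daily(rpm: int, rpd: int) -> float:
    """Minutes of back-to-back use at the per-minute limit before the daily limit is reached."""
    return rpd / rpm


for model, (rpm, rpd) in REQUEST_LIMITS.items():
    burst, even = spacing_seconds(rpm, rpd)
    print(f"{model}: >= {burst:.1f} s apart; {even:.1f} s apart to last all day; "
          f"daily limit used up after {minutes_to_exhaust_daily(rpm, rpd):.0f} min at full speed")
```

For Gemini 2.5 Pro, 2 requests/minute allows one call every 30 seconds, but at that pace the 50 requests/day run out after 25 minutes; spreading them across a day means roughly one call every 29 minutes (1,728 seconds).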

Phone number verification required. Models tend to have limited context windows.

Limits: 40 requests/minute

- The free tier (Experiment plan) requires opting in to having your data used for training

- Requires phone number verification.

Limits (per-model): 1 request/second, 500,000 tokens/minute, 1,000,000,000 tokens/month
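These three limits interact. A sketch of the arithmetic, assuming a 30-day month, shows that the monthly token cap binds long before the per-minute cap would; `token_budget_report` is an illustrative helper, not a provider API.

```python
def token_budget_report(requests_per_second: float, tokens_per_minute: int,
                        tokens_per_month: int, days_in_month: int = 30) -> dict:
    """Derive comparable figures from per-second, per-minute and per-month limits."""
    minutes_in_month = days_in_month * 24 * 60
    return {
        # Tokens you could use if you stayed at the per-minute cap all month.
        "tokens_at_minute_cap_for_a_month": tokens_per_minute * minutes_in_month,
        # Minutes at full per-minute throughput before the monthly cap is reached.
        "minutes_to_monthly_cap": tokens_per_month / tokens_per_minute,
        # Average request size above which tokens/minute binds before requests/second.
        "tokens_per_request_break_even": tokens_per_minute / (requests_per_second * 60),
        # Even daily share of the monthly cap.
        "tokens_per_day_even_share": tokens_per_month / days_in_month,
    }


report = token_budget_report(1, 500_000, 1_000_000_000)
for name, value in report.items():
    print(f"{name}: {value:,.0f}")
```

With these limits, the 1,000,000,000-token monthly cap corresponds to about 2,000 minutes (roughly 33 hours) at the full 500,000 tokens/minute, and requests averaging more than about 8,333 tokens hit the token limit before the 1 request/second limit.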

- Currently free to use

- Monthly subscription based

- Requires phone number verification

Limits: 30 requests/minute, 2,000 requests/day

- Codestral
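When a client exceeds a limit such as 30 requests/minute, a common pattern is to retry with exponential backoff and jitter instead of retrying immediately. A minimal sketch, assuming your HTTP client raises an exception on a rate-limit response; `RateLimited` and `call_with_backoff` are hypothetical names, and the 2-second base delay is chosen only because 30 requests/minute works out to one request every 2 seconds.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical exception your client raises when the API rejects a call for rate limiting."""


def call_with_backoff(fn: Callable[[], T], max_attempts: int = 5, base: float = 2.0,
                      cap: float = 60.0, sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on RateLimited with capped exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # give up: repeated refusals may mean the daily limit is spent
            # Full jitter: wait a random time between 0 and min(cap, base * 2**attempt) seconds.
            sleep(random.uniform(0, min(cap, base * 2 ** attempt)))
    raise ValueError("max_attempts must be at least 1")
```

Backoff only helps with the per-minute limit. Once the 2,000 requests/day are used, retries later the same day will keep failing, which is why the function stops after `max_attempts` and re-raises.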

HuggingFace Serverless Inference is limited to models smaller than 10 GB, although some popular models are supported even if they exceed 10 GB.

Limits: $0.10/month in credits

- Various open models across supported providers

Routes to various supported providers.

Limits: $5/month

| Model Name | Requests/minute | Tokens/minute | Requests/hour | Tokens/hour | Requests/day | Tokens/day |
|---|---|---|---|---|---|---|
| gpt-oss-120b | 30 | 60,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
| Qwen 3 235B A22B Instruct | 30 | 60,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
| Qwen 3 235B A22B Thinking | 30 | 60,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
| Qwen 3 Coder 480B | 10 | 150,000 | 100 | 1,000,000 | 100 | 1,000,000 |
| Llama 3.3 70B | 30 | 64,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
| Qwen 3 32B | 30 | 64,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
| Llama 3.1 8B | 30 | 60,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
| Llama 4 Scout | 30 | 60,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
| Llama 4 Maverick | 30 | 60,000 | 900 | 1,000,000 | 14,400 | 1,000,000 |
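Each row combines request and token limits over three windows, so the number of requests you can actually make depends on how many tokens each request uses. A sketch, assuming every request consumes the same number of tokens and that prompt and completion tokens both count toward the limits (an assumption about how they are metered); `WindowLimit` and `max_requests` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WindowLimit:
    requests: int  # maximum requests in the window
    tokens: int    # maximum tokens in the window


# Limits copied from the gpt-oss-120b and Qwen 3 Coder 480B rows above.
GPT_OSS_120B = {
    "minute": WindowLimit(requests=30, tokens=60_000),
    "hour": WindowLimit(requests=900, tokens=1_000_000),
    "day": WindowLimit(requests=14_400, tokens=1_000_000),
}
QWEN3_CODER_480B = {
    "minute": WindowLimit(requests=10, tokens=150_000),
    "hour": WindowLimit(requests=100, tokens=1_000_000),
    "day": WindowLimit(requests=100, tokens=1_000_000),
}


def max_requests(limits: dict, avg_tokens: int) -> dict:
    """Requests allowed per window if every request uses avg_tokens tokens."""
    return {name: min(w.requests, w.tokens // avg_tokens) for name, w in limits.items()}


print(max_requests(GPT_OSS_120B, 2_000))      # {'minute': 30, 'hour': 500, 'day': 500}
print(max_requests(QWEN3_CODER_480B, 2_000))  # {'minute': 10, 'hour': 100, 'day': 100}
```

At 2,000 tokens per request, gpt-oss-120b allows the full 30 requests in a minute, but the 1,000,000-token hourly and daily caps allow only 500 requests, far below the 14,400 requests/day limit. For Qwen 3 Coder 480B the 100 requests/day limit binds instead.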

| Model Name | Model Limits |
|---|---|
| Allam 2 7B | 7,000 requests/day; 6,000 tokens/minute |
| DeepSeek R1 Distill Llama 70B | 1,000 requests/day; 6,000 tokens/minute |
| Gemma 2 9B Instruct | 14,400 requests/day; 15,000 tokens/minute |
| Llama 3.1 8B | 14,400 requests/day; 6,000 tokens/minute |
| Llama 3.3 70B | 1,000 requests/day; 12,000 tokens/minute |
| Llama 4 Maverick 17B 128E Instruct | 1,000 requests/day; 6,000 tokens/minute |
| Llama 4 Scout Instruct | 1,000 requests/day; 30,000 tokens/minute |
| Whisper Large v3 | 7,200 audio-seconds/minute; 2,000 requests/day |
| Whisper Large v3 Turbo | 7,200 audio-seconds/minute; 2,000 requests/day |
| groq/compound | 250 requests/day; 70,000 tokens/minute |
| groq/compound-mini | 250 requests/day; 70,000 tokens/minute |
| meta-llama/llama-guard-4-12b | 14,400 requests/day; 15,000 tokens/minute |
| meta-llama/llama-prompt-guard-2-22m | |
| meta-llama/llama-prompt-guard-2-86m | |
| moonshotai/kimi-k2-instruct | 1,000 requests/day; 10,000 tokens/minute |
| moonshotai/kimi-k2-instruct-0905 | 1,000 requests/day; 10,000 tokens/minute |
| openai/gpt-oss-120b | 1,000 requests/day; 8,000 tokens/minute |
| openai/gpt-oss-20b | 1,000 requests/day; 8,000 tokens/minute |
| qwen/qwen3-32b | 1,000 requests/day; 6,000 tokens/minute |
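Most rows above pair a requests/day limit with a tokens/minute limit. A token bucket is one way to respect a tokens/minute limit on the client: it holds up to one minute's worth of tokens and refills continuously. This is a sketch under the assumption that you can estimate a request's token count before sending it; the provider's own accounting may differ, and `TokenBucket` is an illustrative name.

```python
import time
from typing import Callable


class TokenBucket:
    """Client-side bucket sized to a tokens-per-minute limit."""

    def __init__(self, tokens_per_minute: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = float(tokens_per_minute)  # at most one minute's worth of tokens
        self.rate = tokens_per_minute / 60.0      # refill speed in tokens per second
        self.level = self.capacity                # start full
        self.clock = clock
        self.last = clock()

    def try_consume(self, n: int) -> bool:
        """Spend n tokens if available; requests larger than capacity never fit."""
        now = self.clock()
        # Refill for the time elapsed since the last call, never beyond capacity.
        self.level = min(self.capacity, self.level + (now - self.last) * self.rate)
        self.last = now
        if n <= self.level:
            self.level -= n
            return True
        return False


bucket = TokenBucket(6_000)        # Llama 3.1 8B row: 6,000 tokens/minute
print(bucket.try_consume(5_000))   # True
print(bucket.try_consume(2_000))   # False: only about 1,000 tokens left
```

With 6,000 tokens/minute the bucket refills at 100 tokens per second, so a 2,000-token request refused while the bucket holds 1,000 tokens fits again about 10 seconds later. The requests/day limit would still need its own counter.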

Limits: Up to 60 requests/minute

Limits:

- 20 requests/minute

- 1,000 requests/month

Models share a common quota.

- Command-A

- Command-R7B

- Command-R+

- Command-R

- Aya Expanse 8B

- Aya Expanse 32B

- Aya Vision 8B

- Aya Vision 32B
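The 1,000 requests/month quota is shared across these models, so a single monthly counter can sit in front of every call. A minimal sketch, assuming the count resets at the start of each calendar month in UTC (check the provider's documentation for its actual reset rule); `MonthlyQuota` is a hypothetical name, and the 20 requests/minute limit would need a separate limiter.

```python
from datetime import datetime, timezone
from typing import Optional


class MonthlyQuota:
    """Counts requests per calendar month (UTC), shared by all models on the account."""

    def __init__(self, per_month: int = 1_000) -> None:
        self.per_month = per_month
        self.month: Optional[tuple] = None  # (year, month) currently being counted
        self.used = 0

    def try_acquire(self, now: Optional[datetime] = None) -> bool:
        """Allow and count a request if this month's quota is not used up."""
        now = now or datetime.now(timezone.utc)
        key = (now.year, now.month)
        if key != self.month:  # new month: reset the counter (assumed reset rule)
            self.month, self.used = key, 0
        if self.used >= self.per_month:
            return False
        self.used += 1
        return True


quota = MonthlyQuota()
print(quota.try_acquire())  # True on a fresh counter
```

Spread evenly over a 30-day month, 1,000 requests is about 33 per day.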

Extremely restrictive input/output token limits.

Limits: Dependent on Copilot subscription tier (Free/Pro/Pro+/Business/Enterprise)

- AI21 Jamba 1.5 Large

- AI21 Jamba 1.5 Mini

- Codestral 25.01

- Cohere Command A

- Cohere Command R 08-2024

- Cohere Command R+ 08-2024

- Cohere Embed v3 English

- Cohere Embed v3 Multilingual

- DeepSeek-R1

- DeepSeek-R1-0528

- DeepSeek-V3-0324

- Grok 3

- Grok 3 Mini

- JAIS 30b Chat

- Llama 4 Maverick 17B 128E Instruct FP8

- Llama 4 Scout 17B 16E Instruct

- Llama-3.2-11B-Vision-Instruct

- Llama-3.2-90B-Vision-Instruct

- Llama-3.3-70B-Instruct

- MAI-DS-R1

- Meta-Llama-3.1-405B-Instruct

- Meta-Llama-3.1-8B-Instruct

- Ministral 3B

- Mistral Large 24.11

- Mistral Medium 3 (25.05)

- Mistral Nemo

- Mistral Small 3.1

- OpenAI GPT-4.1

- OpenAI GPT-4.1-mini

- OpenAI GPT-4.1-nano

- OpenAI GPT-4o

- OpenAI GPT-4o mini

- OpenAI Text Embedding 3 (large)

- OpenAI Text Embedding 3 (small)

- OpenAI gpt-5

- OpenAI gpt-5-chat (preview)

- OpenAI gpt-5-mini

- OpenAI gpt-5-nano

- OpenAI o1

- OpenAI o1-mini

- OpenAI o1-preview

- OpenAI o3

- OpenAI o3-mini

- OpenAI o4-mini

- Phi-4

- Phi-4-mini-instruct

- Phi-4-mini-reasoning

- Phi-4-multimodal-instruct

- Phi-4-reasoning

Limits: 10,000 neurons/day

- @cf/aisingapore/gemma-sea-lion-v4-27b-it

- @cf/openai/gpt-oss-120b

- @cf/openai/gpt-oss-20b

- DeepSeek R1 Distill Qwen 32B

- Deepseek Coder 6.7B Base (AWQ)

- Deepseek Coder 6.7B Instruct (AWQ)

- Deepseek Math 7B Instruct

- Discolm German 7B v1 (AWQ)

- Falcon 7B Instruct

- Gemma 2B Instruct (LoRA)

- Gemma 3 12B Instruct

- Gemma 7B Instruct

- Gemma 7B Instruct (LoRA)

- Hermes 2 Pro Mistral 7B

- Llama 2 13B Chat (AWQ)

- Llama 2 7B Chat (FP16)

- Llama 2 7B Chat (INT8)

- Llama 2 7B Chat (LoRA)

- Llama 3 8B Instruct

- Llama 3 8B Instruct (AWQ)

- Llama 3.1 8B Instruct (AWQ)

- Llama 3.1 8B Instruct (FP8)

- Llama 3.2 11B Vision Instruct

- Llama 3.2 1B Instruct

- Llama 3.2 3B Instruct

- Llama 3.3 70B Instruct (FP8)

- Llama 4 Scout Instruct

- Llama Guard 3 8B

- LlamaGuard 7B (AWQ)

- Mistral 7B Instruct v0.1

- Mistral 7B Instruct v0.1 (AWQ)

- Mistral 7B Instruct v0.2

- Mistral 7B Instruct v0.2 (LoRA)

- Mistral Small 3.1 24B Instruct

- Neural Chat 7B v3.1 (AWQ)

- OpenChat 3.5 0106

- OpenHermes 2.5 Mistral 7B (AWQ)

- Phi-2

- Qwen 1.5 0.5B Chat

- Qwen 1.5 1.8B Chat

- Qwen 1.5 14B Chat (AWQ)

- Qwen 1.5 7B Chat (AWQ)

- Qwen 2.5 Coder 32B Instruct

- Qwen QwQ 32B

- SQLCoder 7B 2

- Starling LM 7B Beta

- TinyLlama 1.1B Chat v1.0

- Una Cybertron 7B v2 (BF16)

- Zephyr 7B Beta (AWQ)

Very stringent payment verification for Google Cloud.

| Model Name | Model Limits |
|---|---|
| Llama 3.2 90B Vision Instruct | 30 requests/minute; free during preview |
| Llama 3.1 70B Instruct | 60 requests/minute; free during preview |
| Llama 3.1 8B Instruct | 60 requests/minute; free during preview |

Credits: $1 when you add a payment method

Models: Various open models

Credits: $1

Models: Various open models

Credits: $30

Models: Any supported model - pay by compute time
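For credits billed by compute time, the practical question is how many hours a balance covers. A sketch; the $1.50/hour rate below is a placeholder you would replace with the provider's actual price for the hardware you choose, not a figure from this list, and per-second billing is an assumption.

```python
def hours_covered(credits_usd: float, price_per_hour_usd: float) -> float:
    """Hours of billed compute that a credit balance pays for."""
    if price_per_hour_usd <= 0:
        raise ValueError("price_per_hour_usd must be positive")
    return credits_usd / price_per_hour_usd


def credits_left(credits_usd: float, price_per_hour_usd: float, seconds_used: float) -> float:
    """Remaining balance after seconds_used of compute, assuming per-second billing."""
    return credits_usd - price_per_hour_usd * seconds_used / 3600


# Hypothetical rate of $1.50/hour; replace with the provider's real price.
print(hours_covered(30, 1.50))           # 20.0
print(credits_left(30, 1.50, 4 * 3600))  # 24.0
```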

Credits: $1

Models: Various open models

Credits: $0.50 for 1 year

Models: Various open models

Credits: $10 for 3 months

Models: Jamba family of models

Credits: $10 for 3 months

Models: Solar Pro/Mini

Credits: $15

Requirements: Phone number verification

Models: Various open models

Credits: 1 million tokens/model

Models: Various open and proprietary Qwen models

Credits: $5/month upon sign up, $30/month with payment method added

Models: Any supported model - pay by compute time

Credits: $1, or $25 after responding to an email survey

Models: Various open models

Credits: $1

Models: Various open models

Credits: $1

Models:

- DeepSeek V3

- DeepSeek V3 0324

- Hermes 3 Llama 3.1 70B

- Llama 3 70B Instruct

- Llama 3.1 405B Base (FP8)

- Llama 3.1 405B Instruct

- Llama 3.1 70B Instruct

- Llama 3.1 8B Instruct

- Llama 3.2 3B Instruct

- Llama 3.3 70B Instruct

- Pixtral 12B (2409)

- Qwen QwQ 32B

- Qwen2.5 72B Instruct

- Qwen2.5 Coder 32B Instruct

- Qwen2.5 VL 72B Instruct

- Qwen2.5 VL 7B Instruct

- deepseek-ai/deepseek-r1-0528

- openai/gpt-oss-120b

- openai/gpt-oss-120b-turbo

- openai/gpt-oss-20b

- qwen/qwen3-235b-a22b

- qwen/qwen3-235b-a22b-instruct-2507-fp8

- qwen/qwen3-coder-480b-a35b-instruct-fp8

- qwen/qwen3-next-80b-a3b-instruct

- qwen/qwen3-next-80b-a3b-thinking

Credits: $5 for 3 months

Models:

- E5-Mistral-7B-Instruct

- Llama 3.1 8B

- Llama 3.3 70B

- Llama 3.3 70B

- Llama-4-Maverick-17B-128E-Instruct

- Qwen/Qwen3-32B

- Whisper-Large-v3

- deepseek-ai/DeepSeek-R1-0528

- deepseek-ai/DeepSeek-R1-Distill-Llama-70B

- deepseek-ai/DeepSeek-V3-0324

- deepseek-ai/DeepSeek-V3.1

- deepseek-ai/DeepSeek-V3.1-Terminus

- openai/gpt-oss-120b

Credits: 1,000,000 free tokens

Models:

- BGE-Multilingual-Gemma2

- DeepSeek R1 Distill Llama 70B

- Gemma 3 27B Instruct

- Llama 3.1 8B Instruct

- Llama 3.3 70B Instruct

- Mistral Nemo 2407

- Mistral Small 3.1 24B Instruct 2503

- Pixtral 12B (2409)

- Qwen2.5 Coder 32B Instruct

- devstral-small-2505

- gpt-oss-120b

- mistral-small-3.2-24b-instruct-2506

- qwen3-235b-a22b-instruct-2507

- qwen3-coder-30b-a3b-instruct

- voxtral-small-24b-2507


Alternative AI tools for free-llm-api-resources

Similar Open Source Tools


public

This public repository contains API, tools, and packages for Datagrok, a web-based data analytics platform. It offers support for scientific domains, applications, connectors to web services, visualizations, file importing, scientific methods in R, Python, or Julia, file metadata extractors, custom predictive models, platform enhancements, and more. The open-source packages are free to use, with restrictions on server computational capacities for the public environment. Academic institutions can use Datagrok for research and education, benefiting from reproducible and scalable computations and data augmentation capabilities. Developers can contribute by creating visualizations, scientific methods, file editors, connectors to web services, and more.

oramacore

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced search capabilities in AI applications.

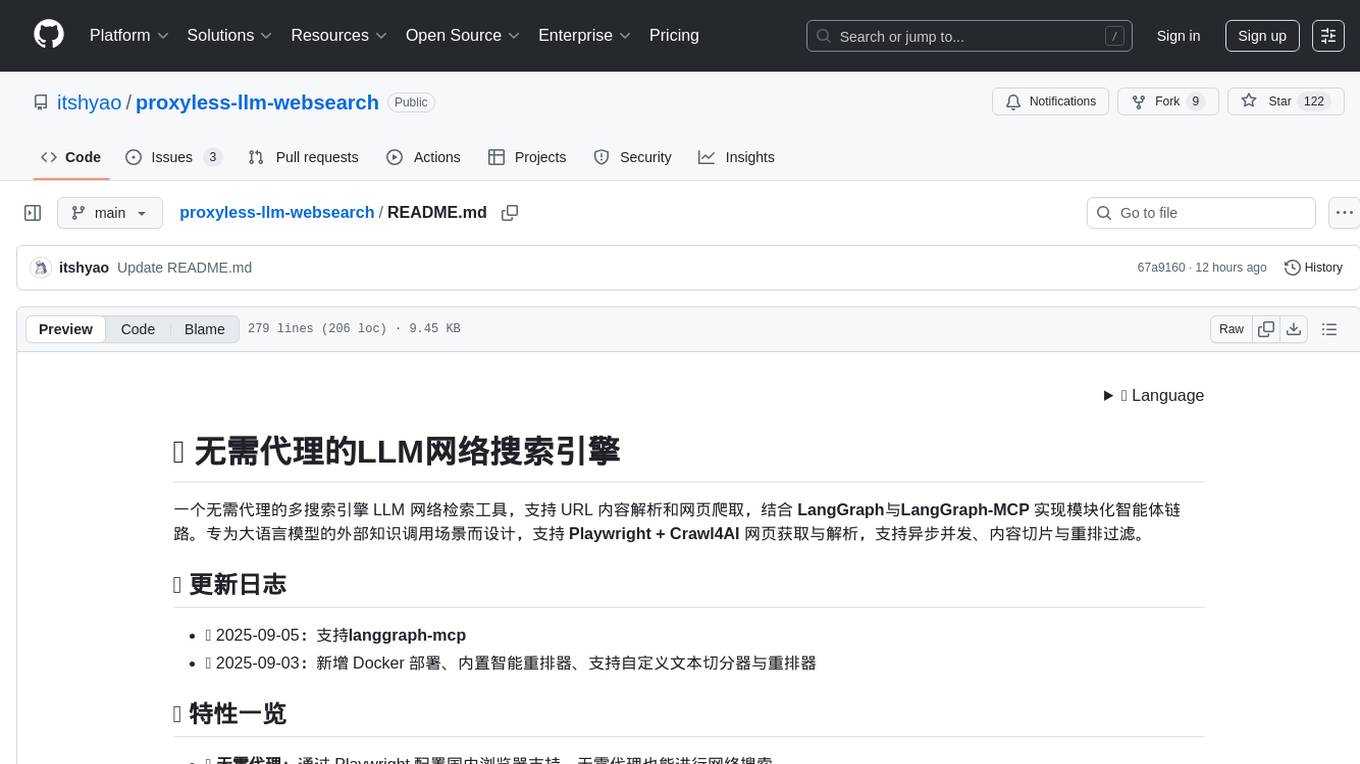

proxyless-llm-websearch

Proxyless-LLM-WebSearch is a tool that enables users to perform large language model-based web search without the need for proxies. It leverages state-of-the-art language models to provide accurate and efficient web search results. The tool is designed to be user-friendly and accessible for individuals looking to conduct web searches at scale. With Proxyless-LLM-WebSearch, users can easily search the web using natural language queries and receive relevant results in a timely manner. This tool is particularly useful for researchers, data analysts, content creators, and anyone interested in leveraging advanced language models for web search tasks.

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

OpenAI

OpenAI is a community-maintained Swift implementation of the OpenAI public API. OpenAI itself is an artificial intelligence research organization founded as a non-profit in San Francisco, California, in 2015, whose mission is to ensure safe and responsible use of AI for civic good, economic growth, and other public benefits. The repository provides functionality for text completions, chats, image generation, audio processing, edits, embeddings, models, moderations, utilities, and Combine extensions.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

BentoVLLM

BentoVLLM is an example project demonstrating how to serve and deploy open-source Large Language Models using vLLM, a high-throughput and memory-efficient inference engine. It provides a basis for advanced code customization, such as custom models, inference logic, or vLLM options. The project allows for simple LLM hosting with OpenAI compatible endpoints without the need to write any code. Users can interact with the server using Swagger UI or other methods, and the service can be deployed to BentoCloud for better management and scalability. Additionally, the repository includes integration examples for different LLM models and tools.

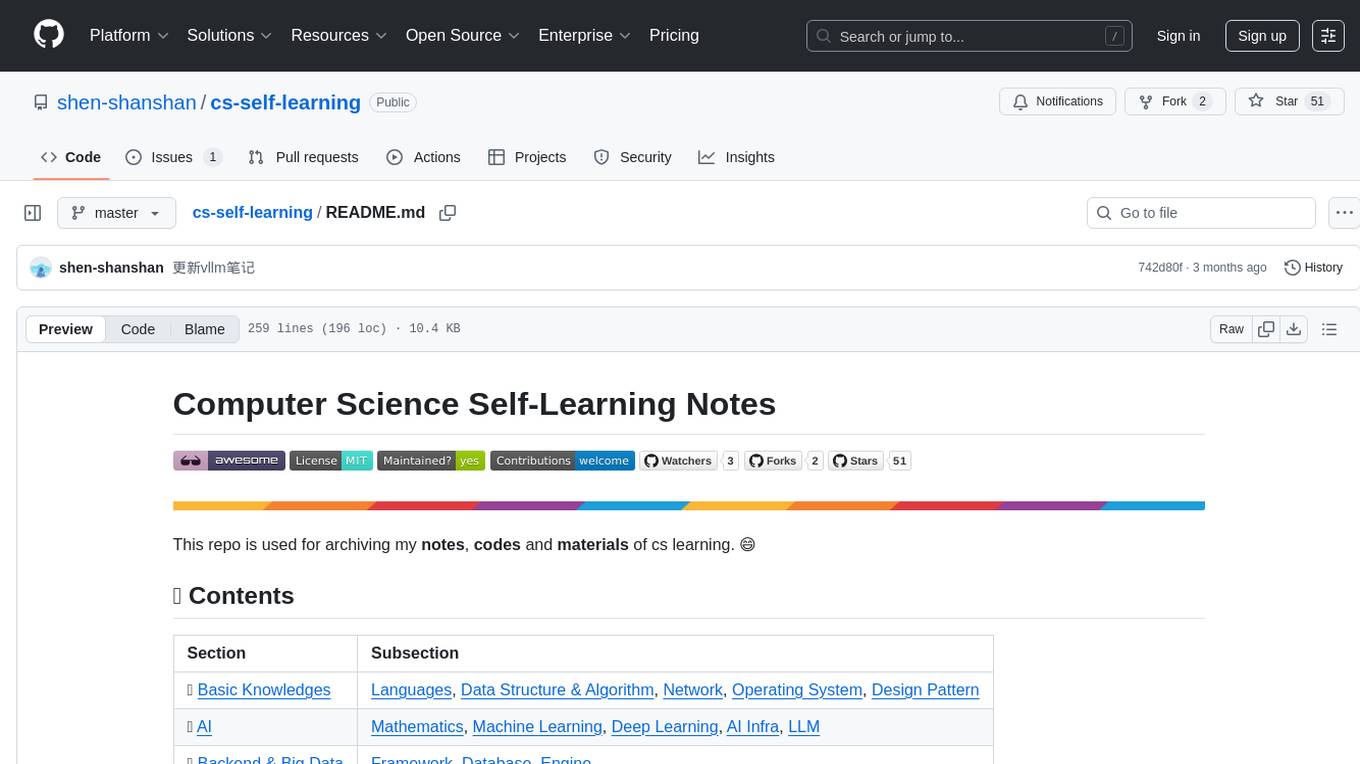

cs-self-learning

This repository serves as an archive for computer science learning notes, codes, and materials. It covers a wide range of topics including basic knowledge, AI, backend & big data, tools, and other related areas. The content is organized into sections and subsections for easy navigation and reference. Users can find learning resources, programming practices, and tutorials on various subjects such as languages, data structures & algorithms, AI, frameworks, databases, development tools, and more. The repository aims to support self-learning and skill development in the field of computer science.

aishare

Aishare is a collaborative platform for sharing AI models and datasets. It allows users to upload, download, and explore various AI models and datasets. Users can also rate and comment on the shared resources, providing valuable feedback to the community. Aishare aims to foster collaboration and knowledge sharing in the field of artificial intelligence.

AgentsMeetRL

AgentsMeetRL is an awesome list that summarizes open-source repositories for training LLM Agents using reinforcement learning. The criteria for identifying an agent project are multi-turn interactions or tool use. The project is based on code analysis from open-source repositories using GitHub Copilot Agent. The focus is on reinforcement learning frameworks, RL algorithms, rewards, and environments that projects depend on, for everyone's reference on technical choices.

ai

This repository contains a collection of AI algorithms and models for various machine learning tasks. It provides implementations of popular algorithms such as neural networks, decision trees, and support vector machines. The code is well-documented and easy to understand, making it suitable for both beginners and experienced developers. The repository also includes example datasets and tutorials to help users get started with building and training AI models. Whether you are a student learning about AI or a professional working on machine learning projects, this repository can be a valuable resource for your development journey.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

llmgateway

The llmgateway repository provides a gateway for interacting with various large language models (LLMs). It allows users to easily access and use pre-trained language models for tasks such as text generation, sentiment analysis, and language translation. The tool simplifies integrating LLMs into applications and workflows, enabling developers to use state-of-the-art language models for various natural language processing tasks.

awesome-LLM-resources

This repository is a curated list of resources for learning and working with Large Language Models (LLMs). It includes a collection of articles, tutorials, tools, datasets, and research papers related to LLMs such as GPT-3, BERT, and Transformer models. Whether you are a researcher, developer, or enthusiast interested in natural language processing and artificial intelligence, this repository provides valuable resources to help you understand, implement, and experiment with LLMs.

enterprise-h2ogpte

Enterprise h2oGPTe - GenAI RAG is a repository containing code examples, notebooks, and benchmarks for the enterprise version of h2oGPTe, a powerful AI tool for generating text based on the RAG (Retrieval-Augmented Generation) architecture. The repository provides resources for leveraging h2oGPTe in enterprise settings, including implementation guides, performance evaluations, and best practices. Users can explore various applications of h2oGPTe in natural language processing tasks, such as text generation, content creation, and conversational AI.

For similar tasks


Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions.

- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl.

- The Data SRE team maintains a WTMO Developer Guide (behind SSO).

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI uses Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for an AI virtual streamer (VTuber), in a version for NVIDIA GPUs ("N card" version). It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. Features include: replying to Bilibili live-stream bullet comments and greeting viewers who join the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through Vtuber Studio; painting with stable-diffusion-webui and showing the output in an OBS live-stream scene, with NSFW screening of generated images via public-NSFW-y-distinguish; web and image search via DuckDuckGo (requires a proxy or VPN, "magic Internet access") and image search via Baidu (no proxy needed); an AI reply chat box and a playlist (both HTML plug-ins); AI singing with Auto-Convert-Music; dancing, expression video playback, head-pat actions, and actions triggered by gifts; automatically dancing when singing starts, and automatic swaying during chat and singing; multi-scene switching, background music switching, and automatic day/night scene switching; and singing and painting on request, with the AI automatically judging the content.