Best AI tools for "Protect Against Indecent Content"

20 - AI tool Sites

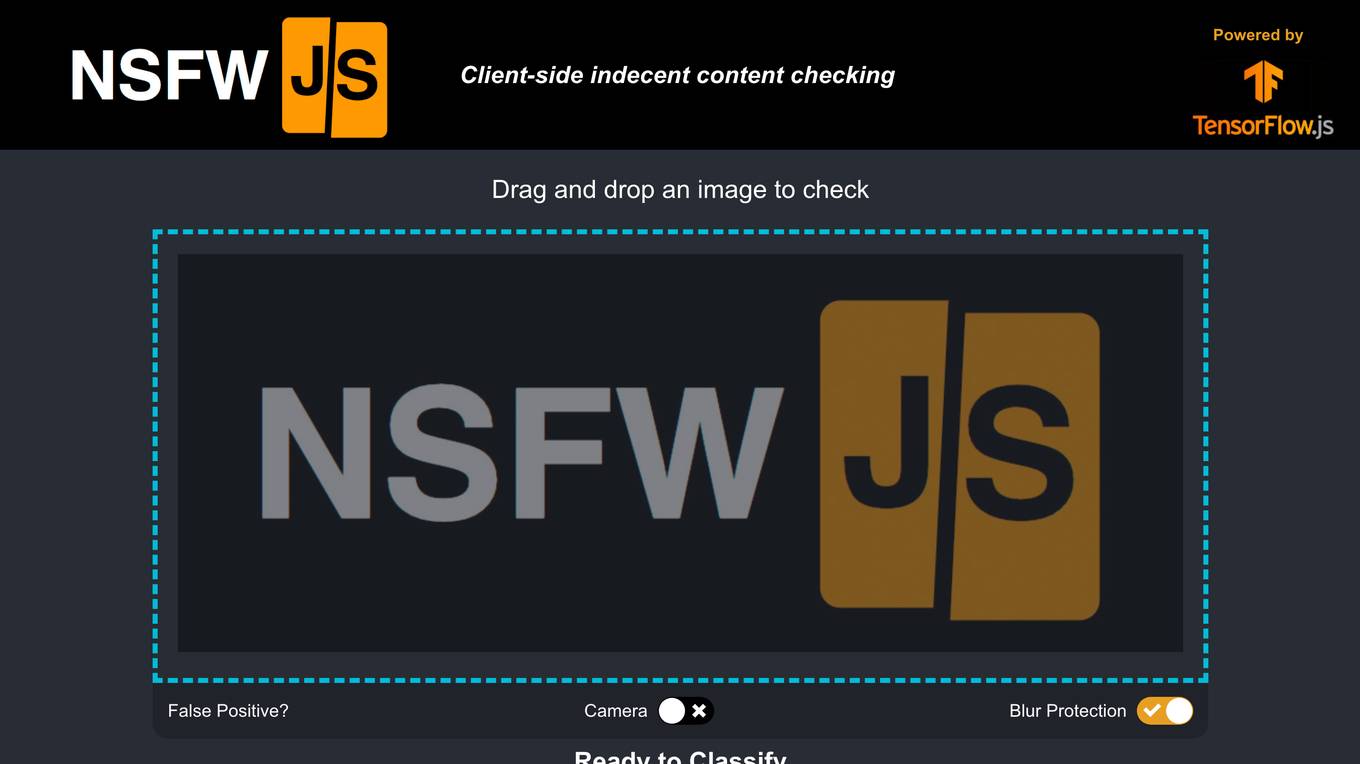

NSFW JS

NSFW JS is an AI tool for client-side indecent content checking. Users drag and drop an image to have it analyzed for inappropriate content. The tool claims an accuracy rate of 93% and offers camera blur protection. It is developed by Infinite Red, Inc. and is aimed at classifying images to support online safety.

Blackbird.AI

Blackbird.AI is a narrative and risk intelligence platform that helps organizations identify and protect against narrative attacks created by misinformation and disinformation. The platform offers a range of solutions tailored to different industries and roles, enabling users to analyze threats in text, images, and memes across various sources such as social media, news, and the dark web. By providing context and clarity for strategic decision-making, Blackbird.AI empowers organizations to proactively manage and mitigate the impact of narrative attacks on their reputation and financial stability.

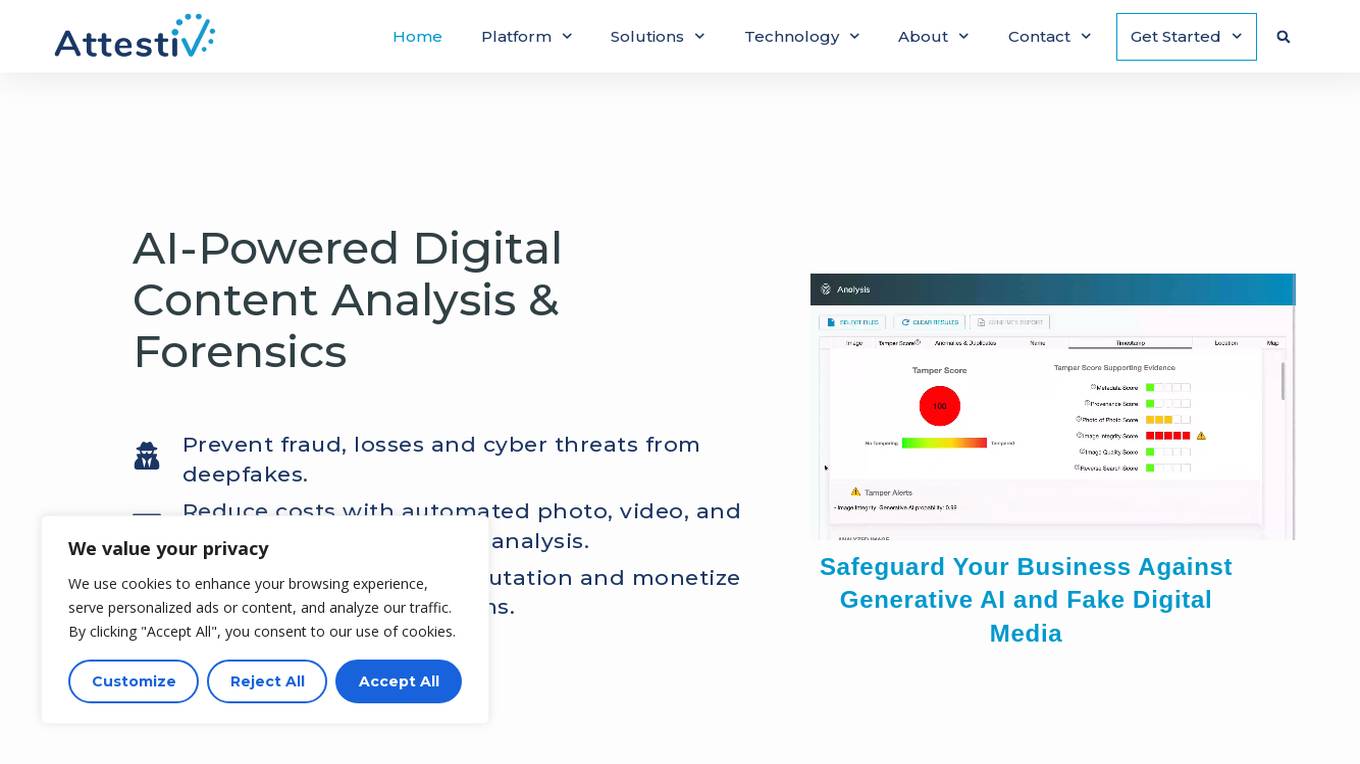

Attestiv

Attestiv is an AI-powered digital content analysis and forensics platform that offers solutions to prevent fraud, losses, and cyber threats from deepfakes. The platform helps in reducing costs through automated photo, video, and document inspection and analysis, protecting company reputation, and monetizing trust in secure systems. Attestiv's technology provides validation and authenticity for all digital assets, safeguarding against altered photos, videos, and documents that are increasingly easy to create but difficult to detect. The platform uses patented AI technology to ensure the authenticity of uploaded media and offers sector-agnostic solutions for various industries.

Hiya

Hiya is an AI-powered caller ID, call blocker, and protection application that enhances voice communication experiences. It helps users identify incoming calls, block spam and fraud, and protect against AI voice fraud and scams. Hiya offers solutions for businesses, carriers, and consumers, with features like branded caller ID, spam detection, call filtering, and more. With a global reach and a user base of over 450 million, Hiya aims to bring trust, identity, and intelligence back to phone calls.

Vibe AI

Vibe AI is an AI-powered cybersecurity product designed to secure business data and digital assets. It offers real-time alert notifications and developer-friendly APIs to protect against suspicious activities. With military-grade security features and a pay-as-you-go subscription model, Vibe AI provides a comprehensive security solution for businesses.

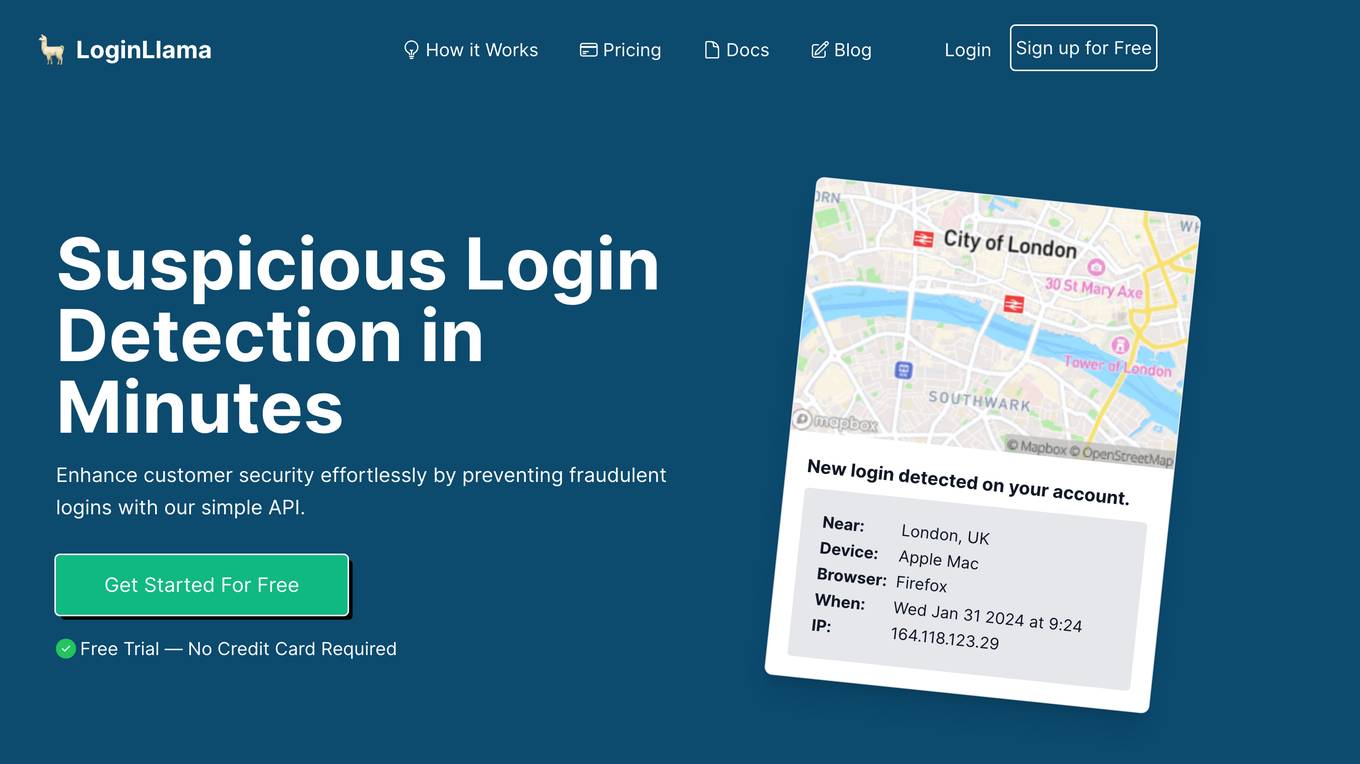

LoginLlama

LoginLlama is an AI-powered suspicious login detection tool designed for developers to enhance customer security effortlessly by preventing fraudulent logins. It provides real-time fraud detection, AI-powered login behavior insights, and easy integration through SDK and API. By evaluating login attempts based on multiple ranking factors and historic behavior analysis, LoginLlama helps protect against unauthorized access, account takeover, credential stuffing, phishing attacks, and insider threats. The tool is user-friendly, offering a simple API for developers to add security checks to their apps with just a few lines of code.

Breacher.ai

Breacher.ai is an AI-powered cybersecurity solution that specializes in deepfake detection and protection. It offers a range of services to help organizations guard against deepfake attacks, including deepfake phishing simulations, awareness training, micro-curriculum, educational videos, and certification. The platform combines advanced AI technology with expert knowledge to detect, educate, and protect against deepfake threats, ensuring the security of employees, assets, and reputation. Breacher.ai's fully managed service and seamless integration with existing security measures provide a comprehensive defense strategy against deepfake attacks.

Prompt Security

Prompt Security is a platform that secures all uses of Generative AI in the organization: from tools used by your employees to your customer-facing apps.

Hive Defender

Hive Defender is an advanced, machine-learning-powered DNS security service that offers comprehensive protection against a vast array of cyber threats including but not limited to cryptojacking, malware, DNS poisoning, phishing, typosquatting, ransomware, zero-day threats, and DNS tunneling. Hive Defender transcends traditional cybersecurity boundaries, offering multi-dimensional protection that monitors both your browser traffic and the entirety of your machine’s network activity.

CrowdStrike

CrowdStrike is a leading cybersecurity platform that uses artificial intelligence (AI) to protect businesses from cyber threats. The platform provides a unified approach to security, combining endpoint security, identity protection, cloud security, and threat intelligence into a single solution. CrowdStrike's AI-powered technology enables it to detect and respond to threats in real-time, providing businesses with the protection they need to stay secure in the face of evolving threats.

Robust Intelligence

Robust Intelligence is an end-to-end solution for securing AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

RTB House

RTB House is a global leader in online ad campaigns, offering a range of AI-powered solutions to help businesses drive sales and engage with customers. Their technology leverages deep learning to optimize ad campaigns, providing personalized retargeting, branding, and fraud protection. RTB House works with agencies and clients across various industries, including fashion, electronics, travel, and multi-category retail.

medium.engineering

medium.engineering is a website that focuses on verifying the security of user connections before allowing access to its content. It ensures that users are human by conducting a verification process that may take a few seconds. The site emphasizes the importance of enabling JavaScript and cookies for a seamless browsing experience. Powered by Cloudflare, medium.engineering prioritizes performance and security to provide a safe online environment for its visitors.

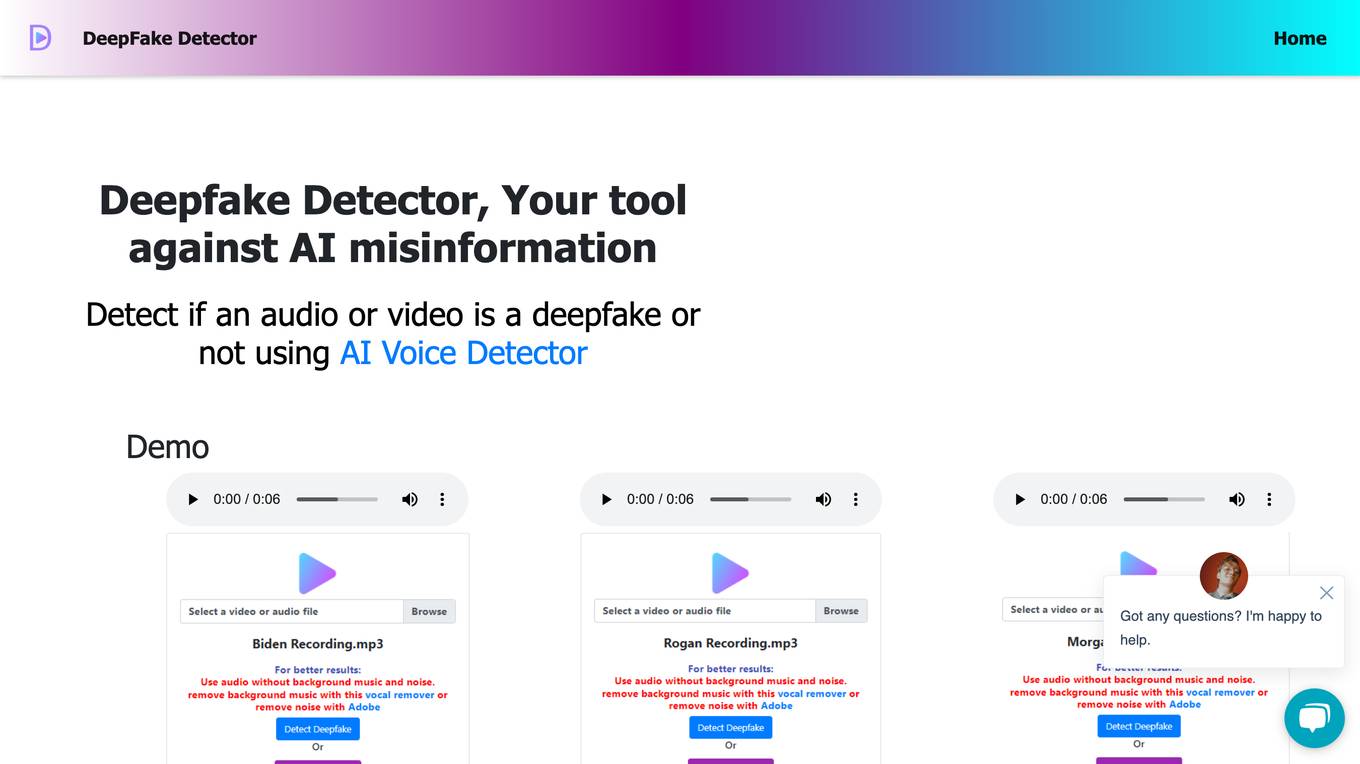

Deepfake Detector

Deepfake Detector is an AI tool designed to identify deepfakes in audio and video files. It offers features such as background noise and music removal, audio and video file analysis, and browser extension integration. The tool helps individuals and businesses protect themselves against deepfake scams by providing accurate detection and filtering of AI-generated content. With a focus on authenticity and reliability, Deepfake Detector aims to prevent financial losses and fraudulent activities caused by deepfake technology.

AI Scam Detective

AI Scam Detective is an AI tool designed to help users detect and prevent online scams. Users paste a message or conversation into the provided box and receive a scam likelihood score from 1 to 10. The tool aims to help individuals identify potential scams and protect themselves from fraud. It was created by Sam Meehan.

Turing.school

Turing.school is a website that focuses on verifying human users for security purposes. It ensures that the connection is secure before proceeding with any actions on the site. Users may encounter a brief waiting period while the verification process takes place. The site utilizes JavaScript and cookies to enhance security measures. Additionally, it employs Cloudflare for performance and security enhancements.

Tweetify.it

Tweetify.it is a website that verifies the user's human identity before proceeding. It ensures security by reviewing the connection and requires enabling JavaScript and cookies for further interaction. The site is powered by Cloudflare for performance and security purposes.

glasp.co

The website glasp.co is a security service powered by Cloudflare to protect against online attacks. It helps in preventing unauthorized access and malicious activities on websites by blocking potential threats. Users may encounter a block when triggering certain actions like submitting specific words or phrases, SQL commands, or malformed data. In such cases, they can contact the site owner for resolution by providing details of the incident and the Cloudflare Ray ID. Cloudflare's performance and security features ensure a safe browsing experience for visitors.

Playlab.ai

Playlab.ai is an AI-powered platform that offers a range of tools and applications to enhance online security and protect against cyber attacks. The platform utilizes advanced algorithms to detect and prevent various online threats, such as malicious attacks, SQL injections, and data breaches. Playlab.ai provides users with a secure and reliable online environment by offering real-time monitoring and protection services. With a user-friendly interface and customizable security settings, Playlab.ai is a valuable tool for individuals and businesses looking to safeguard their online presence.

Robust Intelligence

Robust Intelligence is an end-to-end security solution for AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

20 - Open Source AI Tools

openshield

OpenShield is a firewall designed for AI models to protect against various attacks such as prompt injection, insecure output handling, training data poisoning, model denial of service, supply chain vulnerabilities, sensitive information disclosure, insecure plugin design, excessive agency granting, overreliance, and model theft. It provides rate limiting, content filtering, and keyword filtering for AI models. The tool acts as a transparent proxy between AI models and clients, allowing users to set custom rate limits for OpenAI endpoints and perform tokenizer calculations for OpenAI models. OpenShield also supports Python and LLM based rules, with upcoming features including rate limiting per user and model, prompts manager, content filtering, keyword filtering based on LLM/Vector models, OpenMeter integration, and VectorDB integration. The tool requires an OpenAI API key, Postgres, and Redis for operation.
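The per-client rate limiting mentioned above can be illustrated with a small sliding-window limiter. This is a generic sketch, not OpenShield's implementation: the class name `SlidingWindowLimiter`, its parameters, and the in-memory storage are assumptions made for illustration (the project itself lists Postgres and Redis as requirements).

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per `window` seconds for each key.

    Hypothetical helper for illustration only; not part of OpenShield.
    """

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the limiter can be tested
        self.hits = defaultdict(deque)  # key -> timestamps of recent requests

    def allow(self, key):
        now = self.clock()
        recent = self.hits[key]
        # Drop timestamps that have fallen out of the window.
        while recent and now - recent[0] >= self.window:
            recent.popleft()
        if len(recent) >= self.limit:
            return False  # over the limit: the proxy would reject the call
        recent.append(now)
        return True
```

A proxy would call `allow(client_id)` before forwarding each request to the model endpoint and return an error response when it yields `False`.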

aif

Arno's Iptables Firewall (AIF) is a single- and multi-homed firewall script with DSL/ADSL support. It is free software distributed under the GNU GPL. The script provides a comprehensive set of configuration files and plugins for setting up and managing firewall rules, including support for NAT, load balancing, and multirouting. It offers detailed instructions for installation and configuration, emphasizing security best practices and caution when modifying settings. The script is designed to protect against hostile attacks by blocking all incoming traffic by default and allowing users to configure specific rules for open ports and network interfaces.

generative-ai-dart

The Google Generative AI SDK for Dart enables developers to utilize cutting-edge Large Language Models (LLMs) for creating language applications. It provides access to the Gemini API for generating content using state-of-the-art models. Developers can integrate the SDK into their Dart or Flutter applications to leverage powerful AI capabilities. It is recommended to use the SDK for server-side API calls to ensure the security of API keys and protect against potential key exposure in mobile or web apps.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

spring-ai

The Spring AI project provides a Spring-friendly API and abstractions for developing AI applications. It offers a portable client API for interacting with generative AI models, enabling developers to easily swap out implementations and access various models like OpenAI, Azure OpenAI, and HuggingFace. Spring AI also supports prompt engineering, providing classes and interfaces for creating and parsing prompts, as well as incorporating proprietary data into generative AI without retraining the model. This is achieved through Retrieval Augmented Generation (RAG), which involves extracting, transforming, and loading data into a vector database for use by AI models. Spring AI's VectorStore abstraction allows for seamless transitions between different vector database implementations.

galah

Galah is an LLM-powered web honeypot designed to mimic various applications and dynamically respond to arbitrary HTTP requests. It supports multiple LLM providers, including OpenAI. Unlike traditional web honeypots, Galah dynamically crafts responses for any HTTP request, caching them to reduce repetitive generation and API costs. The honeypot's configuration is crucial, directing the LLM to produce responses in a specified JSON format. Note that Galah is a weekend project exploring LLM capabilities and not intended for production use, as it may be identifiable through network fingerprinting and non-standard responses.
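The caching idea described above (generate a response once per distinct request, then reuse it) can be sketched as follows. This is a minimal illustration, not Galah's code: `ResponseCache`, the `generate` callable, and the choice of method plus path as the cache key are all assumptions.

```python
import hashlib


class ResponseCache:
    """Cache generated responses so repeated requests skip the LLM call.

    Hypothetical helper for illustration only; not part of Galah.
    """

    def __init__(self, generate):
        # `generate` is an assumed callable: (method, path) -> response body.
        self.generate = generate
        self.store = {}

    def _key(self, method, path):
        # Normalize the method so "get" and "GET" share one cache entry.
        raw = f"{method.upper()} {path}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def respond(self, method, path):
        key = self._key(method, path)
        if key not in self.store:
            # Cache miss: pay for one generation and remember the result.
            self.store[key] = self.generate(method, path)
        return self.store[key]
```

Keying on method and path alone is a design choice for brevity; a real honeypot might also include the port, headers, or body in the key.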

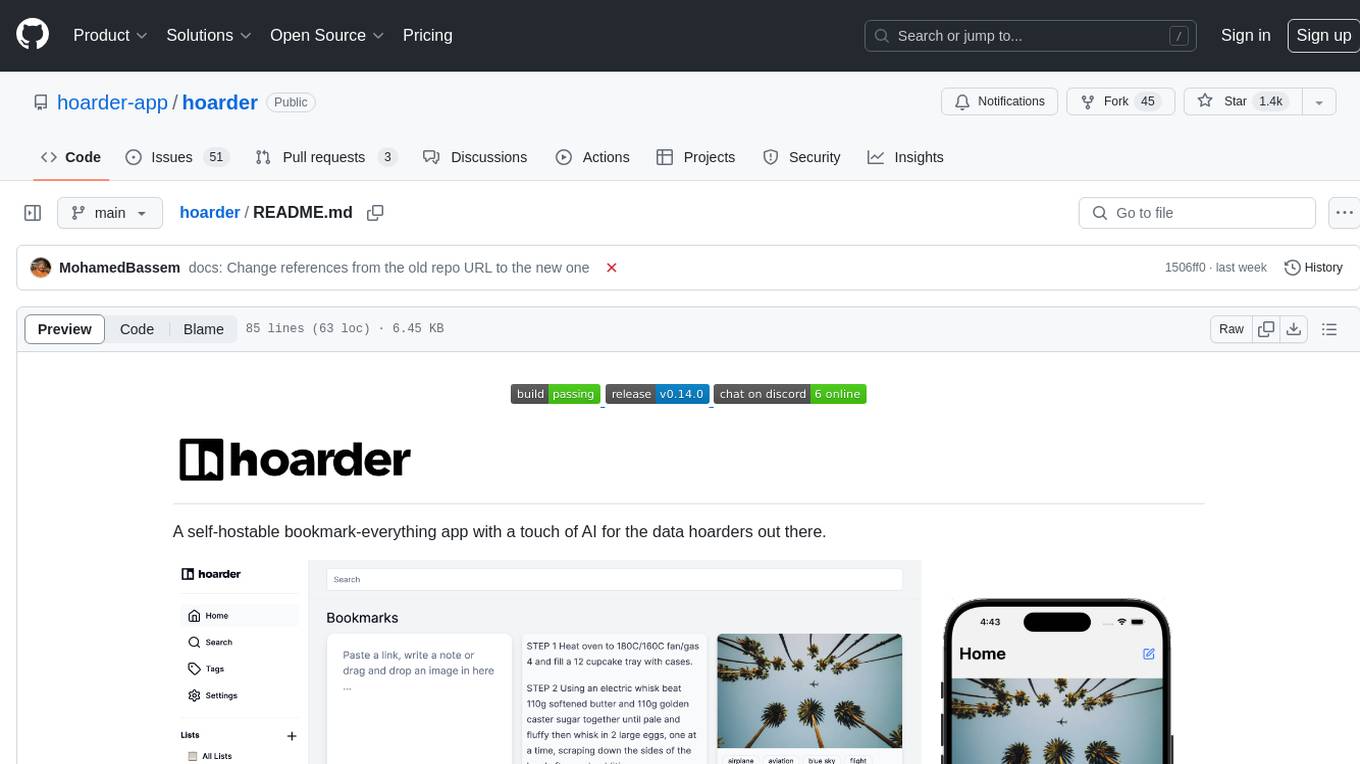

hoarder

A self-hostable bookmark-everything app with a touch of AI for data hoarders. Features include bookmarking links, taking notes, storing images, automatic fetching for link details, full-text search, AI-based automatic tagging, Chrome and Firefox plugins, iOS and Android apps, dark mode support, and self-hosting. Built to address the need for archiving and previewing links with automatic tagging. Developed by a systems engineer to stay connected with web development and cater to personal use cases.

promptulate

**Promptulate** is an AI Agent application development framework crafted by **Cogit Lab**, which offers developers an extremely concise and efficient way to build Agent applications through a Pythonic development paradigm. The core philosophy of Promptulate is to borrow and integrate the wisdom of the open-source community, incorporating the highlights of various development frameworks to lower the barrier to entry and unify the consensus among developers. With Promptulate, you can manipulate components like LLM, Agent, Tool, RAG, etc., with the most succinct code, as most tasks can be easily completed with just a few lines of code. 🚀

llm-app-stack

LLM App Stack, also known as Emerging Architectures for LLM Applications, is a comprehensive list of available tools, projects, and vendors at each layer of the LLM app stack. It covers various categories such as Data Pipelines, Embedding Models, Vector Databases, Playgrounds, Orchestrators, APIs/Plugins, LLM Caches, Logging/Monitoring/Eval, Validators, LLM APIs (proprietary and open source), App Hosting Platforms, Cloud Providers, and Opinionated Clouds. The repository aims to provide a detailed overview of tools and projects for building, deploying, and maintaining enterprise data solutions, AI models, and applications.

clearml-fractional-gpu

ClearML Fractional GPU is a tool designed to optimize GPU resource utilization by allowing multiple containers to run on the same GPU with driver-level memory limitation and compute time-slicing. It supports CUDA 11.x & CUDA 12.x, preventing greedy processes from grabbing the entire GPU memory. The tool offers options like Dynamic GPU Slicing, Container-based Memory Limits, and Kubernetes-based Static MIG Slicing to enhance hardware utilization and workload performance for AI development.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

DataFrame

DataFrame is a C++ analytical library designed for data analysis, similar to libraries in Python and R. It allows you to slice, join, merge, group-by, and perform various statistical, summarization, financial, and ML algorithms on your data. DataFrame also includes a large collection of analytical algorithms in the form of visitors, ranging from basic stats to more involved analysis. You can easily add your own algorithms as well. DataFrame employs extensive multithreading in almost all its APIs, making it suitable for analyzing large datasets. Key principles followed in the library include supporting any type without needing new code, avoiding pointer chasing, having all column data in contiguous memory space, minimizing space usage, avoiding data copying, using multi-threading judiciously, and not protecting the user against garbage in, garbage out.

last_layer

last_layer is a security library designed to protect LLM applications from prompt injection attacks, jailbreaks, and exploits. It acts as a robust filtering layer to scrutinize prompts before they are processed by LLMs, ensuring that only safe and appropriate content is allowed through. The tool offers ultra-fast scanning with low latency, privacy-focused operation without tracking or network calls, compatibility with serverless platforms, advanced threat detection mechanisms, and regular updates to adapt to evolving security challenges. It significantly reduces the risk of prompt-based attacks and exploits but cannot guarantee complete protection against all possible threats.

awesome-MLSecOps

Awesome MLSecOps is a curated list of open-source tools, resources, and tutorials for MLSecOps (Machine Learning Security Operations). It includes a wide range of security tools and libraries for protecting machine learning models against adversarial attacks, as well as resources for AI security, data anonymization, model security, and more. The repository aims to provide a comprehensive collection of tools and information to help users secure their machine learning systems and infrastructure.

Awesome-LLM-Watermark

This repository contains a collection of research papers related to watermarking techniques for text and images, specifically focusing on large language models (LLMs). The papers cover various aspects of watermarking LLM-generated content, including robustness, statistical understanding, topic-based watermarks, quality-detection trade-offs, dual watermarks, watermark collision, and more. Researchers have explored different methods and frameworks for watermarking LLMs to protect intellectual property, detect machine-generated text, improve generation quality, and evaluate watermarking techniques. The repository serves as a valuable resource for those interested in the field of watermarking for LLMs.

llm-guard

LLM Guard is a comprehensive tool designed to fortify the security of Large Language Models (LLMs). It offers sanitization, detection of harmful language, prevention of data leakage, and resistance against prompt injection attacks, ensuring that your interactions with LLMs remain safe and secure.

ai-exploits

AI Exploits is a repository that showcases practical attacks against AI/Machine Learning infrastructure, aiming to raise awareness about vulnerabilities in the AI/ML ecosystem. It contains exploits and scanning templates for responsibly disclosed vulnerabilities affecting machine learning tools, including Metasploit modules, Nuclei templates, and CSRF templates. Users can use the provided Docker image to easily run the modules and templates. The repository also provides guidelines for using Metasploit modules, Nuclei templates, and CSRF templates to exploit vulnerabilities in machine learning tools.

fast-llm-security-guardrails

ZenGuard AI enables AI developers to integrate production-level, low-code LLM (Large Language Model) guardrails into their generative AI applications effortlessly. With ZenGuard AI, ensure your application operates within trusted boundaries, is protected from prompt injections, and maintains user privacy without compromising on performance.

awesome-llm-unlearning

This repository tracks the latest research on machine unlearning in large language models (LLMs). It offers a comprehensive list of papers, datasets, and resources relevant to the topic.

20 - OpenAI GPTs

fox8 botnet paper

A helpful guide for understanding the paper "Anatomy of an AI-powered malicious social botnet"

T71 Russian Cyber Samovar

Analyzes and provides updates on cyber-related Russian APTs, cognitive warfare, disinformation, and other info ops.

CyberNews GPT

CyberNews GPT is an assistant that provides the latest security news about cyber threats, hacks and breaches, malware, zero-day vulnerabilities, phishing, scams, and so on.

Personal Cryptoasset Security Wizard

An easy-to-understand wizard that guides you through questions about how to protect, back up, and inherit essential digital information and assets such as crypto seed phrases, private keys, digital art, wallets, IDs, and health and insurance information for you and your family.

Cute Little Time Travellers, a text adventure game

Protect your cute little timeline. Let me entertain you with this interactive repair-the-timeline game, lovingly illustrated in the style of ultra-cute little 3D kawaii dioramas.

Litigation Advisor

Advises on litigation strategies to protect the organization's legal rights.

Free Antivirus Software 2024

Free Antivirus Software: reviews and best free offers for antivirus software to protect you.

GPT Auth™

This is a demonstration of GPT Auth™, an authentication system designed to protect your customized GPT.

Prompt Injection Detector

A GPT that classifies prompts as valid inputs or injection attempts and returns JSON output.
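A caller consuming a JSON verdict like this would typically parse it defensively. The listing does not document the output schema, so the `classification` field and the `valid` / `injection` labels below are assumptions, and `parse_verdict` is a hypothetical helper. The sketch fails closed: anything it cannot parse is treated as an injection attempt.

```python
import json

# Assumed label set; the real GPT's schema is not documented in this listing.
ALLOWED = {"valid", "injection"}


def parse_verdict(raw):
    """Return 'valid' or 'injection'; unparseable output counts as 'injection'."""
    try:
        data = json.loads(raw)
    except (json.JSONDecodeError, TypeError):
        return "injection"
    if not isinstance(data, dict):
        return "injection"
    label = data.get("classification")  # assumed field name
    if isinstance(label, str) and label.lower() in ALLOWED:
        return label.lower()
    return "injection"
```

Failing closed means a malformed or manipulated classifier reply cannot be mistaken for an approval.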

👑 Data Privacy for Insurance Companies 👑

Insurance providers collect and process personal health, financial, and property information, making it crucial to implement comprehensive data protection strategies.

Project Risk Assessment Advisor

Assesses project risks to mitigate potential organizational impacts.

PrivacyGPT

Guides and advises on digital privacy, ranging from the well known to the underground.

Big Idea Assistant

Expert advisor for protecting, sharing, and monetizing Intellectual Digital Assets (IDEAs) using Big Idea Platform.