Best AI tools for "measure metrics"

20 - AI tool Sites

Tara AI

Tara AI is an AI platform designed for software engineering teams to measure, optimize, and act on metrics to drive impactful outcomes. It helps teams deliver using engineering analytics, run cycles on autopilot, eliminate risks and blockers, align on project scope, prioritize the team's focus, and track completed tasks. Tara AI aggregates and reconciles performance data at the team and project level, providing real-time insights and alerts on delivery. It optimizes teams' output, improves engineering efficiency, and speeds up delivery through improved visibility. The platform enables prioritization of initiatives that deliver customer value and helps teams understand engineering performance to enhance productivity.

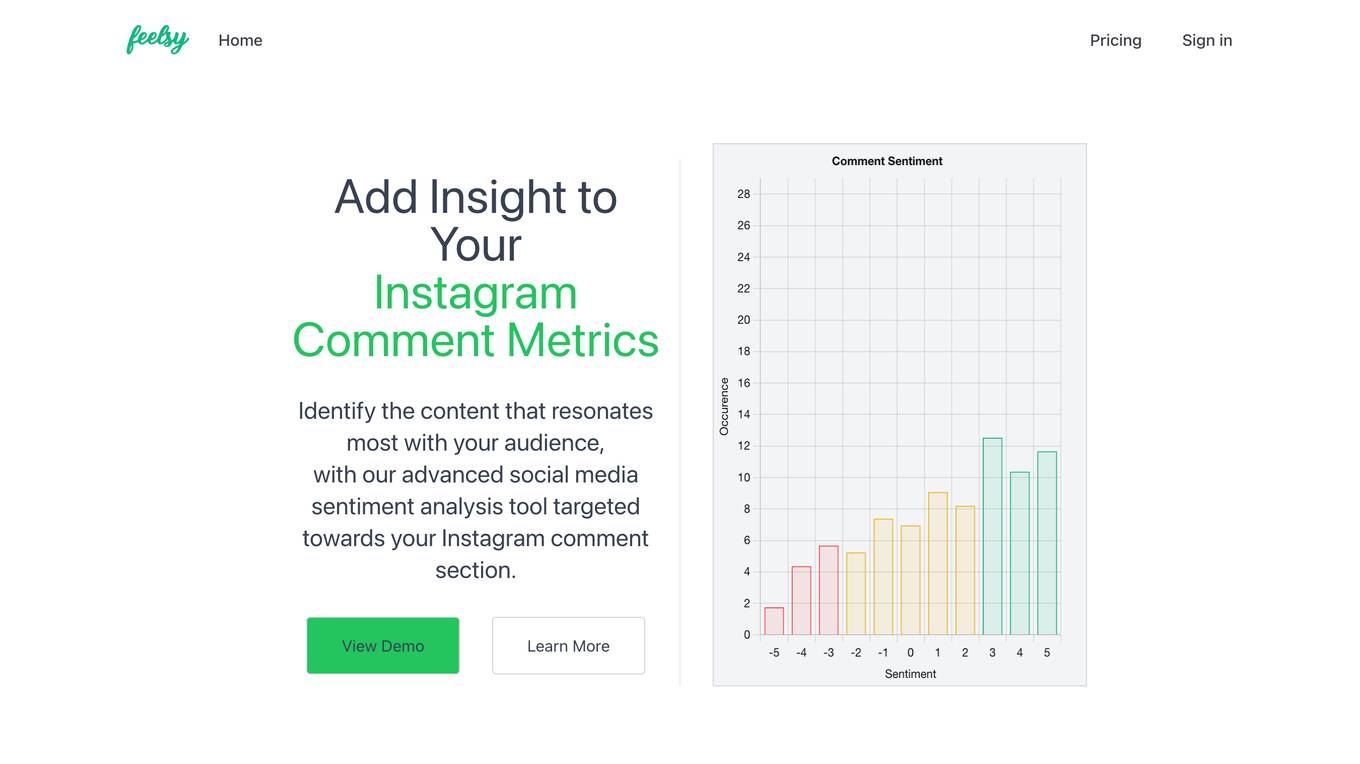

Feelsy

Feelsy is a social media sentiment analysis tool that helps businesses understand how their audience feels about their content. With Feelsy, businesses can track the sentiment of their Instagram comments in real-time, identify the content that resonates most with their audience, and measure the effectiveness of their social media campaigns.

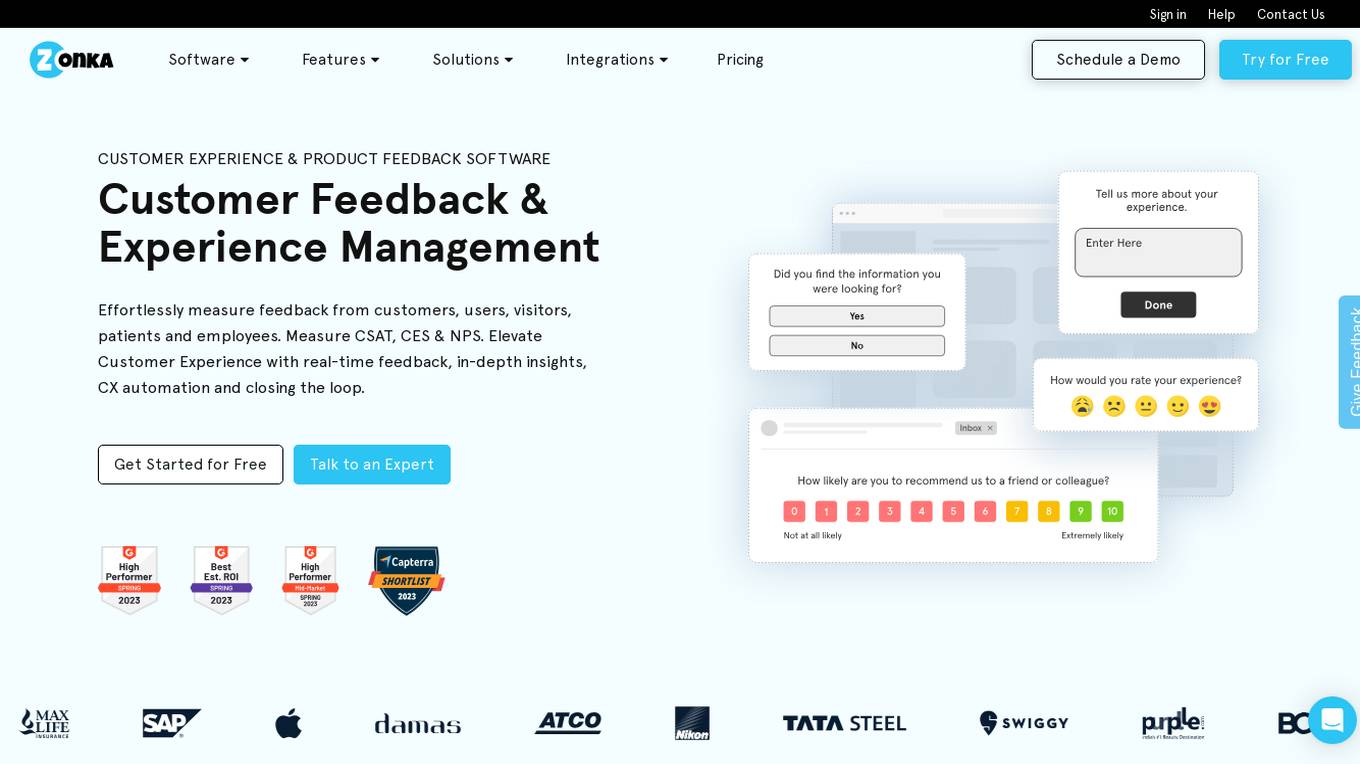

Zonka Feedback

Zonka Feedback is a powerful Customer Feedback and Survey Platform that offers User Segmentation for precise targeting, AI capabilities for smarter surveys, and a wide range of features to measure and improve Customer Experience. It provides solutions for various industries and use cases, integrates with popular tools, and offers in-depth reporting and analytics. Zonka Feedback is known for its modern-looking surveys, ease of use, and extensive integrations, making it a versatile tool for collecting feedback from customers, users, visitors, patients, and employees.

Simpleem

Simpleem is an Artificial Emotional Intelligence (AEI) tool that helps users uncover intentions, predict success, and leverage behavior for successful interactions. By measuring all interactions and correlating them with concrete outcomes, Simpleem provides insights into verbal, para-verbal, and non-verbal cues to enhance customer relationships, track customer rapport, and assess team performance. The tool aims to identify win/lose patterns in behavior, guide users on boosting performance, and prevent burnout by promptly identifying red flags. Simpleem uses proprietary AI models to analyze real-world data and translate behavioral insights into concrete business metrics, achieving a high accuracy rate of 94% in success prediction.

Impulze.ai

Impulze.ai is an Influencer Analytics Platform designed to help users discover and manage influencers from a vast global database covering Instagram, TikTok, and YouTube. The platform offers AI algorithms that effectively identify the right influencers, allowing users to effortlessly filter influencers based on various criteria such as engagement rate, follower count, location, age, and gender. Additionally, Impulze.ai provides audience analytics, fraud detection, and data-driven matching to help users find the perfect match for their brand. With features like real-time performance metrics, audience filters, and campaign tracking, Impulze.ai streamlines influencer marketing strategies for agencies and marketers, saving time and enhancing brand awareness.

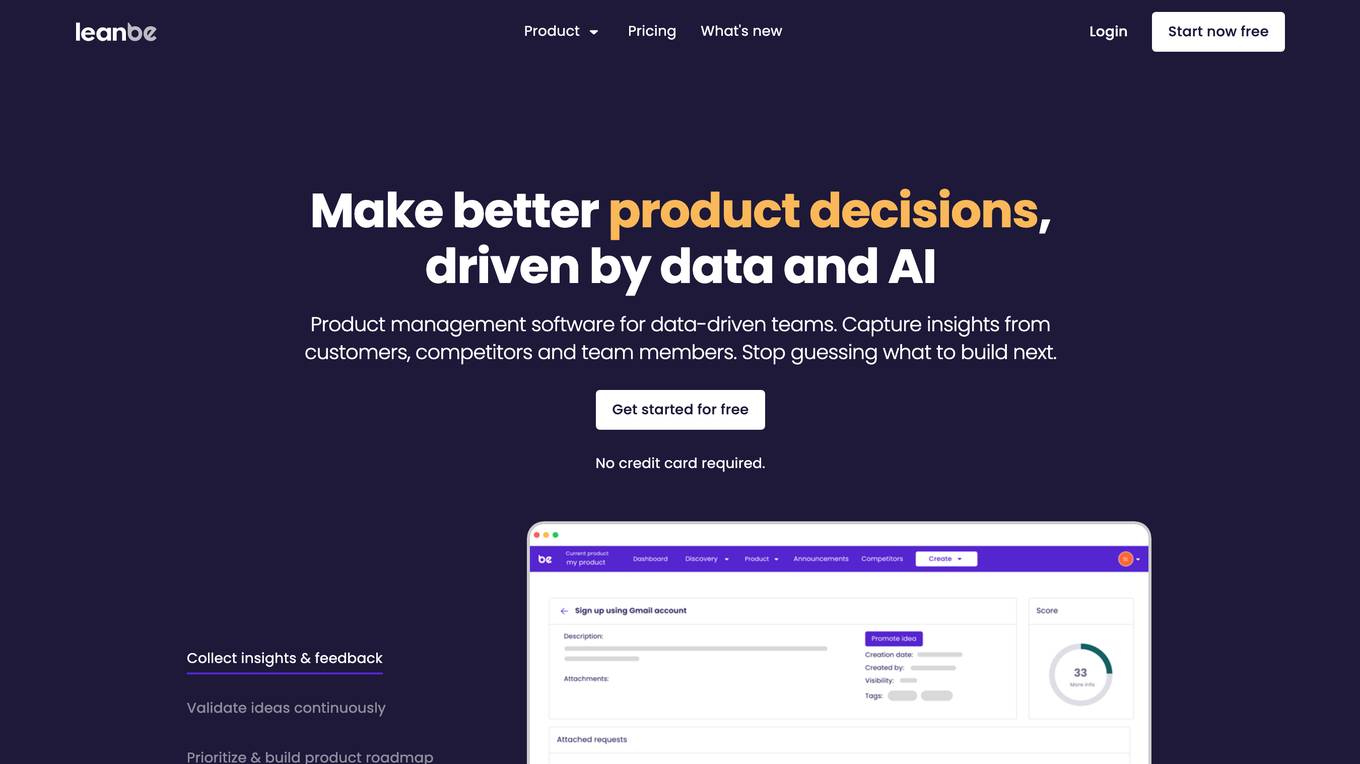

Leanbe

Leanbe is a product management platform designed for data-driven teams. It helps product managers capture insights from customers, competitors, and team members to make better product decisions. Leanbe uses AI to continuously update idea scores based on customer behavior, insights, and feedback from the team. This helps product managers prioritize the right ideas and build products that meet the needs of their customers.

Crayon

Crayon is a competitive intelligence software that helps businesses track competitors, win more deals, and stay ahead in the market. Powered by AI, Crayon enables users to analyze, enable, compete, and measure their competitive landscape efficiently. The platform offers features such as competitor monitoring, AI news summarization, importance scoring, content creation, sales enablement, performance metrics, and more. With Crayon, users can receive high-priority insights, distill articles about competitors, create battlecards, find intel to win deals, and track performance metrics. The application aims to make competitive intelligence seamless and impactful for sales teams.

SportBoost AI

SportBoost AI is an advanced Artificial Intelligence-powered application designed to help athletes and coaches measure, track, and improve sports performance. The platform offers innovative solutions for a variety of sports, democratizing access to advanced data analytics. Through cutting-edge technology and continuous research and development efforts, SportBoost AI aims to elevate athletic excellence at every level, from amateur to professional.

Impakt

Impakt is an AI Coach & Social Fitness Platform that provides personalized workout plans, real-time feedback, and community support to help users measure, understand, and improve their fitness goals. The platform offers infinite personalized workouts tailored by top athletes and fitness influencers. Users can track their progress, connect with like-minded individuals, and access a variety of fitness resources to stay motivated and achieve results. With Impakt, users can experience a unique blend of AI technology and social interaction to enhance their fitness journey.

Gestualy

Gestualy is an AI tool designed to measure and improve customer satisfaction and mood quickly and easily through gestures. It eliminates the need for cumbersome satisfaction surveys by allowing interactions with customers or guests through gestures. The tool uses artificial intelligence to make smart decisions in businesses. Gestualy generates valuable statistical reports for businesses, including satisfaction levels, gender, mood, and age, all while ensuring data protection and privacy compliance. It offers touchless interaction, immediate feedback, anonymized reports, and a range of services such as gesture recognition, facial analysis, gamification, and alert systems.

Walks of Life AI

Walks of Life AI is a desktop-based AI tool designed to measure the pulse of your ideas. It allows users to input a URL for analysis and provides advanced options for customization. The tool is created with a focus on privacy and offers a seamless user experience. Walks of Life AI is developed in San Francisco with a mission to assist users in gaining insights and making informed decisions.

Brand24

Brand24 is a powerful AI-powered social listening tool that helps businesses protect their brand reputation, measure their brand awareness, analyze their competitors, and discover customer insights. With Brand24, you can track mentions of your brand across social media, news, blogs, videos, forums, podcasts, reviews, and more. You can also use Brand24 to track hashtags, measure the reach of your marketing campaigns, and get access to valuable customer insights.

Codeway

Codeway is a leading mobile AI app developer that actively supports earthquake relief efforts in Turkey. With a focus on creating AI-powered apps, Codeway leverages cutting-edge AI technologies to deliver unparalleled user experiences. The company invests in R&D operations to ensure excellence in technology implementation, and is committed to understanding user needs for continuous app evolution. Codeway's products include mobile apps like Cleanup, Scanner+, Ask AI, Facedance, Wonder, Rumble Rivals, and PixelUp. The company excels in marketing, product management, and culture, attracting top talent and fostering a data-driven roadmap to success.

Metabob

Metabob is an AI-powered code review tool that helps developers detect, explain, and fix coding problems. It uses proprietary graph neural networks to detect problems and LLMs to explain and resolve them. Metabob's AI is trained on millions of bug fixes performed by experienced developers, enabling it to detect complex problems that span codebases and automatically generate fixes for them. It integrates with popular code hosting platforms such as GitHub, Bitbucket, and GitLab, as well as VS Code, and supports various programming languages including Python, JavaScript, TypeScript, Java, C++, and C.

Adjust

Adjust is an AI-driven platform that helps mobile app developers accelerate their app's growth through a comprehensive suite of measurement, analytics, automation, and fraud prevention tools. The platform offers unlimited measurement capabilities across various platforms, powerful analytics and reporting features, AI-driven decision-making recommendations, streamlined operations through automation, and data protection against mobile ad fraud. Adjust also provides solutions for iOS and SKAdNetwork success, CTV and OTT performance enhancement, ROI measurement, fraud prevention, and incrementality analysis. With a focus on privacy and security, Adjust empowers app developers to optimize their marketing strategies and drive tangible growth.

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge Dataset is a project initiated by Facebook AI to accelerate the development of new ways to detect deepfake videos. The dataset consists of over 100,000 videos and was created in collaboration with industry leaders and academic experts. It includes two versions: a preview dataset with 5k videos and a full dataset with 124k videos, each featuring facial modification algorithms. The dataset was used in a Kaggle competition to create better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but faced challenges when tested against the black box dataset, highlighting the importance of generalization in deepfake detection. The project aims to encourage the research community to continue advancing in detecting harmful manipulated media.

Binah.ai

Binah.ai is an AI-powered Health Data Platform that offers a software solution for video-based vital signs monitoring. The platform enables users to measure various health and wellness indicators using a smartphone, tablet, or laptop. It provides support for continuous monitoring through a raw PPG signal from external sensors and offers a range of features such as blood pressure monitoring, heart rate variability, oxygen saturation, and more. Binah.ai aims to make health data more accessible for better care at lower costs by leveraging AI and deep learning algorithms.

The Millshop Online

The Millshop Online is a website specializing in curtain fabric and upholstery fabric. They offer a wide range of unique and exclusive designs from various countries. Customers can find a variety of fabric types, styles, colors, and brands for both upholstery and curtain needs. The website also provides made-to-measure services, sale items, and British-produced products. With a focus on quality and customer satisfaction, The Millshop Online aims to cater to the fabric needs of individuals and businesses alike.

Bazaarvoice affable.ai

The Bazaarvoice affable.ai platform is an AI-driven influencer marketing solution that helps brands find, manage, and measure creator collaborations. It offers a range of features to help brands connect with the right creators, manage campaigns, and track results. The platform includes a database of over 100,000 creators, advanced search filters, campaign management tools, and reporting dashboards.

20 - Open Source AI Tools

ByteMLPerf

ByteMLPerf is an AI accelerator benchmark that evaluates AI accelerators from a practical production perspective, including the ease of use and versatility of software and hardware. ByteMLPerf has the following characteristics:

- Models and runtime environments are more closely aligned with practical business use cases.
- For ASIC hardware evaluation, besides evaluating performance and accuracy, it also measures metrics such as compiler usability and coverage.
- Performance and accuracy results obtained from testing on the open Model Zoo serve as reference metrics for evaluating ASIC hardware integration.

enterprise-azureai

This repository treats Azure OpenAI Service as a central capability exposed through Azure API Management. It provides guidance and tools for organizations to implement Azure OpenAI in a production environment with an emphasis on cost control, secure access, and usage monitoring. It includes infrastructure-as-code templates, CI/CD pipelines, secure access management, usage monitoring, load balancing, streaming requests, and end-to-end samples such as ChatApp and Azure Dashboards.

awesome-hallucination-detection

This repository provides a curated list of papers, datasets, and resources related to the detection and mitigation of hallucinations in large language models (LLMs). Hallucinations refer to the generation of factually incorrect or nonsensical text by LLMs, which can be a significant challenge for their use in real-world applications. The resources in this repository aim to help researchers and practitioners better understand and address this issue.

llm-course

The LLM course is divided into three parts:

1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks.
2. 🧑‍🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques.
3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them.

For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way:

* 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B.
* 🤖 **ChatGPT Assistant**: Requires a premium account.

📝 **Notebooks**: a list of notebooks and articles related to large language models.

**Tools**

| Notebook | Description |
|----------|-------------|
| 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod. |
| 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |
| 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |
| ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |
| 🌳 Model Family Tree | Visualize the family tree of merged models. |
| 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |

tonic_validate

Tonic Validate is a framework for the evaluation of LLM outputs, such as Retrieval Augmented Generation (RAG) pipelines. Validate makes it easy to evaluate, track, and monitor your LLM and RAG applications. Validate allows you to evaluate your LLM outputs through the use of our provided metrics which measure everything from answer correctness to LLM hallucination. Additionally, Validate has an optional UI to visualize your evaluation results for easy tracking and monitoring.

alignment-handbook

The Alignment Handbook provides robust training recipes for continuing pretraining and aligning language models with human and AI preferences. It includes techniques such as continued pretraining, supervised fine-tuning, reward modeling, rejection sampling, and direct preference optimization (DPO). The handbook aims to fill the gap in public resources on training these models, collecting data, and measuring metrics for optimal downstream performance.

middleware

Middleware is an open-source engineering management tool that helps engineering leaders measure and analyze team effectiveness using DORA metrics. It integrates with CI/CD tools, automates DORA metric collection and analysis, visualizes key performance indicators, provides customizable reports and dashboards, and integrates with project management platforms. Users can set up Middleware using Docker or manually, generate encryption keys, set up backend and web servers, and access the application to view DORA metrics. The tool calculates DORA metrics using GitHub data, including Deployment Frequency, Lead Time for Changes, Mean Time to Restore, and Change Failure Rate. Middleware aims to provide DORA metrics to users based on their Git data, simplifying the process of tracking software delivery performance and operational efficiency.
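To make the four DORA metrics named above concrete, here is a minimal sketch of how they could be computed from deployment records. The `Deployment` record and the `dora_metrics` function are hypothetical illustrations, not Middleware's data model or API, and the sketch assumes that time to restore is measured from the failed deployment to the moment service is restored.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    """Hypothetical deployment record (not Middleware's actual schema)."""
    committed_at: datetime                  # first commit of the change
    deployed_at: datetime                   # when the change reached production
    failed: bool = False                    # whether the deployment caused a failure
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deployments: List[Deployment], period_days: int) -> dict:
    """Compute the four DORA metrics over a reporting period."""
    n = len(deployments)
    failures = [d for d in deployments if d.failed]
    # Assumption: restore time runs from the failed deployment to restoration.
    restore_times = [d.restored_at - d.deployed_at
                     for d in failures if d.restored_at is not None]
    lead_times = [d.deployed_at - d.committed_at for d in deployments]
    return {
        "deployment_frequency_per_day": n / period_days,
        "lead_time_for_changes": sum(lead_times, timedelta()) / n if n else None,
        "change_failure_rate": len(failures) / n if n else None,
        "mean_time_to_restore": (sum(restore_times, timedelta()) / len(restore_times)
                                 if restore_times else None),
    }
```

Real tools typically derive these timestamps from pull requests, CI/CD runs, and incident data, so the exact definitions can differ from this sketch.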

llmperf

LLMPerf is a tool designed for evaluating the performance of Language Model APIs. It provides functionalities for conducting load tests to measure inter-token latency and generation throughput, as well as correctness tests to verify the responses. The tool supports various LLM APIs including OpenAI, Anthropic, TogetherAI, Hugging Face, LiteLLM, Vertex AI, and SageMaker. Users can set different parameters for the tests and analyze the results to assess the performance of the LLM APIs. LLMPerf aims to standardize prompts across different APIs and provide consistent evaluation metrics for comparison.
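The two load-test quantities mentioned above can be illustrated with a short sketch. It assumes you have recorded the arrival time of each streamed token in seconds; the function names are hypothetical and LLMPerf's own definitions and implementation may differ.

```python
from typing import List


def inter_token_latency(token_times: List[float]) -> float:
    """Mean gap in seconds between consecutive token arrival times."""
    if len(token_times) < 2:
        raise ValueError("need at least two token timestamps")
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    return sum(gaps) / len(gaps)


def generation_throughput(request_start: float, token_times: List[float]) -> float:
    """Tokens per second, measured from request start to the last token."""
    if not token_times:
        raise ValueError("no tokens received")
    elapsed = token_times[-1] - request_start
    if elapsed <= 0:
        raise ValueError("last token must arrive after the request start")
    return len(token_times) / elapsed
```

Measuring throughput from the request start folds time-to-first-token into the result; measuring from the first token instead would isolate pure generation speed.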

langcheck

LangCheck is a Python library that provides a suite of metrics and tools for evaluating the quality of text generated by large language models (LLMs). It includes metrics for evaluating text fluency, sentiment, toxicity, factual consistency, and more. LangCheck also provides tools for visualizing metrics, augmenting data, and writing unit tests for LLM applications. With LangCheck, you can quickly and easily assess the quality of LLM-generated text and identify areas for improvement.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

langwatch

LangWatch is a monitoring and analytics platform designed to track, visualize, and analyze interactions with Large Language Models (LLMs). It offers real-time telemetry to optimize LLM cost and latency, a user-friendly interface for deep insights into LLM behavior, user analytics for engagement metrics, detailed debugging capabilities, and guardrails to monitor LLM outputs for issues like PII leaks and toxic language. The platform supports OpenAI and LangChain integrations, simplifying the process of tracing LLM calls and generating API keys for usage. LangWatch also provides documentation for easy integration and self-hosting options for interested users.

Quantus

Quantus is a toolkit designed for the evaluation of neural network explanations. It offers more than 30 metrics in 6 categories for eXplainable Artificial Intelligence (XAI) evaluation. The toolkit supports different data types (image, time-series, tabular, NLP) and models (PyTorch, TensorFlow). It provides built-in support for explanation methods like captum, tf-explain, and zennit. Quantus is under active development and aims to provide a comprehensive set of quantitative evaluation metrics for XAI methods.

graphrag

The GraphRAG project is a data pipeline and transformation suite designed to extract meaningful, structured data from unstructured text using LLMs. It enhances LLMs' ability to reason about private data. The repository provides guidance on using knowledge graph memory structures to enhance LLM outputs, with a warning about the potential costs of GraphRAG indexing. It offers contribution guidelines, development resources, and encourages prompt tuning for optimal results. The Responsible AI FAQ addresses GraphRAG's capabilities, intended uses, evaluation metrics, limitations, and operational factors for effective and responsible use.

rag-experiment-accelerator

The RAG Experiment Accelerator is a versatile tool that helps you conduct experiments and evaluations using Azure AI Search and RAG pattern. It offers a rich set of features, including experiment setup, integration with Azure AI Search, Azure Machine Learning, MLFlow, and Azure OpenAI, multiple document chunking strategies, query generation, multiple search types, sub-querying, re-ranking, metrics and evaluation, report generation, and multi-lingual support. The tool is designed to make it easier and faster to run experiments and evaluations of search queries and quality of response from OpenAI, and is useful for researchers, data scientists, and developers who want to test the performance of different search and OpenAI related hyperparameters, compare the effectiveness of various search strategies, fine-tune and optimize parameters, find the best combination of hyperparameters, and generate detailed reports and visualizations from experiment results.

LLM-PowerHouse-A-Curated-Guide-for-Large-Language-Models-with-Custom-Training-and-Inferencing

LLM-PowerHouse is a comprehensive and curated guide designed to empower developers, researchers, and enthusiasts to harness the true capabilities of Large Language Models (LLMs) and build intelligent applications that push the boundaries of natural language understanding. This GitHub repository provides in-depth articles, codebase mastery, LLM PlayLab, and resources for cost analysis and network visualization. It covers various aspects of LLMs, including NLP, models, training, evaluation metrics, open LLMs, and more. The repository also includes a collection of code examples and tutorials to help users build and deploy LLM-based applications.

ChainForge

ChainForge is a visual programming environment for battle-testing prompts to LLMs. It is geared towards early-stage, quick-and-dirty exploration of prompts, chat responses, and response quality that goes beyond ad-hoc chatting with individual LLMs. With ChainForge, you can:

* Query multiple LLMs at once to test prompt ideas and variations quickly and effectively.
* Compare response quality across prompt permutations, across models, and across model settings to choose the best prompt and model for your use case.
* Set up evaluation metrics (scoring functions) and immediately visualize results across prompts, prompt parameters, models, and model settings.
* Hold multiple conversations at once across template parameters and chat models. Template not just prompts but also follow-up chat messages, and inspect and evaluate outputs at each turn of a chat conversation.

ChainForge comes with a number of example evaluation flows to give you a sense of what's possible, including 188 example flows generated from benchmarks in OpenAI evals. This is an open beta of ChainForge. We support model providers OpenAI, HuggingFace, Anthropic, Google PaLM2, Azure OpenAI endpoints, and Dalai-hosted models Alpaca and Llama. You can change the exact model and individual model settings. Visualization nodes support numeric and boolean evaluation metrics. ChainForge is built on ReactFlow and Flask.

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it to the community in the spirit of building in the open. Note that it is still at a very early stage, so don't expect 100% stability. In case of problems or questions, feel free to open an issue!

chronon

Chronon is a platform that simplifies and improves ML workflows by providing a central place to define features, ensuring point-in-time correctness for backfills, simplifying orchestration for batch and streaming pipelines, offering easy endpoints for feature fetching, and guaranteeing and measuring consistency. It offers benefits over other approaches by enabling the use of a broad set of data for training, handling large aggregations and other computationally intensive transformations, and abstracting away the infrastructure complexity of data plumbing.
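Point-in-time correctness means that a feature value attached to a training example only reflects events that had already happened at that example's timestamp. The sketch below illustrates the idea for a running-sum feature. It is not Chronon's API: the function name is hypothetical, and including events that occur exactly at the query time is an assumption.

```python
from bisect import bisect_right
from typing import List, Tuple


def point_in_time_sum(events: List[Tuple[float, float]],
                      query_times: List[float]) -> List[float]:
    """For each query time, sum the values of events with timestamp <= query time.

    `events` is a list of (timestamp, value) pairs in any order.
    """
    ordered = sorted(events)
    timestamps = [ts for ts, _ in ordered]
    # prefix[i] holds the sum of the first i event values in time order.
    prefix = [0.0]
    for _, value in ordered:
        prefix.append(prefix[-1] + value)
    # bisect_right counts how many events happened at or before each query time.
    return [prefix[bisect_right(timestamps, q)] for q in query_times]
```

A backfill that ignored timestamps and used the total over all events would leak future information into earlier training rows, which is the failure this kind of join avoids.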

20 - OpenAI Gpts

Frida Futurelab

Bilingual CX strategy expert using Futurelab Experience resources. These resources include the futurelab.fi website, white papers, blog posts, and books by Kari Korkiakoski.

How to Measure Anything

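Breaks down all kinds of quantification problems and produces rough estimates. Note that these estimates rely mainly on inference rather than on accurate data, so they are for reference only. Ideally, an estimate will fall within one order of magnitude of the true value. Even if the numbers are not accurate, the breakdown approach may still give you useful ideas.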

PsyItemGenerator

Generates items for psychometric instruments to measure psychological constructs.

CHAT Social Progress

Explore social and environmental data for 169 countries to measure social progress and go beyond GDP. Using data from the Social Progress Imperative and powered by OpenAI.

TuringGPT

The Turing Test, first named the imitation game by Alan Turing in 1950, is a measure of a machine's capacity to demonstrate intelligence that's either equal to or indistinguishable from human intelligence.

Aurometer

A device which detects the power level of any entity by measuring fluctuations in "Soul Power."

BS Meter Realtime

Detects and measures information credibility. Provides a "BS Score" (0-100) based on content analysis for misinformation signs, including factual inaccuracies and sensationalist language. Real-time feedback.

Raven's Progressive Matrices Test

Provides Raven's Progressive Matrices test with explanations and calculates your IQ score.

IQ Test

IQ Test is designed to simulate an IQ testing environment. It provides a formal and objective experience, delivering questions and processing answers in a straightforward manner.

FREE How to Know What Size Nursing Bra to Get

Guidance on nursing bra sizing with insights into breast size changes during pregnancy, measurement instructions, and advice on choosing the right bra style and size. It interprets bust measurements and answers FAQs about nursing bras.

Moccha particle size analyzer

Expert in analyzing coffee grind particle size distribution using image processing and KDE.
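As a rough illustration of the KDE step, the sketch below estimates a smooth size distribution from a list of particle diameters using a Gaussian kernel. The function name and the fixed bandwidth are assumptions made for illustration; the GPT's actual image-processing pipeline and bandwidth choice are not described in the listing.

```python
import math
from typing import Callable, List


def gaussian_kde(samples: List[float], bandwidth: float) -> Callable[[float], float]:
    """Return a density function estimated from samples with a Gaussian kernel."""
    if not samples:
        raise ValueError("samples must not be empty")
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive")
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x: float) -> float:
        # Each sample contributes one Gaussian bump centred on its value.
        return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)

    return density
```

For example, `gaussian_kde(diameters_um, bandwidth=50.0)` would return a function you can evaluate on a grid of sizes to plot the distribution, where `diameters_um` is a hypothetical list of measured particle diameters in micrometres.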