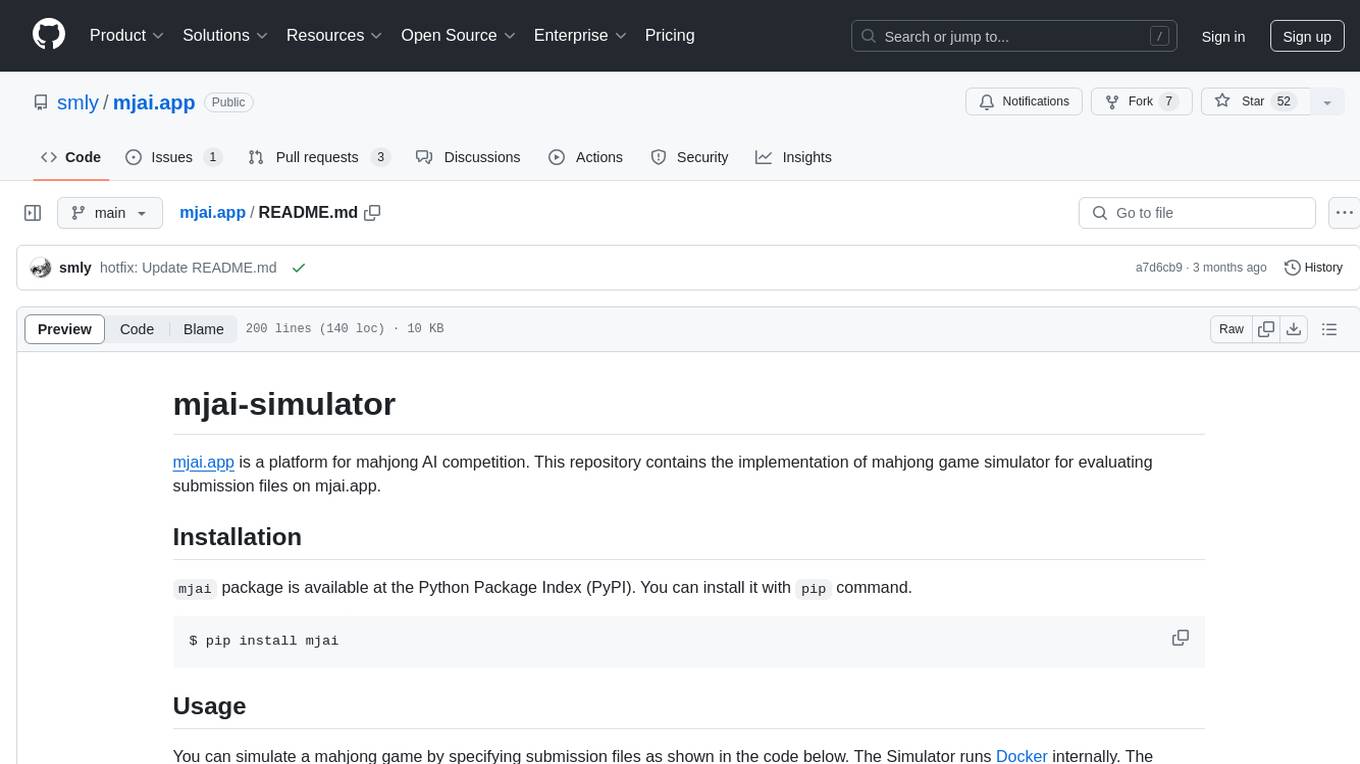

llmperf

LLMPerf is a library for validating and benchmarking LLMs

Stars: 439

LLMPerf is a tool designed for evaluating the performance of Language Model APIs. It provides functionalities for conducting load tests to measure inter-token latency and generation throughput, as well as correctness tests to verify the responses. The tool supports various LLM APIs including OpenAI, Anthropic, TogetherAI, Hugging Face, LiteLLM, Vertex AI, and SageMaker. Users can set different parameters for the tests and analyze the results to assess the performance of the LLM APIs. LLMPerf aims to standardize prompts across different APIs and provide consistent evaluation metrics for comparison.

README:

A tool for evaluating the performance of LLM APIs.

git clone https://github.com/ray-project/llmperf.git

cd llmperf

pip install -e .

We implement 2 tests for evaluating LLMs: a load test to check for performance and a correctness test to check for correctness.

The load test spawns a number of concurrent requests to the LLM API and measures the inter-token latency and generation throughput per request and across concurrent requests. The prompt that is sent with each request is of the format:

Randomly stream lines from the following text. Don't generate eos tokens:

LINE 1,

LINE 2,

LINE 3,

...

Where the lines are randomly sampled from a collection of lines from Shakespeare sonnets. Tokens are counted using the LlamaTokenizer regardless of which LLM API is being tested. This is to ensure that the prompts are consistent across different LLM APIs.
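The prompt construction described above can be sketched roughly as follows. This is a minimal illustration, not the tool's actual code: `count_tokens` is a hypothetical whitespace-based stand-in for the Llama tokenizer, the four-line corpus stands in for the full sonnet collection, and drawing the target length from a normal distribution is an assumption based on the `--mean-input-tokens` and `--stddev-input-tokens` flags.

```python
import random
from typing import Tuple

# Stand-in corpus; the real tool samples from a larger collection of sonnet lines.
SONNET_LINES = [
    "Shall I compare thee to a summer's day?",
    "Thou art more lovely and more temperate:",
    "Rough winds do shake the darling buds of May,",
    "And summer's lease hath all too short a date:",
]

PREFIX = "Randomly stream lines from the following text. Don't generate eos tokens:\n\n"


def count_tokens(text: str) -> int:
    """Hypothetical stand-in for the Llama tokenizer: counts whitespace-separated words."""
    return len(text.split())


def sample_target_length(mean: float, stddev: float, rng: random.Random) -> int:
    """Draw a positive target token count from a normal distribution (assumed)."""
    while True:
        n = int(rng.gauss(mean, stddev))
        if n > 0:
            return n


def build_prompt(target_tokens: int, rng: random.Random) -> Tuple[str, int]:
    """Append randomly chosen lines until the prompt reaches the target token count."""
    prompt = PREFIX
    while count_tokens(prompt) < target_tokens:
        prompt += rng.choice(SONNET_LINES) + "\n"
    return prompt, count_tokens(prompt)


rng = random.Random(0)
target = sample_target_length(mean=550, stddev=150, rng=rng)
prompt, n_tokens = build_prompt(target, rng)
```

Because the same tokenizer counts tokens for every provider, two APIs given the same target receive prompts of comparable size, which is the point of fixing the tokenizer.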

To run the most basic load test you can run the token_benchmark_ray script.

- The backend of each endpoint provider can vary widely, so these results do not reflect how the software runs on any particular hardware.

- The results may vary with time of day.

- The results may vary with the load.

- The results may not correlate with users’ workloads.

export OPENAI_API_KEY=secret_abcdefg

export OPENAI_API_BASE="https://api.endpoints.anyscale.com/v1"

python token_benchmark_ray.py \

--model "meta-llama/Llama-2-7b-chat-hf" \

--mean-input-tokens 550 \

--stddev-input-tokens 150 \

--mean-output-tokens 150 \

--stddev-output-tokens 10 \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs" \

--llm-api openai \

--additional-sampling-params '{}'

export ANTHROPIC_API_KEY=secret_abcdefg

python token_benchmark_ray.py \

--model "claude-2" \

--mean-input-tokens 550 \

--stddev-input-tokens 150 \

--mean-output-tokens 150 \

--stddev-output-tokens 10 \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs" \

--llm-api anthropic \

--additional-sampling-params '{}'

export TOGETHERAI_API_KEY="YOUR_TOGETHER_KEY"

python token_benchmark_ray.py \

--model "together_ai/togethercomputer/CodeLlama-7b-Instruct" \

--mean-input-tokens 550 \

--stddev-input-tokens 150 \

--mean-output-tokens 150 \

--stddev-output-tokens 10 \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs" \

--llm-api "litellm" \

--additional-sampling-params '{}'

export HUGGINGFACE_API_KEY="YOUR_HUGGINGFACE_API_KEY"

export HUGGINGFACE_API_BASE="YOUR_HUGGINGFACE_API_ENDPOINT"

python token_benchmark_ray.py \

--model "huggingface/meta-llama/Llama-2-7b-chat-hf" \

--mean-input-tokens 550 \

--stddev-input-tokens 150 \

--mean-output-tokens 150 \

--stddev-output-tokens 10 \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs" \

--llm-api "litellm" \

--additional-sampling-params '{}'

LLMPerf can use LiteLLM to send prompts to LLM APIs. To see the environment variables to set for the provider, and the arguments to set for model and additional-sampling-params, see the LiteLLM Provider Documentation.

python token_benchmark_ray.py \

--model "meta-llama/Llama-2-7b-chat-hf" \

--mean-input-tokens 550 \

--stddev-input-tokens 150 \

--mean-output-tokens 150 \

--stddev-output-tokens 10 \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs" \

--llm-api "litellm" \

--additional-sampling-params '{}'
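In every example here the `--additional-sampling-params` value is a JSON object written as a string (`'{}'`). When the parameters are not empty, it can be easier to build that string programmatically than to quote it by hand. The sketch below assumes the script accepts any JSON object there; the `temperature` and `top_p` keys are hypothetical values for illustration, and which keys a provider actually accepts depends on that provider.

```python
import json
import shlex

# Hypothetical sampling parameters; consult the provider's documentation for valid keys.
sampling_params = {"temperature": 0.0, "top_p": 1.0}

# Same flags as the LiteLLM command above, with the JSON string generated by json.dumps.
argv = [
    "python", "token_benchmark_ray.py",
    "--model", "meta-llama/Llama-2-7b-chat-hf",
    "--mean-input-tokens", "550",
    "--stddev-input-tokens", "150",
    "--mean-output-tokens", "150",
    "--stddev-output-tokens", "10",
    "--max-num-completed-requests", "2",
    "--timeout", "600",
    "--num-concurrent-requests", "1",
    "--results-dir", "result_outputs",
    "--llm-api", "litellm",
    "--additional-sampling-params", json.dumps(sampling_params),
]

# shlex.join quotes each argument so the line can be pasted into a shell.
print(shlex.join(argv))
```

The list form can also be passed directly to `subprocess.run(argv)`, which avoids shell quoting entirely.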

Here, --model is used for logging, not for selecting the model. The model is specified in the Vertex AI Endpoint ID.

The GCLOUD_ACCESS_TOKEN needs to be refreshed regularly, as the token generated by gcloud auth print-access-token expires after 15 minutes or so.

Vertex AI doesn't return the total number of tokens generated by its endpoint, so tokens are counted using the Llama tokenizer.
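For longer runs, the token expiry noted above means GCLOUD_ACCESS_TOKEN has to be re-exported periodically. One way to automate that from a driver script is sketched below. `TokenRefresher` and its 600-second default are hypothetical choices made for this example (chosen to stay under the roughly 15-minute lifetime); only the `gcloud auth print-access-token` command comes from the text.

```python
import os
import subprocess
import time
from typing import Callable, Optional


def fetch_gcloud_token() -> str:
    """Run `gcloud auth print-access-token` and return the printed token."""
    result = subprocess.run(
        ["gcloud", "auth", "print-access-token"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.strip()


class TokenRefresher:
    """Hypothetical helper that re-fetches the token once it is older than max_age_s."""

    def __init__(
        self,
        fetch: Callable[[], str] = fetch_gcloud_token,
        max_age_s: float = 600.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._max_age_s = max_age_s
        self._clock = clock
        self._token: Optional[str] = None
        self._fetched_at: Optional[float] = None

    def ensure_fresh(self) -> str:
        """Return a token, refreshing it and the environment variable if it is stale."""
        now = self._clock()
        if self._fetched_at is None or now - self._fetched_at >= self._max_age_s:
            self._token = self._fetch()
            self._fetched_at = now
            os.environ["GCLOUD_ACCESS_TOKEN"] = self._token
        return self._token


# Usage (requires the gcloud CLI to be installed and authenticated):
#   refresher = TokenRefresher()
#   refresher.ensure_fresh()
```

Setting `os.environ` only affects the current process and children started afterwards, so a process that is already running will not see the new value.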

gcloud auth application-default login

gcloud config set project YOUR_PROJECT_ID

export GCLOUD_ACCESS_TOKEN=$(gcloud auth print-access-token)

export GCLOUD_PROJECT_ID=YOUR_PROJECT_ID

export GCLOUD_REGION=YOUR_REGION

export VERTEXAI_ENDPOINT_ID=YOUR_ENDPOINT_ID

python token_benchmark_ray.py \

--model "meta-llama/Llama-2-7b-chat-hf" \

--mean-input-tokens 550 \

--stddev-input-tokens 150 \

--mean-output-tokens 150 \

--stddev-output-tokens 10 \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs" \

--llm-api "vertexai" \

--additional-sampling-params '{}'

SageMaker doesn't return the total number of tokens generated by its endpoint, so tokens are counted using the Llama tokenizer.

export AWS_ACCESS_KEY_ID="YOUR_ACCESS_KEY_ID"

export AWS_SECRET_ACCESS_KEY="YOUR_SECRET_ACCESS_KEY"

export AWS_SESSION_TOKEN="YOUR_SESSION_TOKEN"

export AWS_REGION_NAME="YOUR_ENDPOINTS_REGION_NAME"

python llm_correctness.py \

--model "llama-2-7b" \

--llm-api "sagemaker" \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs"

See python token_benchmark_ray.py --help for more details on the arguments.
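To make the load-test metrics concrete, the sketch below computes an inter-token latency and a generation throughput for a single request from the arrival times of its streamed tokens. The function name, the dictionary keys, and the exact definitions (mean gap between consecutive tokens, tokens divided by end-to-end time) are assumptions made for illustration; the tool's own definitions may differ.

```python
from typing import Dict, List


def request_metrics(start: float, token_times: List[float]) -> Dict[str, float]:
    """Compute simple per-request metrics from token arrival timestamps in seconds."""
    if not token_times:
        raise ValueError("no tokens were received")
    duration = token_times[-1] - start
    if duration <= 0:
        raise ValueError("last token must arrive after the request started")
    # Gaps between consecutive tokens; empty when only one token arrived.
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    return {
        "inter_token_latency_s": sum(gaps) / len(gaps) if gaps else 0.0,
        "e2e_latency_s": duration,
        "output_throughput_tok_per_s": len(token_times) / duration,
    }


# Five tokens arriving 0.1 s apart, the first one 0.5 s after the request was sent.
metrics = request_metrics(start=0.0, token_times=[0.5, 0.6, 0.7, 0.8, 0.9])
print(metrics)
```

Aggregating across concurrent requests would then amount to collecting these dictionaries and taking means or percentiles per key.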

The correctness test spawns a number of concurrent requests to the LLM API with the following format:

Convert the following sequence of words into a number: {random_number_in_word_format}. Output just your final answer.

where random_number_in_word_format could be, for example, "one hundred and twenty three". The test then checks that the response contains that number in digit format, which in this case would be 123.

The test does this for a number of randomly generated numbers and reports the number of responses that contain a mismatch.
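A minimal sketch of this check is shown below. It is not the tool's implementation: `number_to_words` is a small hand-written converter limited to 0 through 999, and `response_matches` compares against whole digit runs, which is slightly stricter than a plain substring test. Both names are hypothetical.

```python
import re

ONES = [
    "zero", "one", "two", "three", "four", "five", "six", "seven", "eight", "nine",
    "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen", "sixteen",
    "seventeen", "eighteen", "nineteen",
]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy", "eighty", "ninety"]


def number_to_words(n: int) -> str:
    """Spell out 0 <= n <= 999 in the style 'one hundred and twenty three'."""
    if not 0 <= n <= 999:
        raise ValueError("this sketch only supports 0..999")
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] if ones == 0 else TENS[tens] + " " + ONES[ones]
    hundreds, rest = divmod(n, 100)
    head = ONES[hundreds] + " hundred"
    return head if rest == 0 else head + " and " + number_to_words(rest)


def build_correctness_prompt(n: int) -> str:
    """Fill the prompt template from the text with the spelled-out number."""
    return (
        "Convert the following sequence of words into a number: "
        + number_to_words(n)
        + ". Output just your final answer."
    )


def response_matches(response: str, expected: int) -> bool:
    """True if the expected number appears as a whole digit run in the response."""
    digit_runs = re.findall(r"\d+", response.replace(",", ""))
    return str(expected) in digit_runs


print(build_correctness_prompt(123))
print(response_matches("The answer is 123.", 123))
```

Counting the responses for which `response_matches` returns False over many random numbers gives the mismatch count the test reports.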

To run the most basic correctness test you can run the llm_correctness.py script.

export OPENAI_API_KEY=secret_abcdefg

export OPENAI_API_BASE=https://console.endpoints.anyscale.com/m/v1

python llm_correctness.py \

--model "meta-llama/Llama-2-7b-chat-hf" \

--max-num-completed-requests 150 \

--timeout 600 \

--num-concurrent-requests 10 \

--results-dir "result_outputs"

export ANTHROPIC_API_KEY=secret_abcdefg

python llm_correctness.py \

--model "claude-2" \

--llm-api "anthropic" \

--max-num-completed-requests 5 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs"

export TOGETHERAI_API_KEY="YOUR_TOGETHER_KEY"

python llm_correctness.py \

--model "together_ai/togethercomputer/CodeLlama-7b-Instruct" \

--llm-api "litellm" \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs"

export HUGGINGFACE_API_KEY="YOUR_HUGGINGFACE_API_KEY"

export HUGGINGFACE_API_BASE="YOUR_HUGGINGFACE_API_ENDPOINT"

python llm_correctness.py \

--model "huggingface/meta-llama/Llama-2-7b-chat-hf" \

--llm-api "litellm" \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs"

LLMPerf can use LiteLLM to send prompts to LLM APIs. To see the environment variables to set for the provider, and the arguments to set for model and additional-sampling-params, see the LiteLLM Provider Documentation.

python llm_correctness.py \

--model "meta-llama/Llama-2-7b-chat-hf" \

--llm-api "litellm" \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs"

See python llm_correctness.py --help for more details on the arguments.

Here, --model is used for logging, not for selecting the model. The model is specified in the Vertex AI Endpoint ID.

The GCLOUD_ACCESS_TOKEN needs to be refreshed regularly, as the token generated by gcloud auth print-access-token expires after 15 minutes or so.

Vertex AI doesn't return the total number of tokens generated by its endpoint, so tokens are counted using the Llama tokenizer.

gcloud auth application-default login

gcloud config set project YOUR_PROJECT_ID

export GCLOUD_ACCESS_TOKEN=$(gcloud auth print-access-token)

export GCLOUD_PROJECT_ID=YOUR_PROJECT_ID

export GCLOUD_REGION=YOUR_REGION

export VERTEXAI_ENDPOINT_ID=YOUR_ENDPOINT_ID

python llm_correctness.py \

--model "meta-llama/Llama-2-7b-chat-hf" \

--llm-api "vertexai" \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs"

SageMaker doesn't return the total number of tokens generated by its endpoint, so tokens are counted using the Llama tokenizer.

export AWS_ACCESS_KEY_ID="YOUR_ACCESS_KEY_ID"

export AWS_SECRET_ACCESS_KEY="YOUR_SECRET_ACCESS_KEY"

export AWS_SESSION_TOKEN="YOUR_SESSION_TOKEN"

export AWS_REGION_NAME="YOUR_ENDPOINTS_REGION_NAME"

python llm_correctness.py \

--model "llama-2-7b" \

--llm-api "sagemaker" \

--max-num-completed-requests 2 \

--timeout 600 \

--num-concurrent-requests 1 \

--results-dir "result_outputs"

The results of the load test and correctness test are saved in the results directory specified by the --results-dir argument. The results are saved in 2 files, one with the summary metrics of the test, and one with metrics from each individual request that is returned.
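After a run, the two result files can be inspected with a few lines of Python. The sketch below assumes the files are written as JSON with a `.json` extension; the text above does not state the format or the file names, so `load_results` simply loads whatever JSON files it finds in the directory.

```python
import json
from pathlib import Path
from typing import Any, Dict


def load_results(results_dir: str) -> Dict[str, Any]:
    """Load every *.json file in results_dir, keyed by file name (JSON format assumed)."""
    return {
        path.name: json.loads(path.read_text())
        for path in sorted(Path(results_dir).glob("*.json"))
    }


# Usage, after a run with --results-dir "result_outputs":
#   for name, content in load_results("result_outputs").items():
#       print(name, type(content).__name__)
```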

The correctness tests were implemented with the following workflow in mind:

import ray

from transformers import LlamaTokenizerFast

from llmperf.ray_clients.openai_chat_completions_client import (

OpenAIChatCompletionsClient,

)

from llmperf.models import RequestConfig

from llmperf.requests_launcher import RequestsLauncher

# Copying the environment variables and passing them to ray.init() is necessary

# for any of the clients to work.

ray.init(runtime_env={"env_vars": {"OPENAI_API_BASE" : "https://api.endpoints.anyscale.com/v1",

"OPENAI_API_KEY" : "YOUR_API_KEY"}})

base_prompt = "hello_world"

tokenizer = LlamaTokenizerFast.from_pretrained(

"hf-internal-testing/llama-tokenizer"

)

base_prompt_len = len(tokenizer.encode(base_prompt))

prompt = (base_prompt, base_prompt_len)

# Create a client for spawning requests

clients = [OpenAIChatCompletionsClient.remote()]

req_launcher = RequestsLauncher(clients)

req_config = RequestConfig(

model="meta-llama/Llama-2-7b-chat-hf",

prompt=prompt

)

req_launcher.launch_requests(req_config)

result = req_launcher.get_next_ready(block=True)

print(result)

To implement a new LLM client, you need to implement the base class llmperf.ray_llm_client.LLMClient and decorate it as a Ray actor.

from typing import Any, Dict, Tuple

import ray

from llmperf.models import RequestConfig

from llmperf.ray_llm_client import LLMClient

# Assumption: this README does not show where Metrics is defined, so a plain dict alias stands in for it.

Metrics = Dict[str, Any]

@ray.remote

class CustomLLMClient(LLMClient):

    def llm_request(self, request_config: RequestConfig) -> Tuple[Metrics, str, RequestConfig]:

        """Make a single completion request to an LLM API.

        Returns:

            Metrics about the performance characteristics of the request.

            The text generated by the request to the LLM API.

            The request_config used to make the request. This is mainly for logging purposes.

        """

        ...

The old LLMPerf code base can be found in the llmperf-legacy repo.


Alternative AI tools for llmperf

Similar Open Source Tools


gfm-rag

The GFM-RAG is a graph foundation model-powered pipeline that combines graph neural networks to reason over knowledge graphs and retrieve relevant documents for question answering. It features a knowledge graph index, efficiency in multi-hop reasoning, generalizability to unseen datasets, transferability for fine-tuning, compatibility with agent-based frameworks, and interpretability of reasoning paths. The tool can be used for conducting retrieval and question answering tasks using pre-trained models or fine-tuning on custom datasets.

SPAG

This repository contains the implementation of Self-Play of Adversarial Language Game (SPAG) as described in the paper 'Self-playing Adversarial Language Game Enhances LLM Reasoning'. The SPAG involves training Language Models (LLMs) in an adversarial language game called Adversarial Taboo. The repository provides tools for imitation learning, self-play episode collection, and reinforcement learning on game episodes to enhance LLM reasoning abilities. The process involves training models using GPUs, launching imitation learning, conducting self-play episodes, assigning rewards based on outcomes, and learning the SPAG model through reinforcement learning. Continuous improvements on reasoning benchmarks can be observed by repeating the episode-collection and SPAG-learning processes.

mcp-llm-bridge

The MCP LLM Bridge is a tool that acts as a bridge connecting Model Context Protocol (MCP) servers to OpenAI-compatible LLMs. It provides a bidirectional protocol translation layer between MCP and OpenAI's function-calling interface, enabling any OpenAI-compatible language model to leverage MCP-compliant tools through a standardized interface. The tool supports primary integration with the OpenAI API and offers additional compatibility for local endpoints that implement the OpenAI API specification. Users can configure the tool for different endpoints and models, facilitating the execution of complex queries and tasks using cloud-based or local models like Ollama and LM Studio.

aidermacs

Aidermacs is an AI pair programming tool for Emacs that integrates Aider, a powerful open-source AI pair programming tool. It provides top performance on the SWE Bench, support for multi-file edits, real-time file synchronization, and broad language support. Aidermacs delivers an Emacs-centric experience with features like intelligent model selection, flexible terminal backend support, smarter syntax highlighting, enhanced file management, and streamlined transient menus. It thrives on community involvement, encouraging contributions, issue reporting, idea sharing, and documentation improvement.

llm2vec

LLM2Vec is a simple recipe to convert decoder-only LLMs into text encoders. It consists of 3 simple steps: 1) enabling bidirectional attention, 2) training with masked next token prediction, and 3) unsupervised contrastive learning. The model can be further fine-tuned to achieve state-of-the-art performance.

minuet-ai.el

Minuet AI is a tool that brings the grace and harmony of a minuet to your coding process. It offers AI-powered code completion with specialized prompts and enhancements for chat-based LLMs, as well as Fill-in-the-middle (FIM) completion for compatible models. The tool supports multiple AI providers such as OpenAI, Claude, Gemini, Codestral, Ollama, and OpenAI-compatible providers. It provides customizable configuration options and streaming support for completion delivery even with slower LLMs.

single-file-agents

Single File Agents (SFA) is a collection of powerful single-file agents built on top of uv, a modern Python package installer and resolver. These agents aim to perform specific tasks efficiently, demonstrating precise prompt engineering and GenAI patterns. The repository contains agents built across major GenAI providers like Gemini, OpenAI, and Anthropic. Each agent is self-contained, minimal, and built on modern Python for fast and reliable dependency management. Users can run these scripts from their server or directly from a gist. The agents are patternful, emphasizing the importance of setting up effective prompts, tools, and processes for reusability.

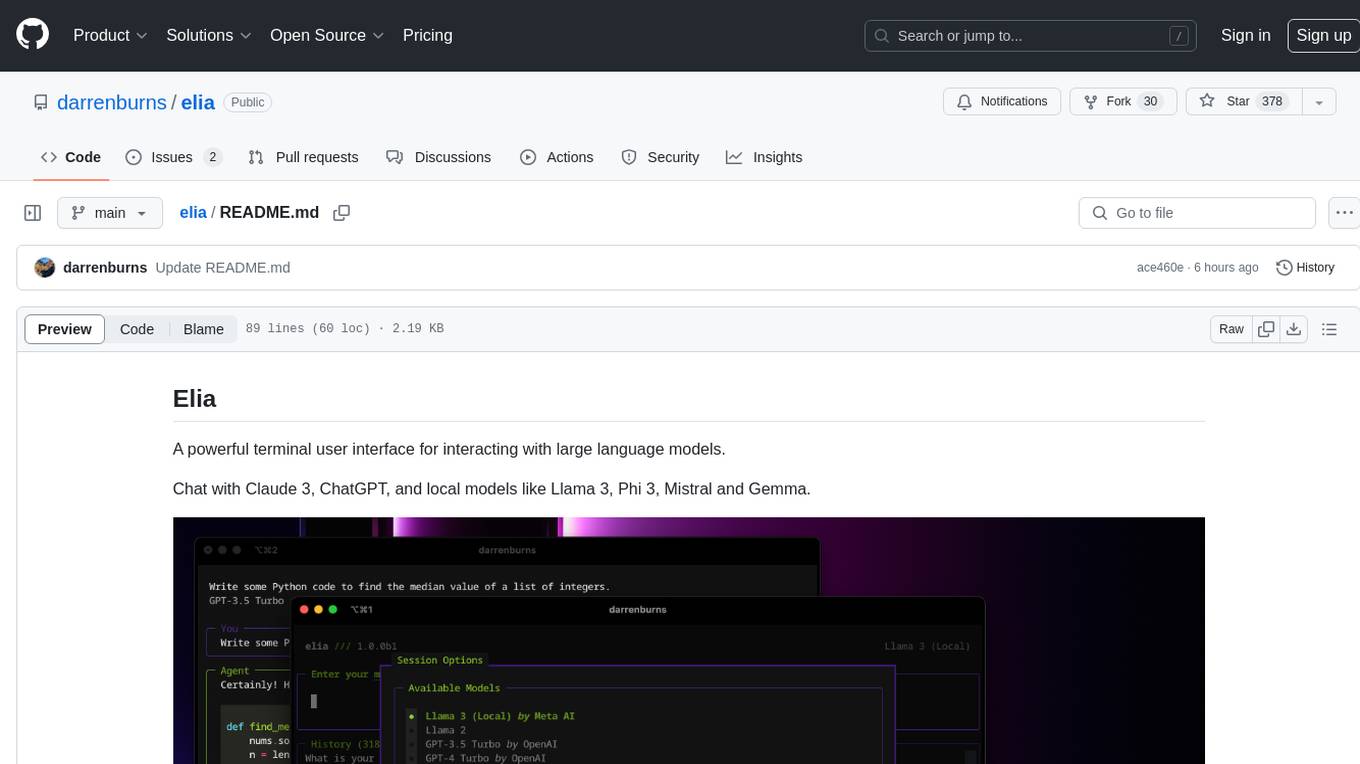

elia

Elia is a powerful terminal user interface designed for interacting with large language models. It allows users to chat with models like Claude 3, ChatGPT, Llama 3, Phi 3, Mistral, and Gemma. Conversations are stored locally in a SQLite database, ensuring privacy. Users can run local models through 'ollama' without data leaving their machine. Elia offers easy installation with pipx and supports various environment variables for different models. It provides a quick start to launch chats and manage local models. Configuration options are available to customize default models, system prompts, and add new models. Users can import conversations from ChatGPT and wipe the database when needed. Elia aims to enhance user experience in interacting with language models through a user-friendly interface.

vinagent

Vinagent is a lightweight and flexible library designed for building smart agent assistants across various industries. It provides a simple yet powerful foundation for creating AI-powered customer service bots, data analysis assistants, or domain-specific automation agents. With its modular tool system, users can easily extend their agent's capabilities by integrating a wide range of tools that are self-contained, well-documented, and can be registered dynamically. Vinagent allows users to scale and adapt their agents to new tasks or environments effortlessly.

trickPrompt-engine

This repository contains a vulnerability mining engine based on GPT technology. The engine is designed to identify logic vulnerabilities in code by utilizing task-driven prompts. It does not require prior knowledge or fine-tuning and focuses on prompt design rather than model design. The tool is effective in real-world projects and should not be used for academic vulnerability testing. It supports scanning projects in various languages, with current support for Solidity. The engine is configured through prompts and environment settings, enabling users to scan for vulnerabilities in their codebase. Future updates aim to optimize code structure, add more language support, and enhance usability through command line mode. The tool has received a significant audit bounty of $50,000+ as of May 2024.

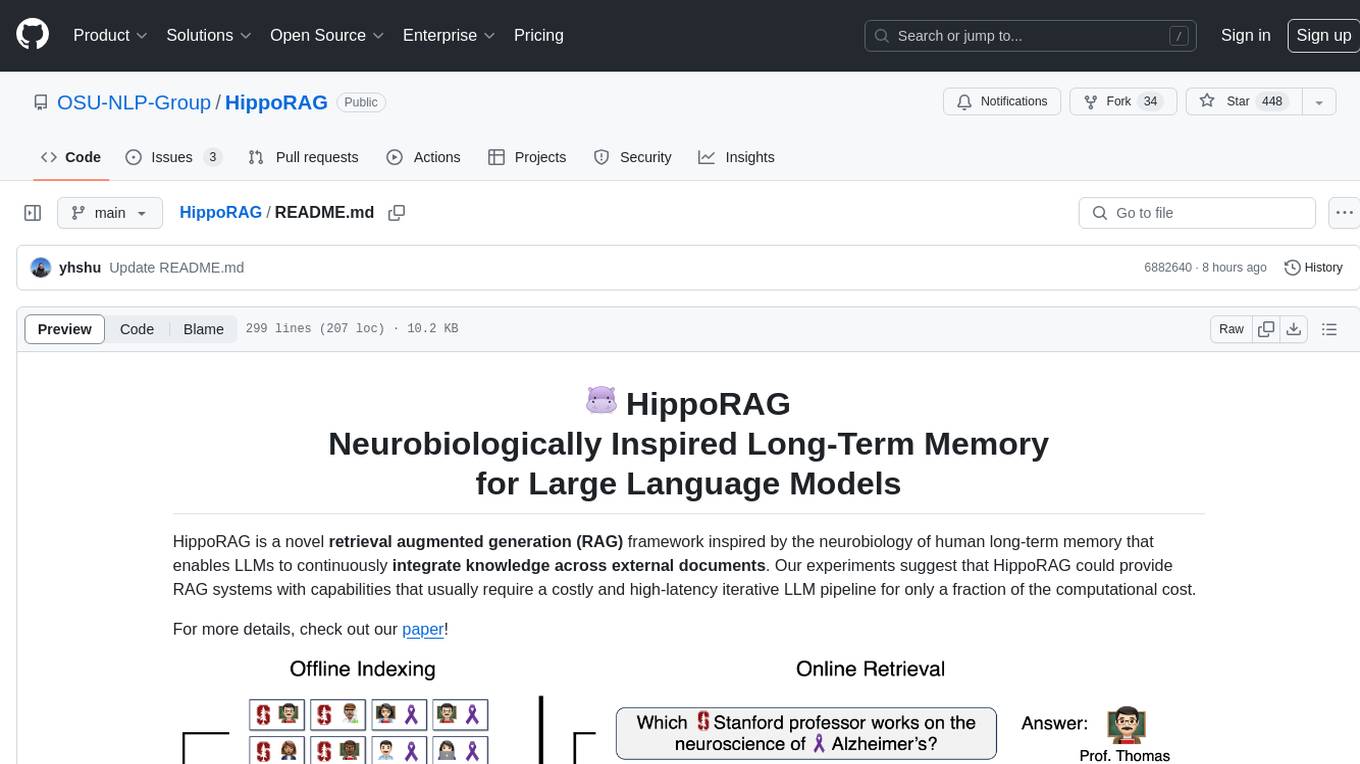

HippoRAG

HippoRAG is a novel retrieval augmented generation (RAG) framework inspired by the neurobiology of human long-term memory that enables Large Language Models (LLMs) to continuously integrate knowledge across external documents. It provides RAG systems with capabilities that usually require a costly and high-latency iterative LLM pipeline for only a fraction of the computational cost. The tool facilitates setting up retrieval corpus, indexing, and retrieval processes for LLMs, offering flexibility in choosing different online LLM APIs or offline LLM deployments through LangChain integration. Users can run retrieval on pre-defined queries or integrate directly with the HippoRAG API. The tool also supports reproducibility of experiments and provides data, baselines, and hyperparameter tuning scripts for research purposes.

Avalon-LLM

Avalon-LLM is a repository containing the official code for AvalonBench and the Avalon agent Strategist. AvalonBench evaluates Large Language Models (LLMs) playing The Resistance: Avalon, a board game requiring deductive reasoning, coordination, collaboration, and deception skills. Strategist utilizes LLMs to learn strategic skills through self-improvement, including high-level strategic evaluation and low-level execution guidance. The repository provides instructions for running AvalonBench, setting up Strategist, and conducting experiments with different agents in the game environment.

mjai.app

mjai.app is a platform for mahjong AI competition. It contains an implementation of a mahjong game simulator for evaluating submission files. The simulator runs Docker internally, and there is a base class for developing bots that communicate via the mjai protocol. Submission files are deployed in a Docker container, and the Docker image is pushed to Docker Hub. The Mjai protocol used is customized based on Mortal's Mjai Engine implementation.

odoo-expert

RAG-Powered Odoo Documentation Assistant is a comprehensive documentation processing and chat system that converts Odoo's documentation to a searchable knowledge base with an AI-powered chat interface. It supports multiple Odoo versions (16.0, 17.0, 18.0) and provides semantic search capabilities powered by OpenAI embeddings. The tool automates the conversion of RST to Markdown, offers real-time semantic search, context-aware AI-powered chat responses, and multi-version support. It includes a Streamlit-based web UI, REST API for programmatic access, and a CLI for document processing and chat. The system operates through a pipeline of data processing steps and an interface layer for UI and API access to the knowledge base.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

For similar tasks


open-health

OpenHealth is an AI health assistant that helps users manage their health data by leveraging AI and personal health information. It allows users to consolidate health data, parse it smartly, and engage in contextual conversations with GPT-powered AI. The tool supports various data sources like blood test results, health checkup data, personal physical information, family history, and symptoms. OpenHealth aims to empower users to take control of their health by combining data and intelligence for actionable health management.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.