Best AI tools for< Manage Cloud Deployment Workflows >

20 - AI tool Sites

Pulumi

Pulumi is an AI-powered infrastructure as code platform that allows engineers to manage cloud infrastructure using various programming languages like Node.js, Python, Go, .NET, Java, and YAML. It offers capabilities such as generative AI-powered cloud management, security enforcement through policies, and automated deployment workflows. Pulumi Insights enables faster infrastructure code authoring through AI, while Pulumi Cloud provides managed services for infrastructure as code and secrets management. The platform is praised for its ease of use, developer experience, and ability to centralize and secure secrets management.

Azure Static Web Apps

Azure Static Web Apps is a platform provided by Microsoft Azure for building and deploying modern web applications. It allows developers to easily host static web content and serverless APIs with seamless integration to popular frameworks like React, Angular, and Vue. With Azure Static Web Apps, developers can quickly set up continuous integration and deployment workflows, enabling them to focus on building great user experiences without worrying about infrastructure management.

Prodvana

Prodvana is an intelligent deployment platform that helps businesses automate and streamline their software deployment process. It provides a variety of features to help businesses improve the speed, reliability, and security of their deployments. Prodvana is a cloud-based platform that can be used with any type of infrastructure, including on-premises, hybrid, and multi-cloud environments. It is also compatible with a wide range of DevOps tools and technologies. Prodvana's key features include: Intent-based deployments: Prodvana uses intent-based deployment technology to automate the deployment process. This means that businesses can simply specify their deployment goals, and Prodvana will automatically generate and execute the necessary steps to achieve those goals. This can save businesses a significant amount of time and effort. Guardrails for deployments: Prodvana provides a variety of guardrails to help businesses ensure the security and reliability of their deployments. These guardrails include approvals, database validations, automatic deployment validation, and simple interfaces to add custom guardrails. This helps businesses to prevent errors and reduce the risk of outages. Frictionless DevEx: Prodvana provides a frictionless developer experience by tracking commits through the infrastructure, ensuring complete visibility beyond just Docker images. This helps developers to quickly identify and resolve issues, and it also makes it easier to collaborate with other team members. Intelligence with Clairvoyance: Prodvana's Clairvoyance feature provides businesses with insights into the impact of their deployments before they are executed. This helps businesses to make more informed decisions about their deployments and to avoid potential problems. Easy integrations: Prodvana integrates seamlessly with a variety of DevOps tools and technologies. This makes it easy for businesses to use Prodvana with their existing workflows and processes.

BotX

BotX is a no-code AI platform that enables users to automate and deploy generative AI workflows, chatbots, RAGs, and multi-agent solutions. With production-ready AI systems, users can increase productivity, build AI agents and chatbots, automate workflows, create or process documents, and connect models effortlessly. The platform offers a range of models and fine-tuning options, seamless integration with advanced models like ChatGPT, and enterprise-grade results with grounded responses. Users can protect their data with various deployment options and benefit from dedicated support, integrations-ready solutions, and tailor-made solutions for enterprises and SMEs.

Integrail

Integrail is an AI tool that simplifies the process of building AI applications by allowing users to design and deploy multi-agent applications without the need for coding skills. It offers a range of features such as integrating external apps, optimizing cost and accuracy, and deploying applications securely in the cloud or on-premises. Integrail Studio provides access to popular AI models and enables users to transform business workflows efficiently.

Operant

Operant is a cloud-native runtime protection platform that offers instant visibility and control from infrastructure to APIs. It provides AI security shield for applications, API threat protection, Kubernetes security, automatic microsegmentation, and DevSecOps solutions. Operant helps defend APIs, protect Kubernetes, and shield AI applications by detecting and blocking various attacks in real-time. It simplifies security for cloud-native environments with zero instrumentation, application code changes, or integrations.

StreamDeploy

StreamDeploy is an AI-powered cloud deployment platform designed to streamline and secure application deployment for agile teams. It offers a range of features to help developers maximize productivity and minimize costs, including a Dockerfile generator, automated security checks, and support for continuous integration and delivery (CI/CD) pipelines. StreamDeploy is currently in closed beta, but interested users can book a demo or follow the company on Twitter for updates.

Cirroe AI

Cirroe AI is an intelligent chatbot designed to help users deploy and troubleshoot their AWS cloud infrastructure quickly and efficiently. With Cirroe AI, users can experience seamless automation, reduced downtime, and increased productivity by simplifying their AWS cloud operations. The chatbot allows for fast deployments, intuitive debugging, and cost-effective solutions, ultimately saving time and boosting efficiency in managing cloud infrastructure.

aqua

aqua is a comprehensive Quality Assurance (QA) management tool designed to streamline testing processes and enhance testing efficiency. It offers a wide range of features such as AI Copilot, bug reporting, test management, requirements management, user acceptance testing, and automation management. aqua caters to various industries including banking, insurance, manufacturing, government, tech companies, and medical sectors, helping organizations improve testing productivity, software quality, and defect detection ratios. The tool integrates with popular platforms like Jira, Jenkins, JMeter, and offers both Cloud and On-Premise deployment options. With AI-enhanced capabilities, aqua aims to make testing faster, more efficient, and error-free.

Singlebase

Singlebase.cloud is an AI-powered platform that serves as an alternative to Firebase and Supabase. It offers a comprehensive suite of tools and services to facilitate faster development and deployment through a unified API. The platform includes features such as Vector Database, NoSQL Database, Vector Embeddings, Generative AI, RAG, Knowledge Base, File storage, and Authentication, catering to a wide range of development needs.

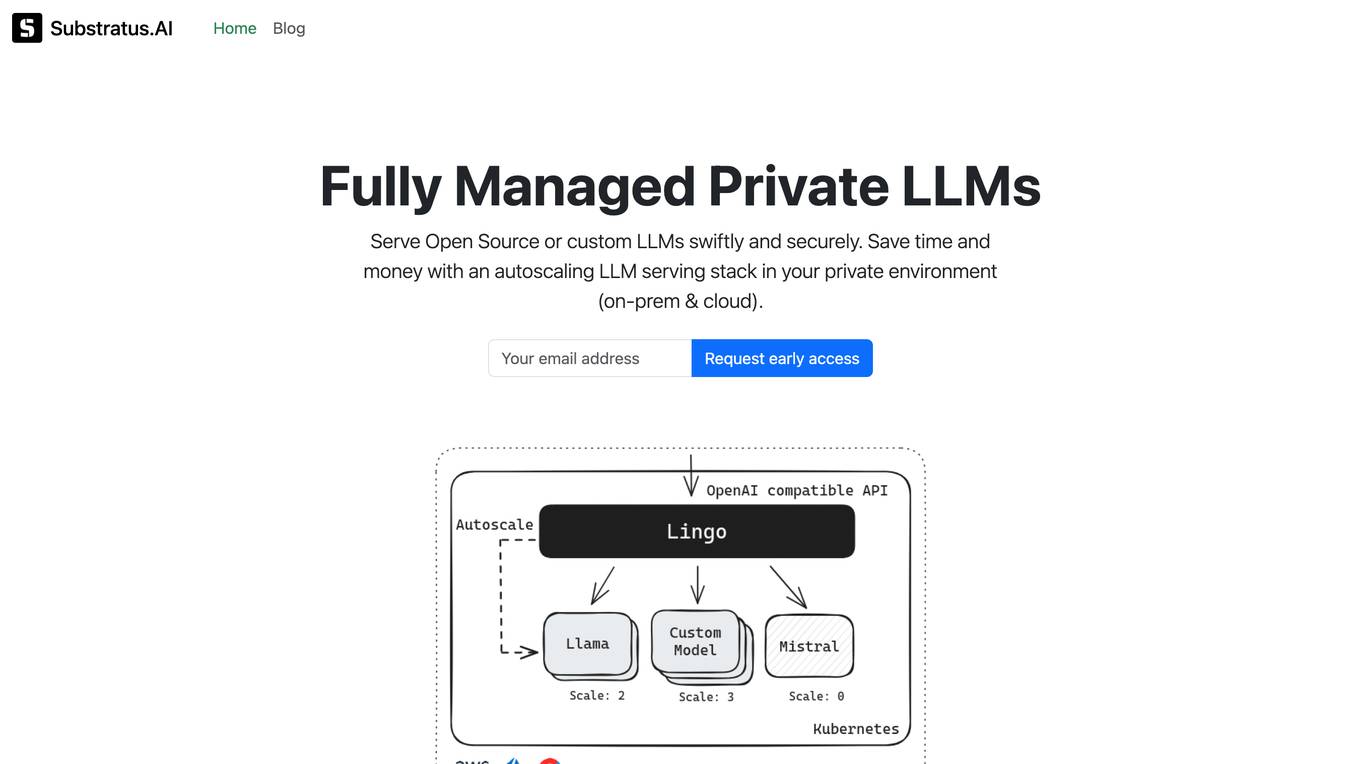

Substratus.AI

Substratus.AI is a fully managed private LLMs platform that allows users to serve LLMs (Llama and Mistral) in their own cloud account. It enables users to keep control of their data while reducing OpenAI costs by up to 10x. With Substratus.AI, users can utilize LLMs in production in hours instead of weeks, making it a convenient and efficient solution for AI model deployment.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

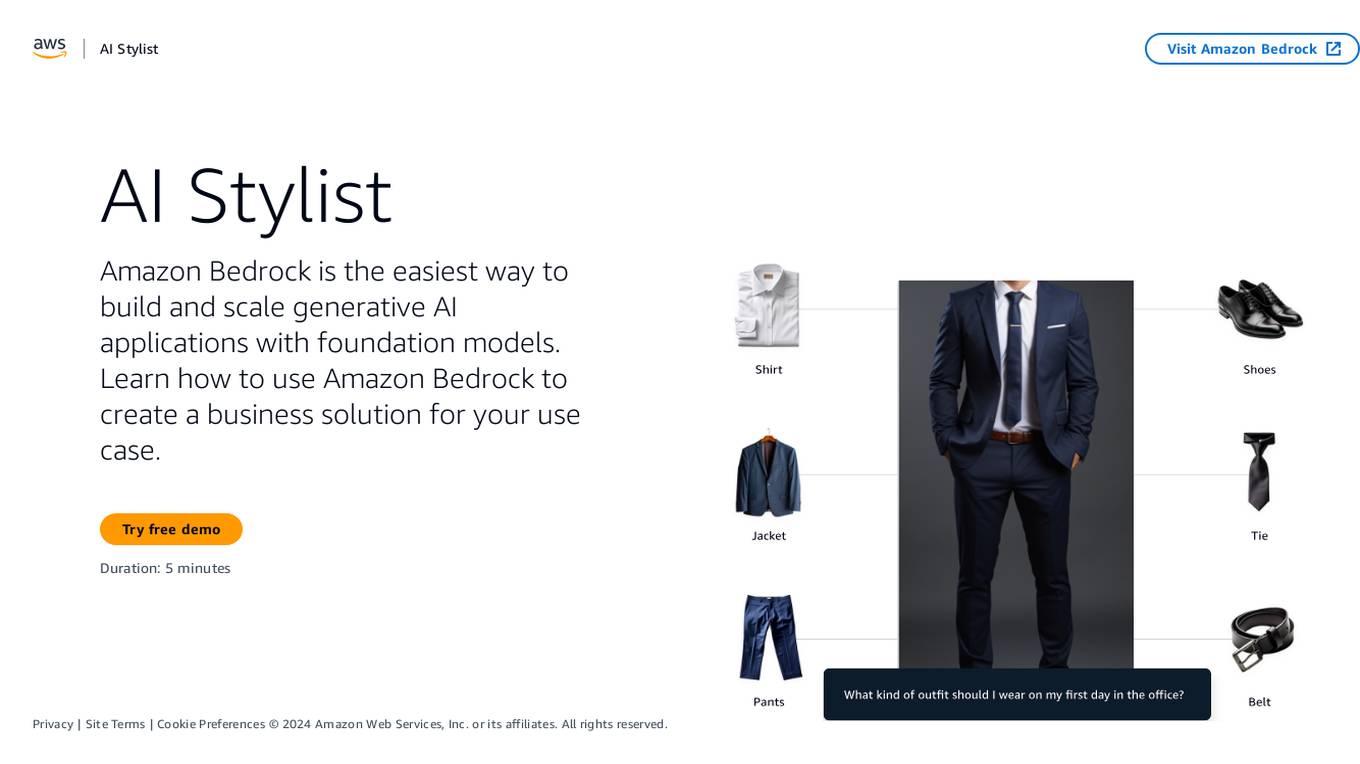

Amazon Bedrock

Amazon Bedrock is a cloud-based platform that enables developers to build, deploy, and manage serverless applications. It provides a fully managed environment that takes care of the infrastructure and operations, so developers can focus on writing code. Bedrock also offers a variety of tools and services to help developers build and deploy their applications, including a code editor, a debugger, and a deployment pipeline.

Seldon

Seldon is an MLOps platform that helps enterprises deploy, monitor, and manage machine learning models at scale. It provides a range of features to help organizations accelerate model deployment, optimize infrastructure resource allocation, and manage models and risk. Seldon is trusted by the world's leading MLOps teams and has been used to install and manage over 10 million ML models. With Seldon, organizations can reduce deployment time from months to minutes, increase efficiency, and reduce infrastructure and cloud costs.

HostAI

HostAI is a platform that allows users to host their artificial intelligence models and applications with ease. It provides a user-friendly interface for managing and deploying AI projects, eliminating the need for complex server setups. With HostAI, users can seamlessly run their AI algorithms and applications in a secure and efficient environment. The platform supports various AI frameworks and libraries, making it versatile for different AI projects. HostAI simplifies the process of AI deployment, enabling users to focus on developing and improving their AI models.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

Vairflow

Vairflow is an AI-driven Integrated Development Environment (IDE) that empowers developers to build faster and more efficiently. It simplifies complex ideas into components, allowing seamless development and deployment of backend microservices, web UI, and mobile app UI. With upcoming AI features like code generation, completion, and explanation, Vairflow aims to enhance the coding experience. The platform also offers flexible deployment options, cost-effective usage, and seamless collaboration, ensuring no vendor lock-in and pay-as-you-go pricing model.

PoplarML

PoplarML is a platform that enables the deployment of production-ready, scalable ML systems with minimal engineering effort. It offers one-click deploys, real-time inference, and framework agnostic support. With PoplarML, users can seamlessly deploy ML models using a CLI tool to a fleet of GPUs and invoke their models through a REST API endpoint. The platform supports Tensorflow, Pytorch, and JAX models.

Heroku

Heroku is a cloud platform that lets companies build, deliver, monitor, and scale apps. It simplifies the process of deploying applications by providing a platform as a service (PaaS) that supports various programming languages. With Heroku, developers can focus on coding without worrying about infrastructure management.

Heroku

Heroku is a cloud platform as a service (PaaS) that enables developers to build, run, and operate applications entirely in the cloud. It supports several programming languages and offers a range of tools and services to streamline the development process. With Heroku, developers can focus on building their applications without worrying about infrastructure management.

20 - Open Source AI Tools

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

ai-enablement-stack

The AI Enablement Stack is a curated collection of venture-backed companies, tools, and technologies that enable developers to build, deploy, and manage AI applications. It provides a structured view of the AI development ecosystem across five key layers: Agent Consumer Layer, Observability and Governance Layer, Engineering Layer, Intelligence Layer, and Infrastructure Layer. Each layer focuses on specific aspects of AI development, from end-user interaction to model training and deployment. The stack aims to help developers find the right tools for building AI applications faster and more efficiently, assist engineering leaders in making informed decisions about AI infrastructure and tooling, and help organizations understand the AI development landscape to plan technology adoption.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

awesome-generative-ai-data-scientist

A curated list of 50+ resources to help you become a Generative AI Data Scientist. This repository includes resources on building GenAI applications with Large Language Models (LLMs), and deploying LLMs and GenAI with Cloud-based solutions.

enterprise-azureai

Azure OpenAI Service is a central capability with Azure API Management, providing guidance and tools for organizations to implement Azure OpenAI in a production environment with an emphasis on cost control, secure access, and usage monitoring. It includes infrastructure-as-code templates, CI/CD pipelines, secure access management, usage monitoring, load balancing, streaming requests, and end-to-end samples like ChatApp and Azure Dashboards.

free-for-life

A massive list including a huge amount of products and services that are completely free! ⭐ Star on GitHub • 🤝 Contribute # Table of Contents * APIs, Data & ML * Artificial Intelligence * BaaS * Code Editors * Code Generation * DNS * Databases * Design & UI * Domains * Email * Font * For Students * Forms * Linux Distributions * Messaging & Streaming * PaaS * Payments & Billing * SSL

aiid

The Artificial Intelligence Incident Database (AIID) is a collection of incidents involving the development and use of artificial intelligence (AI). The database is designed to help researchers, policymakers, and the public understand the potential risks and benefits of AI, and to inform the development of policies and practices to mitigate the risks and promote the benefits of AI. The AIID is a collaborative project involving researchers from the University of California, Berkeley, the University of Washington, and the University of Toronto.

cb-tumblebug

CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It provides an overview, features, and architecture. The tool supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy a multi-cloud infra with GPUs, enjoy multiple LLMs in parallel, and utilize LLM-related scripts. The tool requires Linux, Docker, Docker Compose, and Golang for building the source. Users can run CB-TB with Docker Compose or from the Makefile, set up prerequisites, contribute to the project, and view a list of contributors. The tool is licensed under an open-source license.

call-center-ai

Call Center AI is an AI-powered call center solution leveraging Azure and OpenAI GPT. It allows for AI agent-initiated phone calls or direct calls to the bot from a configured phone number. The bot is customizable for various industries like insurance, IT support, and customer service, with features such as accessing claim information, conversation history, language change, SMS sending, and more. The project is a proof of concept showcasing the integration of Azure Communication Services, Azure Cognitive Services, and Azure OpenAI for an automated call center solution.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

lance

Lance is a modern columnar data format optimized for ML workflows and datasets. It offers high-performance random access, vector search, zero-copy automatic versioning, and ecosystem integrations with Apache Arrow, Pandas, Polars, and DuckDB. Lance is designed to address the challenges of the ML development cycle, providing a unified data format for collection, exploration, analytics, feature engineering, training, evaluation, deployment, and monitoring. It aims to reduce data silos and streamline the ML development process.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including: * Semantic search * Image search * Product recommendations * Chatbots * Anomaly detection Qdrant offers a variety of features, including: * Payload storage and filtering * Hybrid search with sparse vectors * Vector quantization and on-disk storage * Distributed deployment * Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

airflow-chart

This Helm chart bootstraps an Airflow deployment on a Kubernetes cluster using the Helm package manager. The version of this chart does not correlate to any other component. Users should not expect feature parity between OSS airflow chart and the Astronomer airflow-chart for identical version numbers. To install this helm chart remotely (using helm 3) kubectl create namespace airflow helm repo add astronomer https://helm.astronomer.io helm install airflow --namespace airflow astronomer/airflow To install this repository from source sh kubectl create namespace airflow helm install --namespace airflow . Prerequisites: Kubernetes 1.12+ Helm 3.6+ PV provisioner support in the underlying infrastructure Installing the Chart: sh helm install --name my-release . The command deploys Airflow on the Kubernetes cluster in the default configuration. The Parameters section lists the parameters that can be configured during installation. Upgrading the Chart: First, look at the updating documentation to identify any backwards-incompatible changes. To upgrade the chart with the release name `my-release`: sh helm upgrade --name my-release . Uninstalling the Chart: To uninstall/delete the `my-release` deployment: sh helm delete my-release The command removes all the Kubernetes components associated with the chart and deletes the release. Updating DAGs: Bake DAGs in Docker image The recommended way to update your DAGs with this chart is to build a new docker image with the latest code (`docker build -t my-company/airflow:8a0da78 .`), push it to an accessible registry (`docker push my-company/airflow:8a0da78`), then update the Airflow pods with that image: sh helm upgrade my-release . --set images.airflow.repository=my-company/airflow --set images.airflow.tag=8a0da78 Docker Images: The Airflow image that are referenced as the default values in this chart are generated from this repository: https://github.com/astronomer/ap-airflow. Other non-airflow images used in this chart are generated from this repository: https://github.com/astronomer/ap-vendor. Parameters: The complete list of parameters supported by the community chart can be found on the Parameteres Reference page, and can be set under the `airflow` key in this chart. The following tables lists the configurable parameters of the Astronomer chart and their default values. | Parameter | Description | Default | | :----------------------------- | :-------------------------------------------------------------------------------------------------------- | :---------------------------- | | `ingress.enabled` | Enable Kubernetes Ingress support | `false` | | `ingress.acme` | Add acme annotations to Ingress object | `false` | | `ingress.tlsSecretName` | Name of secret that contains a TLS secret | `~` | | `ingress.webserverAnnotations` | Annotations added to Webserver Ingress object | `{}` | | `ingress.flowerAnnotations` | Annotations added to Flower Ingress object | `{}` | | `ingress.baseDomain` | Base domain for VHOSTs | `~` | | `ingress.auth.enabled` | Enable auth with Astronomer Platform | `true` | | `extraObjects` | Extra K8s Objects to deploy (these are passed through `tpl`). More about Extra Objects. | `[]` | | `sccEnabled` | Enable security context constraints required for OpenShift | `false` | | `authSidecar.enabled` | Enable authSidecar | `false` | | `authSidecar.repository` | The image for the auth sidecar proxy | `nginxinc/nginx-unprivileged` | | `authSidecar.tag` | The image tag for the auth sidecar proxy | `stable` | | `authSidecar.pullPolicy` | The K8s pullPolicy for the the auth sidecar proxy image | `IfNotPresent` | | `authSidecar.port` | The port the auth sidecar exposes | `8084` | | `gitSyncRelay.enabled` | Enables git sync relay feature. | `False` | | `gitSyncRelay.repo.url` | Upstream URL to the git repo to clone. | `~` | | `gitSyncRelay.repo.branch` | Branch of the upstream git repo to checkout. | `main` | | `gitSyncRelay.repo.depth` | How many revisions to check out. Leave as default `1` except in dev where history is needed. | `1` | | `gitSyncRelay.repo.wait` | Seconds to wait before pulling from the upstream remote. | `60` | | `gitSyncRelay.repo.subPath` | Path to the dags directory within the git repository. | `~` | Specify each parameter using the `--set key=value[,key=value]` argument to `helm install`. For example, sh helm install --name my-release --set executor=CeleryExecutor --set enablePodLaunching=false . Walkthrough using kind: Install kind, and create a cluster We recommend testing with Kubernetes 1.25+, example: sh kind create cluster --image kindest/node:v1.25.11 Confirm it's up: sh kubectl cluster-info --context kind-kind Add Astronomer's Helm repo sh helm repo add astronomer https://helm.astronomer.io helm repo update Create namespace + install the chart sh kubectl create namespace airflow helm install airflow -n airflow astronomer/airflow It may take a few minutes. Confirm the pods are up: sh kubectl get pods --all-namespaces helm list -n airflow Run `kubectl port-forward svc/airflow-webserver 8080:8080 -n airflow` to port-forward the Airflow UI to http://localhost:8080/ to confirm Airflow is working. Login as _admin_ and password _admin_. Build a Docker image from your DAGs: 1. Start a project using astro-cli, which will generate a Dockerfile, and load your DAGs in. You can test locally before pushing to kind with `astro airflow start`. `sh mkdir my-airflow-project && cd my-airflow-project astro dev init` 2. Then build the image: `sh docker build -t my-dags:0.0.1 .` 3. Load the image into kind: `sh kind load docker-image my-dags:0.0.1` 4. Upgrade Helm deployment: sh helm upgrade airflow -n airflow --set images.airflow.repository=my-dags --set images.airflow.tag=0.0.1 astronomer/airflow Extra Objects: This chart can deploy extra Kubernetes objects (assuming the role used by Helm can manage them). For Astronomer Cloud and Enterprise, the role permissions can be found in the Commander role. yaml extraObjects: - apiVersion: batch/v1beta1 kind: CronJob metadata: name: "{{ .Release.Name }}-somejob" spec: schedule: "*/10 * * * *" concurrencyPolicy: Forbid jobTemplate: spec: template: spec: containers: - name: myjob image: ubuntu command: - echo args: - hello restartPolicy: OnFailure Contributing: Check out our contributing guide! License: Apache 2.0 with Commons Clause

HAMi

HAMi is a Heterogeneous AI Computing Virtualization Middleware designed to manage Heterogeneous AI Computing Devices in a Kubernetes cluster. It allows for device sharing, device memory control, device type specification, and device UUID specification. The tool is easy to use and does not require modifying task YAML files. It includes features like hard limits on device memory, partial device allocation, streaming multiprocessor limits, and core usage specification. HAMi consists of components like a mutating webhook, scheduler extender, device plugins, and in-container virtualization techniques. It is suitable for scenarios requiring device sharing, specific device memory allocation, GPU balancing, low utilization optimization, and scenarios needing multiple small GPUs. The tool requires prerequisites like NVIDIA drivers, CUDA version, nvidia-docker, Kubernetes version, glibc version, and helm. Users can install, upgrade, and uninstall HAMi, submit tasks, and monitor cluster information. The tool's roadmap includes supporting additional AI computing devices, video codec processing, and Multi-Instance GPUs (MIG).

oci-data-science-ai-samples

The Oracle Cloud Infrastructure Data Science and AI services Examples repository provides demos, tutorials, and code examples showcasing various features of the OCI Data Science service and AI services. It offers tools for data scientists to develop and deploy machine learning models efficiently, with features like Accelerated Data Science SDK, distributed training, batch processing, and machine learning pipelines. Whether you're a beginner or an experienced practitioner, OCI Data Science Services provide the resources needed to build, train, and deploy models easily.

holmesgpt

HolmesGPT is an open-source DevOps assistant powered by OpenAI or any tool-calling LLM of your choice. It helps in troubleshooting Kubernetes, incident response, ticket management, automated investigation, and runbook automation in plain English. The tool connects to existing observability data, is compliance-friendly, provides transparent results, supports extensible data sources, runbook automation, and integrates with existing workflows. Users can install HolmesGPT using Brew, prebuilt Docker container, Python Poetry, or Docker. The tool requires an API key for functioning and supports OpenAI, Azure AI, and self-hosted LLMs.

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

20 - OpenAI Gpts

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

Cloud Services Management Advisor

Manages and optimizes organization's cloud resources and services.

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

Alexandre Leroy : Architecte de Solutions Cloud

Architecte cloud chez KingLand et passionné de nature. Conception d'architectures cloud, expertise en solutions cloud, capacité d'innovation technologique, compétences en gestion de projet, collaboration interdépartementale.

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

Nimbus Navigator

Cloud Engineer Expert, guiding in cloud tech, projects, career, and industry trends.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.