Best AI tools for deploying ML systems

20 - AI tool Sites

PoplarML

PoplarML is a platform that enables the deployment of production-ready, scalable ML systems with minimal engineering effort. It offers one-click deploys, real-time inference, and framework-agnostic support. With PoplarML, users deploy ML models to a fleet of GPUs using a CLI tool and invoke them through a REST API endpoint. The platform supports TensorFlow, PyTorch, and JAX models.
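As a rough illustration of what invoking a deployed model through a REST API endpoint can look like, here is a minimal Python sketch using only the standard library. The endpoint URL, the bearer-token header, and the `{"inputs": ...}` payload shape are all hypothetical placeholders; this listing does not describe PoplarML's actual API, so consult the platform's documentation for the real values.

```python
import json
import urllib.request

# Hypothetical values: the real endpoint URL, auth scheme, and payload
# format are not given in this listing.
ENDPOINT_URL = "https://example.invalid/v1/models/my-model/infer"
API_KEY = "YOUR_API_KEY"


def build_inference_request(inputs, url=ENDPOINT_URL, api_key=API_KEY):
    """Build (but do not send) a JSON POST request for a model endpoint."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def invoke(inputs, timeout=30.0):
    """Send the request and decode the JSON response body."""
    request = build_inference_request(inputs)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `invoke([1.0, 2.0, 3.0])` would send the request once `ENDPOINT_URL` and `API_KEY` point at a real deployment; `build_inference_request` is kept separate so the request can be inspected without any network access.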

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

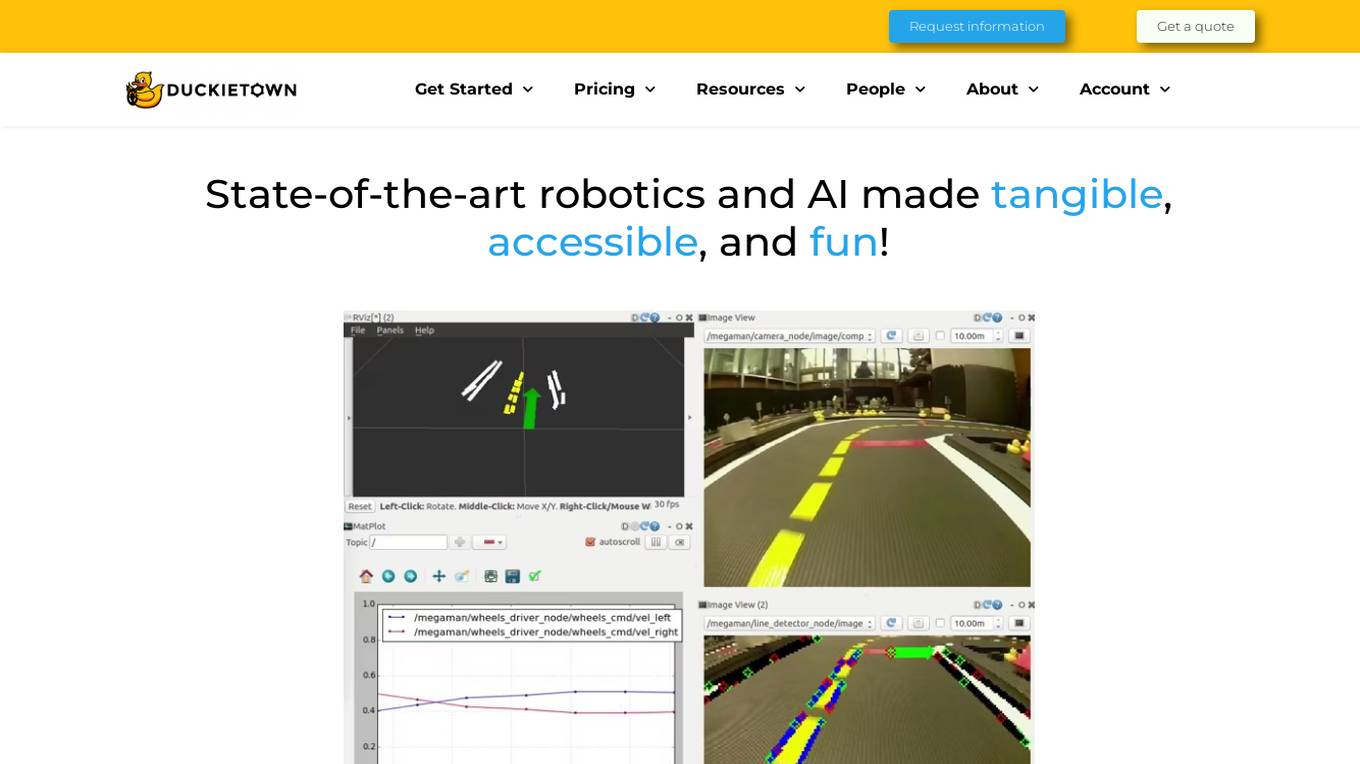

Duckietown

Duckietown is a platform for delivering cutting-edge robotics and AI learning experiences. It offers teaching resources to instructors, hands-on activities to learners, an accessible research platform to researchers, and a state-of-the-art ecosystem for professional training. Duckietown's mission is to make robotics and AI education state-of-the-art, hands-on, and accessible to all.

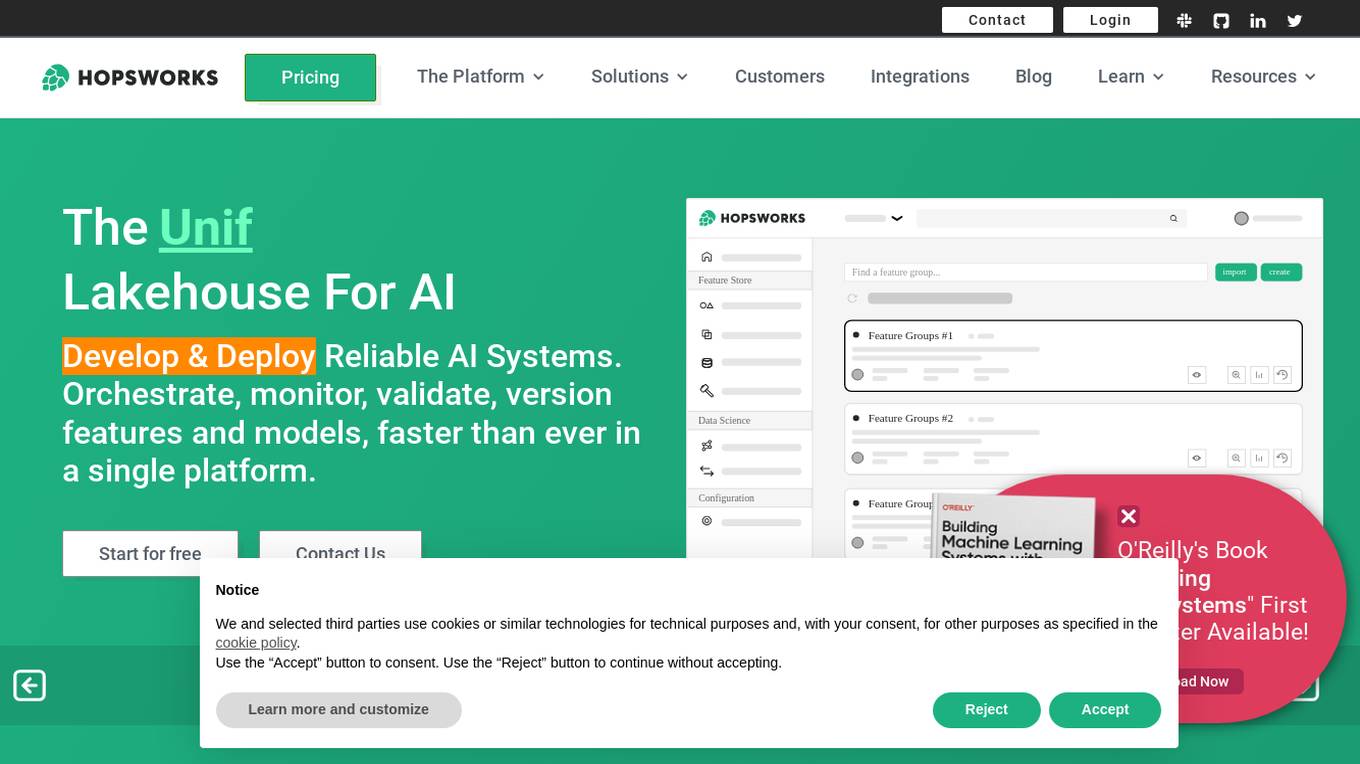

Hopsworks

Hopsworks is an AI platform that offers a comprehensive solution for building, deploying, and monitoring machine learning systems. It provides features such as a Feature Store, real-time ML capabilities, and generative AI solutions. Hopsworks enables users to develop and deploy reliable AI systems, orchestrate and monitor models, and personalize machine learning models with private data. The platform supports batch and real-time ML tasks, with the flexibility to deploy on-premises or in the cloud.

SiMa.ai

SiMa.ai offers high-performance, power-efficient, and scalable edge machine learning solutions for sectors such as automotive, industrial, healthcare, drones, and government. The platform provides MLSoC™ boards, DevKit 2.0, Palette Software 1.2, and Edgematic™ for developers to accelerate complete applications and deploy AI-enabled solutions. SiMa.ai's Machine Learning System on Chip (MLSoC) enables full-pipeline implementations of real-world ML solutions for edge AI development.

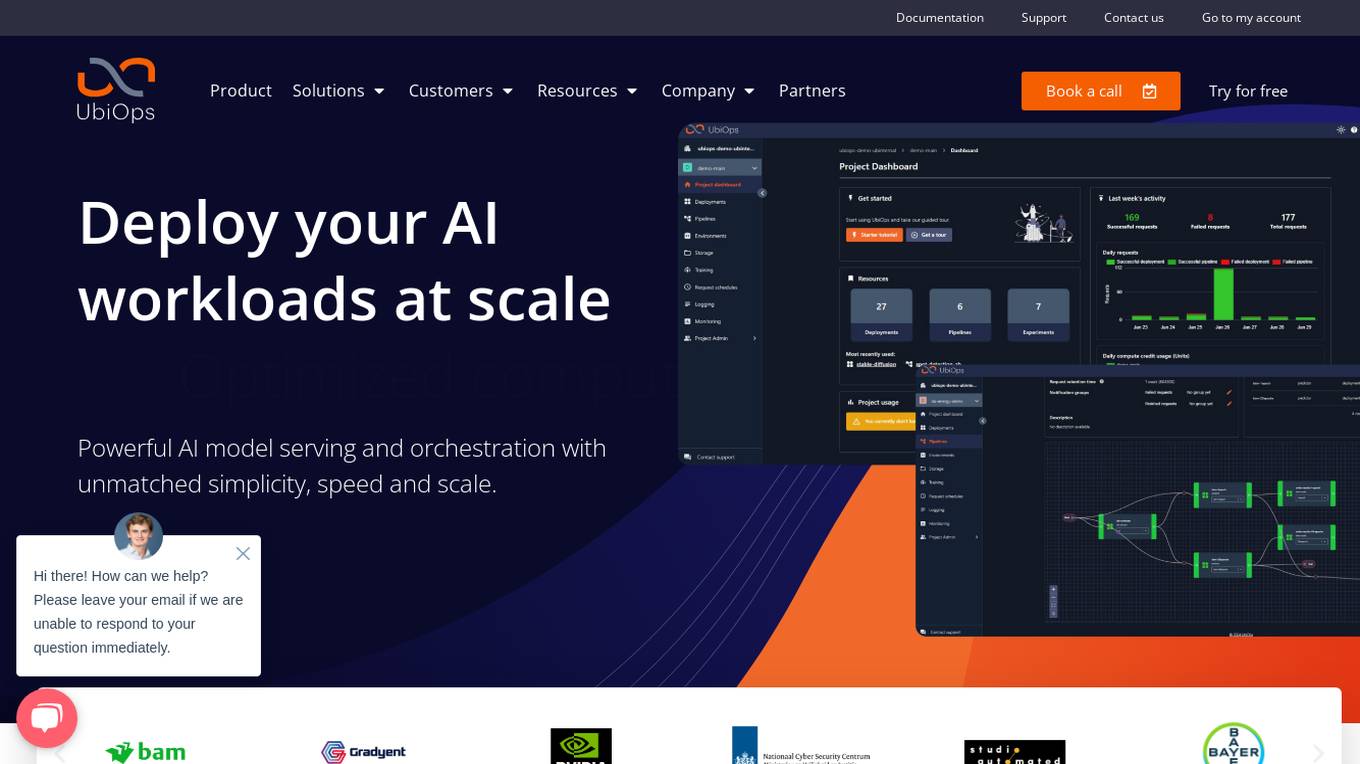

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI and ML workloads as reliable and secure microservices. It offers AI model serving and orchestration with an emphasis on simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications with a built-in workflow management system.

Kubeflow

Kubeflow is an open-source machine learning (ML) toolkit that makes deploying ML workflows on Kubernetes simple, portable, and scalable. It provides a unified interface for model training, serving, and hyperparameter tuning, and supports a variety of popular ML frameworks including PyTorch, TensorFlow, and XGBoost. Kubeflow is designed to be used with Kubernetes, a container orchestration system that automates the deployment, management, and scaling of containerized applications.

Modal

Modal is a high-performance cloud platform designed for developers on AI, data, and ML teams. It offers a serverless environment for running generative AI models, large-scale batch jobs, job queues, and more. With Modal, users can bring their own code and leverage the platform's optimized container file system for fast cold boots and seamless autoscaling. The platform is engineered for large-scale workloads, allowing users to scale to hundreds of GPUs, pay only for what they use, and deploy functions to the cloud in seconds without the need for YAML or Dockerfiles. Modal also provides features for job scheduling, web endpoints, observability, and security compliance.

Mystic.ai

Mystic.ai is an AI tool designed to deploy and scale Machine Learning models with ease. It offers a fully managed Kubernetes platform that runs in your own cloud, allowing users to deploy ML models in their own Azure/AWS/GCP account or in a shared GPU cluster. Mystic.ai provides cost optimizations, fast inference, simpler developer experience, and performance optimizations to ensure high-performance AI model serving. With features like pay-as-you-go API, cloud integration with AWS/Azure/GCP, and a beautiful dashboard, Mystic.ai simplifies the deployment and management of ML models for data scientists and AI engineers.

PredictModel

PredictModel is an AI tool that specializes in creating custom Machine Learning models tailored to meet unique requirements. The platform offers a comprehensive three-step process, including generating synthetic data, training ML models, and deploying them to AWS. PredictModel helps businesses streamline processes, improve customer segmentation, enhance client interaction, and boost overall business performance. The tool maximizes accuracy through customized synthetic data generation and saves time and money by providing expert ML engineers. With a focus on automated lead prioritization, fraud detection, cost optimization, and planning, PredictModel aims to stay ahead of the curve in the ML industry.

TensorFlow

TensorFlow is an end-to-end platform for machine learning. It provides a wide range of tools and resources to help developers build, train, and deploy ML models. TensorFlow is used by researchers and developers all over the world to solve real-world problems in a variety of domains, including computer vision, natural language processing, and robotics.

Salad

Salad is a distributed GPU cloud platform that offers fully managed and massively scalable services for AI applications. It advertises the lowest-priced AI transcription on the market and supports workloads such as image generation, voice AI, computer vision, data collection, and batch processing. Salad leverages consumer GPUs to deliver cost-effective AI/ML inference at scale. The platform is used by hundreds of machine learning and data science teams for its affordability, scalability, and ease of deployment.

JFrog ML

JFrog ML is an AI platform designed to streamline AI development from prototype to production. It offers a unified MLOps platform to build, train, deploy, and manage AI workflows at scale. With features such as a Feature Store, LLMOps, and model monitoring, JFrog ML helps AI teams collaborate efficiently and optimize AI and ML models in production.

ML Clever

ML Clever is a no-code machine learning platform that empowers users to build powerful ML models with one click, explore what-if scenarios to guide decisions, and create interactive dashboards to explain results. It combines automated machine learning, interactive dashboards, and flexible prediction tools in one platform, allowing users to transform data into business insights without the need for data scientists or coding skills.

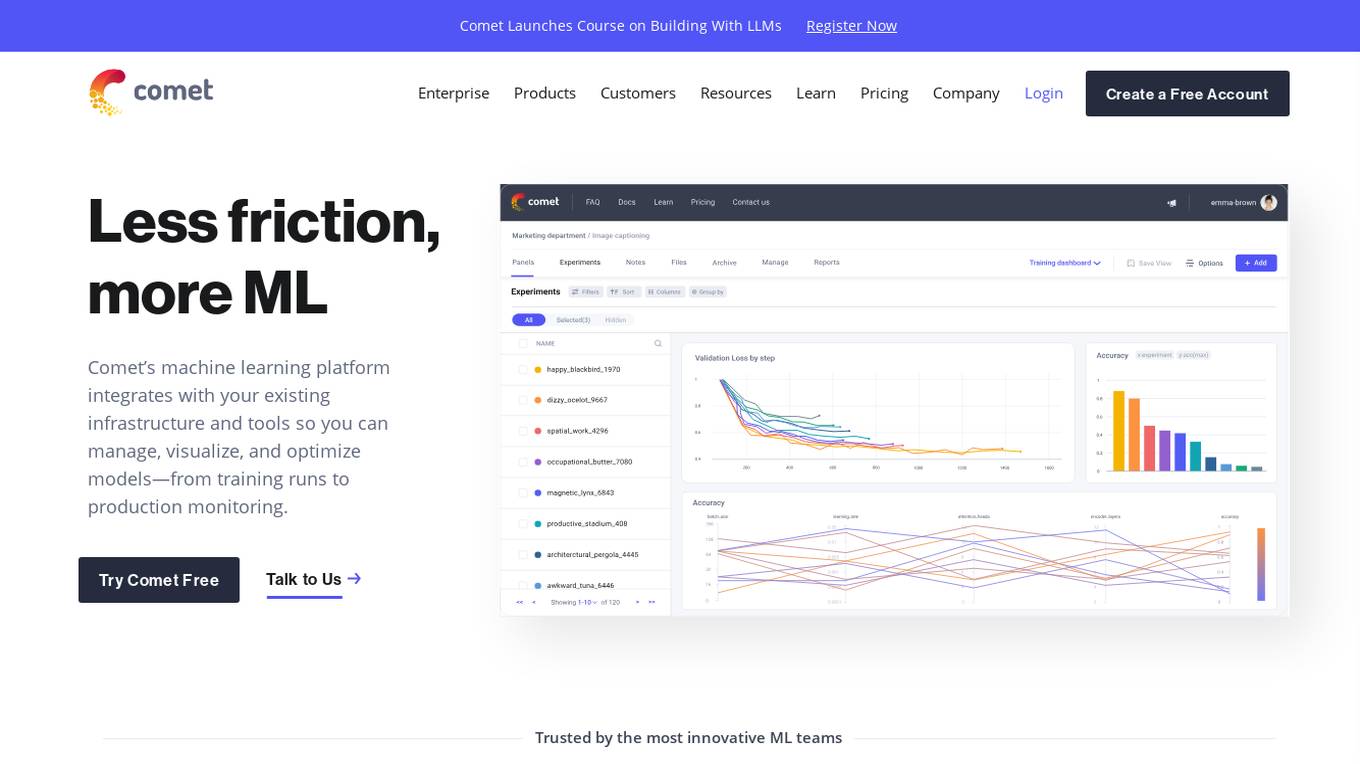

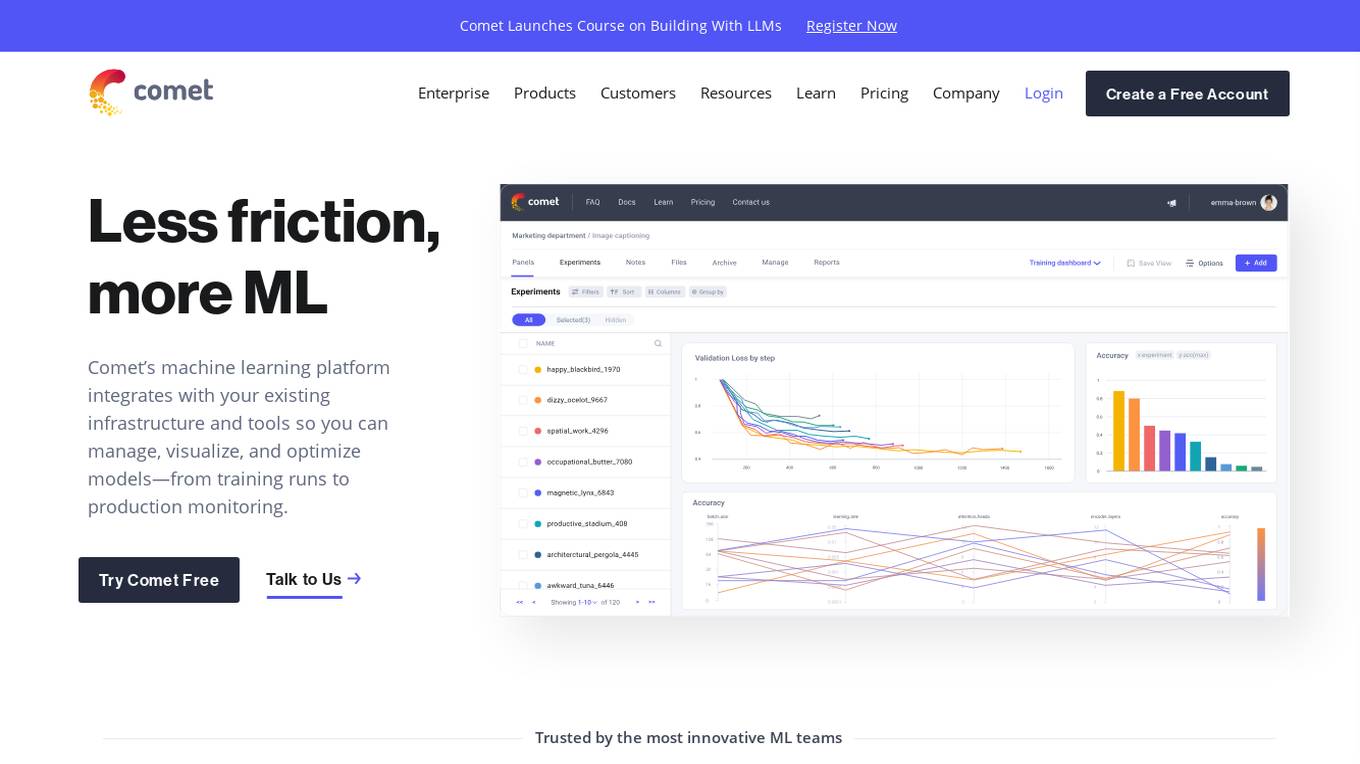

Comet ML

Comet ML is a machine learning platform that integrates with your existing infrastructure and tools so you can manage, visualize, and optimize models—from training runs to production monitoring.

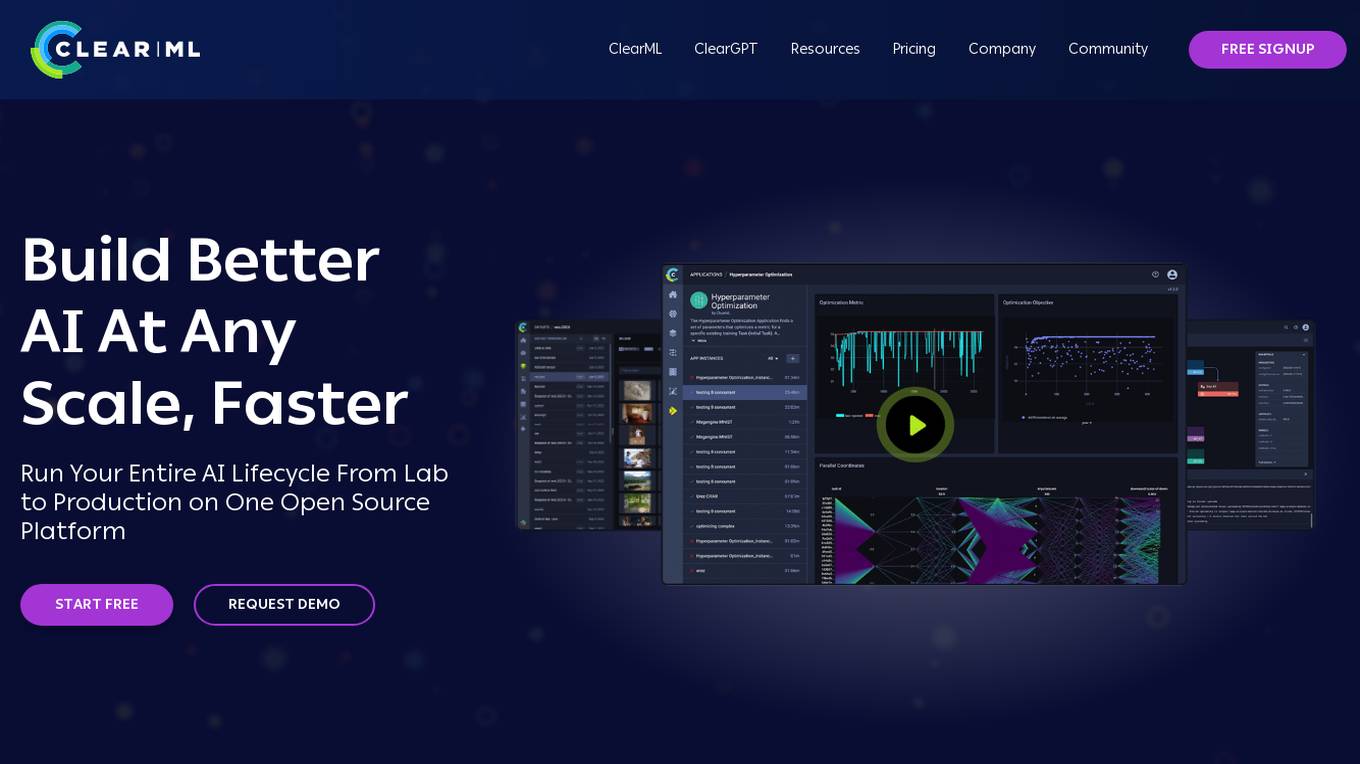

ClearML

ClearML is an open-source, end-to-end platform for continuous machine learning (ML). It provides a unified platform for data management, experiment tracking, model training, deployment, and monitoring. ClearML is designed to make it easy for teams to collaborate on ML projects and to ensure that models are deployed and maintained in a reliable and scalable way.

CHAI AI

CHAI AI is a leading conversational AI platform that focuses on building AI solutions for quant traders. The platform has secured significant funding rounds to enhance both its computational capabilities and talent acquisition. CHAI AI offers a range of features and advantages, including model upgrades, deployment of various AI models, and efficient inference techniques. The platform aims to provide users with the ability to create their own ChatAIs and offers a unique approach to model blending for improved user retention. With a strong team of AI researchers and engineers, CHAI AI continues to innovate in the field of AI technology.

Striveworks

Striveworks is an AI application that offers a Machine Learning Operations Platform designed to help organizations build, deploy, maintain, monitor, and audit machine learning models efficiently. It provides features such as rapid model deployment, data and model auditability, low-code interface, flexible deployment options, and operationalizing AI data science with real returns. Striveworks aims to accelerate the ML lifecycle, save time and money in model creation, and enable non-experts to leverage AI for data-driven decisions.

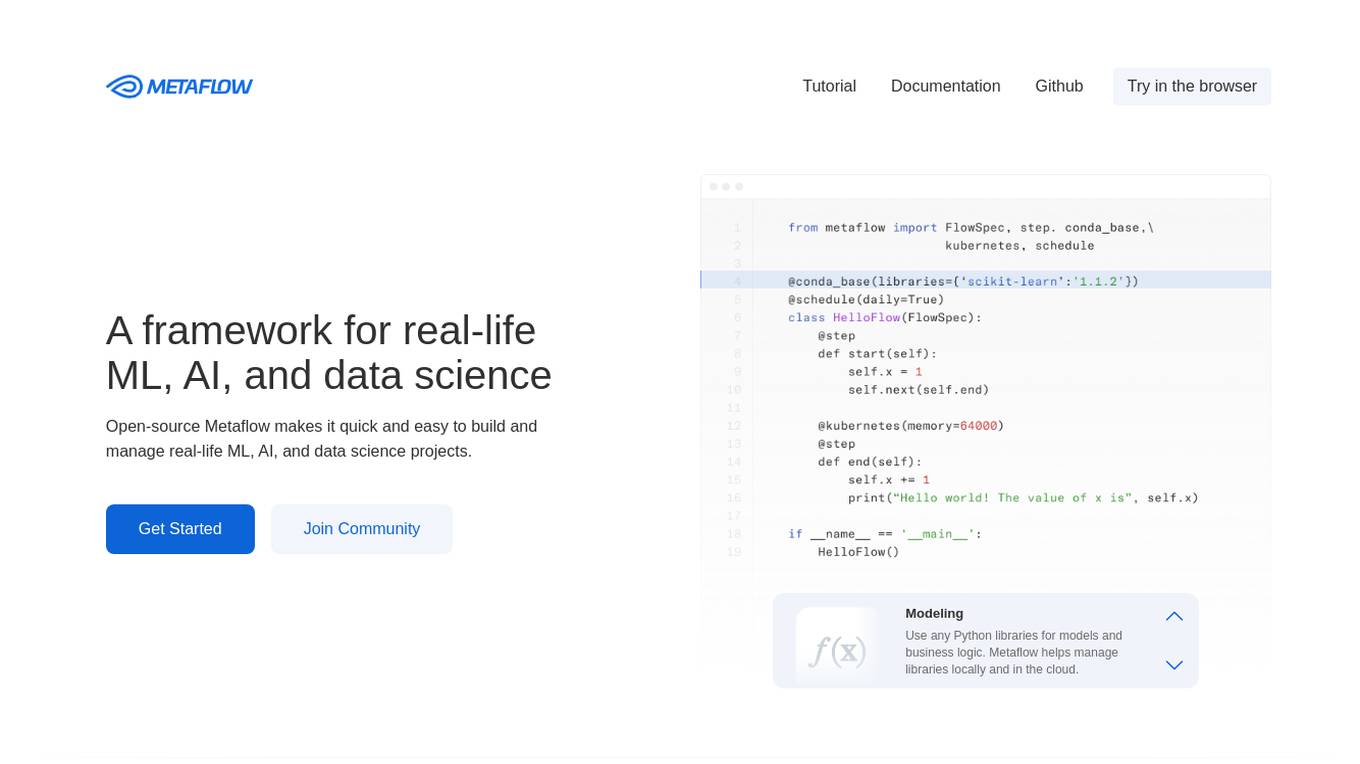

Metaflow

Metaflow is an open-source framework for building and managing real-life ML, AI, and data science projects. It makes it easy to use any Python libraries for models and business logic, deploy workflows to production with a single command, track and store variables inside the flow automatically for easy experiment tracking and debugging, and create robust workflows in plain Python. Metaflow is used by hundreds of companies, including Netflix, 23andMe, and Realtor.com.

1 - Open Source AI Tools

AI-System-School

AI System School is a curated list of research in machine learning systems, focusing on ML/DL infra, LLM infra, domain-specific infra, ML/LLM conferences, and general resources. It provides resources such as data processing, training systems, video systems, autoML systems, and more. The repository aims to help users navigate the landscape of AI systems and machine learning infrastructure, offering insights into conferences, surveys, books, videos, courses, and blogs related to the field.

20 - OpenAI GPTs

Instructor GCP ML

Trainer for the GCP ML Engineer certification, with detailed answers and explanations.

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements ready to help you build and scale your ML projects.

Frontend Developer

AI front-end developer expert in coding React, Next.js, Vue, Svelte, TypeScript, Gatsby, Angular, HTML, CSS, and JavaScript, and advanced in Flexbox, Tailwind, and Material Design. Mentors junior, intermediate, and senior front-end developers alike in coding and debugging. Let’s code, build, and deploy a SaaS app.

Azure Arc Expert

Azure Arc expert providing guidance on architecture, deployment, and management.

Docker and Docker Swarm Assistant

Expert in Docker and Docker Swarm solutions and troubleshooting.

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.