Best AI tools for< create synthetic data >

20 - AI tool Sites

Syntho

Syntho is a self-service AI-generated synthetic data platform that enables users to create realistic and privacy-preserving synthetic data for various applications, including testing, analytics, and data sharing. It offers a range of features such as AI-generated synthetic data, smart de-identification, test data management, and rule-based synthetic data generation. Syntho's synthetic data is assessed and approved by the data experts of SAS, ensuring high accuracy and quality.

Avanzai

Avanzai is an AI application designed to assist wealth managers in managing portfolio risk efficiently. It offers autonomous AI agents that automate risk management, scenario analysis, and compliance monitoring. Users can build AI agents to scrape real-time market insights, monitor economic and earnings calendars, and integrate data sources to make better decisions. Avanzai aims to streamline risk management processes and provide tailored insights for businesses.

Datagen

Datagen is a platform that provides synthetic data for computer vision. Synthetic data is artificially generated data that can be used to train machine learning models. Datagen's data is generated using a variety of techniques, including 3D modeling, computer graphics, and machine learning. The company's data is used by a variety of industries, including automotive, security, smart office, fitness, cosmetics, and facial applications.

Synthesis AI

Synthesis AI is a synthetic data platform that enables more capable and ethical computer vision AI. It provides on-demand labeled images and videos, photorealistic images, and 3D generative AI to help developers build better models faster. Synthesis AI's products include Synthesis Humans, which allows users to create detailed images and videos of digital humans with rich annotations; Synthesis Scenarios, which enables users to craft complex multi-human simulations across a variety of environments; and a range of applications for industries such as ID verification, automotive, avatar creation, virtual fashion, AI fitness, teleconferencing, visual effects, and security.

Incribo

Incribo is a company that provides synthetic data for training machine learning models. Synthetic data is artificially generated data that is designed to mimic real-world data. This data can be used to train machine learning models without the need for real-world data, which can be expensive and difficult to obtain. Incribo's synthetic data is high quality and affordable, making it a valuable resource for machine learning developers.

syntheticAIdata

syntheticAIdata is a platform that provides synthetic data for training vision AI models. Synthetic data is generated artificially, and it can be used to augment existing real-world datasets or to create new datasets from scratch. syntheticAIdata's platform is easy to use, and it can be integrated with leading cloud platforms. The company's mission is to make synthetic data accessible to everyone, and to help businesses overcome the challenges of acquiring high-quality data for training their vision AI models.

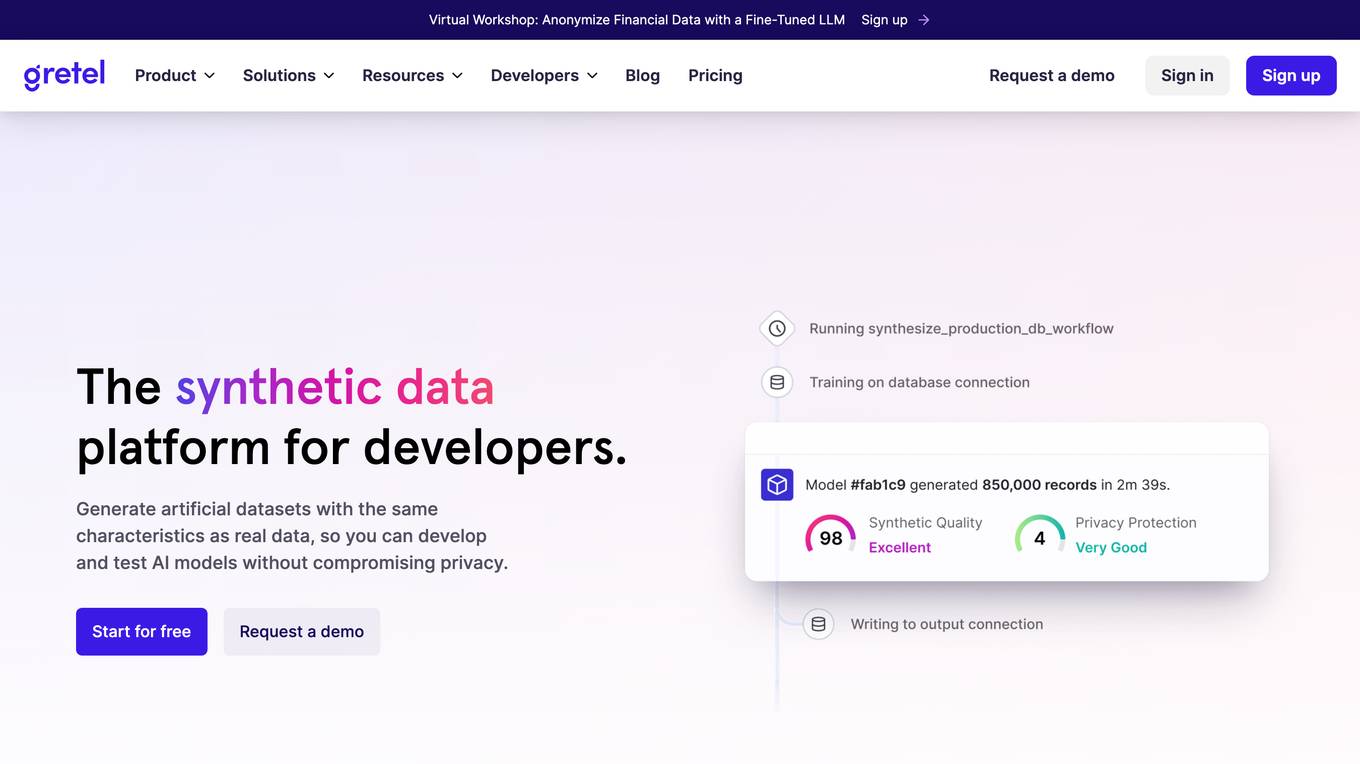

Gretel.ai

Gretel.ai is a multimodal synthetic data platform designed for developers. It allows users to generate synthetic data from input prompts, build data pipelines, transform data using flexible rule-based methods, and evaluate the quality of synthetic data. The platform aims to improve machine learning robustness, enable safe data sharing, and power generative AI models across various industries such as finance, healthcare, and the public sector. Gretel.ai offers a comprehensive set of tools and resources, including the Gretel CLI, Blueprints, and open-source projects, to help developers create accurate and safe synthetic datasets for their AI models.

OpinioAI

OpinioAI is an AI-powered market research tool that allows users to gain business critical insights from data without the need for costly polls, surveys, or interviews. With OpinioAI, users can create AI personas and market segments to understand customer preferences, affinities, and opinions. The platform democratizes research by providing efficient, effective, and budget-friendly solutions for businesses, students, and individuals seeking valuable insights. OpinioAI leverages Large Language Models to simulate humans and extract opinions in detail, enabling users to analyze existing data, synthesize new insights, and evaluate content from the perspective of their target audience.

Marvin

Marvin is a lightweight toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. It provides a variety of AI functions for text, images, audio, and video, as well as interactive tools and utilities. Marvin is designed to be easy to use and integrate, and it can be used to build a wide range of applications, from simple chatbots to complex AI-powered systems.

QuarkIQL

QuarkIQL is a generative testing tool for computer vision APIs. It allows users to create custom test images and requests with just a few clicks. QuarkIQL also provides a log of your queries so you can run more experiments without starting from square one.

Gretel.ai

Gretel.ai is an AI tool that helps users incorporate generative AI into their data by generating synthetic data that is as good or better than the existing data. Users can fine-tune custom AI models and use Gretel's APIs to generate unlimited synthesized datasets, perform privacy-preserving transformations on sensitive data, and identify PII with advanced NLP detection. Gretel's APIs make it simple to generate anonymized and safe synthetic data, allowing users to innovate faster and preserve privacy while doing it. Gretel's platform includes Synthetics, Transform, and Classify APIs that provide users with a complete set of tools to create safe data. Gretel also offers a range of resources, including documentation, tutorials, GitHub projects, and open-source SDKs for developers. Gretel Cloud runners allow users to keep data contained by running Gretel containers in their environment or scaling out workloads to the cloud in seconds. Overall, Gretel.ai is a powerful AI tool for generating synthetic data that can help users unlock innovation and achieve more with safe access to the right data.

Resemble AI

Resemble AI is an advanced AI voice generator tool that offers text-to-speech and speech-to-speech capabilities. It provides rapid voice cloning, generative voice AI platform, and deepfake detection features. Users can create synthetic voices in multiple languages, edit audio with neural audio editing, and deploy AI voices for various applications like gaming, entertainment, and advertisement. Resemble AI prioritizes security and offers on-premises deployment options for enhanced data control and integration. The tool is designed for enterprises seeking cutting-edge voice AI solutions for videos, audiobooks, podcasts, and more.

Synthetic Users

Synthetic Users is an AI-powered user research tool that allows users to conduct user research without the traditional challenges and headaches. The platform leverages advanced AI architecture to create accurate synthetic interviews and surveys, enabling users to gain valuable insights for various applications. Synthetic Users uses the power of Large Language Models (LLMs) to generate human-like AI participants with high Synthetic Organic Parity, ensuring relevant and reflective data. The tool offers a multi-agent architecture for dynamic interactions, continuous learning, and adaptation, mimicking real human behaviors. Users can run problem exploration interviews, concept testing interviews, and enrich their own Synthetic Users with proprietary data. With a focus on enhancing user satisfaction and identifying market needs, Synthetic Users provides a complete suite of research solutions for product discovery, marketing, and growth.

This Beach Does Not Exist

This Beach Does Not Exist is an AI application powered by StyleGAN2-ADA network, capable of generating realistic beach images. The website showcases AI-generated beach landscapes created from a dataset of approximately 20,000 images. Users can explore the training progress of the network, generate random images, utilize K-Means Clustering for image grouping, and download the network for experimentation or retraining purposes. Detailed technical information about the network architecture, dataset, training steps, and metrics is provided. The application is based on the GAN architecture developed by NVIDIA Labs and offers a unique experience of creating virtual beach scenes through AI technology.

Undress AI Pro

Undress AI Pro is a controversial computer vision application that uses machine learning to remove clothing from images of people. It was based on deep learning and generative adversarial networks (GANs). The technology powering Undress AI and DeepNude was based on deep learning and generative adversarial networks (GANs). GANs involve two neural networks competing against each other - a generator creates synthetic images trying to mimic the training data, while a discriminator tries to distinguish the real images from the generated ones. Through this adversarial process, the generator learns to produce increasingly realistic outputs. For Undress AI, the GAN was trained on a dataset of nude and clothed images, allowing it to "unclothe" people in new images by generating the nudity.

Azoo

Azoo is an AI-powered platform that offers a wide range of services in various categories such as logistics, animal, consumer commerce, real estate, law, and finance. It provides tools for data analysis, event management, and guides for users. The platform is designed to streamline processes, enhance decision-making, and improve efficiency in different industries. Azoo is developed by Cubig Corp., a company based in Seoul, South Korea, and aims to revolutionize the way businesses operate through innovative AI solutions.

AiFA Labs

AiFA Labs is an AI platform that offers a comprehensive suite of generative AI products and services for enterprises. The platform enables businesses to create, manage, and deploy generative AI applications responsibly and at scale. With a focus on governance, compliance, and security, AiFA Labs provides a range of AI tools to streamline business operations, enhance productivity, and drive innovation. From AI code assistance to chat interfaces and data synthesis, AiFA Labs empowers organizations to leverage the power of AI for various use cases across different industries.

ChatTTS

ChatTTS is a text-to-speech tool optimized for natural, conversational scenarios. It supports both Chinese and English languages, trained on approximately 100,000 hours of data. With features like multi-language support, large data training, dialog task compatibility, open-source plans, control, security, and ease of use, ChatTTS provides high-quality and natural-sounding voice synthesis. It is designed for conversational tasks, dialogue speech generation, video introductions, educational content synthesis, and more. Users can integrate ChatTTS into their applications using provided API and SDKs for a seamless text-to-speech experience.

WikeAI

WikeAI is an all-in-one AI platform that provides access to top AI models such as GPT-4, Claude3, Mistral, and Llama2. It offers professional-level cross-model integration, allowing users to experience powerful language understanding, speech synthesis, and visual generation technology without switching between multiple systems. WikeAI simplifies the process of using AI for content writing by generating blog articles, product descriptions, social media ads, and more in seconds. The platform offers different pricing plans tailored to various user needs, from casual users to language creators.

Notably

Notably is a research synthesis platform that uses AI to help researchers analyze and interpret data faster. It offers a variety of features, including a research repository, AI research, digital sticky notes, video transcription, and cluster analysis. Notably is used by companies and organizations of all sizes to conduct product research, market research, academic research, and more.

20 - Open Source AI Tools

awesome-synthetic-datasets

This repository focuses on organizing resources for building synthetic datasets using large language models. It covers important datasets, libraries, tools, tutorials, and papers related to synthetic data generation. The goal is to provide pragmatic and practical resources for individuals interested in creating synthetic datasets for machine learning applications.

bonito

Bonito is an open-source model for conditional task generation, converting unannotated text into task-specific training datasets for instruction tuning. It is a lightweight library built on top of Hugging Face `transformers` and `vllm` libraries. The tool supports various task types such as question answering, paraphrase generation, sentiment analysis, summarization, and more. Users can easily generate synthetic instruction tuning datasets using Bonito for zero-shot task adaptation.

llm-course

The LLM course is divided into three parts: 1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks. 2. 🧑🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques. 3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them. For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way: * 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B. * 🤖 **ChatGPT Assistant**: Requires a premium account. ## 📝 Notebooks A list of notebooks and articles related to large language models. ### Tools | Notebook | Description | Notebook | |----------|-------------|----------| | 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod |  | | 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |  | | 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |  | | ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |  | | 🌳 Model Family Tree | Visualize the family tree of merged models. |  | | 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |  |

DataDreamer

DataDreamer is a powerful open-source Python library designed for prompting, synthetic data generation, and training workflows. It is simple, efficient, and research-grade, allowing users to create prompting workflows, generate synthetic datasets, and train models with ease. The library is built for researchers, by researchers, focusing on correctness, best practices, and reproducibility. It offers features like aggressive caching, resumability, support for bleeding-edge techniques, and easy sharing of datasets and models. DataDreamer enables users to run multi-step prompting workflows, generate synthetic datasets for various tasks, and train models by aligning, fine-tuning, instruction-tuning, and distilling them using existing or synthetic data.

datadreamer

DataDreamer is an advanced toolkit designed to facilitate the development of edge AI models by enabling synthetic data generation, knowledge extraction from pre-trained models, and creation of efficient and potent models. It eliminates the need for extensive datasets by generating synthetic datasets, leverages latent knowledge from pre-trained models, and focuses on creating compact models suitable for integration into any device and performance for specialized tasks. The toolkit offers features like prompt generation, image generation, dataset annotation, and tools for training small-scale neural networks for edge deployment. It provides hardware requirements, usage instructions, available models, and limitations to consider while using the library.

llm-swarm

llm-swarm is a tool designed to manage scalable open LLM inference endpoints in Slurm clusters. It allows users to generate synthetic datasets for pretraining or fine-tuning using local LLMs or Inference Endpoints on the Hugging Face Hub. The tool integrates with huggingface/text-generation-inference and vLLM to generate text at scale. It manages inference endpoint lifetime by automatically spinning up instances via `sbatch`, checking if they are created or connected, performing the generation job, and auto-terminating the inference endpoints to prevent idling. Additionally, it provides load balancing between multiple endpoints using a simple nginx docker for scalability. Users can create slurm files based on default configurations and inspect logs for further analysis. For users without a Slurm cluster, hosted inference endpoints are available for testing with usage limits based on registration status.

distilabel

Distilabel is a framework for synthetic data and AI feedback for AI engineers that require high-quality outputs, full data ownership, and overall efficiency. It helps you synthesize data and provide AI feedback to improve the quality of your AI models. With Distilabel, you can: * **Synthesize data:** Generate synthetic data to train your AI models. This can help you to overcome the challenges of data scarcity and bias. * **Provide AI feedback:** Get feedback from AI models on your data. This can help you to identify errors and improve the quality of your data. * **Improve your AI output quality:** By using Distilabel to synthesize data and provide AI feedback, you can improve the quality of your AI models and get better results.

Vodalus-Expert-LLM-Forge

Vodalus Expert LLM Forge is a tool designed for crafting datasets and efficiently fine-tuning models using free open-source tools. It includes components for data generation, LLM interaction, RAG engine integration, model training, fine-tuning, and quantization. The tool is suitable for users at all levels and is accompanied by comprehensive documentation. Users can generate synthetic data, interact with LLMs, train models, and optimize performance for local execution. The tool provides detailed guides and instructions for setup, usage, and customization.

llm-datasets

LLM Datasets is a repository containing high-quality datasets, tools, and concepts for LLM fine-tuning. It provides datasets with characteristics like accuracy, diversity, and complexity to train large language models for various tasks. The repository includes datasets for general-purpose, math & logic, code, conversation & role-play, and agent & function calling domains. It also offers guidance on creating high-quality datasets through data deduplication, data quality assessment, data exploration, and data generation techniques.

marvin

Marvin is a lightweight AI toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. Each of Marvin's tools is simple and self-documenting, using AI to solve common but complex challenges like entity extraction, classification, and generating synthetic data. Each tool is independent and incrementally adoptable, so you can use them on their own or in combination with any other library. Marvin is also multi-modal, supporting both image and audio generation as well using images as inputs for extraction and classification. Marvin is for developers who care more about _using_ AI than _building_ AI, and we are focused on creating an exceptional developer experience. Marvin users should feel empowered to bring tightly-scoped "AI magic" into any traditional software project with just a few extra lines of code. Marvin aims to merge the best practices for building dependable, observable software with the best practices for building with generative AI into a single, easy-to-use library. It's a serious tool, but we hope you have fun with it. Marvin is open-source, free to use, and made with 💙 by the team at Prefect.

ai-audio-datasets

AI Audio Datasets List (AI-ADL) is a comprehensive collection of datasets consisting of speech, music, and sound effects, used for Generative AI, AIGC, AI model training, and audio applications. It includes datasets for speech recognition, speech synthesis, music information retrieval, music generation, audio processing, sound synthesis, and more. The repository provides a curated list of diverse datasets suitable for various AI audio tasks.

LLM101n

LLM101n is a course focused on building a Storyteller AI Large Language Model (LLM) from scratch in Python, C, and CUDA. The course covers various topics such as language modeling, machine learning, attention mechanisms, tokenization, optimization, device usage, precision training, distributed optimization, datasets, inference, finetuning, deployment, and multimodal applications. Participants will gain a deep understanding of AI, LLMs, and deep learning through hands-on projects and practical examples.

baal

Baal is an active learning library that supports both industrial applications and research use cases. It provides a framework for Bayesian active learning methods such as Monte-Carlo Dropout, MCDropConnect, Deep ensembles, and Semi-supervised learning. Baal helps in labeling the most uncertain items in the dataset pool to improve model performance and reduce annotation effort. The library is actively maintained by a dedicated team and has been used in various research papers for production and experimentation.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

LLM4IR-Survey

LLM4IR-Survey is a collection of papers related to large language models for information retrieval, organized according to the survey paper 'Large Language Models for Information Retrieval: A Survey'. It covers various aspects such as query rewriting, retrievers, rerankers, readers, search agents, and more, providing insights into the integration of large language models with information retrieval systems.

llms-tools

The 'llms-tools' repository is a comprehensive collection of AI tools, open-source projects, and research related to Large Language Models (LLMs) and Chatbots. It covers a wide range of topics such as AI in various domains, open-source models, chats & assistants, visual language models, evaluation tools, libraries, devices, income models, text-to-image, computer vision, audio & speech, code & math, games, robotics, typography, bio & med, military, climate, finance, and presentation. The repository provides valuable resources for researchers, developers, and enthusiasts interested in exploring the capabilities of LLMs and related technologies.

20 - OpenAI Gpts

Synthetic Biologist

A customized ChatGPT designed to excel in the field of synthetic biology, as a scientist, an engineer, and a business man

Synthetic Heists, a text adventure game

AI-powered heists: Where cunning meets calculation. Let me entertain you with this interactive heist game, lovingly illustrated in the style of synthetic, AI-powered humanoid robots.

Talk to a TV / Movie Character

I respond and answer as a specific character or person, using their tone and style.

Create an agent team

First, please say "Create an agent team to do 〇〇." / 最初に「〇〇をするためのエージェントチームを作成してください」とお伝え下さい

Create A Business Model Canvas For Your Business

Let's get started by telling me about your business: What do you offer? Who do you serve? ------------------------------------------------------- Need help Prompt Engineering? Reach out on LinkedIn: StephenHnilica

Create Short Stories to Learn a Language

2500+ word stories in target language with images, for language learning.

SuperHero Me | Create a SuperHero Alter Ego

Level up Now. Upload a selfie for some superhero flair. Create a backstory. Select a superpower, arch-villain, and crew. Answer trivia. Pow!

Create Your Christian Prayer

Tell me about your situation and the type of prayer you would like

周易运势头像Create a Lucky avatar image

利用专业的周易知识和命理知识进行头像设计 Generates and explains lucky profile pictures based on I Ching, zodiac.