AI tools for hotspot

Related Tools:

AI Reelity

AI Reelity is an AI-powered trip planner that helps you explore cities like a local and a tourist. It provides personalized travel plans that include both popular tourist attractions and hidden local gems. The app is easy to use and adapts to your tastes and interests. It is also flexible, allowing you to mix and match tourist and local experiences to create a journey that is entirely yours.

Hotpot.ai

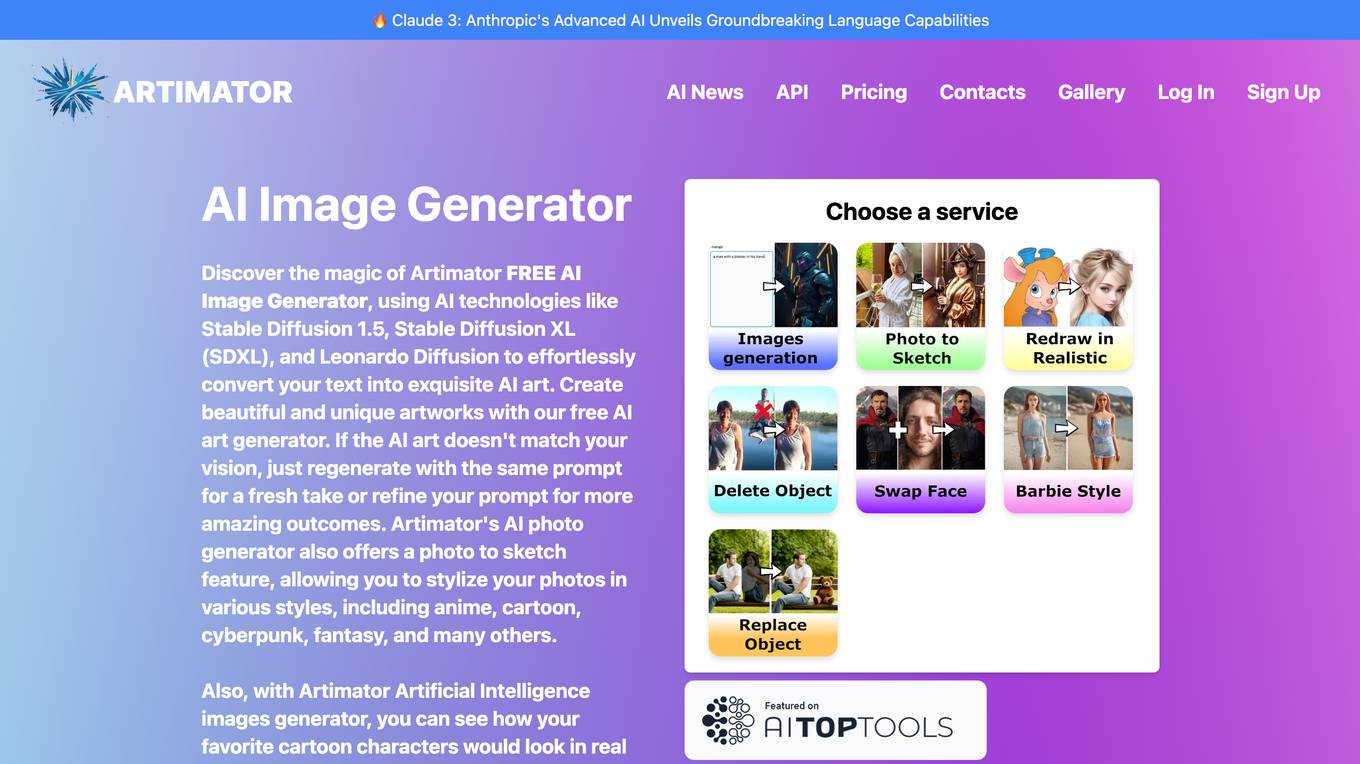

Hotpot.ai is an AI-powered platform that provides a suite of tools to help users create amazing images, graphics, and writing. With Hotpot.ai, users can visualize ideas with AI Image Generator, reimagine themselves with AI Headshots, or spark creativity with other products from our AI platform. Hotpot.ai also offers a range of AI photo editing tools to automate away drudgery, and easy-to-edit templates to empower anyone to create social media posts, marketing images, app icons, and other work graphics.

scalene

Scalene is a high-performance CPU, GPU, and memory profiler for Python that provides detailed information and runs faster than many other profilers. It incorporates AI-powered proposed optimizations, allowing users to generate optimization suggestions by clicking on specific lines or regions of code. Scalene separates time spent in Python from native code, highlights hotspots, and identifies memory usage per line. It supports GPU profiling on NVIDIA-based systems and detects memory leaks. Users can generate reduced profiles, profile specific functions using decorators, and suspend/resume profiling for background processes. Scalene is available as a pip or conda package and works on various platforms. It offers features like profiling at the line level, memory trends, copy volume reporting, and leak detection.

vasttools

This repository contains a collection of tools that can be used with vastai. The tools are free to use, modify and distribute. If you find this useful and wish to donate your welcome to send your donations to the following wallets. BTC 15qkQSYXP2BvpqJkbj2qsNFb6nd7FyVcou XMR 897VkA8sG6gh7yvrKrtvWningikPteojfSgGff3JAUs3cu7jxPDjhiAZRdcQSYPE2VGFVHAdirHqRZEpZsWyPiNK6XPQKAg RVN RSgWs9Co8nQeyPqQAAqHkHhc5ykXyoMDUp USDT(ETH ERC20) 0xa5955cf9fe7af53bcaa1d2404e2b17a1f28aac4f Paypal PayPal.Me/cryptolabsZA

travel-planner-ai

Travel Planner AI is a Software as a Service (SaaS) product that simplifies travel planning by generating comprehensive itineraries based on user preferences. It leverages cutting-edge technologies to provide tailored schedules, optimal timing suggestions, food recommendations, prime experiences, expense tracking, and collaboration features. The tool aims to be the ultimate travel companion for users looking to plan seamless and smart travel adventures.

Taiyi-LLM

Taiyi (太一) is a bilingual large language model fine-tuned for diverse biomedical tasks. It aims to facilitate communication between healthcare professionals and patients, provide medical information, and assist in diagnosis, biomedical knowledge discovery, drug development, and personalized healthcare solutions. The model is based on the Qwen-7B-base model and has been fine-tuned using rich bilingual instruction data. It covers tasks such as question answering, biomedical dialogue, medical report generation, biomedical information extraction, machine translation, title generation, text classification, and text semantic similarity. The project also provides standardized data formats, model training details, model inference guidelines, and overall performance metrics across various BioNLP tasks.

Mooncake

Mooncake is a serving platform for Kimi, a leading LLM service provided by Moonshot AI. It features a KVCache-centric disaggregated architecture that separates prefill and decoding clusters, leveraging underutilized CPU, DRAM, and SSD resources of the GPU cluster. Mooncake's scheduler balances throughput and latency-related SLOs, with a prediction-based early rejection policy for highly overloaded scenarios. It excels in long-context scenarios, achieving up to a 525% increase in throughput while handling 75% more requests under real workloads.

awesome-AI4MolConformation-MD

The 'awesome-AI4MolConformation-MD' repository focuses on protein conformations and molecular dynamics using generative artificial intelligence and deep learning. It provides resources, reviews, datasets, packages, and tools related to AI-driven molecular dynamics simulations. The repository covers a wide range of topics such as neural networks potentials, force fields, AI engines/frameworks, trajectory analysis, visualization tools, and various AI-based models for protein conformational sampling. It serves as a comprehensive guide for researchers and practitioners interested in leveraging AI for studying molecular structures and dynamics.

awesome-mcp-servers

Awesome MCP Servers is a curated list of Model Context Protocol (MCP) servers that enable AI models to securely interact with local and remote resources through standardized server implementations. The list includes production-ready and experimental servers that extend AI capabilities through file access, database connections, API integrations, and other contextual services.

shitspotter

The 'ShitSpotter' repository is dedicated to developing a poop-detection algorithm and dataset for creating a phone app that helps locate dog poop in outdoor environments. The project involves training a PyTorch network to detect poop in images and provides scripts for detecting poop in unseen images using a pretrained model. The dataset consists of mostly outdoor images taken with a phone, with a process involving before and after pictures of the poop. The project aims to enable various applications, such as AR glasses for poop detection and efficient cleaning of public areas by city governments. The code, dataset, and pretrained models are open source with permissive licensing and distributed via IPFS, BitTorrent, and centralized mechanisms.

bytedesk

Bytedesk is an AI-powered customer service and team instant messaging tool that offers features like enterprise instant messaging, online customer service, large model AI assistant, and local area network file transfer. It supports multi-level organizational structure, role management, permission management, chat record management, seating workbench, work order system, seat management, data dashboard, manual knowledge base, skill group management, real-time monitoring, announcements, sensitive words, CRM, report function, and integrated customer service workbench services. The tool is designed for team use with easy configuration throughout the company, and it allows file transfer across platforms using WiFi/hotspots without the need for internet connection.

LLMsKnow

LLMs Know More Than They Show is a repository containing code to reproduce the results in the paper. It includes scripts to generate model answers, extract exact answers, probe all layers and tokens, probe specific layers and tokens, conduct generalization experiments, perform resampling for error type probing and answer selection experiments, and run other baselines like logprob detection and p_true detection. The repository supports various datasets such as TriviaQA, Movies, HotpotQA, Winobias, Winogrande, NLI, IMDB, Math, and Natural questions. It also provides supported models like Mistral-7B-Instruct-v0.2, Mistral-7B-v0.3, Meta-Llama-3-8B, and Meta-Llama-3-8B-Instruct.

KG-LLM-MDQA

This repository contains code and demo for Knowledge Graph Prompting for Multi-Document Question Answering. It includes modules for data collection, training DPR and MDR models, fine-tuning T5 and LLaMA, and reproducing KGP-LLM algorithm. The workflow involves document collection, knowledge graph construction, fine-tuning models, and reproducing main table results. The repository provides instructions for environment setup, folder architecture, and running different modules.

Search-R1

Search-R1 is a tool that trains large language models (LLMs) to reason and call a search engine using reinforcement learning. It is a reproduction of DeepSeek-R1 methods for training reasoning and searching interleaved LLMs, built upon veRL. Through rule-based outcome reward, the base LLM develops reasoning and search engine calling abilities independently. Users can train LLMs on their own datasets and search engines, with preliminary results showing improved performance in search engine calling and reasoning tasks.

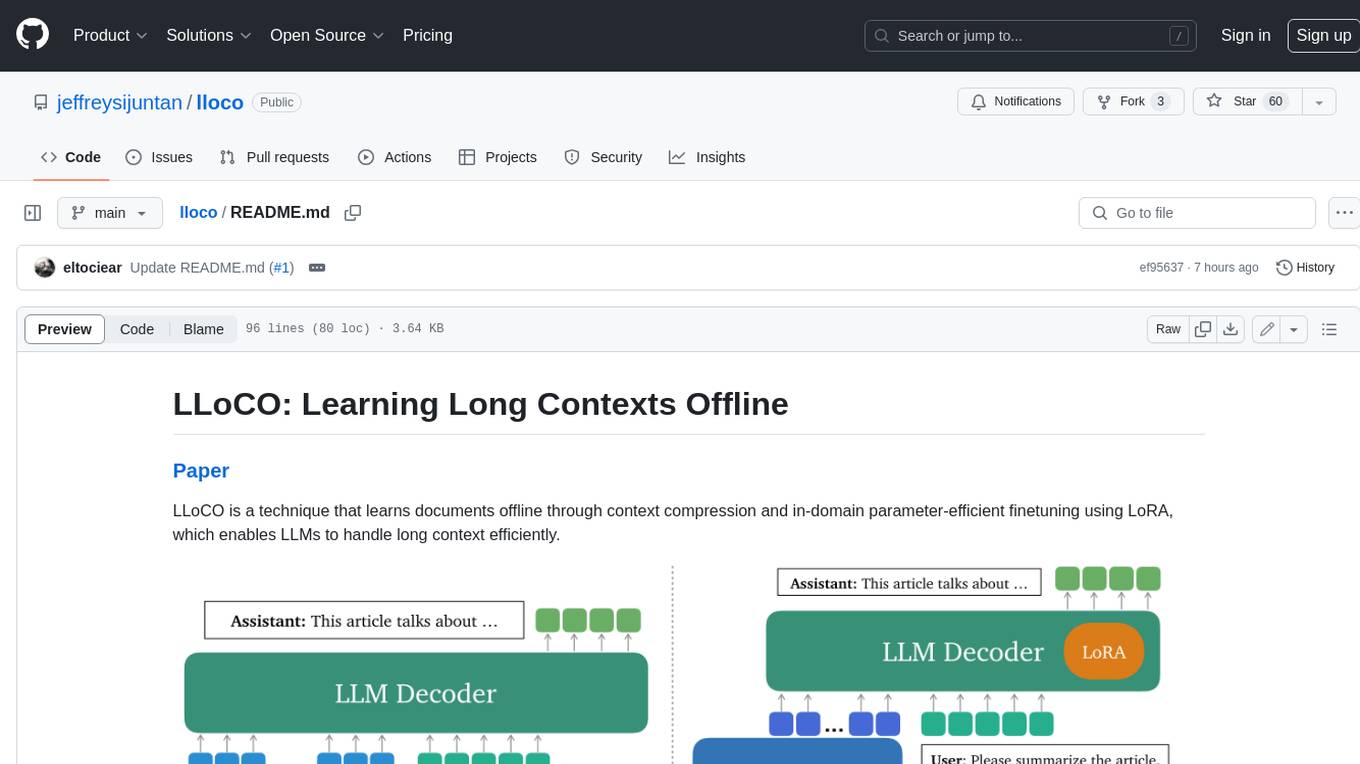

lloco

LLoCO is a technique that learns documents offline through context compression and in-domain parameter-efficient finetuning using LoRA, which enables LLMs to handle long context efficiently.

KnowAgent

KnowAgent is a tool designed for Knowledge-Augmented Planning for LLM-Based Agents. It involves creating an action knowledge base, converting action knowledge into text for model understanding, and a knowledgeable self-learning phase to continually improve the model's planning abilities. The tool aims to enhance agents' potential for application in complex situations by leveraging external reservoirs of information and iterative processes.

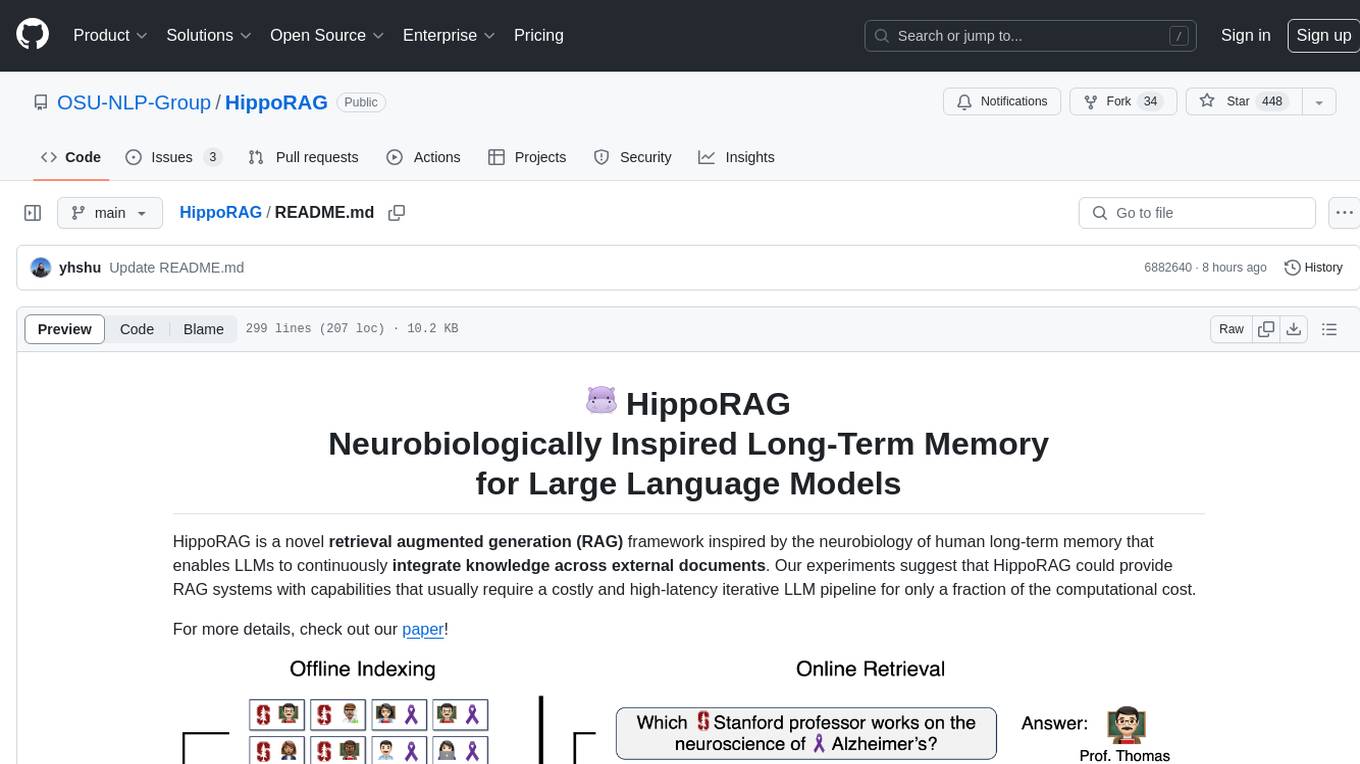

HippoRAG

HippoRAG is a novel retrieval augmented generation (RAG) framework inspired by the neurobiology of human long-term memory that enables Large Language Models (LLMs) to continuously integrate knowledge across external documents. It provides RAG systems with capabilities that usually require a costly and high-latency iterative LLM pipeline for only a fraction of the computational cost. The tool facilitates setting up retrieval corpus, indexing, and retrieval processes for LLMs, offering flexibility in choosing different online LLM APIs or offline LLM deployments through LangChain integration. Users can run retrieval on pre-defined queries or integrate directly with the HippoRAG API. The tool also supports reproducibility of experiments and provides data, baselines, and hyperparameter tuning scripts for research purposes.

LongRAG

This repository contains the code for LongRAG, a framework that enhances retrieval-augmented generation with long-context LLMs. LongRAG introduces a 'long retriever' and a 'long reader' to improve performance by using a 4K-token retrieval unit, offering insights into combining RAG with long-context LLMs. The repo provides instructions for installation, quick start, corpus preparation, long retriever, and long reader.

NeuroSandboxWebUI

A simple and convenient interface for using various neural network models. Users can interact with LLM using text, voice, and image input to generate images, videos, 3D objects, music, and audio. The tool supports a wide range of models for different tasks such as image generation, video generation, audio file separation, voice conversion, and more. Users can also view files from the outputs directory in a gallery, download models, change application settings, and check system sensors. The goal of the project is to create an easy-to-use application for utilizing neural network models.