uptrain

UpTrain is an open-source unified platform to evaluate and improve Generative AI applications. We provide grades for 20+ preconfigured checks (covering language, code, embedding use-cases), perform root cause analysis on failure cases and give insights on how to resolve them.

Stars: 2010

README:

UpTrain is an open-source unified platform to evaluate and improve Generative AI applications. We provide grades for 20+ preconfigured evaluations (covering language, code, embedding use cases), perform root cause analysis on failure cases and give insights on how to resolve them.

UpTrain Dashboard is a web-based interface that runs on your local machine. You can use the dashboard to evaluate your LLM applications, view the results, and perform a root cause analysis.

Support for 20+ pre-configured evaluations such as Response Completeness, Factual Accuracy, Context Conciseness etc.

All evaluations and analyses run locally on your system, so your data never leaves your secure environment (except for the LLM calls made by model-graded checks).

Experiment with different embedding models like text-embedding-3-large/small, text-embedding-ada-002, BAAI/bge-large, etc. UpTrain supports HuggingFace models, Replicate endpoints, or custom models hosted on your endpoint.

You can perform root cause analysis on cases with either negative user feedback or low evaluation scores to understand which part of your LLM pipeline is giving suboptimal results. Check out the supported RCA templates.
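As a rough illustration of that selection step, the sketch below picks the rows that have negative user feedback or at least one evaluation score under a threshold. It is plain Python, not UpTrain's API: the `score_` key prefix, the `user_feedback` field, the function name, and the 0.5 threshold are all assumptions made for this example.

```python
from typing import Any


def select_failure_cases(
    rows: list[dict[str, Any]], threshold: float = 0.5
) -> list[dict[str, Any]]:
    """Return rows with negative feedback or at least one low score."""
    failures = []
    for row in rows:
        # Collect every numeric value stored under a key starting with "score_".
        scores = [
            value
            for key, value in row.items()
            if key.startswith("score_") and isinstance(value, (int, float))
        ]
        low_score = any(score < threshold for score in scores)
        negative_feedback = row.get("user_feedback") == "negative"
        if low_score or negative_feedback:
            failures.append(row)
    return failures


# Hypothetical evaluated rows; field names are illustrative only.
rows = [
    {"question": "q1", "score_factual_accuracy": 1.0, "user_feedback": "positive"},
    {"question": "q2", "score_factual_accuracy": 0.2, "user_feedback": "positive"},
    {"question": "q3", "score_factual_accuracy": 0.9, "user_feedback": "negative"},
]
failure_cases = select_failure_cases(rows)
print([row["question"] for row in failure_cases])
```

The selected rows are the ones you would then inspect, or pass to a root cause analysis step, instead of reviewing the whole dataset.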

You can use OpenAI, Anthropic, Mistral, or Azure OpenAI endpoints, or open-source LLMs hosted on Anyscale, as evaluators.

UpTrain provides tons of ways to customize evaluations. You can customize the evaluation method (chain of thought vs classify), few-shot examples, and scenario description. You can also create custom evaluators.

- Collaborate with your team

- Embedding visualization via UMAP and Clustering

- Pattern recognition among failure cases

- Prompt improvement suggestions

The UpTrain dashboard is a web-based interface that allows you to evaluate your LLM applications. It is a self-hosted dashboard that runs on your local machine. You don't need to write any code to use the dashboard. You can use the dashboard to evaluate your LLM applications, view the results, and perform a root cause analysis.

Before you start, ensure you have docker installed on your machine. If not, you can install it from here.

The following commands will download the UpTrain dashboard and start it on your local machine.

# Clone the repository

git clone https://github.com/uptrain-ai/uptrain

cd uptrain

# Run UpTrain

bash run_uptrain.sh

NOTE: UpTrain Dashboard is currently in Beta version. We would love your feedback to improve it.

If you are a developer and want to integrate UpTrain evaluations into your application, you can use the UpTrain package. This allows for a more programmatic way to evaluate your LLM applications.

pip install uptrain

You can evaluate your responses via the open-source version by providing your OpenAI API key to run evaluations.

from uptrain import EvalLLM, Evals
import json

OPENAI_API_KEY = "sk-***************"

data = [{
    'question': 'Which is the most popular global sport?',
    'context': "The popularity of sports can be measured in various ways, including TV viewership, social media presence, number of participants, and economic impact. Football is undoubtedly the world's most popular sport with major events like the FIFA World Cup and sports personalities like Ronaldo and Messi, drawing a followership of more than 4 billion people. Cricket is particularly popular in countries like India, Pakistan, Australia, and England. The ICC Cricket World Cup and Indian Premier League (IPL) have substantial viewership. The NBA has made basketball popular worldwide, especially in countries like the USA, Canada, China, and the Philippines. Major tennis tournaments like Wimbledon, the US Open, French Open, and Australian Open have large global audiences. Players like Roger Federer, Serena Williams, and Rafael Nadal have boosted the sport's popularity. Field Hockey is very popular in countries like India, Netherlands, and Australia. It has a considerable following in many parts of the world.",
    'response': 'Football is the most popular sport with around 4 billion followers worldwide'
}]

eval_llm = EvalLLM(openai_api_key=OPENAI_API_KEY)

results = eval_llm.evaluate(
    data=data,
    checks=[Evals.CONTEXT_RELEVANCE, Evals.FACTUAL_ACCURACY, Evals.RESPONSE_COMPLETENESS]
)

print(json.dumps(results, indent=3))

If you have any questions, please join our Slack community.

Speak directly with the maintainers of UpTrain by booking a call here.
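Once the `evaluate` call in the example above returns, the results can be summarized with ordinary Python. The sketch below averages every numeric field whose key starts with `score_`. That key naming (for example `score_context_relevance`), the helper name `mean_scores`, and the sample values are assumptions for illustration, so check the keys in your own output before relying on it.

```python
from collections import defaultdict


def mean_scores(results):
    """Average each numeric `score_*` field across a list of result rows."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for row in results:
        for key, value in row.items():
            # Skip explanations and any score that is missing (None).
            if key.startswith("score_") and isinstance(value, (int, float)):
                totals[key] += value
                counts[key] += 1
    return {key: totals[key] / counts[key] for key in totals}


# Hypothetical rows shaped like evaluation output; values are made up.
sample_results = [
    {"score_context_relevance": 1.0, "score_factual_accuracy": 1.0},
    {"score_context_relevance": 0.5, "score_factual_accuracy": None},
]
summary = mean_scores(sample_results)
print(summary)
```

Skipping `None` values keeps one failed check from breaking the average for the rest of the dataset.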

| Eval | Description |
|---|---|
| Response Completeness | Grades whether the response has answered all the aspects of the question specified. |
| Response Conciseness | Grades how concise the generated response is or if it has any additional irrelevant information for the question asked. |
| Response Relevance | Grades how relevant the generated response was to the question specified. |
| Response Validity | Grades if the response generated is valid or not. A response is considered to be valid if it contains any information. |
| Response Consistency | Grades how consistent the response is with the question asked as well as with the context provided. |

| Eval | Description |
|---|---|
| Context Relevance | Grades how relevant the context was to the question specified. |
| Context Utilization | Grades how complete the generated response was for the question specified, given the information provided in the context. |
| Factual Accuracy | Grades whether the response generated is factually correct and grounded by the provided context. |
| Context Conciseness | Evaluates the concise context cited from an original context for irrelevant information. |
| Context Reranking | Evaluates how efficient the reranked context is compared to the original context. |

| Eval | Description |
|---|---|
| Language Features | Grades the quality and effectiveness of language in a response, focusing on factors such as clarity, coherence, conciseness, and overall communication. |
| Tonality | Grades whether the generated response matches the required persona's tone. |

| Eval | Description |
|---|---|
| Code Hallucination | Grades whether the code present in the generated response is grounded by the context. |

| Eval | Description |
|---|---|
| User Satisfaction | Grades how well the user's concerns are addressed and assesses their satisfaction based on provided conversation. |

| Eval | Description |
|---|---|
| Custom Guideline | Allows you to specify a guideline and grades how well the LLM adheres to the provided guideline when giving a response. |
| Custom Prompts | Allows you to create your own set of evaluations. |

| Eval | Description |
|---|---|
| Response Matching | Compares and grades how well the response generated by the LLM aligns with the provided ground truth. |

| Eval | Description |
|---|---|
| Prompt Injection | Grades whether the user's prompt is an attempt to make the LLM reveal its system prompts. |
| Jailbreak Detection | Grades whether the user's prompt is an attempt to jailbreak (i.e. generate illegal or harmful responses). |

| Eval | Description |
|---|---|
| Sub-Query Completeness | Evaluates whether all of the sub-questions generated from a user's query, taken together, cover all aspects of the user's query. |
| Multi-Query Accuracy | Evaluates whether the variants generated accurately represent the original query. |

| Eval Frameworks | LLM Providers | LLM Packages | Serving frameworks | LLM Observability | Vector DBs |
|---|---|---|---|---|---|
| OpenAI Evals | OpenAI | LlamaIndex | Ollama | Langfuse | Qdrant |
| | Azure | | Together AI | Helicone | FAISS |
| | Claude | | Anyscale | Zeno | Chroma |
| | Mistral | | Replicate | | |
| | HuggingFace | | | | |

More integrations are coming soon. If you have a specific integration in mind, please let us know by creating an issue.

Most popular LLMs like GPT-4, GPT-3.5-turbo, Claude-2.1 etc., are closed-source, i.e. exposed via an API with very little visibility on what happens under the hood. There are many reported instances of prompt drift (or GPT-4 becoming lazy) and research work exploring the degradation in model quality. This benchmark is an attempt to track the change in model behaviour by evaluating its response on a fixed dataset.

You can find the benchmark here.
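The idea of tracking drift on a fixed dataset can be sketched in a few lines: score the same dataset at two points in time, then flag any check whose mean score dropped by more than a tolerance. This is only an illustration under assumptions, not the benchmark's actual method; the function name, the check names, the tolerance, and the numbers are hypothetical.

```python
def find_regressions(baseline, current, tolerance=0.05):
    """Return checks whose mean score dropped by more than `tolerance`."""
    regressions = {}
    for check, old_score in baseline.items():
        new_score = current.get(check)
        if new_score is None:
            # The check was not run this time, so there is nothing to compare.
            continue
        drop = old_score - new_score
        if drop > tolerance:
            regressions[check] = round(drop, 4)
    return regressions


# Hypothetical mean scores from two runs over the same fixed dataset.
baseline_run = {"factual_accuracy": 0.90, "response_completeness": 0.80}
later_run = {"factual_accuracy": 0.75, "response_completeness": 0.79}
regressions = find_regressions(baseline_run, later_run)
print(regressions)
```

A tolerance is useful because LLM-graded scores vary slightly between runs, so small differences should not be reported as drift.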

Having worked with ML and NLP models for the last 8 years, we were continuously frustrated by numerous hidden failures in our models, which led us to build UpTrain. UpTrain initially started as an ML observability tool with checks to identify regression in accuracy.

However, we soon realized that LLM developers face an even bigger problem: there is no good way to measure the accuracy of their LLM applications, let alone identify regression.

We also saw the release of OpenAI Evals, which proposed using LLMs to grade model responses. Furthermore, we gained confidence to approach this after reading how Anthropic leverages RLAIF, and dived right into LLM evaluations research (we are soon releasing a repository of awesome evaluations research).

Today, UpTrain is our attempt to bring order to LLM chaos and contribute back to the community. While a majority of developers still rely on intuition and productionise prompt changes by reviewing a couple of cases, we have heard enough regression stories to believe "evaluations and improvement" will be a key part of the LLM ecosystem as the space matures.

- Robust evaluations allow you to systematically experiment with different configurations and prevent regressions by helping you objectively select the best choice.

- They help you understand where your systems are going wrong, find the root cause(s) and fix them - long before your end users complain and potentially churn.

- Evaluations like prompt injection and jailbreak detection are essential to maintain the safety and security of your LLM applications.

- Evaluations help you provide transparency and build trust with your end users - especially relevant if you are selling to enterprises.

- We understand that there is no one-size-fits-all solution when it comes to evaluations. We increasingly see developers wanting to modify the evaluation prompt, the set of choices, the few-shot examples, etc. We believe the best developer experience lies in open source, instead of exposing 20 different parameters.

- Foster innovation: The field of LLM evaluations and LLM-as-a-judge is still pretty nascent. We see a lot of exciting research happening almost daily, and being open-source gives us and our community the right platform to implement those techniques and innovate faster.

We are continuously striving to enhance UpTrain, and there are several ways you can contribute:

- Notice any issues or areas for improvement: If you spot anything wrong or have ideas for enhancements, please create an issue on our GitHub repository.

- Contribute directly: If you see an issue you can fix or have code improvements to suggest, feel free to contribute directly to the repository.

- Request custom evaluations: If your application requires a tailored evaluation, let us know, and we'll add it to the repository.

- Integrate with your tools: Need integration with your existing tools? Reach out, and we'll work on it.

- Assistance with evaluations: If you need assistance with evaluations, post your query on our Slack channel, and we'll resolve it promptly.

- Show your support: Show your support by starring us ⭐ on GitHub to track our progress.

- Spread the word: If you like what we've built, give us a shoutout on Twitter!

Your contributions and support are greatly appreciated! Thank you for being a part of UpTrain's journey.

This repo is published under the Apache 2.0 license, and we are committed to adding more functionality to the UpTrain open-source repo. We also have a managed version if you want a more hands-off experience. Please book a demo call here.

We are building UpTrain in public. Help us improve by giving your feedback here.

We welcome contributions to UpTrain. Please see our contribution guide for details.

Alternative AI tools for uptrain

Similar Open Source Tools

second-brain-ai-assistant-course

This open-source course teaches how to build an advanced RAG and LLM system using LLMOps and ML systems best practices. It helps you create an AI assistant that leverages your personal knowledge base to answer questions, summarize documents, and provide insights. The course covers topics such as LLM system architecture, pipeline orchestration, large-scale web crawling, model fine-tuning, and advanced RAG features. It is suitable for ML/AI engineers and data/software engineers & data scientists looking to level up to production AI systems. The course is free, with minimal costs for tools like OpenAI's API and Hugging Face's Dedicated Endpoints. Participants will build two separate Python applications for offline ML pipelines and online inference pipeline.

generative-ai-use-cases

Generative AI Use Cases (GenU) is an application that provides well-architected implementation with business use cases for utilizing generative AI in business operations. It offers a variety of standard use cases leveraging generative AI, such as chat interaction, text generation, summarization, meeting minutes generation, writing assistance, translation, web content extraction, image generation, video generation, video analysis, diagram generation, voice chat, RAG technique, custom agent creation, and custom use case building. Users can experience generative AI use cases, perform RAG technique, use custom agents, and create custom use cases using GenU.

ragas

Ragas is a framework that helps you evaluate your Retrieval Augmented Generation (RAG) pipelines. RAG denotes a class of LLM applications that use external data to augment the LLM’s context. There are existing tools and frameworks that help you build these pipelines but evaluating it and quantifying your pipeline performance can be hard. This is where Ragas (RAG Assessment) comes in. Ragas provides you with the tools based on the latest research for evaluating LLM-generated text to give you insights about your RAG pipeline. Ragas can be integrated with your CI/CD to provide continuous checks to ensure performance.

qlib

Qlib is an open-source, AI-oriented quantitative investment platform that supports diverse machine learning modeling paradigms, including supervised learning, market dynamics modeling, and reinforcement learning. It covers the entire chain of quantitative investment, from alpha seeking to order execution. The platform empowers researchers to explore ideas and implement productions using AI technologies in quantitative investment. Qlib collaboratively solves key challenges in quantitative investment by releasing state-of-the-art research works in various paradigms. It provides a full ML pipeline for data processing, model training, and back-testing, enabling users to perform tasks such as forecasting market patterns, adapting to market dynamics, and modeling continuous investment decisions.

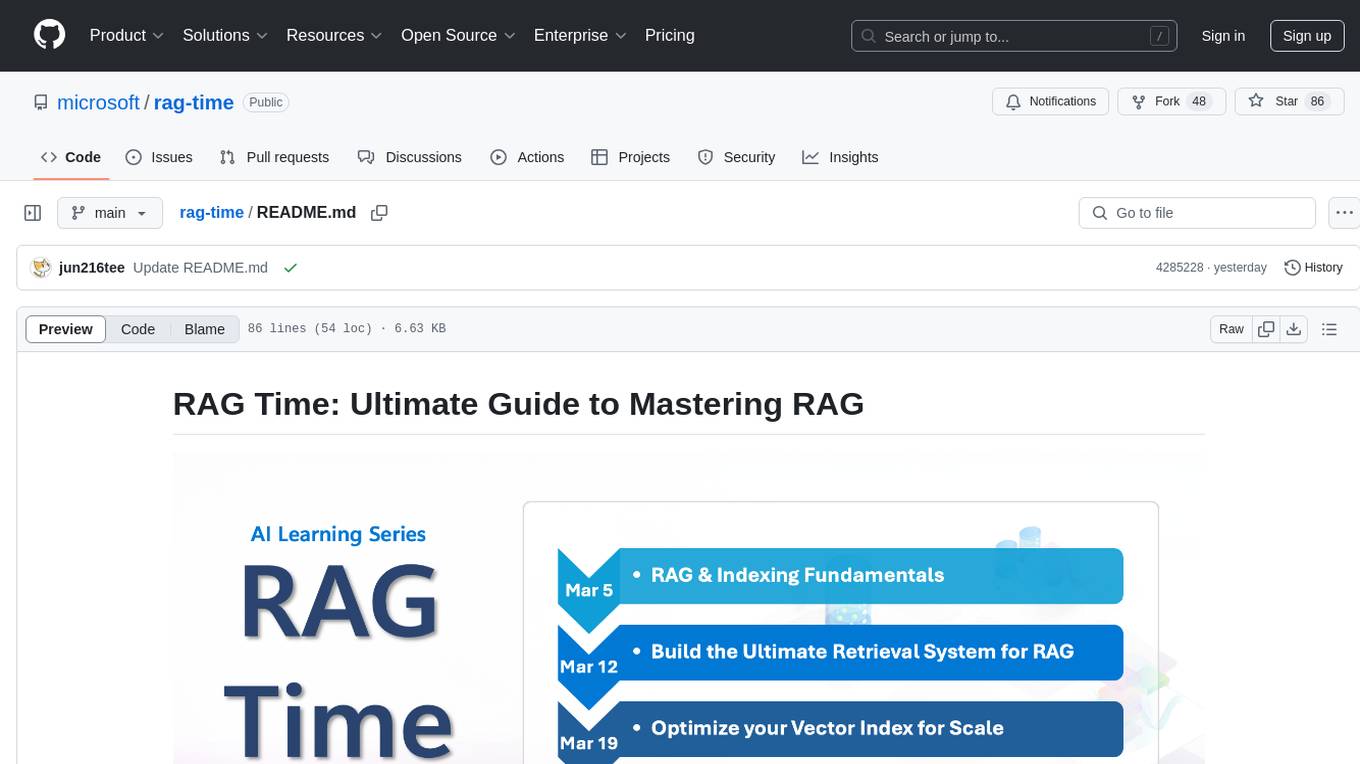

rag-time

RAG Time is a 5-week AI learning series focusing on Retrieval-Augmented Generation (RAG) concepts. The repository contains code samples, step-by-step guides, and resources to help users master RAG. It aims to teach foundational and advanced RAG concepts, demonstrate real-world applications, and provide hands-on samples for practical implementation.

yuna-ai

Yuna AI is a unique AI companion designed to form a genuine connection with users. It runs exclusively on the local machine, ensuring privacy and security. The project offers features like text generation, language translation, creative content writing, roleplaying, and informal question answering. The repository provides comprehensive setup and usage guides for Yuna AI, along with additional resources and tools to enhance the user experience.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

babilong

BABILong is a generative benchmark designed to evaluate the performance of NLP models in processing long documents with distributed facts. It consists of 20 tasks that simulate interactions between characters and objects in various locations, requiring models to distinguish important information from irrelevant details. The tasks vary in complexity and reasoning aspects, with test samples potentially containing millions of tokens. The benchmark aims to challenge and assess the capabilities of Large Language Models (LLMs) in handling complex, long-context information.

12-factor-agents

12-Factor Agents is a project focused on building reliable LLM-powered software by outlining 12 core engineering principles. The project aims to provide guidance on creating production-ready customer-facing agents that leverage AI technology effectively. It emphasizes the importance of software design, context management, tool integration, and control flow in developing high-quality AI agents. The project offers insights, design patterns, and practical advice for software engineers looking to enhance their AI applications with agent-based approaches.

burr

Burr is a Python library and UI that makes it easy to develop applications that make decisions based on state (chatbots, agents, simulations, etc...). Burr includes a UI that can track/monitor those decisions in real time.

argilla

Argilla is a collaboration platform for AI engineers and domain experts that require high-quality outputs, full data ownership, and overall efficiency. It helps users improve AI output quality through data quality, take control of their data and models, and improve efficiency by quickly iterating on the right data and models. Argilla is an open-source community-driven project that provides tools for achieving and maintaining high-quality data standards, with a focus on NLP and LLMs. It is used by AI teams from companies like the Red Cross, Loris.ai, and Prolific to improve the quality and efficiency of AI projects.

agent-lightning

Agent Lightning is a lightweight and efficient tool for automating repetitive tasks in the field of data analysis and machine learning. It provides a user-friendly interface to create and manage automated workflows, allowing users to easily schedule and execute data processing, model training, and evaluation tasks. With its intuitive design and powerful features, Agent Lightning streamlines the process of building and deploying machine learning models, making it ideal for data scientists, machine learning engineers, and AI enthusiasts looking to boost their productivity and efficiency in their projects.

basiclingua-LLM-Based-NLP

BasicLingua is a Python library that provides functionalities for linguistic tasks such as tokenization, stemming, lemmatization, and many others. It is based on the Gemini Language Model, which has demonstrated promising results in dealing with text data. BasicLingua can be used as an API or through a web demo. It is available under the MIT license and can be used in various projects.

NineRec

NineRec is a benchmark dataset suite for evaluating transferable recommendation models. It provides datasets for pre-training and transfer learning in recommender systems, focusing on multimodal and foundation model tasks. The dataset includes user-item interactions, item texts in multiple languages, item URLs, and raw images. Researchers can use NineRec to develop more effective and efficient methods for pre-training recommendation models beyond end-to-end training. The dataset is accompanied by code for dataset preparation, training, and testing in PyTorch environment.

llm-twin-course

The LLM Twin Course is a free, end-to-end framework for building production-ready LLM systems. It teaches you how to design, train, and deploy a production-ready LLM twin of yourself powered by LLMs, vector DBs, and LLMOps good practices. The course is split into 11 hands-on written lessons and the open-source code you can access on GitHub. You can read everything and try out the code at your own pace.

For similar tasks

llm-applications

A comprehensive guide to building Retrieval Augmented Generation (RAG)-based LLM applications for production. This guide covers developing a RAG-based LLM application from scratch, scaling the major components, evaluating different configurations, implementing LLM hybrid routing, serving the application in a highly scalable and available manner, and sharing the impacts LLM applications have had on products.

postgresai

PostgresAI is an AI-native PostgreSQL observability tool designed for monitoring, health checks, and root cause analysis. It provides structured reports and metrics for AI consumption, tracks problems from detection to resolution, offers over 45 health checks including bloat, indexes, queries, settings, and security, and features Active Session History similar to Oracle ASH. PostgresAI is part of the Self-Driving Postgres initiative, aiming to make Postgres autonomous. It includes expert dashboards following the Four Golden Signals methodology and is battle-tested with companies like GitLab, Miro, Chewy, and more.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.