piccolo

SensiML's open-source AutoML solution for Edge AI model development

Stars: 58

Piccolo AI is an open-source software development toolkit for constructing sensor-based AI inference models optimized to run on low-power microcontrollers and IoT edge platforms. It includes SensiML's ML Engine, Embedded ML SDK, Analytic Studio UI, and SensiML Python Client. The tool is intended for individual developers, researchers, and AI enthusiasts, offering support for time-series sensor data classification and various applications such as acoustic event detection, activity recognition, gesture detection, anomaly detection, keyword spotting, and vibration classification.

README:

Piccolo AI is the open-source version of SensiML Analytics Studio, a software development toolkit for constructing sensor-based AI inference models optimzed to run on low-power microcontrollers and IoT edge platforms (ex. x86 embedded PCs, RPi, arm Cortex-A mobile processors). Piccolo AI is intended for individual developers, researchers, and AI enthusiasts and made available under the AGPLv3 license.

The Piccolo AI project includes:

- SensiML's ML Engine: The engine behind SensiML’s AutoML model building, sensor data management, model tracking, and embedded firmware generation

- Embedded ML SDK: SensiML's Inferencing and DSP SDK designed for building and running DSP & ML pipelines on edge devices

- Analytic Studio UI: An Intuitive web interface for working with the SensiML ML Engine to build TinyML applications

- SensiML Python Client: Allows programmatic access to the REST API services through Jupyter Notebooks

Piccolo AI is currently optimized for the classification of time-series sensor data. Common use cases enabled by Piccolo AI include:

| Application | Example Use Cases | Piccolo AI Reference Application(s) | |

|---|---|---|---|

| Acoustic Event Detection |

|

Intrusion Detection Machinery / Appliance Monitoring Public Safety (ex. gunshot recogition) Vehicle Diagnostics Livestock Monitoring Worker Safety (ex. leak detection) |

Pump State Recognition Demo Smart Lock Demo |

| Activity Recognition |

|

Human Gait Analysis Ergonomic Assessment Sport/Fitness Tracking Elder Care Monitoring |

Boxing Punch Classification Demo |

| Gesture Detection |

|

Touchless Machine Interfaces Smarthome Control Gaming Interfaces Driver Monitoring Interactive Retail Displays |

Wizard Wand Demo |

| Anomaly Detection |

|

Factory/Plant Process Control Predictive Maintenance Robotics Remote Monitoring |

Robotic Arm Anomaly Detection |

| Keyword Spotting |

|

Consumer Electronics UI Security (ex. Voice Authentication) HMI Command/Control |

Keyword Spotting Demo (Part 1,Part 2) |

| Vibration Classification |

|

Predictive Maintenance Vehicle Monitoring Supply Chain Monitoring Machine State Detection |

Smart Drill Demo Fan State Demo |

Piccolo AI is not limited to only the applications listed above. Virtually any time-series sensor input(s) can be used to classify application-specific events of interest. Piccolo AI also provides support for regression models as well as basic feature transform outputs without classification. To learn more about Piccolo AI features and capabilities, see the product page.

The simplest way to get started learning and using the features in Piccolo AI is to sign up for an account on SensiML's managed SaaS service at SensiML Free Trial.

To install and manage Piccolo AI yourself, continue with the instructions and step-by-step installation video provided below.

To try out Piccolo AI on your machine, we recommend using Docker.

-

Install and start docker and docker-compose - https://docs.docker.com/engine/

- Note: Windows users will need to use the wsl2 backend. Follow the instructions here

- Note: If using Docker Desktop go to Preferences > Resources > Advanced and set Memory to at least 12GB.

-

Follow the post-installation instructions as well to avoid having to run docker commands as sudo user

-

Some functionality requires calling docker from within docker. To enable this, you will need to make the docker socket accessible to docker containers

sudo chmod 666 /var/run/docker.sock -

By default the repository does not include docker images to generate model/compiler code for devices. You'll need to pull docker images for the compilers you want to use. See docker images:

docker pull sensiml/sml_x86_generic:9.3.0-v1.0 docker pull sensiml/sml_armgcc_generic:10.3.1-v1.0 docker pull sensiml/sml_x86mingw_generic:9.3-v1.0 docker pull sensiml/sensiml_tensorflow:0a4bec2a-v4.0 -

Make sure you have the latest sensiml base docker image version. If not, you can do a docker pull

docker pull sensiml/base

-

Make sure docker is running by checking your system tray icons. If it is not running then open Docker Desktop application in Windows to start the docker background service.

-

Open a Ubuntu terminal through Windows Subsystem for Linux

git clone https://github.com/sensiml/piccolo cd piccolo -

Use docker compose to start the services

docker compose up

Login via the Web UI Interface

Go to your browser at http://localhost:8000 to log in to the UI. See the Getting Started Guide to get started.

The default username and password is stored in the src/server/datamanager/fixtures/default.yaml file

username: [email protected]

password: TinyML4Life

To help you get up and running quickly, you may also find our video installation guide useful. Click on the video below for a complete walkthrough for getting Piccolo AI installed on a Windows 10 / 11 PC:

The Data Studio is SensiML's standalone Data Capture, Labeling, and Machine Learning Model testing application. It is a companion application to Piccolo AI, but it requires a subscription to use with your local Piccolo AI.

Upgrades will need to check out the latest code and run database.intialize container script which will perform any migrations. You may also need to pull the newest sensiml/base docker image in some cases when the underlying packages have been changed.

Piccolo AI is the open-source version of SensiML ML Engine. As such, it's features and capabilities align with the majority of those found in SensiML's ML Engine for which documentation can be found here.

For more information about our documentation processes or to build them yourself, see the docs README.

We welcome contributions from the community. See our contribution guidelines, see CONTRIBUTING.md.

Documentation for developers coming soon!

-

To report a bug or request a feature, create a GitHub Issue. Please ensure someone else hasn't created an issue for the same topic.

-

Need help using Piccolo AI? Reach out on the forum, fellow community member or SensiML engineer will be happy to help you out.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for piccolo

Similar Open Source Tools

piccolo

Piccolo AI is an open-source software development toolkit for constructing sensor-based AI inference models optimized to run on low-power microcontrollers and IoT edge platforms. It includes SensiML's ML Engine, Embedded ML SDK, Analytic Studio UI, and SensiML Python Client. The tool is intended for individual developers, researchers, and AI enthusiasts, offering support for time-series sensor data classification and various applications such as acoustic event detection, activity recognition, gesture detection, anomaly detection, keyword spotting, and vibration classification.

second-brain-ai-assistant-course

This open-source course teaches how to build an advanced RAG and LLM system using LLMOps and ML systems best practices. It helps you create an AI assistant that leverages your personal knowledge base to answer questions, summarize documents, and provide insights. The course covers topics such as LLM system architecture, pipeline orchestration, large-scale web crawling, model fine-tuning, and advanced RAG features. It is suitable for ML/AI engineers and data/software engineers & data scientists looking to level up to production AI systems. The course is free, with minimal costs for tools like OpenAI's API and Hugging Face's Dedicated Endpoints. Participants will build two separate Python applications for offline ML pipelines and online inference pipeline.

bedrock-engineer

Bedrock Engineer is an autonomous software development agent application that utilizes Amazon Bedrock. It allows users to customize, create/edit files, execute commands, search the web, use a knowledge base, utilize multi-agents, generate images, and more. The tool provides an interactive chat interface with AI agents, file system operations, web search capabilities, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select and customize agents, choose from various tools like file system operations, web search, Amazon Bedrock integration, and system command execution. Additionally, the tool offers features for website generation, connecting to design system data sources, AWS Step Functions ASL definition generation, diagram creation using natural language descriptions, and multi-language support.

refly

Refly.AI is an open-source AI-native creation engine that empowers users to transform ideas into production-ready content. It features a free-form canvas interface with multi-threaded conversations, knowledge base integration, contextual memory, intelligent search, WYSIWYG AI editor, and more. Users can leverage AI-powered capabilities, context memory, knowledge base integration, quotes, and AI document editing to enhance their content creation process. Refly offers both cloud and self-hosting options, making it suitable for individuals, enterprises, and organizations. The tool is designed to facilitate human-AI collaboration and streamline content creation workflows.

fenic

fenic is an opinionated DataFrame framework from typedef.ai for building AI and agentic applications. It transforms unstructured and structured data into insights using familiar DataFrame operations enhanced with semantic intelligence. With support for markdown, transcripts, and semantic operators, plus efficient batch inference across various model providers. fenic is purpose-built for LLM inference, providing a query engine designed for AI workloads, semantic operators as first-class citizens, native unstructured data support, production-ready infrastructure, and a familiar DataFrame API.

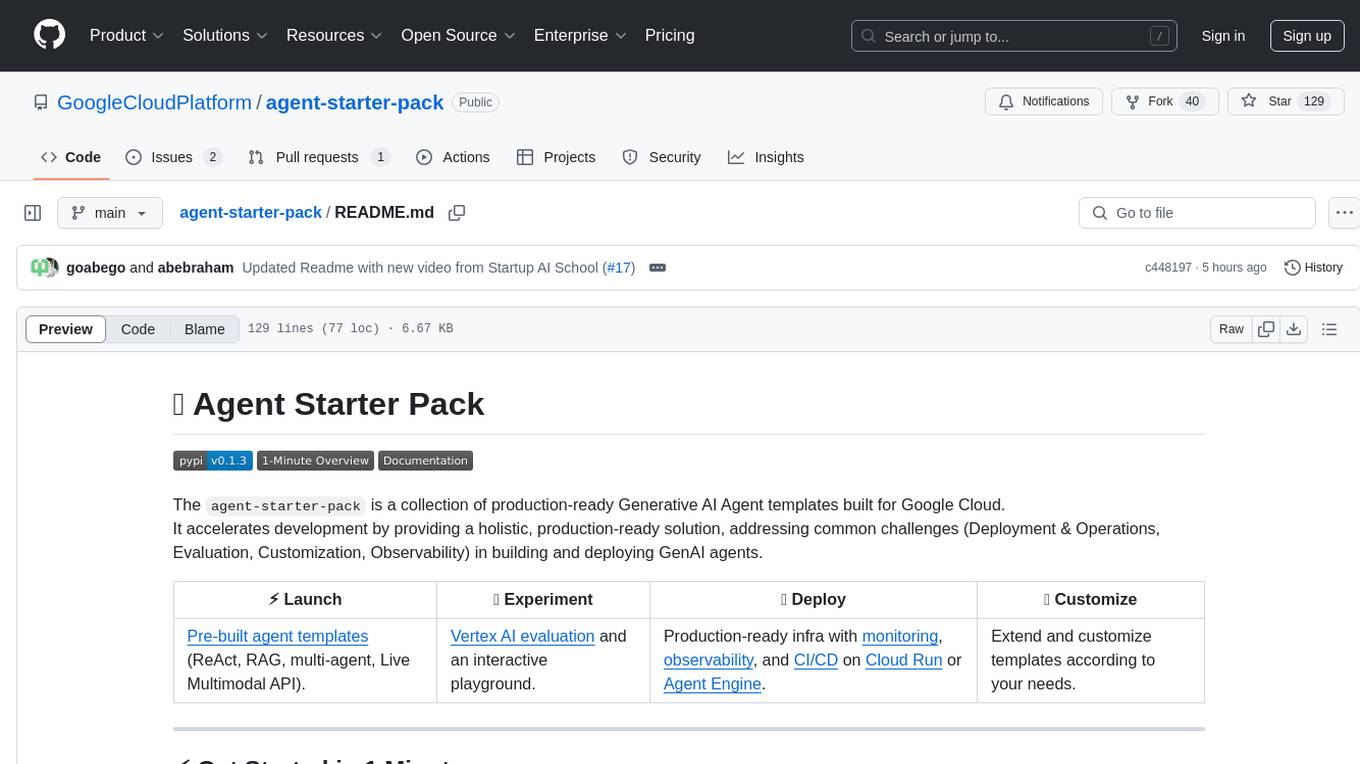

agent-starter-pack

The agent-starter-pack is a collection of production-ready Generative AI Agent templates built for Google Cloud. It accelerates development by providing a holistic, production-ready solution, addressing common challenges in building and deploying GenAI agents. The tool offers pre-built agent templates, evaluation tools, production-ready infrastructure, and customization options. It also provides CI/CD automation and data pipeline integration for RAG agents. The starter pack covers all aspects of agent development, from prototyping and evaluation to deployment and monitoring. It is designed to simplify project creation, template selection, and deployment for agent development on Google Cloud.

swirl-search

Swirl is an open-source software that allows users to simultaneously search multiple content sources and receive AI-ranked results. It connects to various data sources, including databases, public data services, and enterprise sources, and utilizes AI and LLMs to generate insights and answers based on the user's data. Swirl is easy to use, requiring only the download of a YML file, starting in Docker, and searching with Swirl. Users can add credentials to preloaded SearchProviders to access more sources. Swirl also offers integration with ChatGPT as a configured AI model. It adapts and distributes user queries to anything with a search API, re-ranking the unified results using Large Language Models without extracting or indexing anything. Swirl includes five Google Programmable Search Engines (PSEs) to get users up and running quickly. Key features of Swirl include Microsoft 365 integration, SearchProvider configurations, query adaptation, synchronous or asynchronous search federation, optional subscribe feature, pipelining of Processor stages, results stored in SQLite3 or PostgreSQL, built-in Query Transformation support, matching on word stems and handling of stopwords, duplicate detection, re-ranking of unified results using Cosine Vector Similarity, result mixers, page through all results requested, sample data sets, optional spell correction, optional search/result expiration service, easily extensible Connector and Mixer objects, and a welcoming community for collaboration and support.

yuna-ai

Yuna AI is a unique AI companion designed to form a genuine connection with users. It runs exclusively on the local machine, ensuring privacy and security. The project offers features like text generation, language translation, creative content writing, roleplaying, and informal question answering. The repository provides comprehensive setup and usage guides for Yuna AI, along with additional resources and tools to enhance the user experience.

open-assistant-api

Open Assistant API is an open-source, self-hosted AI intelligent assistant API compatible with the official OpenAI interface. It supports integration with more commercial and private models, R2R RAG engine, internet search, custom functions, built-in tools, code interpreter, multimodal support, LLM support, and message streaming output. Users can deploy the service locally and expand existing features. The API provides user isolation based on tokens for SaaS deployment requirements and allows integration of various tools to enhance its capability to connect with the external world.

ClicShopping

ClicShopping AI™ is an open-source Ecommerce platform powered by Generative AI, designed for B2B, B2C, and B2B-B2C businesses. It offers seamless shopping experiences, advanced AI integration, modular architecture for customization, and responsive design across devices. With features like GPT API integration, RAG-powered Business Intelligence Agent, multi-model AI support, and security compliance, ClicShopping AI™ is a comprehensive solution for online businesses. It also provides internationalization support, performance analytics, server performance optimization, content management, API connections, shipping and payment options, and a marketplace for additional modules and apps.

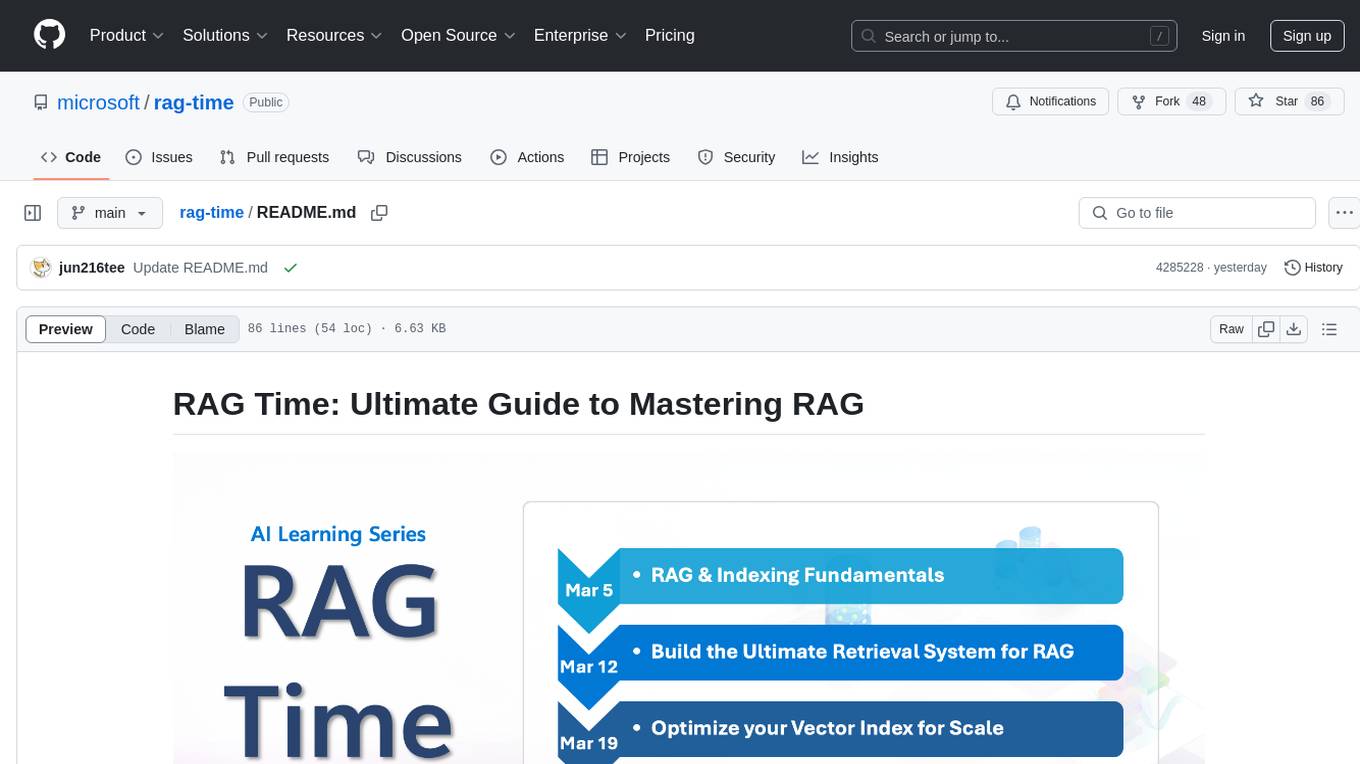

rag-time

RAG Time is a 5-week AI learning series focusing on Retrieval-Augmented Generation (RAG) concepts. The repository contains code samples, step-by-step guides, and resources to help users master RAG. It aims to teach foundational and advanced RAG concepts, demonstrate real-world applications, and provide hands-on samples for practical implementation.

neuro-san-studio

Neuro SAN Studio is an open-source library for building agent networks across various industries. It simplifies the development of collaborative AI systems by enabling users to create sophisticated multi-agent applications using declarative configuration files. The tool offers features like data-driven configuration, adaptive communication protocols, safe data handling, dynamic agent network designer, flexible tool integration, robust traceability, and cloud-agnostic deployment. It has been used in various use-cases such as automated generation of multi-agent configurations, airline policy assistance, banking operations, market analysis in consumer packaged goods, insurance claims processing, intranet knowledge management, retail operations, telco network support, therapy vignette supervision, and more.

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

OpenContracts

OpenContracts is an Apache-2 licensed enterprise document analytics tool that supports multiple formats, including PDF and txt-based formats. It features multiple document ingestion pipelines with a pluggable architecture for easy format and ingestion engine support. Users can create custom document analytics tools with beautiful result displays, support mass document data extraction with a LlamaIndex wrapper, and manage document collections, layout parsing, automatic vector embeddings, and human annotation. The tool also offers pluggable parsing pipelines, human annotation interface, LlamaIndex integration, data extraction capabilities, and custom data extract pipelines for bulk document querying.

synmetrix

Synmetrix is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube.js to consolidate metrics from various sources and distribute them downstream via a SQL API. Use cases include data democratization, business intelligence and reporting, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

For similar tasks

sktime

sktime is a Python library for time series analysis that provides a unified interface for various time series learning tasks such as classification, regression, clustering, annotation, and forecasting. It offers time series algorithms and tools compatible with scikit-learn for building, tuning, and validating time series models. sktime aims to enhance the interoperability and usability of the time series analysis ecosystem by empowering users to apply algorithms across different tasks and providing interfaces to related libraries like scikit-learn, statsmodels, tsfresh, PyOD, and fbprophet.

piccolo

Piccolo AI is an open-source software development toolkit for constructing sensor-based AI inference models optimized to run on low-power microcontrollers and IoT edge platforms. It includes SensiML's ML Engine, Embedded ML SDK, Analytic Studio UI, and SensiML Python Client. The tool is intended for individual developers, researchers, and AI enthusiasts, offering support for time-series sensor data classification and various applications such as acoustic event detection, activity recognition, gesture detection, anomaly detection, keyword spotting, and vibration classification.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including: * Semantic search * Image search * Product recommendations * Chatbots * Anomaly detection Qdrant offers a variety of features, including: * Payload storage and filtering * Hybrid search with sparse vectors * Vector quantization and on-disk storage * Distributed deployment * Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.

SynapseML

SynapseML (previously known as MMLSpark) is an open-source library that simplifies the creation of massively scalable machine learning (ML) pipelines. It provides simple, composable, and distributed APIs for various machine learning tasks such as text analytics, vision, anomaly detection, and more. Built on Apache Spark, SynapseML allows seamless integration of models into existing workflows. It supports training and evaluation on single-node, multi-node, and resizable clusters, enabling scalability without resource wastage. Compatible with Python, R, Scala, Java, and .NET, SynapseML abstracts over different data sources for easy experimentation. Requires Scala 2.12, Spark 3.4+, and Python 3.8+.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

Java-AI-Book-Code

The Java-AI-Book-Code repository contains code examples for the 2020 edition of 'Practical Artificial Intelligence With Java'. It is a comprehensive update of the previous 2013 edition, featuring new content on deep learning, knowledge graphs, anomaly detection, linked data, genetic algorithms, search algorithms, and more. The repository serves as a valuable resource for Java developers interested in AI applications and provides practical implementations of various AI techniques and algorithms.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

awesome-AIOps

awesome-AIOps is a curated list of academic researches and industrial materials related to Artificial Intelligence for IT Operations (AIOps). It includes resources such as competitions, white papers, blogs, tutorials, benchmarks, tools, companies, academic materials, talks, workshops, papers, and courses covering various aspects of AIOps like anomaly detection, root cause analysis, incident management, microservices, dependency tracing, and more.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.