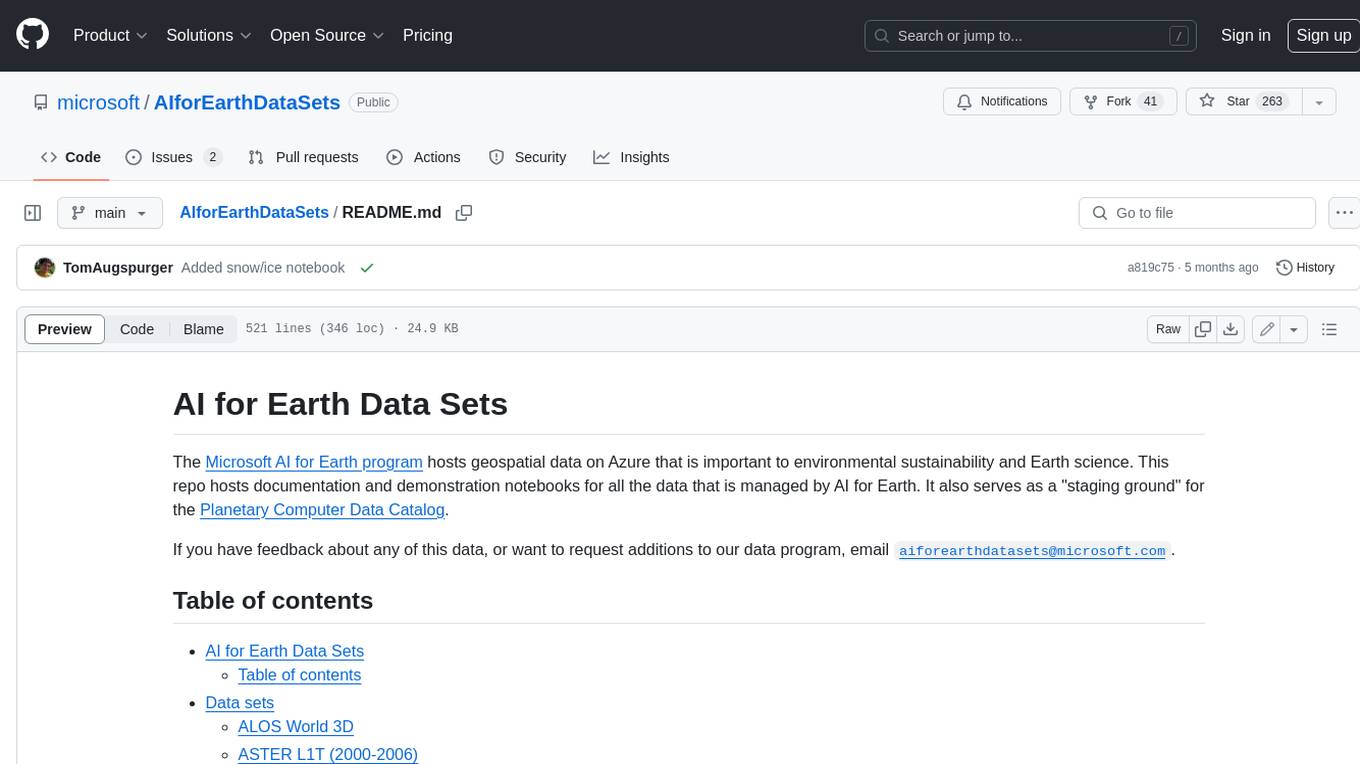

AIforEarthDataSets

Notebooks and documentation for AI-for-Earth-managed datasets on Azure

Stars: 263

The Microsoft AI for Earth program hosts geospatial data on Azure that is important to environmental sustainability and Earth science. This repo hosts documentation and demonstration notebooks for all the data that is managed by AI for Earth. It also serves as a "staging ground" for the Planetary Computer Data Catalog.

README:

If you have feedback about any of this data, or want to request additions to our data program, email [email protected].

- AI for Earth Data Sets

Data sets

- ALOS World 3D

- ASTER L1T (2000-2006)

- Copernicus DEM

- Daymet

- Deltares Global Flood Maps

- Deltares Global Water Availability

- Esri 10m Land Cover

- Global Biodiversity Information Facility (GBIF)

- Harmonized Global Biomass

- Harmonized Landsat Sentinel-2

- High Resolution Electricity Access (HREA)

- High Resolution Ocean Surface Wave Hindcast

- Labeled Information Library of Alexandria: Biology and Conservation (LILA BC)

- Landsat TM/MSS Collection 2

- Landsat 7 Collection 2 Level-2

- Landsat 8 Collection 2 Level-2

- MODIS (40 individual products)

- Monitoring Trends in Burn Severity Mosaics

- National Solar Radiation Database

- NASADEM

- NREL Puerto Rico 100 (PR100)

- NREL PV Rooftop Database

- NOAA Climate Data Records (CDR)

- NOAA Climate Forecast System (CFS)

- NOAA Digital Coast Imagery

- NOAA GFS Warm Start Initial Conditions

- NOAA GOES-R

- NOAA Global Ensemble Forecast System (GEFS)

- NOAA Global Forecast System (GFS)

- NOAA Global Hydro Estimator (GHE)

- NOAA High-Resolution Rapid Refresh (HRRR)

- NOAA Integrated Surface Data (ISD)

- NOAA Monthly US Climate Gridded Dataset (NClimGrid)

- NOAA National Water Model

- NOAA Rapid Refresh (RAP)

- NOAA US Climate Normals

- National Agriculture Imagery Program

- National Land Cover Database

- NatureServe Map of Biodiversity Importance (MoBI)

- Ocean Observatories Initiative CamHD

- Sentinel-1 GRD

- Sentinel-1 SLC

- Sentinel-2 L2A

- Sentinel-3 L2

- Sentinel-5P

- TerraClimate

- UK Met Office CSSP China 20CRDS

- UK Met Office Global Weather Data for COVID-19 Analysis

- University of Miami Coupled Model for Hurricanes Ike and Sandy

- USFS Forest Inventory and Analysis

- USGS 3DEP Seamless DEMs

- USGS Gap Land Cover

- Legal stuff

Global topographic information from the JAXA ALOS PRISM instrument.

The ASTER instrument, launched on-board NASA's Terra satellite in 1999, provides multispectral images of the Earth at 15m-90m resolution. This data set represents ASTER data from 2000-2006.

Global topographic information from the Copernicus program.

Estimates of daily weather parameters in North America on a one-kilometer grid, with monthly and annual summaries.

Global estimates of coastal inundation under various sea level rise conditions and return periods at 90m, 1km, and 5km resolutions. Also includes estimated coastal inundation caused by named historical storm events going back several decades.

Simulations of historical daily reservoir variations for 3,236 locations across the globe for the period 1970-2020 using the distributed wflow_sbm model. The model outputs long-term daily information on reservoir volume, inflow and outflow dynamics, as well as information on upstream hydrological forcing.

Global estimates of 10-class land use/land cover (LULC) for 2020, derived from ESA Sentinel-2 imagery at 10m resolution, produced by Impact Observatory.

Exports of global species occurrence data from the GBIF network.

Global maps of aboveground and belowground biomass carbon density for the year 2010 at 300m resolution.

Satellite imagery from the Landsat 8 and Sentinel-2 satellites, aligned to a common grid and processed to compatible color spaces.

Settlement-level measures of electricity access, reliability, and usage derived from VIIRS satellite imagery.

Long-term wave hindcast data for the U.S. Exclusive Economic Zone (EEZ), developed by the U.S. Department of Energy's Water Power Technologies Office.

AI for Earth and partners have assembled a repository of labeled information related to wildlife conservation, particularly wildlife imagery.

Global optical imagery from the Landsat MSS and TM instruments, which imaged the Earth from 1972 to 2013, aboard the Landsat 1-5 satellites.

Landsat TM/MSS data are in preview; access is granted by request.

Global optical imagery from the Landsat 7 satellite, which has imaged the Earth since 1999.

Landsat 7 data are in preview; access is granted by request.

Global optical imagery from the Landsat 8 satellite, which has imaged the Earth since 2013.

Satellite imagery from the Moderate Resolution Imaging Spectroradiometer (MODIS).

Annual burn severity mosaics for the continental United States and Alaska.

Hourly and half-hourly values of the three most common measurements of solar radiation (global horizontal, direct normal, and diffuse horizontal irradiance), along with meteorological data.

Global topographic information from the NASADEM program.

A collection of geospatial data useful for renewable energy development in Puerto Rico. The dataset is curated by the National Renewable Energy Laboratory.

A lidar-derived, geospatially-resolved dataset of suitable roof surfaces and their PV technical potential for 128 metropolitan regions in the United States.

Historical global climate information.

Model output data from the NOAA NCEP Climate Forecast System Version 2.

High-resolution (1 meter or less) imagery collected by a number of sources and contributed to the NOAA Digital Coast.

Warm start initial conditions for the NOAA Global Forecast System.

Weather imagery from the GOES-16, GOES-17, and GOES-18 satellites.

Model output data from the NOAA Global Ensemble Forecast System.

Model output data from the NOAA Global Forecast System.

Global rainfall estimates in 15-minute intervals.

Weather forecasts for North America at 3km spatial resolution and 15-minute temporal resolution.

Historical global weather information.

Gridded climate data for the US from 1895 to the present.

Data from the National Water Model.

Weather forecasts for North America at 13km resolution.

Typical climate conditions for the United States from 1981 to the present.

NAIP provides US-wide, high-resolution aerial imagery. This data set includes NAIP images from 2010 to the present.

US-wide data on land cover and land cover change at a 30m resolution with a 16-class legend.

Habitat information for 2,216 imperiled species occurring in the conterminous United States.

Video data from the Ocean Observatories Initiative seafloor camera deployed at Axial Volcano on the Juan de Fuca Ridge.

Global synthetic aperture radar (SAR) data from 2017-present, projected to ground range.

Sentinel-1 GRD data are in preview; access is granted by request.

Global synthetic aperture radar (SAR) data for the last 90 days.

Sentinel-1 SLC data are in preview; access is granted by request.

Global optical imagery at 10m resolution from 2016-present.

Global multispectral imagery at 300m resolution, with a revisit rate of less than two days, from 2016-present.

Sentinel-3 data are in preview; access is granted by request.

Global atmospheric data from 2018-present.

Sentinel-5P data are in preview; access is granted by request.

Monthly climate and climatic water balance for global terrestrial surfaces from 1958-2019.

Historical climate data for China, from 1851-2010.

Data for COVID-19 researchers exploring relationships between COVID-19 and environmental factors.

Modeled wind, wave, and current data for Hurricanes Ike and Sandy, produced by the National Renewable Energy Laboratory.

Status and trends on U.S. forest location, health, growth, mortality, and production, from the US Forest Service's Forest Inventory and Analysis (FIA) program.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.

Alternative AI tools for AIforEarthDataSets

Similar Open Source Tools

ai-data-science-team

The AI Data Science Team of Copilots is an AI-powered data science team that uses agents to help users perform common data science tasks 10X faster. It includes agents specializing in data cleaning, preparation, feature engineering, modeling, and interpretation of business problems. The project is a work in progress with new data science agents to be released soon. Disclaimer: This project is for educational purposes only and not intended to replace a company's data science team. No warranties or guarantees are provided, and the creator assumes no liability for financial loss.

nixtla

Nixtla is a production-ready generative pretrained transformer for time series forecasting and anomaly detection. It can accurately predict various domains such as retail, electricity, finance, and IoT with just a few lines of code. TimeGPT introduces a paradigm shift with its standout performance, efficiency, and simplicity, making it accessible even to users with minimal coding experience. The model is based on self-attention and is independently trained on a vast time series dataset to minimize forecasting error. It offers features like zero-shot inference, fine-tuning, API access, adding exogenous variables, multiple series forecasting, custom loss function, cross-validation, prediction intervals, and handling irregular timestamps.

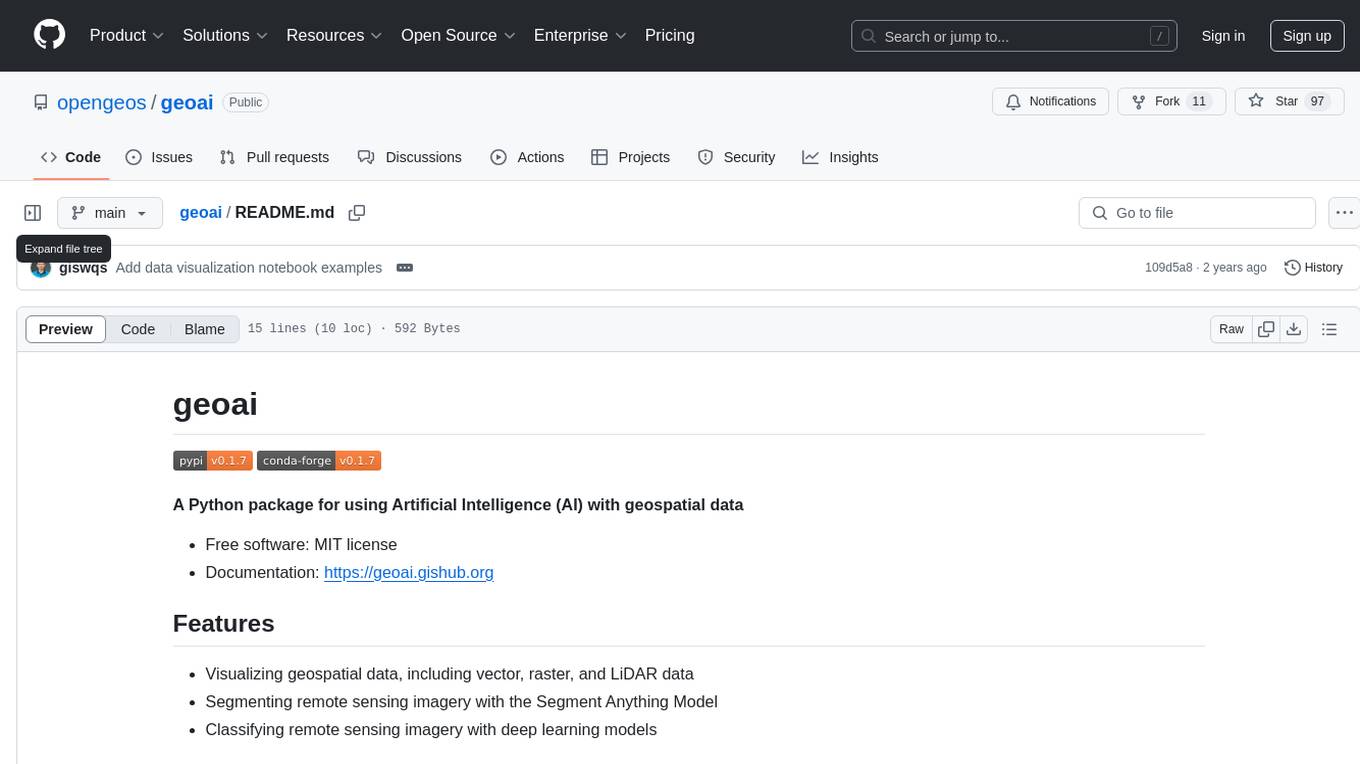

geoai

geoai is a Python package designed for utilizing Artificial Intelligence (AI) in the context of geospatial data. It allows users to visualize various types of geospatial data such as vector, raster, and LiDAR data. Additionally, the package offers functionalities for segmenting remote sensing imagery using the Segment Anything Model and classifying remote sensing imagery with deep learning models. With a focus on geospatial AI applications, geoai provides a versatile tool for processing and analyzing spatial data with the power of AI.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

MMStar

MMStar is an elite vision-indispensable multi-modal benchmark comprising 1,500 challenge samples meticulously selected by humans. It addresses two key issues in current LLM evaluation: the unnecessary use of visual content in many samples and the existence of unintentional data leakage in LLM and LVLM training. MMStar evaluates 6 core capabilities across 18 detailed axes, ensuring a balanced distribution of samples across all dimensions.

pytorch-forecasting

PyTorch Forecasting is a PyTorch-based package for time series forecasting with state-of-the-art network architectures. It offers a high-level API for training networks on pandas data frames and utilizes PyTorch Lightning for scalable training on GPUs and CPUs. The package aims to simplify time series forecasting with neural networks by providing a flexible API for professionals and default settings for beginners. It includes a timeseries dataset class, base model class, multiple neural network architectures, multi-horizon timeseries metrics, and hyperparameter tuning with optuna. PyTorch Forecasting is built on pytorch-lightning for easy training on various hardware configurations.

merlin

Merlin is a groundbreaking model capable of generating natural language responses intricately linked with object trajectories of multiple images. It excels in predicting and reasoning about future events based on initial observations, showcasing unprecedented capability in future prediction and reasoning. Merlin achieves state-of-the-art performance on the Future Reasoning Benchmark and multiple existing multimodal language models benchmarks, demonstrating powerful multi-modal general ability and foresight minds.

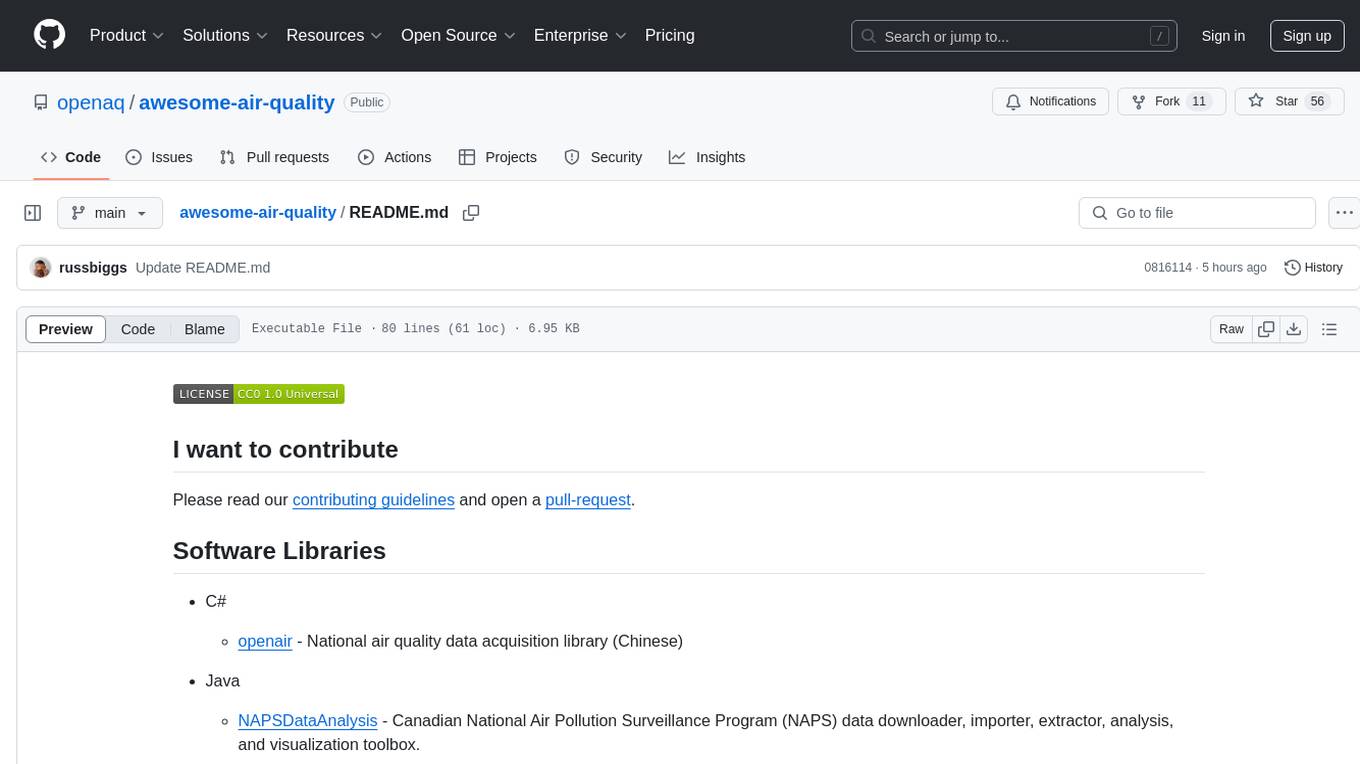

awesome-air-quality

The 'awesome-air-quality' repository is a curated list of software libraries, tools, and resources related to air quality data acquisition, analysis, and visualization. It includes libraries in various programming languages such as Python, Java, R, and C#, as well as hardware drivers and software for gas sensors and particulate matter sensors. The repository aims to provide a comprehensive collection of tools for working with air quality data from different sources and for different purposes.

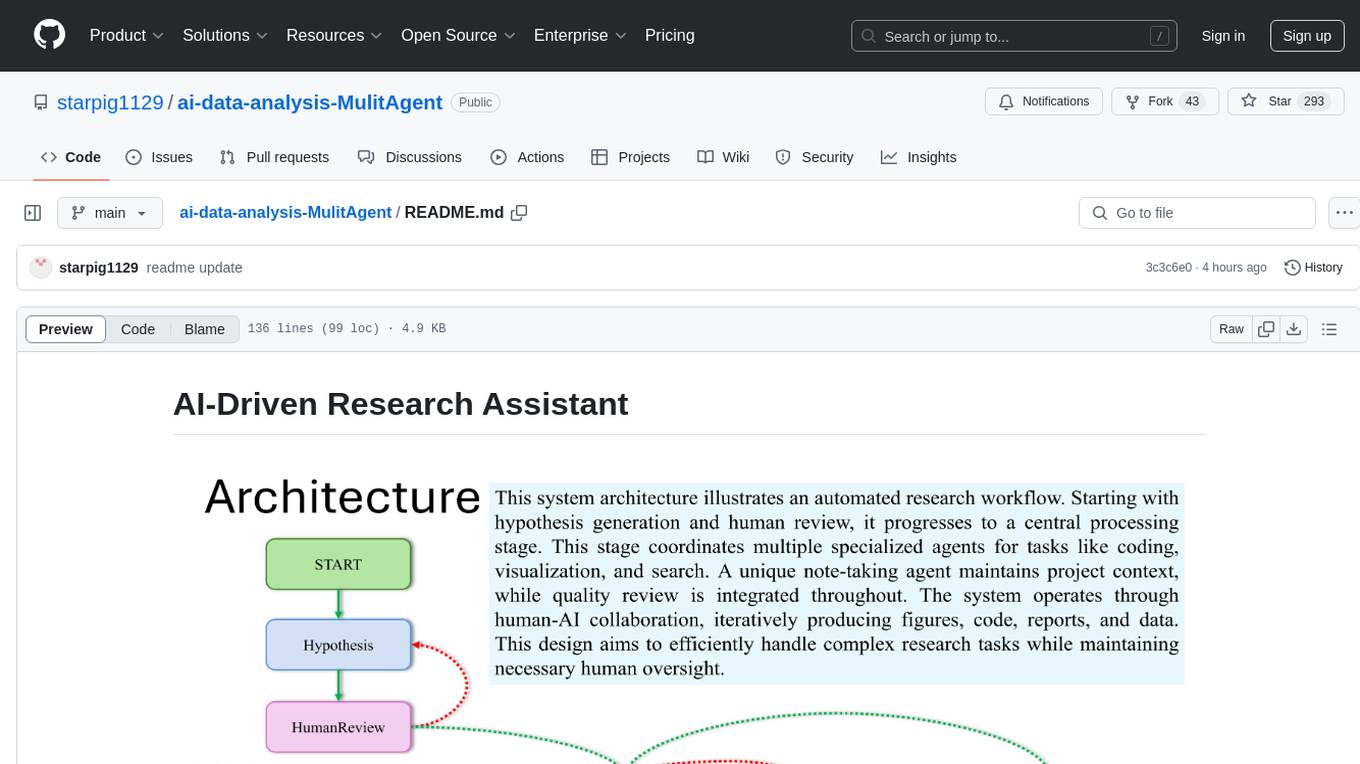

ai-data-analysis-MulitAgent

AI-Driven Research Assistant is an advanced AI-powered system utilizing specialized agents for data analysis, visualization, and report generation. It integrates LangChain, OpenAI's GPT models, and LangGraph for complex research processes. Key features include hypothesis generation, data processing, web search, code generation, and report writing. The system's unique Note Taker agent maintains project state, reducing overhead and improving context retention. System requirements include Python 3.10+ and Jupyter Notebook environment. Installation involves cloning the repository, setting up a Conda virtual environment, installing dependencies, and configuring environment variables. Usage instructions include setting data, running Jupyter Notebook, customizing research tasks, and viewing results. Main components include agents for hypothesis generation, process supervision, visualization, code writing, search, report writing, quality review, and note-taking. Workflow involves hypothesis generation, processing, quality review, and revision. Customization is possible by modifying agent creation and workflow definition. Current issues include OpenAI errors, NoteTaker efficiency, runtime optimization, and refiner improvement. Contributions via pull requests are welcome under the MIT License.

LAMBDA

LAMBDA is a code-free multi-agent data analysis system that utilizes large models to address data analysis challenges in complex data-driven applications. It allows users to perform complex data analysis tasks through human language instruction, seamlessly generate and debug code using two key agent roles, integrate external models and algorithms, and automatically generate reports. The system has demonstrated strong performance on various machine learning datasets, enhancing data science practice by integrating human and artificial intelligence.

trustgraph

TrustGraph is a tool that deploys private GraphRAG pipelines to build an RDF-style knowledge graph from data, enabling accurate and secure `RAG` requests compatible with cloud LLMs and open-source SLMs. It showcases the reliability and efficiencies of GraphRAG algorithms, capturing contextual language flags missed in conventional RAG approaches. The tool offers features like PDF decoding, text chunking, inference of various LMs, RDF-aligned Knowledge Graph extraction, and more. TrustGraph is designed to be modular, supporting multiple Language Models and environments, with a plug'n'play architecture for easy customization.

persian-license-plate-recognition

The Persian License Plate Recognition (PLPR) system is a state-of-the-art solution designed for detecting and recognizing Persian license plates in images and video streams. Leveraging advanced deep learning models and a user-friendly interface, it ensures reliable performance across different scenarios. The system offers advanced detection using YOLOv5 models, precise recognition of Persian characters, real-time processing capabilities, and a user-friendly GUI. It is well-suited for applications in traffic monitoring, automated vehicle identification, and similar fields. The system's architecture includes modules for resident management, entrance management, and a detailed flowchart explaining the process from system initialization to displaying results in the GUI. Hardware requirements include an Intel Core i5 processor, 8 GB RAM, a dedicated GPU with at least 4 GB VRAM, and an SSD with 20 GB of free space. The system can be installed by cloning the repository and installing required Python packages. Users can customize the video source for processing and run the application to upload and process images or video streams. The system's GUI allows for parameter adjustments to optimize performance, and the Wiki provides in-depth information on the system's architecture and model training.

AixLib

AixLib is a Modelica model library for building performance simulations developed at RWTH Aachen University, E.ON Energy Research Center, Institute for Energy Efficient Buildings and Indoor Climate (EBC) in Aachen, Germany. It contains models of HVAC systems as well as high and reduced order building models. The name AixLib is derived from the city's French name Aix-la-Chapelle, following a local tradition. The library is continuously improved and offers citable papers for reference. Contributions to the development can be made via the Issues section or Pull Requests, following the workflow described in the Wiki. AixLib is released under a 3-clause BSD license with acknowledgements to publicly funded projects and financial support by BMWi (German Federal Ministry for Economic Affairs and Energy).

AI2BMD

AI2BMD is a program for efficiently simulating protein molecular dynamics with ab initio accuracy. The repository contains datasets, simulation programs, and public materials related to AI2BMD. It provides a Docker image for easy deployment and a standalone launcher program. Users can run simulations by downloading the launcher script and specifying simulation parameters. The repository also includes ready-to-use protein structures for testing. AI2BMD is designed for x86-64 GNU/Linux systems with recommended hardware specifications. The related research includes model architectures like ViSNet, Geoformer, and fine-grained force metrics for MLFF. Citation information and contact details for the AI2BMD Team are provided.

datasets

Datasets is a repository that provides a collection of various datasets for machine learning and data analysis projects. It includes datasets in different formats such as CSV, JSON, and Excel, covering a wide range of topics including finance, healthcare, marketing, and more. The repository aims to help data scientists, researchers, and students access high-quality datasets for training models, conducting experiments, and exploring data analysis techniques.

For similar tasks

AIR-1

AIR-1 is a compact sensor device designed for monitoring various environmental parameters such as gas levels, particulate matter, temperature, and humidity. It features multiple sensors for detecting gases like CO, alcohol, H2, NO2, NH3, CO2, as well as particulate matter, VOCs, NOx, and more. The device is designed with a focus on accuracy and efficient heat management in a small form factor, making it suitable for indoor air quality monitoring and environmental sensing applications.

For similar jobs

Awesome-LWMs

Awesome Large Weather Models (LWMs) is a curated collection of articles and resources related to large weather models used in AI for Earth and AI for Science. It includes information on various cutting-edge weather forecasting models, benchmark datasets, and research papers. The repository serves as a hub for researchers and enthusiasts to explore the latest advancements in weather modeling and forecasting.

Generative_AI_For_Science

Generative AI for Science is a comprehensive, hands-on guide for researchers, students, and practitioners who want to apply cutting-edge AI techniques to scientific discovery. The book bridges the gap between AI/ML expertise and domain science, providing practical implementations across chemistry, biology, physics, geoscience, and beyond. It covers key AI architectures like Transformers, Diffusion Models, VAEs, and GNNs, and teaches how to apply generative models to problems in climate science, drug discovery, genomics, materials science, and more. The book also emphasizes best practices around ethics, reproducibility, and deployment, helping readers develop the intuition to know when and how to apply AI to scientific research.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.