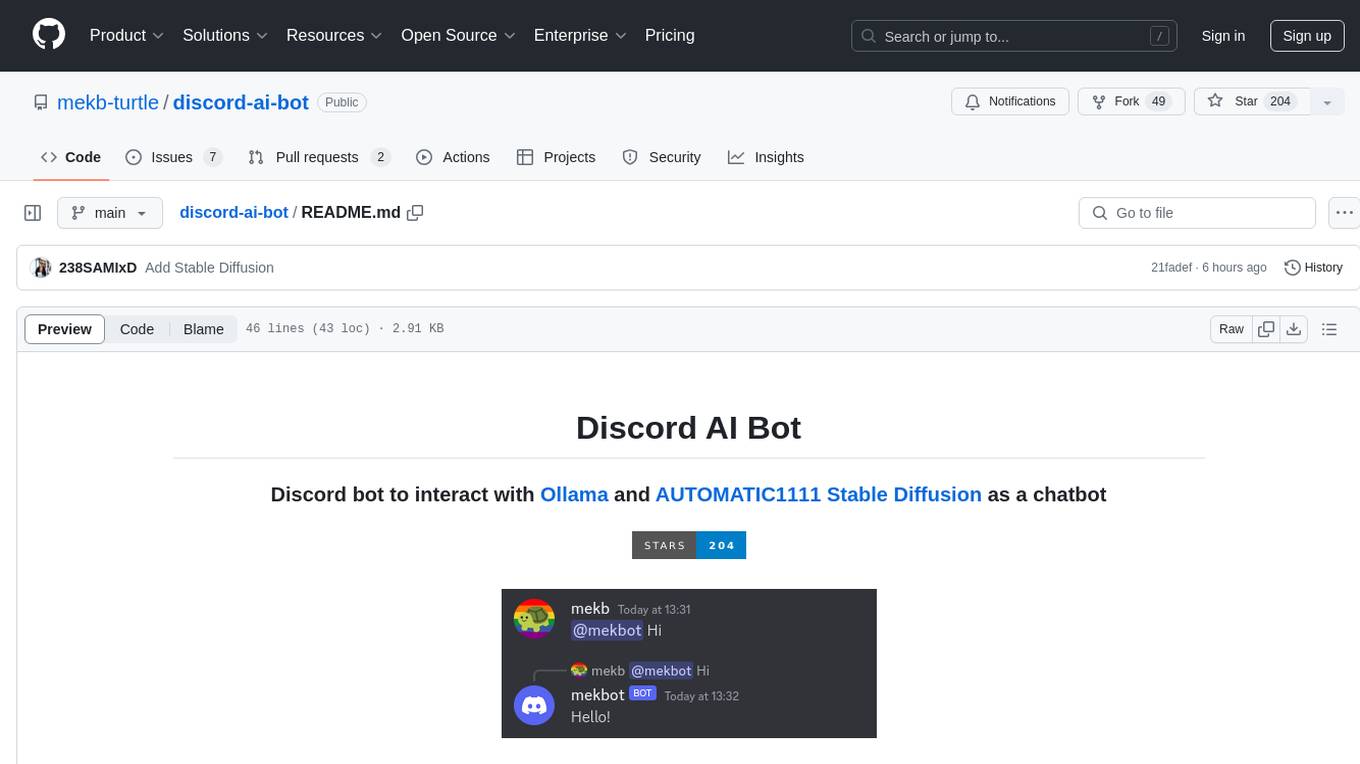

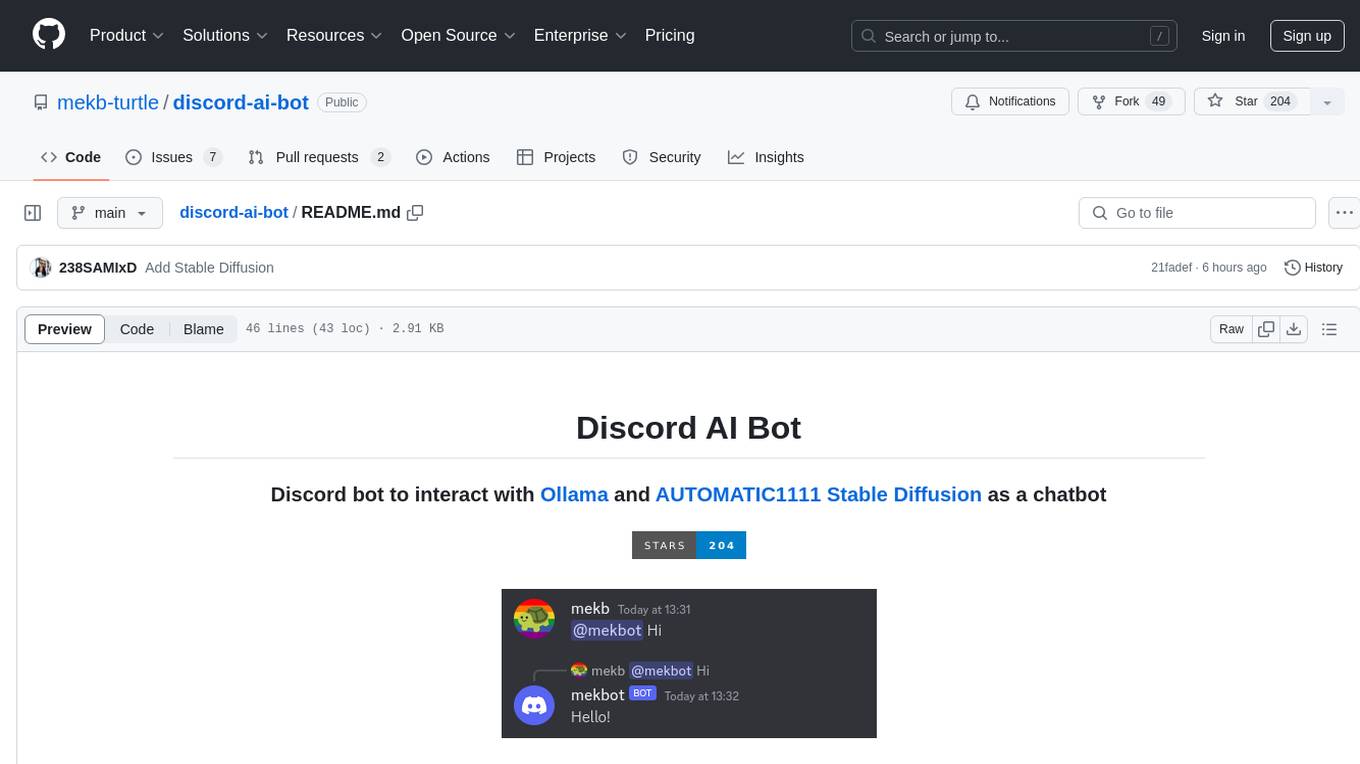

discord-ai-bot

Discord AI chatbot using Ollama

Stars: 210

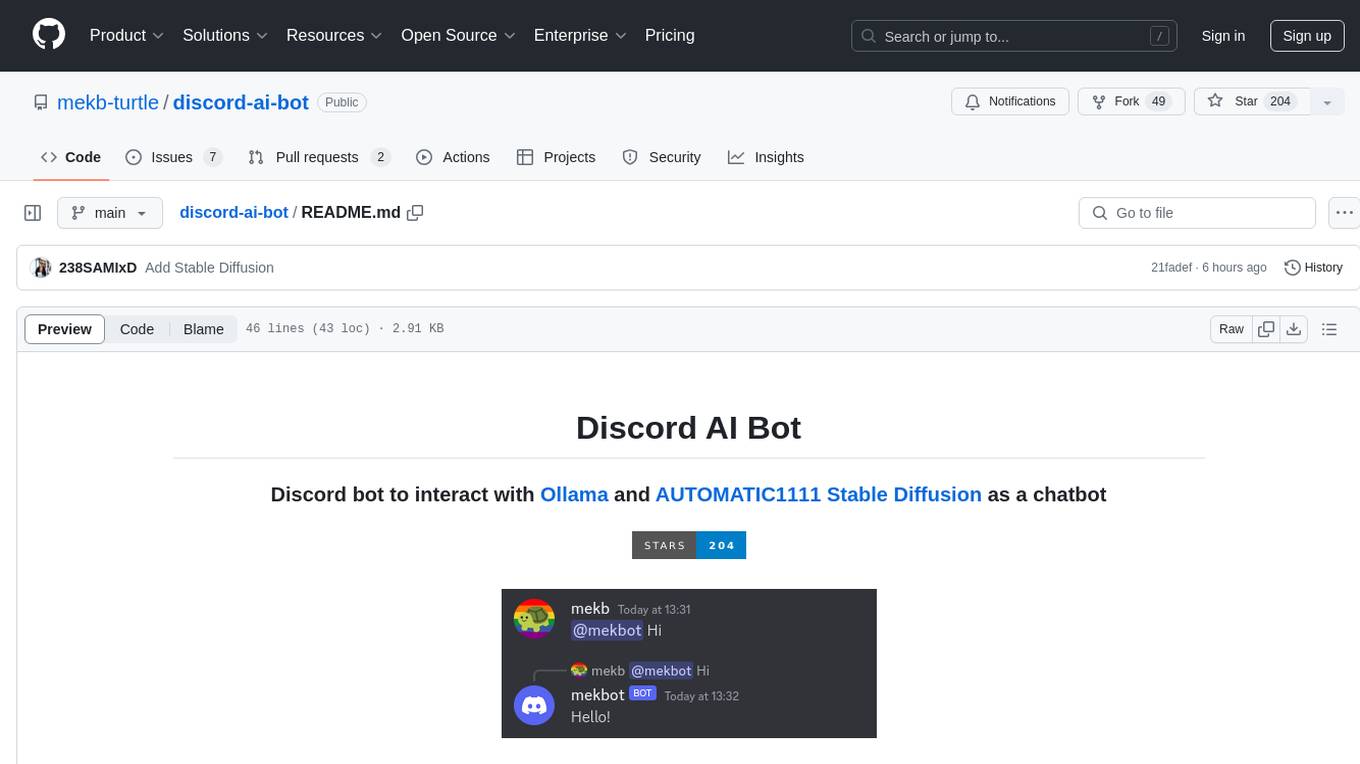

Discord AI Bot is a chatbot designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The project is now archived due to lack of maintenance. Users can set up the bot by installing Node.js, Ollama, and a model, creating a Discord bot, and starting the bot with the necessary configurations. Additionally, Docker setup instructions are provided for easy deployment. The bot can be interacted with by mentioning it in Discord messages.

README:

This repository is no longer being developed on by me, mekb-turtle.

- Install Node.js (if you have a package manager, use that instead to install this)

- Make sure to install at least v14 of Node.js

- Install Ollama (ditto)

- Pull (download) a model, e.g

ollama pull orcaorollama pull llama2 - Start Ollama by running

ollama serve -

Create a Discord bot

- Under Application » Bot

- Enable Message Content Intent

- Enable Server Members Intent (for replacing user mentions with the username)

- Under Application » Bot

- Invite the bot to a server

- Go to Application » OAuth2 » URL Generator

- Enable

bot - Enable Send Messages, Read Messages/View Channels, and Read Message History

- Under Generated URL, click Copy and paste the URL in your browser

- Rename

.env.exampleto.envand edit the.envfile- You can get the token from Application » Bot » Token, never share this with anyone

- Make sure to change the model if you aren't using

orca - Ollama URL can be kept the same unless you have changed the port

- You can use multiple Ollama servers at the same time by separating the URLs with commas

- Set the channels to the channel ID, comma separated

- In Discord, go to User Settings » Advanced, and enable Developer Mode

- Right click on a channel you want to use, and click Copy Channel ID

- You can edit the system message the bot uses, or disable it entirely

- Install the required dependencies with

npm i - Start the bot with

npm start - You can interact with the bot by @mentioning it with your message

- Install Stable Diffusion

- Run the script

./webui.sh --api --listen

- Install Docker

- Should be atleast compatible with version 3 of compose (docker engine 1.13.0+)

- Repeat steps 2—7 from the other setup instructions

- Start the bot with

make compose-upif you have Make installed- Otherwise, try

docker compose -p discord-ai upinstead

- Otherwise, try

- You can interact with the bot by @mentioning it with your message

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for discord-ai-bot

Similar Open Source Tools

discord-ai-bot

Discord AI Bot is a chatbot designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The project is now archived due to lack of maintenance. Users can set up the bot by installing Node.js, Ollama, and a model, creating a Discord bot, and starting the bot with the necessary configurations. Additionally, Docker setup instructions are provided for easy deployment. The bot can be interacted with by mentioning it in Discord messages.

discord-ai-bot

Discord AI Bot is a chatbot tool designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The bot allows users to set up and configure a Discord bot to communicate with the mentioned AI models. Users can follow step-by-step instructions to install Node.js, Ollama, and the required dependencies, create a Discord bot, and interact with the bot by mentioning it in messages. Additionally, the tool provides set-up instructions for Docker users to easily deploy the bot using Docker containers. Overall, Discord AI Bot simplifies the process of integrating AI chatbots into Discord servers for interactive communication.

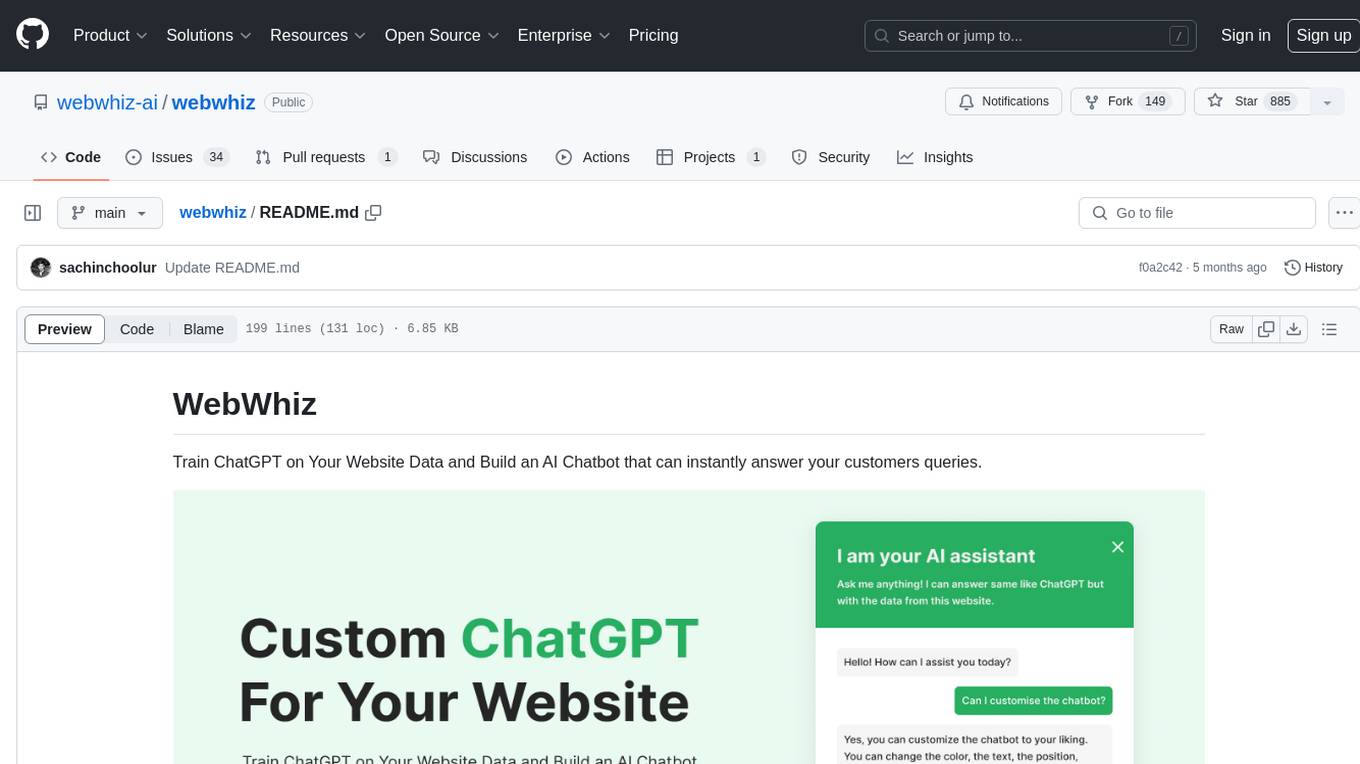

webwhiz

WebWhiz is an open-source tool that allows users to train ChatGPT on website data to build AI chatbots for customer queries. It offers easy integration, data-specific responses, regular data updates, no-code builder, chatbot customization, fine-tuning, and offline messaging. Users can create and train chatbots in a few simple steps by entering their website URL, automatically fetching and preparing training data, training ChatGPT, and embedding the chatbot on their website. WebWhiz can crawl websites monthly, collect text data and metadata, and process text data using tokens. Users can train custom data, but bringing custom open AI keys is not yet supported. The tool has no limitations on context size but may limit the number of pages based on the chosen plan. WebWhiz SDK is available on NPM, CDNs, and GitHub, and users can self-host it using Docker or manual setup involving MongoDB, Redis, Node, Python, and environment variables setup. For any issues, users can contact [email protected].

python-sc2

python-sc2 is an easy-to-use library for writing AI Bots for StarCraft II in Python 3. It aims for simplicity and ease of use while providing both high and low level abstractions. The library covers only the raw scripted interface and intends to help new bot authors with added functions. Users can install the library using pip and need a StarCraft II executable to run bots. The API configuration options allow users to customize bot behavior and performance. The community provides support through Discord servers, and users can contribute to the project by creating new issues or pull requests following style guidelines.

minecraft-mcp-server

Minecraft MCP Server is a bot powered by large language models and Mineflayer API. It uses the Model Context Protocol (MCP) to enable models like Claude to control a Minecraft character. The bot allows users to interact with Minecraft through commands and chat messages, facilitating tasks such as movement, inventory management, block interaction, entity interaction, and more. Users can also upload images of buildings and ask the bot to build them. The tool is designed to work with Claude Desktop and requires specific configurations for Minecraft and MCP clients. Contributions to the project, including refactoring, testing, documentation, and new functionality, are welcome.

telegram-llm

A Telegram LLM bot that allows users to deploy their own Telegram bot in 3 simple steps by creating a flow function, configuring access to the Telegram bot, and connecting to an LLM backend. Users need to sign into flows.network, have a bot token from Telegram, and an OpenAI API key. The bot can be customized with ChatGPT prompts and integrated with OpenAI and Telegram for various functionalities.

whisplay-ai-chatbot

Whisplay-AI-Chatbot is a pocket-sized AI chatbot device built using a Raspberry Pi Zero 2w. It features a PiSugar Whisplay HAT with an LCD screen, on-board speaker, and microphone. Users can interact with the chatbot by pressing a button, speaking, and receiving responses, similar to a futuristic walkie-talkie. The tool supports various functionalities such as adjusting volume autonomously, resetting conversation history, local ASR and TTS capabilities, image generation, and integration with APIs like Google Gemini and Grok. It also offers support for LLM8850 AI Accelerator for offline capabilities like ASR, TTS, and LLM API. The chatbot saves conversation history and generated images in a data folder, and users can customize the tool with different enclosure cases available for Pi02 and Pi5 models.

TerminalGPT

TerminalGPT is a terminal-based ChatGPT personal assistant app that allows users to interact with OpenAI GPT-3.5 and GPT-4 language models. It offers advantages over browser-based apps, such as continuous availability, faster replies, and tailored answers. Users can use TerminalGPT in their IDE terminal, ensuring seamless integration with their workflow. The tool prioritizes user privacy by not using conversation data for model training and storing conversations locally on the user's machine.

ai-clone-whatsapp

This repository provides a tool to create an AI chatbot clone of yourself using your WhatsApp chats as training data. It utilizes the Torchtune library for finetuning and inference. The code includes preprocessing of WhatsApp chats, finetuning models, and chatting with the AI clone via a command-line interface. Supported models are Llama3-8B-Instruct and Mistral-7B-Instruct-v0.2. Hardware requirements include approximately 16 GB vRAM for QLoRa Llama3 finetuning with a 4k context length. The repository addresses common issues like adjusting parameters for training and preprocessing non-English chats.

aiarena-web

aiarena-web is a website designed for running the aiarena.net infrastructure. It consists of different modules such as core functionality, web API endpoints, frontend templates, and a module for linking users to their Patreon accounts. The website serves as a platform for obtaining new matches, reporting results, featuring match replays, and connecting with Patreon supporters. The project is licensed under GPLv3 in 2019.

gemini-pro-bot

This Python Telegram bot utilizes Google's `gemini-pro` LLM API to generate creative text formats based on user input. It's designed to be an engaging and interactive way to explore the capabilities of large language models. Key features include generating various text formats like poems, code, scripts, and musical pieces. The bot supports real-time streaming of the generation process, allowing users to witness the text unfold. Additionally, it can respond to messages with Bard's creative output and handle image-based inputs for multimodal responses. User authentication is optional, and the bot can be easily integrated with Docker or installed via pipenv.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

aider-composer

Aider Composer is a VSCode extension that integrates Aider into your development workflow. It allows users to easily add and remove files, toggle between read-only and editable modes, review code changes, use different chat modes, and reference files in the chat. The extension supports multiple models, code generation, code snippets, and settings customization. It has limitations such as lack of support for multiple workspaces, Git repository features, linting, testing, voice features, in-chat commands, and configuration options.

maxheadbox

Max Headbox is an open-source voice-activated LLM Agent designed to run on a Raspberry Pi. It can be configured to execute a variety of tools and perform actions. The project requires specific hardware and software setups, and provides detailed instructions for installation, configuration, and usage. Users can create custom tools by making JavaScript modules and backend API handlers. The project acknowledges the use of various open-source projects and resources in its development.

buildware-ai

Buildware is a tool designed to help developers accelerate their code shipping process by leveraging AI technology. Users can build a code instruction system, submit an issue, and receive an AI-generated pull request. The tool is created by Mckay Wrigley and Tyler Bruno at Takeoff AI. Buildware offers a simple setup process involving cloning the repository, installing dependencies, setting up environment variables, configuring a database, and obtaining a GitHub Personal Access Token (PAT). The tool is currently being updated to include advanced features such as Linear integration, local codebase mode, and team support.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

For similar tasks

MaxKB

MaxKB is a knowledge base Q&A system based on the LLM large language model. MaxKB = Max Knowledge Base, which aims to become the most powerful brain of the enterprise.

Large-Language-Models

Large Language Models (LLM) are used to browse the Wolfram directory and associated URLs to create the category structure and good word embeddings. The goal is to generate enriched prompts for GPT, Wikipedia, Arxiv, Google Scholar, Stack Exchange, or Google search. The focus is on one subdirectory: Probability & Statistics. Documentation is in the project textbook `Projects4.pdf`, which is available in the folder. It is recommended to download the document and browse your local copy with Chrome, Edge, or other viewers. Unlike on GitHub, you will be able to click on all the links and follow the internal navigation features. Look for projects related to NLP and LLM / xLLM. The best starting point is project 7.2.2, which is the core project on this topic, with references to all satellite projects. The project textbook (with solutions to all projects) is the core document needed to participate in the free course (deep tech dive) called **GenAI Fellowship**. For details about the fellowship, follow the link provided. An uncompressed version of `crawl_final_stats.txt.gz` is available on Google drive, which contains all the crawled data needed as input to the Python scripts in the XLLM5 and XLLM6 folders.

BlossomLM

BlossomLM is a series of open-source conversational large language models. This project aims to provide a high-quality general-purpose SFT dataset in both Chinese and English, making fine-tuning accessible while also providing pre-trained model weights. **Hint**: BlossomLM is a personal non-commercial project.

InternLM

InternLM is a powerful language model series with features such as 200K context window for long-context tasks, outstanding comprehensive performance in reasoning, math, code, chat experience, instruction following, and creative writing, code interpreter & data analysis capabilities, and stronger tool utilization capabilities. It offers models in sizes of 7B and 20B, suitable for research and complex scenarios. The models are recommended for various applications and exhibit better performance than previous generations. InternLM models may match or surpass other open-source models like ChatGPT. The tool has been evaluated on various datasets and has shown superior performance in multiple tasks. It requires Python >= 3.8, PyTorch >= 1.12.0, and Transformers >= 4.34 for usage. InternLM can be used for tasks like chat, agent applications, fine-tuning, deployment, and long-context inference.

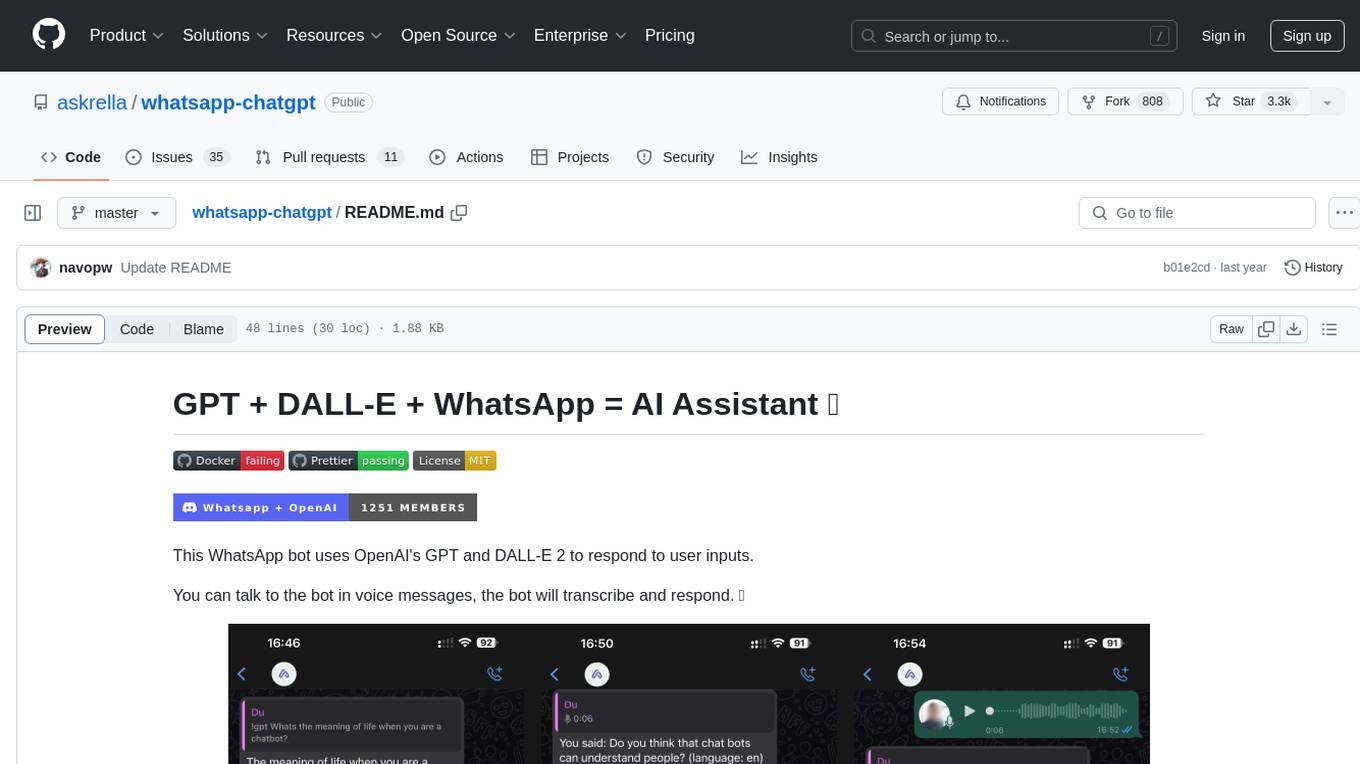

whatsapp-chatgpt

This repository contains a WhatsApp bot that utilizes OpenAI's GPT and DALL-E 2 to respond to user inputs. Users can interact with the bot through voice messages, which are transcribed and responded to. The bot requires Node.js, npm, an OpenAI API key, and a WhatsApp account. It uses Puppeteer to run a real instance of Whatsapp Web to avoid being blocked. However, there is a risk of being blocked by WhatsApp as it does not allow bots or unofficial clients on its platform. The bot is not free to use, and users will be charged by OpenAI for each request made.

discord-ai-bot

Discord AI Bot is a chatbot designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The project is now archived due to lack of maintenance. Users can set up the bot by installing Node.js, Ollama, and a model, creating a Discord bot, and starting the bot with the necessary configurations. Additionally, Docker setup instructions are provided for easy deployment. The bot can be interacted with by mentioning it in Discord messages.

J.A.R.V.I.S

J.A.R.V.I.S. is an offline large language model fine-tuned on custom and open datasets to mimic Jarvis's dialog with Stark. It prioritizes privacy by running locally and excels in responding like Jarvis with a similar tone. Current features include time/date queries, web searches, playing YouTube videos, and webcam image descriptions. Users can interact with Jarvis via command line after installing the model locally using Ollama. Future plans involve voice cloning, voice-to-text input, and deploying the voice model as an API.

airdcpp-webclient

AirDC++ Web Client is a locally installed application designed for flexible file sharing within groups over a local network or the internet. It utilizes the Advanced Direct Connect protocol to create file sharing communities with thousands of users. The application offers a responsive web user interface, allows sharing local directories, searching for shared files, saving files, chatting capabilities, browsing shared directories, extension support, and a web API for HTTP REST and WebSockets.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.