whatsapp-chatgpt

ChatGPT + DALL-E + WhatsApp = AI Assistant :rocket: :robot:

Stars: 3351

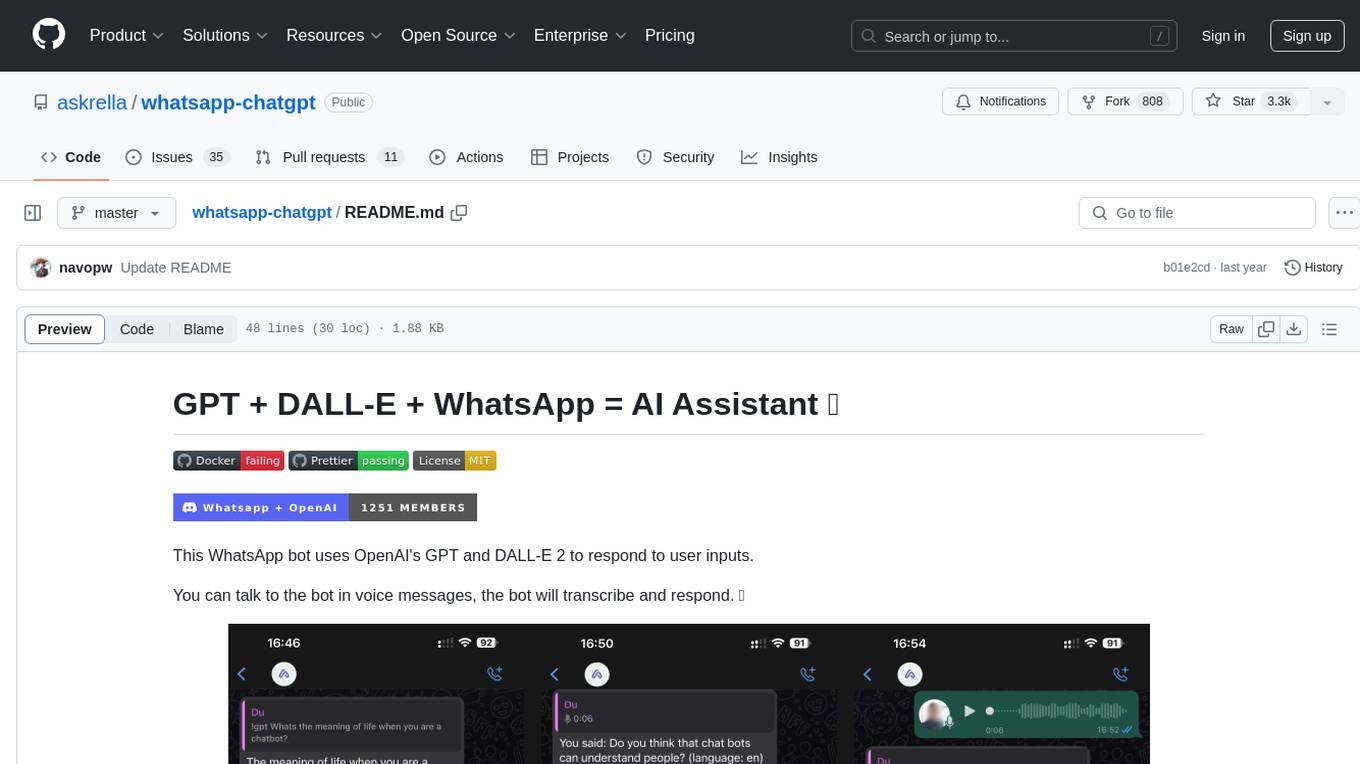

This repository contains a WhatsApp bot that utilizes OpenAI's GPT and DALL-E 2 to respond to user inputs. Users can interact with the bot through voice messages, which are transcribed and responded to. The bot requires Node.js, npm, an OpenAI API key, and a WhatsApp account. It uses Puppeteer to run a real instance of Whatsapp Web to avoid being blocked. However, there is a risk of being blocked by WhatsApp as it does not allow bots or unofficial clients on its platform. The bot is not free to use, and users will be charged by OpenAI for each request made.

README:

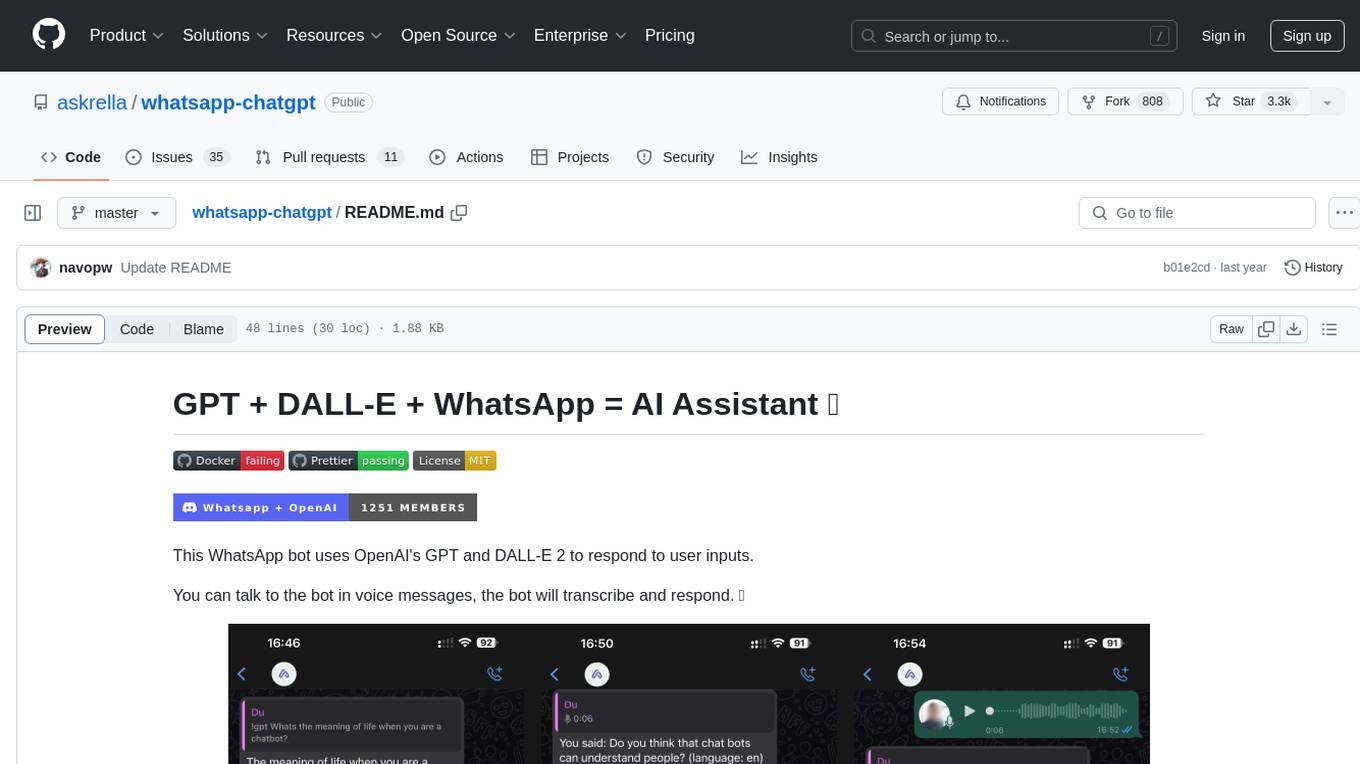

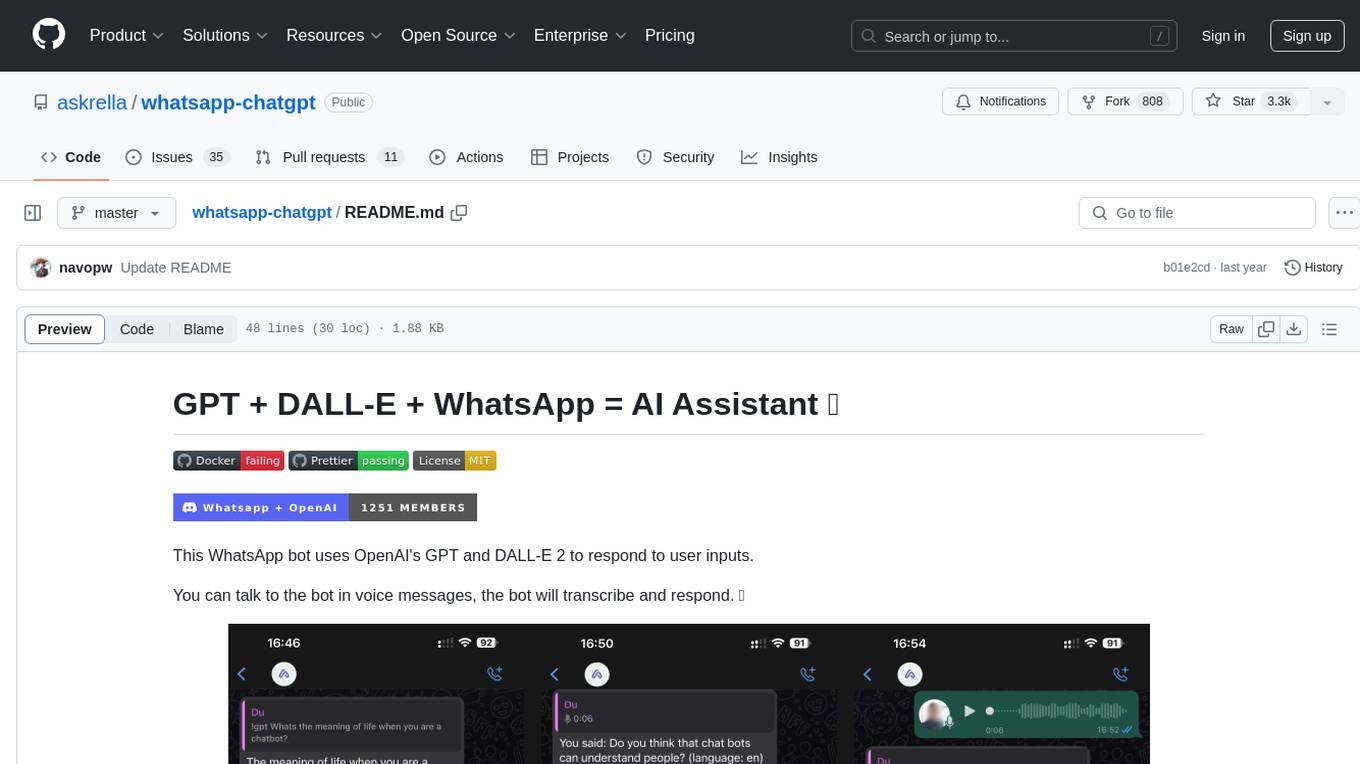

This WhatsApp bot uses OpenAI's GPT and DALL-E 2 to respond to user inputs.

You can talk to the bot in voice messages, the bot will transcribe and respond. 🤖

- Node.js (18 or newer)

- A recent version of npm

- An OpenAI API key

- A WhatsApp account

In the documentation you can find more information about how to install, configure and use this bot.

➡️ https://askrella.github.io/whatsapp-chatgpt

The operations performed by this bot are not free. You will be charged by OpenAI for each request you make.

This bot uses Puppeteer to run a real instance of Whatsapp Web to avoid getting blocked.

NOTE: We can't guarantee that you won't be blocked using this method, although it does work. WhatsApp does not allow bots or unofficial clients on its platform, so this should not be considered completely safe.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for whatsapp-chatgpt

Similar Open Source Tools

whatsapp-chatgpt

This repository contains a WhatsApp bot that utilizes OpenAI's GPT and DALL-E 2 to respond to user inputs. Users can interact with the bot through voice messages, which are transcribed and responded to. The bot requires Node.js, npm, an OpenAI API key, and a WhatsApp account. It uses Puppeteer to run a real instance of Whatsapp Web to avoid being blocked. However, there is a risk of being blocked by WhatsApp as it does not allow bots or unofficial clients on its platform. The bot is not free to use, and users will be charged by OpenAI for each request made.

temp-mail

TempMail V2 is a simple web application that allows users to generate temporary email addresses and view the emails received by these addresses. It uses guerrillamail API for creating emails. The project is for educational purposes only and should be used legally. Users can generate new temporary email addresses, load emails, view email content, and download attachments. Dependencies include jQuery for API requests and DOM manipulation, and Font Awesome for icons.

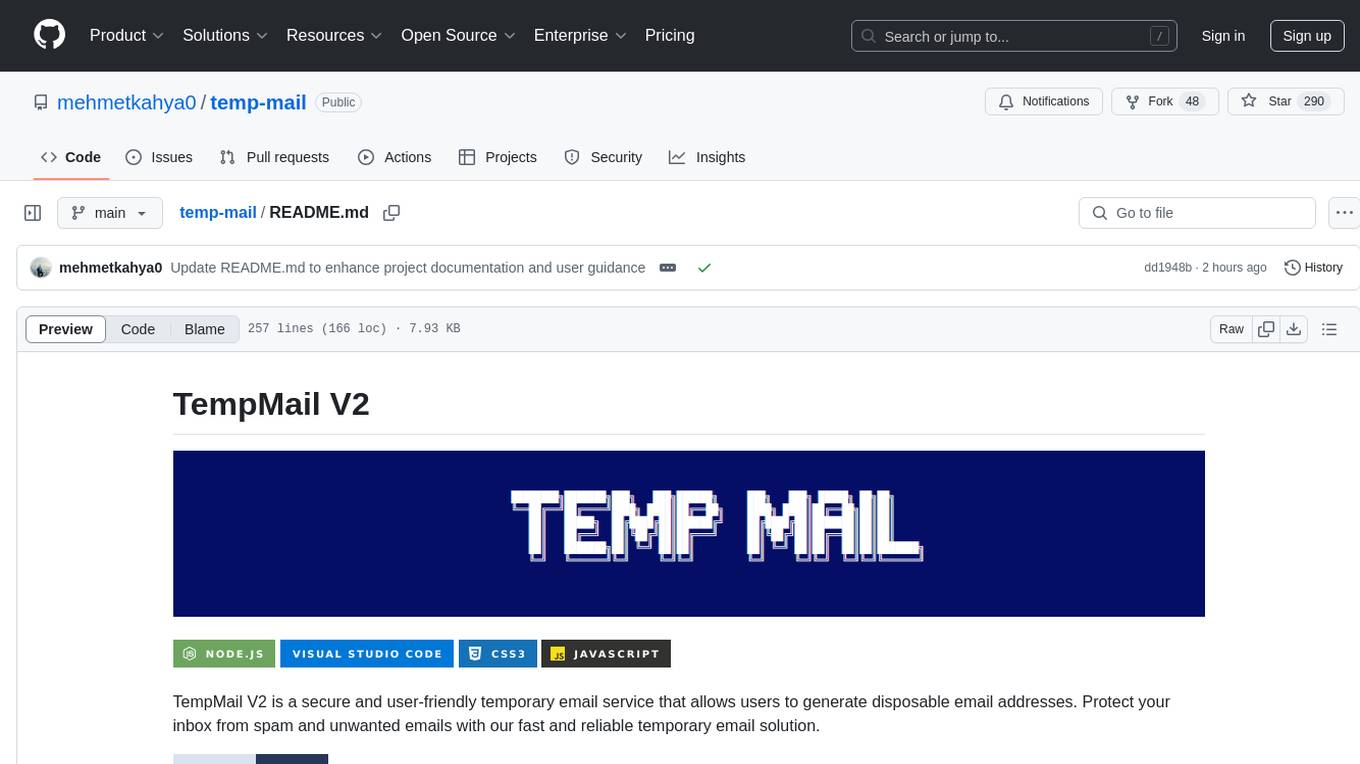

5ire

5ire is a cross-platform desktop client that integrates a local knowledge base for multilingual vectorization, supports parsing and vectorization of various document formats, offers usage analytics to track API spending, provides a prompts library for creating and organizing prompts with variable support, allows bookmarking of conversations, and enables quick keyword searches across conversations. It is licensed under the GNU General Public License version 3.

buildel

Buildel is an AI automation platform that empowers users to create versatile workflows without writing code. It supports multiple providers and interfaces, offers pre-built use cases, and allows users to bring their own API keys. Ideal for AI-powered document retrieval, conversational interfaces, and data integration. Users can get started at app.buildel.ai or run Buildel locally with Node.js, Elixir/Erlang, Docker, Git, and JQ installed. Join the community on Discord for support and discussions.

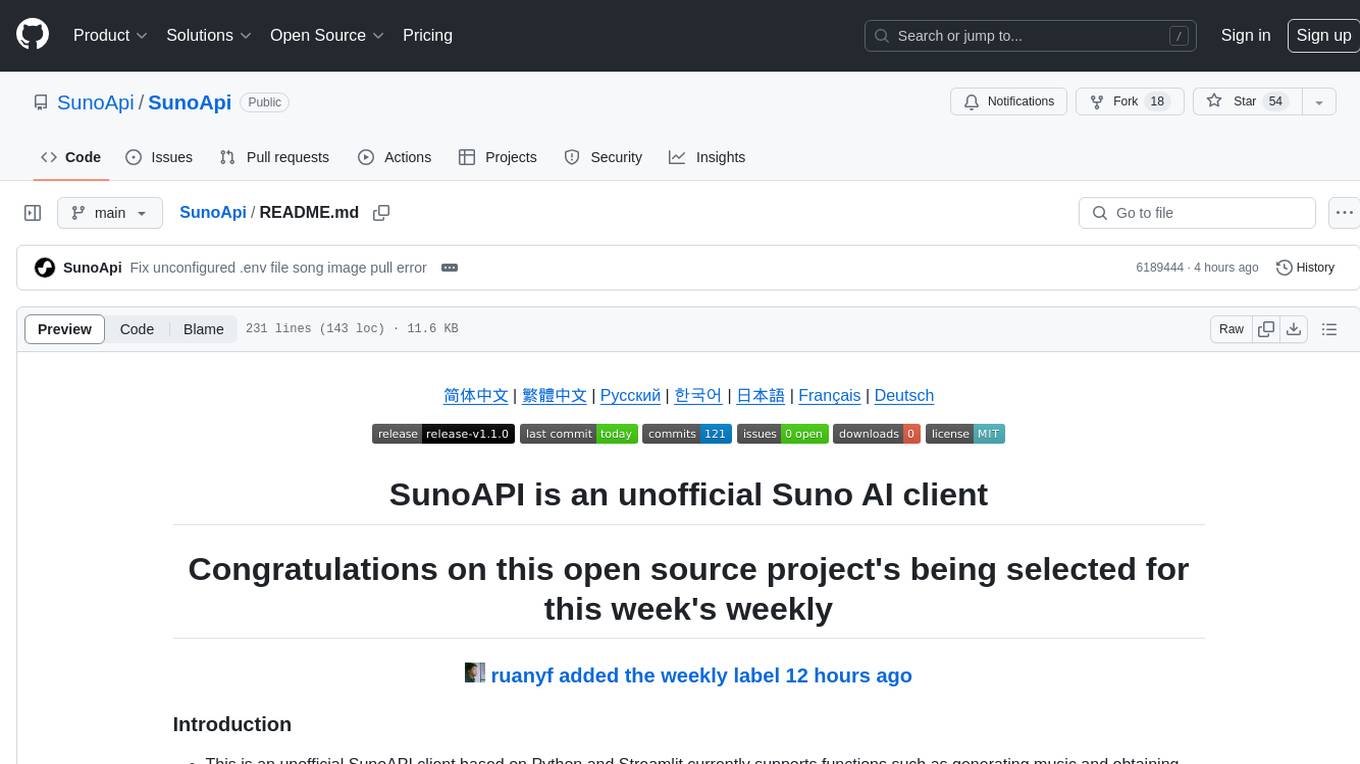

SunoApi

SunoAPI is an unofficial client for Suno AI, built on Python and Streamlit. It supports functions like generating music and obtaining music information. Users can set up multiple account information to be saved for use. The tool also features built-in maintenance and activation functions for tokens, eliminating concerns about token expiration. It supports multiple languages and allows users to upload pictures for generating songs based on image content analysis.

macOS-use

macOS-use is a project that enables AI agents to interact with a MacBook across any app. It aims to build an AI agent for the MLX by Apple framework to perform actions on Apple devices. The project is under active development and allows users to prompt the agent to perform various tasks on their MacBook. Users need to be cautious as the tool can interact with apps, UI components, and use private credentials. The project is open source and welcomes contributions from the community.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

ChatGPT

ChatGPT is a desktop application available on Mac, Windows, and Linux that provides a powerful AI wrapper experience. It allows users to interact with AI models for various tasks such as generating text, answering questions, and engaging in conversations. The application is designed to be user-friendly and accessible to both beginners and advanced users. ChatGPT aims to enhance the user experience by offering a seamless interface for leveraging AI capabilities in everyday scenarios.

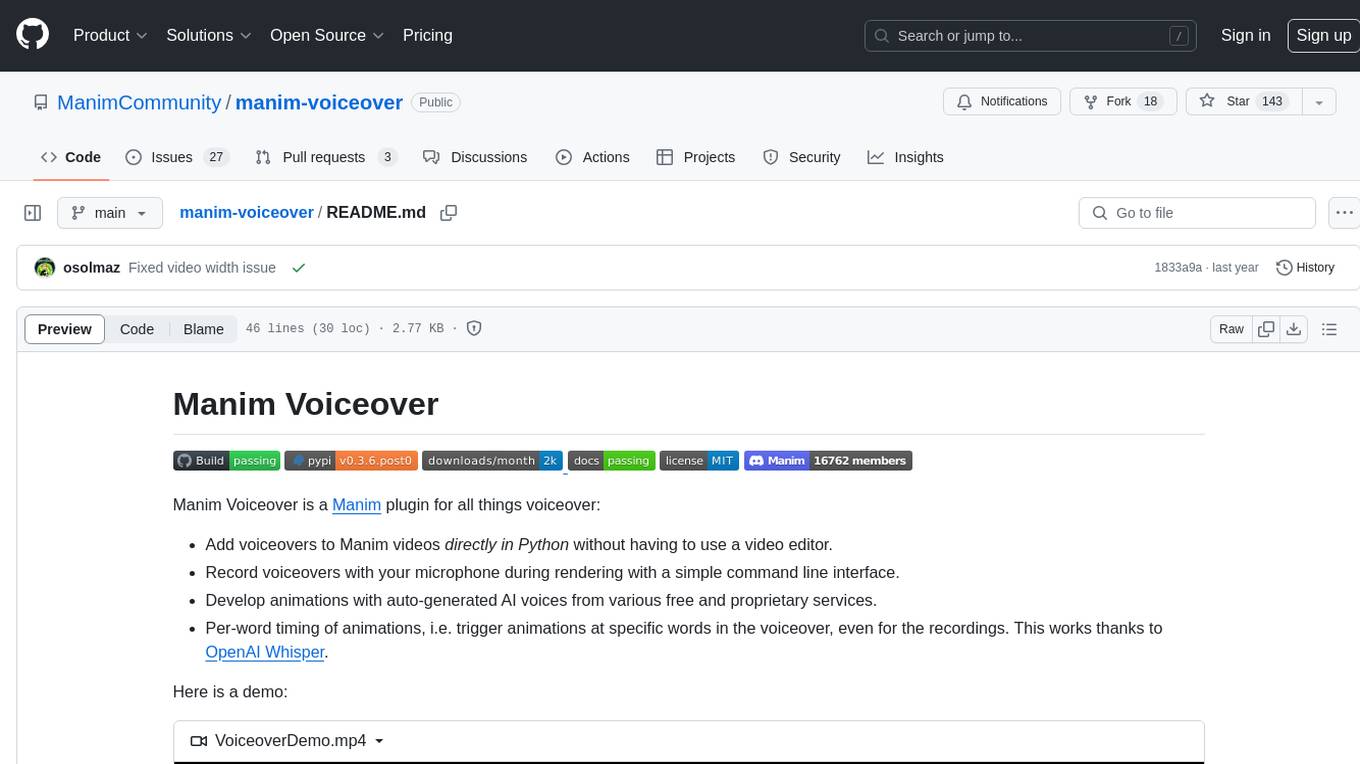

manim-voiceover

Manim Voiceover is a plugin for the Manim animation library that allows users to easily add voiceovers to their videos directly in Python without the need for a separate video editor. It also provides the ability to record voiceovers using a command line interface and supports auto-generated AI voices from various services. Users can trigger animations at specific words in the voiceover, thanks to OpenAI Whisper. The plugin supports TTS services such as Azure Text to Speech, Coqui TTS, gTTS, and pyttsx3. It also offers features for translating voiceovers into other languages using machine translation services like DeepL.

ai-chat-protocol

The Microsoft AI Chat Protocol SDK is a library for easily building AI Chat interfaces from services that follow the AI Chat Protocol API Specification. By agreeing on a standard API contract, AI backend consumption and evaluation can be performed easily and consistently across different services. It allows developers to develop AI chat interfaces, consume and evaluate AI inference backends, and incorporate HTTP middleware for logging and authentication.

mastra

Mastra is an opinionated Typescript framework designed to help users quickly build AI applications and features. It provides primitives such as workflows, agents, RAG, integrations, syncs, and evals. Users can run Mastra locally or deploy it to a serverless cloud. The framework supports various LLM providers, offers tools for building language models, workflows, and accessing knowledge bases. It includes features like durable graph-based state machines, retrieval-augmented generation, integrations, syncs, and automated tests for evaluating LLM outputs.

enterprise-commerce

Enterprise Commerce is a Next.js commerce starter that helps you launch your high-performance Shopify storefront in minutes, not weeks. It leverages the power of Vector Search and AI to deliver a superior online shopping experience without the development headaches.

NeMo-Agent-Toolkit

NVIDIA NeMo Agent toolkit is a flexible, lightweight, and unifying library that allows you to easily connect existing enterprise agents to data sources and tools across any framework. It is framework agnostic, promotes reusability, enables rapid development, provides profiling capabilities, offers observability features, includes an evaluation system, features a user interface for interaction, and supports the Model Context Protocol (MCP). With NeMo Agent toolkit, users can move quickly, experiment freely, and ensure reliability across all agent-driven projects.

moonshot

Moonshot is a simple and modular tool developed by the AI Verify Foundation to evaluate Language Model Models (LLMs) and LLM applications. It brings Benchmarking and Red-Teaming together to assist AI developers, compliance teams, and AI system owners in assessing LLM performance. Moonshot can be accessed through various interfaces including User-friendly Web UI, Interactive Command Line Interface, and seamless integration into MLOps workflows via Library APIs or Web APIs. It offers features like benchmarking LLMs from popular model providers, running relevant tests, creating custom cookbooks and recipes, and automating Red Teaming to identify vulnerabilities in AI systems.

llm-app

Pathway's LLM (Large Language Model) Apps provide a platform to quickly deploy AI applications using the latest knowledge from data sources. The Python application examples in this repository are Docker-ready, exposing an HTTP API to the frontend. These apps utilize the Pathway framework for data synchronization, API serving, and low-latency data processing without the need for additional infrastructure dependencies. They connect to document data sources like S3, Google Drive, and Sharepoint, offering features like real-time data syncing, easy alert setup, scalability, monitoring, security, and unification of application logic.

code-review-gpt

Code Review GPT uses Large Language Models to review code in your CI/CD pipeline. It helps streamline the code review process by providing feedback on code that may have issues or areas for improvement. It should pick up on common issues such as exposed secrets, slow or inefficient code, and unreadable code. It can also be run locally in your command line to review staged files. Code Review GPT is in alpha and should be used for fun only. It may provide useful feedback but please check any suggestions thoroughly.

For similar tasks

whatsapp-chatgpt

This repository contains a WhatsApp bot that utilizes OpenAI's GPT and DALL-E 2 to respond to user inputs. Users can interact with the bot through voice messages, which are transcribed and responded to. The bot requires Node.js, npm, an OpenAI API key, and a WhatsApp account. It uses Puppeteer to run a real instance of Whatsapp Web to avoid being blocked. However, there is a risk of being blocked by WhatsApp as it does not allow bots or unofficial clients on its platform. The bot is not free to use, and users will be charged by OpenAI for each request made.

MaxKB

MaxKB is a knowledge base Q&A system based on the LLM large language model. MaxKB = Max Knowledge Base, which aims to become the most powerful brain of the enterprise.

Large-Language-Models

Large Language Models (LLM) are used to browse the Wolfram directory and associated URLs to create the category structure and good word embeddings. The goal is to generate enriched prompts for GPT, Wikipedia, Arxiv, Google Scholar, Stack Exchange, or Google search. The focus is on one subdirectory: Probability & Statistics. Documentation is in the project textbook `Projects4.pdf`, which is available in the folder. It is recommended to download the document and browse your local copy with Chrome, Edge, or other viewers. Unlike on GitHub, you will be able to click on all the links and follow the internal navigation features. Look for projects related to NLP and LLM / xLLM. The best starting point is project 7.2.2, which is the core project on this topic, with references to all satellite projects. The project textbook (with solutions to all projects) is the core document needed to participate in the free course (deep tech dive) called **GenAI Fellowship**. For details about the fellowship, follow the link provided. An uncompressed version of `crawl_final_stats.txt.gz` is available on Google drive, which contains all the crawled data needed as input to the Python scripts in the XLLM5 and XLLM6 folders.

BlossomLM

BlossomLM is a series of open-source conversational large language models. This project aims to provide a high-quality general-purpose SFT dataset in both Chinese and English, making fine-tuning accessible while also providing pre-trained model weights. **Hint**: BlossomLM is a personal non-commercial project.

InternLM

InternLM is a powerful language model series with features such as 200K context window for long-context tasks, outstanding comprehensive performance in reasoning, math, code, chat experience, instruction following, and creative writing, code interpreter & data analysis capabilities, and stronger tool utilization capabilities. It offers models in sizes of 7B and 20B, suitable for research and complex scenarios. The models are recommended for various applications and exhibit better performance than previous generations. InternLM models may match or surpass other open-source models like ChatGPT. The tool has been evaluated on various datasets and has shown superior performance in multiple tasks. It requires Python >= 3.8, PyTorch >= 1.12.0, and Transformers >= 4.34 for usage. InternLM can be used for tasks like chat, agent applications, fine-tuning, deployment, and long-context inference.

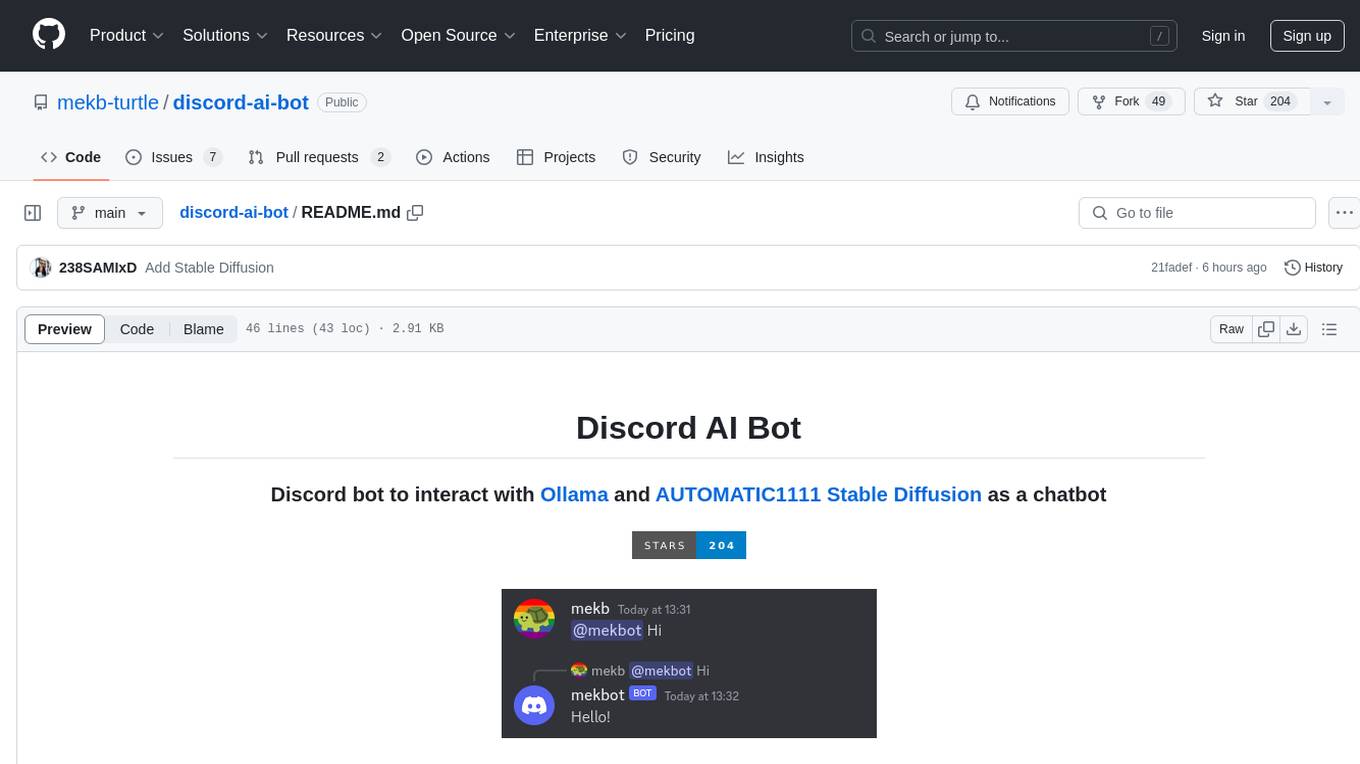

discord-ai-bot

Discord AI Bot is a chatbot designed to interact with Ollama and AUTOMATIC1111 Stable Diffusion on Discord. The project is now archived due to lack of maintenance. Users can set up the bot by installing Node.js, Ollama, and a model, creating a Discord bot, and starting the bot with the necessary configurations. Additionally, Docker setup instructions are provided for easy deployment. The bot can be interacted with by mentioning it in Discord messages.

J.A.R.V.I.S

J.A.R.V.I.S. is an offline large language model fine-tuned on custom and open datasets to mimic Jarvis's dialog with Stark. It prioritizes privacy by running locally and excels in responding like Jarvis with a similar tone. Current features include time/date queries, web searches, playing YouTube videos, and webcam image descriptions. Users can interact with Jarvis via command line after installing the model locally using Ollama. Future plans involve voice cloning, voice-to-text input, and deploying the voice model as an API.

airdcpp-webclient

AirDC++ Web Client is a locally installed application designed for flexible file sharing within groups over a local network or the internet. It utilizes the Advanced Direct Connect protocol to create file sharing communities with thousands of users. The application offers a responsive web user interface, allows sharing local directories, searching for shared files, saving files, chatting capabilities, browsing shared directories, extension support, and a web API for HTTP REST and WebSockets.

For similar jobs

zep

Zep is a long-term memory service for AI Assistant apps. With Zep, you can provide AI assistants with the ability to recall past conversations, no matter how distant, while also reducing hallucinations, latency, and cost. Zep persists and recalls chat histories, and automatically generates summaries and other artifacts from these chat histories. It also embeds messages and summaries, enabling you to search Zep for relevant context from past conversations. Zep does all of this asyncronously, ensuring these operations don't impact your user's chat experience. Data is persisted to database, allowing you to scale out when growth demands. Zep also provides a simple, easy to use abstraction for document vector search called Document Collections. This is designed to complement Zep's core memory features, but is not designed to be a general purpose vector database. Zep allows you to be more intentional about constructing your prompt: 1. automatically adding a few recent messages, with the number customized for your app; 2. a summary of recent conversations prior to the messages above; 3. and/or contextually relevant summaries or messages surfaced from the entire chat session. 4. and/or relevant Business data from Zep Document Collections.

doc2plan

doc2plan is a browser-based application that helps users create personalized learning plans by extracting content from documents. It features a Creator for manual or AI-assisted plan construction and a Viewer for interactive plan navigation. Users can extract chapters, key topics, generate quizzes, and track progress. The application includes AI-driven content extraction, quiz generation, progress tracking, plan import/export, assistant management, customizable settings, viewer chat with text-to-speech and speech-to-text support, and integration with various Retrieval-Augmented Generation (RAG) models. It aims to simplify the creation of comprehensive learning modules tailored to individual needs.

whatsapp-chatgpt

This repository contains a WhatsApp bot that utilizes OpenAI's GPT and DALL-E 2 to respond to user inputs. Users can interact with the bot through voice messages, which are transcribed and responded to. The bot requires Node.js, npm, an OpenAI API key, and a WhatsApp account. It uses Puppeteer to run a real instance of Whatsapp Web to avoid being blocked. However, there is a risk of being blocked by WhatsApp as it does not allow bots or unofficial clients on its platform. The bot is not free to use, and users will be charged by OpenAI for each request made.

OmniSteward

OmniSteward is an AI-powered steward system based on large language models that can interact with users through voice or text to help control smart home devices and computer programs. It supports multi-turn dialogue, tool calling for complex tasks, multiple LLM models, voice recognition, smart home control, computer program management, online information retrieval, command line operations, and file management. The system is highly extensible, allowing users to customize and share their own tools.

chatgpt-wechat

ChatGPT-WeChat is a personal assistant application that can be safely used on WeChat through enterprise WeChat without the risk of being banned. The project is open source and free, with no paid sections or external traffic operations except for advertising on the author's public account '积木成楼'. It supports various features such as secure usage on WeChat, multi-channel customer service message integration, proxy support, session management, rapid message response, voice and image messaging, drawing capabilities, private data storage, plugin support, and more. Users can also develop their own capabilities following the rules provided. The project is currently in development with stable versions available for use.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

Gmail-MCP-Server

Gmail AutoAuth MCP Server is a Model Context Protocol (MCP) server designed for Gmail integration in Claude Desktop. It supports auto authentication and enables AI assistants to manage Gmail through natural language interactions. The server provides comprehensive features for sending emails, reading messages, managing labels, searching emails, and batch operations. It offers full support for international characters, email attachments, and Gmail API integration. Users can install and authenticate the server via Smithery or manually with Google Cloud Project credentials. The server supports both Desktop and Web application credentials, with global credential storage for convenience. It also includes Docker support and instructions for cloud server authentication.

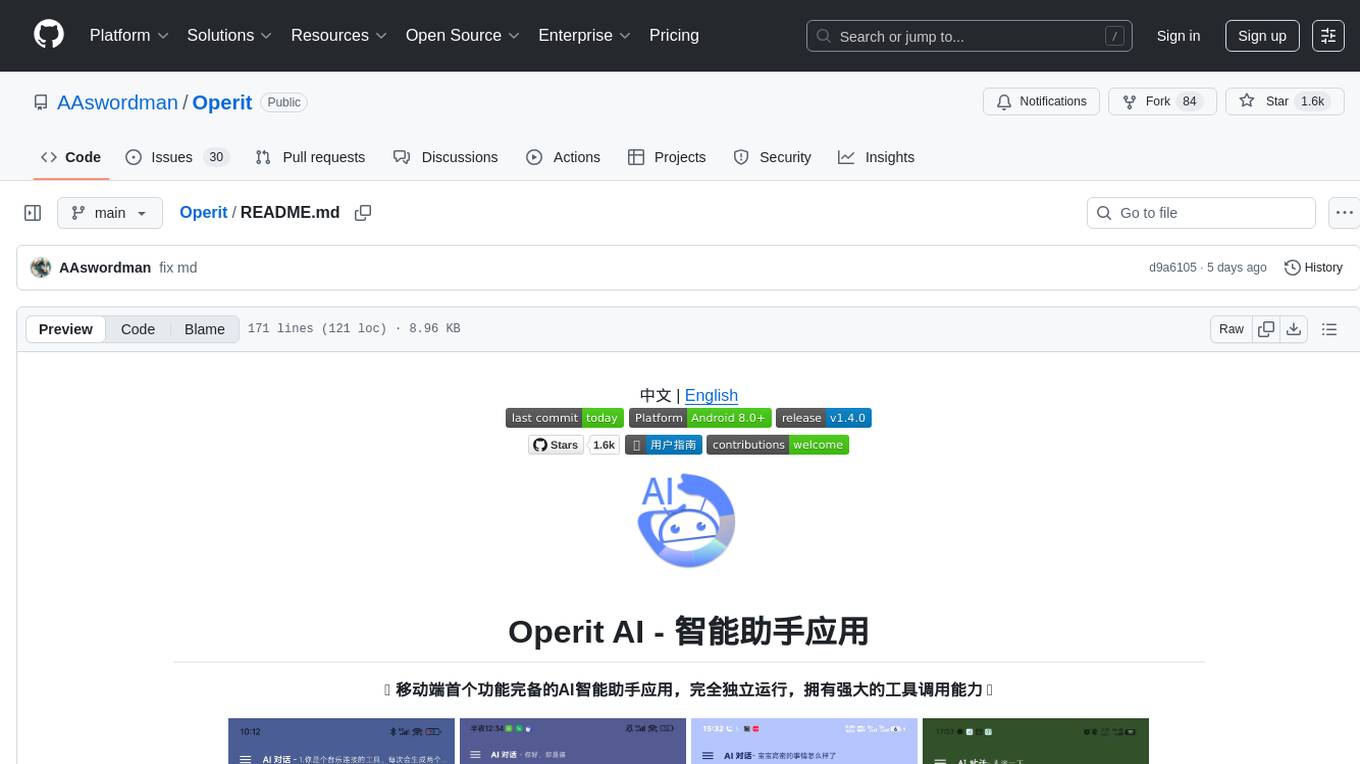

Operit

Operit AI is a fully functional AI assistant application for mobile devices, running independently on Android devices with powerful tool invocation capabilities. It offers over 40 built-in tools for file system operations, HTTP requests, system operations, UI automation, and media processing. The app combines these tools with rich plugins to enable a wide range of tasks, from simple to complex, providing a comprehensive experience of a smartphone AI assistant.