macOS-use

Make Mac apps accessible for AI agents

Stars: 475

macOS-use is a project that enables AI agents to interact with a MacBook across any app. It aims to build an AI agent for the MLX by Apple framework to perform actions on Apple devices. The project is under active development and allows users to prompt the agent to perform various tasks on their MacBook. Users need to be cautious as the tool can interact with apps, UI components, and use private credentials. The project is open source and welcomes contributions from the community.

README:

macOS-use enables AI agents to interact with your MacBook. See it in action!

pip install mlx-use

Clone first

git clone https://github.com/browser-use/macOS-use.git && cd macOS-use

Don't forget API key

Supported providers: OpenAI, Anthropic, or Gemini (DeepSeek R1 coming soon!)

At the moment, macOS-use works best with the OpenAI or Anthropic API. Gemini is free and also works well, but it is less reliable.
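Once a `.env` file exists in the repository root, a quick check like the one below can confirm which provider keys are filled in before running an example. This is a minimal sketch, not part of macOS-use: the variable names `OPENAI_API_KEY`, `ANTHROPIC_API_KEY`, and `GEMINI_API_KEY` are assumptions, so compare them with the names actually listed in `.env.example`. The parser only handles plain `KEY=VALUE` lines.

```python
from pathlib import Path

# Assumed variable names; verify them against .env.example in the repository.
PROVIDER_KEYS = {
    "OpenAI": "OPENAI_API_KEY",
    "Anthropic": "ANTHROPIC_API_KEY",
    "Gemini": "GEMINI_API_KEY",
}


def read_env_file(path=".env"):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in Path(path).read_text().splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def configured_providers(values):
    """Return provider names whose key variable has a non-empty value."""
    return [name for name, var in PROVIDER_KEYS.items() if values.get(var)]


if __name__ == "__main__":
    env_path = Path(".env")
    if env_path.exists():
        found = configured_providers(read_env_file(env_path))
        print("Configured providers:", ", ".join(found) if found else "none")
    else:
        print("No .env file found in the current directory.")
```

Run it from the repository root. If it reports no configured providers, the examples will most likely fail when they try to reach a model.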

cp .env.example .env
open ./.env

We recommend using macOS-use with a uv environment

brew install uv && uv venv && source .venv/bin/activate

Install locally and you're good to go! Try the first example!

uv pip install --editable . && python examples/try.py

Try prompting it with

open the calculator app

prompt: Calculate how much is 5 X 4 and return the result, then call done.

python examples/calculate.py

prompt: Go to auth0.com, sign in with google auth, choose ofiroz91 gmail account, login to the website and call done when you finish.

python examples/login_to_auth0.py

prompt: Can you check what hour is Shabbat in israel today? call done when you finish.

python examples/check_time_online.py

TLDR: Tell every Apple device what to do, and see it done, on EVERY APP.

This project aims to build the AI agent for the MLX by Apple framework that would allow the agent to perform any action on any Apple device. Our final goal is an open-source tool that anyone can clone, powered by mlx and mlx-vlm to run local, private inference at zero cost.

- Support MacBooks at SOTA reliability
  - [ ] Refine the agent prompting.
  - [ ] Release the first working version to PyPI.
  - [ ] Improve self-correction.
  - [x] Add the ability to check which apps the machine has installed.
  - [x] Add a feature that lets the agent check existing apps when it fails, e.g. the Calendar app's actual name is iCal.
  - [ ] Add an action for the agent to ask the user for input.
  - [ ] Test, test, test! And let us know what to improve and how.
  - [ ] Make tasks cheaper and more efficient.
- Support local inference with a small fine-tuned model
  - [ ] Add support for inference with local models using mlx and mlx-vlm.
  - [ ] Fine-tune a small model that every device can run inference with.
  - [ ] SOTA reliability.
- Support iPhone/iPad
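The roadmap item about checking which apps the machine has installed could be approached roughly as follows. This is a minimal sketch under stated assumptions, not the project's implementation: the function names `installed_apps` and `find_app` are hypothetical, and the sketch only scans the top level of `/Applications` and `/System/Applications` for `.app` bundles.

```python
from pathlib import Path


def installed_apps(app_dirs=("/Applications", "/System/Applications")):
    """Return sorted names of .app bundles found directly in the given folders."""
    names = set()
    for folder in app_dirs:
        root = Path(folder).expanduser()
        if not root.is_dir():
            continue  # Skip folders that do not exist on this machine.
        for entry in root.iterdir():
            if entry.suffix == ".app":
                names.add(entry.stem)  # "Calculator.app" -> "Calculator"
    return sorted(names)


def find_app(query, names):
    """Case-insensitive substring match of a requested app name against a list."""
    q = query.lower()
    return [name for name in names if q in name.lower()]


if __name__ == "__main__":
    apps = installed_apps()
    print(f"Found {len(apps)} apps")
    print("Matches for 'cal':", find_app("cal", apps))
```

A lookup like this gives an agent a fallback when the app name in a prompt does not match the bundle name exactly: it can list the close matches and retry with one of them.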

This project is still under development and user discretion is advised! macOS-use can and will log in, use your private credentials, auth services, or stored passwords to complete its task, and launch and interact WITH EVERY APP and UI component on your MacBook. Restrictions on the model are still under active development! It is not recommended to operate it unsupervised YET. macOS-use WILL NOT STOP at CAPTCHAs or any other form of bot identification, so once again, user discretion is advised.

As this is an early-stage release, you might experience varying success rates depending on the task prompt. We're actively working on improvements.

Talk to me on X/Twitter or contact me on Discord. Your input is crucial and highly valuable!

We are a new project and would love contributors! Feel free to open a PR, or to open issues for bugs or feature requests.

I would like to extend my heartfelt thanks to

Alternative AI tools for macOS-use

Similar Open Source Tools

Sentient

Sentient is a personal, private, and interactive AI companion developed by Existence. The project aims to build a completely private AI companion that is deeply personalized and context-aware of the user. It utilizes automation and privacy to create a true companion for humans. The tool is designed to remember information about the user and use it to respond to queries and perform various actions. Sentient features a local and private environment, MBTI personality test, integrations with LinkedIn, Reddit, and more, self-managed graph memory, web search capabilities, multi-chat functionality, and auto-updates for the app. The project is built using technologies like ElectronJS, Next.js, TailwindCSS, FastAPI, Neo4j, and various APIs.

Ollama-SwiftUI

Ollama-SwiftUI is a user-friendly interface for Ollama.ai created in Swift. It allows seamless chatting with local Large Language Models on Mac. Users can change models mid-conversation, restart conversations, send system prompts, and use multimodal models with image + text. The app supports managing models, including downloading, deleting, and duplicating them. It offers light and dark mode, multiple conversation tabs, and a localized interface in English and Arabic.

Kuebiko

Kuebiko is a Twitch Chat Bot that reads twitch chat and generates text-to-speech responses using Google Cloud API and OpenAI's GPT-3 text completion model. It allows users to set up their own VTuber AI similar to 'Neuro-Sama'. The project is built with Python and requires setting up various API keys and configurations to enable the bot functionality. Users can customize the voice of their VTuber and route audio using VBAudio Cable. Kuebiko provides a unique way to interact with viewers through chat responses and captions in OBS.

SolidGPT

SolidGPT is an AI searching assistant for developers that helps with code and workspace semantic search. It provides features such as talking to your codebase, asking questions about your codebase, semantic search and summary in Notion, and getting questions answered from your codebase and Notion without context switching. The tool ensures data safety by not collecting users' data and uses the OpenAI series model API.

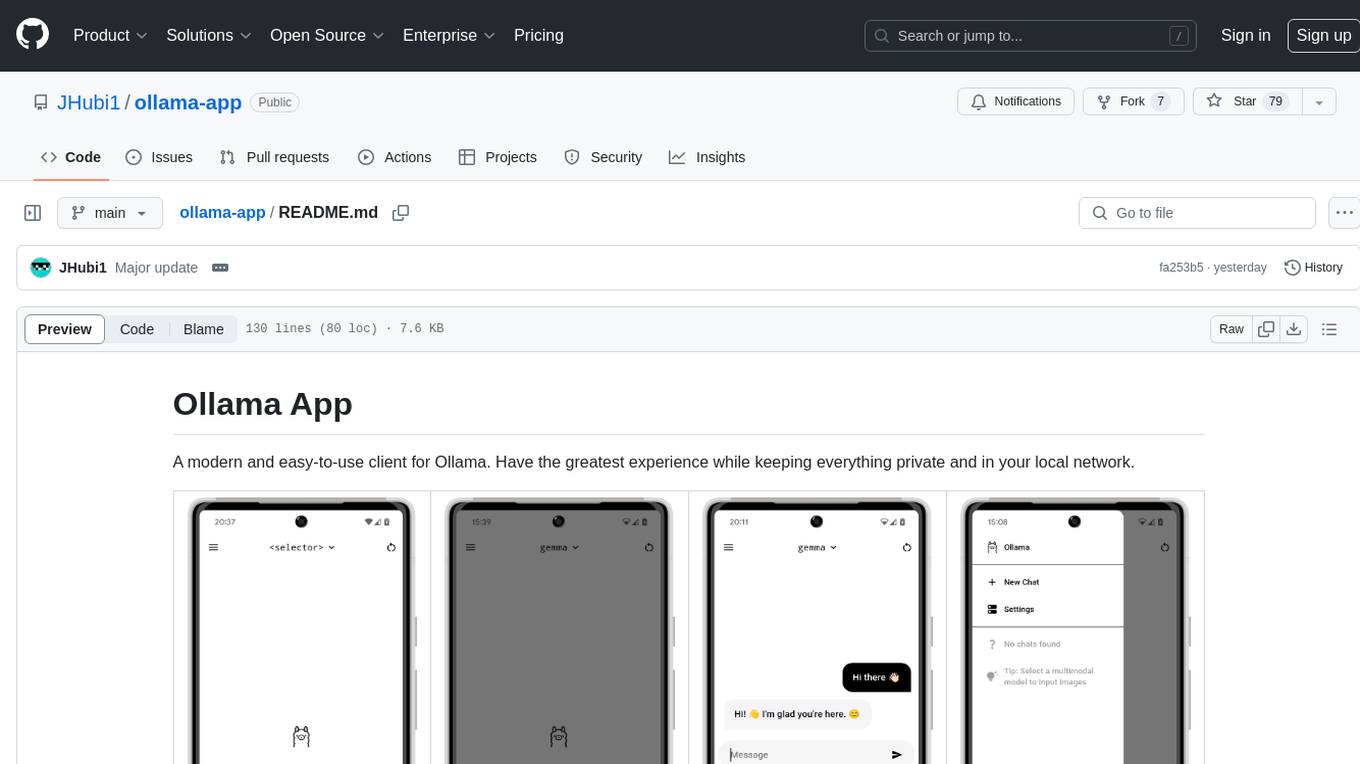

ollama-app

Ollama App is a modern and easy-to-use client for Ollama, allowing users to have a private experience within their local network. The app connects to an Ollama server using its API endpoint, enabling users to chat and interact with various models. It supports multimodal model input, a multilingual interface, and custom builds for personalized experiences. Users can easily set up the app, navigate through the side menu, select models, and create custom builds to tailor the app to their needs.

FlowTest

FlowTestAI is the world’s first GenAI powered OpenSource Integrated Development Environment (IDE) designed for crafting, visualizing, and managing API-first workflows. It operates as a desktop app, interacting with the local file system, ensuring privacy and enabling collaboration via version control systems. The platform offers platform-specific binaries for macOS, with versions for Windows and Linux in development. It also features a CLI for running API workflows from the command line interface, facilitating automation and CI/CD processes.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

CyberScraper-2077

CyberScraper 2077 is an advanced web scraping tool powered by AI, designed to extract data from websites with precision and style. It offers a user-friendly interface, supports multiple data export formats, operates in stealth mode to avoid detection, and promises lightning-fast scraping. The tool respects ethical scraping practices, including robots.txt and site policies. With upcoming features like proxy support and page navigation, CyberScraper 2077 is a futuristic solution for data extraction in the digital realm.

ai

Leverage AI to generate pull request descriptions based on the diff & commit messages. Install the Chrome Extension to get started. The project uses Node.js and NPM. It provides developer documentation and usage guide. The extension can be installed on Chromium-based browsers by loading the unpacked `dist` directory. The core team includes Brian Douglas, Divyansh Singh, and Anush Shetty. Contributors can open issues and find good first issues in the Discord channel. The project uses @open-sauced/conventional-commit for commit utility and semantic-release for generating changelogs and releases. Join the community in Discord, watch videos on the YouTube Channel, and find resources on the Dev.to org. Licensed under MIT © Open Sauced.

gpt-pilot

GPT Pilot is a core technology for the Pythagora VS Code extension, aiming to provide the first real AI developer companion. It goes beyond autocomplete, helping with writing full features, debugging, issue discussions, and reviews. The tool utilizes LLMs to generate production-ready apps, with developers overseeing the implementation. GPT Pilot works step by step like a developer, debugging issues as they arise. It can work at any scale, filtering out code to show only relevant parts to the AI during tasks. Contributions are welcome, with debugging and telemetry being key areas of focus for improvement.

shadcn-nextjs-boilerplate

Horizon AI Boilerplate is an open-source Admin Dashboard template designed for Shadcn UI, NextJS, and Tailwind CSS. It provides over 30 dark/light frontend elements for creating Chat AI SaaS Apps quickly. The documentation is detailed and complex, guiding users through installation and usage. Users can start their local server with simple commands. The tool requires a valid OpenAI API key for ChatGPT functionality. Additionally, a Figma version is available for design purposes. The PRO version offers more components and pages. Users can report issues on GitHub and connect with the community via Discord. The tool credits open-source resources like Shadcn UI Library, NextJS Subscription Payments, and ChatBot UI by mckaywrigley.

langchainjs-quickstart-demo

Discover the journey of building a generative AI application using LangChain.js and Azure. This demo explores the development process from idea to production, using a RAG-based approach for a Q&A system based on YouTube video transcripts. The application lets users ask text-based questions about a YouTube video and uses the transcript of the video to generate responses. The code comes in two versions: a local prototype using FAISS and Ollama with the Llama 3 model for completion and all-minilm-l6-v2 for embeddings, and an Azure cloud version using Azure AI Search and the GPT-4 Turbo model for completion and text-embedding-3-large for embeddings. Either version can be run as an API using the Azure Functions runtime.

code-review-gpt

Code Review GPT uses Large Language Models to review code in your CI/CD pipeline. It helps streamline the code review process by providing feedback on code that may have issues or areas for improvement. It should pick up on common issues such as exposed secrets, slow or inefficient code, and unreadable code. It can also be run locally in your command line to review staged files. Code Review GPT is in alpha and should be used for fun only. It may provide useful feedback but please check any suggestions thoroughly.

chatgpt-ai-template

Horizon ChatGPT AI Template is an open-source, free ChatGPT AI Admin Template for NextJS & React. It is built with React, NextJS, and Chakra UI, offering over 30 dark/light frontend elements for creating outstanding Chat AI SaaS Apps faster. The template comes with detailed documentation and example pages to help users get started quickly. Users need to have a valid OpenAI account and API key to use ChatGPT. The template is available in both Free and PRO versions, with a Figma version also available. Users can connect with the community through the #HorizonUI Discord Community.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in its N card (NVIDIA GPU) version. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It connects to bilibili live broadcasts to reply to barrage comments and to greet viewers who enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It also supports expression control with Vtuber Studio, painting with stable-diffusion-webui output to an OBS live broadcast room, NSFW detection for generated pictures (public-NSFW-y-distinguish), search and image search through duckduckgo (requires "magic" Internet access), and image search through Baidu (no "magic" Internet access required). Other features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, an automatic looping sway during chat and singing, multi-scene switching, background music switching, automatic day and night scene switching, and open-ended singing and painting where the AI judges the content automatically.