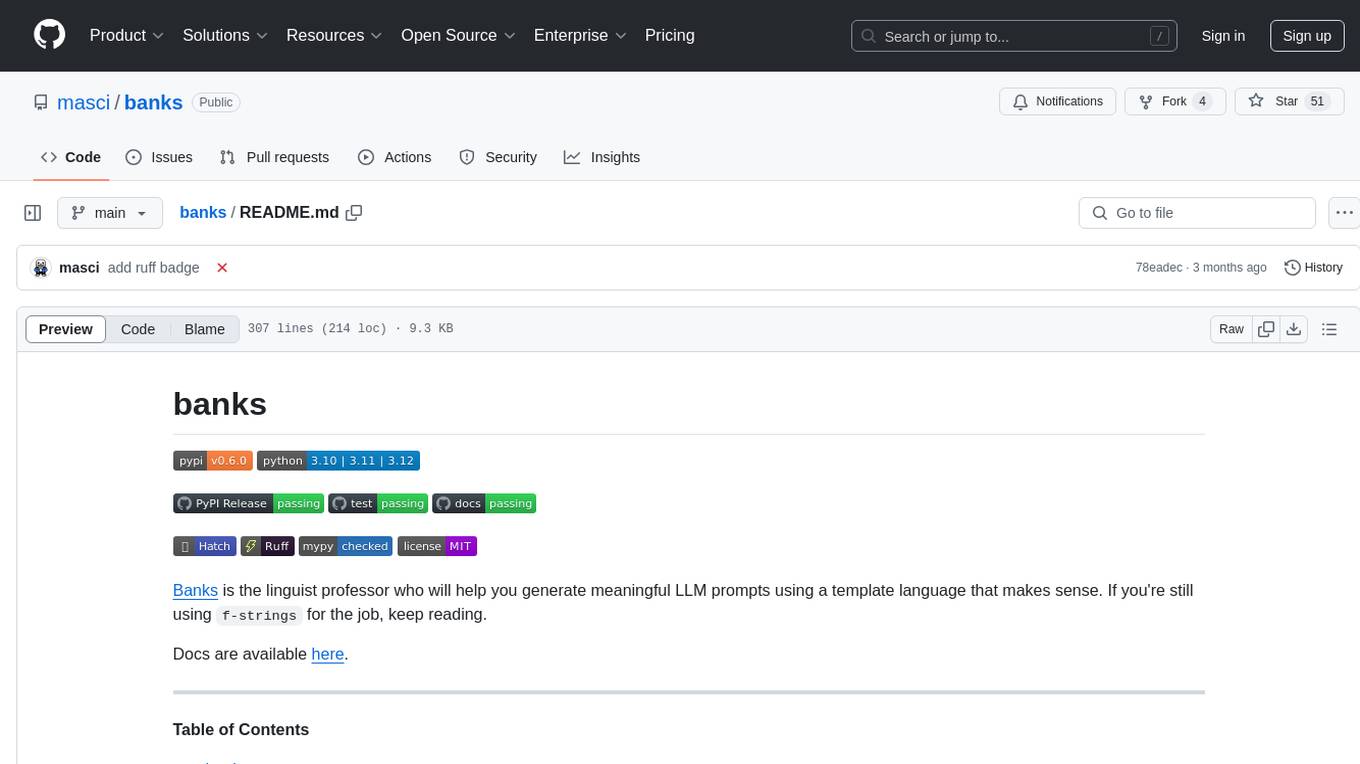

banks

LLM prompt language based on Jinja. Banks provides tools and functions to build prompt text and chat messages from generic blueprints. It allows attaching metadata to prompts to ease their management, and versioning is a first-class citizen. Banks provides ways to store prompts on disk along with their metadata.

Stars: 88

Banks is a linguist professor tool that helps generate meaningful LLM prompts using a template language. It provides a user-friendly way to create prompts for various tasks such as blog writing, summarizing documents, lemmatizing text, and generating text using an LLM. The tool supports async operations and comes with predefined filters for data processing. Banks leverages Jinja's macro system to create prompts and interact with the OpenAI API for text generation. It also offers a cache mechanism to avoid regenerating text for the same template and context.

README:

Banks is the linguist professor who will help you generate meaningful LLM prompts using a template language that makes sense. If you're still using f-strings for the job, keep reading.

Docs are available here.

    pip install banks

    # install optional deps; litellm, redis
    pip install "banks[all]"

Prompts are instrumental to the success of any LLM application, and Banks focuses on specific areas of their lifecycle:

- 📙 Templating: Banks provides tools and functions to build prompt text and chat messages from generic blueprints.

- 🎟️ Versioning and metadata: Banks supports attaching metadata to prompts to ease their management, and versioning is a first-class citizen.

- 🗄️ Management: Banks provides ways to store prompts on disk along with their metadata.
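
As a rough illustration of the management and versioning ideas listed above, the sketch below stores a template together with its metadata as one JSON file per version. The `StoredPrompt` class, the `save_prompt` and `load_prompt` helpers, and the file naming scheme are all hypothetical and use only the standard library; this is not the on-disk format Banks itself uses.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class StoredPrompt:
    """Hypothetical record: a template plus the metadata used to manage it."""

    name: str
    version: str
    template: str
    metadata: dict = field(default_factory=dict)


def save_prompt(directory: Path, prompt: StoredPrompt) -> Path:
    """Write one JSON file per (name, version) pair and return its path."""
    path = directory / f"{prompt.name}.{prompt.version}.json"
    path.write_text(json.dumps(asdict(prompt), indent=2), encoding="utf-8")
    return path


def load_prompt(directory: Path, name: str, version: str) -> StoredPrompt:
    """Read a stored prompt back from disk."""
    path = directory / f"{name}.{version}.json"
    return StoredPrompt(**json.loads(path.read_text(encoding="utf-8")))


# Round trip through a temporary directory (assumed layout, for illustration only).
with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp)
    save_prompt(store, StoredPrompt("blog", "1", "Write about {{ topic }}", {"author": "me"}))
    loaded = load_prompt(store, "blog", "1")

print(loaded.version, loaded.metadata)
```

Keeping the version in the file name lets several versions of the same prompt sit side by side, which is one simple way to make versioning explicit.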

For a more extensive set of code examples, see the documentation page.

You'll find yourself feeding an LLM a list of chat messages instead of plain text more often than not. Banks will help you remove the boilerplate by defining the messages already at the prompt level.

    from banks import Prompt

    prompt_template = """
    {% chat role="system" %}
    You are a {{ persona }}.
    {% endchat %}

    {% chat role="user" %}
    Hello, how are you?
    {% endchat %}
    """

    p = Prompt(prompt_template)
    print(p.chat_messages({"persona": "helpful assistant"}))
    # Output:
    # [
    #   ChatMessage(role='system', content='You are a helpful assistant.\n'),
    #   ChatMessage(role='user', content='Hello, how are you?\n')
    # ]

If you're working with a multimodal model, you can include images directly in the prompt, and Banks will do the job needed to upload them when rendering the chat messages:

    import litellm

    from banks import Prompt

    prompt_template = """
    {% chat role="user" %}
    Guess where is this place.
    {{ picture | image }}
    {%- endchat %}
    """

    pic_url = (
        "https://upload.wikimedia.org/wikipedia/commons/thumb/4/4d/CorcianoMar302024_01.jpg/1079px-CorcianoMar302024_01.jpg"
    )
    # Alternatively, load the image from disk
    # pic_url = "/Users/massi/Downloads/CorcianoMar302024_01.jpg"

    p = Prompt(prompt_template)
    as_dict = [msg.model_dump(exclude_none=True) for msg in p.chat_messages({"picture": pic_url})]
    r = litellm.completion(model="gpt-4-vision-preview", messages=as_dict)

    print(r.choices[0].message.content)

Sometimes it might be useful to ask another LLM to generate examples for you in a few-shot prompt. Provided you have a valid OpenAI API key stored in an env var called `OPENAI_API_KEY`, you can ask Banks to do something like this (note we can annotate the prompt using comments: anything within `{# ... #}` will be removed from the final prompt):

    from banks import Prompt

    prompt_template = """
    {% set examples %}
    {% completion model="gpt-3.5-turbo-0125" %}
      {% chat role="system" %}You are a helpful assistant{% endchat %}
      {% chat role="user" %}Generate a bullet list of 3 tweets with a positive sentiment.{% endchat %}
    {% endcompletion %}
    {% endset %}

    {# output the response content #}
    Generate a tweet about the topic {{ topic }} with a positive sentiment.
    Examples:
    {{ examples }}
    """

    p = Prompt(prompt_template)
    print(p.text({"topic": "climate change"}))

The output would be something similar to the following:

    Generate a tweet about the topic climate change with a positive sentiment.
    Examples:
    - "Feeling grateful for the sunshine today! 🌞 #thankful #blessed"
    - "Just had a great workout and feeling so energized! 💪 #fitness #healthyliving"
    - "Spent the day with loved ones and my heart is so full. 💕 #familytime #grateful"

> [!IMPORTANT]
> The `completion` extension uses LiteLLM under the hood, and provided you have the proper environment variables set, you can use any model from the supported model providers.

> [!NOTE]
> Banks uses a cache to avoid generating text again for the same template with the same context. By default the cache is in-memory but it can be customized.
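
To make the caching idea concrete, here is a minimal sketch of an in-memory cache keyed by the template text and the rendering context. The `RenderCache` class and the `fake_llm` stand-in are hypothetical names invented for this example; they only illustrate the general technique and are not Banks' actual cache implementation or API.

```python
import hashlib
import json


class RenderCache:
    """Hypothetical in-memory cache keyed by template text and context."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def _key(template: str, context: dict) -> str:
        # Sort keys so that equal contexts always produce the same key.
        payload = json.dumps({"template": template, "context": context}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_generate(self, template: str, context: dict, generate):
        """Return the cached text, calling `generate` only on a cache miss."""
        key = self._key(template, context)
        if key not in self._store:
            self._store[key] = generate(template, context)
        return self._store[key]


calls = []


def fake_llm(template: str, context: dict) -> str:
    """Stand-in for an expensive LLM call; records each invocation."""
    calls.append(context)
    return f"generated text for {context['topic']}"


cache = RenderCache()
first = cache.get_or_generate("Tweet about {{ topic }}", {"topic": "climate"}, fake_llm)
second = cache.get_or_generate("Tweet about {{ topic }}", {"topic": "climate"}, fake_llm)

print(first == second, len(calls))  # prints: True 1
```

The second call returns the stored text without invoking the generator again, which is the behaviour the note describes for identical template and context pairs.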

Banks provides a filter, `tool`, that converts a callable passed to a prompt into an LLM function call. Docstrings are used to describe the tool and its arguments, and during prompt rendering Banks performs all the LLM round trips needed when the model wants to use a tool within a `{% completion %}` block. For example:

    import platform

    from banks import Prompt


    def get_laptop_info():
        """Get information about the user laptop.

        For example, it returns the operating system and version, along with hardware and network specs."""
        return str(platform.uname())


    p = Prompt("""
    {% set response %}
    {% completion model="gpt-3.5-turbo-0125" %}
        {% chat role="user" %}{{ query }}{% endchat %}
        {{ get_laptop_info | tool }}
    {% endcompletion %}
    {% endset %}

    {# the variable 'response' contains the result #}

    {{ response }}
    """)

    print(p.text({"query": "Can you guess the name of my laptop?", "get_laptop_info": get_laptop_info}))
    # Output:
    # Based on the information provided, the name of your laptop is likely "MacGiver."

Several inference providers support prompt caching to save time and costs, and Anthropic in particular offers fine-grained control over the parts of the prompt that we want to cache. With Banks this is as simple as using a template filter:

    from banks import Prompt

    prompt_template = """
    {% chat role="user" %}
    Analyze this book:

    {# Only this part of the chat message (the book content) will be cached #}
    {{ book | cache_control("ephemeral") }}

    What is the title of this book? Only output the title.
    {% endchat %}
    """

    p = Prompt(prompt_template)
    print(p.chat_messages({"book":"This is a short book!"}))

    # Output:
    # [
    #   ChatMessage(role='user', content=[
    #      ContentBlock(type='text', text='Analyze this book:\n\n'),
    #      ContentBlock(type='text', cache_control=CacheControl(type='ephemeral'), text='This is a short book!'),
    #      ContentBlock(type='text', text='\n\nWhat is the title of this book? Only output the title.\n')
    #   ])
    # ]

The output of `p.chat_messages()` can be fed to the Anthropic client directly.
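
For reference, the structure above corresponds to a message whose content is a list of text blocks, with only the cached block carrying a `cache_control` entry. The sketch below builds that shape by hand with plain dictionaries; the `text_block` helper is a hypothetical name used only for illustration, and no request is sent to any API.

```python
def text_block(text, cache=False):
    """Build one text content block; mark it cacheable when `cache` is True."""
    block = {"type": "text", "text": text}
    if cache:
        # Only the blocks flagged here are candidates for provider-side caching.
        block["cache_control"] = {"type": "ephemeral"}
    return block


book = "This is a short book!"
message = {
    "role": "user",
    "content": [
        text_block("Analyze this book:\n\n"),
        text_block(book, cache=True),
        text_block("\n\nWhat is the title of this book? Only output the title.\n"),
    ],
}

# Collect the blocks that carry a cache marker.
cached = [b for b in message["content"] if "cache_control" in b]
print(len(message["content"]), cached[0]["text"])
```

Splitting the message into three blocks is what allows the large, stable part (the book) to be cached while the surrounding instructions stay free to change.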

We can get the same result as the previous example by loading the prompt template from a registry instead of hardcoding it into the Python code. For convenience, Banks comes with a few registry types you can use to store your templates. For example, the `DirectoryTemplateRegistry` can load templates from a directory in the file system. Suppose you have a folder called `templates` in the current path, and the folder contains a file called `blog.jinja`. You can load the prompt template like this:

    from pathlib import Path

    from banks import Prompt
    from banks.registries import DirectoryTemplateRegistry

    # The "templates" folder described above, relative to the current path
    populated_dir = Path("templates")

    registry = DirectoryTemplateRegistry(populated_dir)
    prompt = registry.get(name="blog")

    print(prompt.text({"topic": "retrogame computing"}))

To run Banks within an asyncio loop you have to do two things:

- Set the environment variable `BANKS_ASYNC_ENABLED=true`.

- Use the `AsyncPrompt` class, which has an awaitable `text` method.

Example:

    import asyncio

    from banks import AsyncPrompt


    async def main():
        p = AsyncPrompt("Write a blog article about the topic {{ topic }}")
        result = await p.text({"topic": "AI frameworks"})
        print(result)


    asyncio.run(main())

Contributions are very welcome; the CONTRIBUTING.md file contains all the details about how to contribute.

banks is distributed under the terms of the MIT license.

Alternative AI tools for banks

Similar Open Source Tools

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

langchainrb

Langchain.rb is a Ruby library that makes it easy to build LLM-powered applications. It provides a unified interface to a variety of LLMs, vector search databases, and other tools, making it easy to build and deploy RAG (Retrieval Augmented Generation) systems and assistants. Langchain.rb is open source and available under the MIT License.

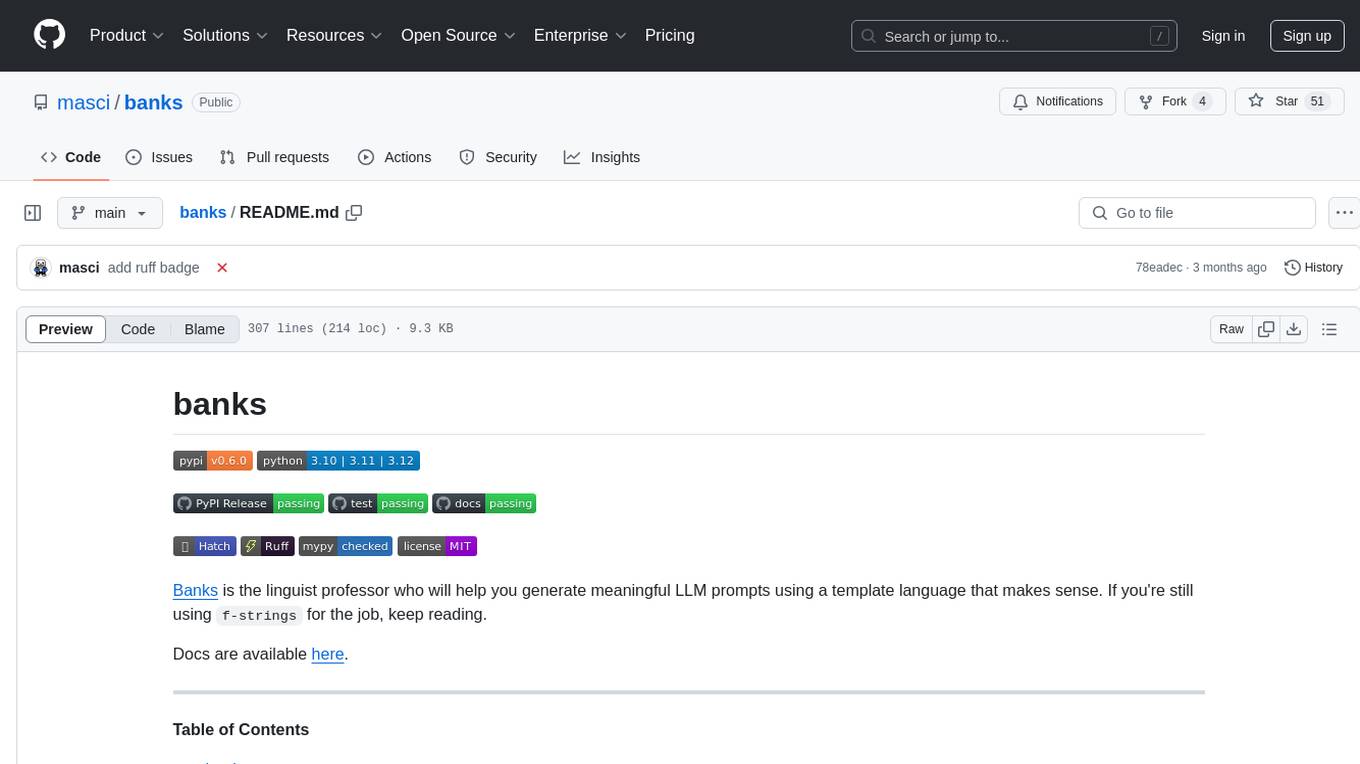

langchain-decorators

LangChain Decorators is a layer on top of LangChain that provides syntactic sugar for writing custom langchain prompts and chains. It offers a more pythonic way of writing code, multiline prompts without breaking code flow, IDE support for hinting and type checking, leveraging LangChain ecosystem, support for optional parameters, and sharing parameters between prompts. It simplifies streaming, automatic LLM selection, defining custom settings, debugging, and passing memory, callback, stop, etc. It also provides functions provider, dynamic function schemas, binding prompts to objects, defining custom settings, and debugging options. The project aims to enhance the LangChain library by making it easier to use and more efficient for writing custom prompts and chains.

Lumos

Lumos is a Chrome extension powered by a local LLM co-pilot for browsing the web. It allows users to summarize long threads, news articles, and technical documentation. Users can ask questions about reviews and product pages. The tool requires a local Ollama server for LLM inference and embedding database. Lumos supports multimodal models and file attachments for processing text and image content. It also provides options to customize models, hosts, and content parsers. The extension can be easily accessed through keyboard shortcuts and offers tools for automatic invocation based on prompts.

gitleaks

Gitleaks is a tool for detecting secrets like passwords, API keys, and tokens in git repos, files, and whatever else you wanna throw at it via stdin. It can be installed using Homebrew, Docker, or Go, and is available in binary form for many popular platforms and OS types. Gitleaks can be implemented as a pre-commit hook directly in your repo or as a GitHub action. It offers scanning modes for git repositories, directories, and stdin, and allows creating baselines for ignoring old findings. Gitleaks also provides configuration options for custom secret detection rules and supports features like decoding encoded text and generating reports in various formats.

memobase

Memobase is a user profile-based memory system designed to enhance Generative AI applications by enabling them to remember, understand, and evolve with users. It provides structured user profiles, scalable profiling, easy integration with existing LLM stacks, batch processing for speed, and is production-ready. Users can manage users, insert data, get memory profiles, and track user preferences and behaviors. Memobase is ideal for applications that require user analysis, tracking, and personalized interactions.

ragtacts

Ragtacts is a Clojure library that allows users to easily interact with Large Language Models (LLMs) such as OpenAI's GPT-4. Users can ask questions to LLMs, create question templates, call Clojure functions in natural language, and utilize vector databases for more accurate answers. Ragtacts also supports the RAG (Retrieval-Augmented Generation) method for enhancing LLM output by incorporating external data. Users can use Ragtacts as a CLI tool, API server, or through a RAG Playground for interactive querying.

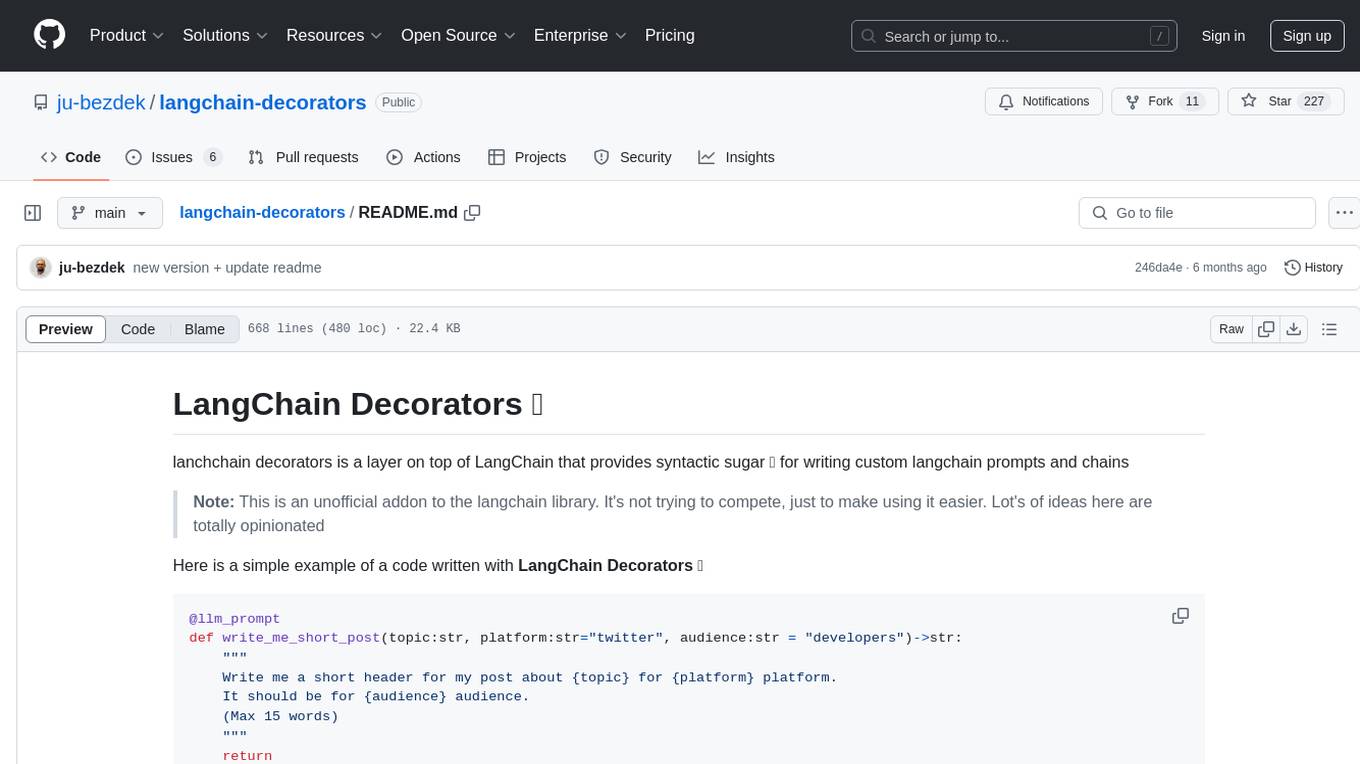

parea-sdk-py

Parea AI provides an SDK to evaluate and monitor AI applications. It allows users to test, evaluate, and monitor their AI models by defining and running experiments. The SDK also enables logging and observability for AI applications, as well as deploying prompts to facilitate collaboration between engineers and subject-matter experts. Users can automatically log calls to OpenAI and Anthropic, create hierarchical traces of their applications, and deploy prompts for integration into their applications.

openai

An open-source client package that allows developers to easily integrate the power of OpenAI's state-of-the-art AI models into their Dart/Flutter applications. The library provides simple and intuitive methods for making requests to OpenAI's various APIs, including the GPT-3 language model, DALL-E image generation, and more. It is designed to be lightweight and easy to use, enabling developers to focus on building their applications without worrying about the complexities of dealing with HTTP requests. Note that this is an unofficial library as OpenAI does not have an official Dart library.

llm-rag-workshop

The LLM RAG Workshop repository provides a workshop on using Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) to generate and understand text in a human-like manner. It includes instructions on setting up the environment, indexing Zoomcamp FAQ documents, creating a Q&A system, and using OpenAI for generation based on retrieved information. The repository focuses on enhancing language model responses with retrieved information from external sources, such as document databases or search engines, to improve factual accuracy and relevance of generated text.

vinagent

Vinagent is a lightweight and flexible library designed for building smart agent assistants across various industries. It provides a simple yet powerful foundation for creating AI-powered customer service bots, data analysis assistants, or domain-specific automation agents. With its modular tool system, users can easily extend their agent's capabilities by integrating a wide range of tools that are self-contained, well-documented, and can be registered dynamically. Vinagent allows users to scale and adapt their agents to new tasks or environments effortlessly.

agentlang

AgentLang is an open-source programming language and framework designed for solving complex tasks with the help of AI agents. It allows users to build business applications rapidly from high-level specifications, making it more efficient than traditional programming languages. The language is data-oriented and declarative, with a syntax that is intuitive and closer to natural languages. AgentLang introduces innovative concepts such as first-class AI agents, graph-based hierarchical data model, zero-trust programming, declarative dataflow, resolvers, interceptors, and entity-graph-database mapping.

minja

Minja is a minimalistic C++ Jinja templating engine designed specifically for integration with C++ LLM projects, such as llama.cpp or gemma.cpp. It is not a general-purpose tool but focuses on providing a limited set of filters, tests, and language features tailored for chat templates. The library is header-only, requires C++17, and depends only on nlohmann::json. Minja aims to keep the codebase small, easy to understand, and offers decent performance compared to Python. Users should be cautious when using Minja due to potential security risks, and it is not intended for producing HTML or JavaScript output.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).
- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

react-native-vercel-ai

Run the Vercel AI package on React Native, Expo, Web and Universal apps. Currently the React Native fetch API does not support streaming, which Vercel AI uses by default. This package lets you use the AI library on React Native, but it works best in Expo universal native apps. On mobile you get back responses without streaming, with the same `useChat` and `useCompletion` API, and on web it falls back to `ai/react`.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.