smartfunc

Turn docstrings into LLM-functions

Stars: 110

smartfunc is a Python library that turns docstrings into LLM-functions. It wraps around the llm library to parse docstrings and generate prompts at runtime using Jinja2 templates. The library offers syntactic sugar on top of llm, supporting backends for different LLM providers, async support for microbatching, schema support using Pydantic models, and the ability to store API keys in .env files. It simplifies rapid prototyping by focusing on a specific set of features while leaving room for flexible prompt engineering. smartfunc also offers a debug mode for inspecting prompts and responses.

README:


```bash
uv pip install smartfunc
```

Here is a nice example of what is possible with this library:

```python
from smartfunc import backend

@backend("gpt-4")
def generate_summary(text: str):
    """Generate a summary of the following text: {{ text }}"""
    pass
```

The `generate_summary` function will now return a string with the summary of the text that you give it.

This library wraps around the `llm` library made by Simon Willison. The docstring is parsed and turned into a Jinja2 template, which is filled in with the function's arguments to generate a prompt at runtime. We then use the backend given by the decorator to run the prompt and return the result.
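To make the mechanism concrete, here is a minimal, stdlib-only sketch of a docstring-driven decorator. It is not smartfunc's actual implementation: `toy_backend`, `fake_llm` and `render` are hypothetical names, the regex-based `render` handles only plain `{{ name }}` placeholders as a stand-in for Jinja2, and the decorator takes a callable instead of a model name so that it runs without API keys.

```python
import functools
import inspect
import re
from typing import Callable, Optional

# Matches "{{ name }}" placeholders; a tiny subset of Jinja2 syntax.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, variables: dict) -> str:
    """Substitute each {{ name }} with str(variables[name])."""
    return _PLACEHOLDER.sub(lambda m: str(variables[m.group(1)]), template)


def fake_llm(prompt: str, system: Optional[str] = None, **kwargs) -> str:
    """Hypothetical stand-in for a model call: echoes the system prompt and prompt."""
    return f"[system={system!r}] {prompt}"


def toy_backend(llm_call: Callable[..., str], system: Optional[str] = None, **llm_kwargs):
    """Hypothetical decorator factory: the docstring becomes the prompt template."""
    def decorator(func):
        template = inspect.getdoc(func) or ""
        signature = inspect.signature(func)

        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # Map positional and keyword arguments onto parameter names.
            bound = signature.bind(*args, **kwargs)
            bound.apply_defaults()
            prompt = render(template, bound.arguments)
            return llm_call(prompt, system=system, **llm_kwargs)

        return wrapper

    return decorator


@toy_backend(fake_llm, system="You are a helpful assistant.", temperature=0.5)
def generate_summary(text: str):
    """Generate a summary of the following text: {{ text }}"""


print(generate_summary("hello world"))
```

Because the factory captures the system prompt and extra keyword arguments once, the same object can decorate many functions, which is the pattern the reusable `llmify` decorator further down relies on.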

The `llm` library is minimalistic, and while it does not support every feature out there, it offers a solid foundation to build on. This library is mainly meant to add some syntactic sugar on top. We do get a few nice benefits from the `llm` library, though:

- The `llm` library is well maintained and has a large community
- An ecosystem of backends for different LLM providers
- Many of the vendors have `async` support, which allows us to do microbatching
- Many of the vendors have schema support, which allows us to use Pydantic models to define the response
- You can use `.env` files to store your API keys
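The `.env` convention boils down to reading `KEY=VALUE` lines into environment variables. The sketch below is a deliberately simplified, hypothetical stand-in for python-dotenv's `load_dotenv` (no `export` prefixes, multi-line values or variable expansion); like `load_dotenv` with its default settings, it leaves variables that are already set untouched. `EXAMPLE_API_KEY` is a made-up name.

```python
import os
import tempfile


def load_env_file(path: str, override: bool = False) -> dict:
    """Parse KEY=VALUE lines into os.environ (simplified stand-in for load_dotenv)."""
    parsed = {}
    with open(path, encoding="utf-8") as fh:
        for raw_line in fh:
            line = raw_line.strip()
            # Skip blank lines, comments and anything that is not KEY=VALUE.
            if not line or line.startswith("#") or "=" not in line:
                continue
            key, value = line.split("=", 1)
            key = key.strip()
            # Drop one layer of matching surrounding quotes, if present.
            value = value.strip()
            if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
                value = value[1:-1]
            parsed[key] = value
            # Variables already in the environment win unless override=True.
            if override or key not in os.environ:
                os.environ[key] = value
    return parsed


with tempfile.TemporaryDirectory() as tmp:
    env_path = os.path.join(tmp, ".env")
    with open(env_path, "w", encoding="utf-8") as fh:
        fh.write('# API keys\nEXAMPLE_API_KEY="sk-test-123"\n')
    pairs = load_env_file(env_path)

print(pairs)
```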

The following snippet shows how you might create a reusable backend decorator that uses a system prompt. Also notice how we're able to use a Pydantic model to define the response.

```python
from smartfunc import backend
from pydantic import BaseModel
from dotenv import load_dotenv

load_dotenv(".env")


class Summary(BaseModel):
    summary: str
    pros: list[str]
    cons: list[str]


llmify = backend("gpt-4o-mini", system="You are a helpful assistant.", temperature=0.5)


@llmify
def generate_poke_desc(text: str) -> Summary:
    """Describe the following pokemon: {{ text }}"""
    pass


print(generate_poke_desc("pikachu"))
```

This is the result that we got back:

```python
{
    'summary': 'Pikachu is a small, electric-type Pokémon known for its adorable appearance and strong electrical abilities. It is recognized as the mascot of the Pokémon franchise, with distinctive features and a cheerful personality.',
    'pros': [
        'Iconic and recognizable worldwide',
        'Strong electric attacks like Thunderbolt',
        'Has a cute and friendly appearance',
        'Evolves into Raichu with a Thunder Stone',
        'Popular choice in Pokémon merchandise and media'
    ],
    'cons': [
        'Not very strong in higher-level battles',
        'Weak against ground-type moves',
        'Limited to electric-type attacks unless learned through TMs',
        'Can be overshadowed by other powerful Pokémon in competitive play'
    ],
}
```

Not every backend supports schemas, but you will get a helpful error message if that is the case.
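Structured responses hinge on reading the function's return annotation. The sketch below illustrates that step with the standard library only: a dataclass substitutes for the Pydantic model, and `parse_response` is a hypothetical helper, not part of smartfunc. It looks up the annotation with `typing.get_type_hints`, parses the model's JSON reply and raises a readable error when fields are missing.

```python
import dataclasses
import json
import typing


@dataclasses.dataclass
class Summary:
    summary: str
    pros: list[str]
    cons: list[str]


def describe_pokemon(text: str) -> Summary:
    """Describe the following pokemon: {{ text }}"""


def parse_response(func, raw_reply: str):
    """Parse a JSON reply into func's annotated return type (hypothetical helper)."""
    return_type = typing.get_type_hints(func).get("return")
    data = json.loads(raw_reply)
    # Without a dataclass annotation there is no schema: return the parsed JSON.
    if return_type is None or not dataclasses.is_dataclass(return_type):
        return data
    names = [field.name for field in dataclasses.fields(return_type)]
    missing = [name for name in names if name not in data]
    if missing:
        raise ValueError(f"{func.__name__}: reply is missing fields {missing}")
    return return_type(**{name: data[name] for name in names})


reply = '{"summary": "An electric mouse.", "pros": ["iconic"], "cons": ["weak to ground"]}'
result = parse_response(describe_pokemon, reply)
print(result)
```

With Pydantic, the same role would be played by the model class itself, which also performs type validation that this sketch skips.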

[!NOTE]

You might look at this example and wonder if you might be better off using instructor. After all, that library has more support for validation of parameters and even has some utilities for multi-turn conversations. And what about ell or marvin?!

You will notice that `smartfunc` doesn't do a bunch of things those other libraries do. But the goal here is simplicity and a focus on a specific set of features. For example, instructor requires you to learn a fair bit more about each individual backend. If you want to use Claude instead of OpenAI, then you will need to load in a different library. Similarly, I felt that the other platforms all had similar things missing: async support or freedom to choose vendors.

The goal here is simplicity during rapid prototyping. You just need to make sure the `llm` plugin for your provider is installed and you're good to go. That's it.

The simplest way to use smartfunc is to just put your prompt in the docstring and be done with it. You can also use Jinja2 syntax in it if you want, but if you need extra flexibility, you can also use the inner function to write the logic of your prompt. Any string that the inner function returns will be appended to the end of the docstring prompt.
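A minimal sketch of that assembly step, assuming the returned string is concatenated directly onto the rendered docstring. `build_prompt` is a hypothetical helper rather than smartfunc's code, and the regex substitution stands in for Jinja2.

```python
import inspect
import re

# Matches "{{ name }}" placeholders; a stand-in for Jinja2 rendering.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def build_prompt(func, *args, **kwargs) -> str:
    """Render func's docstring, then append any string its body returns (hypothetical)."""
    bound = inspect.signature(func).bind(*args, **kwargs)
    bound.apply_defaults()
    template = inspect.getdoc(func) or ""
    prompt = _PLACEHOLDER.sub(lambda m: str(bound.arguments[m.group(1)]), template)
    extra = func(*args, **kwargs)  # the body runs like an ordinary function
    if isinstance(extra, str):
        prompt += extra  # assumption: appended as-is, without a separator
    return prompt


def generate_poke_desc(text: str):
    """Describe the following pokemon: {{ text }}"""
    return " ... but make it sound as if you are a 10 year old child"


def plain_desc(text: str):
    """Describe the following pokemon: {{ text }}"""
    pass


print(build_prompt(generate_poke_desc, "pikachu"))
print(build_prompt(plain_desc, "pikachu"))
```

A body that only contains `pass` returns `None`, so the prompt is just the rendered docstring.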

```python
from smartfunc import backend
from pydantic import BaseModel
from dotenv import load_dotenv

load_dotenv(".env")


class Summary(BaseModel):
    summary: str
    pros: list[str]
    cons: list[str]


# This would also work, but has the benefit that you can use the inner function to write
# the logic of your prompt, which allows for more flexible prompt engineering
@backend("gpt-4o-mini")
def generate_poke_desc(text: str) -> Summary:
    """Describe the following pokemon: {{ text }}"""
    return " ... but make it sound as if you are a 10 year old child"


resp = generate_poke_desc("pikachu")
print(resp)  # This response should now be more child-like
```

The library also supports async functions. This is useful if you want to do microbatching or if you want to use the async backends from the `llm` library.
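Microbatching here means firing several requests concurrently and waiting for all of them together. The stdlib-only sketch below uses a hypothetical `fake_async_llm` coroutine, whose `asyncio.sleep` stands in for network latency, and `asyncio.gather` to run four calls at once, so the total wait is roughly one call's latency rather than four.

```python
import asyncio
import time


async def fake_async_llm(prompt: str) -> str:
    """Hypothetical async model call; the sleep stands in for network latency."""
    await asyncio.sleep(0.1)
    return f"response to: {prompt}"


async def generate_poke_desc(text: str) -> str:
    return await fake_async_llm(f"Describe the following pokemon: {text}")


async def main(names: list[str]) -> list[str]:
    # gather schedules every coroutine before waiting, so the sleeps overlap;
    # results come back in the same order as the inputs
    return await asyncio.gather(*(generate_poke_desc(name) for name in names))


start = time.perf_counter()
results = asyncio.run(main(["pikachu", "bulbasaur", "charmander", "squirtle"]))
elapsed = time.perf_counter() - start

for line in results:
    print(line)
print(f"{len(results)} calls in {elapsed:.2f}s")
```

The `async_backend` example below is driven with `asyncio.run`, so its decorated function returns an awaitable; passing several of its calls to `asyncio.gather` should batch real requests in the same way.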

```python
import asyncio

from smartfunc import async_backend
from pydantic import BaseModel
from dotenv import load_dotenv

load_dotenv(".env")


class Summary(BaseModel):
    summary: str
    pros: list[str]
    cons: list[str]


@async_backend("gpt-4o-mini")
async def generate_poke_desc(text: str) -> Summary:
    """Describe the following pokemon: {{ text }}"""
    pass


resp = asyncio.run(generate_poke_desc("pikachu"))
print(resp)
```

The library also supports debug mode. This is useful if you want to see the prompt that was used or if you want to see the response that was returned.

```python
import asyncio

from smartfunc import async_backend
from pydantic import BaseModel
from dotenv import load_dotenv

load_dotenv(".env")


class Summary(BaseModel):
    summary: str
    pros: list[str]
    cons: list[str]


@async_backend("gpt-4o-mini", debug=True)
async def generate_poke_desc(text: str) -> Summary:
    """Describe the following pokemon: {{ text }}"""
    pass


resp = asyncio.run(generate_poke_desc("pikachu"))
print(resp)
```

This returns the usual response dictionary with an extra `_debug` key holding the debug information:

```python
{
    'summary': 'Pikachu is a small, yellow, rodent-like Pokémon known for its electric powers and iconic status as the franchise mascot. It has long ears with black tips, red cheeks that store electricity, and a lightning bolt-shaped tail. Pikachu evolves from Pichu when leveled up with high friendship and can further evolve into Raichu when exposed to a Thunder Stone. Pikachu is often depicted as cheerful, playfully energetic, and is renowned for its ability to generate electricity, which it can unleash in powerful attacks such as Thunderbolt and Volt Tackle.',
    'pros': [
        'Iconic mascot of the Pokémon franchise',
        'Popular among fans of all ages',
        'Strong electric-type moves',
        'Cute and friendly appearance'
    ],
    'cons': [
        'Limited range of evolution (only evolves into Raichu)',
        'Commonly found, which may reduce uniqueness',
        'Vulnerable to ground-type moves',
        'Requires high friendship for evolution to Pichu, which can be a long process'
    ],
    '_debug': {
        'template': 'Describe the following pokemon: {{ text }}',
        'func_name': 'generate_poke_desc',
        'prompt': 'Describe the following pokemon: pikachu',
        'system': None,
        'template_inputs': {
            'text': 'pikachu'
        },
        'backend_kwargs': {},
        'datetime': '2025-03-13T16:05:44.754579',
        'return_type': {
            'properties': {
                'summary': {'title': 'Summary', 'type': 'string'},
                'pros': {'items': {'type': 'string'}, 'title': 'Pros', 'type': 'array'},
                'cons': {'items': {'type': 'string'}, 'title': 'Cons', 'type': 'array'}
            },
            'required': ['summary', 'pros', 'cons'],
            'title': 'Summary',
            'type': 'object'
        }
    }
}
```
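The `_debug` payload above can be approximated in a few lines. This is a hypothetical sketch, not smartfunc's code: `attach_debug` reproduces the keys shown in the output except `return_type`, which in the real output is the Pydantic model's JSON schema and is omitted here to keep the example stdlib-only. The regex substitution stands in for Jinja2.

```python
import datetime
import inspect
import re

# Matches "{{ name }}" placeholders; a stand-in for Jinja2 rendering.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def attach_debug(result: dict, func, template_inputs: dict,
                 system=None, backend_kwargs=None) -> dict:
    """Return a copy of result with a '_debug' entry (hypothetical helper)."""
    template = inspect.getdoc(func) or ""
    prompt = _PLACEHOLDER.sub(lambda m: str(template_inputs[m.group(1)]), template)
    debug = {
        "template": template,
        "func_name": func.__name__,
        "prompt": prompt,
        "system": system,
        "template_inputs": template_inputs,
        "backend_kwargs": backend_kwargs or {},
        "datetime": datetime.datetime.now().isoformat(),
    }
    # Build a new dict so the original response is left unchanged.
    return {**result, "_debug": debug}


def generate_poke_desc(text: str):
    """Describe the following pokemon: {{ text }}"""


response = {"summary": "Pikachu is an electric-type Pokémon."}
out = attach_debug(response, generate_poke_desc, {"text": "pikachu"})
print(out["_debug"]["prompt"])
```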


Alternative AI tools for smartfunc

Similar Open Source Tools


Toolio

Toolio is an OpenAI-like HTTP server API implementation that supports structured LLM response generation, making it conform to a JSON schema. It is useful for reliable tool calling and agentic workflows based on schema-driven output. Toolio is based on the MLX framework for Apple Silicon, specifically M1/M2/M3/M4 Macs. It allows users to host MLX-format LLMs for structured output queries and provides a command line client for easier usage of tools. The tool also supports multiple tool calls and the creation of custom tools for specific tasks.

npcpy

npcpy is a core library of the NPC Toolkit that enhances natural language processing pipelines and agent tooling. It provides a flexible framework for building applications and conducting research with LLMs. The tool supports various functionalities such as getting responses for agents, setting up agent teams, orchestrating jinx workflows, obtaining LLM responses, generating images, videos, audio, and more. It also includes a Flask server for deploying NPC teams, supports LiteLLM integration, and simplifies the development of NLP-based applications. The tool is versatile, supporting multiple models and providers, and offers a graphical user interface through NPC Studio and a command-line interface via NPC Shell.

npcsh

`npcsh` is a Python-based command-line tool designed to integrate Large Language Models (LLMs) and Agents into one's daily workflow by making them available and easily configurable through the command line shell. It leverages the power of LLMs to understand natural language commands and questions, execute tasks, answer queries, and provide relevant information from local files and the web. Users can also build their own tools and call them like macros from the shell. `npcsh` allows users to take advantage of agents (i.e. NPCs) through a managed system, tailoring NPCs to specific tasks and workflows. The tool is extensible with Python, providing useful functions for interacting with LLMs, including explicit coverage for popular providers like ollama, anthropic, openai, gemini, deepseek, and openai-like providers. Users can set up a flask server to expose their NPC team for use as a backend service, run SQL models defined in their project, execute assembly lines, and verify the integrity of their NPC team's interrelations. Users can execute bash commands directly, use favorite command-line tools like VIM, Emacs, ipython, sqlite3, git, pipe the output of these commands to LLMs, or pass LLM results to bash commands.

langchain-extract

LangChain Extract is a simple web server that allows you to extract information from text and files using LLMs. It is built using FastAPI, LangChain, and PostgreSQL. The backend closely follows the extraction use-case documentation and provides a reference implementation of an app that helps to do extraction over data using LLMs. This repository is meant to be a starting point for building your own extraction application which may have slightly different requirements or use cases.

awadb

AwaDB is an AI native database designed for embedding vectors. It simplifies database usage by eliminating the need for schema definition and manual indexing. The system ensures real-time search capabilities with millisecond-level latency. Built on 5 years of production experience with Vearch, AwaDB incorporates best practices from the community to offer stability and efficiency. Users can easily add and search for embedded sentences using the provided client libraries or RESTful API.

ActionWeaver

ActionWeaver is an AI application framework designed for simplicity, relying on OpenAI and Pydantic. It supports both OpenAI API and Azure OpenAI service. The framework allows for function calling as a core feature, extensibility to integrate any Python code, function orchestration for building complex call hierarchies, and telemetry and observability integration. Users can easily install ActionWeaver using pip and leverage its capabilities to create, invoke, and orchestrate actions with the language model. The framework also provides structured extraction using Pydantic models and allows for exception handling customization. Contributions to the project are welcome, and users are encouraged to cite ActionWeaver if found useful.

CEO

CEO is an intuitive and modular AI agent framework designed for task automation. It provides a flexible environment for building agents with specific abilities and personalities, allowing users to assign tasks and interact with the agents to automate various processes. The framework supports multi-agent collaboration scenarios and offers functionalities like instantiating agents, granting abilities, assigning queries, and executing tasks. Users can customize agent personalities and define specific abilities using decorators, making it easy to create complex automation workflows.

chatmemory

ChatMemory is a simple yet powerful long-term memory manager that facilitates communication between AI and users. It organizes conversation data into history, summary, and knowledge entities, enabling quick retrieval of context and generation of clear, concise answers. The tool leverages vector search on summaries/knowledge and detailed history to provide accurate responses. It balances speed and accuracy by using lightweight retrieval and fallback detailed search mechanisms, ensuring efficient memory management and response generation beyond mere data retrieval.

motorhead

Motorhead is a memory and information retrieval server for LLMs. It provides three simple APIs to assist with memory handling in chat applications using LLMs. The first API, GET /sessions/:id/memory, returns messages up to a maximum window size. The second API, POST /sessions/:id/memory, allows you to send an array of messages to Motorhead for storage. The third API, DELETE /sessions/:id/memory, deletes the session's message list. Motorhead also features incremental summarization, where it processes half of the maximum window size of messages and summarizes them when the maximum is reached. Additionally, it supports searching by text query using vector search. Motorhead is configurable through environment variables, including the maximum window size, whether to enable long-term memory, the model used for incremental summarization, the server port, your OpenAI API key, and the Redis URL.

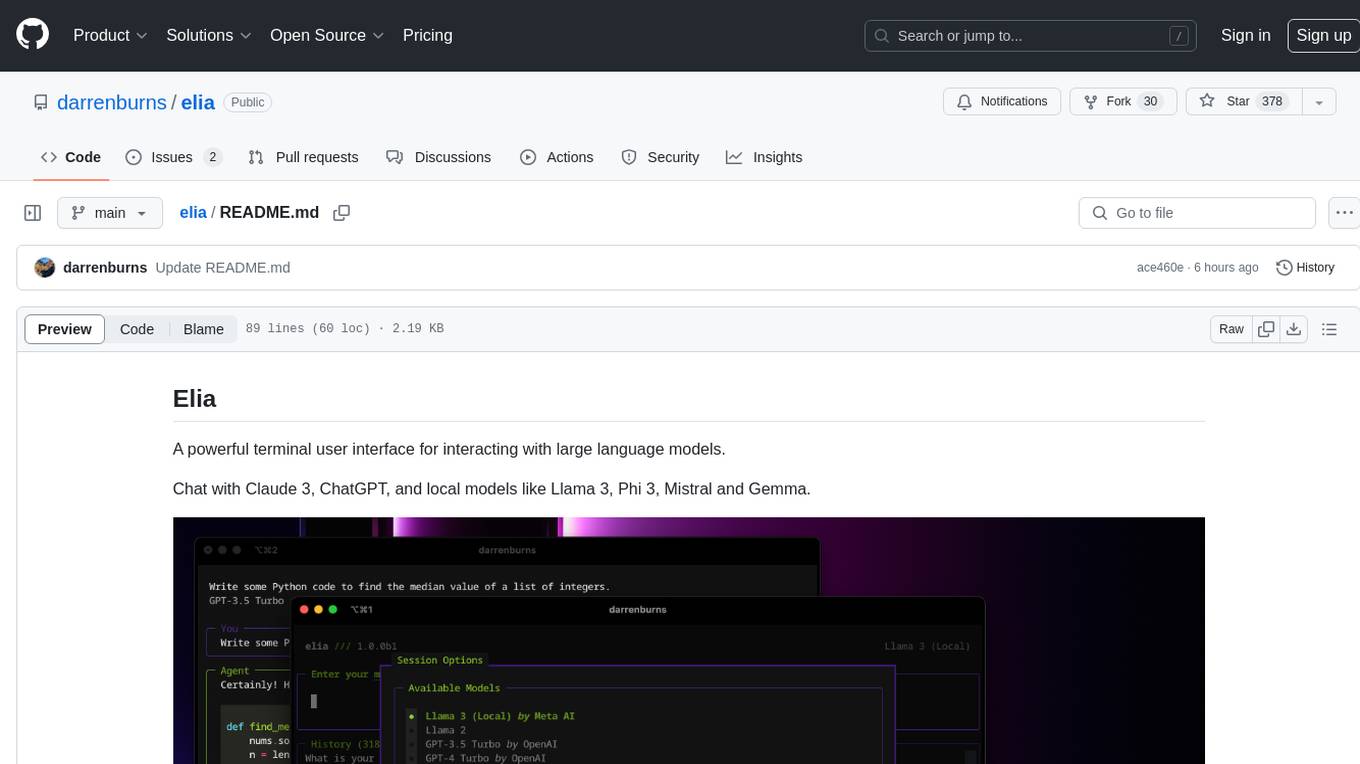

elia

Elia is a powerful terminal user interface designed for interacting with large language models. It allows users to chat with models like Claude 3, ChatGPT, Llama 3, Phi 3, Mistral, and Gemma. Conversations are stored locally in a SQLite database, ensuring privacy. Users can run local models through 'ollama' without data leaving their machine. Elia offers easy installation with pipx and supports various environment variables for different models. It provides a quick start to launch chats and manage local models. Configuration options are available to customize default models, system prompts, and add new models. Users can import conversations from ChatGPT and wipe the database when needed. Elia aims to enhance user experience in interacting with language models through a user-friendly interface.

pg_vectorize

pg_vectorize is a Postgres extension that automates text to embeddings transformation, enabling vector search and LLM applications with minimal function calls. It integrates with popular LLMs, provides workflows for vector search and RAG, and automates Postgres triggers for updating embeddings. The tool is part of the VectorDB Stack on Tembo Cloud, offering high-level APIs for easy initialization and search.

Tiny-Predictive-Text

Tiny-Predictive-Text is a demonstration of predictive text without an LLM, using permy.link. It provides a detailed description of the tool, including its features, benefits, and how to use it. The tool is suitable for a variety of jobs, including content writers, editors, and researchers. It can be used to perform a variety of tasks, such as generating text, completing sentences, and correcting errors.

CoPilot

TigerGraph CoPilot is an AI assistant that combines graph databases and generative AI to enhance productivity across various business functions. It includes three core component services: InquiryAI for natural language assistance, SupportAI for knowledge Q&A, and QueryAI for GSQL code generation. Users can interact with CoPilot through a chat interface on TigerGraph Cloud and APIs. CoPilot requires LLM services for beta but will support TigerGraph's LLM in future releases. It aims to improve contextual relevance and accuracy of answers to natural-language questions by building knowledge graphs and using RAG. CoPilot is extensible and can be configured with different LLM providers, graph schemas, and LangChain tools.

neo4j-runway

Neo4j Runway is a Python library that simplifies the process of migrating relational data into a graph. It provides tools to abstract communication with OpenAI for data discovery, generate data models, ingestion code, and load data into a Neo4j instance. The library leverages OpenAI LLMs for insights, Instructor Python library for modeling, and PyIngest for data loading. Users can visualize data models using graphviz and benefit from a seamless integration with Neo4j for efficient data migration.

notebook-intelligence

Notebook Intelligence (NBI) is an AI coding assistant and extensible AI framework for JupyterLab. It greatly boosts the productivity of JupyterLab users with AI assistance by providing features such as code generation with inline chat, auto-complete, and chat interface. NBI supports various LLM Providers and AI Models, including local models from Ollama. Users can configure model provider and model options, remember GitHub Copilot login, and save configuration files. NBI seamlessly integrates with Model Context Protocol (MCP) servers, supporting both Standard Input/Output (stdio) and Server-Sent Events (SSE) transports. Users can easily add MCP servers to NBI, auto-approve tools, set environment variables, and group servers based on functionality. Additionally, NBI allows access to built-in tools from an MCP participant, enhancing the user experience and productivity.


For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service
- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE)
- Supports consumer-grade GPUs

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.