AIClient-2-API

Simulates Gemini CLI, Antigravity, Qwen Code, and Kiro client requests, compatible with the OpenAI API. It supports thousands of Gemini model requests per day and offers free use of the built-in Claude model in Kiro. Easily connect to any client via the API, making AI development more efficient!

Stars: 4031

AIClient-2-API is a versatile and lightweight API proxy designed for developers, providing ample free API request quotas and comprehensive support for various mainstream large models like Gemini, Qwen Code, Claude, etc. It converts multiple backend APIs into standard OpenAI format interfaces through a Node.js HTTP server. The project adopts a modern modular architecture, supports strategy and adapter patterns, comes with complete test coverage and health check mechanisms, and is ready to use after 'npm install'. By easily switching model service providers in the configuration file, any OpenAI-compatible client or application can seamlessly access different large model capabilities through the same API address, eliminating the hassle of maintaining multiple sets of configurations for different services and dealing with incompatible interfaces.

README:

A powerful proxy that can unify the requests of various client-only large model APIs (Gemini CLI, Antigravity, Qwen Code, Kiro ...), simulate requests, and encapsulate them into a local OpenAI-compatible interface.

AIClient2API is an API proxy service that breaks through client limitations, converting free large models originally restricted to client use only (such as Gemini, Antigravity, Qwen Code, Kiro) into standard OpenAI-compatible interfaces that can be called by any application. Built on Node.js, it supports intelligent conversion between OpenAI, Claude, and Gemini protocols, enabling tools like Cherry-Studio, NextChat, and Cline to freely use advanced models such as Claude Opus 4.5, Gemini 3.0 Pro, and Qwen3 Coder Plus at scale. The project adopts a modular architecture based on strategy and adapter patterns, with built-in account pool management, intelligent polling, automatic failover, and health check mechanisms, ensuring 99.9% service availability.

[!NOTE] 🎉 Important Milestone

- Thanks to Ruan Yifeng for the recommendation in Weekly Issue 359

📅 Version Update Log


- 2026.01.26 - Added Codex protocol support: supports OpenAI Codex OAuth authorization access

- 2026.01.25 - Enhanced AI Monitor plugin: supports monitoring request parameters and responses before and after AI protocol conversion. Optimized log management: unified log format, visual configuration

- 2026.01.15 - Optimized provider pool manager: added async refresh queue mechanism, buffer queue deduplication, global concurrency control, node warmup and automatic expiry detection

- 2026.01.07 - Added iFlow protocol support, enabling access to Qwen, Kimi, DeepSeek, and GLM series models via OAuth authentication with automatic token refresh

- 2026.01.03 - Added theme switching functionality and optimized provider pool initialization, removed the fallback strategy of using provider default configuration

- 2025.12.30 - Added main process management and automatic update functionality

- 2025.12.25 - Unified configuration management: All configs centralized to the configs/ directory. Docker users need to update the mount path to -v "local_path:/app/configs"

- 2025.12.11 - Automatically built Docker images are now available on Docker Hub: justlikemaki/aiclient-2-api

- 2025.11.30 - Added Antigravity protocol support, enabling access to Gemini 3 Pro, Claude Sonnet 4.5, and other models via Google internal interfaces

- 2025.11.16 - Added Ollama protocol support, unified interface to access all supported models (Claude, Gemini, Qwen, OpenAI, etc.)

- 2025.11.11 - Added Web UI management console, supporting real-time configuration management and health status monitoring

- 2025.11.06 - Added support for Gemini 3 Preview, enhanced model compatibility and performance optimization

- 2025.10.18 - Kiro open registration, new accounts get 500 credits, full support for Claude Sonnet 4.5

- 2025.09.01 - Integrated Qwen Code CLI, added qwen3-coder-plus model support

- 2025.08.29 - Released account pool management feature, supporting multi-account polling, intelligent failover, and automatic degradation strategies

- Configuration: Add the PROVIDER_POOLS_FILE_PATH parameter in configs/config.json

- Reference configuration: provider_pools.json

- History Developed

- Support Gemini CLI, Kiro and other client2API

- OpenAI, Claude, Gemini three-protocol mutual conversion, automatic intelligent switching

- Multi-Model Unified Interface: Through standard OpenAI-compatible protocol, configure once to access mainstream large models including Gemini, Claude, Qwen Code, Kimi K2, MiniMax M2

- Flexible Switching Mechanism: Path routing, support dynamic model switching via startup parameters or environment variables to meet different scenario requirements

- Zero-Cost Migration: Fully compatible with OpenAI API specifications, tools like Cherry-Studio, NextChat, Cline can be used without modification

- Multi-Protocol Intelligent Conversion: Support intelligent conversion between OpenAI, Claude, and Gemini protocols for cross-protocol model invocation

- Bypass Official Restrictions: Utilize OAuth authorization mechanism to effectively break through rate and quota limits of services like Gemini, Antigravity

- Free Advanced Models: Use Claude Opus 4.5 for free via Kiro API mode, use Qwen3 Coder Plus via Qwen OAuth mode, reducing usage costs

- Intelligent Account Pool Scheduling: Support multi-account polling, automatic failover, and configuration degradation, ensuring 99.9% service availability

- Full-Chain Log Recording: Capture all request and response data, supporting auditing and debugging

- Private Dataset Construction: Quickly build proprietary training datasets based on log data

- System Prompt Management: Support override and append modes, achieving perfect combination of unified base instructions and personalized extensions

- Web UI Management Console: Real-time configuration management, health status monitoring, API testing and log viewing

- Modular Architecture: Based on strategy and adapter patterns, adding new model providers requires only 3 steps

- Complete Test Coverage: Integration and unit test coverage 90%+, ensuring code quality

- Containerized Deployment: Provides Docker support, one-click deployment, cross-platform operation

- 💡 Core Advantages

- 🚀 Quick Start

- 🔐 Authorization Configuration Guide

- 📁 Authorization File Storage Paths

- 🦙 Ollama Protocol Usage Examples

- ⚙️ Advanced Configuration

- ❓ FAQ

- 📄 Open Source License

- 🙏 Acknowledgements

⚠️ Disclaimer

The most recommended way to use AIClient-2-API is to start it through an automated script and configure it visually directly in the Web UI console.

docker run -d -p 3000:3000 -p 8085-8087:8085-8087 -p 1455:1455 -p 19876-19880:19876-19880 --restart=always -v "your_path:/app/configs" --name aiclient2api justlikemaki/aiclient-2-api

Parameter Description:

- -d: Run container in background

- -p 3000:3000 ...: Port mapping. 3000 is for Web UI, others are for OAuth callbacks (Gemini: 8085, Antigravity: 8086, iFlow: 8087, Codex: 1455, Kiro: 19876-19880)

- --restart=always: Container auto-restart policy

- -v "your_path:/app/configs": Mount configuration directory (replace "your_path" with the actual path, e.g., /home/user/aiclient-configs)

- --name aiclient2api: Container name

You can also use Docker Compose for deployment. First, navigate to the docker directory:

cd docker
mkdir -p configs
docker compose up -d

To build from source instead of using the pre-built image, edit docker-compose.yml:

- Comment out the image: justlikemaki/aiclient-2-api:latest line

- Uncomment the build: section

- Run docker compose up -d --build

- Linux/macOS: chmod +x install-and-run.sh && ./install-and-run.sh

- Windows: Double-click install-and-run.bat

After the server starts, open your browser and visit: 👉 http://localhost:3000

Default Password: admin123 (can be changed in the console or by modifying the pwd file after login)

On the "Configuration" page, you can:

- ✅ Fill in the API Key for each provider or upload OAuth credential files

- ✅ Switch default model providers in real-time

- ✅ Monitor health status and real-time request logs

========================================

AI Client 2 API Quick Install Script

========================================

[Check] Checking if Node.js is installed...

✅ Node.js is installed, version: v20.10.0

✅ Found package.json file

✅ node_modules directory already exists

✅ Project file check completed

========================================

Starting AI Client 2 API Server...

========================================

🌐 Server will start on http://localhost:3000

📖 Visit http://localhost:3000 to view management interface

⏹️ Press Ctrl+C to stop server

💡 Tip: The script will automatically install dependencies and start the server. If you encounter any issues, the script provides clear error messages and suggested solutions.

A functional Web management interface, including:

📊 Dashboard: System overview, interactive routing examples, client configuration guide

⚙️ Configuration: Real-time parameter modification, supporting all providers (Gemini, Antigravity, OpenAI, Claude, Kiro, Qwen), including advanced settings and file uploads

🔗 Provider Pools: Monitor active connections, provider health statistics, enable/disable management

📁 Config Files: Centralized OAuth credential management, supporting search filtering and file operations

📜 Real-time Logs: Real-time display of system and request logs, with management controls

🔐 Login Verification: Default password admin123, can be modified via pwd file

Access: http://localhost:3000 → Login → Sidebar navigation → Take effect immediately

Supports various input types such as images and documents, providing you with a richer interaction experience and more powerful application scenarios.

Seamlessly support the following latest large models, just configure the corresponding endpoint in Web UI or configs/config.json:

- Claude 4.5 Opus - Anthropic's strongest model ever, now supported via Kiro, Antigravity

- Gemini 3 Pro - Google's next-generation architecture preview, now supported via Gemini, Antigravity

- Qwen3 Coder Plus - Alibaba Tongyi Qianwen's latest code-specific model, now supported via Qwen Code

- Kimi K2 / MiniMax M2 - Synchronized support for top domestic flagship models, now supported via custom OpenAI, Claude

Detailed authorization configuration steps for each provider

💡 Tip: For the best experience, it is recommended to manage authorization visually through the Web UI console.

In the Web UI management interface, you can complete authorization configuration rapidly:

- Generate Authorization: On the "Provider Pools" page or "Configuration" page, click the "Generate Authorization" button in the upper right corner of the corresponding provider (e.g., Gemini, Qwen).

- Scan/Login: An authorization dialog will pop up; click "Open in Browser" for login verification. For Qwen, just complete the web login; for Gemini and Antigravity, complete the Google account authorization.

- Auto-Save: After successful authorization, the system will automatically obtain credentials and save them to the corresponding directory in configs/. You can see the newly generated credentials on the "Config Files" page.

- Visual Management: You can upload or delete credentials at any time in the Web UI, or use the "Quick Associate" function to bind existing credential files to providers with one click.

- Obtain OAuth Credentials: Visit Google Cloud Console to create a project and enable Gemini API

- Project Configuration: You may need to provide a valid Google Cloud project ID, which can be specified via the startup parameter --project-id

- Ensure Project ID: When configuring in the Web UI, ensure the project ID entered matches the project ID displayed in the Google Cloud Console and Gemini CLI.

- Personal Account: Personal accounts require separate authorization; the application channels have been closed.

- Pro Member: Antigravity is temporarily open to Pro members; you need to purchase a Pro membership first.

- Organization Account: Organization accounts require separate authorization; contact the administrator to obtain it.

- First Authorization: After configuring the Qwen service, the system will automatically open the authorization page in the browser

- Recommended Parameters: Use official default parameters for best results

{ "temperature": 0, "top_p": 1 }

- Environment Preparation: Download and install Kiro client

- Complete Authorization: Log in to your account in the client to generate the kiro-auth-token.json credential file

- Best Practice: Recommended to use with Claude Code for optimal experience

- Important Notice: Kiro service usage policy has been updated, please visit the official website for the latest usage restrictions and terms

- First Authorization: In Web UI's "Configuration" or "Provider Pools" page, click the "Generate Authorization" button for iFlow

- Phone Login: The system will open the iFlow authorization page, complete login verification using your phone number

- Auto Save: After successful authorization, the system will automatically obtain the API Key and save credentials

- Supported Models: Qwen3 series, Kimi K2, DeepSeek V3/R1, GLM-4.6/4.7, etc.

- Auto Refresh: The system will automatically refresh tokens when they are about to expire, no manual intervention required

- Generate Authorization: On the Web UI "Provider Pools" or "Configuration" page, click the "Generate Authorization" button for Codex

- Browser Login: The system opens the OpenAI Codex authorization page to complete OAuth login

- Auto Save: After successful authorization, the system automatically saves the Codex OAuth credential file

- Callback Port: Ensure the OAuth callback port 1455 is not occupied

- Create Pool Configuration File: Create a configuration file referencing provider_pools.json.example

- Configure Pool Parameters: Set PROVIDER_POOLS_FILE_PATH in configs/config.json to point to the pool configuration file

- Startup Parameter Configuration: Use the --provider-pools-file <path> parameter to specify the pool configuration file path

- Health Check: The system will automatically perform periodic health checks and avoid using unhealthy providers


Default storage locations for authorization credential files of each service:

| Service | Default Path | Description |
|---|---|---|
| Gemini | ~/.gemini/oauth_creds.json | OAuth authentication credentials |
| Kiro | ~/.aws/sso/cache/kiro-auth-token.json | Kiro authentication token |
| Qwen | ~/.qwen/oauth_creds.json | Qwen OAuth credentials |
| Antigravity | ~/.antigravity/oauth_creds.json | Antigravity OAuth credentials (supports Claude 4.5 Opus) |
| iFlow | ~/.iflow/oauth_creds.json | iFlow OAuth credentials (supports Qwen, Kimi, DeepSeek, GLM) |
| Codex | ~/.codex/oauth_creds.json | Codex OAuth credentials |

Note: ~ represents the user home directory (Windows: C:\Users\username, Linux/macOS: /home/username or /Users/username)

Custom Path: Can specify custom storage location via relevant parameters in configuration file or environment variables

This project supports the Ollama protocol, allowing access to all supported models through a unified interface. The Ollama endpoint provides standard interfaces such as /api/tags, /api/chat, /api/generate, etc.

Ollama API Call Examples:

- List all available models:

curl http://localhost:3000/ollama/api/tags \
  -H "Authorization: Bearer your-api-key"

- Chat interface:

curl http://localhost:3000/ollama/api/chat \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer your-api-key" \
  -d '{
    "model": "[Claude] claude-sonnet-4.5",
    "messages": [
      {"role": "user", "content": "Hello"}
    ]
  }'

- Specify provider using model prefix:

  - [Kiro] - Access Claude models using Kiro API

  - [Claude] - Use official Claude API

  - [Gemini CLI] - Access via Gemini CLI OAuth

  - [OpenAI] - Use official OpenAI API

  - [Qwen CLI] - Access via Qwen OAuth
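The prefix convention above can be sketched in a few lines of Python. This is only an illustration: the helper names are hypothetical, and treating the five listed prefixes as the complete set is an assumption rather than something taken from the project code.

```python
import json
import re

# Prefixes listed in this README; assuming the list is complete is an assumption.
KNOWN_PREFIXES = {"Kiro", "Claude", "Gemini CLI", "OpenAI", "Qwen CLI"}


def prefixed_model(provider: str, model: str) -> str:
    """Compose a model name such as "[Claude] claude-sonnet-4.5"."""
    if provider not in KNOWN_PREFIXES:
        raise ValueError(f"unknown provider prefix: {provider!r}")
    return f"[{provider}] {model}"


def split_prefixed_model(name: str):
    """Return (provider, model); provider is None when no prefix is present."""
    match = re.fullmatch(r"\[([^\]]+)\]\s*(.+)", name)
    if match is None:
        return None, name
    return match.group(1), match.group(2)


def build_chat_body(provider: str, model: str, prompt: str) -> str:
    """Build the JSON body used by the /ollama/api/chat example above."""
    return json.dumps(
        {
            "model": prefixed_model(provider, model),
            "messages": [{"role": "user", "content": prompt}],
        }
    )
```

The string returned by build_chat_body can be passed as the -d argument of the curl chat example.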

Proxy configuration, model filtering, and Fallback advanced settings

This project supports flexible proxy configuration, allowing you to configure a unified proxy for different providers or use provider-specific proxied endpoints.

Configuration Methods:

- Web UI Configuration (Recommended): Convenient configuration management

In the "Configuration" page of the Web UI, you can visually configure all proxy options:

- Unified Proxy: Fill in the proxy address in the "Proxy Settings" area and check the providers that need to use the proxy

- Provider Endpoints: In each provider's configuration area, directly modify the Base URL to a proxied endpoint

- Click "Save Configuration": Takes effect immediately without restarting the service

- Unified Proxy Configuration: Configure a global proxy and specify which providers use it

  - Web UI Configuration: Fill in the proxy address in the "Proxy Settings" area of the "Configuration" page and check the providers that need to use the proxy

  - Configuration File: Configure in configs/config.json

{ "PROXY_URL": "http://127.0.0.1:7890", "PROXY_ENABLED_PROVIDERS": [ "gemini-cli-oauth", "gemini-antigravity", "claude-kiro-oauth" ] }

- Provider-Specific Proxied Endpoints: Some providers (like OpenAI, Claude) support configuring proxied API endpoints

  - Web UI Configuration: In each provider's configuration area on the "Configuration" page, modify the corresponding Base URL

  - Configuration File: Configure in configs/config.json

{ "OPENAI_BASE_URL": "https://your-proxy-endpoint.com/v1", "CLAUDE_BASE_URL": "https://your-proxy-endpoint.com" }

Supported Proxy Types:

- HTTP Proxy: http://127.0.0.1:7890

- HTTPS Proxy: https://127.0.0.1:7890

- SOCKS5 Proxy: socks5://127.0.0.1:1080

Use Cases:

- Network-Restricted Environments: Use in network environments where Google, OpenAI, and other services cannot be accessed directly

- Hybrid Configuration: Some providers use unified proxy, others use their own proxied endpoints

- Flexible Switching: Enable/disable proxy for specific providers at any time in the Web UI

Notes:

- Proxy configuration priority: Unified proxy configuration > Provider-specific endpoints > Direct connection

- Ensure the proxy service is stable and available, otherwise it may affect service quality

- SOCKS5 proxy usually performs better than HTTP proxy
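The priority order in the first note can be read as a small decision function. The Python sketch below is an illustration only: resolve_route is a hypothetical name, and the mapping from provider types to the OPENAI_BASE_URL and CLAUDE_BASE_URL keys is an assumption, not the project's actual lookup.

```python
from typing import Optional, Tuple

# Hypothetical mapping from provider type to its Base URL key in config.json.
BASE_URL_KEYS = {
    "openai-custom": "OPENAI_BASE_URL",
    "claude-custom": "CLAUDE_BASE_URL",
}


def resolve_route(provider: str, config: dict) -> Tuple[str, Optional[str]]:
    """Apply: unified proxy > provider-specific endpoint > direct connection."""
    proxy_url = config.get("PROXY_URL")
    if proxy_url and provider in config.get("PROXY_ENABLED_PROVIDERS", []):
        return "unified-proxy", proxy_url

    key = BASE_URL_KEYS.get(provider)
    if key and config.get(key):
        return "provider-endpoint", config[key]

    return "direct", None
```

With the two configuration fragments above merged into one dictionary, a provider listed in PROXY_ENABLED_PROVIDERS resolves to the unified proxy even if it also has a Base URL configured.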

Unsupported models can be excluded through the notSupportedModels configuration; for a request naming one of those models, the system automatically skips the providers that list it.

Configuration: Add notSupportedModels field for providers in configs/provider_pools.json:

{
  "gemini-cli-oauth": [
    {
      "uuid": "provider-1",
      "notSupportedModels": ["gemini-3.0-pro", "gemini-3.5-flash"],
      "checkHealth": true
    }
  ]
}

How It Works:

- When requesting a specific model, the system automatically filters out providers that have configured the model as unsupported

- Only providers that support the model will be selected to handle the request

Use Cases:

- Some accounts cannot access specific models due to quota or permission restrictions

- Need to assign different model access permissions to different accounts
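The filtering rule can be illustrated with a short Python sketch. It assumes the pool file has already been parsed into a dictionary shaped like the example above; eligible_providers is a hypothetical helper, not a function from the project.

```python
from typing import Dict, List


def eligible_providers(pools: Dict[str, List[dict]], provider_type: str, model: str) -> List[dict]:
    """Keep only the pool entries that do not list `model` as unsupported."""
    return [
        entry
        for entry in pools.get(provider_type, [])
        # An entry without the field is treated as supporting every model.
        if model not in entry.get("notSupportedModels", [])
    ]
```

For example, with a pool holding provider-1 from the configuration above and a second entry that has no notSupportedModels field, a request for gemini-3.0-pro would be served only by the second entry.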

When all accounts under a Provider Type (e.g., gemini-cli-oauth) are exhausted due to 429 quota limits or marked as unhealthy, the system can automatically fallback to another compatible Provider Type (e.g., gemini-antigravity) instead of returning an error directly.

Configuration: Add providerFallbackChain configuration in configs/config.json:

{
  "providerFallbackChain": {
    "gemini-cli-oauth": ["gemini-antigravity"],
    "gemini-antigravity": ["gemini-cli-oauth"],
    "claude-kiro-oauth": ["claude-custom"],
    "claude-custom": ["claude-kiro-oauth"]
  }
}

How It Works:

- Try to select a healthy account from the primary Provider Type pool

- If all accounts in that type are unhealthy or return 429:

- Look up the configured fallback types

- Check if the fallback type supports the requested model (protocol compatibility check)

- Select a healthy account from the fallback type's pool

- Supports multi-level degradation chains: gemini-cli-oauth → gemini-antigravity → openai-custom

- Only returns an error if all fallback types are also unavailable

Use Cases:

- In batch task scenarios, the free RPD quota of a single Provider Type can be easily exhausted in a short time

- Through cross-type Fallback, you can fully utilize the independent quotas of multiple Providers, improving overall availability and throughput

Notes:

- Fallback only occurs between protocol-compatible types (e.g., between gemini-*, between claude-*)

- The system automatically checks if the target Provider Type supports the requested model
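The fallback walk described in this section can be sketched as follows. This is a simplified illustration under stated assumptions, not the project's implementation: pick_provider_type is a hypothetical name, and is_usable stands in for whatever combination of health, quota, and model-support checks the system performs. Because the example chain maps two types to each other, the sketch tracks visited types so the walk always terminates.

```python
from collections import deque
from typing import Callable, Dict, List, Optional


def pick_provider_type(
    primary: str,
    chain: Dict[str, List[str]],
    is_usable: Callable[[str], bool],
) -> Optional[str]:
    """Return the first usable provider type, starting from `primary`.

    Types are tried breadth-first: the primary, then its configured
    fallbacks in order, then the fallbacks of those fallbacks.
    """
    seen = set()
    queue = deque([primary])
    while queue:
        provider_type = queue.popleft()
        if provider_type in seen:
            continue  # guards against cycles such as A -> B -> A
        seen.add(provider_type)
        if is_usable(provider_type):
            return provider_type
        queue.extend(chain.get(provider_type, []))
    return None  # every type in the chain is unavailable
```

A None result corresponds to the case where all fallback types are also unavailable and an error is returned to the caller.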

FAQ and solutions (port occupation, Docker startup, 429 errors, etc.)

Problem Description: After clicking "Generate Authorization", the browser opens the authorization page but authorization fails or cannot be completed.

Solutions:

- Check Network Connection: Ensure you can access Google, Alibaba Cloud, and other services normally

- Check Port Occupation: OAuth callbacks require specific ports (Gemini: 8085, Antigravity: 8086, iFlow: 8087, Codex: 1455, Kiro: 19876-19880), ensure these ports are not occupied

- Clear Browser Cache: Try using incognito mode or clearing browser cache and retry

- Check Firewall Settings: Ensure the firewall allows access to local callback ports

- Docker Users: Ensure all OAuth callback ports are correctly mapped
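A quick way to check the callback ports from a script is to try binding each one locally. The sketch below is a minimal example using only the Python standard library; the port numbers come from the list above, while the helper names are hypothetical and the check only covers the loopback address.

```python
import socket
from typing import Dict, List

# OAuth callback ports as listed in this README.
OAUTH_CALLBACK_PORTS: Dict[str, List[int]] = {
    "Gemini": [8085],
    "Antigravity": [8086],
    "iFlow": [8087],
    "Codex": [1455],
    "Kiro": list(range(19876, 19881)),
}


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if the port can be bound on `host` right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError:
            return False
    return True


def occupied_callback_ports(host: str = "127.0.0.1") -> Dict[str, List[int]]:
    """Map each provider to the callback ports that are currently in use."""
    return {
        name: [port for port in ports if not port_is_free(port, host)]
        for name, ports in OAUTH_CALLBACK_PORTS.items()
    }
```

An empty list for a provider means its callback ports were free at the time of the check.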

Problem Description: When starting the service, it shows the port is already in use (e.g., EADDRINUSE).

Solutions:

# Windows - Find the process occupying the port

netstat -ano | findstr :3000

# Then use Task Manager to end the corresponding PID process

# Linux/macOS - Find and end the process occupying the port

lsof -i :3000

kill -9 <PID>

Or modify the port configuration in configs/config.json to use a different port.

Problem Description: Docker container fails to start or exits immediately.

Solutions:

- Check Logs: Run docker logs aiclient2api to view error messages

- Check Mount Path: Ensure the local path in the -v parameter exists and has read/write permissions

- Check Port Conflicts: Ensure all mapped ports are not occupied on the host

- Re-pull Image: docker pull justlikemaki/aiclient-2-api:latest

Problem Description: After uploading or configuring credential files, the system reports that the file cannot be recognized or has a format error.

Solutions:

- Check File Format: Ensure the credential file is valid JSON format

- Check File Path: Ensure the file path is correct, Docker users need to ensure the file is in the mounted directory

- Check File Permissions: Ensure the service has permission to read the credential file

- Regenerate Credentials: If credentials have expired, try re-authorizing via OAuth
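The first three checks can be run locally before uploading a file. The Python sketch below is a minimal example, assuming only that a credential file must exist, be readable, and parse as JSON; check_credential_file and its return values are hypothetical, and the sketch does not validate the fields any provider expects.

```python
import json
import os


def check_credential_file(path: str) -> str:
    """Return "ok", "missing", "unreadable", or "invalid-json" for `path`."""
    if not os.path.isfile(path):
        return "missing"
    if not os.access(path, os.R_OK):
        return "unreadable"
    try:
        with open(path, encoding="utf-8") as handle:
            json.load(handle)
    except (json.JSONDecodeError, UnicodeDecodeError):
        return "invalid-json"
    return "ok"
```

Docker users would run this against the file inside the directory mounted to /app/configs.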

Problem Description: API requests frequently return 429 Too Many Requests error.

Solutions:

- Configure Account Pool: Add multiple accounts to provider_pools.json, enable polling mechanism

- Configure Fallback: Configure providerFallbackChain in config.json for cross-type degradation

- Reduce Request Frequency: Appropriately increase request intervals to avoid triggering rate limits

- Wait for Quota Reset: Free quotas usually reset daily or per minute
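On the client side, "reduce request frequency" is often implemented as retry with exponential backoff. The sketch below shows one such approach in Python; it is a generic client-side technique rather than something this project provides, and send is a hypothetical callable that performs one request and returns a (status, body) pair.

```python
import time
from typing import Callable, Tuple


def call_with_backoff(
    send: Callable[[], Tuple[int, str]],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[int, str]:
    """Retry `send` while it returns HTTP 429, doubling the wait each time."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        status, body = send()
        if status != 429:
            return status, body
        if attempt < max_attempts - 1:
            # Waits base_delay, 2 * base_delay, 4 * base_delay, ...
            sleep(base_delay * (2 ** attempt))
    return status, body  # still rate limited after the last attempt
```

The sleep parameter exists so the waiting behaviour can be replaced in tests; in normal use the default time.sleep is sufficient.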

Problem Description: When requesting a specific model, it returns an error or shows the model is unavailable.

Solutions:

- Check Model Name: Ensure you're using the correct model name (case-sensitive)

- Check Provider Support: Confirm the currently configured provider supports that model

- Check Account Permissions: Some advanced models may require specific account permissions

- Configure Model Filtering: Use notSupportedModels to exclude unsupported models

Problem Description: Browser cannot open http://localhost:3000.

Solutions:

- Check Service Status: Confirm the service has started successfully, check terminal output

- Check Port Mapping: Docker users should ensure the -p 3000:3000 parameter is correct

- Try Other Address: Try accessing http://127.0.0.1:3000

- Check Firewall: Ensure the firewall allows access to port 3000

Problem Description: When using streaming output, the response is interrupted midway or incomplete.

Solutions:

- Check Network Stability: Ensure network connection is stable

- Increase Timeout: Increase request timeout in client configuration

- Check Proxy Settings: If using a proxy, ensure the proxy supports long connections

- Check Service Logs: Check for error messages

Problem Description: After modifying configuration in Web UI, service behavior doesn't change.

Solutions:

- Refresh Page: Refresh the Web UI page after modification

- Check Save Status: Confirm the configuration was saved successfully (check prompt messages)

- Restart Service: Some configurations may require service restart to take effect

- Check Configuration File: Directly check configs/config.json to confirm changes were written

Problem Description: When calling API endpoints, it returns 404 Not Found error.

Solutions:

- Check Endpoint Path: Ensure you're using the correct endpoint path, such as /v1/chat/completions, /ollama/api/chat, etc.

- Check Client Auto-completion: Some clients (like Cherry-Studio, NextChat) automatically append paths (like /v1/chat/completions) after the Base URL, causing path duplication. Check the actual request URL in the console and remove redundant path parts

- Check Service Status: Confirm the service has started normally, visit http://localhost:3000 to view Web UI

- Check Port Configuration: Ensure requests are sent to the correct port (default 3000)

- View Available Routes: Check "Interactive Routing Examples" on the Web UI dashboard page to see all available endpoints
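The path duplication problem can be avoided in custom client code by joining the Base URL and endpoint path defensively. The Python sketch below is one way to do this; join_endpoint is a hypothetical helper, and it assumes the path starts with a single "/".

```python
def join_endpoint(base_url: str, path: str = "/v1/chat/completions") -> str:
    """Join base URL and path without repeating segments they share.

    If the base URL already ends with a leading part of `path`
    (for example "/v1"), only the remaining part is appended.
    """
    base = base_url.rstrip("/")
    segments = path.strip("/").split("/")
    # Try the longest overlap first: the full path, then shorter prefixes.
    for count in range(len(segments), 0, -1):
        prefix = "/" + "/".join(segments[:count])
        if base.endswith(prefix):
            return base + path[len(prefix):]
    return base + path
```

With this helper, http://localhost:3000, http://localhost:3000/v1, and http://localhost:3000/v1/chat/completions all resolve to the same request URL.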

Problem Description: When calling API endpoints, it returns Unauthorized: API key is invalid or missing. error.

Solutions:

- Check API Key Configuration: Ensure API Key is correctly configured in configs/config.json or Web UI

- Check Request Header Format: Ensure the request contains the correct Authorization header format, such as Authorization: Bearer your-api-key

- Check Service Logs: View detailed error messages on the "Real-time Logs" page in Web UI to locate the specific cause
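For reference, a request with the expected header format can be built with the Python standard library as sketched below. The /v1/chat/completions path and the Bearer format come from this README; build_chat_request is a hypothetical helper, and the model name and key passed to it are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The key configured in configs/config.json or the Web UI.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Passing the returned object to urllib.request.urlopen sends the request to a running server; a missing or mistyped Authorization header is what produces the Unauthorized error described above.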

Problem Description: When calling API, it returns No available and healthy providers for type xxx error.

Solutions:

- Check Provider Status: Check if providers of the corresponding type are in healthy status on the "Provider Pools" page in Web UI

- Check Credential Validity: Confirm OAuth credentials have not expired; if expired, regenerate authorization

- Check Quota Limits: Some providers may have reached free quota limits; wait for quota reset or add more accounts

- Enable Fallback: Configure providerFallbackChain in config.json to automatically switch to backup providers when the primary provider is unavailable

- View Detailed Logs: Check specific health check failure reasons on the "Real-time Logs" page in Web UI

Problem Description: API requests return 403 Forbidden error.

Solutions:

- Check Node Status: If you see the node status is normal (health check passed) on the "Provider Pools" page in Web UI, you can ignore this error as the system will handle it automatically

- Check Account Permissions: Confirm the account has permission to access the requested model or service

- Check API Key Permissions: Some providers' API Keys may have access scope restrictions; ensure the Key has sufficient permissions

- Check Regional Restrictions: Some services may have regional access restrictions; try using a proxy or VPN

- Check Credential Status: OAuth credentials may have been revoked or expired; try regenerating authorization

- Check Request Frequency: Some providers have strict request frequency limits; reduce request frequency and retry

- View Provider Documentation: Visit the official documentation of the corresponding provider to understand specific access restrictions and requirements

This project is licensed under the GNU General Public License v3 (GPLv3). For details, please check the LICENSE file in the root directory.

The development of this project was greatly inspired by the official Google Gemini CLI and referenced part of the code implementation of gemini-cli.ts in Cline 3.18.0. Sincere thanks to the Google official team and the Cline development team for their excellent work!

Thanks to all the developers who contributed to the AIClient-2-API project:

We are grateful for the support from our sponsors:

Your sponsorship is the driving force for the project's continued development ❤️

This project (AIClient-2-API) is for learning and research purposes only. Users assume all risks when using this project. The author is not responsible for any direct, indirect, or consequential losses resulting from the use of this project.

This project is an API proxy tool and does not provide any AI model services. All AI model services are provided by their respective third-party providers (such as Google, OpenAI, Anthropic, etc.). Users should comply with the terms of service and policies of each third-party service when accessing them through this project. The author is not responsible for the availability, quality, security, or legality of third-party services.

This project runs locally and does not collect or upload any user data. However, users should protect their API keys and other sensitive information when using this project. It is recommended that users regularly check and update their API keys and avoid using this project in insecure network environments.

Users should comply with the laws and regulations of their country/region when using this project. It is strictly prohibited to use this project for any illegal purposes. Any consequences resulting from users' violation of laws and regulations shall be borne by the users themselves.


Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, and real-time monitoring. There are three installation methods: the NPX and Local Git setups offer 53+ tools, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

nanocoder

Nanocoder is a versatile code editor designed for beginners and experienced programmers alike. It provides a user-friendly interface with features such as syntax highlighting, code completion, and error checking. With Nanocoder, you can easily write and debug code in various programming languages, making it an ideal tool for learning, practicing, and developing software projects. Whether you are a student, hobbyist, or professional developer, Nanocoder offers a seamless coding experience to boost your productivity and creativity.

coding-agent-template

Coding Agent Template is a versatile tool for building AI-powered coding agents that support various coding tasks using Claude Code, OpenAI's Codex CLI, Cursor CLI, and opencode with Vercel Sandbox. It offers features like multi-agent support, Vercel Sandbox for secure code execution, AI Gateway integration, AI-generated branch names, task management, persistent storage, Git integration, and a modern UI built with Next.js and Tailwind CSS. Users can easily deploy their own version of the template to Vercel and set up the tool by cloning the repository, installing dependencies, configuring environment variables, setting up the database, and starting the development server. The tool simplifies the process of creating tasks, monitoring progress, reviewing results, and managing tasks, making it ideal for developers looking to automate coding tasks with AI agents.

persistent-ai-memory

Persistent AI Memory System is a comprehensive tool that offers persistent, searchable storage for AI assistants. It includes features like conversation tracking, MCP tool call logging, and intelligent scheduling. The system supports multiple databases, provides enhanced memory management, and offers various tools for memory operations, schedule management, and system health checks. It also integrates with various platforms like LM Studio, VS Code, Koboldcpp, Ollama, and more. The system is designed to be modular, platform-agnostic, and scalable, allowing users to handle large conversation histories efficiently.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

stenoai

StenoAI is an AI-powered meeting intelligence tool that allows users to record, transcribe, summarize, and query meetings using local AI models. It prioritizes privacy by processing data entirely on the user's device. The tool offers multiple AI models optimized for different use cases, making it ideal for healthcare, legal, and finance professionals with confidential data needs. StenoAI also features a macOS desktop app with a user-friendly interface, making it convenient for users to access its functionalities. The project is open-source and not affiliated with any specific company, emphasizing its focus on meeting-notes productivity and community collaboration.

g4f.dev

G4f.dev is the official documentation hub for GPT4Free, a free and convenient AI tool with endpoints that can be integrated directly into apps, scripts, and web browsers. The documentation provides clear overviews, quick examples, and deeper insights into the major features of GPT4Free, including text and image generation. Users can choose between Python and JavaScript for installation and setup, and can access various API endpoints, providers, models, and client options for different tasks.

Zero

Zero is an open-source AI email solution that allows users to self-host their email app while integrating external services like Gmail. It aims to modernize and enhance emails through AI agents, offering features like open-source transparency, AI-driven enhancements, data privacy, self-hosting freedom, unified inbox, customizable UI, and developer-friendly extensibility. Built with modern technologies, Zero provides a reliable tech stack including Next.js, React, TypeScript, TailwindCSS, Node.js, Drizzle ORM, and PostgreSQL. Users can set up Zero using standard setup or Dev Container setup for VS Code users, with detailed environment setup instructions for Better Auth, Google OAuth, and optional GitHub OAuth. Database setup involves starting a local PostgreSQL instance, setting up database connection, and executing database commands for dependencies, tables, migrations, and content viewing.

For similar tasks

hCaptcha-Solver

hCaptcha-Solver is an AI-based hCaptcha text challenge solver that uses the playwright module to generate the hsw N data. It can solve text challenges, but may be flagged on some websites such as Discord. The tool requires proxies because hCaptcha applies rate limits. Users should run 'hsw_api.py' first, then integrate the usage shown in 'main.py' into projects that require hCaptcha solving. Note that this tool only works on sites that support the hCaptcha text challenge.

Helios

Helios is a powerful open-source tool for managing and monitoring your Kubernetes clusters. It provides a user-friendly interface to easily visualize and control your cluster resources, including pods, deployments, services, and more. With Helios, you can efficiently manage your containerized applications and ensure high availability and performance of your Kubernetes infrastructure.

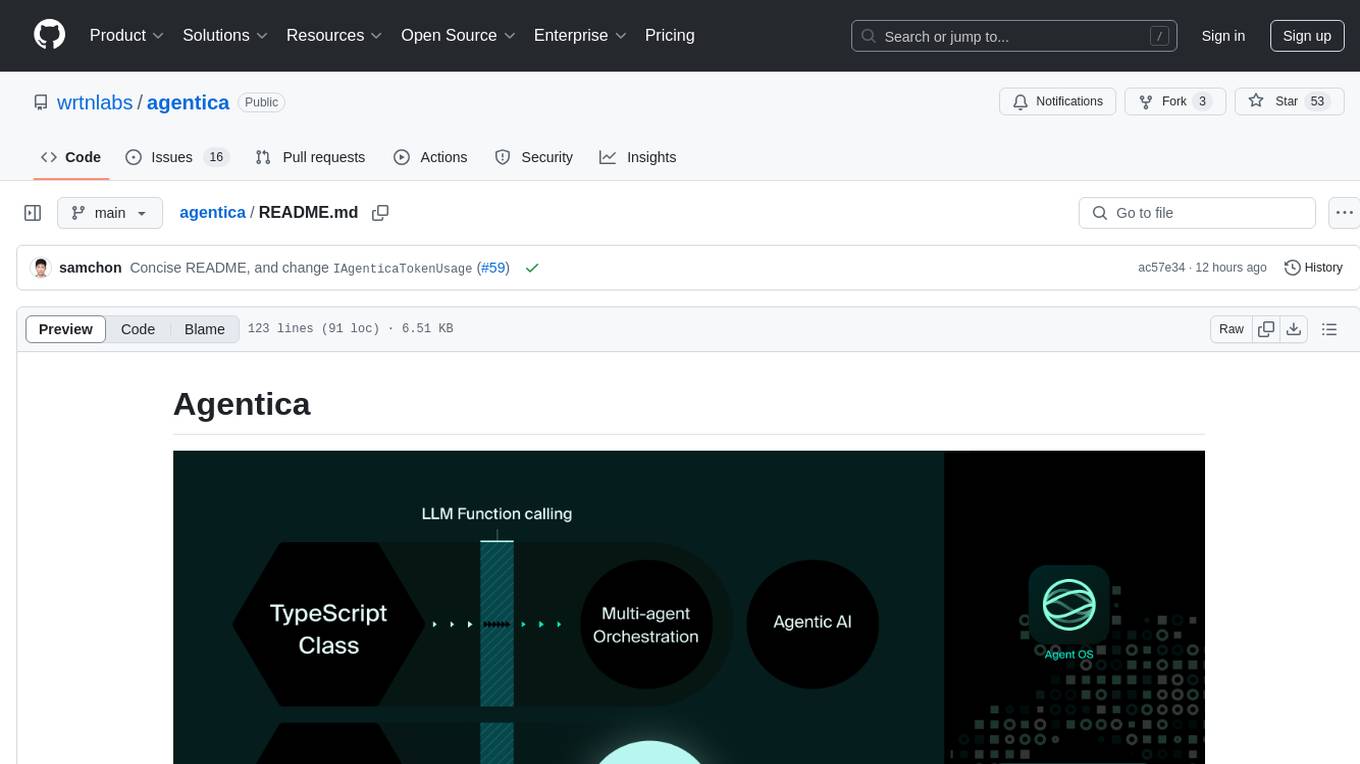

agentica

Agentica is a specialized Agentic AI library focused on LLM Function Calling. Users can provide Swagger/OpenAPI documents or TypeScript class types to Agentica for seamless functionality. The library simplifies AI development by handling various tasks effortlessly.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PIIs
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.