copilot-lsp

Copilot LSP: A lightweight and extensible Neovim plugin for integrating GitHub Copilot's AI-powered code suggestions via Language Server Protocol (LSP).

Stars: 357

Copilot LSP is a Neovim plugin that builds on the editor's native LSP support. It provides text document focusing, inline completion, Next Edit Suggestion (NES), and status notifications. It also offers smart clearing of suggestions, customizable NES defaults, and integration with Blink for inline completions. Copilot LSP must be installed via Mason or system-wide and be available on the PATH.

README:

- TextDocument Focusing

- Inline Completion

- Next Edit Suggestion

- Uses native LSP Binary

- [x] Sign In Flow

- Status Notification

To use the plugin, add the following to your Neovim configuration:

    return {
        "copilotlsp-nvim/copilot-lsp",
        init = function()
            vim.g.copilot_nes_debounce = 500
            vim.lsp.enable("copilot_ls")
            vim.keymap.set("n", "<tab>", function()
                local bufnr = vim.api.nvim_get_current_buf()
                local state = vim.b[bufnr].nes_state
                if state then
                    -- Try to jump to the start of the suggestion edit.
                    -- If already at the start, then apply the pending suggestion and jump to the end of the edit.
                    local _ = require("copilot-lsp.nes").walk_cursor_start_edit()
                        or (
                            require("copilot-lsp.nes").apply_pending_nes()
                            and require("copilot-lsp.nes").walk_cursor_end_edit()
                        )
                    return nil
                else
                    -- Resolving the terminal's inability to distinguish between `TAB` and `<C-i>` in normal mode
                    return "<C-i>"
                end
            end, { desc = "Accept Copilot NES suggestion", expr = true })
        end,
    }

You can map the <Esc> key to clear suggestions while preserving its other functionality:

    -- Clear copilot suggestion with Esc if visible, otherwise preserve default Esc behavior
    vim.keymap.set("n", "<esc>", function()
        if not require("copilot-lsp.nes").clear() then
            -- fallback to other functionality
        end
    end, { desc = "Clear Copilot suggestion or fallback" })

You don’t need to configure anything, but you can customize the defaults:

move_count_threshold is the most important option. It sets how many cursor movements are allowed before a suggestion is cleared; a higher value keeps suggestions visible longer.

    require('copilot-lsp').setup({
        nes = {
            move_count_threshold = 3, -- Clear after 3 cursor movements
        }
    })

For Blink (blink.cmp), the following keymap configuration lets <Tab> accept a pending NES suggestion before falling back to completion:

    return {
        keymap = {
            preset = "super-tab",
            ["<Tab>"] = {
                function(cmp)
                    if vim.b[vim.api.nvim_get_current_buf()].nes_state then
                        cmp.hide()
                        return (
                            require("copilot-lsp.nes").apply_pending_nes()
                            and require("copilot-lsp.nes").walk_cursor_end_edit()
                        )
                    end
                    if cmp.snippet_active() then
                        return cmp.accept()
                    else
                        return cmp.select_and_accept()
                    end
                end,
                "snippet_forward",
                "fallback",
            },
        },
    }

It can also be combined with fang2hou/blink-copilot to get inline completions. Add its completion source to your Blink configuration to enable the integration.
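A minimal sketch of adding the blink-copilot completion source, assuming a lazy.nvim setup and blink.cmp's `sources.providers` table. The provider key `copilot`, the `score_offset` value, and the `async` flag are illustrative assumptions rather than settings taken from this README; consult the blink-copilot documentation for the options it actually supports.

```lua
-- Hypothetical lazy.nvim spec: registers blink-copilot as a blink.cmp source.
return {
    "saghen/blink.cmp",
    dependencies = { "fang2hou/blink-copilot" },
    opts = {
        sources = {
            -- Assumption: "copilot" is added alongside the usual sources.
            default = { "lsp", "path", "snippets", "buffer", "copilot" },
            providers = {
                copilot = {
                    name = "copilot",
                    module = "blink-copilot", -- module shipped by fang2hou/blink-copilot
                    score_offset = 100,       -- assumed value: rank Copilot items higher
                    async = true,             -- assumed: do not block other sources
                },
            },
        },
    },
}
```

If you already define `sources.default` elsewhere, append `"copilot"` to that list instead of replacing it.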

Requirements:

- Copilot LSP installed via Mason or system-wide, and available on PATH
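One way to check this requirement from within Neovim is to test for the server binary before enabling the LSP. The sketch below assumes the binary is named `copilot-language-server`; that name is an assumption here, so adjust it to match your installation.

```lua
-- Assumption: the Copilot language server binary is called "copilot-language-server".
local server = "copilot-language-server"

if vim.fn.executable(server) == 1 then
    -- Binary found on PATH: enable the LSP configuration used in this README.
    vim.lsp.enable("copilot_ls")
else
    -- Binary missing: warn instead of enabling a server that cannot start.
    vim.notify(
        server .. " was not found on PATH; install it via Mason or system-wide",
        vim.log.levels.WARN
    )
end
```

Running the check at startup surfaces a missing binary as a visible warning rather than a silent lack of suggestions.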

https://github.com/user-attachments/assets/1d5bed4a-fd0a-491f-91f3-a3335cc28682

Alternative AI tools for copilot-lsp

Similar Open Source Tools

UnrealGenAISupport

The Unreal Engine Generative AI Support Plugin is a tool designed to integrate various cutting-edge LLM/GenAI models into Unreal Engine for game development. It aims to simplify the process of using AI models for game development tasks, such as controlling scene objects, generating blueprints, running Python scripts, and more. The plugin currently supports models from organizations like OpenAI, Anthropic, xAI, Google Gemini, Meta AI, DeepSeek, and Baidu. It provides features like API support, model control, generative AI capabilities, UI generation, project file management, and more. The plugin is still under development but offers a promising solution for integrating AI models into game development workflows.

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly in the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform various tasks such as completions, embeddings, tokenization, and more. It also supports model splitting, enabling you to load large models in parallel for faster download. With its TypeScript support and pre-built npm package, Wllama is easy to integrate into your React TypeScript projects.

EasySteer

EasySteer is a unified framework built on vLLM for high-performance LLM steering. It offers fast, flexible, and easy-to-use steering capabilities with features like high performance, modular design, fine-grained control, pre-computed steering vectors, and an interactive demo. Users can interactively configure models, adjust steering parameters, and test interventions without writing code. The tool supports OpenAI-compatible APIs and provides modules for hidden states extraction, analysis-based steering, learning-based steering, and a frontend web interface for interactive steering and ReFT interventions.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting with, and observing agents. Users can access Letta via the REST API, the Python and TypeScript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

neocodeium

NeoCodeium is a free AI completion plugin powered by Codeium, designed for Neovim users. It aims to provide a smoother experience by eliminating flickering suggestions and allowing for repeatable completions using the `.` key. The plugin offers performance improvements through cache techniques, displays suggestion count labels, and supports Lua scripting. Users can customize keymaps, manage suggestions, and interact with the AI chat feature. NeoCodeium enhances code completion in Neovim, making it a valuable tool for developers seeking efficient coding assistance.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

python-sdks

Python SDK for LiveKit enables developers to easily integrate real-time video, audio, and data features into their Python applications. By connecting to a LiveKit server, users can quickly build interactive live streaming or video call applications with minimal code. The SDK includes packages for real-time participant connection and access token generation, making it simple to create rooms and manage participants. With asyncio and aiohttp support, developers can seamlessly interact with the LiveKit server API and handle real-time communication tasks effortlessly.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

mcp-agent

mcp-agent is a simple, composable framework designed to build agents using the Model Context Protocol. It handles the lifecycle of MCP server connections and implements patterns for building production-ready AI agents in a composable way. The framework also includes OpenAI's Swarm pattern for multi-agent orchestration in a model-agnostic manner, making it the simplest way to build robust agent applications. It is purpose-built for the shared protocol MCP, lightweight, and closer to an agent pattern library than a framework. mcp-agent allows developers to focus on the core business logic of their AI applications by handling mechanics such as server connections, working with LLMs, and supporting external signals like human input.

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

GraphRAG-SDK

Build fast and accurate GenAI applications with GraphRAG SDK, a specialized toolkit for building Graph Retrieval-Augmented Generation (GraphRAG) systems. It integrates knowledge graphs, ontology management, and state-of-the-art LLMs to deliver accurate, efficient, and customizable RAG workflows. The SDK simplifies the development process by automating ontology creation, knowledge graph agent creation, and query handling, enabling users to interact and query their knowledge graphs effectively. It supports multi-agent systems and orchestrates agents specialized in different domains. The SDK is optimized for FalkorDB, ensuring high performance and scalability for large-scale applications. By leveraging knowledge graphs, it enables semantic relationships and ontology-driven queries that go beyond standard vector similarity, enhancing retrieval-augmented generation capabilities.

react-native-fast-tflite

A high-performance TensorFlow Lite library for React Native that utilizes JSI for power, zero-copy ArrayBuffers for efficiency, and low-level C/C++ TensorFlow Lite core API for direct memory access. It supports swapping out TensorFlow Models at runtime and GPU-accelerated delegates like CoreML/Metal/OpenGL. Easy VisionCamera integration allows for seamless usage. Users can load TensorFlow Lite models, interpret input and output data, and utilize GPU Delegates for faster computation. The library is suitable for real-time object detection, image classification, and other machine learning tasks in React Native applications.

For similar tasks

llama-coder

Llama Coder is a self-hosted GitHub Copilot replacement for VS Code that provides autocomplete using Ollama and Codellama. It works best with Mac M1/M2/M3 or RTX 4090, offering features like fast performance, no telemetry or tracking, and compatibility with any coding language. Users can install Ollama locally or on a dedicated machine for remote usage. The tool supports different models like stable-code and codellama with varying RAM/VRAM requirements, allowing users to optimize performance based on their hardware. Troubleshooting tips and a changelog are also provided for user convenience.

llm.nvim

llm.nvim is a Neovim plugin designed for LLM-assisted programming. It provides a no-frills approach to integrating language model assistance into the coding workflow. Users can configure the plugin to interact with various AI services such as Groq, OpenAI, and Anthropic. The plugin offers functions to trigger the LLM assistant, create new prompt files, and customize key bindings for seamless interaction. With a focus on simplicity and efficiency, llm.nvim aims to enhance the coding experience by leveraging AI capabilities within the Neovim environment.

ai-auto-free

AI Auto Free is a comprehensive automation tool that enables unlimited usage of AI-powered IDEs like Cursor and Windsurf. It offers cross-platform support and multiple language capabilities. The tool automates tasks such as account creation and email verification, overcoming trial limits and unauthorized requests. Users can use it for educational and research purposes to enhance their coding experience with AI.

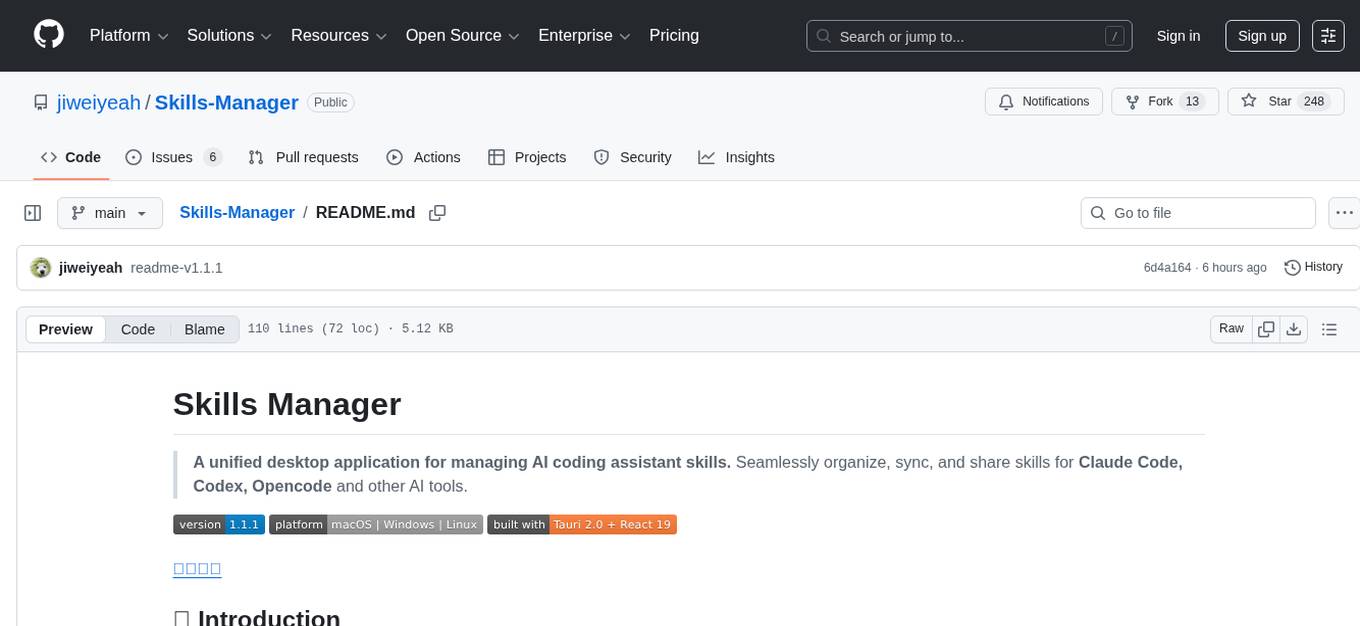

Skills-Manager

Skills Manager is a unified desktop application designed to centralize and manage AI coding assistant skills for tools like Claude Code, Codex, and Opencode. It offers smart synchronization, granular control, high performance, cross-platform support, multi-tool compatibility, custom tools integration, and a modern UI. Users can easily organize, sync, and share their skills across different AI tools, enhancing their coding experience and productivity.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.