codespin-chrome-extension

CodeSpin.AI Chrome Plugin

Stars: 68

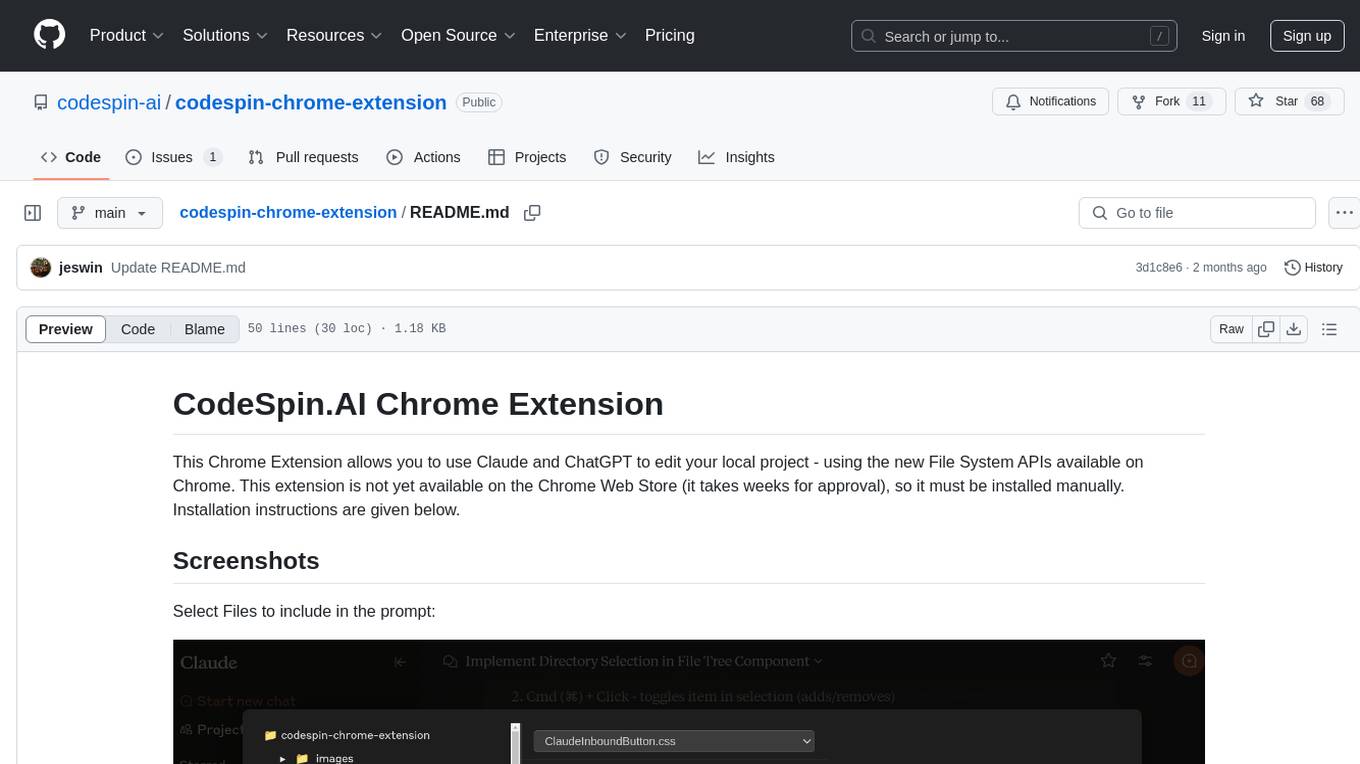

CodeSpin.AI Chrome Extension allows users to utilize Claude and ChatGPT to edit local projects using Chrome's File System APIs. The extension is not yet available on the Chrome Web Store and requires manual installation. Users can select files, sync with their projects, and write back changes easily.

README:

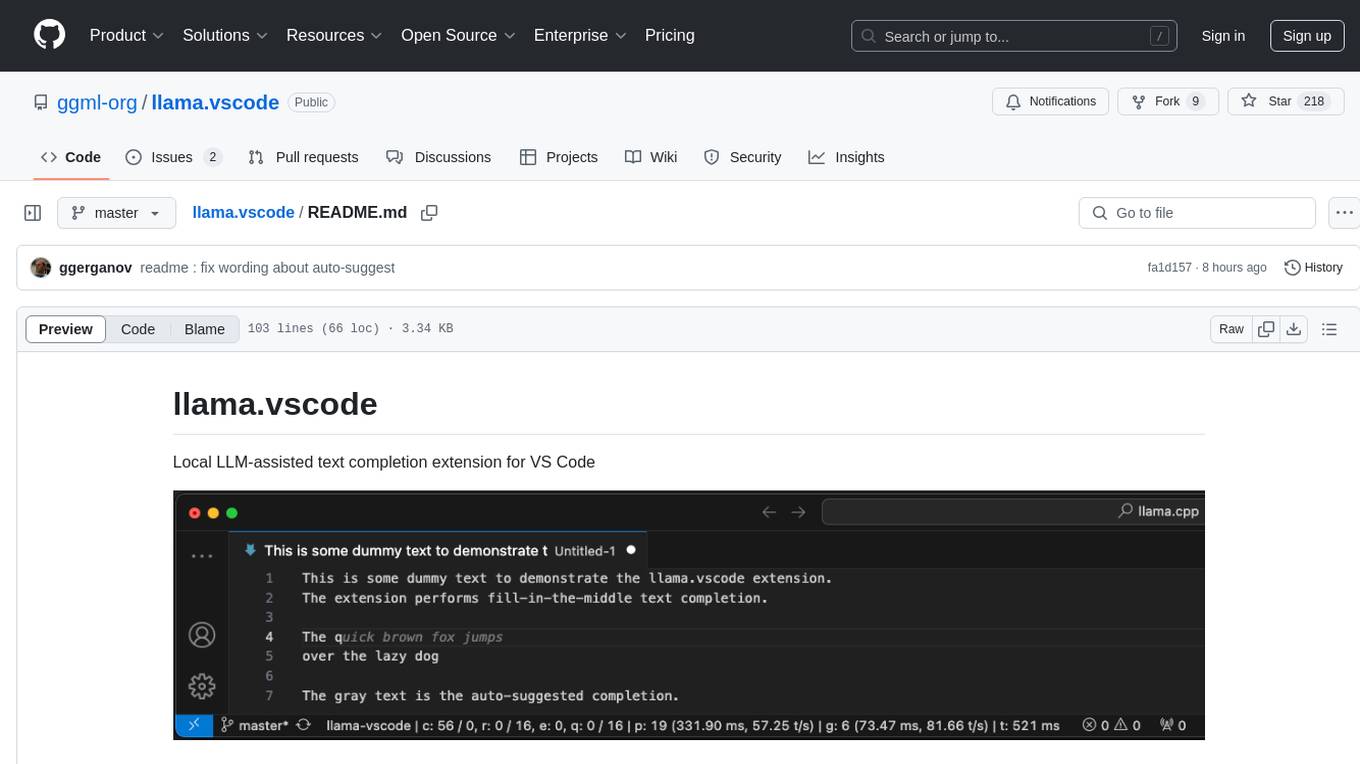

This Chrome Extension allows you to use Claude and ChatGPT to edit your local project - using the new File System APIs available on Chrome. This extension is not yet available on the Chrome Web Store (it takes weeks for approval), so it must be installed manually. Installation instructions are given below.

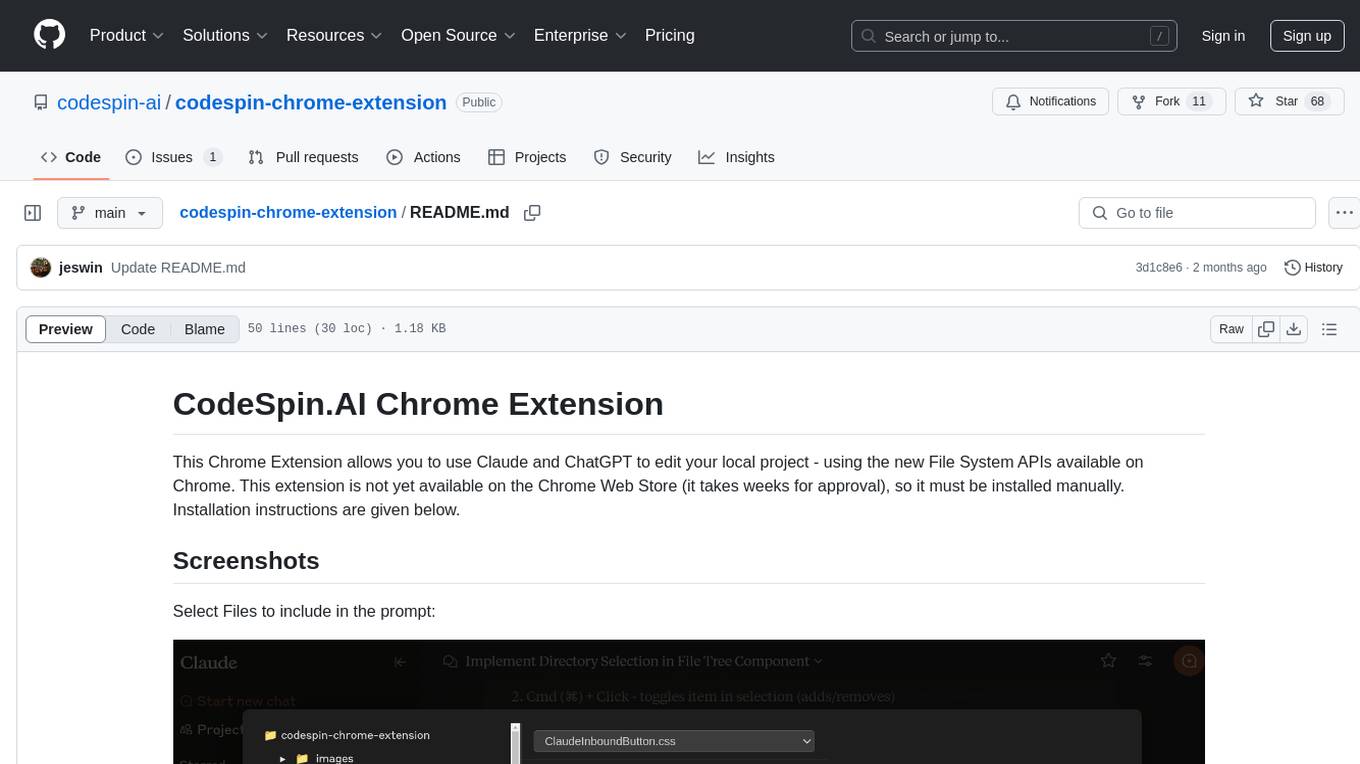

Select Files to include in the prompt:

Add files to prompt, and Sync back with your project

Write it back!

Clone this project

git clone https://github.com/codespin-ai/codespin-chrome-extensionSwitch to the project directory

cd codespin-chrome-extensionInstall deps. Note that you need Node.JS.

npm iBuild it.

./build.shNow go to Chrome > Extensions > Manage Extensions, and click on "Load Unpacked".

Point it at the codespin-chrome-extension directory.

Enjoy.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for codespin-chrome-extension

Similar Open Source Tools

codespin-chrome-extension

CodeSpin.AI Chrome Extension allows users to utilize Claude and ChatGPT to edit local projects using Chrome's File System APIs. The extension is not yet available on the Chrome Web Store and requires manual installation. Users can select files, sync with their projects, and write back changes easily.

ollama4j-web-ui

Ollama4j Web UI is a Java-based web interface built using Spring Boot and Vaadin framework for Ollama users with Java and Spring background. It allows users to interact with various models running on Ollama servers, providing a fully functional web UI experience. The project offers multiple ways to run the application, including via Docker, Docker Compose, or as a standalone JAR. Users can configure the environment variables and access the web UI through a browser. The project also includes features for error handling on the UI and settings pane for customizing default parameters.

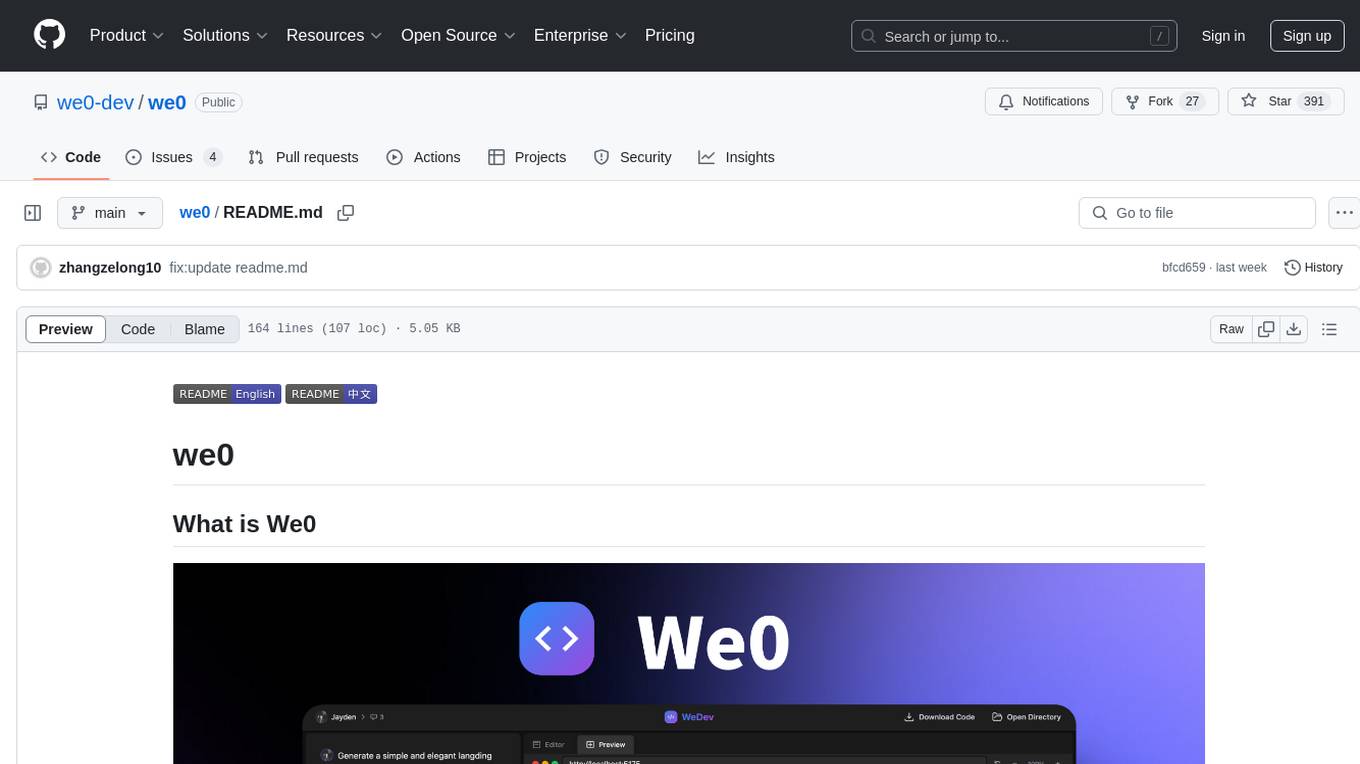

we0

We0 is a web project generation tool that offers browser-based debugging, high-fidelity design restoration, importing historical projects, integration with WeChat Mini Program Developer Tools, and multi-platform support. It supports code generation, design-to-code conversion, open-source projects, WeChat Mini Program Tools preview, existing projects, and Deepseek. The tool uses pnpm as the package management tool and requires Node.js version 18.20. Users can install and configure the tool for web development and utilize quick start methods for building the web editor. Additionally, instructions are provided for installing and using the client version on Mac, along with troubleshooting tips. For any questions or support, users can contact [email protected] or join the WeChat group chat.

LafTools

LafTools is a privacy-first, self-hosted, fully open source toolbox designed for programmers. It offers a wide range of tools, including code generation, translation, encryption, compression, data analysis, and more. LafTools is highly integrated with a productive UI and supports full GPT-alike functionality. It is available as Docker images and portable edition, with desktop edition support planned for the future.

QodeAssist

QodeAssist is an AI-powered coding assistant plugin for Qt Creator, offering intelligent code completion and suggestions for C++ and QML. It leverages large language models like Ollama to enhance coding productivity with context-aware AI assistance directly in the Qt development environment. The plugin supports multiple LLM providers, extensive model-specific templates, and easy configuration for enhanced coding experience.

ChatGPT-desktop

ChatGPT Desktop Application is a multi-platform tool that provides a powerful AI wrapper for generating text. It offers features like text-to-speech, exporting chat history in various formats, automatic application upgrades, system tray hover window, support for slash commands, customization of global shortcuts, and pop-up search. The application is built using Tauri and aims to enhance user experience by simplifying text generation tasks. It is available for Mac, Windows, and Linux, and is designed for personal learning and research purposes.

NekoImageGallery

NekoImageGallery is an online AI image search engine that utilizes the Clip model and Qdrant vector database. It supports keyword search and similar image search. The tool generates 768-dimensional vectors for each image using the Clip model, supports OCR text search using PaddleOCR, and efficiently searches vectors using the Qdrant vector database. Users can deploy the tool locally or via Docker, with options for metadata storage using Qdrant database or local file storage. The tool provides API documentation through FastAPI's built-in Swagger UI and can be used for tasks like image search, text extraction, and vector search.

langstream

LangStream is a tool for natural language processing tasks, providing a CLI for easy installation and usage. Users can try sample applications like Chat Completions and create their own applications using the developer documentation. It supports running on Kubernetes for production-ready deployment, with support for various Kubernetes distributions and external components like Apache Kafka or Apache Pulsar cluster. Users can deploy LangStream locally using minikube and manage the cluster with mini-langstream. Development requirements include Docker, Java 17, Git, Python 3.11+, and PIP, with the option to test local code changes using mini-langstream.

airbnb-clone

This Airbnb clone project is a web application built with Next.js, TypeScript, Tailwind CSS, MongoDB, Prisma, Next auth, and Leaflet. It allows users to register, list and browse properties, make bookings and reservations, search and filter properties, and view property locations on an interactive map.

eidos

Eidos is an extensible framework for managing personal data in one place. It runs inside the browser as a PWA with offline support. It integrates AI features for translation, summarization, and data interaction. Users can customize Eidos with Prompt extension, JavaScript for Formula functions, TypeScript/JavaScript for data processing logic, and build apps using any framework. Eidos is developer-friendly with API & SDK, and uses SQLite standardization for data tables.

tts-generation-webui

TTS Generation WebUI is a comprehensive tool that provides a user-friendly interface for text-to-speech and voice cloning tasks. It integrates various AI models such as Bark, MusicGen, AudioGen, Tortoise, RVC, Vocos, Demucs, SeamlessM4T, and MAGNeT. The tool offers one-click installers, Google Colab demo, videos for guidance, and extra voices for Bark. Users can generate audio outputs, manage models, caches, and system space for AI projects. The project is open-source and emphasizes ethical and responsible use of AI technology.

Auto-Gmail-Creator

Auto-Gmail-Creator is an open-source automation script designed for Python enthusiasts to learn automation basics and for marketers to create multiple Google accounts efficiently. The script automates the process of creating Gmail accounts using sms-activate.org API for phone verification. It handles the download of Chromedriver or Geckodriver automatically and can be customized to prevent blocking. The tool is useful for projects related to automation, scraping, and machine learning.

SecureAI-Tools

SecureAI Tools is a private and secure AI tool that allows users to chat with AI models, chat with documents (PDFs), and run AI models locally. It comes with built-in authentication and user management, making it suitable for family members or coworkers. The tool is self-hosting optimized and provides necessary scripts and docker-compose files for easy setup in under 5 minutes. Users can customize the tool by editing the .env file and enabling GPU support for faster inference. SecureAI Tools also supports remote OpenAI-compatible APIs, with lower hardware requirements for using remote APIs only. The tool's features wishlist includes chat sharing, mobile-friendly UI, and support for more file types and markdown rendering.

aio-switch-updater

AIO-Switch-Updater is a Nintendo Switch homebrew app that allows users to download and update custom firmware, firmware files, cheat codes, and more. It supports Atmosphère, ReiNX, and SXOS on both unpatched and patched Switches. The app provides features like updating CFW with custom RCM payload, updating Hekate/payload, custom downloads, downloading firmwares and cheats, and various tools like rebooting to specific payload, changing color schemes, consulting cheat codes, and more. Users can contribute by submitting PRs and suggestions, and the app supports localization. It does not host or distribute any files and gives special thanks to contributors and supporters.

llama.vscode

llama.vscode is a local LLM-assisted text completion extension for Visual Studio Code. It provides auto-suggestions on input, allows accepting suggestions with shortcuts, and offers various features to enhance text completion. The extension is designed to be lightweight and efficient, enabling high-quality completions even on low-end hardware. Users can configure the scope of context around the cursor and control text generation time. It supports very large contexts and displays performance statistics for better user experience.

ComfyUI-IF_LLM

ComfyUI-IF_AI_LLM is a lighter version of ComfyUI-IF_AI_tools, providing custom nodes to run local and API LLMs and LMMs. It supports various models like Ollama, LlamaCPP, LMstudio, Koboldcpp, TextGen, Transformers, and APIs such as Anthropic, Groq, OpenAI, Google Gemini, Mistral, xAI. Users can create their own profiles (SystemPrompts) with custom presets. The tool offers features like xAI Grok Vision, Mistral, Google Gemini, Anthropic Haiku, OpenAI preview, auto prompts generation, image generation with IF_PROMPTImaGEN via Dalle3, and more. Installation involves searching for IF_LLM in the manager or manually installing ComfyUI-IF_AI_ImaGenPromptMaker by cloning the repository and installing requirements.

For similar tasks

codespin-chrome-extension

CodeSpin.AI Chrome Extension allows users to utilize Claude and ChatGPT to edit local projects using Chrome's File System APIs. The extension is not yet available on the Chrome Web Store and requires manual installation. Users can select files, sync with their projects, and write back changes easily.

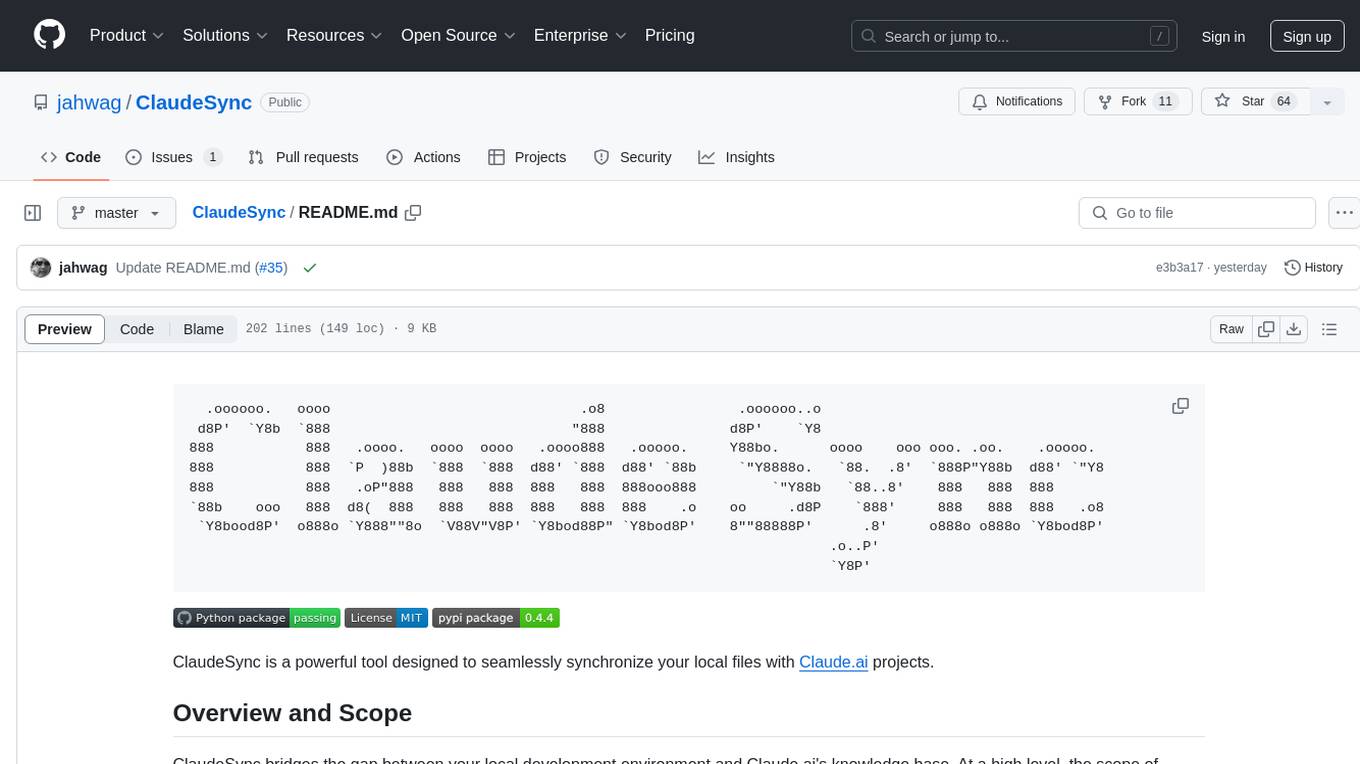

ClaudeSync

ClaudeSync is a powerful tool designed to seamlessly synchronize local files with Claude.ai projects. It bridges the gap between local development environment and Claude.ai's knowledge base, offering real-time synchronization, CLI for easy management, support for multiple organizations and projects, intelligent file filtering, configurable sync interval, two-way synchronization, and more. It ensures data privacy, open source transparency, and comes with disclaimers for use at own risk. Users can quickly start syncing by installing, logging in, selecting organization and project, and running sync. Advanced features include API, organization, project, file, chat management, configuration, synchronization modes, scheduled sync, providers, custom ignore file, and troubleshooting. Contributions are welcome, and communication channels include GitHub Issues and Discord. Licensed under MIT License.

focusany

FocusAny is a desktop toolbar system that supports one-click startup of market plugins and local plugins, quickly expands functionality, and improves work efficiency. It features customizable keyboard shortcuts, plugin management, command management, quick file launching, global shortcut launching, data center for file synchronization, support for dark mode, and various plugins available in the market. The tool is built using Electron, Vue3, and TypeScript.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

langfun

Langfun is a Python library that aims to make language models (LM) fun to work with. It enables a programming model that flows naturally, resembling the human thought process. Langfun emphasizes the reuse and combination of language pieces to form prompts, thereby accelerating innovation. Unlike other LM frameworks, which feed program-generated data into the LM, langfun takes a distinct approach: It starts with natural language, allowing for seamless interactions between language and program logic, and concludes with natural language and optional structured output. Consequently, langfun can aptly be described as Language as functions, capturing the core of its methodology.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.